In this article, you’ll learn how to incorporate a real-time video call feature into the WhatsApp Clone Compose project with Jetpack Compose and Stream’s versatile Compose Video SDK.

For a comprehensive understanding of the project's architecture, layer structure, and theming, start with the earlier article, Building a Real-Time Android WhatsApp Clone With Jetpack Compose.

Before you begin, we recommend cloning the WhatsApp Clone Compose repository to your local device using the command below and then opening the project in Android Studio.

```bash
git clone https://github.com/GetStream/WhatsApp-clone-compose.git
```

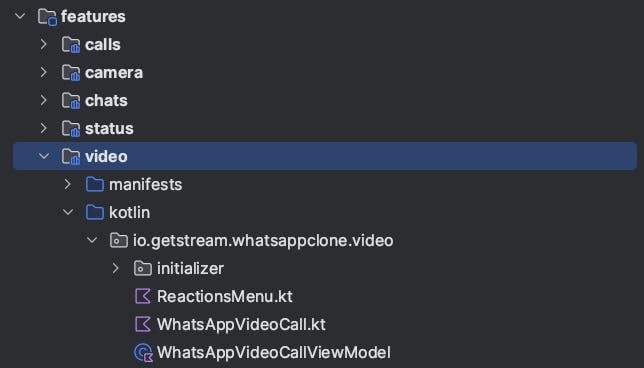

You will be working primarily in the features>video module, which can be found in your Android Studio as shown below:

Add Gradle Dependencies

To get started, add the Stream Video Compose SDK and Jetpack Compose dependencies to your app module’s build.gradle.kts file (app/build.gradle.kts).

```kotlin
dependencies {
    // Stream Video Compose SDK
    implementation("io.getstream:stream-video-android-ui-compose:0.5.1")

    // Optionally add Jetpack Compose if Android Studio didn't automatically include them
    implementation(platform("androidx.compose:compose-bom:2023.10.01"))
    implementation("androidx.activity:activity-compose:1.7.2")
    implementation("androidx.compose.ui:ui")
    implementation("androidx.compose.ui:ui-tooling")
    implementation("androidx.compose.runtime:runtime")
    implementation("androidx.compose.foundation:foundation")
    implementation("androidx.compose.material:material")
}
```

In this tutorial, you'll use Compose UI components to render video streams with Stream Video, so you need the stream-video-android-ui-compose package shown above. However, if you prefer to create your own UI components, you can opt for stream-video-android-core, which provides only the core functionalities for video communication. For more details, check out the Stream Video Android Architecture.

Configure Secret Properties

Before diving in, configure secret properties for this project. You can get the Stream API key by following these instructions. Once you get the API key, create a secrets.properties file in the project's root directory with the text below, using your API key:

```properties
STREAM_API_KEY=REPLACE WITH YOUR API KEY
```

Now you’re ready to build the project on your devices. After successfully running the project on your device, you'll see the result below:

Initialize Stream Video SDK

To get started, initialize the Android Video SDK to utilize the Video Call APIs. This project uses App Startup to configure initialization:

```kotlin
class StreamVideoInitializer : Initializer<Unit> {

    override fun create(context: Context) {
        val userId = "stream"
        StreamVideoBuilder(
            context = context,
            apiKey = BuildConfig.STREAM_API_KEY,
            token = devToken(userId),
            user = User(
                id = userId,
                name = "stream",
                image = "http://placekitten.com/200/300",
                role = "admin"
            )
        ).build()
    }

    override fun dependencies(): List<Class<out Initializer<*>>> = emptyList()
}
```

The WhatsApp clone project groups all initializers in the MainInitializer for the sake of clarity. In a real-world scenario, you can follow this initialization approach, initialize in your Application class, or use a dedicated dependency injection (DI) module.

Implement Joining a Call

Next, let’s implement the creation and joining of a call using the Video SDK. The Stream SDK offers APIs that simplify the implementation of complex processes into just a few lines of code, as shown below:

```kotlin
fun joinCall(type: String, id: String) {
    viewModelScope.launch {
        val streamVideo = StreamVideo.instance()
        val call = streamVideo.call(type = type, id = id)
        call.join(create = true)
    }
}
```

You can obtain the Call instance from the StreamVideo object using the call() method, as demonstrated in the code above. You should specify the call type and call id, which determine different video permissions and channels.

Once you've obtained the appropriate call instance, you can join the call using the call.join() method, which handles all the necessary processes. It connects your application to Stream's socket server and sets up the transmission and reception of audio and video streams via WebRTC protocols.

You can provide parameters like ring, notify, and createOptions when joining a call, depending on your specific requirements. For additional details, refer to the Joining & Creating Calls documentation.

Now, let’s create a ViewModel that includes the joinCall function and manages UI states for a call screen:

```kotlin
@HiltViewModel
class WhatsAppVideoCallViewModel @Inject constructor(
    private val composeNavigator: AppComposeNavigator
) : ViewModel() {

    private val videoMutableUiState =
        MutableStateFlow<WhatsAppVideoUiState>(WhatsAppVideoUiState.Loading)
    val videoUiSate: StateFlow<WhatsAppVideoUiState> = videoMutableUiState

    fun joinCall(type: String, id: String) {
        viewModelScope.launch {
            val streamVideo = StreamVideo.instance()
            val call = streamVideo.call(type = type, id = id)
            val result = call.join(create = true)
            result.onSuccess {
                videoMutableUiState.value = WhatsAppVideoUiState.Success(call)
            }.onError {
                videoMutableUiState.value = WhatsAppVideoUiState.Error
            }
        }
    }

    fun navigateUp() {
        composeNavigator.navigateUp()
    }
}
```

The call.join() method returns a Result, representing whether the joining request was successful or failed. You can manage UI states based on the result using the onSuccess and onError extensions.
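The WhatsAppVideoUiState type used by the ViewModel is not shown in this article. As a rough sketch, assuming it has exactly the three states the ViewModel touches (Loading, Success, and Error), it could be modeled as a sealed interface. Everything below is illustrative: `FakeCall` and `JoinOutcome` are hypothetical stand-ins for the SDK's Call and Result types so that the snippet compiles without Android dependencies, and the project's actual definitions may differ.

```kotlin
// Hypothetical stand-in for the SDK's Call class, so this sketch has no dependencies.
data class FakeCall(val type: String, val id: String)

// Hypothetical stand-in for the Result returned by call.join().
sealed interface JoinOutcome {
    data class Success(val call: FakeCall) : JoinOutcome
    data class Failure(val message: String) : JoinOutcome
}

// One possible shape for the UI state consumed by the call screen.
sealed interface VideoUiState {
    object Loading : VideoUiState
    data class Success(val data: FakeCall) : VideoUiState
    object Error : VideoUiState
}

// Maps the outcome of a join request to the state the screen should render.
fun JoinOutcome.toUiState(): VideoUiState = when (this) {
    is JoinOutcome.Success -> VideoUiState.Success(call)
    is JoinOutcome.Failure -> VideoUiState.Error
}
```

Modeling the state as a sealed hierarchy lets a `when` expression in the UI layer cover each case explicitly, which is the pattern the call screen relies on later in this article.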

Video Call Screen With Jetpack Compose

It's time to implement the video call screen using Jetpack Compose. The Stream Video SDK for Jetpack Compose provides pre-built and user-friendly UI components, so there's no steep learning curve involved.

One of the most convenient ways to implement a video call screen is by using the CallContent component, a high-level component containing various UI elements.

```kotlin
@Composable
fun WhatsAppVideoCallScreen(call: Call) {
    VideoTheme {
        CallContent(call = call)
    }
}
```

Once you build the code snippet above, you’ll see the result below:

The CallContent contains various UI elements, such as an app bar, participants grid, and control action buttons. The best part is that you can easily customize these elements according to your preferences. If you're interested in crafting your own style for a video calling screen, be sure to explore the CallContent documentation.

Note:

CallContent also takes care of device permissions, including camera and microphone access, so you don't need to manage them separately.

Next, let's tailor the call screen to match the call style, whether audio or video. To set up the audio call screen, you can easily disable the call camera and related features in the control actions, as demonstrated in the example below:

```kotlin
@Composable
private fun WhatsAppVideoCallContent(
    call: Call,
    videoCall: Boolean,
    onBackPressed: () -> Unit
) {
    val isCameraEnabled by call.camera.isEnabled.collectAsStateWithLifecycle()
    val isMicrophoneEnabled by call.microphone.isEnabled.collectAsStateWithLifecycle()

    DisposableEffect(key1 = call.id) {
        if (!videoCall) {
            call.camera.setEnabled(false)
        }

        onDispose { call.leave() }
    }

    VideoTheme {
        Box(modifier = Modifier.fillMaxSize()) {
            CallContent(
                call = call,
                onBackPressed = onBackPressed,
                controlsContent = {
                    if (videoCall) {
                        ControlActions(
                            call = call,
                            actions = listOf(
                                {
                                    ToggleCameraAction(
                                        modifier = Modifier.size(52.dp),
                                        isCameraEnabled = isCameraEnabled,
                                        onCallAction = { call.camera.setEnabled(it.isEnabled) }
                                    )
                                },
                                {
                                    ToggleMicrophoneAction(
                                        modifier = Modifier.size(52.dp),
                                        isMicrophoneEnabled = isMicrophoneEnabled,
                                        onCallAction = { call.microphone.setEnabled(it.isEnabled) }
                                    )
                                },
                                {
                                    FlipCameraAction(
                                        modifier = Modifier.size(52.dp),
                                        onCallAction = { call.camera.flip() }
                                    )
                                },
                                {
                                    LeaveCallAction(
                                        modifier = Modifier.size(52.dp),
                                        onCallAction = { onBackPressed.invoke() }
                                    )
                                }
                            )
                        )
                    } else {
                        ControlActions(
                            call = call,
                            actions = listOf(
                                {
                                    ToggleMicrophoneAction(
                                        modifier = Modifier.size(52.dp),
                                        isMicrophoneEnabled = isMicrophoneEnabled,
                                        onCallAction = { call.microphone.setEnabled(it.isEnabled) }
                                    )
                                },
                                {
                                    LeaveCallAction(
                                        modifier = Modifier.size(52.dp),
                                        onCallAction = { onBackPressed.invoke() }
                                    )
                                }
                            )
                        )
                    }
                }
            )
        }
    }
}
```

As demonstrated in the code above, you can access and control various physical device components, including the camera, microphone, and speakerphone. You can observe their status and manually enable or disable them based on your requirements.

For more information, check out the Camera & Microphone and Control Actions documentation.
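The audio and video branches above differ only in which actions they include. As a minimal sketch of that selection logic, separated from the Compose UI, the list could be built conditionally. The `ControlAction` enum and `controlActionsFor` function are hypothetical names introduced only for illustration; they are not part of the Stream SDK.

```kotlin
// Hypothetical labels for the control buttons used in the call screen above.
enum class ControlAction { ToggleCamera, ToggleMicrophone, FlipCamera, LeaveCall }

// Returns the actions to show, in display order, for a video or audio-only call.
fun controlActionsFor(videoCall: Boolean): List<ControlAction> = buildList {
    if (videoCall) add(ControlAction.ToggleCamera)
    add(ControlAction.ToggleMicrophone)
    if (videoCall) add(ControlAction.FlipCamera)
    add(ControlAction.LeaveCall)
}
```

Building the list in one place keeps the shared microphone and leave actions from being declared twice, at the cost of a slightly less literal mapping to the two call styles.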

Finally, you can finish setting up the video call screen by joining a call and observing the UI states during the joining process:

```kotlin
@Composable
fun WhatsAppVideoCall(
    id: String,
    videoCall: Boolean,
    viewModel: WhatsAppVideoCallViewModel = hiltViewModel()
) {
    val uiState by viewModel.videoUiSate.collectAsStateWithLifecycle()

    LaunchedEffect(key1 = id) {
        viewModel.joinCall(type = "default", id = id.replace(":", ""))
    }

    when (uiState) {
        is WhatsAppVideoUiState.Success ->
            WhatsAppVideoCallContent(
                call = (uiState as WhatsAppVideoUiState.Success).data,
                videoCall = videoCall,
                onBackPressed = { viewModel.navigateUp() }
            )

        is WhatsAppVideoUiState.Error -> WhatsAppVideoCallError()

        else -> WhatsAppVideoLoading()
    }
}
```
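The `id.replace(":", "")` expression above strips colons from the incoming ID before it is used as a call ID. Assuming the ID originates from a chat channel identifier of the form `type:id` (an assumption; the article does not show the caller), the transformation can be sketched in isolation. `toCallId` is a hypothetical helper name, not a function from the project or the SDK.

```kotlin
// Hypothetical helper mirroring the id.replace(":", "") expression used above.
// It removes every colon so the result contains no separator characters.
fun toCallId(channelId: String): String = channelId.replace(":", "")
```

For example, under that assumption an input such as `messaging:general` would become `messaginggeneral`, while an ID without colons is returned unchanged.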

Real-Time Emoji Reactions

Sending real-time emoji reactions is one of the essential features in modern applications. The Stream Video SDK supports sending reactions seamlessly in both the core and UI components.

The CallContent component already includes reaction animations, and you can send a reaction using call.sendReaction. You can also implement your own reaction dialog that lets users choose which reaction to send, as in the code below:

```kotlin
private data class ReactionItemData(val displayText: String, val emojiCode: String)

private object DefaultReactionsMenuData {
    val mainReaction = ReactionItemData("Raise hand", ":raise-hand:")
    val defaultReactions = listOf(
        ReactionItemData("Fireworks", ":fireworks:"),
        ReactionItemData("Wave", ":hello:"),
        ReactionItemData("Like", ":raise-hand:"),
        ReactionItemData("Dislike", ":hate:"),
        ReactionItemData("Smile", ":smile:"),
        ReactionItemData("Heart", ":heart:")
    )
}

@Composable
internal fun ReactionsMenu(
    call: Call,
    reactionMapper: ReactionMapper,
    onDismiss: () -> Unit
) {
    val scope = rememberCoroutineScope()
    val modifier = Modifier
        .background(
            color = VideoTheme.colors.barsBackground,
            shape = RoundedCornerShape(2.dp)
        )
        .wrapContentWidth()
    val onEmojiSelected: (emoji: String) -> Unit = {
        sendReaction(scope, call, it, onDismiss)
    }

    Dialog(onDismiss) {
        Card(
            modifier = modifier.wrapContentWidth(),
            backgroundColor = VideoTheme.colors.barsBackground
        ) {
            Column(Modifier.padding(16.dp)) {
                Row(horizontalArrangement = Arrangement.Center) {
                    ReactionItem(
                        modifier = Modifier
                            .background(
                                color = VideoTheme.colors.appBackground,
                                shape = RoundedCornerShape(2.dp)
                            )
                            .fillMaxWidth(),
                        textModifier = Modifier.fillMaxWidth(),
                        reactionMapper = reactionMapper,
                        reaction = DefaultReactionsMenuData.mainReaction,
                        onEmojiSelected = onEmojiSelected
                    )
                }

                FlowRow(
                    horizontalArrangement = Arrangement.Center,
                    maxItemsInEachRow = 3,
                    verticalArrangement = Arrangement.Center
                ) {
                    DefaultReactionsMenuData.defaultReactions.forEach {
                        ReactionItem(
                            modifier = modifier,
                            reactionMapper = reactionMapper,
                            onEmojiSelected = onEmojiSelected,
                            reaction = it
                        )
                    }
                }
            }
        }
    }
}

@Composable
private fun ReactionItem(
    modifier: Modifier = Modifier,
    textModifier: Modifier = Modifier,
    reactionMapper: ReactionMapper,
    reaction: ReactionItemData,
    onEmojiSelected: (emoji: String) -> Unit
) {
    val mappedEmoji = reactionMapper.map(reaction.emojiCode)
    Box(
        modifier = modifier
            .clickable { onEmojiSelected(reaction.emojiCode) }
            .padding(2.dp)
    ) {
        Text(
            textAlign = TextAlign.Center,
            modifier = textModifier.padding(12.dp),
            text = "$mappedEmoji ${reaction.displayText}",
            color = VideoTheme.colors.textHighEmphasis
        )
    }
}

@Preview
@Preview(uiMode = Configuration.UI_MODE_NIGHT_YES)
@Composable
private fun ReactionMenuPreview() {
    VideoTheme {
        StreamMockUtils.initializeStreamVideo(LocalContext.current)
        ReactionsMenu(
            call = mockCall,
            reactionMapper = ReactionMapper.defaultReactionMapper(),
            onDismiss = { }
        )
    }
}
```
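Each ReactionItem above builds its label by passing the emoji code through the mapper and prefixing the display text with the result. The sketch below isolates that step in plain Kotlin. `EmojiMapper`, `sketchMapper`, and `reactionLabel` are hypothetical names, and the code-to-emoji table is an assumption chosen for illustration; the SDK's default mapper may use different glyphs.

```kotlin
// Hypothetical stand-in for the SDK's ReactionMapper: turns an emoji code into a glyph.
fun interface EmojiMapper {
    fun map(emojiCode: String): String
}

// Assumed code-to-emoji table, for illustration only.
val sketchMapper = EmojiMapper { code ->
    when (code) {
        ":fireworks:" -> "🎉"
        ":hello:" -> "👋"
        ":raise-hand:" -> "✋"
        ":heart:" -> "❤️"
        else -> code // unknown codes fall through unchanged
    }
}

// Builds the label in the same "<emoji> <display text>" form used by ReactionItem.
fun reactionLabel(mapper: EmojiMapper, displayText: String, emojiCode: String): String =
    "${mapper.map(emojiCode)} $displayText"
```

Keeping the mapping behind a small interface means the menu only deals in emoji codes, so the glyphs can be swapped without touching the dialog layout.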

Once you partially compile the ReactionsMenu composable function, you’ll see the preview result below in Android Studio:

Finally, you can complete the call screen by adding these reaction dialog components inside the WhatsAppVideoCallContent composable function.

```kotlin
@Composable
private fun WhatsAppVideoCallContent(
    ..
) {
    var isShowingReactionDialog by remember { mutableStateOf(false) }

    VideoTheme {
        Box(modifier = Modifier.fillMaxSize()) {
            CallContent(
                call = call,
                controlsContent = {
                    ControlActions(
                        call = call,
                        actions = listOf(
                            {
                                ReactionAction(
                                    modifier = Modifier.size(52.dp),
                                    onCallAction = { isShowingReactionDialog = true }
                                )
                            },
                            ..
                        )
                    )
                }
            )

            if (isShowingReactionDialog) {
                ReactionsMenu(
                    call = call,
                    reactionMapper = ReactionMapper.defaultReactionMapper(),
                    onDismiss = { isShowingReactionDialog = false }
                )
            }
        }
    }
}
```

Once you've built the project, you'll see the final result below:

Test Multiple Participants Joining on Stream Dashboard

Stream offers a convenient method for testing multiple participants joining a video call on your Stream Dashboard. To do this, navigate to your dashboard and select the Video & Audio tab from the left-hand menu. You'll be directed to a screen that looks like the one below:

Next, click the Create Call button, and you'll be directed to the video call screen, where you'll find various details about the call, including the call ID and call type. Take note of the call ID and join the call from your Dashboard.

Lastly, replace the call ID in your Android Studio project with the one you noted from the Dashboard, then run the project.

```kotlin
val call = streamVideo.call(type = "default", id = CALL_ID_FROM_DASHBOARD)
```

After successfully joining the call from your app, you'll observe the result below:

Wrapping Up

In this post, we explored how to integrate video calling features into the WhatsApp Clone Compose project using Stream’s versatile Compose Video SDK. Try the Compose Video Tutorial or check out the open-source projects below on GitHub:

- Twitch Clone Compose: Twitch clone project demonstrates modern Android development built with Jetpack Compose and Stream Chat/Video SDK for Compose.

- Dogfooding: Dogfooding is an example app that demonstrates Stream Video SDK for Android with modern Android tech stacks, such as Compose, Hilt, and Coroutines.

- Meeting Room Compose: A real-time meeting room app built with Jetpack Compose to demonstrate video communications.

You can find the author of this article on Twitter @github_skydoves or GitHub if you have any questions or feedback. If you’d like to stay up to date with Stream, follow us on Twitter @getstream_io for more great technical content.

As always, happy coding!

— Jaewoong