Welcome to the Automated Moderation part of the Building a Twitter Clone With SwiftUI in a Weekend tutorial series.

This part demonstrates how to integrate Stream's Auto-Moderation (AutoMod) tool into one-on-one or group chat for a safe direct and group messaging experience. You can follow the steps in this article to moderate the flow of content in community-focused apps with minimal effort.

Prerequisites

Integrating Stream's Auto-Moderation solution into your app requires a few prerequisites:

- Existing Stream Chat customers: AutoMod is already available in your dashboard.

- Get your AutoMod dashboard account: If you are not an existing Stream Chat user, contact our AI team to get started.

- Stream Chat iOS: This article shows how the Auto-Moderation solution integrates with our real-time iOS chat messaging SDK.

Getting Started

This article extends the TwitterClone app. You can download and explore the related codebase in our repository on GitHub, or you can follow along with your own SwiftUI application. To test the Xcode project, you need to run Stream's simple Node integration sample. When you clone the sample TwitterClone app, the TwitterClone folder contains all the iOS code. The project uses Tuist for project automation, so install Tuist before you run the Xcode project. After installing Tuist, open your favorite command-line tool and run `tuist fetch` in the TwitterClone folder. Then run `tuist generate` on your fresh copy of the project. This command generates the project files and launches Xcode.

For a step-by-step guide to setting up Tuist, check out Real-World Xcode Project Using Tuist and Scaling Your Xcode Projects With Tuist.

Why Choose Stream Auto-Moderation?

There are several reasons to choose Stream's Auto-Moderation solution. The key reasons to integrate AutoMod into your apps are:

- Cross-platform: It works with apps built with Swift, React, Kotlin, Dart, and more.

- It helps detect and report harmful and abusive content in community-based apps before users see it.

- It automatically flags unwanted messages and reports them in an intuitive, easy-to-use dashboard.

- AutoMod runs on enterprise-scale infrastructure, so you do not have to worry about reliability or scalability.

- It helps combat commercial spam (cSpam), which can distract your app's user communities, without extra work from your moderation team.

- Behavioral nudges: When a user tries to send a message that AutoMod considers offensive, AutoMod nudges the user in real time to revise it before it reaches others.

- Seamless integration: It fits into your existing application. If you prefer, you can also use it as a standalone moderation solution.

Content moderation is only one part of the equation. To keep your platform safe and compliant, you also need clear legal and policy frameworks. Learn how to build those in our Guide on Content Compliance.

How Does AutoMod Work?

Using the AutoMod API, you can moderate messages across the different channels of your community-based app.

Suppose a user in a group messaging channel of your community platform tries to send an offensive message to the channel. AutoMod identifies the content and immediately acts on it. For example, it can flag, bounce, block, or report the message.
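The minimal app-side sketch below makes these outcomes concrete. The names `ModerationAction` and `isDelivered(after:)` are hypothetical and are not part of the Stream Chat SDK. The comments describe our assumptions about each action: a flagged message is still delivered but queued for review, while bounced and blocked messages never reach other members. Reporting does not change delivery in this model, so the sketch leaves it out.

```swift
// Hypothetical app-side model of AutoMod outcomes.
// These names are illustrative and NOT part of the Stream Chat SDK.
enum ModerationAction {
    case flag    // assumed: delivered, but queued for moderator review
    case bounce  // assumed: returned to the sender so they can rephrase it
    case block   // assumed: never shown to other channel members
}

/// Returns whether other members would see a message, given the action
/// AutoMod took on it (`nil` means the message passed moderation).
func isDelivered(after action: ModerationAction?) -> Bool {
    switch action {
    case .none, .some(.flag):
        return true
    case .some(.bounce), .some(.block):
        return false
    }
}

// Usage
print(isDelivered(after: nil))      // true
print(isDelivered(after: .flag))    // true
print(isDelivered(after: .bounce))  // false
```

Modeling "no action" as `nil` keeps messages that pass moderation separate from every moderated outcome.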

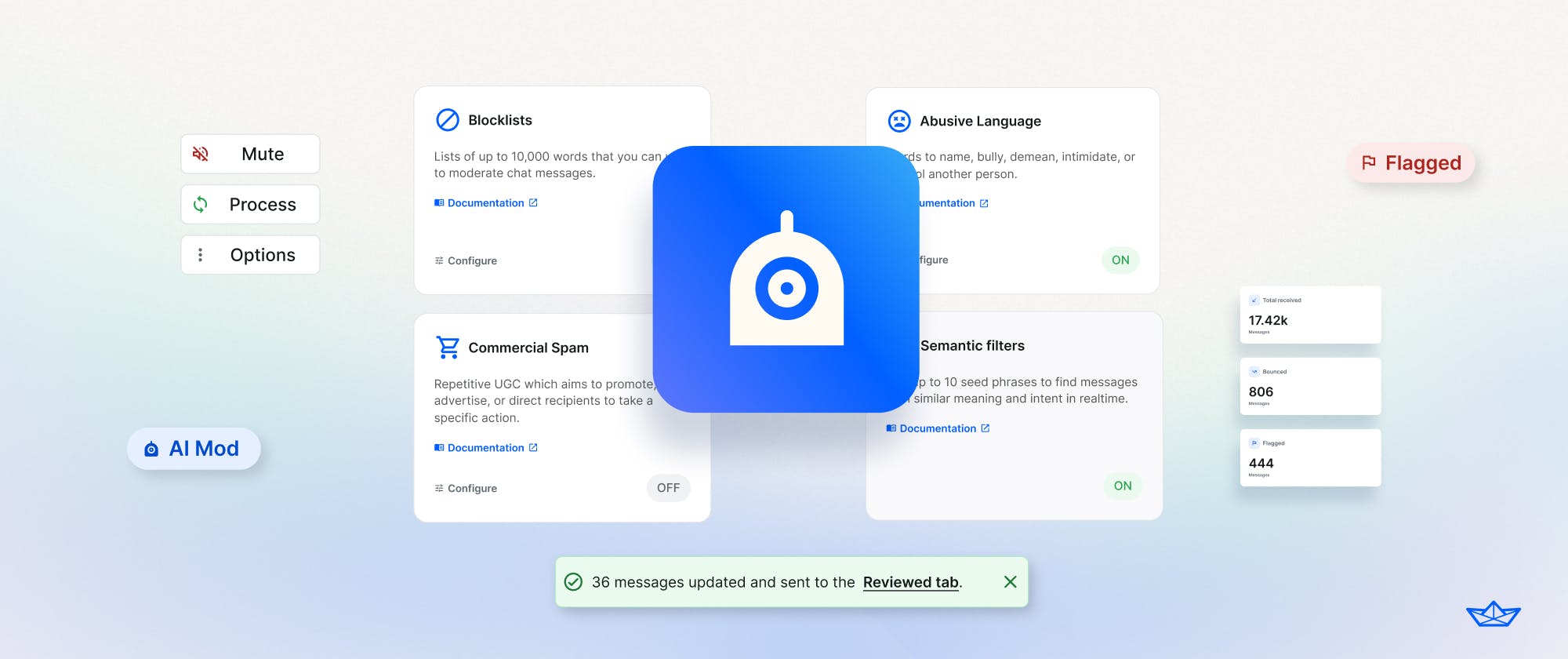

Overview of the Auto-Moderation Dashboard

The AutoMod dashboard is a graphical content moderation and management UI for your content support agents. It helps them ensure that content posted in your app or community platform follows predefined moderation rules. You can use it to moderate content for platforms such as online live events, marketplaces, crypto, gaming, dating, and social networks.

When the Auto-Moderation system detects messages containing harmful words or phrases, it organizes and displays them under the Overview category on your dashboard, as shown in the image above. In the Overview section, your moderation administrators can see, compare, and review the total number of bounced and flagged messages received during a particular period.

The Overview section also lets your moderation team filter the bounced and flagged messages it displays by a custom date range.

To set a custom date range, click Custom and use the calendar to select the time range of the messages you want to see, as demonstrated below.

Channel Types

Under Channel Type on the dashboard, you can define the channel types your community platform supports, such as one-on-one chat (direct messaging), drop-in audio, live streaming, and dating.

Reviewing Messages

In the Messages tab, your moderation team can see all blocked messages. Under More options, they can take further actions, such as banning users and deleting messages, as demonstrated above.

Overview of the Blocklists

The Blocklist category of the dashboard allows moderators to add, configure, and remove lists for blocking and flagging harmful words.

To block specific words, click Add Blocklist to register a new list of offensive and abusive words using the following form.

The image below shows a list of words blocked in the system. This feature is essential in social apps, for example. When you block or mute offensive words, people do not see them in their timelines and notifications.

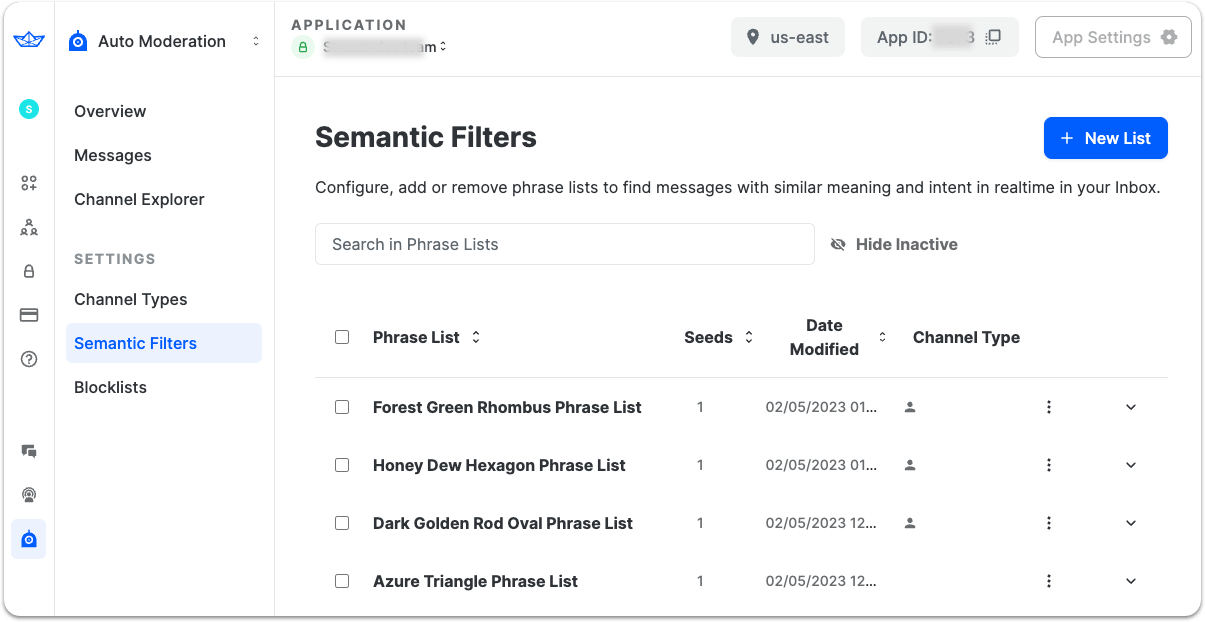
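AutoMod applies blocklists on Stream's side, so you do not need to implement the matching yourself. The sketch below only illustrates the idea: it checks a message against a blocklist by comparing lowercase words. The `Blocklist` type, its `matches(_:)` method and the list name are hypothetical. AutoMod's real matching is likely more involved, for example in how it handles word variants.

```swift
import Foundation

// Hypothetical illustration of blocklist matching; NOT Stream's implementation.
struct Blocklist {
    let name: String
    let words: Set<String>

    init(name: String, words: [String]) {
        self.name = name
        // Store entries lowercased so matching is case-insensitive.
        self.words = Set(words.map { $0.lowercased() })
    }

    /// Returns the blocked words found in `message`, in order of appearance.
    func matches(_ message: String) -> [String] {
        // Split on anything that is not a letter or digit, so punctuation
        // such as "!" or "," does not hide a blocked word.
        let tokens = message.lowercased()
            .components(separatedBy: CharacterSet.alphanumerics.inverted)
            .filter { !$0.isEmpty }
        return tokens.filter { words.contains($0) }
    }
}

let list = Blocklist(name: "twitterclone-blocklist", words: ["Spam", "scam"])
print(list.matches("This is a SCAM, not spam!"))  // ["scam", "spam"]
```

Because the sketch matches whole words, a message containing "spammer" would not match the entry "spam".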

Semantic Filters: Add, Configure, and Remove Phrase Lists

The semantic filters feature helps determine the intended meaning of incoming messages in real time. With the dashboard's Semantic Filters, you can specify a list of phrases you want to filter out. Click New List to add a new phrase list, as shown below.

Add a New Filter

AutoMod allows moderators to define a group of phrases (a Phrase List) as message intents that should be detected automatically. Each phrase must not be too short and must not exceed 120 words.

On the New Phrase List screen, use the form to specify the name, phrases, and channel type for the new list you want to add.

The image below demonstrates adding four phrases to the TwitterClone PhraseList. After defining all the phrases, click Submit to save the list. Note: You can use the Channel type field to select multiple channel types for a list.

You can add as many phrases as you want to your phrase list. The TwitterClone PhraseList defines one phrase in each of four categories:

- Violates our platform's rules: “Does anyone know a website that is tracking Elon Musk's private jet?”

- Platform Circumvention (Deceptive message): “Send me the Mastercard number”

- Commercial Spam (cSpam): “Click this link to order visionPro for €99”

- Hate speech: “How do we kick him out of the race?”
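You might keep a copy of the TwitterClone phrase list above as plain data, for example to track your moderation configuration in source control. The sketch below shows one way to do that. `PhraseList` and its `isWithinLengthLimit` check are hypothetical, and grouping the phrases by category is only an assumption made for readability. The check enforces just the 120-word maximum (plus non-empty phrases), because no minimum length is specified. `"messaging"` is one of Stream Chat's built-in channel types; replace it with the types you configured.

```swift
// Hypothetical representation of a phrase list; NOT a Stream SDK type.
struct PhraseList {
    let name: String
    let channelTypes: Set<String>    // a list can apply to several channel types
    let phrases: [String: [String]]  // category label -> phrases (grouping is for readability only)

    /// True when every phrase is non-empty and at most 120 words long.
    var isWithinLengthLimit: Bool {
        phrases.values.joined().allSatisfy { phrase in
            let wordCount = phrase.split(whereSeparator: { $0.isWhitespace }).count
            return wordCount > 0 && wordCount <= 120
        }
    }
}

let twitterClonePhraseList = PhraseList(
    name: "TwitterClone PhraseList",
    channelTypes: ["messaging"],
    phrases: [
        "Violates our platform's rules": ["Does anyone know a website that is tracking Elon Musk's private jet?"],
        "Platform Circumvention (Deceptive message)": ["Send me the Mastercard number"],
        "Commercial Spam (cSpam)": ["Click this link to order visionPro for €99"],
        "Hate speech": ["How do we kick him out of the race?"],
    ]
)

print(twitterClonePhraseList.isWithinLengthLimit)  // true
```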

Integrating Phrase Lists With Direct Chat in a Sample Messaging App

By integrating the above phrase lists into direct chat messaging, you help foster a safer and more respectful online space where everyone can communicate freely without fear of offensive, abusive, or harmful content.

Follow the steps below to get up and running with the AutoMod API:

- Apply for your API key by contacting our team if you are not an existing Stream Chat user.

- Implement authentication using your server-side token so that your back-end infrastructure can send requests to the Stream Chat API.

- Select and implement the endpoints your application needs.

- Use the RESTful API to add AutoMod support to your app.
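The Swift function below is a rough sketch of the authentication step. It builds a server-side request with Foundation. It assumes Stream's REST conventions as we understand them: the `chat.stream-io-api.com` base URL, an `api_key` query parameter, the JWT in the `Authorization` header and a `Stream-Auth-Type: jwt` header. Verify these against the REST API documentation before relying on them.

The `path` argument and the POST method are placeholders, because both depend on the endpoint you implement. The token must be a server-side JWT generated with your API secret, which this sketch does not create.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLRequest lives here on Linux
#endif

/// Builds an authenticated server-side request.
/// Assumptions: base URL and header names follow Stream's REST conventions;
/// `path` is a placeholder for whichever endpoint your back end calls.
func makeServerRequest(path: String,
                       apiKey: String,
                       serverToken: String,
                       jsonBody: Data) -> URLRequest? {
    var components = URLComponents()
    components.scheme = "https"
    components.host = "chat.stream-io-api.com"
    components.path = path  // must start with "/", otherwise `url` is nil
    components.queryItems = [URLQueryItem(name: "api_key", value: apiKey)]
    guard let url = components.url else { return nil }

    var request = URLRequest(url: url)
    request.httpMethod = "POST"  // assumed; depends on the endpoint
    request.setValue(serverToken, forHTTPHeaderField: "Authorization")
    request.setValue("jwt", forHTTPHeaderField: "Stream-Auth-Type")
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    request.httpBody = jsonBody
    return request
}

// Usage with placeholder values. Never ship your API secret or server token in the iOS app.
let body = Data(#"{"example":true}"#.utf8)
if let request = makeServerRequest(path: "/your-endpoint",
                                   apiKey: "YOUR_API_KEY",
                                   serverToken: "SERVER_SIDE_JWT",
                                   jsonBody: body) {
    print(request.url?.absoluteString ?? "")
    // https://chat.stream-io-api.com/your-endpoint?api_key=YOUR_API_KEY
}
```

Keeping request construction in one function puts every authentication detail in a single place, which makes it easy to adjust once you confirm the exact requirements.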

The next section shows how the Semantic Filters engine of AutoMod evaluates messages, such as "Luke Skywalker is Darth", against a phrase list.

Once you have set up auto-moderation rules for a phrase list, the server returns an error whenever a message violates one of them. For example, sending "Does anyone know a website that is tracking Elon Musk's private jet?" in a chat could produce the error "The message violates our platform's rules".
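On the client, you usually want to turn that error into text the user can read. The sketch below decodes an error body and picks the text for an alert. It assumes the body is JSON with a `message` field, which is our understanding of Stream's REST error format. The `APIErrorBody` type and the `alertText(for:)` helper are hypothetical. If you use the Stream Chat SDK, you would normally handle the error the SDK reports instead of decoding raw JSON.

```swift
import Foundation

// Hypothetical shape of a REST error body (assumed field: message).
struct APIErrorBody: Decodable {
    let message: String
}

/// Returns the text to show in an alert for a rejected message,
/// falling back to a generic notice if the body cannot be decoded.
func alertText(for errorData: Data) -> String {
    guard let decoded = try? JSONDecoder().decode(APIErrorBody.self, from: errorData),
          !decoded.message.isEmpty else {
        return "Your message could not be sent."
    }
    return decoded.message
}

// Usage with an illustrative payload.
let payload = Data(#"{"message": "The message violates our platform's rules"}"#.utf8)
print(alertText(for: payload))  // The message violates our platform's rules
```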

You can, for example, check how your app handles the moderation rule with a UI test, as shown below.

```swift
WHEN("user sends a message that does not pass moderation") {
    // Don't wait for the message to appear: moderation is expected to reject it.
    userRobot.sendMessage(messageWithForbiddenContent, waitForAppearance: false)
}
```

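The test step above simulates a user sending a message that fails a specific moderation rule. Passing `waitForAppearance: false` keeps the step from waiting for the message to show up in the channel, because moderation is expected to reject it.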

Testing Phrase Lists in Direct Messaging Chat

After adding a set of phrase lists in your AutoMod dashboard, you can test them in the app where the phrase lists are active. The example below runs both the moderated phrases added in the previous section and some unmoderated messages through semantic filtering. As the preview below shows, the user can successfully send unmoderated text such as “Hi” and “Nice”. The phrase “Does anyone know a website that is tracking Elon Musk's private jet?” is moderated, so AutoMod rejects it as forbidden and the app displays an alert to the user.

Conclusion

In this post, you learned why moderating the flow of content matters in community-based apps and platforms, such as dating, gaming, one-on-one and group chat, marketplaces, and social media. You also learned how to integrate our automated moderation solution to check messages against semantic filters. AutoMod also supports commercial spam filtering and platform circumvention detection. Check out the links below to learn more.