For an organization, it means dedicating significant time and resources to detecting harm and keeping it from reaching users, rather than to building the product. At companies like Facebook, this spending can reach 5% of revenue; in verticals such as marketplaces, it can reach 10%.

At Stream, we aim to make it as simple as possible for our customers to detect these harms and protect their users from them. In this post, we'll briefly examine how to activate "AutoMod" to detect harmful content and stop it before it reaches users.

Stream Auto-Moderation is a no-code moderation solution that accelerates platform trust and safety. Driven by AI, Auto-Moderation proactively catches harmful content before it reaches end users and reduces moderator workload through a moderator-centric product experience.

If you want to follow along and try the system, you are welcome to create a free chat trial on our website and reach out to our team to activate Auto-Moderation for your account.

Setup

To get started, let's create a new project on the Dashboard. Feel free to give your project any name you like.

💡 Note: Once you select a server location, it is fixed and cannot be changed later.

On the dashboard, look for the “Moderation” tab on the left. If you are missing this tab, you may need to request access to moderation from the team.

Since your project is empty, the moderation page will not have any data to display.

Let’s add some content and configure a few rules to test in our hypothetical application.

We can use Stream’s data generator to add conversations to our application for testing purposes. Once you supply your application’s “App ID”, “API Key” and “User ID”, the tool will automatically generate some conversations for you.

💡 Note: Copy the generated User ID; we will use it later.

Configuring our Engine

Before we dive into configuring the dashboard, let’s first take a minute to look at the types of harms Stream can guard against.

Stream’s AutoModeration tool can detect and protect against three types of harm:

- Platform Circumvention: AutoMod is trained to catch people trying to solicit transactions or take your user base off the platform to bypass platform rules or guidelines.

- cSpam: Commercial Spam (cSpam) detection is engineered to detect solicitation, advertising, begging, and similar intentions in conversations. cSpam protection works regardless of whether the solicitor includes a URL, phone number, or email address.

- Intent-Based Moderation: Our newest and most sophisticated harm engine, Intent-Based Moderation, is designed to detect the intent behind messages. Unlike some third-party harm engines, it does not rely solely on exact word matches. Instead, it is trained on a list of phrases and learns to detect those phrases and their meanings in conversations. For example, Intent-Based Moderation can be trained to detect spoilers, academic cheating, harassment, and more.
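The following deliberately simplified sketch shows the difference between exact phrase matching and scoring a message against training phrases. It is not how Stream's model works. Jaccard word overlap stands in for a learned semantic model. The function names, the training phrase, and the 0.25 threshold are all hypothetical.

```python
# Toy contrast: exact-phrase blocklist vs. scoring against training phrases.
# Jaccard word overlap is a stand-in for a real semantic model (hypothetical).
import re


def tokens(text: str) -> set[str]:
    """Lowercased word set; apostrophes are kept so "let's" stays one token."""
    return set(re.findall(r"[a-z']+", text.lower()))


def exact_match(message: str, blocklist: list[str]) -> bool:
    """Flag only if a blocked phrase appears verbatim (case-insensitive)."""
    msg = message.lower()
    return any(phrase.lower() in msg for phrase in blocklist)


def similarity(a: str, b: str) -> float:
    """Jaccard similarity of the two word sets, from 0.0 to 1.0."""
    ta, tb = tokens(a), tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def phrase_match(message: str, training: list[str], threshold: float = 0.25) -> bool:
    """Flag if the message is similar enough to any training phrase."""
    return any(similarity(message, p) >= threshold for p in training)


training = ["let's go get some ice cream after school"]
message = "wanna grab ice cream after class?"

print(exact_match(message, training))   # False: no verbatim match
print(phrase_match(message, training))  # True: 3 shared words out of 11 total
```

The exact-phrase filter misses the paraphrase, but scoring against the training phrase catches it. A real intent model is meant to go further. For example, "fancy a scoop of gelato?" shares no words with the training phrase, so this toy scores it 0.0, while a semantic model is designed to recognize that kind of paraphrase.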

For the purpose of this tutorial, we will focus on setting up Intent-Based Moderation and flagging messages with it. The setup process differs for the other engines.

Configuring training phrases

Intent-Based Moderation detects intent in messages based on moderator-configured semantic filters. To configure these filters, navigate to the “Semantic Filters” tab on the moderation dashboard and select “New List”.

You will then be prompted to name the list and enter at least five phrases to train the model. For this post, we use an example built around ice cream, but feel free to experiment with different phrases. We recommend that each initial training phrase contain at least five to six words.
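You can check the list requirements above before you paste phrases into the Dashboard. Below is a minimal local sketch, not a Stream tool. `check_training_phrases` and the sample ice cream phrases are hypothetical. The thresholds simply mirror this post's guidance: at least five phrases, each with roughly five or more words. The Dashboard applies its own validation.

```python
# Hypothetical local pre-check for a semantic filter list (not part of any
# Stream SDK). Thresholds mirror this post's guidance.
MIN_PHRASES = 5
MIN_WORDS_PER_PHRASE = 5


def check_training_phrases(phrases: list[str]) -> list[str]:
    """Return human-readable problems; an empty list means the list looks ready."""
    problems: list[str] = []
    # Drop blank entries and surrounding whitespace.
    cleaned = [p.strip() for p in phrases if p.strip()]
    if len(cleaned) < MIN_PHRASES:
        problems.append(f"need at least {MIN_PHRASES} phrases, got {len(cleaned)}")
    seen: set[str] = set()
    for phrase in cleaned:
        # Case-insensitive duplicates add no new training signal.
        key = phrase.lower()
        if key in seen:
            problems.append(f"duplicate phrase: {phrase!r}")
        seen.add(key)
        word_count = len(phrase.split())
        if word_count < MIN_WORDS_PER_PHRASE:
            problems.append(f"too short ({word_count} words): {phrase!r}")
    return problems


# Illustrative training phrases for the ice cream example (hypothetical).
ice_cream_phrases = [
    "let's go get some ice cream after school",
    "I could really go for a scoop of vanilla",
    "which ice cream shop has the best flavors",
    "do you want to share a chocolate sundae",
    "nothing beats a cone on a hot day",
]

print(check_training_phrases(ice_cream_phrases))  # []
print(check_training_phrases(["ice cream", "gelato please"]))
```

The second call reports three problems: the list has only two phrases, and both phrases are too short.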

When you are ready, select the channel type “messaging” in the drop-down menu on the right and select “Submit”.

💡 Note: It may take a few minutes for the changes to propagate. We recommend waiting at least five minutes before testing.

To test our changes, we can open a sample project using the API key and User ID from earlier and send a few messages in a channel.

If all goes according to plan, we should see the message referencing our delicious treat be flagged by Stream’s AutoModeration.

https://youtube.com/shorts/iv8A4Bl-Y5A

Moderation Dashboard

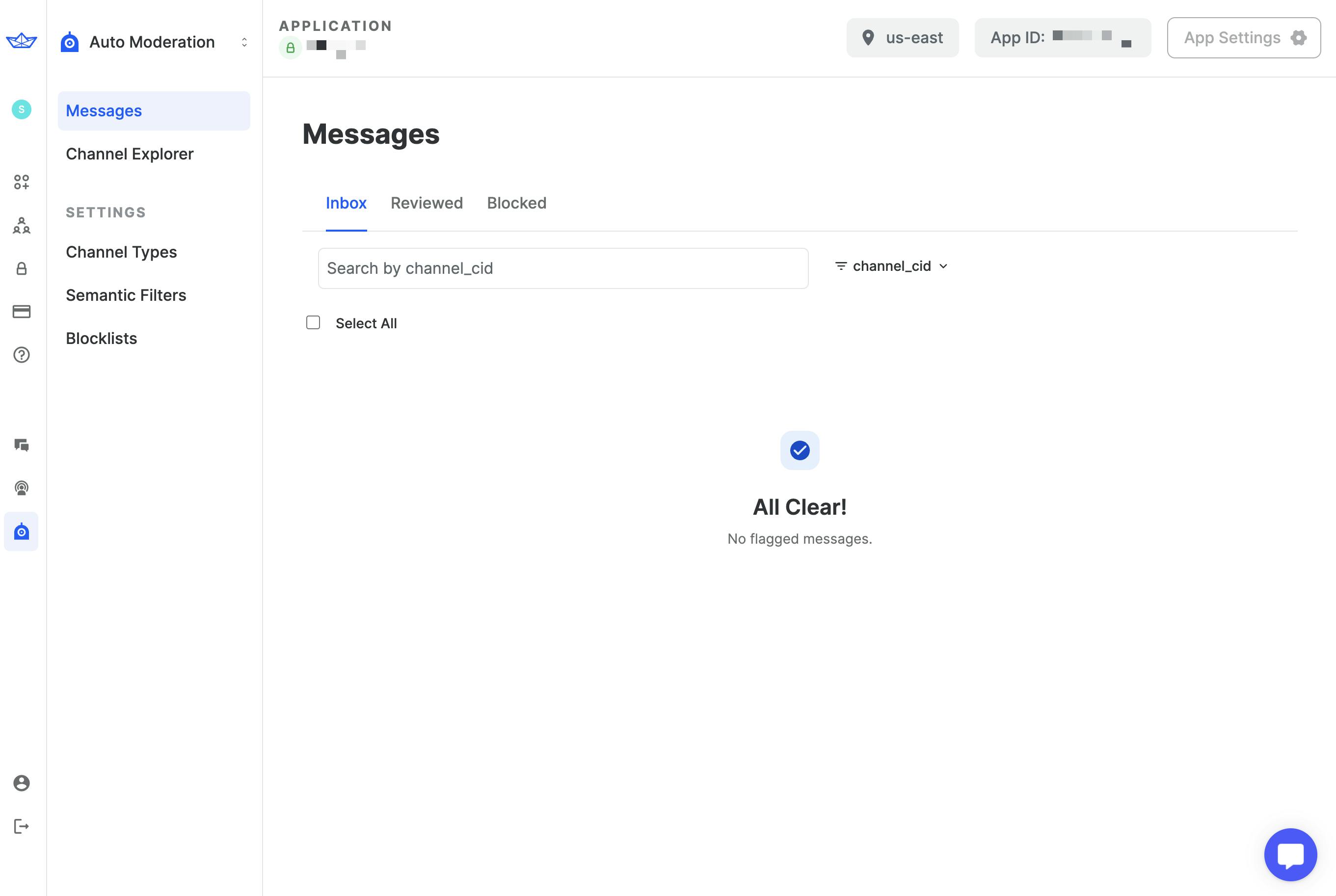

Navigating back to the “Messages” tab of the Moderation dashboard, we can now see the messages flagged by Intent-Based Moderation appear in the main view.

From this screen, moderators can review flagged messages and take various actions on both the message and the user who sent it.

At a glance, moderators can see a preview of the message. They can take quick actions, such as applying a 24-hour channel ban or unflagging the message. They can also take more severe actions, such as a global ban or deleting the message entirely.

Should a moderator need more context before deciding on an action, such as the conversation in which the message was sent, they can select the flagged message and use the details pane on the right.

From this view, moderators can see the most recent messages sent in the channel and take various actions on the message. The channel ID and other metadata are also available for review on this screen.

Reviewed messages and blocked messages have their own tabs on the moderation dashboard. This lets your trust and safety team quickly review previous instances of flagged messages and keep their review process consistent.

Our models can also detect harm across languages. Whether your app is localized for Spanish, Arabic, or Hindi, they can identify harmful messages.

Should the need arise, the moderation dashboard also includes a channel explorer. Trust and safety teams can use it to quickly search channels by ID, member, or type, then flag and review harmful messages before they cause irreparable harm to users.

Wrapping up

Stream’s AutoModeration tools help customers protect their users from malicious actors looking to exploit them for personal gain. We designed and built these tools to seamlessly integrate with Stream’s Chat product and be as simple as possible for teams to onboard and configure.

To get started, create a free account on Stream’s website and enroll in our moderation program directly on the Dashboard. Teams can also set up a call with our Moderation Product Manager to learn more about the service and how it can address the needs of their application.