Whether building a social app or managing a brand's online presence, moderation is key to balancing free expression with user safety, brand trust, and legal compliance.

The scale of social media moderation is massive. For example, Meta flagged and removed over 16 million pieces of content between January and March 2024.

Even governments are raising the bar: the EU's Digital Services Act, the UK's Online Safety Act, and new U.S. state laws are creating strict rules for content governance.

Platforms without strong content moderation may open the door to inappropriate speech, misinformation, or harassment.

This article breaks down the benefits of social media moderation, which types of user-generated content require scrutiny, effective methods, best practices, moderation tools, and how to support the people doing this work.

What Is Social Media Moderation?

Social media content moderation is the process of reviewing, filtering, and managing user-generated content (UGC) on platforms like Facebook and Instagram. It also covers brand-managed channels, with the goal of creating a safe, respectful community.

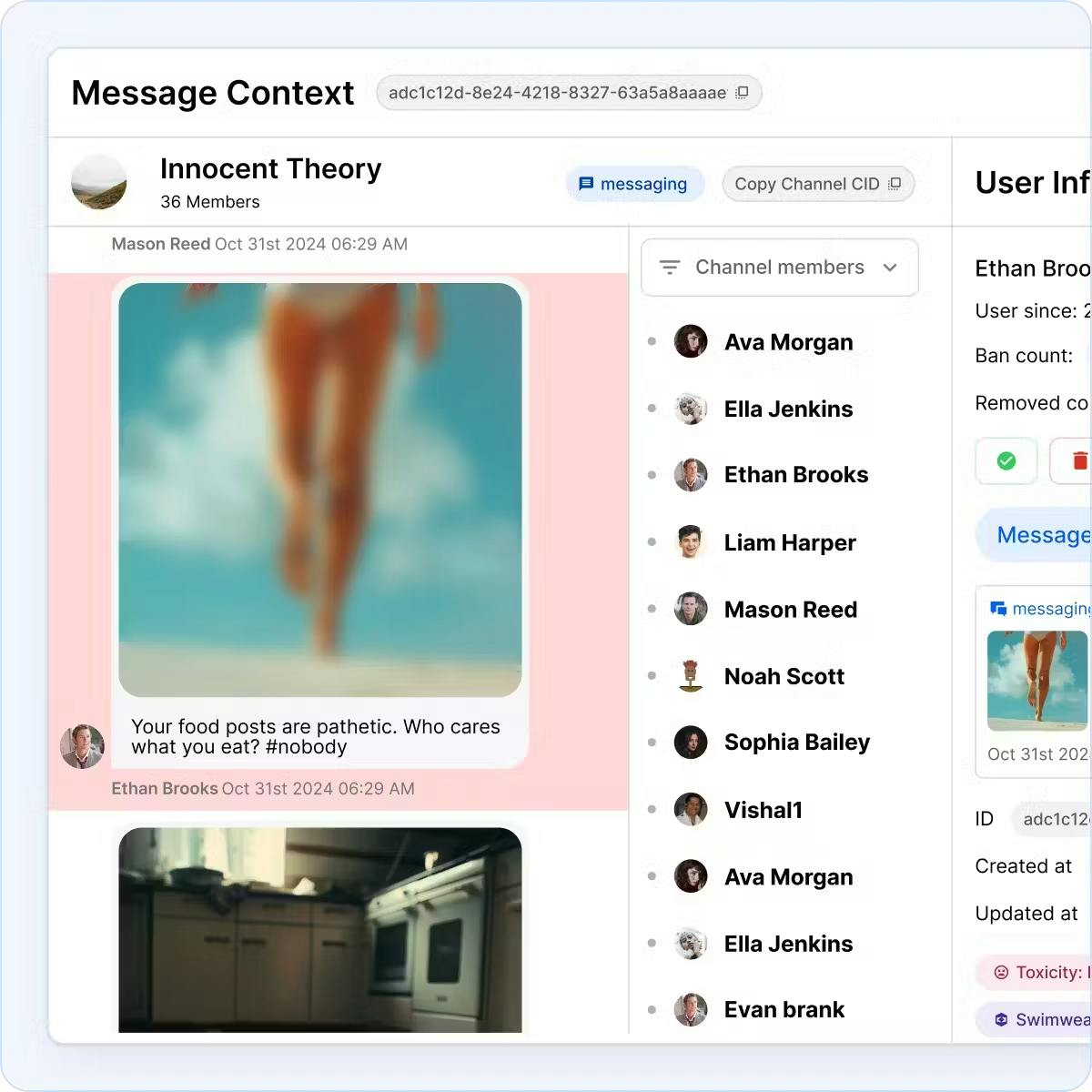

This process involves filtering keywords, flagging suspicious behavior, blurring sensitive content, or limiting visibility, often using a mix of automation and human review. The goal is to reduce harm, enforce guidelines, and maintain community standards without compromising user experience.

Why Is It Important?

Social media moderation is important for many reasons, including:

User Safety

Moderation removes harmful content, violent threats, and abusive comments so people feel safe. Users who feel protected keep returning to the platform, which reduces churn.

Brand and Platform Trust

When a brand overlooks inappropriate posts or comments on its platforms, people take note, and so do advertisers. Staying aware of such content and acting on it promptly signals that the platform takes moderation seriously.

Legal and Regulatory Compliance

Many countries now have strict rules about illegal content. Companies that don't remove harmful content quickly may face lawsuits or heavy fines. Effective moderation helps you stay compliant and address risks before they become serious.

Community Health and Retention

Online communities flourish when members feel a sense of belonging, even amid differing viewpoints. People want to feel heard, respected, and protected, and monitoring and removing harmful UGC makes that possible.

For instance, Instagram's AI nudges users before posting potentially offensive comments. Also, its "Restrict" feature allows users to quietly limit bullies' interactions, reducing harm without escalating conflict.

Preventing the Spread of Harm (Virality)

Detecting and removing UGC that promotes hate, violence, or misinformation keeps it from going viral. Limiting the visibility of such content also reduces algorithmic amplification.

What Types of Content Need Moderation?

UGC isn't limited to text posts. From live video to chat threads, moderators must adapt to various media formats.

Text and Chat

Text and chat moderation involves reviewing comments, tweets, status updates, direct messages, and live chat for hate speech, spam, threats, or misinformation, which can escalate quickly.

Images and Video

Visual content can be harder to control. Harmful material, like graphic violence, may be obvious, or it may be cleverly hidden in memes, backgrounds, or cropped images.

Audio and Voice

Platforms like Clubhouse and X Spaces have introduced voice-based interactions that can carry verbal abuse. Unlike text or video, audio is challenging to scan automatically, and detecting a harmful tone or intent often requires context. Real-time moderation is especially complex here: moderators must act on violations as they happen, often without the buffer of a review delay.

Activity Feeds

Phishing scams and spam posts can appear in activity feeds. For example, a fake image promoting counterfeit goods may tag dozens of random users to widen its reach.

These reflect broader categories. Here are more specific types that platforms and brands must watch for:

-

Nudity and Sexual Content: Includes explicit photos, deepfakes, unsolicited sexual content, or exploitative material.

-

Bullying and Harassment: Personal attacks, dogpiling (mass harassment), intimidation, or targeting behavior.

-

Extremism and Hate: Racist slurs or derogatory, abusive content targeting identity groups.

-

Misinformation and Disinformation: False information shared intentionally (disinformation) or unintentionally (misinformation), such as conspiracy theories.

-

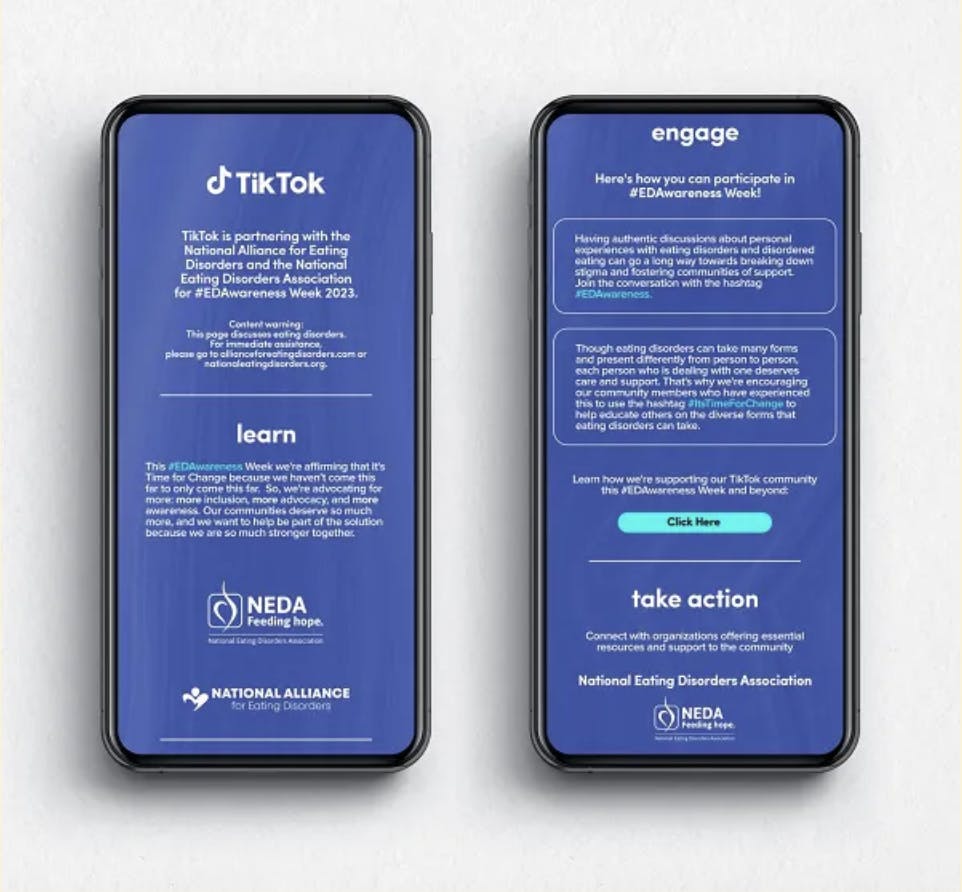

Self-Harm or Suicide Content: Posts or conversations promoting self-injury, glorifying eating disorders, or depicting suicidal ideation.

-

Illegal Content: Child sexual abuse material (CSAM), drug sales, or threats of violence.

Methods of Social Media Moderation

There are several methods for moderating social content. Five of the most widely used are:

1. Pre-Moderation

In this approach, every post is reviewed before it appears publicly. Nothing goes live until someone, either a human or a machine, gives it the green light.

It's especially useful in high-risk environments, like kids' apps, where everything must stay safe and on-message. But it's also resource-heavy. If your platform has millions of users, reviewing everything without causing delays or backlogs is difficult.
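The pre-moderation flow can be sketched in a few lines of Python. This is a minimal, hypothetical illustration: `Post`, `Status`, and `PreModerationQueue` are names invented for the example, and the review decision is passed in directly rather than coming from a real moderator or automated check.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Post:
    post_id: int
    author: str
    text: str
    status: Status = Status.PENDING


class PreModerationQueue:
    """Holds every new post until a reviewer (human or automated) decides."""

    def __init__(self) -> None:
        self._posts: dict[int, Post] = {}

    def submit(self, post: Post) -> None:
        # Every submission starts as PENDING, so nothing is public yet.
        post.status = Status.PENDING
        self._posts[post.post_id] = post

    def pending(self) -> list[Post]:
        return [p for p in self._posts.values() if p.status is Status.PENDING]

    def review(self, post_id: int, approve: bool) -> None:
        # The decision could come from a moderator or an automated check.
        self._posts[post_id].status = Status.APPROVED if approve else Status.REJECTED

    def public_feed(self) -> list[Post]:
        # Only explicitly approved posts are ever shown.
        return [p for p in self._posts.values() if p.status is Status.APPROVED]


queue = PreModerationQueue()
queue.submit(Post(1, "alice", "Hello!"))
queue.submit(Post(2, "bob", "Buy followers here"))
print(len(queue.public_feed()))  # 0: nothing is live before review
queue.review(1, approve=True)
queue.review(2, approve=False)
print([p.post_id for p in queue.public_feed()])  # [1]
```

The key property is that the public feed is built only from approved posts, which is also why a growing `pending()` list translates directly into publishing delays at scale.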

2. Reactive Moderation

In reactive moderation, UGC is published instantly and is sent for review only after someone, such as a user or a moderator, flags or reports it.

It's less resource-intensive than pre-moderation but also riskier. Harmful content might stay up for hours or days before moderators can take action.
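A minimal sketch of reactive moderation, assuming reports are counted per piece of content and deduplicated per user. `ReportTracker` and its `threshold` parameter are hypothetical; the default of 1 mirrors the description above (any single report triggers review), while busier platforms may require several independent reports before queuing content.

```python
from collections import defaultdict


class ReportTracker:
    """Reactive moderation: content stays live until reports reach a threshold."""

    def __init__(self, threshold: int = 1) -> None:
        # threshold=1 means any single report triggers review (hypothetical default).
        self.threshold = threshold
        self._reporters: defaultdict[str, set[str]] = defaultdict(set)
        self.review_queue: list[str] = []

    def report(self, content_id: str, reporter_id: str) -> bool:
        """Record a report; return True if this report sent the content to review."""
        reporters = self._reporters[content_id]
        if reporter_id in reporters:
            return False  # ignore repeat reports from the same user
        reporters.add(reporter_id)
        # Queue exactly once, when the count first reaches the threshold.
        if len(reporters) == self.threshold:
            self.review_queue.append(content_id)
            return True
        return False


tracker = ReportTracker(threshold=2)
tracker.report("post-42", "user-a")  # one report: stays live
tracker.report("post-42", "user-a")  # duplicate: ignored
tracker.report("post-42", "user-b")  # second distinct reporter: queued
print(tracker.review_queue)  # ['post-42']
```

Deduplicating reporters keeps a single user from forcing content into review by reporting it repeatedly, though it does not stop coordinated reporting by many accounts.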

3. Distributed Moderation

This is where the community itself participates to maintain order.

Think of Reddit's upvote/downvote system, where users can push good content to the top and quietly ignore the rest. It sounds democratic, and when it works, it's great.

But it can also be swayed by groupthink or bad actors. What gets downvoted isn't always what's inappropriate; it's sometimes just what's unpopular or contrarian. While distributed reviewing can be helpful, it's rarely enough on its own.

For niche or tight-knit online communities, it's a good first line of defense.
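The sketch below shows a simple net-score rule for distributed moderation: sort comments by upvotes minus downvotes and collapse (not delete) anything below a cutoff. It is a hypothetical illustration, not Reddit's actual ranking algorithm, and the `collapse_below` value is an assumption. Collapsing rather than removing reflects the caveat above: low-scoring content is often just unpopular, not inappropriate.

```python
from dataclasses import dataclass


@dataclass
class Comment:
    comment_id: str
    upvotes: int
    downvotes: int

    @property
    def score(self) -> int:
        return self.upvotes - self.downvotes


def rank_comments(
    comments: list[Comment], collapse_below: int = -5
) -> tuple[list[Comment], list[Comment]]:
    """Sort by net score, then split into visible and collapsed comments."""
    ordered = sorted(comments, key=lambda c: c.score, reverse=True)
    visible = [c for c in ordered if c.score >= collapse_below]
    # Collapsed comments remain available to readers; nothing is deleted.
    collapsed = [c for c in ordered if c.score < collapse_below]
    return visible, collapsed


comments = [Comment("a", 10, 2), Comment("b", 1, 9), Comment("c", 5, 0)]
visible, collapsed = rank_comments(comments)
print([c.comment_id for c in visible])    # ['a', 'c']
print([c.comment_id for c in collapsed])  # ['b']
```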

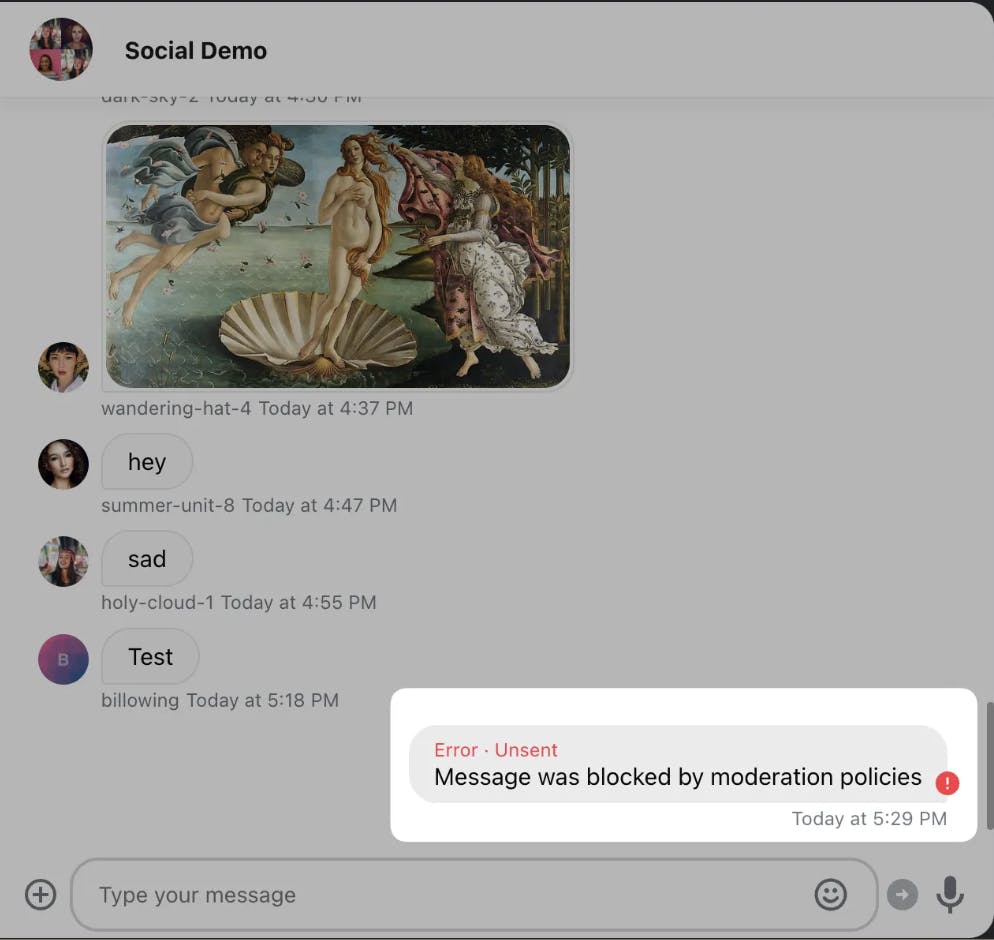

4. Automated Moderation

Automated moderation relies on AI systems to review content. For example, TikTok uses AI to detect and remove many violations automatically, before human reviewers get involved. These tools are fast and tireless, and they scale easily.

But AI content moderation isn't perfect. It can miss cultural context or sarcasm, so automation works best for basic, high-volume content filtering.

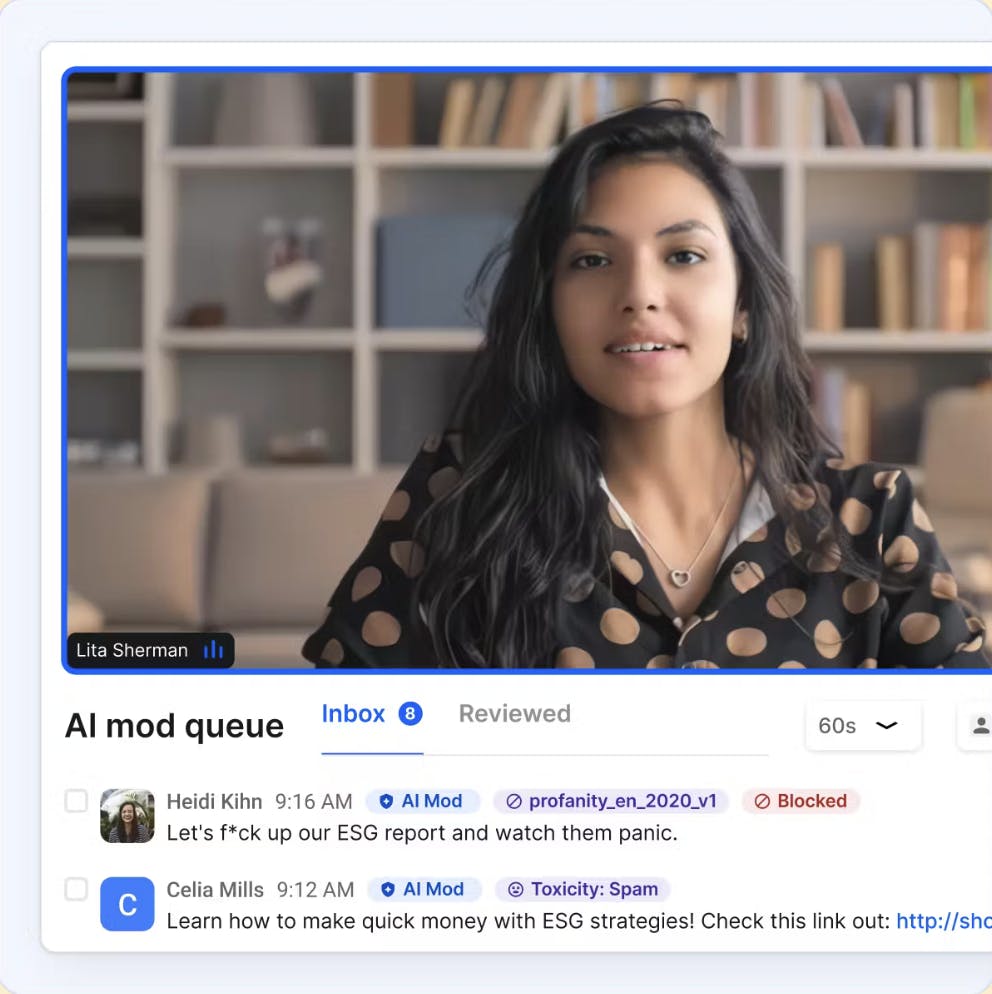

5. Hybrid Moderation

In the hybrid approach, digital platforms combine automation and human oversight.

Here's how it usually works: AI scans everything first. Obvious violations get removed automatically. Tricky or borderline content passes to humans for a closer look. Humans bring emotional intelligence and ethical thinking to the final judgment.
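The routing step described above can be sketched as a pair of thresholds on a classifier's harm score. The function name, the 0-to-1 score range, and the cutoffs of 0.9 and 0.5 are all assumptions for illustration; real systems typically use per-category thresholds tuned on labeled data.

```python
from enum import Enum


class Decision(Enum):
    PUBLISH = "publish"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


def route(harm_score: float, remove_at: float = 0.9, review_at: float = 0.5) -> Decision:
    """Route content using a classifier's harm score in [0, 1] (hypothetical thresholds)."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score >= remove_at:
        return Decision.REMOVE  # obvious violation: removed automatically
    if harm_score >= review_at:
        return Decision.HUMAN_REVIEW  # borderline: a person makes the final call
    return Decision.PUBLISH  # low risk: goes live


for score in (0.97, 0.62, 0.08):
    print(score, route(score).value)
```

Widening the gap between `review_at` and `remove_at` sends more content to humans, trading reviewer workload for fewer automated mistakes.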

Facebook has tens of thousands of human moderators working alongside AI tools. The combination aims to keep harmful UGC from falling through the cracks while preventing innocent posts from being wrongly taken down.

How Do Users Report Objectionable Content?

Both platforms and individuals play a vital role in identifying and responding to inappropriate content to keep social media users safe.

What Users Can Do

Most social apps and websites give users simple tools to filter unwanted content on the spot.

Report

Users can flag posts, comments, profiles, or messages that violate platform guidelines.

Block

Blocking someone stops them from viewing your content, messaging, following, or tagging you.

Mute

Muting hides another user's posts from your feed without notifying them.

Leave or Speak Up

When platforms fail to act, users often push for better control by raising concerns publicly, organizing campaigns, or choosing to leave. This kind of collective pressure can drive meaningful change, forcing companies to improve policies and invest in safety tools.

What Platforms and Brands Can Do

Platforms and brands can use a layered set of actions.

Blur

Some UGC may include sensitive images or videos. These can be blurred by default, with a warning message like "This content may be disturbing to some viewers" before users choose to view it.

Label

Platforms often add warnings such as "This contains misinformation" or "Sensitive content" to posts, reducing harm without fully removing the content.

Restrict Visibility

Offensive content can be deprioritized in feeds, made unsearchable, or shown only to a limited audience.
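One way to restrict visibility without removing content is to demote restricted items in feed ranking and drop them from search. This minimal sketch assumes each item already has a relevance score from some ranking model and a `restricted` flag set by moderation; `FeedItem`, `DEMOTION_FACTOR`, and its value of 0.2 are hypothetical.

```python
from dataclasses import dataclass

DEMOTION_FACTOR = 0.2  # hypothetical: restricted items keep 20% of their score


@dataclass
class FeedItem:
    item_id: str
    relevance: float  # from whatever ranking model the feed already uses
    restricted: bool = False  # set by moderation for borderline content


def rank_feed(items: list[FeedItem]) -> list[FeedItem]:
    """Order the feed so restricted items sink instead of disappearing."""

    def effective_score(item: FeedItem) -> float:
        return item.relevance * (DEMOTION_FACTOR if item.restricted else 1.0)

    return sorted(items, key=effective_score, reverse=True)


def search_results(items: list[FeedItem]) -> list[FeedItem]:
    """Make restricted items unsearchable."""
    return [item for item in items if not item.restricted]


items = [FeedItem("a", 0.9, restricted=True), FeedItem("b", 0.5), FeedItem("c", 0.3)]
print([i.item_id for i in rank_feed(items)])       # ['b', 'c', 'a']
print([i.item_id for i in search_results(items)])  # ['b', 'c']
```

A multiplicative penalty keeps restricted content reachable, so an item can still outrank very weak content; removing it outright would be a separate, stronger action.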

Hold for Review

Some social platforms automatically pause suspicious posts, comments, or replies for manual review before publication.

Blocklist

Moderators use lists of banned keywords, URLs, phrases, or usernames to automatically filter and reject content before it reaches the public.
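A minimal keyword blocklist filter, assuming the list holds plain terms and URLs. It compiles them into one case-insensitive regular expression with word boundaries, so a banned term doesn't also match inside longer, innocent words. The function names and example terms are hypothetical. Note that `\b` only anchors next to letters, digits, or underscores, so terms that begin or end with punctuation would need a different boundary rule.

```python
from __future__ import annotations

import re


def build_blocklist_pattern(terms: list[str]) -> re.Pattern[str]:
    """Compile banned terms into one case-insensitive, whole-word regex."""
    if not terms:
        raise ValueError("blocklist must contain at least one term")
    # Escape regex metacharacters (e.g., the dot in a URL) and try longer terms first.
    escaped = sorted((re.escape(t) for t in terms), key=len, reverse=True)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


def find_banned(text: str, pattern: re.Pattern[str]) -> list[str]:
    """Return banned terms found in the text; an empty list means it passes."""
    return [match.group(0).lower() for match in pattern.finditer(text)]


# Hypothetical blocklist entries for illustration.
pattern = build_blocklist_pattern(["badword", "scam-site.example"])
print(find_banned("This has a BadWord in it", pattern))  # ['badword']
print(find_banned("Visit scam-site.example now", pattern))  # ['scam-site.example']
print(find_banned("badwords and goodwords", pattern))  # []
```

Exact-match lists like this are easy to evade with deliberate misspellings, which is one reason blocklists are usually paired with normalization and classifier-based detection.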

Auto-Delete

Automated filters can instantly delete known hate speech or slurs based on predefined rules and blocklists.

Account Actions

Social accounts that repeatedly violate rules may receive warnings, temporary suspensions, or permanent bans.

IP Banning

To prevent repeat offenders from making new accounts, platforms can block access from specific IP addresses.

Shadow Banning

A subtle tactic where the offender can still post, but no one else sees their content.
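Shadow banning comes down to a visibility check at read time. In this hypothetical sketch, posts from shadow-banned authors are filtered out for every viewer except the author, which is what keeps the ban unnoticeable from the offender's side. The data model and function name are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    post_id: int
    author_id: str
    text: str


def visible_posts(posts: list[Post], viewer_id: str, shadow_banned: set[str]) -> list[Post]:
    """Return the posts a given viewer should see.

    Shadow-banned authors still see their own posts, so nothing looks different
    from their side; every other viewer sees nothing from them.
    """
    return [
        post
        for post in posts
        if post.author_id not in shadow_banned or post.author_id == viewer_id
    ]


posts = [Post(1, "alice", "Nice photo!"), Post(2, "troll", "Inflammatory bait")]
banned = {"troll"}
print([p.post_id for p in visible_posts(posts, "alice", banned)])  # [1]
print([p.post_id for p in visible_posts(posts, "troll", banned)])  # [1, 2]
```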

Best Practices To Moderate Content

Effective moderation is about building the right systems, tone, and infrastructure from the start. The following sections cover best practices for platforms and for brands.

Platform-Specific Best Practices

Have Detailed User Community Guidelines

Clear, accessible guidelines help users understand what is and isn't acceptable. Good community standards include:

- Examples of prohibited content
- Expectations for tone and behavior
- Enforcement actions for violations
- How users can appeal decisions

Build a Crisis Protocol

Sensitive or large-scale issues, like coordinated harassment, require thoughtful action. A well-defined response playbook, including a definition of what counts as a crisis for your platform and an escalation matrix, helps teams act quickly and responsibly.

Offer Multilingual Moderation

If your platform serves a global audience, moderate in multiple languages. Effective review goes beyond translation to consider cultural context and nuance: what's offensive in one region may not be in another.

Use Tiered Enforcement

Not every violation needs a ban. Use tiered responses like warnings, mutes, timeouts, and bans to keep actions proportionate and fair.
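Tiered enforcement is often implemented as a strike ladder. The sketch below assumes a four-step ladder matching the responses listed above and a per-user strike count. `EnforcementRecord` is hypothetical, and the `severe` shortcut (skipping straight to a ban for the worst violations, such as illegal content) is an added assumption that a real policy would need to define explicitly. Strikes here never expire; many platforms let them lapse after a set period.

```python
from dataclasses import dataclass, field

# Hypothetical escalation ladder mirroring the tiers named above.
LADDER = ["warning", "mute", "timeout", "ban"]


@dataclass
class EnforcementRecord:
    strikes: dict[str, int] = field(default_factory=dict)

    def apply_violation(self, user_id: str, severe: bool = False) -> str:
        """Record a confirmed violation and return the action to take."""
        if severe:
            # Assumed policy: the most serious violations skip straight to a ban.
            self.strikes[user_id] = len(LADDER)
            return LADDER[-1]
        count = self.strikes.get(user_id, 0) + 1
        self.strikes[user_id] = count
        # Each strike moves one rung up; repeat offenders stay at the top rung.
        return LADDER[min(count, len(LADDER)) - 1]


record = EnforcementRecord()
print([record.apply_violation("user-1") for _ in range(5)])
# ['warning', 'mute', 'timeout', 'ban', 'ban']
print(record.apply_violation("user-2", severe=True))  # ban
```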

Collaborate With Experts

For sensitive topics like self-harm, disordered eating, or political misinformation, work with NGOs, health professionals, or policy experts.

For example, TikTok partnered with the National Eating Disorders Association to design filters and help resources triggered by key terms.

Evaluate Build vs. Buy Options

Assess whether to build moderation systems in-house or use third-party solutions. In-house systems offer more control but demand ongoing engineering effort, while pre-built solutions are quicker to implement, which is why some platforms adopt moderation APIs or similar tools.

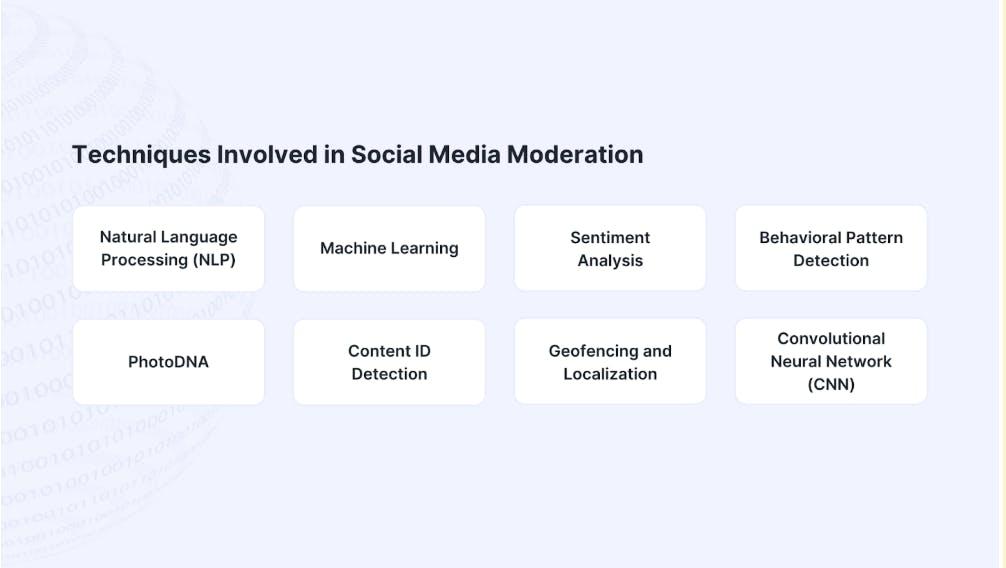

Learn the Core Technologies Behind Social Media Moderation

While human moderators bring empathy, technology adds speed and scale.

Here are some core technologies platforms use.

- Natural Language Processing (NLP): Detects toxic or harmful language patterns.
- Machine Learning: Learns from past moderation decisions to improve future accuracy.
- Sentiment Analysis: Gauges user tone, and sometimes intent, at scale.
- Behavioral Pattern Detection: Tracks unusual user behavior, like rapid posting and mass tagging.
- PhotoDNA: Matches image hashes against databases of known illegal imagery, such as CSAM.
- Content ID Detection: Scans uploaded media (video/audio) against copyrighted content databases.
- Geofencing and Localization: Adjusts rules by location, language, or local laws.
- Convolutional Neural Networks (CNNs): Scan user-uploaded photos and flag potential violations.
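Behavioral pattern detection can start with simple heuristics before any machine learning is involved. This sketch flags the two behaviors named in the list, rapid posting (via a sliding time window) and mass tagging (via a per-post tag count). `BehaviorMonitor` and all thresholds are hypothetical, and timestamps are assumed to arrive in non-decreasing order per user.

```python
from collections import defaultdict, deque


class BehaviorMonitor:
    """Flags rapid posting and mass tagging with simple, hypothetical thresholds."""

    def __init__(self, max_posts: int = 5, window_seconds: float = 60.0, max_tags: int = 10) -> None:
        self.max_posts = max_posts  # posts allowed per sliding window
        self.window_seconds = window_seconds
        self.max_tags = max_tags  # user tags allowed in a single post
        self._post_times: defaultdict[str, deque[float]] = defaultdict(deque)

    def record_post(self, user_id: str, timestamp: float, tagged_users: int = 0) -> list[str]:
        """Record one post and return any suspicious-behavior flags it triggers."""
        times = self._post_times[user_id]
        times.append(timestamp)
        # Drop timestamps that have slid out of the window.
        while times and timestamp - times[0] > self.window_seconds:
            times.popleft()
        flags: list[str] = []
        if len(times) > self.max_posts:
            flags.append("rapid_posting")
        if tagged_users > self.max_tags:
            flags.append("mass_tagging")
        return flags


monitor = BehaviorMonitor(max_posts=3, window_seconds=10)
for t in (0, 1, 2, 3):
    print(t, monitor.record_post("user-1", t))
print(monitor.record_post("spammer", 0, tagged_users=40))  # ['mass_tagging']
```

Flags like these are signals, not verdicts: they fit naturally as inputs to a review queue or a risk score rather than as triggers for automatic bans.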

Brand-Specific Best Practices

Empower Users To Shape Their Experience

Let users filter what they see, who they interact with, and how they report abuse. For instance, Instagram allows users to mute comments.

Train Moderators Like Product Experts

Invest in onboarding that includes context, tone calibration, and escalation flows. Train moderators on evolving digital language, such as algospeak: coded words and spellings users adopt to slip past filters and algorithms.
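To show why algospeak defeats naive filters, here is a minimal normalization step that could run before keyword matching. It assumes a small, hypothetical character-substitution map and collapses single letters separated by punctuation (for example, "s.c.a.m" becomes "scam"). The normalized copy is used only for matching; the original post stays unchanged. Character tricks are the easy part: coded euphemisms that substitute entirely different words can't be caught this way, which is why moderator training matters.

```python
import re

# Hypothetical map of common character swaps; real lists are larger and
# need regular updates as slang evolves.
SUBSTITUTIONS = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)

# A run of single characters joined by separators, such as "s.c.a.m" or "f-r-e-e".
SPACED_OUT = re.compile(r"\b\w(?:[.\-_*]\w)+\b")


def normalize(text: str) -> str:
    """Return a normalized copy of the text for matching; the original is kept."""
    lowered = text.lower().translate(SUBSTITUTIONS)
    # Strip the separators inside each spaced-out run.
    return SPACED_OUT.sub(lambda m: re.sub(r"[.\-_*]", "", m.group(0)), lowered)


print(normalize("Buy n0w, $.c.@.m inside"))  # buy now, scam inside
print(normalize("F-R-E-E m0ney"))            # free money
print(normalize("well-known"))               # well-known
```

Ordinary hyphenated words such as "well-known" survive because the pattern only collapses runs where every segment is a single character.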

Follow Privacy and Data Laws

If you collect user feedback, comments, or form inputs, make sure you comply with GDPR, CCPA, and COPPA.

The Human Element: Supporting Social Media Moderators

While moderation protects users, it can harm the moderators themselves if systems don't account for their emotional well-being.

Here's how social media platforms and brands can build more humane environments and reduce the negative impact:

Normalize Emotional Response

Create an open space in training and team culture for moderators to talk about how they feel without shame or career risk.

Design Systems That Reduce Exposure

Use AI blurring, audio muting, or pre-filtering to minimize direct exposure to harmful UGC unless absolutely necessary.

Offer Rotational Downtime

Give moderators opportunities to switch between different types of content queues. Even short breaks from distressing material can make a big difference.

Involve Moderators in Policy Decisions

Let moderators help shape rules, update filters, and rewrite user policies. Involving them shows respect for their expertise.

Acknowledge the Job Publicly

Whether through internal appreciation or public messaging, show moderators that their work matters.

Best Tools for Social Media Moderation

Let's look at some essential tools that help automate and simplify moderation work.

Moderation APIs and Platforms

These tools let platforms detect and remove harmful UGC using built-in models.

Sightengine

Sightengine offers image, video, and text moderation across more than 110 classes. It also detects AI-generated content, including music, images, and deepfakes. Other noteworthy features include profile-picture verification and detection of duplicate content, abusive links, distortion attempts, and restricted activities like vaping and gambling.

Hive

Hive moderates a wide range of media types, including images, videos, GIFs, audio, and livestreams; CSAM detection is available for image, video, and text formats. Hive also identifies AI-generated content, assigning confidence scores to suspected synthetic media. It provides a centralized dashboard where customers' human moderation teams can customize rules and view analytics.

WebPurify

WebPurify specializes in detecting profanity, hate terms, nudity, and violence in images, video, and text. Companies can also outsource human moderation to WebPurify, including for metaverse and other VR applications. WebPurify also offers consulting services for crisis management, moderation guidelines, risk audits, and more.

NLP and AI Toolkits

These tools use machine learning to understand language and detect possible harm.

Perspective API

Perspective API analyzes user comments and scores each one for attributes such as toxicity. The scores help decide which comments to send for review and what feedback to give commenters. It can also help readers spot interesting and engaging comments.

OpenAI

OpenAI's models can classify, summarize, and understand user language to detect subtle or hidden harm. The output from OpenAI's moderation models includes different content categories, showing which types of harmful content might be present. Each category comes with a confidence score, so you can see how strongly the model believes a violation exists.

Azure AI Content Safety

Azure AI Content Safety supports over 100 languages and helps detect harmful content in both text and images. Its APIs scan for sexual content, violence, hate, and self-harm, using severity scores to guide action. While it flags many risks, it does not detect illegal child exploitation content.

Social Content Review Tools

These tools help marketing and community teams manage and pre-approve UGC.

Planable

Planable lets you moderate social media content right where it's created and scheduled. You can review and manage comments from one place without switching tools, and group comments by sentiment to spot and handle negative or off-topic replies.

Sprout Social

Sprout Social lets you monitor and moderate comments across all your social media profiles from one dashboard. It also helps you manage business reviews from platforms like Google, Yelp, and app stores in one stream. You can even track and respond to comments on paid ads.

Making Social Spaces Healthier

Effective social media moderation balances competing values: safety and expression, scale and quality, and automation and human judgment.

For platforms, responsible review practices build user trust and support regulatory compliance. For brands managing a social presence, applying moderation principles fosters healthy user engagement.

Whether building custom solutions or using ready-made tools to moderate UGC, the key is thoughtfulness, transparency, and respect for both users and reviewers. This creates environments where meaningful connection flourishes while reducing the risks of unsafe behavior.