Moderating the vast amount of content on digital platforms is a challenge. People post content daily, making it tough to filter out harmful content like hate speech. With inadequate moderation, users risk encountering harmful material, which can undermine the integrity of online platforms.

AI content moderation offers an efficient way to help keep online environments safer. This article covers how AI content moderation works, its advantages, and its future outlook.

What is Content Moderation?

Content moderation is the management of user-generated content on digital platforms. It makes sure that this content, whether text, images, or videos, adheres to specific guidelines and standards. Traditionally, content moderation has been a manual task, with human content moderators reviewing each piece of content against set rules.

Yet, this approach faces challenges with scalability and subjective judgment. Human moderators can't keep up with the sheer amount of user-generated data on each platform. Also, what one moderator finds acceptable, another might not. AI assistance can help with both problems.

Content moderation is crucial for maintaining safe and respectful online environments. It helps protect users from harmful content such as hate speech, misinformation, and graphic images. It also supports compliance with legal standards and preserves the integrity of the platform.

How Does AI Content Moderation Work?

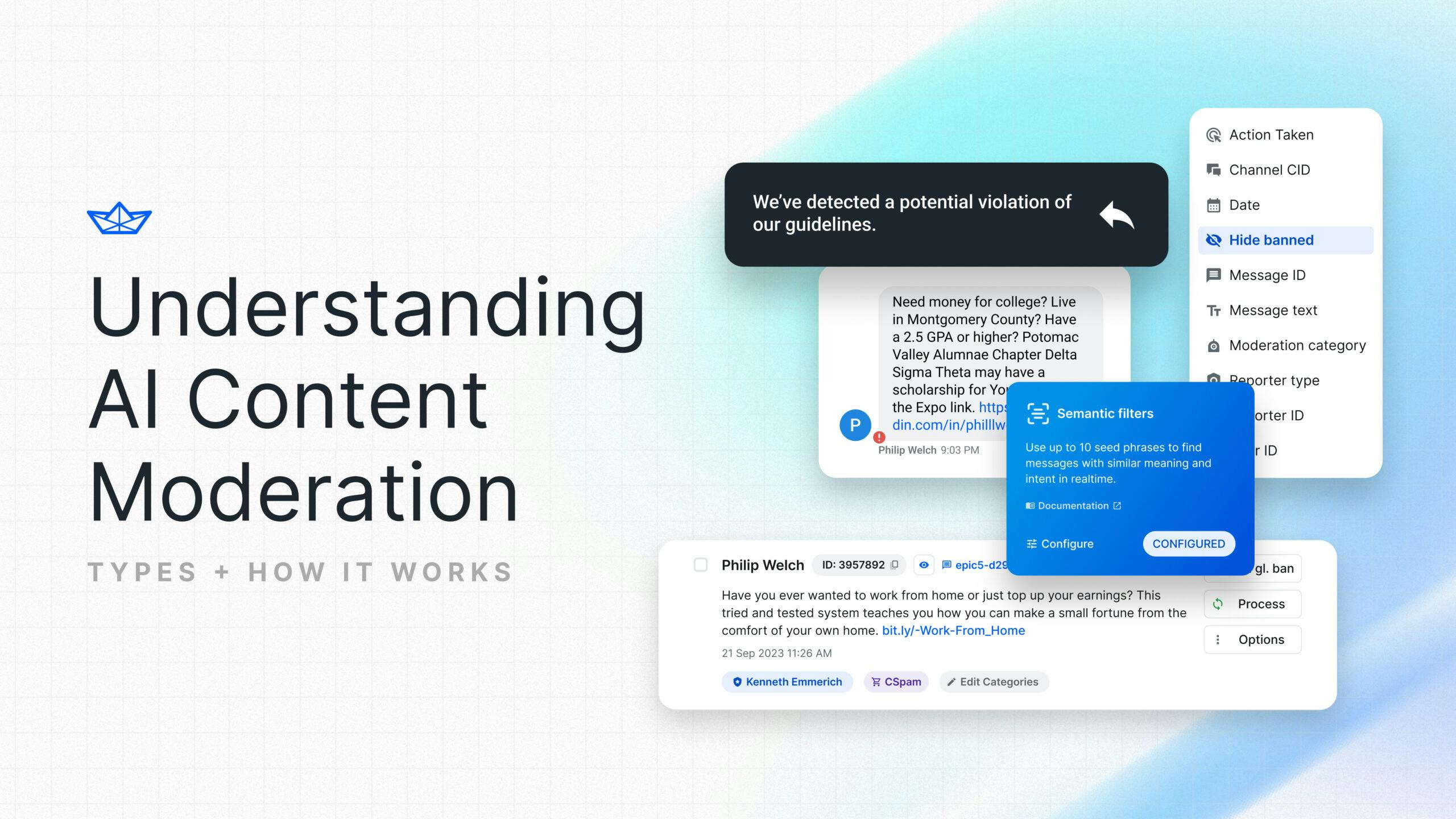

AI content moderation employs artificial intelligence to manage user-generated content on digital platforms. It's a sophisticated system that can help identify and moderate potentially harmful content, but how does it work?

Let's take a closer look.

The Mechanics of AI Content Moderation

AI algorithms employed in content moderation use a blend of advanced techniques. Machine learning enables these systems to learn from data, which improves their ability to spot inappropriate content over time. Natural language processing (NLP) is crucial for understanding the nuances of language. NLP helps AI discern context in text-based content.

Image recognition allows AI to analyze visual content. It can identify inappropriate material, from explicit imagery to subtle visual cues. These methods enable AI to provide an analysis of content types, making the moderation process efficient.

For example, Stream's Intent Designer engine uses advanced language models that can detect messages with similar meanings to certain phrases, making it easier for moderators to flag and review potentially harmful content.
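As a rough illustration of matching messages by similarity rather than by exact keywords, the Python sketch below compares a message against a list of flagged phrases using bag-of-words cosine similarity. This is not Stream's implementation. Production systems typically rely on learned embeddings from language models, which capture meaning far better than word overlap. The phrase list, the `0.5` threshold, and all function names are assumptions made up for this example.

```python
import math
import re
from collections import Counter

# Hypothetical phrases a moderator wants to catch (illustrative only).
FLAGGED_PHRASES = ["buy followers cheap", "send me your password"]


def vectorize(text: str) -> Counter:
    """Turn text into a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(message: str, threshold: float = 0.5):
    """Return (phrase, score) for the closest flagged phrase, or None."""
    msg_vec = vectorize(message)
    scored = [(p, cosine_similarity(msg_vec, vectorize(p))) for p in FLAGGED_PHRASES]
    phrase, score = max(scored, key=lambda item: item[1])
    return (phrase, score) if score >= threshold else None
```

A message such as "please send me your password now" shares most of its words with the second phrase and would be surfaced for review, while an unrelated message returns `None`. Word overlap misses paraphrases that use different vocabulary, which is the gap that embedding-based models are meant to close.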

The Impact of AI on Content Moderation

Nearly 30% of users between 18 and 34 years old say that social media platforms should have stricter content moderation policies.

AI enhances content moderation efforts. It reduces human moderators' workload by speeding up the moderation process and automating manual review. AI also adapts over time, recognizing evolving forms of inappropriate content.

The Challenge of AI-Generated Content

AI-generated content, like deepfakes or synthetic media, poses a unique moderation challenge. Such content can harm reputations, with damage often done before it's revealed as fake. Ironically, as AI's content creation abilities advance, so must AI tools for detecting such content. Humans alone can't keep pace with the enormous volume of content that AI creates.

Continuous improvement in AI detection models is crucial to counteract evolving AI-generated content forms. These models must also be updated to prevent bypassing the various types of content moderation. This ensures AI doesn't facilitate the creation of harmful content.

What are the Benefits of AI Content Moderation?

AI content moderation offers numerous benefits that are essential for managing the increasing volume of digital content efficiently and effectively. These advantages address many of the core moderation challenges faced by digital platforms.

Increased Efficiency and Scalability

AI has dramatically enhanced the efficiency of content moderation. With this technology, massive volumes of data are processed at remarkable speed. This capability is crucial, considering that the average person generates 102 MB of data every minute. At 1,440 minutes per day, that's 146,880 MB (or about 146.9 GB) of data daily.

AI's scalability enables platforms to manage the increasing volume of user-generated content effectively. This capability is achieved without a proportional increase in human moderation resources. However, this doesn't imply that human moderators are becoming redundant. Their critical role in handling more nuanced and complex content scenarios remains vital.

Thus, AI's capacity for efficient large-scale content moderation allows human moderators to focus their expertise where most needed. This approach balances the speed of AI with the nuanced judgment of human moderators.

Improved Accuracy and Consistency

AI content moderation operates with a clear-cut decision-making algorithm, significantly reducing human error and bias and leading to more consistent content moderation outcomes. And AI's learning and adaptive capabilities enhance its precision in understanding community guidelines and identifying inappropriate content over time.

However, it's important to recognize and address the potential for unconscious bias in AI training models. As AI systems learn from data, ensuring these models are free from inadvertent biases is crucial. This attention to detail helps reflect diverse perspectives, maintaining fairness and accuracy in content moderation decisions while aligning with community standards.

Proactive Content Moderation

AI content moderation is notably proactive. It doesn't just wait for users to report problematic content; instead, it actively scans and flags issues that violate community standards before they're even noticed. This proactive approach is particularly effective because AI can analyze entire user feeds, not just individual posts.

This means it's more likely to detect subtle patterns and trends that human moderators might otherwise miss. By identifying these broader patterns, AI helps to maintain safer and more respectful online environments, preemptively addressing potential issues.
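To make the idea of feed-level analysis concrete, here is a minimal Python sketch that tracks each user's most recent posts and raises a signal when several of them were flagged. The `FeedMonitor` class, the window size, and the threshold are all hypothetical choices for illustration, and the per-post flag is assumed to come from some upstream classifier that is not shown.

```python
from collections import deque


class FeedMonitor:
    """Track recent per-post flags for each user and spot repeat patterns."""

    def __init__(self, window: int = 5, max_flagged: int = 3):
        self.window = window            # how many recent posts to remember
        self.max_flagged = max_flagged  # flagged posts that trigger escalation
        self.history = {}               # user_id -> deque of bools

    def record(self, user_id: str, post_flagged: bool) -> bool:
        """Record one post; return True if the user's feed needs attention."""
        recent = self.history.setdefault(user_id, deque(maxlen=self.window))
        recent.append(post_flagged)
        return sum(recent) >= self.max_flagged
```

A single borderline post does not trigger anything here, but a run of them within the window does, which is the kind of pattern that is hard to see when posts are reviewed one at a time.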

Supporting Human Moderators

AI content moderation offers human content moderators a range of benefits by managing routine tasks. This allows them to concentrate on complex moderation issues requiring human insight and discretion. A major benefit of AI's involvement is the reduced exposure of human moderators to harmful content, which can positively impact their mental health.

AI's role in handling the bulk of content moderation helps create a better work environment for human moderators. It balances the workload and reduces the stress of constant exposure to potentially disturbing content.

What Are The Different Types Of AI Content Moderation?

AI content moderation processes encompass various types, each with unique advantages and challenges. Understanding these types helps platforms select the right approach based on their needs and content dynamics.

Pre-moderation

Pre-moderation is an AI-powered content moderation process that involves a thorough review of content before its publication. AI systems manage the entire process, scanning the content against predefined criteria and checking for elements that might violate platform guidelines.

This type of moderation helps ensure that only appropriate, high-quality content is published, significantly reducing the amount of harmful material appearing on the platform. It offers robust control, which is crucial for platforms where content integrity and safety are top priorities.

However, the trade-off is a slower content approval process, which can impact real-time engagement and lead to user frustration, especially on platforms where timely content posting is essential.
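The review-before-publish flow can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the `BLOCKED_TERMS` set is a toy stand-in for a trained classifier, and `PreModeratedFeed` is a hypothetical name, not part of any real moderation product.

```python
from dataclasses import dataclass, field

# Hypothetical blocklist standing in for a trained classifier.
BLOCKED_TERMS = {"spamlink", "slur_example"}


def violates_guidelines(text: str) -> bool:
    """Toy check: flag text containing any blocked term."""
    words = set(text.lower().split())
    return not words.isdisjoint(BLOCKED_TERMS)


@dataclass
class PreModeratedFeed:
    published: list = field(default_factory=list)
    rejected: list = field(default_factory=list)

    def submit(self, text: str) -> bool:
        """Review first; publish only if the check passes."""
        if violates_guidelines(text):
            self.rejected.append(text)
            return False
        self.published.append(text)
        return True
```

Because `submit` runs the check before anything reaches `published`, every post pays the review latency, which is the trade-off described above.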

Post-moderation

Post-moderation is a dynamic approach where user content is published immediately and the AI reviews it shortly afterward. This method provides immediate visibility of user posts, fostering active engagement and interaction on the platform.

The key benefit of post-moderation is its ability to maintain a lively, real-time user experience while still exercising a degree of control over content quality. The challenge, however, is the brief window where potentially inappropriate content may be visible before AI reviews it.

This calls for highly efficient AI systems capable of rapidly assessing and moderating content to minimize risks, along with clear community guidelines to avoid false positives. Post-moderation is most effective for platforms like social media and online forums, where the immediacy of user interaction is essential but there is still a need to safeguard against harmful content.
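The publish-then-review flow might look like the following Python sketch. It assumes a toy `BLOCKED_TERMS` check in place of a real model, and the `PostModeratedFeed` class and its method names are hypothetical.

```python
from collections import deque

BLOCKED_TERMS = {"spamlink"}  # hypothetical stand-in for a classifier


class PostModeratedFeed:
    def __init__(self):
        self.visible = {}            # post_id -> text, shown to users
        self.review_queue = deque()  # post_ids awaiting review
        self._next_id = 1

    def submit(self, text: str) -> int:
        """Publish immediately, then queue the post for review."""
        post_id = self._next_id
        self._next_id += 1
        self.visible[post_id] = text
        self.review_queue.append(post_id)
        return post_id

    def review_pending(self) -> list:
        """Review queued posts and take down violations. Returns removed ids."""
        removed = []
        while self.review_queue:
            post_id = self.review_queue.popleft()
            text = self.visible.get(post_id, "")
            if BLOCKED_TERMS & set(text.lower().split()):
                del self.visible[post_id]
                removed.append(post_id)
        return removed
```

Between `submit` and the next `review_pending` call, a violating post sits in `visible`. How often the review runs determines how long that exposure window lasts.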

Reactive Moderation

Reactive moderation hinges on user participation. Users report inappropriate content like hate speech, which an AI reviews against community guidelines. This approach allows online communities to play a direct role in content regulation, significantly reducing the need for a large-scale moderation team. However, the effectiveness of this method depends entirely on user engagement.

In cases where users don't report content for review, problematic material can remain unaddressed. This delay in moderation can be a concern, especially when immediate action is required.

Reactive moderation is particularly effective on platforms with a strong community presence, with a user base actively involved in maintaining the platform's content standards and overall integrity.
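A report-driven flow could be sketched in Python as follows. The threshold of two distinct reporters is an assumption added for illustration (a platform could just as well review on the first report), and `ReportTracker` and the `classifier` callable are hypothetical names.

```python
from collections import defaultdict

REPORT_THRESHOLD = 2  # assumed: distinct reporters needed to trigger review


class ReportTracker:
    def __init__(self, classifier):
        self.classifier = classifier       # callable: text -> bool (violates?)
        self.reporters = defaultdict(set)  # post_id -> set of user ids

    def report(self, post_id: int, user_id: str, text: str) -> str:
        """Record a report; review once enough distinct users have reported."""
        self.reporters[post_id].add(user_id)
        if len(self.reporters[post_id]) < REPORT_THRESHOLD:
            return "pending"
        return "removed" if self.classifier(text) else "kept"
```

Storing reporters in a set means repeated reports from the same user count once. Content that nobody reports never reaches the classifier at all, which is the dependency on user engagement noted above.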

Distributed Moderation

Distributed moderation allows community members to vote on the appropriateness of content, thereby making all of them unofficial content moderators. AI systems then use these collective votes to determine whether the content should be visible on the platform. This method engages the community in content governance and leverages the collective judgment of diverse viewpoints.

However, it has its challenges, including the potential for bias if certain groups of users dominate the voting process and the platform's content moderation workflow doesn't account for this. The method's effectiveness can also vary depending on the types and amounts of content.

Distributed moderation is particularly effective in environments with high user engagement and a need for collective decision-making. It suits platforms like specialized social networks or community-focused forums, where users are deeply invested in the content's quality and integrity.
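One simple way to turn community votes into a visibility decision is sketched below in Python. The function name, the minimum vote count, and the `0.7` hide ratio are assumptions chosen for illustration, not values from any particular platform.

```python
def visibility_from_votes(upvotes: int, downvotes: int,
                          min_votes: int = 5, hide_ratio: float = 0.7) -> str:
    """Decide visibility from community votes.

    Content stays visible until enough votes are in; it is hidden when
    the share of downvotes reaches hide_ratio.
    """
    total = upvotes + downvotes
    if total < min_votes:
        return "visible"  # not enough signal yet
    return "hidden" if downvotes / total >= hide_ratio else "visible"
```

This sketch treats every vote equally, so it does nothing about the bias risk mentioned above. A platform concerned about coordinated voting would need additional safeguards, such as weighting or rate limits, that are outside the scope of this example.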

Hybrid Moderation

Hybrid moderation expertly combines AI's rapid, automated screening with the discerning, nuanced judgment of human moderators. Initially, AI-powered content moderation tools filter out clear violations of content guidelines, efficiently managing vast quantities of data. This initial screening significantly reduces the volume of content needing human review.

Human moderators then make judgment calls on more complex, ambiguous cases where context and subtlety are key. While this method provides thorough and sensitive content moderation, it requires significant resources and well-coordinated protocols to effectively delineate the roles of AI and human moderators.

Hybrid moderation offers a comprehensive approach to content management, ideal for large platforms like major social media platforms. It combines swift AI processing with the essential human touch, adeptly handling sensitive or intricate content moderation scenarios.
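The division of labor between AI and human moderators is often expressed as confidence thresholds. The Python sketch below assumes a model that outputs a violation score between 0 and 1. The `route` function and both threshold values are hypothetical and would need tuning against real data.

```python
def route(violation_score: float,
          remove_above: float = 0.9, approve_below: float = 0.1) -> str:
    """Route content by a model's violation score in [0, 1]."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= remove_above:
        return "auto_remove"    # clear violation, handled by AI
    if violation_score <= approve_below:
        return "auto_approve"   # clearly fine, handled by AI
    return "human_review"       # ambiguous, needs human judgment
```

Widening the gap between the two thresholds sends more content to human reviewers and fewer decisions to automation, so the thresholds act as the dial between cost and caution.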

Proactive Moderation

Proactive moderation in AI content moderation involves AI systems identifying and preventing the spread of harmful content before it becomes visible to users. This approach proactively shields users from negative content exposure, enhancing the overall user experience by maintaining a clean and safe platform.

However, it requires sophisticated AI that can comprehend context and nuances, which can be complex and challenging to develop. Proactive moderation is especially effective for platforms where rapid content response is vital, such as live streaming services. It's particularly beneficial in environments with high user interaction rates, where the immediate impact of harmful content can be significant.

AI Content Moderation Predictions For 2025

The AI-powered content moderation market is evolving rapidly. Data Bridge Market Research shows that this market is expected to grow significantly from 2022 to 2029, reaching an estimated value of $14 billion by 2029. This growth is driven by increased user-generated content across online platforms.

Here's how the content moderation market might change and evolve in the coming years:

- Enhanced efficiency and accuracy: Expectations are high for AI to become even more efficient in content moderation. This is partly due to machine learning algorithms becoming more advanced, leading to higher accuracy in recognizing and filtering content. These improvements mean quicker and more reliable moderation.

- Improved contextual understanding: AI's ability to interpret the context and subtleties in content is set to advance significantly. Developments in natural language processing will enable AI to better understand the intricacies of language, while image recognition technology enhancements will aid in more accurately analyzing visual content. This should also reduce the occurrence of false positives.

- Addressing AI-generated content: As AI-generated content like deepfakes becomes more prevalent, AI tools are predicted to evolve to counteract this challenge. This involves integrating advanced detection tools capable of identifying and moderating synthetic media, helping to preserve the authenticity of content on platforms.

- Increased adoption and integration: The use of AI in content moderation is expected to expand across more platforms, including smaller websites and online communities that don't use AI at this stage. Stream, for example, plans to expand its support for various message types and introduce new features in its chat SDK. This signals a trend toward deeper integration of AI moderation tools within platform ecosystems.

- Ethical and legal considerations: There will likely be a greater focus on addressing ethical considerations such as bias and privacy in AI moderation. Legal frameworks and regulations designed to address these challenges are also expected to evolve, shaping how AI is used for content moderation and encouraging its responsible application.

These predictions indicate a trajectory where AI content moderation becomes more refined, adaptable, and integral to digital platform management.

What's Next?

AI's evolving role in content moderation is transforming how we manage digital interactions. It's not just about filtering content anymore. It's about enhancing user experience, trust, and safety on digital platforms.