Unfortunately, gaming communities can turn toxic. What should be a place for players to connect over a shared love of a game becomes a hostile environment filled with harassment and intimidation. Trolls, bad actors, and bots harass other players, post offensive or discriminatory messages, or share spam content. New players feel unwelcome or intimidated, making it hard to bring new members into the community.

If your gaming community turns toxic, it'll damage your reputation and the playing experience for many of your users. So product and community managers need to understand the signs of a toxic community and what they can do to combat bad behavior and stop toxicity from spreading.

Five Toxic Community Warning Signs

Your community managers will be best placed to spot the signs of toxic behavior spreading through your community. So most of these signs are things they should watch out for, though we've also identified a couple of metrics that product managers can use as an early indicator.

1. Player Harassment

According to ADL, a leading anti-hate organization, 86% of adults and 66% of young people experienced harassment in online multiplayer games in 2022. These numbers have grown from the previous year, with high levels seen across many popular games, including League of Legends, Call of Duty, and Counter-Strike.

It can be difficult to draw the line between "trash talk," which is just part of competitive gameplay, and harassment. Some players may attempt to excuse their bad behavior by saying it was "just a joke." But harassment can take many forms:

- Trolling or griefing
- Calling players offensive or discriminatory names
- Making physical threats toward players
- Bullying players over a sustained period of time
- Stalking (online or offline)
- Sexual harassment
- Doxing
- Swatting

ADL's research found that only 19% of gamers said that harassment had no impact on how they played. Harassment significantly impacts players' enjoyment of a game and their sense of community belonging.

2. Spread of Extremist Content

ADL found that "hate and extremism in online games have worsened since last year," with exposure to white supremacist ideas in these games more than doubling (from 8% to 20%).

If your community chat is being used to share or promote extremist ideas, that's a clear indicator of a growing number of toxic members. These could include messages containing racist or homophobic sentiments, white supremacist ideas, or anti-women and "manosphere" ideology.

If you have a small number of toxic community members, they may share those ideas in the chat, but the messages don't get any traction. People don't engage with them. But if more people are discussing and sharing similar ideas, that suggests a spread of toxic behavior in the community.

3. Lack of Consequences for Toxic Behavior

A lack of consequences for toxic behavior is a warning sign that such behavior will quickly become more common.

For example, if a community member frequently shares spam messages in the chat, other members will likely flag or report them to your moderators. But if those reports have no consequences and the member is allowed to keep posting in the chat, they will continue to behave in that way.

Even worse, once other players or members see that behavior go unchecked, it gives them the confidence to act the same way. Then, toxic behavior can spread quickly and easily across the community, as members see they can continue within the community regardless.

Learn more about the importance of moderating in-game chat.

4. Increase in Messages Flagged for Offensive Content

Product and community managers can use analytics data from your in-game and community chat messages to identify signs of increasing toxicity levels. Look for messages flagged by your auto-moderation system or reported by other players to measure changing levels of toxic behavior in your community.

For example, you may see more messages picked up by your automod system for spam, profanity, or other abusive content. Additionally, you may see an increase in messages flagged or reported by community members for containing extremist or harmful content, or users reported for harassment or abusive behavior.

Track the percentage of flagged messages over time. This will help you identify whether your community has just a few toxic members in isolation, or if that behavior is spreading into the wider community.
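As a rough illustration of tracking that percentage, here is a minimal Python sketch that groups messages by ISO week and reports the share that were flagged. The input shape (a date plus a flagged true/false value per message) is an assumption for the example, not the export format of any particular chat or automod product.

```python
from collections import defaultdict
from datetime import date


def weekly_flag_rate(messages):
    """Return {(iso_year, iso_week): percent of messages flagged}.

    `messages` is an iterable of (sent_on: date, flagged: bool) pairs.
    This shape is hypothetical; map it from your own analytics export.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for sent_on, is_flagged in messages:
        iso = sent_on.isocalendar()
        week = (iso[0], iso[1])  # (ISO year, ISO week number)
        totals[week] += 1
        if is_flagged:
            flagged[week] += 1
    return {week: 100.0 * flagged[week] / totals[week] for week in sorted(totals)}
```

A rate that stays flat while message volume grows suggests a few isolated offenders. A rate that climbs week over week suggests the behavior is spreading.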

5. Plateauing Player or Member Numbers

Your player or member numbers are another metric to track to keep an eye on community toxicity levels. If membership numbers are plateauing, or you see lots of new sign-ups that don't become repeat visitors or players, that could indicate a toxic environment within your community.

Toxic communities are often unwelcoming and hostile environments for new players. Veteran members may be aggressive or offensive towards new players, discouraging them from trying out the game and becoming embedded in its community.
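One way to put a number on "sign-ups that don't become repeat visitors" is a new-member return rate. The Python sketch below is only an illustration: the seven-day window and the data shapes are assumptions, so adjust both to match how your own analytics define a returning player.

```python
from datetime import date, timedelta


def new_member_return_rate(signups, sessions, window_days=7):
    """Percent of new members who came back within `window_days` of signing up.

    signups:  {user_id: signup date}
    sessions: iterable of (user_id, session date) pairs
    Both shapes are hypothetical; map them from your own analytics export.
    """
    if not signups:
        return 0.0
    returned = set()
    for user_id, played_on in sessions:
        joined = signups.get(user_id)
        if joined is None:
            continue  # session belongs to someone outside this sign-up cohort
        # Count only sessions after the sign-up day and inside the window.
        if timedelta(days=1) <= played_on - joined <= timedelta(days=window_days):
            returned.add(user_id)
    return 100.0 * len(returned) / len(signups)
```

A falling return rate is not proof of toxicity on its own, but alongside a rising share of flagged messages it is a signal worth investigating.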

Tips to Stop Your Community from Turning Toxic

Product and community managers can work together to implement tools, processes, and strategies that stop toxic behavior from spreading through your community. These tips will help you reduce the impact of toxic behavior and respond appropriately when it appears.

Create and Enforce Your Harassment Policy

Your policies and terms of service lay out behavior and content that isn't allowed in your community. A written policy that users agree to when they join is your first line of defense against toxic behavior: if moderators and community managers see behavior that goes against your stated policy, they can point to it to explain why they're banning or muting a specific user.

For example, Roblox has a public-facing policy against extremism. This helps reassure users and community members that extremist messages and ideas won't be tolerated on the platform.

But having a policy is just the first step. Your moderators, community managers, and internal team need to consistently enforce it to stop toxic behavior from spreading across your community. This could include banning users, limiting their access, preventing users from posting in the chat, or giving them reduced functionality.

Provide Moderator Training to Ensure Consistent Enforcement

Community managers should provide training, resources, and escalation guidelines for your chat moderation team. This will help them know what to look out for and how to handle it.

Detailed training and guidelines mean that toxic behavior is handled consistently, no matter which moderator is on shift. With clear policies and processes for recognizing, reacting to, and stopping the spread of harassment, toxic behavior can't slip through the cracks and spread through your community.

Give Users More Control Over What They See

What feels like fun "trash talk" to one member may feel like a personal attack to someone else. Give users more control over the content they see and the members they interact with, to limit the spread of toxic behavior and create a more welcoming environment.

User control is a key focus for many companies. For example, Amazon has registered a patent that lets players choose what behavior they'll tolerate when gaming. This would ensure the most aggressive or toxic players only connect with players with similar behavioral profiles while stopping that behavior from impacting other members' experience in the community.

While that tool is still in the works, you can help players find the right space for themselves in your community. For example, you could set up different channels in your chat around different topics, with varying guidelines for acceptable behavior.

Help Users Protect Their Anonymity

A lot of toxic behavior in gaming communities involves harassment based on users' identities, such as their race, gender, sexuality, or religion. While some users may share that information when interacting with other members, it may also be viewable on their profile.

You can help users protect their identities and give toxic members less ammo for harassment by reducing the amount of personal information you collect during the sign-up process. In particular, avoid collecting personally identifiable information you don't need, such as postal addresses or locations. This makes it harder for people to dox players, which means publishing a player's personal information without their permission.

For example, non-profit organization YesHelp helps its users maintain their privacy and anonymity as much as possible. They explained, "Making users feel safe and comfortable on YesHelp means keeping personal profiles minimal and contact information private, giving agency to those seeking help."

While YesHelp isn't a gaming community, product and community managers could follow a similar approach. Collecting limited details can help protect users against the actions of a few toxic individual members.

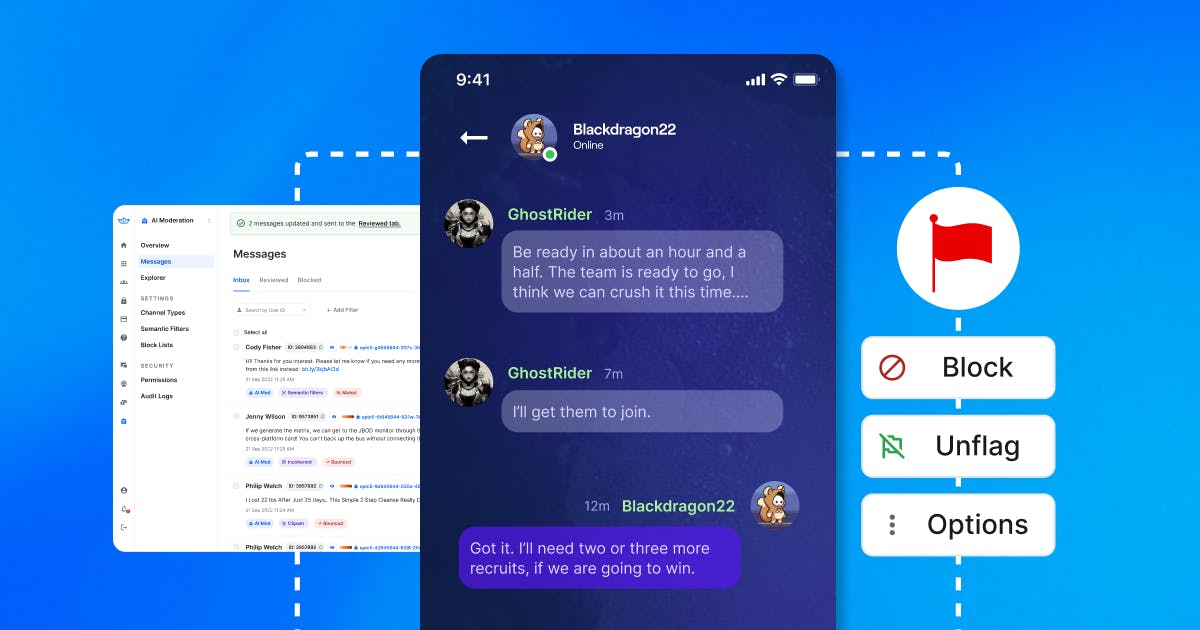

Use Automated Chat Moderation (Automod) as Well as Manual

Automated chat moderation tools can help your manual (human) moderators tackle toxic behavior more quickly.

Automod tools use artificial intelligence, machine learning, sentiment analysis, and intent detection to analyze messages in your community chat, to try and work out if they're harmful. Then, they can automatically flag content if needed.

They can also send nudge messages to users to remind them of your community policies and guidelines if they've typed (but not yet sent) a message that's been identified as harmful. Additionally, some automod tools have user recognition functionality. This means they can recognize repeat offenders and ban (or shadow ban) them from the chat.

Using automated and manual chat moderation together means harmful messages are removed more quickly than if you were relying on human moderation alone. Even if other users report harmful messages immediately, it will always take your team a little while to investigate and act on those reports.
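To make the repeat-offender idea concrete, here is a small Python sketch of a strike counter that escalates from a nudge to a mute to a ban. The thresholds and action names are hypothetical choices for illustration. Real automod tools use their own rules, and a human moderator would normally review anything as serious as a ban.

```python
from collections import Counter

# Hypothetical escalation ladder: minimum strike count -> action.
ACTIONS = [(1, "nudge"), (3, "mute"), (5, "ban")]


class StrikeTracker:
    """Count confirmed violations per user and suggest an escalating action."""

    def __init__(self):
        self._strikes = Counter()

    def record_violation(self, user_id):
        """Add a strike and return the action for the user's new total."""
        self._strikes[user_id] += 1
        count = self._strikes[user_id]
        # Check the highest threshold first so the strongest action wins.
        for threshold, action in reversed(ACTIONS):
            if count >= threshold:
                return action
        return "none"
```

Keeping the ladder in one place makes it easy for moderators and product managers to agree on, and later adjust, what each level of repeat behavior should trigger.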

Set Chat Message Limits

Chat limits set an upper limit on the number of messages a user can post within a short time period. This stops people from flooding the chat with spam links or harmful content, such as offensive images or abusive messages.

Whether you build or buy your in-app chat, make sure you can set message limits. If your in-house team is building your chat feature, this is essential functionality to include. Equally, if you're going to use a ready-built chat solution, look for one with this functionality.
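If your team is building this in-house, a sliding-window limiter is one common way to do it. The Python sketch below is a minimal, single-process illustration. The class name, the default of five messages per ten seconds, and the in-memory storage are all assumptions for the example, and a production chat service would typically keep these counters in shared storage.

```python
import time
from collections import defaultdict, deque


class MessageRateLimiter:
    """Allow at most `max_messages` per user in any `window_seconds` span."""

    def __init__(self, max_messages=5, window_seconds=10.0, clock=time.monotonic):
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self._sent = defaultdict(deque)  # user_id -> timestamps of accepted messages

    def allow(self, user_id):
        """Return True and record the message if the user is under the limit."""
        now = self.clock()
        recent = self._sent[user_id]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_messages:
            return False  # over the limit: reject the message
        recent.append(now)
        return True
```

Rejected messages are not recorded, so a user who keeps hammering the send button does not extend their own lockout. That is a design choice you may want to reverse for persistent spammers.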

Turn Off Link or Image Sharing

Images and links are one way toxic users share harmful content. Make it harder for people to do so by turning off users' ability to share images or links in public or private (DM) chat messages. You may want to disable this functionality entirely or only turn it off for users who repeatedly demonstrate toxic behavior.

Link and image sharing isn't the only way users can share harmful content, but turning it off introduces a small barrier that helps protect other users. It also helps to reduce users' exposure to harmful content coming from outside the game or community.

Your chosen chat solution should have options for controlling users' ability to share links and images built-in. Alternatively, if your developers are building your own chat functionality, product managers should request that this feature is included in their roadmap.
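For teams building their own chat, the check can be as simple as a per-user permission applied before a message is delivered. The Python sketch below is illustrative only. The function name, the `can_share_media` flag, and the deliberately simple link pattern are assumptions, and the pattern will miss obfuscated links such as "example dot com".

```python
import re

# Deliberately simple pattern: catches http(s):// and www. links only.
LINK_PATTERN = re.compile(r"(?:https?://|www\.)\S+", re.IGNORECASE)


def filter_message(text, attachments, can_share_media):
    """Return (text, attachments), stripping links and images if not allowed.

    `can_share_media` is a hypothetical per-user permission, e.g. switched
    off globally or only for users with a history of toxic behavior.
    """
    if can_share_media:
        return text, list(attachments)
    cleaned = LINK_PATTERN.sub("[link removed]", text)
    return cleaned, []  # drop every attachment for restricted users
```

Replacing the link with a visible placeholder, rather than silently dropping the message, keeps the conversation readable and shows the sender that the restriction is in effect.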

Build a Safer Community for Your Users

Effective moderation is crucial for reducing toxicity within your community. Make sure your community manager understands how best to moderate community chat, to keep users safe and create an enjoyable, healthy community for your members.

We've put together our ultimate guide to chat moderation. Read it now to learn more about community and chat moderation.