Picture this: Netflix serves over 15 billion hours of content monthly, Twitch handles an average of 2.55 million concurrent viewers (with peaks above 3.8 million during major events), and Zoom facilitates billions of meeting minutes—all relying on fundamentally different streaming technologies under the hood.

Whether you’re binge-watching Netflix or tuning into a live broadcast, streaming protocols quietly power the experience behind the scenes.

These protocols represent decades of engineering evolution, each solving specific technical challenges around latency, scalability, and compatibility. The most widely deployed protocols today—HLS (HTTP Live Streaming), MPEG-DASH (Dynamic Adaptive Streaming over HTTP), RTMP (Real-Time Messaging Protocol), and WebRTC (Web Real-Time Communication)—each emerged from different technological eras and address distinct use cases.

Choosing between these protocols can mean the difference between a seamless user experience and frustrated viewers abandoning your stream. A sports betting app requires sub-second latency to prevent arbitrage opportunities, while a Netflix-style platform prioritizes smooth playback at scale.

In this guide, we'll break down how these technologies work at the packet level, their architectural trade-offs, and how to choose the right protocol when adding live streaming to your application.

Why Is Live Streaming Fundamentally Difficult?

To understand modern streaming protocols, we must first appreciate the monumental engineering challenges that streaming presents. When the Internet first emerged in the 1970s and gained widespread adoption in the 1990s, it was architected around a simple premise: moving complete files from point A to point B. The foundational protocols—TCP for reliability, HTTP for web content, FTP for file transfers—all assumed you had time to wait for complete data transmission.

Streaming video, however, demands something the early internet was never designed for: continuous, time-sensitive data delivery with strict quality-of-service requirements.

Consider the mathematical reality: a 4K video stream at 60fps requires approximately 10-50 Mbps of sustained bandwidth depending on codec efficiency. AV1 can deliver broadcast-quality 4K/60 at 10-15 Mbps with modern encoders, HEVC needs around 15-25 Mbps, and H.264 typically requires 35-50 Mbps. At 60fps, a new frame must be decoded and ready for display every 16.67 milliseconds. Missing this deadline creates visible stuttering, while packet loss can corrupt a frame along with every frame that references it.
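The frame deadline and the per-frame bit budget follow directly from the frame rate and the bitrate. Below is a minimal Python sketch. The bitrates are illustrative points chosen from the ranges above, not measurements:

```python
def frame_budget(fps: float, bitrate_mbps: float) -> tuple[float, float]:
    """Return (milliseconds per frame, average kilobits per frame)."""
    frame_interval_ms = 1000.0 / fps
    # Average only: real encoders spend far more bits on keyframes than on other frames.
    kbits_per_frame = bitrate_mbps * 1000.0 / fps
    return frame_interval_ms, kbits_per_frame


# Illustrative 4K/60 bitrates picked from the ranges quoted in the text.
for codec, mbps in [("AV1", 12.0), ("HEVC", 20.0), ("H.264", 40.0)]:
    interval, kbits = frame_budget(60, mbps)
    print(f"{codec}: {interval:.2f} ms per frame, about {kbits:.0f} kbit per frame")
```

Every codec shares the same 16.67 ms deadline at 60fps. What changes is how many bits each frame can carry.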

The Technical Challenges

Network Variability: Unlike file downloads that can retry failed transmissions indefinitely, live streams must adapt to constantly changing network conditions. A mobile user walking from WiFi to cellular connection might see their available bandwidth drop from 100 Mbps to 5 Mbps in seconds. The streaming protocol must detect this change and adapt without interrupting playback.

Codec Complexity: Early video compression was primitive by today's standards. MPEG-1, whose development began in 1988, needed roughly 1.5 Mbps for VHS-quality video. Modern codecs like H.265/HEVC and AV1 deliver far more quality per bit, which makes 4K practical at the 10-25 Mbps range noted above, but this efficiency comes at the cost of computational complexity. Real-time encoding requires significant processing power, and decoding must finish in under 33ms per frame to sustain 30fps playback.

Buffering vs. Latency Trade-off: Every streaming system faces a fundamental tension between reliability and latency. Larger buffers provide smoother playback by absorbing network jitter but increase delay between the live event and viewer reception. This relationship means that achieving both low latency and high reliability requires sophisticated protocol design.

Scale Economics: Perhaps most challenging is the economic reality of streaming at scale. A popular live stream might serve 100,000 concurrent viewers. At typical per-viewer bitrates of 1-8 Mbps, that adds up to 100-800 Gbps of outbound bandwidth. Serving that from a traditional client-server architecture would require massive, expensive infrastructure. This drove the development of Content Delivery Networks (CDNs) and adaptive protocols that could leverage HTTP caching infrastructure.
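The egress figure is simple multiplication, which is why unicast delivery from a single origin does not scale. The sketch below assumes illustrative per-viewer bitrates of 1, 3, and 8 Mbps:

```python
def egress_gbps(viewers: int, per_viewer_mbps: float) -> float:
    """Aggregate outbound bandwidth when every viewer receives its own unicast copy."""
    return viewers * per_viewer_mbps / 1000.0


for mbps in (1.0, 3.0, 8.0):
    print(f"100,000 viewers at {mbps:g} Mbps -> {egress_gbps(100_000, mbps):.0f} Gbps")
```

A CDN does not shrink this total. Instead, it spreads the load across many edge servers close to viewers, so the origin serves each segment only a handful of times.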

You may remember the infamous Napster, LimeWire, and the other peer-to-peer file-sharing applications that dominated the early 2000s. These systems demonstrated principles that reappear in modern streaming, such as distributing load across many nodes. However, they prioritized file integrity over real-time delivery, which made them unsuitable for live content.

The early internet's 56k dial-up connections (actual throughput: ~44-50 kbps) simply couldn't support quality video streaming. Even basic audio streaming required aggressive compression and often resulted in frequent buffering. High latency was endemic—satellite internet connections could introduce 500-700ms of delay just from the physics of geostationary orbit, making interactive applications nearly impossible.

Early Solutions and Their Lessons

The first generation of streaming solutions emerged in the mid-1990s as engineers attempted to retrofit real-time capabilities onto the existing internet infrastructure. These early efforts, while primitive by today's standards, established fundamental principles that continue to influence modern protocols.

RealNetworks and the Buffering Era

RealPlayer, launched in 1995, pioneered consumer streaming built on aggressive buffering. The system would begin playback after accumulating 10-30 seconds of content, providing a buffer against network variability. While this resulted in significant delay, it demonstrated that adaptive buffering could enable streaming over unreliable connections.

RealNetworks' technical innovation lay in their use of RTSP (Real-Time Streaming Protocol), a control protocol that works in concert with RTP/RTCP for media delivery. Modern HLS and DASH use HTTP for both manifests and media. RTSP, by contrast, established a session concept in which clients maintained stateful connections to servers, enabling features like pause/resume and seeking that would later become standard.

Microsoft's NetShow and the Plugin Wars

Microsoft's NetShow (later Windows Media Player) introduced the concept of multiple bitrate streams and client-side quality adaptation. Their architecture included three key innovations:

- Server-side transcoding: Live content was encoded into multiple quality levels simultaneously

- Client bandwidth detection: Players performed network tests to estimate available bandwidth

- Dynamic quality switching: Clients could request different quality streams mid-playback

However, these early systems required proprietary plugins and codecs, creating significant compatibility barriers. The "plugin wars" of the late 1990s—with RealPlayer, Windows Media Player, and QuickTime each demanding their own browser extensions—demonstrated the critical importance of open standards for widespread adoption.

Flash and the RTMP Revolution

The introduction of Flash video and RTMP (Real-Time Messaging Protocol) by Macromedia (acquired by Adobe in 2005) in the early 2000s represented a quantum leap in streaming capabilities. Flash provided a nearly universal browser plugin, while RTMP delivered genuine low-latency streaming that enabled the first generation of interactive live platforms like Justin.tv (which later became Twitch) and Ustream.

RTMP's technical architecture introduced several concepts that remain influential today:

- Persistent connections: Unlike HTTP's request-response model, RTMP maintained long-lived TCP connections

- Message multiplexing: Multiple data types (audio, video, metadata) could be interleaved over a single connection

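- Flow control: Window Acknowledgement Size and Set Peer Bandwidth messages let each side limit how much unacknowledged data the other sends, while retransmission and congestion control are left to the underlying TCP connection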

Peer-to-Peer Experiments

Projects like CoolStreaming attempted to solve the bandwidth cost problem through peer-to-peer distribution. The concept was elegant: instead of a single server sending to N clients (requiring N×bandwidth), have clients forward chunks to each other, dramatically reducing server load.

However, P2P streaming revealed fundamental challenges that persist today:

- Reliability: Peers joining and leaving unpredictably created instability

- Latency: Multi-hop distribution increased delay

- Quality control: No central authority could ensure stream quality

- NAT traversal: Home routers made direct peer connections difficult

These early experiments laid the groundwork for modern CDN architectures and even influenced blockchain-based streaming solutions being developed today.

RTMP: The Low-Latency Pioneer

The Real-Time Messaging Protocol (RTMP) emerged from Macromedia in the early 2000s as a solution to Adobe Flash's need for real-time communication capabilities. While Flash began as an animation platform, the introduction of video capabilities created demand for a protocol that could deliver synchronized audio and video with minimal delay. RTMP's development was driven by requirements from online gaming, video conferencing, and the emerging live-streaming market.

RTMP's lasting influence extends far beyond its Flash origins. The protocol established architectural patterns that continue to influence modern streaming systems, including persistent connections, message multiplexing, and sophisticated flow control mechanisms. Understanding RTMP provides insight into fundamental streaming challenges and solutions that remain relevant today.

Deep Dive: RTMP's Technical Architecture

RTMP operates over a persistent TCP connection, establishing a full-duplex communication channel between client and server. This persistent connection model contrasts sharply with HTTP's stateless request-response pattern, enabling real-time bidirectional communication essential for interactive applications.

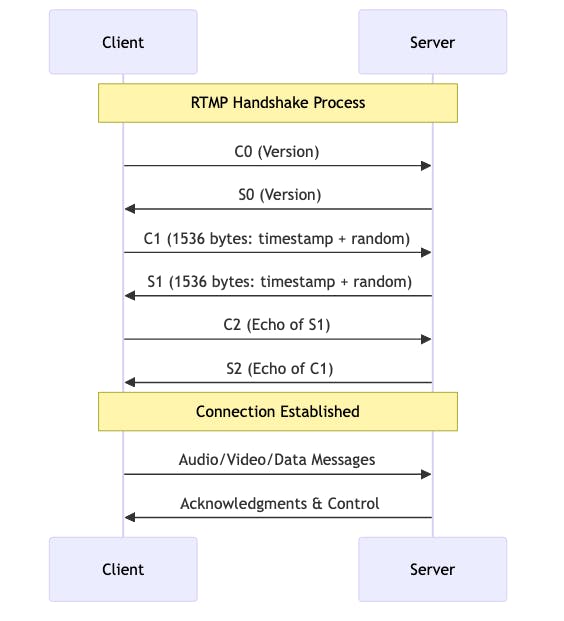

The RTMP handshake establishes protocol compatibility, verifies versions, and exposes timestamp information that applications can use for RTT heuristics and initial buffering parameters. The basic handshake negotiates the protocol version and exchanges timestamps. Vanilla RTMP remains cleartext. Encryption requires either RTMPE (Adobe's encrypted variant, which adds a Diffie-Hellman key exchange on top of the standard handshake) or RTMPS (RTMP carried over TLS).
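The byte layout of the plain (unencrypted) handshake is small enough to sketch. This follows the C0/C1/C2 packet formats in Adobe's published RTMP specification. It omits socket I/O and does not cover the digest-based handshake variants that some Flash-era servers expect. The helper names are hypothetical:

```python
import os
import struct

RTMP_VERSION = 3        # C0/S0 version byte defined for plain RTMP
HANDSHAKE_SIZE = 1536   # C1/S1/C2/S2 are all fixed-size


def build_c0_c1(time_ms: int) -> bytes:
    """C0 (version byte) followed by C1: 4-byte time, 4 zero bytes, 1528 random bytes."""
    c1 = struct.pack(">II", time_ms, 0) + os.urandom(HANDSHAKE_SIZE - 8)
    return bytes([RTMP_VERSION]) + c1


def build_c2(s1: bytes, s1_read_time_ms: int) -> bytes:
    """C2 echoes S1: S1's time field, the time S1 was read, then S1's random bytes."""
    if len(s1) != HANDSHAKE_SIZE:
        raise ValueError("S1 must be exactly 1536 bytes")
    return s1[:4] + struct.pack(">I", s1_read_time_ms) + s1[8:]
```

In a real session, the client sends C0+C1, reads S0, S1, and S2 from the server, then sends C2. The server's S2 echoes C1 in the same way.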

Message Architecture and Multiplexing

RTMP's genius lies in its message multiplexing system, which enables multiple logical streams over a single TCP connection. Each message contains a message type (audio: type 8, video: type 9, metadata: type 18), timestamp for synchronization, message length, and stream ID for multiplexing.

The chunking mechanism breaks large messages into smaller units (default 128 bytes, though both sides can negotiate larger values like 4096 bytes), preventing large video frames from blocking audio transmission. This interleaving ensures that audio packets—critical for perceived quality—aren't delayed by large video keyframes. Audio messages typically receive higher priority as a best practice convention (often using chunk-stream ID 4), though this prioritization isn't mandated by the protocol itself.
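The sketch below shows how one message is chunked. The first chunk carries a full type-0 header. Each continuation carries only a 1-byte type-3 basic header, so a muxer can slip audio chunks from another chunk stream between them. The sketch deliberately leaves out extended timestamps, chunk stream IDs above 63, and the compressed type-1 and type-2 headers. Using chunk stream ID 6 for video is an assumed convention:

```python
import struct

AUDIO, VIDEO, DATA_AMF0 = 8, 9, 18  # RTMP message type IDs


def chunk_message(payload: bytes, msg_type: int, timestamp: int,
                  stream_id: int, csid: int, chunk_size: int = 128) -> bytes:
    """Split one RTMP message into a type-0 chunk followed by type-3 continuations."""
    if not 2 <= csid <= 63:
        raise ValueError("this sketch only handles 1-byte basic headers (csid 2-63)")
    if timestamp >= 0xFFFFFF:
        raise ValueError("extended timestamps are not handled in this sketch")
    # Basic header (fmt=0 in the top 2 bits, csid below), then the 11-byte type-0
    # message header: 3-byte timestamp, 3-byte length, 1-byte type ID, and a
    # 4-byte message stream ID that is little-endian, unlike the other fields.
    out = bytearray([(0 << 6) | csid])
    out += timestamp.to_bytes(3, "big") + len(payload).to_bytes(3, "big")
    out += bytes([msg_type]) + struct.pack("<I", stream_id)
    for offset in range(0, len(payload), chunk_size):
        if offset:
            out.append((3 << 6) | csid)  # type-3 continuation: basic header only
        out += payload[offset:offset + chunk_size]
    return bytes(out)
```

With the default 128-byte chunk size, a 300-byte video message becomes three chunks of 128, 128, and 44 bytes. Raising the chunk size to 4096 cuts the per-chunk overhead, but larger chunks give audio fewer chances to interleave.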

Advanced Features: Adaptive Bitrate and Flow Control

RTMP manages flow with protocol control messages rather than its own retransmission scheme, since TCP already guarantees delivery. A Window Acknowledgement Size message tells the peer how often to send Acknowledgement messages. A Set Peer Bandwidth message caps how much unacknowledged data the peer may have in flight. Servers such as Adobe's Flash Media Server layered bandwidth-detection routines on top of this to choose a suitable stream quality, and audio is commonly prioritized when chunks are interleaved.

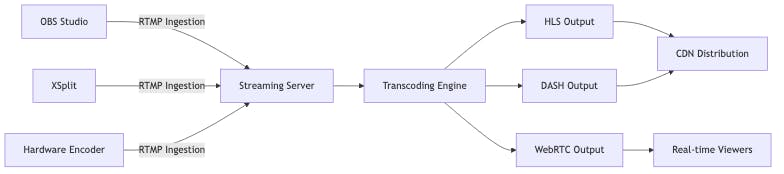

Modern RTMP servers have evolved far beyond Adobe Media Server. Nginx with the open-source nginx-rtmp-module provides RTMP ingestion with HLS/DASH output, and Wowza Streaming Engine offers enterprise clustering and advanced transcoding. Managed cloud services such as AWS Elemental MediaLive also accept RTMP ingest. Azure Media Services offered the same until Microsoft retired it in 2024.

Technical Limitations and Modern Alternatives

While RTMP excels at ingestion, several limitations explain its reduced role in direct content delivery:

TCP Head-of-Line Blocking: RTMP's TCP foundation ensures reliability but can introduce latency spikes when packet loss occurs. TCP's in-order delivery requirement means a single lost packet blocks all subsequent data until retransmission completes.

Flash Dependency: Original RTMP playback required Flash Player, which introduced security vulnerabilities and performance overhead. Modern browsers' Flash deprecation eliminated RTMP's primary playback mechanism.

Limited Scalability: RTMP's persistent connection model requires dedicated server resources per viewer, limiting scalability compared to HTTP-based protocols that leverage CDN caching.

Firewall Traversal: RTMP runs on its own default TCP port (1935), which corporate firewalls and proxies often block. HTTP-based alternatives use standard web ports and pass through more easily. Adobe's workaround was RTMPT, which tunnels RTMP over HTTP.

Despite these limitations, RTMP's influence on streaming architecture remains profound. Its message multiplexing concepts influenced WebRTC's design, while its adaptive quality mechanisms laid groundwork for modern ABR algorithms. For ingestion workflows requiring reliability and low latency, RTMP remains the industry standard, cementing its position as a foundational technology in the streaming ecosystem.

HLS and LL-HLS: Apple's HTTP Revolution

HTTP Live Streaming (HLS) represents one of the most significant paradigm shifts in streaming technology, fundamentally changing how video content is delivered across the internet. Introduced by Apple in 2009 alongside the iPhone 3GS, HLS was designed to solve a critical problem: delivering high-quality video to mobile devices with limited processing power, variable network conditions, and the need for exceptional battery life.

HLS's revolutionary insight was leveraging the existing HTTP infrastructure—web servers, CDNs, and caching mechanisms—rather than requiring specialized streaming servers. This architectural decision enabled massive scalability while maintaining compatibility with existing internet infrastructure, making HLS the most widely deployed streaming protocol today.

The Technical Revolution: Segmented Streaming

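HLS's core innovation lies in transforming continuous video streams into discrete, independently playable segments. Segmentation solves several streaming challenges at once. Each segment is an ordinary file that HTTP servers and CDNs can cache, clients can fetch segments independently, and players can switch quality levels at segment boundaries.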

Segment Generation Process

The HLS encoding process involves several sophisticated steps:

- Source Preprocessing: Live or recorded video is analyzed for scene changes, motion complexity, and audio characteristics to optimize encoding parameters

- Adaptive Encoding: Content is simultaneously encoded into multiple quality levels (typically 4-8 variants) with different bitrates, resolutions, and codec parameters

- Temporal Segmentation: Each quality level is divided into segments of fixed duration (typically 2-10 seconds), with each segment beginning with an IDR (Instantaneous Decoder Refresh) frame to ensure independent playability

- Container Packaging: Segments are packaged in MPEG-TS or fragmented MP4 format, optimized for HTTP delivery and CDN caching
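The segmentation rule can be sketched in a few lines. Segment boundaries may only fall on IDR frames, so the packager accumulates keyframes until it reaches the target duration. The function is hypothetical and assumes the encoder has already reported keyframe timestamps in seconds:

```python
def cut_segments(keyframe_times: list[float], stream_end: float,
                 target: float = 6.0) -> list[tuple[float, float]]:
    """Return (start, duration) pairs whose boundaries all fall on keyframes."""
    segments = []
    start = keyframe_times[0]
    for kf in keyframe_times[1:]:
        # Only close a segment once it has reached the target duration.
        if kf - start >= target:
            segments.append((start, kf - start))
            start = kf
    segments.append((start, stream_end - start))  # trailing partial-length segment
    return segments


# Keyframes every 2 s line up with a 6 s target:
print(cut_segments([0, 2, 4, 6, 8, 10, 12, 14], stream_end=15))  # [(0, 6), (6, 6), (12, 3)]
# Keyframes every 2.5 s do not, so the first segment overruns to 7.5 s:
print(cut_segments([0, 2.5, 5, 7.5, 10], stream_end=12))         # [(0, 7.5), (7.5, 4.5)]
```

When segments overrun, the playlist's EXT-X-TARGETDURATION must grow to cover the longest one. For this reason, live encoders are usually configured with a fixed keyframe interval that divides the segment duration evenly.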

Manifest File Architecture

The HLS manifest system uses two levels of playlists:

Master Playlist (.m3u8): Contains metadata about all available stream variants

```
#EXTM3U
#EXT-X-VERSION:6
#EXT-X-STREAM-INF:BANDWIDTH=2000000,RESOLUTION=1280x720,CODECS="avc1.64001f,mp4a.40.2"
720p/playlist.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080,CODECS="avc1.640028,mp4a.40.2"
1080p/playlist.m3u8
```

Media Playlist: Lists the actual segment files for each quality level

```
#EXTM3U
#EXT-X-VERSION:6
#EXT-X-TARGETDURATION:10
#EXT-X-MEDIA-SEQUENCE:0
#EXTINF:9.009,
segment0.ts
#EXTINF:9.009,
segment1.ts
```

This two-tier system keeps bandwidth usage efficient. Clients download the lightweight master playlist once. For live streams, they then keep reloading the media playlist for their selected quality level to discover new segments.
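Because the master playlist is line-oriented, a client can extract its variants with very little code. The following is a minimal sketch, not a spec-complete parser. It handles only EXT-X-STREAM-INF entries and ignores EXT-X-MEDIA renditions and other tags:

```python
import re

# KEY=VALUE pairs; quoted values may contain commas (e.g. CODECS="avc1...,mp4a...").
ATTR = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_master(text: str) -> list[dict[str, str]]:
    """Return one dict per variant: its EXT-X-STREAM-INF attributes plus its URI."""
    variants: list[dict[str, str]] = []
    pending = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXT-X-STREAM-INF:"):
            attr_list = line.split(":", 1)[1]
            pending = {k: v.strip('"') for k, v in ATTR.findall(attr_list)}
        elif line and not line.startswith("#") and pending is not None:
            pending["URI"] = line  # the URI line follows its STREAM-INF tag
            variants.append(pending)
            pending = None
    return variants
```

Run on the master playlist above, this returns two dicts. The first has a `BANDWIDTH` of `"2000000"` and a `URI` of `"720p/playlist.m3u8"`. Its quoted `CODECS` value keeps its internal comma intact.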

Advanced HLS Features and Optimizations

Adaptive Bitrate Algorithm Deep Dive

Modern HLS implementations use sophisticated algorithms to balance video quality, rebuffering events, and bandwidth efficiency. The typical ABR algorithm considers:

Bandwidth Estimation: Clients maintain exponentially weighted moving averages of download speeds for recent segments, with recent measurements weighted more heavily to respond to changing conditions.

Buffer Health: The algorithm monitors playback buffer levels, being more conservative with quality increases when buffer levels are low to prevent rebuffering events.

Viewport Optimization: Advanced implementations consider device capabilities and screen size, avoiding high-resolution streams on small mobile displays to conserve bandwidth and battery.
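The first two inputs above, throughput and buffer health, can be combined in a few lines. This is a toy controller, not the algorithm of any particular player. The smoothing factor alpha, the 10-second buffer threshold, and the 0.5/0.8 safety margins are all assumptions chosen for illustration:

```python
class ThroughputAbr:
    """Toy ABR: EWMA throughput estimate with a buffer-dependent safety margin."""

    def __init__(self, ladder_bps: list[int], alpha: float = 0.3) -> None:
        self.ladder = sorted(ladder_bps)
        self.alpha = alpha          # weight given to the newest throughput sample
        self.estimate_bps = None    # smoothed throughput; None until first download

    def on_segment_downloaded(self, size_bytes: int, seconds: float) -> None:
        sample = size_bytes * 8 / seconds
        if self.estimate_bps is None:
            self.estimate_bps = sample
        else:
            self.estimate_bps = self.alpha * sample + (1 - self.alpha) * self.estimate_bps

    def choose_bitrate(self, buffer_s: float) -> int:
        if self.estimate_bps is None:
            return self.ladder[0]   # no data yet: start at the lowest rung
        # Spend less of the estimate when the buffer is thin, to avoid a stall.
        margin = 0.5 if buffer_s < 10 else 0.8
        usable = self.estimate_bps * margin
        fitting = [b for b in self.ladder if b <= usable]
        return fitting[-1] if fitting else self.ladder[0]
```

With a 7.5 Mbps throughput estimate, this picks the 5 Mbps rung when 20 seconds are buffered. With only 5 seconds buffered, it drops to the 2 Mbps rung for the same estimate.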

CDN Integration and Edge Optimization

HLS's HTTP foundation enables sophisticated CDN optimizations:

Segment Caching: Each segment acts as an independent cacheable object, enabling efficient distribution through edge servers worldwide. Popular content can be cached close to viewers, dramatically reducing origin server load.

Predictive Prefetching: CDNs can analyze viewing patterns to predictively cache upcoming segments and quality levels, improving performance during quality transitions.

Geographic Load Balancing: Manifests can be generated per request so that segment URLs point each client at a suitable edge hostname. This complements the DNS- or anycast-based routing most CDNs already use.

Low-Latency HLS: Cutting Latency to Seconds

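Traditional HLS faced a structural latency floor. Players conventionally start about three segments behind the live edge, so with 6-second segments the buffer alone accounts for roughly 18 seconds. Encoding, packaging, and CDN propagation then push end-to-end latency to around 20-30 seconds. With the 10-second segments Apple originally recommended, 30-45 seconds was common. This delay proved acceptable for on-demand content but problematic for live events where viewers expect near-real-time experiences.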
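The latency floor is mostly arithmetic: hold-back multiplied by segment length, plus whatever the encode and delivery pipeline adds. The following is a back-of-the-envelope sketch. The three-segment hold-back mirrors the convention described above, and the 4-second pipeline delay is an assumption:

```python
def hls_startup_latency(segment_s: float, segments_behind: int = 3,
                        pipeline_s: float = 4.0) -> float:
    """Rough live latency at startup: hold-back from the live edge plus pipeline delay.

    pipeline_s lumps together encoding, packaging, and CDN propagation; the 4 s
    default is an illustrative assumption, not a measured value.
    """
    return segment_s * segments_behind + pipeline_s


for seg in (10.0, 6.0, 2.0):
    print(f"{seg:g}s segments -> ~{hls_startup_latency(seg):.0f}s behind live")
```

Shrinking segments helps, but very short segments multiply playlist and request overhead. LL-HLS's partial segments are designed to address exactly that problem.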

Low-Latency HLS (LL-HLS), introduced by Apple in 2019, represents a sophisticated engineering solution that maintains HLS's scalability benefits while achieving 2-5 second latency.

Partial Segment Innovation

LL-HLS's breakthrough innovation is the partial segment concept:

Temporal Division: Each traditional segment (e.g., 6 seconds) is divided into smaller partials (e.g., 6 partials of 1 second each). Each partial is published as soon as it is encoded and packaged, rather than after the full segment completes.

Initialization Section Reference: Partials reference the same initialization section through EXT-X-MAP tags; players must fetch the appropriate initialization section before they can decode the first partial.

Manifest Updates: The playlist is updated as each partial becomes available, providing near-real-time content availability information to clients.
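The following sketch shows the playlist lines a packager might append as partials complete. The tag and attribute names (EXT-X-PART, DURATION, URI, INDEPENDENT) come from the HLS specification. The file naming, and the assumption that only the first partial of each segment begins with an IDR frame, are illustrative. A real LL-HLS playlist also declares EXT-X-PART-INF and EXT-X-SERVER-CONTROL:

```python
def partial_lines(msn: int, completed: int, part_s: float) -> list[str]:
    """EXT-X-PART lines for the partials of segment `msn` finished so far."""
    lines = []
    for i in range(completed):
        # Assumption: the encoder starts each full segment on an IDR frame, so only
        # partial 0 can be decoded without earlier data.
        independent = ",INDEPENDENT=YES" if i == 0 else ""
        uri = f"seg{msn}.part{i}.mp4"  # hypothetical naming scheme
        lines.append(f'#EXT-X-PART:DURATION={part_s:.3f},URI="{uri}"{independent}')
    return lines


for line in partial_lines(msn=273, completed=3, part_s=1.0):
    print(line)
```

As each new partial is published, the server appends another line. Players waiting on the playlist then receive the update.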

HTTP/2 and Blocking Requests

LL-HLS leverages HTTP/2 features for efficiency:

Multiplexed Connections: A single HTTP/2 connection handles manifest updates and segment downloads, reducing connection overhead and improving performance on mobile networks.

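Preload Hints: Apple's original 2019 design had servers push upcoming partials over HTTP/2. The 2020 revision of the specification dropped that requirement in favor of EXT-X-PRELOAD-HINT tags. A hint tells the client the URI of the next partial so it can request it early, and the server holds the request open until the partial exists.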

Blocking Requests: Clients can send "blocking" playlist requests that don't return until new content is available, reducing polling overhead while maintaining responsiveness.
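A blocking reload is an ordinary GET with delivery directives in the query string. The HLS specification names these directives _HLS_msn (media sequence number) and _HLS_part (partial index). The helper function and URLs below are hypothetical:

```python
from typing import Optional
from urllib.parse import urlencode, urlsplit, urlunsplit


def blocking_reload_url(playlist_url: str, msn: int, part: Optional[int] = None) -> str:
    """Append LL-HLS delivery directives so the server holds the reload until
    media sequence `msn` (and, optionally, partial `part` of it) is available."""
    directives = {"_HLS_msn": msn}
    if part is not None:
        directives["_HLS_part"] = part  # only valid together with _HLS_msn
    scheme, netloc, path, query, fragment = urlsplit(playlist_url)
    query = "&".join(q for q in (query, urlencode(directives)) if q)
    return urlunsplit((scheme, netloc, path, query, fragment))


print(blocking_reload_url("https://cdn.example.com/live/720p.m3u8", msn=274, part=1))
```

Any query parameters already on the URL, such as an access token, are preserved, and the directives are appended after them.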

Backwards Compatibility Strategy

LL-HLS maintains compatibility with existing HLS infrastructure by design. A single LL-HLS playlist already works with traditional HLS players, which simply ignore the #EXT-X-PART lines and other tags they don't understand. Some deployments still generate separate traditional and LL-HLS manifests as a deployment optimization, but the specification does not require it.

Real-World Performance and Optimization

Mobile Network Optimizations

HLS implementations include numerous mobile-specific optimizations:

Network Type Detection: Players adjust quality selection algorithms based on detected network type (WiFi, 4G, 5G), with more aggressive quality increases on unlimited connections.

Battery Optimization: Quality algorithms consider device battery level and thermal state, reducing quality on overheating devices to prevent thermal throttling.

Cellular Data Management: Advanced implementations respect iOS and Android data usage preferences, automatically reducing quality on metered connections.

Content-Aware Encoding

Modern HLS systems use content analysis to optimize encoding parameters:

Scene Complexity Analysis: Encoders analyze temporal and spatial complexity to allocate bitrate more efficiently, providing higher quality for complex scenes while reducing bitrate for simple content.

Audio Optimization: Separate audio optimization considers content type (music, speech, ambient) to select appropriate codecs and bitrates.

HDR and Wide Color Gamut: Advanced HLS implementations support HDR10 and Dolby Vision, with automatic fallback to SDR for unsupported devices.

HLS's combination of scalability, compatibility, and continuous innovation has made it a backbone of modern streaming infrastructure. From on-demand libraries to live sports broadcasts serving millions of concurrent viewers, HLS provides much of the foundation of the streaming economy. Its evolution toward low-latency capabilities demonstrates how mature protocols can adapt to new requirements while keeping their fundamental architectural advantages.

CMAF Integration: The Common Media Application Format (CMAF) represents a significant advancement in streaming standardization. CMAF unifies fragmented MP4 (fMP4) packaging for both HLS and DASH, eliminating the need for separate packaging workflows. This standardization enables broadcasters to create a single fMP4 stream that can be delivered via either protocol, dramatically simplifying content preparation and reducing storage costs. CMAF also enables efficient byte-range requests and improves cache efficiency across CDN infrastructure. Note that while CMAF unifies packaging, multiple encodes are still needed when serving different codecs (H.264, AV1, etc.).

MPEG-DASH: The Open Standard Revolution

MPEG-DASH (Dynamic Adaptive Streaming over HTTP) emerged in 2012 as the international community's response to the fragmented streaming landscape dominated by proprietary protocols. Developed under the auspices of the Moving Picture Experts Group—the same organization that created fundamental video compression standards like MPEG-2 and MPEG-4—DASH represents the first truly open, vendor-neutral standard for adaptive streaming.

The protocol's development was driven by a critical industry need. HLS performed well in Apple's ecosystem, but a single vendor specified and steered it, which complicated multi-platform deployments. Android devices, smart TVs, gaming consoles, and set-top boxes often required custom player implementations. MPEG-DASH aimed to establish a unified standard that could serve all platforms while enabling innovation through competition rather than vendor lock-in.

Architectural Philosophy: Codec-Agnostic Design

MPEG-DASH's most significant technical innovation lies in its completely codec-agnostic architecture. Unlike HLS, which initially mandated specific codecs (H.264 for video, AAC for audio), DASH treats codecs as interchangeable components that can be selected based on device capabilities, licensing considerations, and performance requirements.

Container Format Flexibility

MPEG-DASH supports multiple container formats simultaneously:

Fragmented MP4 (fMP4): The most common format, providing excellent compatibility with modern devices and efficient random access capabilities. fMP4's structure enables precise seeking and supports advanced features like multiple audio tracks and subtitle streams.

MPEG Transport Streams (.ts): Legacy compatibility format supporting older devices and broadcast infrastructure. While less efficient than fMP4, TS provides broader compatibility with set-top boxes and embedded devices.

WebM: Open-source container supporting VP8/VP9 video and Vorbis/Opus audio, particularly valuable for cost-conscious deployments avoiding codec licensing fees.

Advanced Codec Support

DASH's codec flexibility enables cutting-edge compression technologies:

Next-Generation Video: Support for H.265/HEVC, VP9, and AV1 enables 4K and 8K streaming at reasonable bitrates. AV1, in particular, provides 30-50% better compression than H.264 while being royalty-free.

Immersive Audio: Dolby Atmos, DTS:X, and spatial audio formats for enhanced audio experiences, particularly important for premium content and virtual reality applications.

HDR and Color: HDR10, HDR10+, and Dolby Vision support with automatic fallback mechanisms for standard dynamic range displays.

Media Presentation Description: The Engine of Adaptability

MPEG-DASH's Media Presentation Description (MPD) represents one of the most sophisticated manifest systems in streaming technology. Unlike HLS's relatively simple playlist format, the MPD provides comprehensive metadata enabling complex adaptive streaming scenarios.

Hierarchical Structure

The MPD organizes content into a multi-level hierarchy:

Period: A contiguous time span of the presentation, enabling seamless transitions between different kinds of content (e.g., an ad break followed by the main program)

Adaptation Set: Groups of representations that provide alternative encodings of the same content (different bitrates, languages, or accessibility features)

Representation: Specific encoding parameters including resolution, bitrate, codec, and quality metrics

Segment: Individual media chunks that can be requested independently
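The hierarchy maps directly onto nested XML elements. This sketch uses Python's standard-library ElementTree to emit a minimal static MPD with one Period, one video Adaptation Set, and two Representations. The attribute values are illustrative. A deployable MPD would also need audio and any DRM signaling:

```python
import xml.etree.ElementTree as ET


def build_mpd() -> str:
    """Minimal static MPD: MPD > Period > AdaptationSet > Representation."""
    mpd = ET.Element("MPD", {
        "xmlns": "urn:mpeg:dash:schema:mpd:2011",
        "type": "static",
        "mediaPresentationDuration": "PT60S",
        "minBufferTime": "PT2S",
        "profiles": "urn:mpeg:dash:profile:isoff-live:2011",
    })
    period = ET.SubElement(mpd, "Period", {"id": "main", "start": "PT0S"})
    video = ET.SubElement(period, "AdaptationSet",
                          {"contentType": "video", "mimeType": "video/mp4"})
    # One template shared by all Representations; $Bandwidth$ tells them apart.
    ET.SubElement(video, "SegmentTemplate", {
        "media": "video_$Bandwidth$_$Number$.m4s",
        "initialization": "video_$Bandwidth$_init.m4s",
        "startNumber": "1", "duration": "4000", "timescale": "1000",
    })
    for bandwidth, width, height, codecs in [
        (2_000_000, 1280, 720, "avc1.64001f"),
        (5_000_000, 1920, 1080, "avc1.640028"),
    ]:
        ET.SubElement(video, "Representation", {
            "id": f"video-{height}p", "bandwidth": str(bandwidth),
            "width": str(width), "height": str(height), "codecs": codecs,
        })
    return ET.tostring(mpd, encoding="unicode")


print(build_mpd())
```

The Segment level does not appear as explicit elements here. Clients derive segment URLs from the SegmentTemplate instead.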

Advanced Metadata Capabilities

The MPD format supports sophisticated metadata beyond basic streaming parameters:

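Content Protection: ContentProtection descriptors can signal several Digital Rights Management (DRM) systems for the same content, commonly Widevine and PlayReady over Common Encryption, so each player can pick a system its device supports. FairPlay, Apple's DRM, is usually paired with HLS instead.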

Accessibility Features: Comprehensive support for closed captions, audio descriptions, sign language video tracks, and navigation aids for visually impaired users.

Timed Metadata: Support for synchronized metadata including advertisements, chapter markers, and interactive elements that can trigger application events.

Technical Deep Dive: Segment Addressing and Initialization

MPEG-DASH employs multiple strategies for segment addressing, each optimized for different deployment scenarios:

Template-Based Addressing

Dynamic URL generation using templates enables efficient CDN distribution:

```xml
<SegmentTemplate media="video_$Bandwidth$_$Number$.m4s"
                 initialization="video_$Bandwidth$_init.m4s"
                 startNumber="1" duration="4000" timescale="1000"/>
```

This approach generates URLs dynamically, reducing manifest size and enabling efficient caching strategies.
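Clients build each segment URL by substituting identifiers into the template. This sketch supports $Number$, $Bandwidth$, $RepresentationID$, the $$ escape, and printf-style width tags such as $Number%05d$. It leaves out $Time$, which is used with SegmentTimeline. The function names are hypothetical:

```python
import re

# $Identifier$ with an optional %0<width>d format tag; "$$" is an escaped "$".
TEMPLATE_ID = re.compile(r"\$(\w*)(%0\d+d)?\$")


def expand_template(template: str, number: int, bandwidth: int,
                    representation_id: str = "") -> str:
    values = {"Number": number, "Bandwidth": bandwidth,
              "RepresentationID": representation_id}

    def substitute(match: re.Match) -> str:
        name, fmt = match.group(1), match.group(2)
        if name == "":
            return "$"
        value = values[name]  # KeyError for identifiers this sketch doesn't support
        return fmt % value if fmt else str(value)

    return TEMPLATE_ID.sub(substitute, template)


def segment_start_s(number: int, start_number: int = 1,
                    duration: int = 4000, timescale: int = 1000) -> float:
    """Presentation start time (seconds) of segment `number` for a fixed-duration template."""
    return (number - start_number) * duration / timescale


print(expand_template("video_$Bandwidth$_$Number$.m4s", number=3, bandwidth=2_000_000))
```

With the template above, segment 3 of the 2 Mbps representation resolves to `video_2000000_3.m4s` and starts 8 seconds into the presentation. The client can compute this without fetching a per-segment list.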

Segment Timeline Precision

For content with variable segment durations (e.g., live streams with ad insertion), DASH supports precise timeline descriptions:

```xml
<SegmentTimeline>
  <S t="0" d="4000" r="10"/>
  <S d="3000"/>
  <S d="4000" r="5"/>
</SegmentTimeline>
```

This enables frame-accurate seeking and synchronization across different quality levels.
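Expanding a timeline shows what the r attribute means: it counts repeats after the first occurrence, so r="10" describes 11 segments. The following is a sketch using the standard-library XML parser. It assumes the element carries no namespace, as in the snippet above, and rejects the open-ended r="-1" form:

```python
import xml.etree.ElementTree as ET


def expand_timeline(xml_text: str) -> list[tuple[int, int]]:
    """Expand <S t d r> entries into explicit (start, duration) pairs in timescale units."""
    segments: list[tuple[int, int]] = []
    t = 0
    for s in ET.fromstring(xml_text).findall("S"):
        t = int(s.get("t", t))  # explicit start time, else continue from previous end
        d = int(s.get("d"))
        r = int(s.get("r", 0))  # number of *additional* repeats of this entry
        if r < 0:
            raise ValueError("open-ended repeats (r=-1) are not handled in this sketch")
        for _ in range(r + 1):
            segments.append((t, d))
            t += d
    return segments
```

For the timeline above, this yields 18 segments. Eleven 4000-unit segments start at 0, a 3000-unit segment starts at 44000, and six more 4000-unit segments start at 47000. With a timescale of 1000, these units are milliseconds.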

Initialization Segments

MPEG-DASH separates media initialization data from actual content, enabling efficient quality switching:

Header Information: Codec parameters, decoding configuration, and container structure are delivered once in initialization segments.

Media Segments: Contain only compressed audio/video data, minimizing bandwidth usage during quality transitions.

Quality Switching: Clients can switch quality levels by downloading a new initialization segment followed by media segments at the new quality level.

Advanced Features: Multi-Period and Event Handling

Multi-Period Presentations

MPEG-DASH's period concept enables sophisticated content workflows:

Live Events with Ads: Seamless transitions between live content and advertising with different encoding parameters, quality levels, or even content protection schemes.

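Multi-Camera Productions: Sports broadcasts can offer multiple camera angles as separate Adaptation Sets within a Period, enabling viewer-selectable perspectives. Periods divide a presentation sequentially in time, so they suit ad breaks rather than simultaneous angles.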

Episodic Content: TV series can be delivered as single presentations with each episode as a separate period, enabling continuous playback while maintaining distinct encoding optimizations.

Event Stream Processing

DASH includes sophisticated event handling for interactive and advertising applications:

Splice Events: Standards-compliant ad insertion points that maintain synchronization across quality levels and enable seamless ad replacement.

Application Events: Custom metadata delivery enabling interactive features, social media integration, and real-time audience participation.

Emergency Alerts: Event streams can also carry emergency-alert signaling, so applications can surface alerts promptly.

Performance Optimizations and CDN Integration

Intelligent Prefetching

MPEG-DASH enables sophisticated prefetching strategies:

Predictive Quality Selection: Clients can prefetch segments at more than one quality level so a switch takes effect immediately, at the cost of downloading data that may never be played.

Parallel Downloading: Multiple segments can be downloaded simultaneously across different CDN endpoints, improving performance on high-bandwidth connections.

Range Requests: HTTP byte-range requests enable partial segment downloads for seeking operations, reducing bandwidth usage and improving responsiveness.

Edge Computing Integration

Modern DASH deployments leverage edge computing for optimization:

Just-in-Time Packaging: Content can be stored in high-quality intermediate formats and packaged into DASH segments on-demand at edge servers, reducing storage requirements while maintaining flexibility.

Dynamic Ad Insertion: Server-side ad insertion can be performed at edge locations based on viewer demographics and preferences, maintaining stream continuity while enabling personalized advertising.

Quality Optimization: Edge servers can analyze local network conditions and device capabilities to provide optimized manifest versions for specific geographic regions.

Ecosystem and Industry Adoption

MPEG-DASH's open standard nature has fostered a robust ecosystem of tools and implementations:

Open Source Players: dash.js (the DASH Industry Forum's reference player), ExoPlayer (now part of Android's Jetpack Media3), and VLC provide open-source implementations that demonstrate best practices and enable rapid deployment.

Commercial Solutions: Bitmovin, JW Player, and THEOplayer offer enterprise-grade DASH implementations with advanced features and support.

Cloud Services: AWS Elemental MediaPackage and Google Cloud's video APIs provide managed DASH packaging and delivery. Microsoft's Azure Media Services did as well until its retirement in 2024.

The protocol's vendor neutrality has enabled widespread adoption across diverse industries, from entertainment giants like Netflix and Disney+ to educational platforms, enterprise communications, and government broadcasting systems. Its technical sophistication and standardized approach position MPEG-DASH as a cornerstone technology for the future of streaming media.

WebRTC: Real-Time Communication Redefined

WebRTC (Web Real-Time Communication) represents a fundamental paradigm shift in media transmission. It enables direct peer-to-peer (P2P) communication between devices with latencies typically under a quarter-second in practice: 120-250ms on the public internet, with sub-50ms possible only on LANs or within metro regions. Initiated by Google in 2011 and standardized by the W3C and IETF, WebRTC emerged from the recognition that existing streaming technologies were inadequate for interactive applications.

WebRTC's architecture integrates multiple complex systems working in harmony. The media pipeline includes capture and processing with echo cancellation and noise suppression, real-time encoding with hardware acceleration, network transmission over the best path ICE can find, and decoding with precise timing control using adaptive jitter buffers.

Connection Establishment and Network Traversal

WebRTC's connection establishment uses the Interactive Connectivity Establishment (ICE) framework to discover optimal network paths. This includes direct connections when devices share networks, STUN servers for NAT traversal, and TURN relays when direct connection is impossible. The process gathers several candidate types: host candidates (local interface addresses), server reflexive candidates (public addresses learned via STUN), relayed candidates (addresses allocated on a TURN server), and peer reflexive candidates discovered during connectivity checks.
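ICE decides which paths to try first by assigning every candidate a numeric priority. The sketch below applies the priority formula and the recommended type preferences from RFC 8445 (host 126, peer reflexive 110, server reflexive 100, relayed 0), plus the candidate-pair formula used to order connectivity checks. Real browsers may pick different local preferences, for example to favor one network interface, so treat the defaults here as assumptions.

```python
# Type preferences recommended by RFC 8445, Section 5.1.2.2 (implementations may differ).
TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


def candidate_priority(cand_type: str, local_pref: int = 65535, component_id: int = 1) -> int:
    """priority = 2^24 * type_pref + 2^8 * local_pref + (256 - component_id)."""
    if not 0 <= local_pref <= 65535:
        raise ValueError("local preference must fit in 16 bits")
    if not 1 <= component_id <= 256:
        raise ValueError("component ID must be in 1..256")
    return (TYPE_PREFERENCE[cand_type] << 24) + (local_pref << 8) + (256 - component_id)


def pair_priority(controlling: int, controlled: int) -> int:
    """Candidate-pair priority (RFC 8445, Section 6.1.2.3); higher pairs are checked first."""
    g, d = controlling, controlled
    return (1 << 32) * min(g, d) + 2 * max(g, d) + (1 if g > d else 0)


if __name__ == "__main__":
    for kind in ("host", "prflx", "srflx", "relay"):
        print(kind, candidate_priority(kind))
```

With the defaults, a host candidate scores 2130706431 and a relayed candidate 16777215, which is why a TURN relay is normally used only when every direct path fails its checks.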

During signaling, peers exchange Session Description Protocol (SDP) offers and answers over an application-defined channel, sharing capability information including supported codecs, encryption parameters, and network addresses. All communication is encrypted by default: data channels run over DTLS, and media streams use SRTP with keys negotiated through DTLS, so media is always encrypted in transit. True end-to-end encryption, however, requires additional measures such as insertable streams or a peer-to-peer mesh topology, because an SFU terminates DTLS-SRTP and re-encrypts streams unless E2EE is specifically implemented.
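To make the capability exchange concrete, the following minimal sketch pulls the codec list out of an SDP offer. It relies only on the standard `m=` line layout (payload types listed in preference order) and `a=rtpmap` attributes; the sample offer is illustrative, and the parser deliberately assumes one `m=` section per media kind, which real multi-stream offers can violate.

```python
import re

# Matches rtpmap attributes such as "a=rtpmap:96 VP8/90000" or "a=rtpmap:111 opus/48000/2".
RTPMAP = re.compile(r"^a=rtpmap:(\d+) ([^/\s]+)/(\d+)")


def codecs_by_media(sdp: str) -> dict[str, list[str]]:
    """Map each media kind to "codec/clock-rate" strings in the sender's preference order.

    Simplification: assumes one m= section per media kind (later ones overwrite earlier ones).
    """
    order: dict[str, list[int]] = {}
    names: dict[str, dict[int, str]] = {}
    kind = None
    for raw in sdp.splitlines():
        line = raw.strip()
        if line.startswith("m="):
            # m=<media> <port> <proto> <fmt> ...; payload types appear in preference order
            fields = line[2:].split()
            kind = fields[0]
            order[kind] = [int(pt) for pt in fields[3:] if pt.isdigit()]
            names[kind] = {}
        elif kind is not None:
            match = RTPMAP.match(line)
            if match:
                payload_type, codec, clock_rate = match.groups()
                names[kind][int(payload_type)] = f"{codec}/{clock_rate}"
    # Keep only payload types that have an rtpmap line
    return {k: [names[k][pt] for pt in order[k] if pt in names[k]] for k in order}


SAMPLE_OFFER = """v=0
o=- 4611731400430051336 2 IN IP4 127.0.0.1
s=-
t=0 0
m=audio 9 UDP/TLS/RTP/SAVPF 111 0
a=rtpmap:111 opus/48000/2
a=rtpmap:0 PCMU/8000
m=video 9 UDP/TLS/RTP/SAVPF 96 98
a=rtpmap:96 VP8/90000
a=rtpmap:98 VP9/90000
m=application 9 UDP/DTLS/SCTP webrtc-datachannel
"""

if __name__ == "__main__":
    print(codecs_by_media(SAMPLE_OFFER))
```

The answerer typically intersects these lists with its own supported codecs, and the first match in the offerer's order usually wins.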

Advanced Protocol Stack and Adaptation

WebRTC's protocol stack prioritizes low latency over guaranteed delivery: media travels over UDP, and data channels use SCTP tunneled through DTLS over the same UDP transport. RTP handles packet sequencing and timing, while RTCP provides transmission quality feedback for real-time adaptation.
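One piece of that RTCP feedback is the interarrival jitter field in receiver reports. RFC 3550 (Section 6.4.1) defines it as a running estimate: for consecutive packets, D is the change in transit time, and the estimate moves one sixteenth of the way toward |D| each step. The sketch below assumes send and arrival times are already expressed in the same units (RFC 3550 uses the RTP timestamp clock).

```python
def interarrival_jitter(send_ts: list[int], arrival_ts: list[int]) -> float:
    """RFC 3550 interarrival jitter: J += (|D(i-1, i)| - J) / 16, in timestamp units."""
    if len(send_ts) != len(arrival_ts):
        raise ValueError("need one arrival time per sent packet")
    jitter = 0.0
    for i in range(1, len(send_ts)):
        # D(i-1, i): difference in relative transit time between consecutive packets
        d = (arrival_ts[i] - arrival_ts[i - 1]) - (send_ts[i] - send_ts[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter


if __name__ == "__main__":
    # Perfectly paced packets produce zero jitter; one late packet nudges the estimate up.
    print(interarrival_jitter([0, 10, 20, 30], [5, 15, 25, 35]))
    print(interarrival_jitter([0, 10, 20], [5, 31, 25]))
```

The 1/16 gain smooths out isolated spikes, which makes the value useful to a sender as a trend signal rather than a per-packet measurement.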

The platform implements sophisticated congestion control built around Google Congestion Control (GCC), a delay-based bandwidth estimator that modern builds run on the sender using transport-wide congestion control (transport-cc) feedback from the receiver. BBR-style algorithms have been explored experimentally, but production builds still rely on GCC with transport-cc feedback for stability. Dynamic adaptation operates on multiple timescales: immediate frame dropping under sudden congestion, short-term bitrate and resolution adjustment, and longer-term codec optimization.
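GCC pairs a delay-based overuse detector with an additive-increase/multiplicative-decrease (AIMD) rate controller. The sketch below models only the controller, taking the detector's output ("overuse", "normal", "underuse") as given. The constants (0.85 back-off, 1.08 growth per update, a 1.5x cap over the received rate) are assumptions in the spirit of the IETF GCC draft; real implementations scale growth by elapsed time and switch to additive increase near convergence.

```python
from dataclasses import dataclass

ALPHA = 0.85  # assumed multiplicative-decrease factor
ETA = 1.08    # assumed multiplicative-increase factor per update


@dataclass
class AimdRateController:
    """Simplified GCC-style rate controller driven by an overuse detector signal."""
    target_bps: float
    min_bps: float = 30_000
    max_bps: float = 2_500_000

    def update(self, signal: str, received_bps: float) -> float:
        if signal == "overuse":
            # Queues are building: back off below what the receiver actually got.
            self.target_bps = ALPHA * received_bps
        elif signal == "normal":
            # No queuing detected: probe upward, but not far beyond the delivered rate.
            self.target_bps = min(ETA * self.target_bps, 1.5 * received_bps)
        elif signal == "underuse":
            pass  # Queues are draining: hold the current rate.
        else:
            raise ValueError(f"unknown signal: {signal}")
        self.target_bps = max(self.min_bps, min(self.max_bps, self.target_bps))
        return self.target_bps


if __name__ == "__main__":
    ctrl = AimdRateController(target_bps=1_000_000)
    for sig, rx in [("normal", 1_000_000), ("normal", 1_050_000), ("overuse", 1_000_000)]:
        print(sig, round(ctrl.update(sig, rx)))
```

Basing the decrease on the measured receive rate, rather than the old target, is what lets the sender drop quickly to a rate the path is actually sustaining.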

Modern WebRTC supports simulcast, in which senders transmit multiple quality levels simultaneously, and Scalable Video Coding (SVC) with temporal, spatial, and quality layers for fine-grained adaptation. Error resilience mechanisms include Forward Error Correction (FEC), selective retransmission through NACK, and reference frame selection for recovering from lost reference frames without waiting for a new keyframe. Because every retransmission costs at least one round trip, and aggressive NACK usage adds delay and jitter, clients balance FEC against retransmission based on their RTT budget.
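The FEC-versus-NACK trade-off can be sketched as a simple rule: a retransmission only helps if the request and the resent packet can arrive before the frame's playout deadline. The function name, the 20 ms processing margin, and the 5% high-loss threshold below are hypothetical values chosen for illustration, not settings from any particular WebRTC implementation.

```python
def choose_loss_recovery(rtt_ms: float, playout_delay_ms: float, loss_rate: float,
                         margin_ms: float = 20.0, high_loss: float = 0.05) -> str:
    """Hypothetical heuristic: use NACK when a resend fits the latency budget, else FEC."""
    if rtt_ms < 0 or playout_delay_ms < 0 or not 0.0 <= loss_rate <= 1.0:
        raise ValueError("invalid network measurements")
    # A NACK round trip plus some processing time must fit before the frame is played out.
    retransmit_fits = rtt_ms + margin_ms <= playout_delay_ms
    if retransmit_fits and loss_rate < high_loss:
        return "nack"      # cheap: only lost packets are resent
    if retransmit_fits:
        return "nack+fec"  # heavy loss: add redundancy so fewer resends are needed
    return "fec"           # resends would arrive too late to be useful


if __name__ == "__main__":
    print(choose_loss_recovery(rtt_ms=40, playout_delay_ms=150, loss_rate=0.01))
    print(choose_loss_recovery(rtt_ms=200, playout_delay_ms=150, loss_rate=0.01))
```

FEC spends bandwidth up front whether or not loss occurs, so a rule like this reserves it for cases where retransmission cannot meet the deadline or loss is high enough to justify the overhead.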

Scaling Challenges and Architectural Solutions

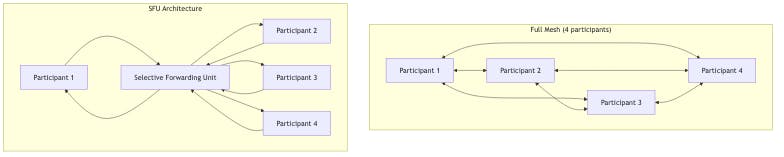

Direct peer-to-peer communication scales quadratically: a full mesh of N participants requires N×(N-1)/2 peer connections, and each participant must upload N-1 copies of its own stream. Modern deployments instead employ Selective Forwarding Units (SFUs), where each participant maintains a single connection to the SFU, which selectively forwards streams to other participants based on their capabilities and network conditions.
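A quick count shows why meshes break down. This sketch compares connection and stream counts for a full mesh and an SFU, assuming one audio/video stream per participant and ignoring simulcast layers.

```python
def topology_costs(n: int) -> dict[str, dict[str, int]]:
    """Connection and per-client stream counts for n participants (one stream each)."""
    if n < 2:
        raise ValueError("need at least two participants")
    return {
        "mesh": {
            "connections": n * (n - 1) // 2,  # one peer connection per pair
            "uplinks_per_client": n - 1,       # each client uploads to every other peer
            "downlinks_per_client": n - 1,
        },
        "sfu": {
            "connections": n,                  # each client connects only to the SFU
            "uplinks_per_client": 1,           # a single upload, forwarded by the SFU
            "downlinks_per_client": n - 1,     # the SFU relays everyone else's stream
        },
    }


if __name__ == "__main__":
    costs = topology_costs(10)
    print(costs["mesh"])
    print(costs["sfu"])
```

With 10 participants, a mesh needs 45 connections and 9 uploads per client, while an SFU needs 10 connections and a single upload per client; download counts stay the same, which is why SFUs also forward lower-quality layers to constrained receivers.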

SFUs perform minimal processing, primarily packet forwarding and metadata manipulation, enabling efficient scaling to thousands of participants. Unlike traditional transcoding servers, they keep latency low while still adapting quality in real time by sending different streams, such as different simulcast layers, to different participants. For comparison, Multipoint Control Units (MCUs) decode and re-encode streams centrally, trading higher server CPU usage for lower client bandwidth requirements.
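Per-subscriber adaptation in an SFU often comes down to picking which simulcast layer to forward. The sketch below chooses the highest layer that fits a subscriber's estimated bandwidth, keeping 10% headroom. The layer identifiers, bitrates, and headroom factor are hypothetical; production SFUs also weigh layer-switch frequency, keyframe availability, and the subscriber's rendered video size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SimulcastLayer:
    rid: str          # hypothetical layer identifier, e.g. "q", "h", "f"
    bitrate_bps: int


def select_layer(layers: list[SimulcastLayer], available_bps: float,
                 headroom: float = 0.9) -> SimulcastLayer:
    """Pick the highest layer that fits the subscriber's bandwidth estimate."""
    if not layers:
        raise ValueError("publisher sent no layers")
    budget = available_bps * headroom
    fitting = [layer for layer in layers if layer.bitrate_bps <= budget]
    if not fitting:
        # Nothing fits: forward the cheapest layer rather than pausing video entirely.
        return min(layers, key=lambda layer: layer.bitrate_bps)
    return max(fitting, key=lambda layer: layer.bitrate_bps)


LAYERS = [
    SimulcastLayer("q", 150_000),
    SimulcastLayer("h", 500_000),
    SimulcastLayer("f", 1_500_000),
]

if __name__ == "__main__":
    for bw in (100_000, 1_000_000, 3_000_000):
        print(bw, select_layer(LAYERS, bw).rid)
```

Because the SFU only switches which packets it forwards, each subscriber gets a different quality without any transcoding on the server.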

Modern Applications and Use Cases

WebRTC enables interactive live streaming with sub-second latency for real-time audience interaction, live gaming, and interactive education. Enterprise applications include video conferencing with screen sharing and virtual backgrounds, customer support integration, and remote collaboration tools.

Gaming and virtual reality applications leverage WebRTC for cloud gaming with sub-40ms motion-to-photon latency on regional edge deployments, social VR experiences requiring spatial audio synchronization, and collaborative AR where multiple users share virtual objects with real-time interaction.

Performance optimization involves sophisticated bandwidth estimation using Kalman filtering and machine learning, dynamic jitter buffer management balancing latency against quality, and hardware acceleration integration for mobile battery optimization. Security features include DTLS-SRTP encryption of all media in transit, permission prompts before cameras and microphones can be accessed, and ICE consent checks that stop media from being sent to endpoints that have not agreed to receive it.

Protocol Selection Framework: Making the Right Choice

Choosing the optimal streaming protocol requires understanding the complex interplay between user experience requirements, infrastructure constraints, and business objectives. The decision involves multiple dimensions that often present conflicting optimization targets.

Latency Requirements and Application Categories

Ultra-Low Latency (< 500ms): WebRTC remains unmatched for applications requiring real-time interaction including video conferencing, online gaming, remote control applications, live betting platforms, and interactive education where immediate feedback is critical.

Low Latency (2-5 seconds): LL-HLS, along with low-latency DASH using chunked CMAF, offers a strong balance of low latency and HTTP scalability, making it ideal for live sports broadcasting, interactive live streaming with chat integration, auction platforms, and breaking news where timely delivery matters but massive scale is required.

Standard Latency (10-30 seconds): Traditional HLS and MPEG-DASH excel for large-scale distribution including on-demand content libraries, large-scale live events, educational content, and cost-sensitive deployments prioritizing CDN efficiency over immediacy.
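The three latency tiers can be condensed into a first-pass selector. This is a simplified sketch: the tiers leave gaps (500 ms to 2 s, and 5 to 10 s), so the cut-offs at 2 s and 10 s are assumptions, and a real decision would also weigh device support, audience size, and cost.

```python
def suggest_protocol(latency_budget_s: float, needs_two_way: bool = False) -> str:
    """Map an end-to-end latency budget to a protocol family (assumed cut-offs)."""
    if latency_budget_s <= 0:
        raise ValueError("latency budget must be positive")
    if needs_two_way or latency_budget_s < 2:
        return "WebRTC"            # interactive, sub-second tier
    if latency_budget_s < 10:
        return "LL-HLS"            # 2-5 s tier that keeps HTTP/CDN scale
    return "HLS or MPEG-DASH"      # 10-30 s tier with maximum cache efficiency


if __name__ == "__main__":
    for budget in (0.3, 3, 20):
        print(budget, suggest_protocol(budget))
```

The `needs_two_way` flag reflects that conversational use cases need WebRTC regardless of how generous the stated budget is.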

Scalability and Infrastructure Considerations

CDN-friendly protocols leverage HTTP caching for massive scale: popular content can achieve cache hit rates of 95% or more, edge servers provide low-latency access worldwide, and origin load stays nearly flat as the audience grows because each edge fetches a segment once and serves it to many viewers, while per-GB delivery prices drop with volume. Real-time protocols require specialized infrastructure with dedicated resources per user, sophisticated load balancing, and optimized geographic routing.
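A back-of-the-envelope model shows why origin load barely moves with audience size for live HTTP streaming. The sketch assumes every viewer fetches each segment of one rendition, and that each edge point of presence (PoP) fetches each segment of each rendition from the origin exactly once (request collapsing, no evictions). It ignores manifest refreshes, and all input numbers are hypothetical.

```python
def live_cdn_load(viewers: int, edge_pops: int, renditions: int,
                  segment_s: int, duration_s: int) -> tuple[int, int, float]:
    """Return (edge requests, origin requests, effective cache hit rate)."""
    segments = duration_s // segment_s
    edge_requests = viewers * segments
    # Each PoP pulls each segment of each rendition once, never more than viewers request.
    origin_requests = min(edge_pops * renditions * segments, edge_requests)
    hit_rate = 1 - origin_requests / edge_requests if edge_requests else 0.0
    return edge_requests, origin_requests, hit_rate


if __name__ == "__main__":
    for audience in (100_000, 200_000):
        edge, origin, hit = live_cdn_load(audience, edge_pops=50, renditions=5,
                                          segment_s=6, duration_s=3600)
        print(audience, edge, origin, f"{hit:.4f}")
```

Under these assumptions, a one-hour event with 6-second segments sends 150,000 requests to the origin whether 100,000 or 200,000 people are watching; only the edge tier has to grow with the audience.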

Modern platforms increasingly adopt hybrid architectures combining RTMP for reliable ingestion, automatic transcoding to HLS/DASH for scalable distribution, and WebRTC for interactive features. This approach includes device capability detection, network condition monitoring for real-time protocol switching, and user preference settings for latency versus quality trade-offs.

Economic and Technical Trade-offs

Infrastructure costs vary significantly between protocols; the following figures are rough ballpark estimates. CDN and bandwidth costs for HLS/DASH range from $0.02-0.10 per GB delivered, scaling down with volume. WebRTC costs $0.10-0.50 per participant-hour with high variability based on encoding settings, quality levels, and server infrastructure. For RTMP, costs are primarily bandwidth ($0.02-0.06 per GB) plus encoder instance costs, rather than being priced per concurrent stream.
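Per-GB and per-participant-hour prices are easier to compare after converting bitrate into data delivered per viewer-hour. This sketch uses decimal gigabytes (an assumption; check how your provider bills) and ignores protocol overhead and ABR switching.

```python
def gb_per_viewer_hour(bitrate_mbps: float) -> float:
    """Data delivered to one viewer in one hour, in decimal gigabytes."""
    megabits = bitrate_mbps * 3600  # Mbit/s multiplied by the seconds in an hour
    return megabits / 8 / 1000      # megabits -> megabytes -> gigabytes


def http_cost_per_viewer_hour(bitrate_mbps: float, price_per_gb: float) -> float:
    """Delivery cost for one viewer-hour at a flat per-GB price."""
    return gb_per_viewer_hour(bitrate_mbps) * price_per_gb


if __name__ == "__main__":
    print(gb_per_viewer_hour(5))
    for price in (0.02, 0.10):
        print(price, round(http_cost_per_viewer_hour(5, price), 3))
```

At 5 Mbps, one viewer-hour is 2.25 GB, so the $0.02-0.10 per GB range works out to roughly $0.045-0.225 per viewer-hour. That overlaps the lower end of the WebRTC range, and the gap widens at lower bitrates and higher volume discounts.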

Development considerations include standards-based protocols benefiting from extensive tooling and documentation, WebRTC requiring specialized expertise but enabling unique interactive features, and legacy protocol support potentially requiring custom development while leveraging existing infrastructure.

Quality optimization involves modern codecs like H.265 and AV1 reducing bandwidth costs but increasing encoding complexity, hardware acceleration availability varying by protocol and environment, and quality assessment metrics enabling objective optimization. Multiple quality levels increase storage and encoding costs, but intelligent selection reduces bandwidth usage while machine learning-driven optimization can reduce costs while maintaining quality.

Platform Compatibility and Implementation Strategy

Browser support varies. Safari plays HLS natively, while other browsers rely on Media Source Extensions (MSE) through JavaScript libraries like hls.js, which feed fragmented MP4 to MSE and transmux MPEG Transport Stream segments into fragmented MP4 first (this difference between native and JS-driven playback can significantly affect memory and CPU usage). No major browser plays DASH natively, but MSE-based players such as dash.js run in most modern browsers (iPhone Safari historically lacked MSE). WebRTC is supported natively in all major browsers, whereas RTMP has had no browser playback path since Flash Player reached end of life in 2020, which is why it survives mainly as an ingest protocol.

Mobile platforms show distinct preferences. iOS supports HLS natively and WebRTC through Safari and apps, while Android favors DASH, with apps commonly using ExoPlayer for both DASH and HLS and using WebRTC through Chrome, WebView, or native libraries. On both platforms, cellular networks call for automatic bandwidth management, and battery life depends on hardware acceleration and efficient codecs.

Smart TV and connected device support often shows limitations with set-top boxes frequently limited to H.264/MPEG-TS favoring traditional HLS, gaming consoles having variable support requiring testing, smart TVs showing manufacturer-specific implementations with significant variation, and streaming devices like Roku, Apple TV, and Chromecast each having distinct capabilities.

The protocol selection process should prioritize user experience goals over technical preferences. Success depends on choosing technologies that align with user expectations and business objectives, whether building video conferencing platforms, launching streaming services, or adding live features to existing applications.

Low Latency Streaming with Stream Video

Stream Video supports ultra-low-latency live streaming over WebRTC, achieving latencies of less than 500 milliseconds. Audio and video are captured and delivered live, so viewers can comment and interact with the broadcast in real time.

Stream's API allows broadcasting from your device or via RTMP, facilitating integration with various streaming setups. The platform is designed to scale efficiently, accommodating millions of participants through its global edge network. Stream also offers features like backstage mode for configuring streams before going live, recording capabilities, and flexible user roles to support broadcasts with moderators and multiple participants.