Content moderation is crucial for maintaining a safe and positive user experience. Stream's Moderation API offers a powerful solution for integrating robust moderation capabilities into your applications. Stream's Moderation Dashboard enables developers to prevent users from posting harmful content and build custom moderation workflows tailored to their specific needs.

This article will explore the key features and functionalities of the AI Moderation API and provide developers with the knowledge to effectively implement content moderation in their platforms.

Key Components of the Moderation API

Moderation Check Endpoint

The Moderation Check Endpoint enables real-time content moderation by analyzing submitted content and providing actionable recommendations. This comprehensive API supports multiple content formats, including text messages, images, and video content.

The process follows these simple steps:

- Set up your moderation policy with defined rules and actions (see Policy Setup)

- Submit content for review along with your policy key

- The API analyzes the content using configured moderation engines and rules

- Receive an immediate recommendation based on the analysis

By integrating this endpoint into your application workflow, you can efficiently maintain content quality and ensure compliance with your platform’s standards while providing a safe environment for your users.

The check response includes the recommended action for the submitted content.

Typical actions include:

- Keep: Content is deemed appropriate

- Flag: Content is marked for further review

- Remove: Content is recommended for removal due to policy violations

- Shadow Block: Content is hidden from other users without notifying the creator (applicable to chat products only)
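As a rough illustration of acting on a recommendation in a custom integration, the sketch below maps each action to app-side decisions. The function name `planForAction`, the returned fields, and the exact action strings (`"keep"`, `"flag"`, `"remove"`, `"shadow_block"`) are assumptions made for this example; check the actual check response for the values your application receives.

```javascript
// Hypothetical helper: translate a recommended action into app-side decisions.
// The action strings are assumptions derived from the list of typical actions.
function planForAction(recommendedAction) {
  switch (recommendedAction) {
    case "keep":
      // Content is appropriate: publish normally.
      return { publish: true, visibleToOthers: true, queueForReview: false };
    case "flag":
      // Content stays up but a moderator should look at it.
      return { publish: true, visibleToOthers: true, queueForReview: true };
    case "remove":
      // Policy violation: do not publish.
      return { publish: false, visibleToOthers: false, queueForReview: false };
    case "shadow_block":
      // The creator still sees the content; other users do not.
      return { publish: true, visibleToOthers: false, queueForReview: false };
    default:
      // Unknown action: fail safe by holding the content for review.
      return { publish: false, visibleToOthers: false, queueForReview: true };
  }
}
```

Whether flagged content stays visible while it waits for review is a product decision; this sketch keeps it visible, but holding it back is an equally reasonable choice.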

Configuring Moderation API

This API endpoint allows developers to enable or disable specific harm engines and configure moderation rules. Stream supports various moderation engines, such as toxicity detection, platform circumvention prevention, AI Text Engine, and Image Moderation Engine. We plan to introduce more in the future. Each engine processes content and provides relevant classifications. As a customer, you don’t need to handle each result individually—that would be overwhelming. Instead, we’ve simplified the process by allowing you to map these classifications to a few values representing “Recommended Actions.”

These recommended actions are handled automatically in Stream’s Chat and Activity Feeds products. For custom moderation, you’ll receive the final recommended action through the check endpoint, allowing you to take appropriate action on the content.

You typically call the moderation config endpoint as follows:

```javascript
await client.moderation.upsertConfig({
  key: "unique_config_key",
  ai_text_config: {
    rules: [
      { label: "SCAM", action: "flag" },
      {
        label: "SEXUAL_HARASSMENT",
        // Different severities of the same label can map to different actions
        severity_rules: [
          { severity: "low", action: "flag" },
          { severity: "medium", action: "flag" },
          { severity: "high", action: "remove" },
          { severity: "critical", action: "remove" },
        ],
      },
    ],
  },
  block_list_config: {
    rules: [
      {
        name: 'blocklistname',
        action: 'remove',
      },
    ],
  },
  ai_image_config: {
    rules: [{ label: 'Non-Explicit Nudity', action: 'flag' }],
  },
  automod_platform_circumvention_config: {
    rules: [{ action: "remove", label: "platform_circumvention", threshold: 0.5 }],
  },
});
```
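To make the rule shapes in this config more concrete, here is a small local sketch of how a label and severity could resolve to a recommended action. It is only an illustration of the mapping idea, not Stream's engine: the function `resolveAction` and the fallback to `"keep"` for unmatched classifications are assumptions introduced for this example.

```javascript
// Local illustration only; this is not how Stream evaluates rules internally.
// Assumption: a classification with no matching rule resolves to "keep".
function resolveAction(rules, label, severity) {
  const rule = rules.find((r) => r.label === label);
  if (!rule) return "keep";

  // Rules with severity_rules pick the action by severity level.
  if (Array.isArray(rule.severity_rules)) {
    const match = rule.severity_rules.find((s) => s.severity === severity);
    return match ? match.action : rule.action ?? "keep";
  }

  // Simple rules map the label directly to one action.
  return rule.action;
}

// Same shape as the ai_text_config rules in the config call.
const aiTextRules = [
  { label: "SCAM", action: "flag" },
  {
    label: "SEXUAL_HARASSMENT",
    severity_rules: [
      { severity: "low", action: "flag" },
      { severity: "medium", action: "flag" },
      { severity: "high", action: "remove" },
      { severity: "critical", action: "remove" },
    ],
  },
];

const scamAction = resolveAction(aiTextRules, "SCAM");
const harassmentAction = resolveAction(aiTextRules, "SEXUAL_HARASSMENT", "high");
```

With these rules, `scamAction` is `"flag"` and `harassmentAction` is `"remove"`, which mirrors the idea of collapsing many engine classifications into a few recommended actions.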

Flagging Content

Sometimes potentially harmful content still reaches end users, and a user wants to report it.

That’s where our flag endpoint comes in. Flagging content marks it for review by moderators. Flagged items appear on the Stream dashboard, where moderators can take appropriate action.

```javascript
await client.moderation.flag(
  "entity_type",
  "entity_id",
  "entity_creator_id",
  "reason for flag",
  {
    custom: {},
    moderation_payload: { text: ["this is shit"] },
    user_id: "user_id", // only for server side usage
  },
);
```

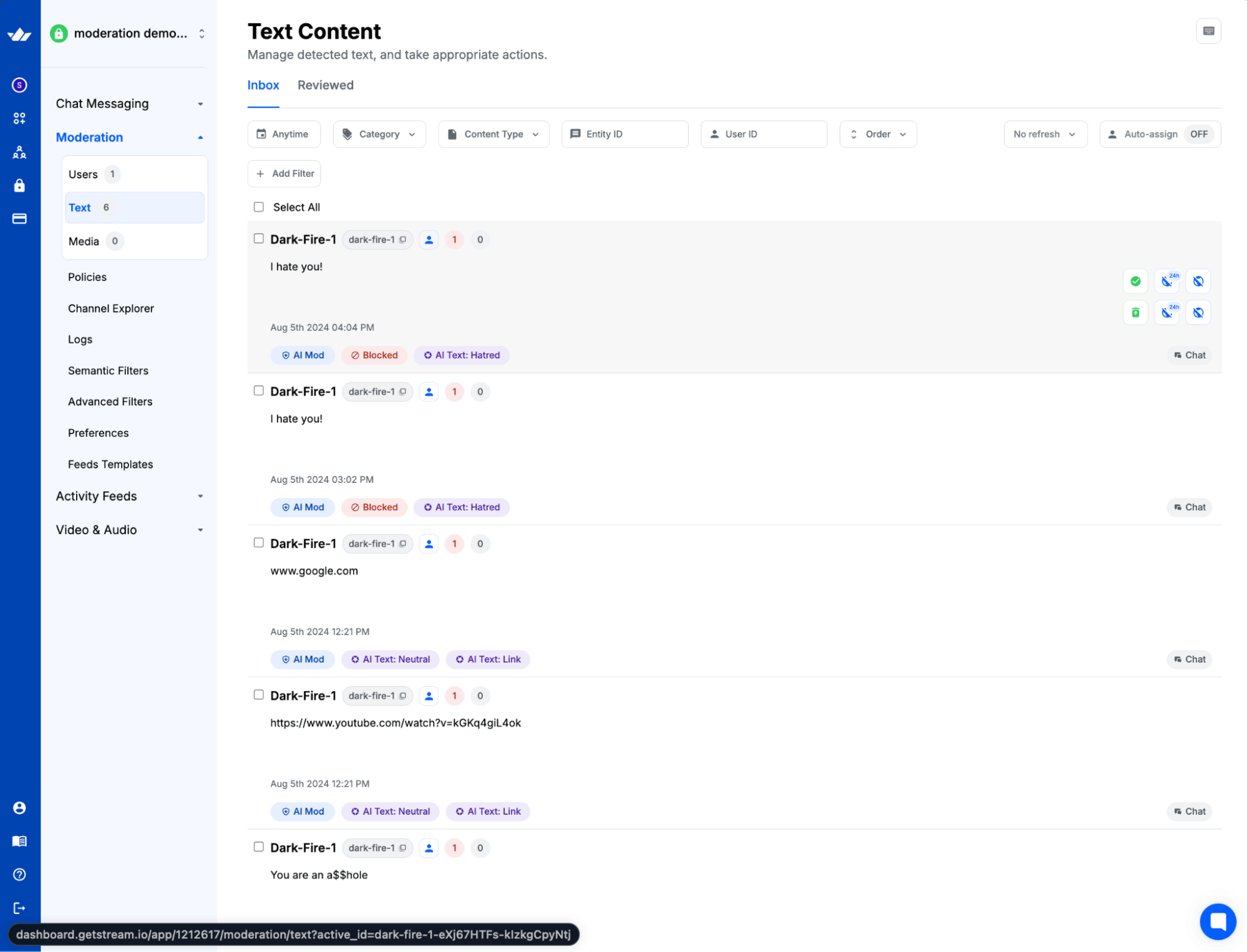

Here’s an example of how flagged messages would look on Stream’s dashboard.

Query Review Queue

You will likely want your moderators to work in a custom dashboard built around their workflow, rather than logging in to Stream’s dashboard every time. That’s where the Query Review Queue comes in.

```javascript
const filters = { entity_type: "some_entity_type" };
const sort = [{ field: "created_at", direction: -1 }];
const options = { next: null }; // cursor pagination

const { items, next } = await client.moderation.queryReviewQueue(
  filters,
  sort,
  options,
);
```

The Query Review Queue endpoint enables developers to retrieve and manage flagged content. It supports a wide range of filters and sorting options, making it easy to build custom moderation dashboards and workflows.
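For a custom dashboard you will usually need more than one page of results. The sketch below follows the `next` cursor until it runs out. `fetchAllReviewItems` and its `queryPage` parameter are hypothetical names for this example; `queryPage` is expected to wrap `client.moderation.queryReviewQueue`, and the sketch assumes the last page comes back without a `next` value.

```javascript
// Hypothetical helper: collect review queue items across pages.
// `queryPage` is an injected function, for example:
//   (filters, sort, options) =>
//     client.moderation.queryReviewQueue(filters, sort, options)
// Assumption: the final page has no `next` cursor (null or undefined).
async function fetchAllReviewItems(queryPage, filters, sort, maxPages = 50) {
  const all = [];
  let next = null;

  // maxPages is a safety cap so a misbehaving cursor cannot loop forever.
  for (let page = 0; page < maxPages; page++) {
    const result = await queryPage(filters, sort, { next });
    all.push(...(result.items ?? []));
    next = result.next ?? null;
    if (!next) break;
  }

  return all;
}
```

Injecting the query function keeps the loop independent of the SDK client, which also makes it straightforward to exercise with a stub.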

Submit Action

This endpoint allows you to perform specific actions on review queue items or flagged content. It’s the underlying API used on Stream’s moderation dashboard when a moderator deletes a flagged message or unblocks a blocked message.

This endpoint serves multiple purposes:

- For Stream’s chat or activity feeds products: It enables you to delete messages or ban users from your custom moderation dashboard.

- For in-house products: You can inform Stream about actions taken on review queue items and ensure these actions are reflected in Stream’s moderation dashboard.

```javascript
await client.moderation.submitAction("action_type", "item_id");
```

Webhooks

Stream's Moderation API includes webhook support, allowing developers to implement custom logic for moderation events. Webhook events include:

- review_queue_item.new: Notifies of new content available for review

- review_queue_item.updated: Notifies when existing flagged content receives additional flags or when a moderator performs an action
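A webhook listener typically routes each event to the right piece of custom logic. The sketch below shows one way to do that for the two events named here. The function `routeModerationEvent`, the `handlers` object, and the assumption that the payload exposes the event name in a `type` field are all illustrative; verify the payload shape against the webhook documentation before relying on it.

```javascript
// Hypothetical dispatcher for moderation webhook events.
// Assumption: the parsed webhook body carries the event name in `type`.
function routeModerationEvent(event, handlers) {
  switch (event.type) {
    case "review_queue_item.new":
      // New content has entered the review queue.
      return handlers.onNew(event);
    case "review_queue_item.updated":
      // An existing item got more flags or a moderator acted on it.
      return handlers.onUpdated(event);
    default:
      // Ignore events this sketch does not know about.
      return null;
  }
}

// Example handlers that only describe what they would do.
const exampleHandlers = {
  onNew: () => "notify moderators",
  onUpdated: () => "refresh dashboard item",
};
```

Calling `routeModerationEvent({ type: "review_queue_item.new" }, exampleHandlers)` returns `"notify moderators"`, and unknown event types return `null` so that unrecognized events do not break the listener.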

Implementing Moderation with Stream's API

To implement moderation using Stream's API, developers should follow these general steps:

- Set up the moderation configuration using the Upsert Config endpoint

- Use the Moderation Check Endpoint to submit content for moderation

- Implement webhook listeners to receive real-time notifications about flagged content

- Use the Query Review Queue endpoint to retrieve and manage flagged content

- Utilize the Submit Action endpoint to take appropriate actions on flagged content

Conclusion

Stream's Moderation API provides a comprehensive solution for content moderation, offering flexibility and powerful features to help developers maintain a safe and positive user experience. By combining the endpoints and webhooks described above, developers can build robust moderation systems tailored to their specific needs, keeping their platforms free from harmful content and fostering a healthy in-app community.