Marketplaces only work when people trust each other.

Buyers trust that listings accurately represent what they’re purchasing. Sellers trust they won’t be scammed, harassed, or pushed off the platform by bad actors. And both trust that the marketplace itself is actively protecting them, not reacting after damage is already done.

As marketplaces scale, maintaining that trust becomes harder. User-generated content grows exponentially, scams become more sophisticated, and moderation decisions become more complex across regions, categories, and languages.

That’s why content moderation is no longer just a Trust & Safety concern for marketplaces.

It’s core platform infrastructure.

In this guide, we’ll break down how marketplaces can moderate content effectively at scale, what capabilities they need, and why strong moderation increasingly acts as a competitive advantage.

Why Content Moderation Matters for Marketplaces

Marketplaces are uniquely exposed to abuse because they enable direct interaction between strangers. Unlike traditional ecommerce, marketplaces don’t control inventory, pricing, or communication; users do.

Every buyer–seller interaction creates risk and opportunity.

The global content moderation market is projected to reach about $26.1 billion by 2031, growing rapidly as platforms scale user-generated content (UGC).

UGC Directly Impacts Conversion, Retention, And Gross Merchandise Value (GMV)

Listings, images, reviews, profiles, and chat messages all influence whether a transaction happens. When content feels trustworthy:

- Buyers complete purchases with confidence

- Sellers stay active and engaged

- Successful transactions compound into higher liquidity and GMV

When moderation fails, the opposite happens. Buyers hesitate. Sellers churn. Support costs increase. Even a small number of bad experiences can ripple across the app and stall growth.

Poor Moderation Leads To Scams, Churn, And Brand Damage

Unmoderated marketplaces quickly attract abuse, including:

- Fraudulent listings and impersonation

- Payment scams and off-platform solicitation

- Harassment and coercive messaging

- Fake or manipulated reviews

These issues can undermine the marketplace’s reputation, create toxic communities, invite regulatory scrutiny, and make acquisition more expensive over time.

What Marketplace Content Needs Moderation

Moderation isn’t limited to a single content type. Abuse spreads across surfaces, and effective moderation must account for the full ecosystem.

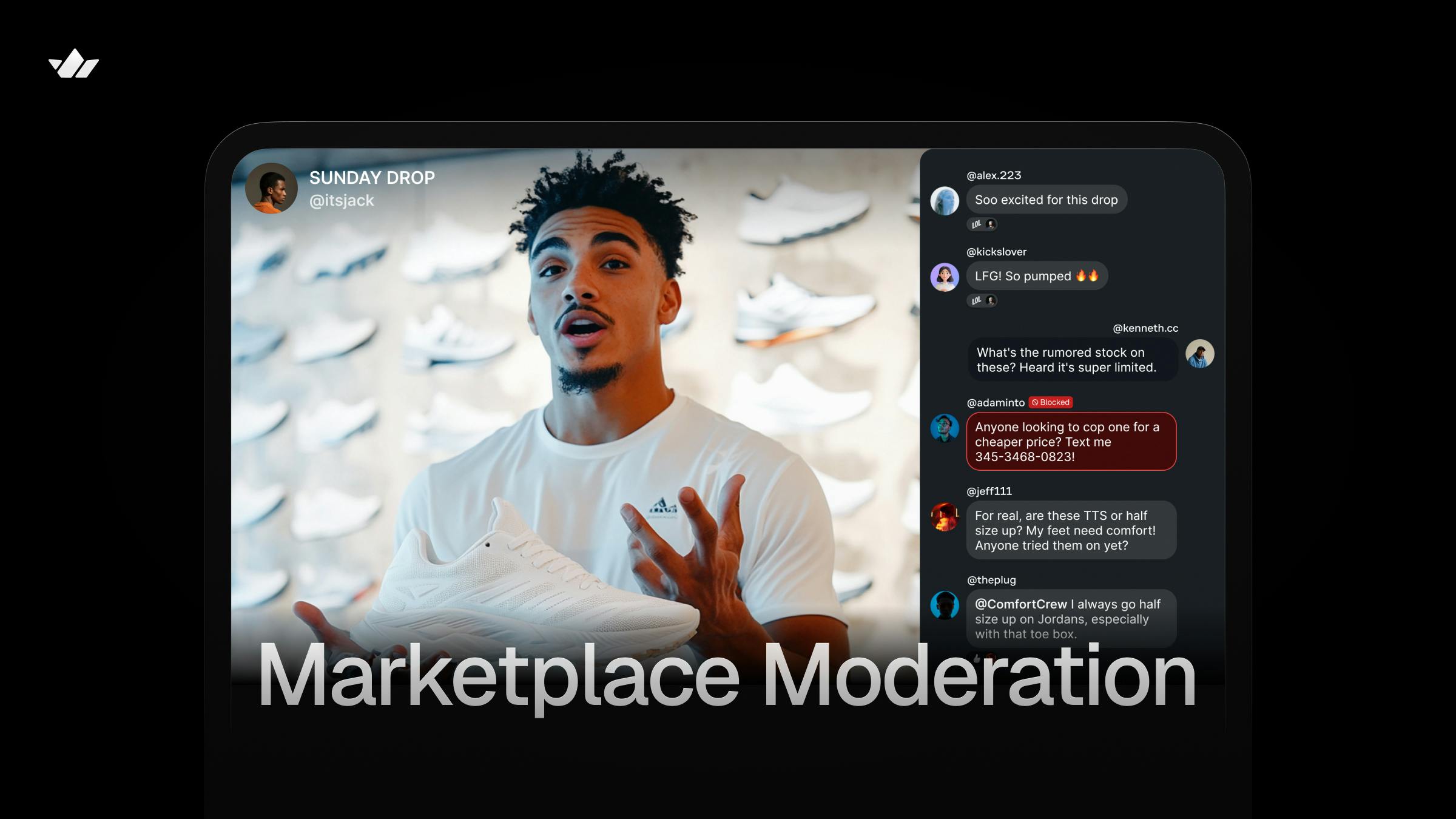

Buyer–Seller Chat and Messaging

Chat is often the highest-risk surface. It’s where:

- Scammers push users toward off-platform payments

- Personal information is requested

- Harassment and pressure tactics escalate quickly

Because chat happens in real time, chat moderation must happen in real time, too.

Marketplace Example: Peerspace

As Peerspace scaled, hosts increasingly encountered phishing and impersonation attempts through third-party messaging tools. By keeping conversations in-app and applying real-time moderation rules with Stream, Peerspace reduced harmful messages reaching hosts and reinforced trust during the most sensitive stage of the transaction: pre-booking negotiations.

Listings and Item Descriptions

Listings define what’s available on the marketplace and how credible it feels. Moderation helps prevent:

- Prohibited or illegal items

- Misleading claims and false representations

- Spam or duplicate listings

- IP and trademark violations

Poor listing quality erodes buyer trust and harms discovery algorithms.

Marketplace Example: Furnished Finder

By tying buyer–seller chat directly to property listings, Furnished Finder preserved context around pricing, availability, and expectations. This reduced risky off-platform negotiation and made moderation more effective, reinforcing trust in both listings and sellers.

Images and Video

Visual content introduces additional complexity. Images and video may:

- Misrepresent products or services

- Contain explicit or unsafe material

- Be stolen, manipulated, or deceptively AI-generated

As visual commerce grows, image and video moderation becomes essential for protecting buyers.

Reviews and Ratings

Reviews are critical trust signals, but only when they’re authentic. Marketplaces must moderate:

- Fake or incentivized reviews

- Review bombing campaigns

- Harassment disguised as feedback

Without enforcement, reviews lose their value entirely.

Marketplace Example: Tradeblock (Secondhand Marketplace)

Tradeblock operates in a high-value resale environment where trust directly impacts transaction success. Integrating Stream Chat, review moderation, and audit logs helps preserve authenticity while still allowing sellers to appeal decisions, which is critical when individual transactions involve significant financial risk.

User Profiles

Profiles often serve as the first credibility check. Moderation helps prevent:

- Impersonation

- Inappropriate usernames or bios

- External links meant to bypass platform safeguards

Catching abuse at the profile level prevents it from spreading elsewhere.

Essential Moderation Capabilities

To manage these marketplace risks at scale, apps need layered moderation systems, not isolated tools.

Text, Image, and Video Moderation

AI-driven moderation applies consistent rules across text, images, and video, so users can’t bypass enforcement by switching formats.

Around 40% of online content is moderated by AI tools, with most platforms using a hybrid model combining automation and human review.

Scam and Spam Detection

Scams evolve constantly. Effective moderation looks beyond individual messages to detect patterns such as:

- Repeated off-platform payment requests

- Similar messages sent to many users

- Coordinated activity across accounts

This requires behavior-aware systems, not just keyword filters.
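As a rough illustration of what looking beyond individual messages can mean, the sketch below groups messages by sender and flags two of the patterns listed above: near-identical text sent to many users, and repeated off-platform payment requests. The phrase list, the thresholds, and names such as `find_suspicious_senders` are assumptions made for this example, not part of any particular moderation product.

```python
import re
from collections import defaultdict

# Hypothetical phrases hinting at off-platform payment or contact.
# A real list would be tuned per marketplace and language.
OFF_PLATFORM_HINTS = re.compile(
    r"\b(venmo|paypal|zelle|wire transfer|whatsapp|telegram)\b", re.IGNORECASE
)


def normalize(text: str) -> str:
    """Lowercase and collapse punctuation/whitespace so near-copies compare equal."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def find_suspicious_senders(messages, min_recipients=3, min_payment_requests=2):
    """messages: iterable of (sender, recipient, text) tuples.

    Returns {sender: set of reason strings} for senders that match a pattern.
    """
    recipients_by_text = defaultdict(set)  # (sender, normalized text) -> recipients
    payment_requests = defaultdict(int)    # sender -> number of matching messages

    for sender, recipient, text in messages:
        recipients_by_text[(sender, normalize(text))].add(recipient)
        if OFF_PLATFORM_HINTS.search(text):
            payment_requests[sender] += 1

    flagged = defaultdict(set)
    for (sender, _), recipients in recipients_by_text.items():
        if len(recipients) >= min_recipients:
            flagged[sender].add("mass_duplicate_message")
    for sender, count in payment_requests.items():
        if count >= min_payment_requests:
            flagged[sender].add("repeated_off_platform_request")
    return dict(flagged)
```

A keyword list like this is still brittle on its own; the point of the sketch is the aggregation per sender, which is where behavior-aware signals come from.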

Marketplace Example: Peerspace

Peerspace implemented custom AI prompts to detect nuanced phishing and impersonation attempts. This approach reduced reliance on brittle keyword rules and improved detection accuracy as scam tactics evolved.

Velocity Limits and Behavior-Based Rules

Behavioral signals often surface abuse faster than content alone. Common examples include:

- Messaging too many users in a short period

- Rapid listing creation and deletion

- Copy-paste messaging across conversations

Velocity limits help stop abuse before it escalates.
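One way to picture a velocity limit is a sliding window over each sender's recent activity. The sketch below caps how many distinct users a sender can message within a time window. The class name, the limit values, and the choice to pass the current time in explicitly are assumptions for illustration only.

```python
from collections import defaultdict, deque


class VelocityLimiter:
    """Sliding-window cap on how many distinct users a sender may message."""

    def __init__(self, max_recipients: int, window_seconds: float):
        self.max_recipients = max_recipients
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # sender -> deque of (timestamp, recipient)

    def allow(self, sender: str, recipient: str, now: float) -> bool:
        events = self._events[sender]
        # Drop events that have aged out of the window.
        while events and now - events[0][0] >= self.window_seconds:
            events.popleft()
        recent = {r for _, r in events}
        if recipient not in recent and len(recent) >= self.max_recipients:
            return False  # a new recipient would exceed the limit
        events.append((now, recipient))
        return True
```

Replies to someone the sender is already talking to are always allowed here; only reaching out to additional users is limited, which targets the "messaging too many users" pattern without slowing normal conversations.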

Marketplace Example: Partiful (Social Platform)

While not a marketplace, Stream customer Partiful experiences rapid bursts of UGC around viral events. Behavior-based limits help manage spam and abuse during peak activity, illustrating how velocity rules are essential anywhere user activity scales faster than human review.

Review Queues and Audit Logs

Human review workflows support:

- Appeals and disputes

- Regulatory and compliance needs

- Transparency and accountability

Audit logs help teams refine moderation decisions over time.

Marketplace Example: Peerspace

Peerspace uses moderation dashboards to review flagged messages, analyze AI labels, and respond to user reports efficiently. This visibility enables Trust & Safety teams to move from reactive cleanup to proactive enforcement as the marketplace grows.

Setting Clear Marketplace Community Guidelines

Moderation is far more effective when users understand expectations upfront.

Marketplace-Specific Rules

Generic community guidelines aren’t enough. Apps need clear rules around:

- What can and cannot be sold

- Acceptable buyer–seller communication

- Whether off-platform payments are allowed

- What constitutes review manipulation

These rules should reflect real marketplace risks, not theoretical ones.

Communicating Guidelines to Users

Guidelines should appear where decisions are made:

- During listing creation

- Inline within chat when risky behavior is detected

- Through clear reporting and appeal flows

Consistent enforcement builds trust, even when moderation decisions are unpopular.

How to Moderate Marketplace Content at Scale

As user bases grow, moderation must shift from reactive cleanup to proactive prevention.

Pre-Moderation vs. Post-Moderation

- Pre-moderation blocks high-risk content before it’s visible. It’s ideal for chat, listings, and media.

- Post-moderation allows content to go live but removes it quickly if violations are detected. It’s often used for reviews.

Most apps use a hybrid approach based on risk and urgency.
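A hybrid approach can be sketched as a small routing function: each surface has a default mode, and content with a high risk score is held for pre-moderation regardless. The surface names, the `risk_score` input, and the 0.8 threshold are hypothetical values chosen for this example.

```python
from enum import Enum


class Mode(Enum):
    PRE = "pre"    # hold content until it passes checks
    POST = "post"  # publish immediately, review afterwards


# Hypothetical defaults per surface, following the split described above.
DEFAULT_MODE = {
    "chat": Mode.PRE,
    "listing": Mode.PRE,
    "media": Mode.PRE,
    "review": Mode.POST,
}


def choose_mode(surface: str, risk_score: float, pre_threshold: float = 0.8) -> Mode:
    """Pick a moderation mode from the surface default, escalating risky content."""
    mode = DEFAULT_MODE.get(surface, Mode.PRE)  # unknown surfaces fail closed
    if mode is Mode.POST and risk_score >= pre_threshold:
        return Mode.PRE
    return mode
```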

AI-Powered Moderation

AI enables marketplaces to:

- Moderate content in real time

- Apply rules consistently across regions

- Scale without linear increases in headcount

Without automation, moderation simply doesn’t scale.

On major platforms like Facebook and YouTube, AI systems detect ~95% of hate speech and ~93–94% of problematic videos before human review.

Human Review For Nuance And Accountability

Human moderators provide judgment where automation falls short:

- Handling appeals

- Reviewing edge cases

- Improving training data and enforcement logic

The strongest moderation systems treat AI and humans as complementary.

Approximately 78% of social platforms use a mix of AI and human moderators.

Best Practices for Moderation

Effective moderation intervenes at the right moment, in the right place, with the right level of enforcement. This extends beyond removing bad content.

The following best practices consistently separate resilient marketplaces from reactive ones.

Prioritize Real-Time Protection Where Risk Is Highest

In marketplaces, timing matters. Chat scams and harassment can escalate in seconds. Real-time moderation allows platforms to:

- Block high-risk messages before delivery

- Insert warnings when suspicious behavior is detected

- Temporarily restrict accounts exhibiting scam patterns

Early intervention protects users before harm occurs and keeps abuse from spreading across the marketplace.

Apply Consistent Rules Across Every Marketplace Surface

Users experience marketplaces holistically. Inconsistent enforcement across chat, listings, reviews, and profiles weakens trust and invites exploitation.

Best-in-class marketplaces define a single set of behavioral standards and apply them consistently, adjusting only where risk truly differs.

Use Automation To Handle Volume, Not Judgment

Automation excels at scale:

- Detecting known scam patterns

- Enforcing velocity limits

- Flagging obvious violations

But automation alone shouldn’t make nuanced decisions. Human judgment remains essential for fairness and credibility.

Design Human Review For Escalation

Human reviewers add the most value when focused on:

- Appeals and disputes

- High-impact enforcement decisions

- Improving automated systems

Well-designed workflows prevent reviewers from being overwhelmed by obvious violations.

Moderate Based On Behavior, Not Just Content

Some of the most damaging abuse emerges from patterns over time. Behavior-based moderation helps detect:

- Coordinated fraud

- Repeated attempts to bypass safeguards

- Networked abuse across accounts

Combining content and behavior signals enables earlier, more accurate intervention.

Introduce Friction Before Punishment

Immediate bans aren’t always the best first response. Contextual friction, such as warnings or temporary limits, can stop harmful behavior while preserving legitimate users.

This approach helps distinguish malicious actors from those who simply don’t understand the rules.
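A graduated response like this can be expressed as a simple enforcement ladder. In the sketch below, the step names and the rule that severe cases skip straight to suspension are assumptions for illustration; a real policy would define its own steps and criteria.

```python
# Hypothetical ladder, ordered from least to most severe.
LADDER = ["warn", "rate_limit", "temporary_restriction", "suspend"]


def next_action(prior_violations: int, severe: bool = False) -> str:
    """Return the enforcement step for a new violation.

    prior_violations: confirmed violations already on the account.
    severe: skip the ladder for clear-cut abuse such as confirmed fraud.
    """
    if severe:
        return LADDER[-1]
    step = min(prior_violations, len(LADDER) - 1)
    return LADDER[step]
```

For example, `next_action(0)` returns `"warn"`, so a first-time mistake produces a warning rather than a ban, while repeat violations move up the ladder.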

Adapt Moderation By Region, Category, And Maturity

Global marketplaces face different risks depending on geography and category. Effective systems allow teams to:

- Customize rules by region

- Apply stricter limits to new users

- Relax restrictions for trusted sellers

Flexibility is critical for scaling safely.
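In practice, this kind of flexibility often comes down to layered configuration: a base policy plus overrides by region and by user trust tier. The sketch below shows one possible shape. Every field name, region key, tier name, and value is made up for the example.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Policy:
    max_new_chats_per_hour: int
    allow_external_links: bool
    block_threshold: float  # risk score at or above which content is blocked


BASE = Policy(max_new_chats_per_hour=20, allow_external_links=False, block_threshold=0.8)

# Hypothetical overrides; keys and values are illustrative only.
REGION_OVERRIDES = {"REGION_A": {"block_threshold": 0.7}}
TIER_OVERRIDES = {
    "new": {"max_new_chats_per_hour": 5},
    "trusted": {"max_new_chats_per_hour": 100, "allow_external_links": True},
}


def resolve_policy(region: str, tier: str) -> Policy:
    """Apply region overrides first, then tier overrides, on top of BASE."""
    overrides = {**REGION_OVERRIDES.get(region, {}), **TIER_OVERRIDES.get(tier, {})}
    return replace(BASE, **overrides)
```

Keeping overrides as data rather than code means Trust & Safety teams can tighten limits for new users or relax them for trusted sellers without a deploy.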

Treat Moderation as a Continuous System

Abuse evolves. Static moderation systems fail.

Successful marketplaces:

- Regularly review enforcement outcomes

- Monitor false positives and appeals

- Update rules as new scams emerge

- Use audit logs to improve accountability

Moderation should evolve alongside the marketplace itself.
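Monitoring false positives can start with something as simple as the share of appealed decisions that were overturned, broken down by rule. The sketch below computes that from audit log entries. The entry fields (`rule`, `appealed`, `overturned`) are an assumed schema for this example, not a specific product's log format.

```python
from collections import Counter


def overturn_rate_by_rule(audit_log):
    """audit_log: iterable of dicts with 'rule', 'appealed', and 'overturned' keys.

    Returns {rule: overturned / appealed} for rules with at least one appeal.
    """
    appealed = Counter()
    overturned = Counter()
    for entry in audit_log:
        if entry["appealed"]:
            appealed[entry["rule"]] += 1
            if entry["overturned"]:
                overturned[entry["rule"]] += 1
    return {rule: overturned[rule] / n for rule, n in appealed.items()}
```

A rule with a high overturn rate is a candidate for retuning, since many of its enforcement decisions did not survive human review.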

Platforms like TikTok remove very large volumes of content in a single quarter for policy violations, which shows the scale of automated and manual takedowns.

Top Marketplace Moderation Tools: Build vs. Buy

Marketplaces typically choose between building internally, adopting point solutions, or using moderation APIs.

In-House Moderation Systems

Building internally offers control, but requires ongoing investment in engineering, maintenance, and model updates. As abuse evolves, in-house systems can quickly become resource-intensive.

Point Solutions

Point solutions may excel at individual tasks, like image moderation, but create fragmented enforcement. Abuse rarely stays in silos, and gaps are easily exploited.

Moderation APIs

Moderation APIs provide unified coverage across chat, listings, media, and reviews. They reduce time to value, lower operational burden, and evolve continuously as threats change.

For many marketplaces, APIs offer the most scalable path forward.

Frequently Asked Questions

- How do you moderate marketplace chat?

Marketplace chat is moderated using real-time AI, behavior-based rules, velocity limits, and human escalation for edge cases.

- How do marketplaces moderate user-generated content?

Most marketplaces layer automated moderation with human review across text, images, video, and user behavior.

- How do you handle multi-language marketplaces?

Multi-language marketplaces rely on language-aware models and region-specific enforcement rules to ensure consistent moderation.

- How much does content moderation cost?

Content moderation costs vary, but automation significantly reduces long-term operational overhead compared to manual review alone.

Moderation as a Competitive Advantage

The safest marketplaces both remove bad actors and actively shape better behavior.

When moderation is proactive, consistent, and built into the core user experience, it does more than prevent harm. It creates an environment where high-quality buyers and sellers feel confident participating, transacting, and returning over time.

Safer Marketplaces Attract Better Buyers And Sellers

Trust in a marketplace should be measurable. Buyers complete transactions more often when listings are credible, reviews are authentic, and communication feels safe. Sellers stay engaged when they know scams, harassment, and impersonation are actively prevented.

Strong moderation raises the bar for participation. Over time, this naturally filters out bad actors and attracts users who contribute to a healthier marketplace ecosystem.

Nearly all consumers consult reviews before buying, and products with reviews are far more likely to be purchased. According to recent research, products with 5 or more reviews are ~270% more likely to be bought than those without reviews.

Trust Drives Liquidity And Long-Term Growth

Marketplace growth depends on liquidity: the ability for buyers and sellers to reliably find and transact with one another. Content moderation plays a direct role in this by:

- Increasing successful transactions

- Reducing churn caused by scams or abuse

- Lowering support and dispute costs

- Protecting brand reputation as the platform scales

When trust compounds, liquidity follows, and so does sustainable growth.

Turning Moderation Into A Scalable Advantage With Stream

Building and maintaining moderation in-house is complex, costly, and never finished. Scams evolve, abuse patterns shift, and global platforms need moderation that works across chat, listings, reviews, images, and video, often in real time.

Stream’s AI Moderation is designed specifically for these marketplace realities. It provides:

- Real-time text, image, and video moderation across your app

- Built-in scam and spam detection tailored to buyer–seller interactions

- Behavior-based rules and velocity limits to stop abuse early

- Human review workflows and audit logs for accountability and compliance

By unifying moderation across real-time chat and asynchronous UGC, Stream helps marketplaces protect trust without slowing growth or increasing operational burden.

In competitive markets, content moderation means building a buyer–seller experience that users choose and continue to trust.