Many people (especially developers) want to use the new DeepSeek R1 thinking model but are concerned about sending their data to DeepSeek.

Read this article to learn how to run the DeepSeek R1 reasoning model locally, without an Internet connection, or through a trusted hosting service. When you run the model offline, your private data stays on your machine and never reaches an LLM hosting provider such as DeepSeek. With a trusted hosting service, your data goes to that third-party provider instead of DeepSeek.


What is DeepSeek R1?

Reasoning models such as OpenAI o1, OpenAI o3, and DeepSeek R1 are State-of-the-Art (SOTA) models that solve complex problems in mathematics, coding, science, and other fields. These models can think about a user's prompt and work through reasoning steps, known as Chain of Thought (CoT), before generating a final answer.

Note: Since first publishing this article, the above three language models no longer stand alone. A growing number of models now include explicit reasoning or chain-of-thought modes, enabling deeper reasoning across mathematics, coding, science and many other fields.
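The reasoning-then-answer behavior described above is visible in the raw output of R1-style models when they run locally. The sketch below assumes the model wraps its reasoning in `<think>...</think>` tags before the final answer, which is how the R1 family commonly formats its output; the function name `split_reasoning` is hypothetical, and some tools may strip or restructure the reasoning before you see it.

```python
import re

# Assumption: the model emits its chain of thought inside <think>...</think>
# and places the final answer after the closing tag.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw R1-style completion."""
    match = THINK_BLOCK.search(text)
    if match is None:
        # No reasoning block found: treat the whole text as the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Everything outside the first <think> block is treated as the answer.
    answer = (text[: match.start()] + text[match.end():]).strip()
    return reasoning, answer


raw = "<think>2 + 2 is 4, then double it.</think>\nThe result is 8."
reasoning, answer = split_reasoning(raw)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Separating the two parts lets an app show only the final answer to users while keeping the reasoning available for debugging.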

Why Do People Want To Use R1 but Have Privacy Concerns?

The R1 model is widely regarded as one of the strongest reasoning models available. Its capabilities have drawn a lot of attention, and some concern, in developer communities on X, Reddit, LinkedIn, and other social media platforms.

However, there is some misinformation, along with some mistaken assumptions, about using the language models provided by DeepSeek. For example, some people perceive DeepSeek as a side project, not a company. Others think DeepSeek may use users' data for purposes other than those stated in its privacy policy. Like OpenAI, the hosted version of DeepSeek Chat may collect users' data and use it for training and improving their models.

That said, this does not mean you should distrust the hosted DeepSeek Chat. It works similarly to ChatGPT and is an excellent tool for testing and generating responses with the DeepSeek R1 model. Still, some people and companies do not want DeepSeek to collect their data because of privacy concerns.

Additionally, DeepSeek is based in China, and several people are worried about sharing their private information with a company based in China.

How can one download, install, and run the DeepSeek R1 family of thinking models without sharing their information with DeepSeek? Continue reading to explore how you and your team can run the DeepSeek R1 models locally, without the Internet, or using EU and USA-based hosting services.

Why DeepSeek R1?

DeepSeek, the company behind the R1 model, recently joined the mainstream Large Language Model (LLM) providers, alongside major players like OpenAI, Google, Anthropic, Meta AI, Groq, Mistral, and others.

The DeepSeek R1 model is open-source and costs less than the OpenAI o1 models. Being open-source provides long-term benefits for the machine learning and developer communities, because people can build their own versions of the R1 models for different use cases.

Despite its lower cost, it delivers performance on par with the OpenAI o1 models. Its incredible reasoning capabilities make it an excellent alternative to the OpenAI o1 models.

With its strong reasoning capabilities and low cost, many people, including developers, want to use it to power their AI applications but are concerned about sending their data to DeepSeek. It is true that when you use the DeepSeek R1 model through a platform like DeepSeek Chat, DeepSeek collects your data. However, you can run the DeepSeek R1 model entirely offline on your machine, or use a hosting service to run the model behind your AI app.

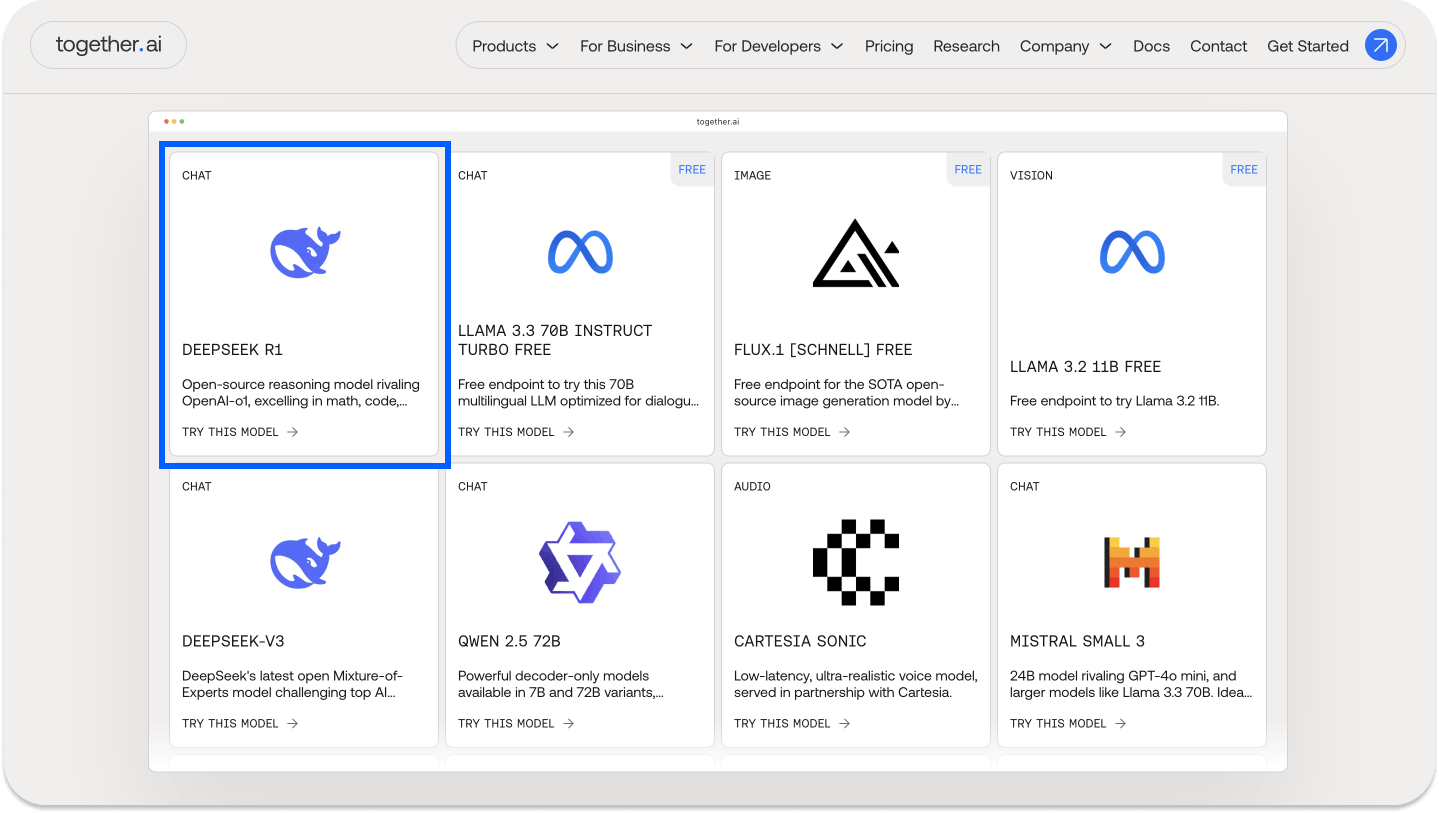

As with other Large Language Models (LLMs), you can run and test the original DeepSeek R1 model, as well as the DeepSeek R1 family of distilled models, on your machine using local LLM hosting tools. One benefit of the R1 models is that they are also available on hosting platforms like Groq, together.ai, and others. Using the models via these platforms is a good alternative to using them directly through DeepSeek Chat and the DeepSeek APIs. Microsoft recently made the R1 model and the distilled versions available on its Azure AI Foundry and GitHub.

What is a Local-First LLM Tool?

When using LLMs like ChatGPT or Claude, you are using models hosted by OpenAI and Anthropic, so your prompts and data may be collected by these providers for training and enhancing the capabilities of their models.

If you have concerns about sending your data to these LLM providers, you can use a local-first LLM tool to run your preferred models offline. A local-first LLM tool lets you chat with and test models without a network connection. Using tools like LMStudio, Ollama, and Jan, you can chat with any model you prefer, for example the DeepSeek R1 model, 100% offline.
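When an app talks to a local-first tool over HTTP, one simple safeguard is to confirm that the configured endpoint really points at your own machine before sending any prompt. The following is a minimal sketch of such a check; the helper name `is_local_endpoint` and the example URLs are illustrative assumptions, and the check only inspects the URL, so it does not prove that a tool makes no other network calls.

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url: str) -> bool:
    """Return True if the URL's host is localhost or a loopback IP address."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal (for example a public domain name), so not local.
        return False


# Example URLs; the ports shown are assumptions for illustration.
for candidate in ("http://localhost:11434", "https://api.example.com/v1"):
    print(candidate, "->", is_local_endpoint(candidate))
```

An app could refuse to send private data whenever this check fails, which makes an accidental switch to a remote endpoint easier to catch.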

Learn more about local-first LLM tools in one of our recent articles and YouTube tutorials.

The Must-Have Local LLM Tools For DeepSeek R1

Since the release of the DeepSeek R1 model, a growing number of local LLM platforms let you download and use the model without connecting to the Internet. The following are the three best applications for running R1 offline at the time of writing. We will update the article occasionally as more local LLM tools add support for R1.

LMStudio

LMStudio provides access to distilled versions of DeepSeek R1 that can be run offline. To begin, download LMStudio, launch it, and click the Discover tab on the left panel to download, install, and run any distilled version of R1. Watch Run DeepSeek R1 Locally With LMStudio on YouTube for a step-by-step quick guide.
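Once a distilled R1 model is loaded, LM Studio can also serve it to your own code through its local, OpenAI-compatible server. The sketch below assumes that server has been started in the app and is listening on its default address, `http://localhost:1234`; the function names are hypothetical, and the model identifier you pass must be copied from LM Studio rather than guessed.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(prompt: str, model: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        LMSTUDIO_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str) -> str:
    """Send the prompt to the local model and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, model)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, a call such as `ask("Why is the sky blue?", "<model identifier from LM Studio>")` returns the model's reply, and the request never leaves your machine.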

Ollama

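Using Ollama, you can run the DeepSeek R1 model 100% without a network using a single command. You need a connection only for the initial download. Note that the default deepseek-r1 tag may resolve to one of the smaller distilled variants rather than the full-size R1 model, so check the Ollama model library to see what the tag currently points to. To begin, install Ollama, then run the following command to pull and run the model:

ollama run deepseek-r1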
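After the model has been pulled, the same local Ollama instance can be called from code. The sketch below assumes Ollama is serving its REST API on the default address, `http://localhost:11434`, and that `/api/chat` streams its reply as newline-delimited JSON objects; the function names `iter_content` and `chat` are hypothetical.

```python
import json
import urllib.request
from typing import Iterable, Iterator

# Assumption: Ollama is serving on its default local address.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def iter_content(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from a newline-delimited JSON chat stream."""
    for line in lines:
        if not line.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(line)
        yield chunk.get("message", {}).get("content", "")
        if chunk.get("done"):
            break  # the final chunk marks the end of the reply


def chat(prompt: str, model: str = "deepseek-r1") -> str:
    """Send one user message to the local model and return the full reply."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return "".join(iter_content(response))
```

Keeping the stream parsing in its own function makes it possible to print fragments as they arrive, which is useful because reasoning models can take a while before the final answer appears.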

You can also pull and run the following distilled Qwen and Llama versions of the DeepSeek R1 model.

Qwen Distilled DeepSeek R1 Models

- DeepSeek-R1-Distill-Qwen-1.5B: ollama run deepseek-r1:1.5b

- DeepSeek-R1-Distill-Qwen-7B: ollama run deepseek-r1:7b

- DeepSeek-R1-Distill-Qwen-14B: ollama run deepseek-r1:14b

- DeepSeek-R1-Distill-Qwen-32B: ollama run deepseek-r1:32b

Llama Distilled DeepSeek R1 Models

- DeepSeek-R1-Distill-Llama-8B: ollama run deepseek-r1:8b

- DeepSeek-R1-Distill-Llama-70B: ollama run deepseek-r1:70b
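If a script needs to launch one of the distilled models listed above, the model names and Ollama tags can be kept in a small lookup table. This is only a convenience sketch: the tags are copied from the commands in this article, and the names `DISTILL_TAGS` and `ollama_run_command` are hypothetical.

```python
# Tags copied from the commands listed in this article.
DISTILL_TAGS = {
    "DeepSeek-R1-Distill-Qwen-1.5B": "deepseek-r1:1.5b",
    "DeepSeek-R1-Distill-Qwen-7B": "deepseek-r1:7b",
    "DeepSeek-R1-Distill-Qwen-14B": "deepseek-r1:14b",
    "DeepSeek-R1-Distill-Qwen-32B": "deepseek-r1:32b",
    "DeepSeek-R1-Distill-Llama-8B": "deepseek-r1:8b",
    "DeepSeek-R1-Distill-Llama-70B": "deepseek-r1:70b",
}


def ollama_run_command(model_name: str) -> list[str]:
    """Return the argument list for running a distilled model with Ollama."""
    try:
        tag = DISTILL_TAGS[model_name]
    except KeyError:
        raise ValueError(f"Unknown distilled model: {model_name}") from None
    return ["ollama", "run", tag]


print(" ".join(ollama_run_command("DeepSeek-R1-Distill-Llama-8B")))
```

The returned list can be passed to `subprocess.run(..., check=True)` on a machine where Ollama is installed.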

The preview below demonstrates how to run the DeepSeek-R1-Distill-Llama-8B with Ollama.

Watch Run DeepSeek R1 + Ollama Local LLM Tool on YouTube for a quick walkthrough.

Jan: Chat With DeepSeek R1 Offline

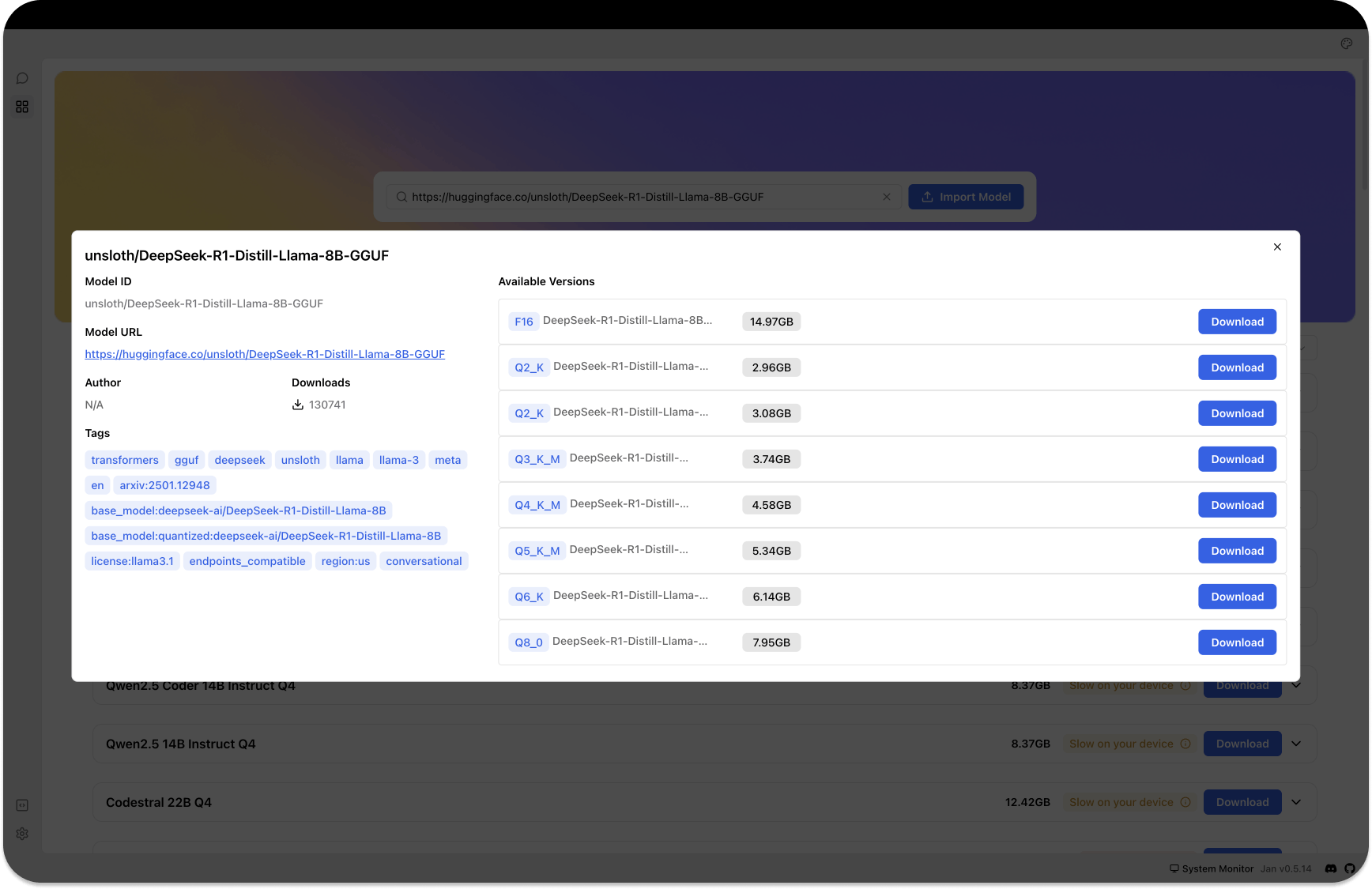

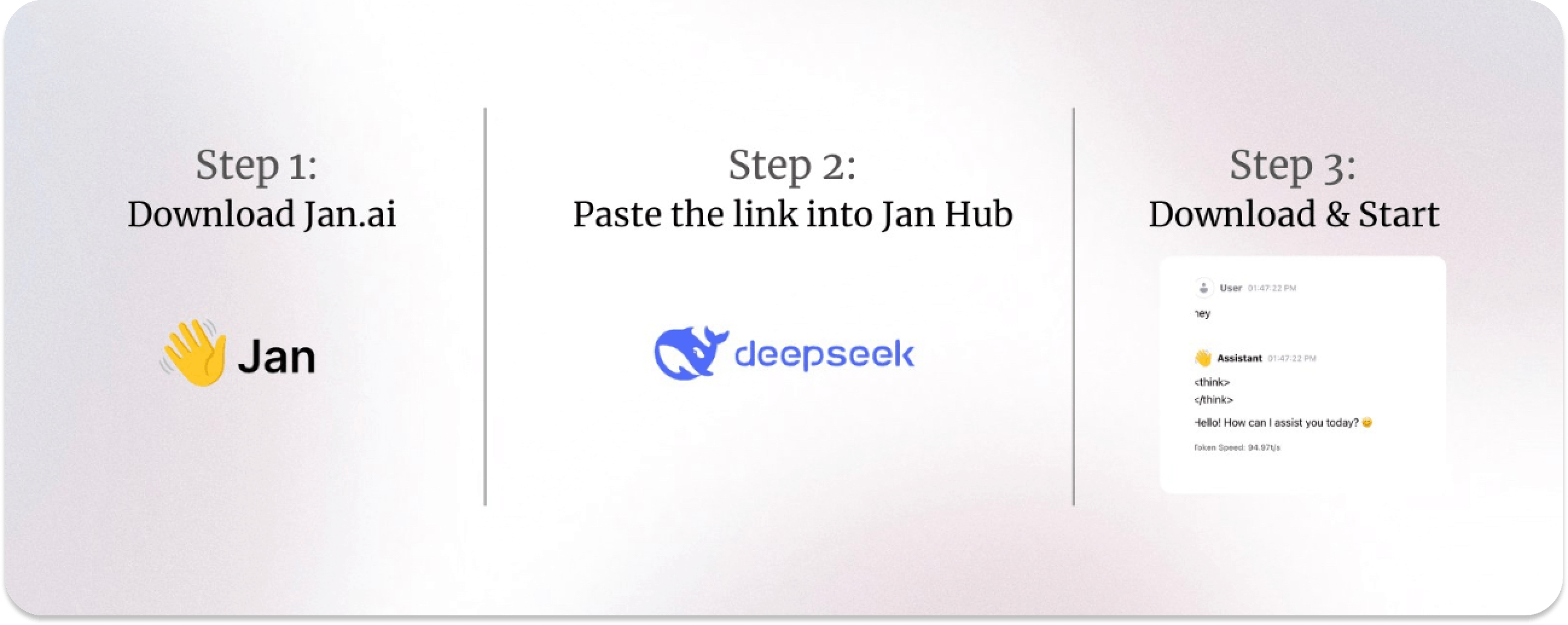

Jan describes itself as an open-source ChatGPT alternative. It is a local-first LLM tool that runs the DeepSeek R1 models 100% offline. Using Jan to run DeepSeek R1 requires only the three steps illustrated in the image below.

To start, download Jan and head to the Hub tab on the left panel to search and download any of the following distilled R1 GGUF models from Hugging Face.

DeepSeek R1 Qwen Distilled Models

- 1.5B: https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-1.5B-GGUF

- 7B: https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-7B-GGUF

- 14B: https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-14B-GGUF

- 32B: https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-32B-GGUF

DeepSeek R1 Llama Distilled Models

- 8B: https://huggingface.co/unsloth/DeepSeek-R1-Distill-Llama-8B-GGUF

- 70B: https://huggingface.co/unsloth/DeepSeek-R1-Distill-Llama-70B-GGUF

Once you download any of the distilled R1 models with Jan, you can run it as demonstrated in the preview below.

Another Alternative: Use Enterprise-Ready LLM Hosting For DeepSeek R1

Although the DeepSeek R1 model was released recently, several trusted LLM hosting platforms already support it. If you do not want to use the offline approaches outlined above, you can access the model from any of the following providers. This list is not exhaustive, but it covers leading platforms where you can access the DeepSeek R1 model and its distills.

Groq

Groq supports the DeepSeek-R1-Distill-Llama-70B version. To use it, visit https://groq.com/ and run the model directly on the home page.

Alternatively, you can run the R1 model on Groq by clicking the Dev Console button at the top right of the homepage, as demonstrated in the preview below.
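For use in an app rather than in the browser, Groq also exposes an OpenAI-compatible HTTP API. The sketch below is written under several assumptions: the endpoint is `https://api.groq.com/openai/v1/chat/completions`, the API key is stored in an environment variable named `GROQ_API_KEY`, and the model ID is `deepseek-r1-distill-llama-70b`. Model IDs change over time, so confirm the current one in the Dev Console before relying on it; the function name is hypothetical.

```python
import json
import os
import urllib.request
from typing import Optional

GROQ_URL = "https://api.groq.com/openai/v1/chat/completions"
# Assumption: verify this model ID in the Groq Dev Console; it may change.
GROQ_MODEL = "deepseek-r1-distill-llama-70b"


def build_groq_request(
    prompt: str, api_key: Optional[str] = None
) -> urllib.request.Request:
    """Build an authenticated chat completion request for Groq."""
    key = api_key or os.environ.get("GROQ_API_KEY")
    if not key:
        raise RuntimeError("Set GROQ_API_KEY or pass api_key explicitly.")
    payload = {
        "model": GROQ_MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        GROQ_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {key}",
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` sends the prompt to Groq. Unlike the offline tools, this means your data goes to the hosting provider, so keep the key out of source control and send only data you are comfortable sharing with that provider.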

Azure AI Foundry

As the preview above shows, you can access distilled versions of DeepSeek R1 on Microsoft's Azure AI Foundry. Visit the Azure AI Foundry website to get started.

Other popular LLM hosting platforms where you can run distilled DeepSeek R1 models include the following:

- https://fireworks.ai/

- https://www.together.ai/

- https://openrouter.ai/

- https://chatllm.abacus.ai/

- https://chutes.ai/

The Future of DeepSeek R1 and Open-Source Models

In this article, you learned how to run the DeepSeek R1 model offline using local-first LLM tools such as LMStudio, Ollama, and Jan. You also learned how to use scalable, enterprise-ready LLM hosting platforms to run the model. The DeepSeek R1 model is an excellent alternative to the OpenAI o1 models, with the reasoning ability to handle highly demanding, logic-heavy tasks.

At the time of writing, DeepSeek R1 is available in Azure AI Foundry's Model Catalog. On Groq, the available option is the DeepSeek-R1 distilled family (e.g., DeepSeek-R1-Distill-Llama-70B), which delivers R1-style reasoning on Groq hardware.

In the future, we expect to see more companies and open-source developers reproduce the DeepSeek R1 model and make it available for different use cases. Additionally, more local-first LLM tools and hosting services are likely to support the DeepSeek R1 model and its distilled versions.