Live streaming has become one of the most popular ways for people to connect, entertain, and build communities online. But along with its rapid growth comes an undeniable challenge: keeping those communities safe.

Research shows that a majority of livestream viewers and creators have encountered harassment, hate speech, or abuse during broadcasts, with some studies estimating that over 70% of streamers report facing harassment at some point. This kind of toxicity can drive viewers away, discourage creators, and damage the reputation of the platform itself.

Why does this matter? Because safety and enjoyment go hand in hand. When users feel confident that they won't be attacked or exposed to harmful content, they're more likely to engage, return, and even invest in premium experiences. For platforms, effective moderation protects brand reputation, reduces churn, and builds long-term trust with both viewers and creators.

What Is Livestream Moderation?

Livestream moderation is the process of monitoring and managing both the chat and the video stream itself to prevent harmful or disruptive behavior.

This involves:

- Chat moderation, where text comments are scanned for harassment, hate speech, spam, or inappropriate content.
- Video moderation, where live audio and visuals are monitored for violations like nudity, violence, or the promotion of harmful activity.
- Real-time enforcement, where moderators or AI tools can mute, block, or ban offenders to protect the community without derailing the stream.

Done well, livestream moderation keeps communities inclusive and fun while also shielding platforms from reputational damage and regulatory risk. It's the foundation for the five strategies that follow.

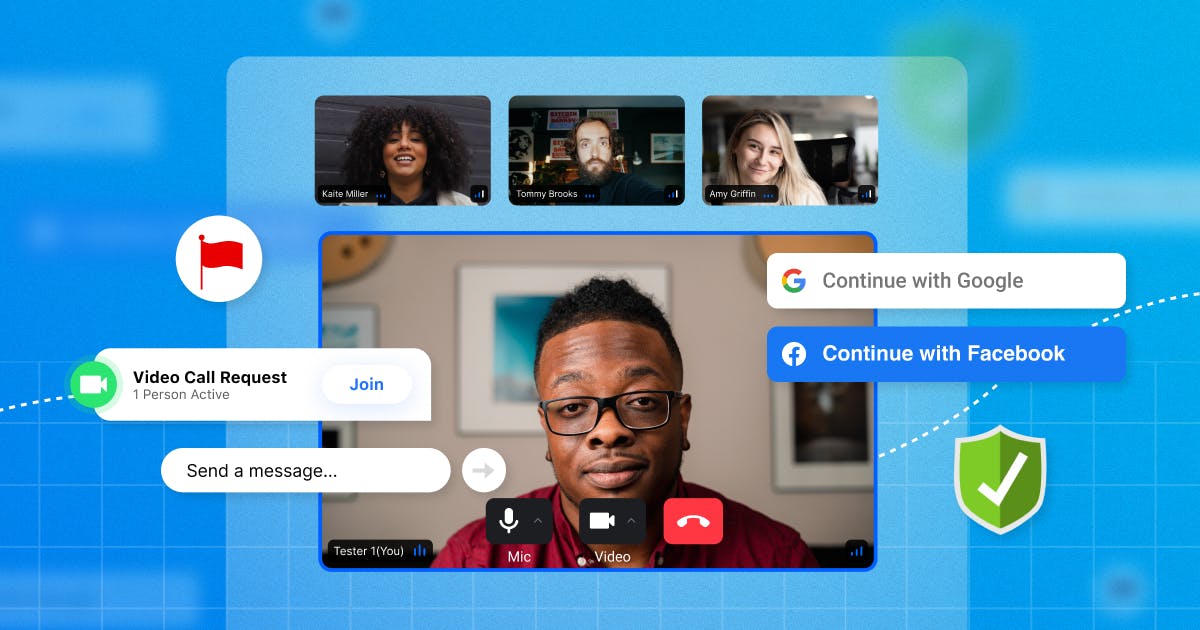

1. Use Third-Party Authentication APIs

Third-party authentication can enforce secure login standards while preserving your users' experiences. Using these APIs allows users to log in through another established account like Google, Facebook, or Apple. It's also convenient for the user — they have one less login to fill out or remember and one less company to give their information to.

For you, using a third-party API for authentication can mean less liability if a data breach occurs, because you store fewer credentials yourself. The impact on your business is typically less severe than when data you collect directly from your customers is compromised. It can also support regulatory compliance: many leading third-party providers are built to align with standards like GDPR, CCPA, HIPAA, and SOC 2, which helps keep sensitive data such as health or financial information stored and processed in accordance with strict privacy laws. Leveraging these providers lets your team build on that compliance posture rather than shouldering it alone, although you remain responsible for the data your own application collects.

You can adopt and implement third-party authentication from Google, Facebook, or another source. Once you've adopted an API, you can add buttons to the login page for users to sign in using other applications (e.g., "Sign in with Google" or "Sign in with Facebook").

The downside to third-party authentication is that the user might not have an account with any of the providers you offer. If they don't, they'll have to create one to sign in to your application, and that could deter them from using it. Your authentication provider will handle the account creation itself, but you can reduce the risk of losing users by offering several third-party sign-in options.
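As a rough illustration of offering more than one sign-in option, the sketch below builds the link behind each "Sign in with ..." button using the OAuth 2.0 authorization code flow. Google's authorization endpoint is the one Google documents; the second provider, the client IDs, the redirect URI, and the function names are hypothetical placeholders, and a production app would more likely rely on a maintained OAuth library.

```python
import secrets
from typing import Tuple
from urllib.parse import urlencode

# Google's entry uses its documented OAuth 2.0 authorization endpoint.
# "example-idp", all client IDs, and REDIRECT_URI are hypothetical placeholders.
PROVIDERS = {
    "google": {
        "auth_url": "https://accounts.google.com/o/oauth2/v2/auth",
        "client_id": "YOUR_GOOGLE_CLIENT_ID",
        "scope": "openid email profile",
    },
    "example-idp": {
        "auth_url": "https://idp.example.com/oauth2/authorize",
        "client_id": "YOUR_EXAMPLE_CLIENT_ID",
        "scope": "openid email",
    },
}
REDIRECT_URI = "https://yourapp.example.com/auth/callback"


def build_login_url(provider: str) -> Tuple[str, str]:
    """Return (authorization_url, state) for one provider's sign-in button."""
    config = PROVIDERS[provider]
    # A random state value lets the callback reject forged responses (CSRF).
    state = secrets.token_urlsafe(16)
    params = {
        "client_id": config["client_id"],
        "redirect_uri": REDIRECT_URI,
        "response_type": "code",  # authorization code flow
        "scope": config["scope"],
        "state": state,
    }
    return f"{config['auth_url']}?{urlencode(params)}", state


def state_matches(expected: str, received: str) -> bool:
    """Compare the stored state with the value echoed back to the callback."""
    return secrets.compare_digest(expected.encode(), received.encode())
```

The state value would normally be stored in the user's session when the button is rendered and checked with `state_matches` when the provider redirects back.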

2. Enable Text Chat

Text chats help to initiate and supplement video chat conversations and give users a secondary way to communicate if any issues arise. Text chats are especially useful for moderating a large audience. When someone hosts a video for a large audience, it can be difficult to see and address everyone. With a text chat, it's easier to notice when someone needs help.

Beyond moderation, text chat also helps viewers connect with each other while watching a livestream. Reactions, side conversations, and shared commentary create a sense of community that video alone can’t provide. This makes streams more interactive and keeps audiences engaged longer.

Build a text chat that is connected to the video stream, or adopt one through an API such as Stream. If you opt for an API, use one that is fully customizable, so you can put the privacy and safety controls in your users' hands.

It would also be valuable to have a language-translating function, so regardless of the user's language, they can still get assistance when they need it. Use a translation API, like Google Cloud Translation or DeepL, that has high accuracy and is easily integrated into your application.

Other features that make text chat more robust include:

- Threaded replies so conversations don't get lost in fast-moving chats
- Pinned messages for announcements or community rules
- Lightweight engagement tools like polls and emoji reactions that let viewers participate without overwhelming the conversation
- User tagging/mentions to call attention to moderators, hosts, or other viewers
- Custom filters that let users or moderators control which content they see
- Moderator tools such as timeouts, bans, or highlighting flagged content for review
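To make the moderator tools in the list above more concrete, here is a minimal sketch of timeouts and bans kept in memory. The class and method names are hypothetical and are not taken from any particular chat SDK; a real deployment would persist this state and enforce it on the server.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class ChatModeration:
    """Tracks which users are banned or temporarily timed out."""

    banned: Set[str] = field(default_factory=set)
    # Maps user_id to the epoch second at which the timeout expires.
    timeouts: Dict[str, float] = field(default_factory=dict)

    def timeout(self, user_id: str, seconds: float, now: Optional[float] = None) -> None:
        """Mute a user for a limited period."""
        now = time.time() if now is None else now
        self.timeouts[user_id] = now + seconds

    def ban(self, user_id: str) -> None:
        """Block a user from posting until a moderator lifts the ban."""
        self.banned.add(user_id)

    def can_post(self, user_id: str, now: Optional[float] = None) -> bool:
        """Return True if the user may send a chat message right now."""
        now = time.time() if now is None else now
        if user_id in self.banned:
            return False
        expiry = self.timeouts.get(user_id)
        if expiry is not None and now < expiry:
            return False
        # The timeout (if any) has expired, so forget it.
        self.timeouts.pop(user_id, None)
        return True
```

The `now` parameter exists only so the behavior can be checked deterministically; callers would normally omit it.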

3. Enable Avatars and/or Background Blurring to Anonymize the Live-Streaming Experience

Some people prefer to remain anonymous during live streams. Avatars allow them to participate without showing their face or identity, while background blurring is most useful in formats where multiple participants may be on video (such as group calls, panel discussions, or interactive webinars).

You'll need to adopt plugins (for either avatars, background blurring, or both) so that when your users log in, they can go to their video settings and choose an avatar or choose to blur their background.

Avatar software syncs a 3D avatar with a real person (a live streamer) and overlays the avatar on top of their face and body. The software uses the video feed to capture the streamer's motions and moves the avatar along with the streamer's body and expressions.

Background blurring technology takes a live feed, detects the background, and applies a blur to it. Programmers can even control the amount of blur that a background has or set it as entirely opaque.
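The idea can be sketched in a few lines, assuming the background detection step has already produced a mask that marks which pixels belong to the person. This toy version works on a small grayscale grid with a simple box blur; the function names are illustrative, and real plugins would run a segmentation model and a GPU-accelerated blur on full video frames.

```python
from typing import List

Frame = List[List[float]]  # grayscale pixel values, one inner list per row
Mask = List[List[bool]]    # True where the pixel belongs to the person


def box_blur(frame: Frame, radius: int) -> Frame:
    """Average each pixel with its neighbours; windows are cropped at the edges."""
    height, width = len(frame), len(frame[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            total, count = 0.0, 0
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += frame[ny][nx]
                    count += 1
            row.append(total / count)
        blurred.append(row)
    return blurred


def blur_background(frame: Frame, person_mask: Mask, radius: int = 2) -> Frame:
    """Keep person pixels sharp and replace everything else with the blur."""
    blurred = box_blur(frame, radius)
    return [
        [frame[y][x] if person_mask[y][x] else blurred[y][x]
         for x in range(len(frame[0]))]
        for y in range(len(frame))
    ]
```

Here `radius` plays the role of the blur amount: `0` leaves the frame untouched, and larger values smear the background more.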

If you use plugins, make sure they are secure for your users. Assess what permissions each plugin is asking for and decline permissions if something seems suspicious or unsafe for your end users.

4. Moderate Content to Mitigate Bad Behavior

You can moderate specific content to identify and mitigate bad behavior, including harassment, hate speech, and bullying. Your app's reputation is at stake: if your app becomes known for allowing harassment, users may stop streaming on it and move to a competitor, which is costly.

Traditionally, developers handled content moderation in chats by letting users block certain words or language, or ban people who become offensive. Maintaining this kind of moderation can be time-consuming: as bad behavior and harmful language evolve, your moderation has to evolve with them.

Now, there are new AI tools that aim to automatically identify when bad behavior is occurring (on both video and chat).

Each option has its advantages and disadvantages — AI may be more efficient but more expensive; traditional methods may be more cost-effective but too time-consuming. You have to identify which route works for your developers and the rest of the product team.
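For reference, the traditional word-blocking approach might look something like the sketch below. The blocked terms are harmless placeholders, and the normalization rules (lowercasing, undoing common character swaps, collapsing stretched letters) are assumptions chosen for illustration. They also show why maintenance is ongoing: each new evasion trick needs another rule.

```python
import re
import unicodedata

# Placeholder terms; a real list would be maintained by the moderation team.
BLOCKED_TERMS = {"badword", "spamlink"}

# Undo a few common character substitutions before matching.
SUBSTITUTIONS = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def normalize(text: str) -> str:
    """Lowercase, fold lookalike characters, and collapse stretched letters."""
    text = unicodedata.normalize("NFKC", text).lower().translate(SUBSTITUTIONS)
    # Runs of three or more identical characters become one ("baaaad" -> "bad").
    return re.sub(r"(.)\1{2,}", r"\1", text)


def find_blocked_terms(message: str) -> set:
    """Return the blocked terms that appear as whole words in the message."""
    words = re.findall(r"[a-z]+", normalize(message))
    return {word for word in words if word in BLOCKED_TERMS}
```

Matching whole words avoids flagging innocent words that merely contain a blocked term, at the cost of missing terms that are split up or embedded in longer strings.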

Live Video Moderation

Text chat isn't the only area where abuse happens. Livestream video itself can expose viewers to nudity, violence, or illegal activity. Real-time video moderation uses AI models trained to detect visual violations and alert moderators immediately. This could mean auto-pausing a stream, blurring flagged content until reviewed, or escalating cases to human moderators for final judgment. Video moderation helps platforms comply with safety regulations and ensures offensive material isn't broadcast unchecked.
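One way to picture the enforcement step is as a policy that maps model detections to one of the actions just described. In this sketch the labels, thresholds, and severity ordering are all assumptions for illustration; the detections would come from whichever video moderation model or service a platform uses.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Iterable


class Action(IntEnum):
    """Possible responses, ordered from least to most severe."""
    ALLOW = 0
    ESCALATE_TO_HUMAN = 1
    BLUR_UNTIL_REVIEWED = 2
    PAUSE_STREAM = 3


@dataclass(frozen=True)
class Detection:
    label: str         # e.g. "nudity"; label names are hypothetical
    confidence: float  # model confidence between 0 and 1


# Per-label rules as (minimum confidence, action), highest threshold first.
RULES = {
    "nudity": [(0.90, Action.PAUSE_STREAM), (0.60, Action.BLUR_UNTIL_REVIEWED)],
    "violence": [(0.85, Action.BLUR_UNTIL_REVIEWED), (0.50, Action.ESCALATE_TO_HUMAN)],
}
UNKNOWN_LABEL_THRESHOLD = 0.50


def action_for(detection: Detection) -> Action:
    """Pick the action for a single detection."""
    rules = RULES.get(detection.label)
    if rules is None:
        # No rule for this label: let a human decide if the model is fairly sure.
        if detection.confidence >= UNKNOWN_LABEL_THRESHOLD:
            return Action.ESCALATE_TO_HUMAN
        return Action.ALLOW
    for threshold, action in rules:
        if detection.confidence >= threshold:
            return action
    return Action.ALLOW


def decide(detections: Iterable[Detection]) -> Action:
    """Apply the most severe action triggered by any detection in a frame."""
    return max((action_for(d) for d in detections), default=Action.ALLOW)
```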

Chat Moderation (AI + Human)

Moderating livestream chat requires a hybrid approach. AI-powered tools can filter slurs, spam, or harassment at scale and adapt to evolving language. But human moderators are still critical for handling nuance and context, for example, telling the difference between a sarcastic joke and a targeted insult. Platforms often combine both. AI does the heavy lifting of flagging potential violations, while human moderators review edge cases and appeals to keep enforcement fair.
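A hybrid setup like this can be sketched as a triage step: clear violations are removed automatically, uncertain messages go to a human review queue, and everything else is published. The scoring function stands in for any AI classifier, and the thresholds and names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Optional


@dataclass
class Message:
    user_id: str
    text: str


class HybridModerator:
    """Routes each chat message based on an AI toxicity score (0 to 1)."""

    def __init__(
        self,
        score_fn: Callable[[str], float],  # stand-in for an AI classifier
        remove_at: float = 0.9,            # assumed threshold for auto-removal
        review_at: float = 0.5,            # assumed threshold for human review
    ) -> None:
        self.score_fn = score_fn
        self.remove_at = remove_at
        self.review_at = review_at
        self.review_queue: Deque[Message] = deque()

    def handle(self, message: Message) -> str:
        """Return 'removed', 'flagged', or 'published' for a new message."""
        score = self.score_fn(message.text)
        if score >= self.remove_at:
            return "removed"
        if score >= self.review_at:
            # Too ambiguous to act on automatically; a person makes the call.
            self.review_queue.append(message)
            return "flagged"
        return "published"

    def next_for_review(self) -> Optional[Message]:
        """Hand the oldest flagged message to a human moderator."""
        return self.review_queue.popleft() if self.review_queue else None
```

Where the two thresholds sit is a product decision: lowering `review_at` catches more borderline messages but gives human moderators more to read.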

User Reporting

Even with advanced AI and moderator oversight, it's impossible to catch everything in real time. That's why user reporting systems are essential. Simple features like a "Report" button on chat messages or streams allow users to flag harmful content directly. Robust reporting systems also let users add context (e.g., screenshots, timestamps, or categories of abuse), which helps moderators respond more quickly and effectively. Transparency is key here: platforms should confirm reports, outline what happens next, and communicate outcomes when possible to build user trust.
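A report record along these lines might carry the context moderators need and produce the confirmations users see. This is only a sketch: the field names, categories, statuses, and message wording are assumptions, and a real system would store reports in a database and notify users through its own channels.

```python
import itertools
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional


class Category(Enum):
    HARASSMENT = "harassment"
    HATE_SPEECH = "hate_speech"
    SPAM = "spam"
    OTHER = "other"


class Status(Enum):
    RECEIVED = "received"
    RESOLVED = "resolved"


@dataclass
class Report:
    report_id: int
    reporter_id: str
    target_id: str                      # ID of the reported message or stream
    category: Category
    stream_timestamp_s: Optional[float] = None  # where in the stream it happened
    note: str = ""                      # free-text context from the reporter
    status: Status = Status.RECEIVED
    outcome: str = ""


class ReportDesk:
    """Collects reports and returns the messages shown to the reporter."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self.reports: Dict[int, Report] = {}

    def submit(self, reporter_id: str, target_id: str, category: Category,
               stream_timestamp_s: Optional[float] = None, note: str = "") -> str:
        report = Report(next(self._ids), reporter_id, target_id, category,
                        stream_timestamp_s, note)
        self.reports[report.report_id] = report
        # Confirm receipt and explain what happens next.
        return f"Report #{report.report_id} received. A moderator will review it."

    def resolve(self, report_id: int, outcome: str) -> str:
        report = self.reports[report_id]
        report.status = Status.RESOLVED
        report.outcome = outcome
        # Close the loop with the reporter.
        return f"Report #{report_id} resolved: {outcome}"
```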

When these three elements (video moderation, chat moderation, and user reporting) are combined, platforms can better balance safety, fairness, and scalability. The goal isn't to over-police users but to make livestreams a place where people feel protected enough to participate freely.

5. Implement Privacy Controls When Searching for Other Users

Users should be able to control how others can find them and which information is visible and searchable. Your users want to be able to engage with content and communities but also feel safe knowing that random or potentially harmful people can't contact them without their consent. Each person's boundaries will be different, so your features should be as flexible as possible.

There are a few functions to focus on to implement privacy controls that each user can change on their own.

Implement settings that let users choose which information to show and which to hide. For example, many users might want to show their birthday without the year but not their location, while other users will opt to share their location. Users should also be able to control whether or not their profile shows that they're online, since some users will prefer not to show their active status.

In addition to dictating what information is visible, allow users to define how they can be searched. For example, some folks are fine with being searchable by their name, phone number, and/or email address, whereas other users only want to be found by their name and nothing else. Others will not want to be found at all.

Visibility functions can also help improve privacy. Allow users to choose if they're only visible to "friends" or if they can be found by "friends of friends" or "any user." For example, Discord gives users granular control over who can send them friend requests (everyone, friends of friends, or no one), and Twitch allows streamers to toggle features like follower-only chat to limit unwanted contact. These kinds of settings put boundaries directly in the user’s hands while still allowing them to participate in communities on their terms.
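The search and visibility settings described in this section could be modeled roughly as follows. The data model is hypothetical: it assumes friendships are mutual, that each user lists the fields they agree to be searched by, and that a single audience setting controls who can find them.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Set

SEARCH_FIELDS = ("name", "email")


class Audience(Enum):
    NOBODY = "nobody"
    FRIENDS = "friends"
    FRIENDS_OF_FRIENDS = "friends_of_friends"
    ANYONE = "anyone"


@dataclass
class User:
    user_id: str
    name: str
    email: str
    friends: Set[str] = field(default_factory=set)
    # Fields other people may use to look this user up.
    searchable_by: Set[str] = field(default_factory=lambda: {"name"})
    visible_to: Audience = Audience.ANYONE


def can_see(viewer: User, target: User, users: Dict[str, User]) -> bool:
    """Check the target's audience setting against the viewer."""
    if target.visible_to is Audience.ANYONE:
        return True
    if target.visible_to is Audience.NOBODY:
        return False
    if viewer.user_id in target.friends:
        return True
    if target.visible_to is Audience.FRIENDS_OF_FRIENDS:
        return any(
            viewer.user_id in users[friend_id].friends
            for friend_id in target.friends
            if friend_id in users
        )
    return False


def search(viewer: User, field_name: str, query: str, users: Dict[str, User]) -> List[str]:
    """Return IDs of users the viewer is allowed to find with this query."""
    if field_name not in SEARCH_FIELDS:
        raise ValueError(f"unsupported search field: {field_name}")
    results = []
    for user in users.values():
        if user.user_id == viewer.user_id:
            continue
        if field_name not in user.searchable_by:
            continue  # the user opted out of being found by this field
        if getattr(user, field_name).lower() != query.lower():
            continue
        if can_see(viewer, user, users):
            results.append(user.user_id)
    return results
```

Defaulting `searchable_by` to the name only is one possible choice; a stricter default would start with an empty set and let users opt in.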

The Future of Livestreaming Safety

As livestreaming continues to grow across gaming, entertainment, education, and commerce, safety expectations will only rise. The future of livestream moderation is likely to include:

- Multimodal moderation that analyzes video, audio, and text together in real time to spot harmful content across all formats.
- LLM-powered AI tools that better understand slang, context, and multiple languages, reducing false positives while catching nuanced abuse.
- Built-in safety by design, where platforms bake privacy and moderation features into the user experience instead of treating them as afterthoughts.
- Transparent appeals and reporting systems, so users trust that moderation is fair and not arbitrary.
- Stronger compliance alignment, with regulations like the EU Digital Services Act and Online Safety Acts requiring platforms to prove they're protecting users.

The end goal is the same: to make livestreaming safe enough that people feel free to engage, but flexible enough that communities don't feel over-policed. Platforms that can balance these priorities will be the ones that retain users, attract creators, and avoid reputational or regulatory risks.

Safety Is Key For Successful Live Stream Chat Moderation

To stay happy, your users have to feel confident that their information and privacy are protected. To build an application that users keep coming back to, you need the right functions and features to ensure their safety: functions that let them control how other users find and interact with them, and features, like third-party authentication, that make your application convenient to use.

Stream's video calling and livestreaming APIs make safety a top priority and feature full end-to-end encryption. You can fully customize Stream's API to meet your audience's security standards. Plus, all video calls through Stream's API are SOC 2, HIPAA, and ISO 27001 compliant. Just as important, it gives you the flexibility to add the moderation tools and privacy features your audience expects.