Companion project for this article: https://github.com/GetStream/generative_ai_with_flutter

Introduction

For the past few years, Generative AI has been one of the most talked-about subjects in the developer world. While this conversation began in the early to mid-2010s with simpler models and fewer people affected, it has grown exponentially, with compute power becoming more easily available on devices and newer types of AI models obliterating earlier benchmarks set by established models.

With the aforementioned advances, it was inevitable that AI would be used in most apps for specific creative features. Since these models are significantly heavy and require quite a lot of memory and computing power, they are usually exposed as APIs by the companies creating them.

However, as of the time of writing this post, there are early models designed to run on smaller devices such as phones. In the future, phones will likely come with additional hardware to specifically run AI computation, similar to how GPUs came along to handle graphic processing with increasingly taxing visual needs on devices.

For now, we focus on integrating these APIs into Flutter apps to allow developers to create AI-based features in their apps. For this post, we dive into Google’s Generative AI SDK, but the same principles apply to any other SDK. While this article focuses on adding generative AI to Flutter apps, I believe knowing what you’re working with is always important - I needed to know how a clutch assembly worked before I agreed to drive a car. Hence, this post also contains some background behind the models that have allowed the generative AI revolution to occur: Large Language Models. We will also be using Stream's Flutter Chat SDK as the foundation layer for handling all of our conversations between our LLM and the user.

Looking Into Large Language Models (LLMs)

Whether it’s Google’s Gemini or OpenAI’s ChatGPT, the base model that has taken the AI world by storm is called a Large Language Model (LLM). You may have heard of Neural Networks and how they will change the world for many years now.

However, while there was some progress in the field throughout the years, it often did not directly affect the lives of many. LLMs are also based on neural networks; however, various breakthroughs allowed them to surpass any existing model on a path toward unprecedented capabilities in natural language understanding and generation.

One of the key breakthroughs is the transformer architecture, introduced in the seminal paper "Attention is All You Need.” Transformers allow efficient processing of long-range dependencies in sequences, making them highly effective for tasks such as language translation, text summarisation, and dialogue generation.

Advancements in training strategies, such as pre-training on vast amounts of text data followed by fine-tuning on specific tasks, have significantly improved the performance of LLMs. These models can now generate coherent and contextually relevant text, mimicking human-like language patterns to a remarkable extent.

With their ability to comprehend and generate text at a level never seen before, LLMs are reshaping various industries, including customer service, content creation, and language translation. They hold the potential to revolutionize how we interact with technology, opening doors to new applications and opportunities that were previously unimaginable.

Understanding Attention

From the paper referred to in the previous section, attention refers to a mechanism that allows the model to focus on different parts of the input sequence when processing it. This mechanism is crucial for understanding and generating coherent and contextually relevant text.

The attention mechanism assigns weights to different words or tokens in the input sequence, suggesting their relative importance or relevance to the currently processed word. These weights are calculated based on the similarity between the current word and each word in the input sequence.

Words more similar to the current word receive higher weights, while less relevant words receive lower weights. These word weights are also relevant to embeddings, discussed later in this post.

The google_generative_ai package

The google_generative_ai package created by Google is a wrapper over their generative AI APIs and allows developers to interact with various AI models in their Flutter apps. The package supports various use-cases, most if not all of which we will go through in this article.

To add the package to your Flutter app, add the respective dependency in the pubspec.yaml file:

12dependencies: google_generative_ai: ^0.2.2

No special permissions are required for the package.

Supported Models Under the Package

The google_generative_ai package supports three main models: Gemini, Embeddings, and Retrieval. PaLM models that were a precursor to the Gemini models are also supported as legacy models and should be expected to be removed in due time. Gemini models come in two forms - Pro and Pro Vision.

Pro models deal primarily with text input prompts, while Pro Vision models are multi-modal and support both text and images as input. Gemini also has an Ultra version that is not supported by the API at the time of writing but should likely be expected soon. An embedding model is also provided, which will be looked into later in this article.

The final model on this list is the AQA Retrieval model. AQA stands for Attributed Question Answering. The AQA model functions differently from the normal Gemini model. It can be used for specific tasks like these:

- Answers questions based on sources: You can provide a question and relevant source materials, after which the AQA model extracts an answer directly related to the source.

- Estimates answer confidence: The AQA model doesn't just provide an answer. It also estimates how likely the answer is correct based on the context provided.

Getting Your API Key

Before you use the package, you need to get an API key for the Gemini API. Please note that the API may not be available in certain regions, such as the EU, due to privacy restrictions, so please verify if you live in a region that is supported.

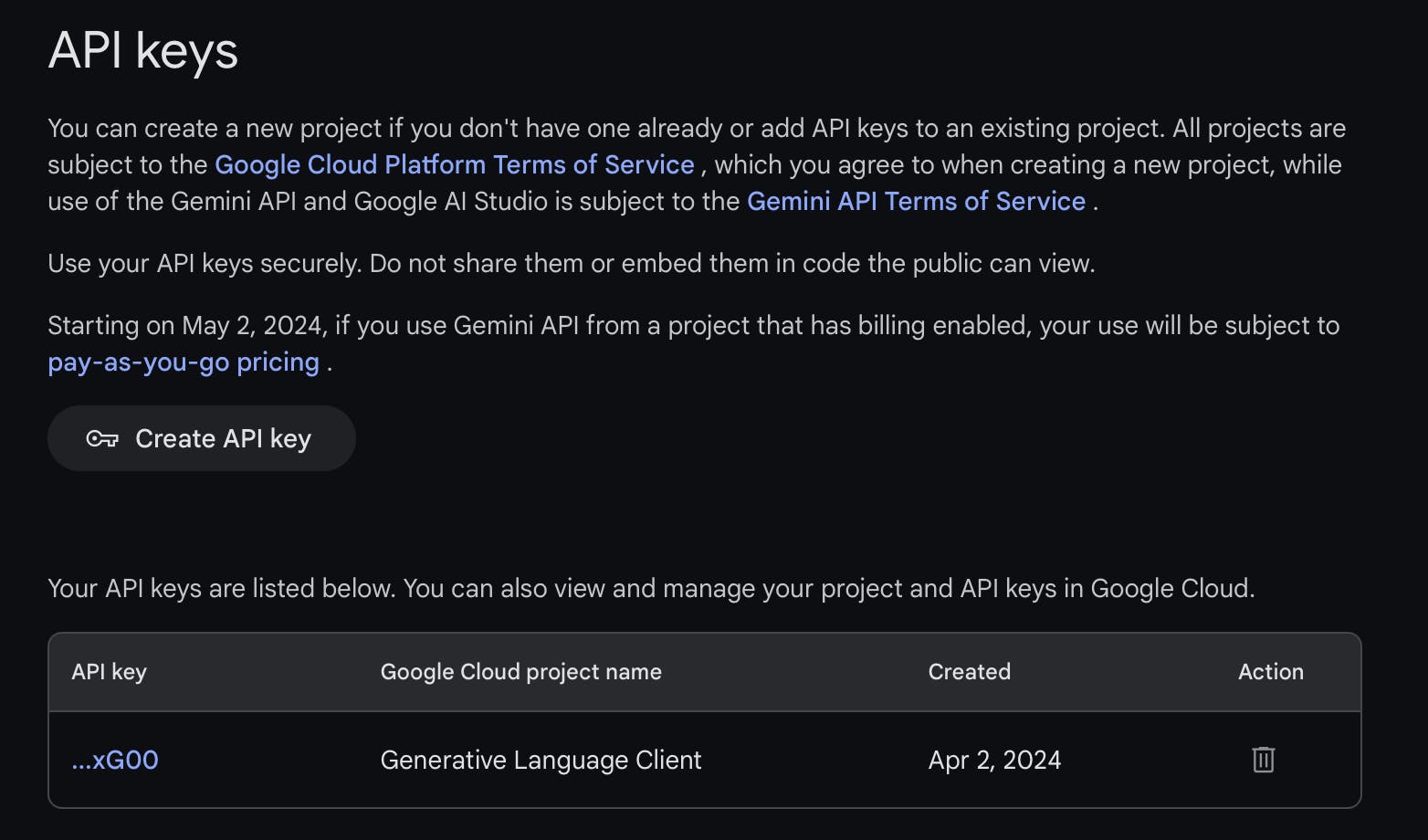

To create an API key, go to Google AI Studio, log in with your Google account, and select the Create API key button:

You can then use the generated key for your project.

Configuring Your Model

To initialize the model in the package, you can use the GenerativeModel class and specify your API key as well as the AI model you intend to use:

1234var model = GenerativeModel( model: 'gemini-pro', apiKey: GenAIConfig.geminiApiKey, );

Every prompt you send to the model includes parameter values that control how the model generates a response. You can set the same properties through the generationConfig parameter of the same class:

123456789101112var model = GenerativeModel( model: 'gemini-pro', apiKey: GenAIConfig.geminiApiKey, generationConfig: GenerationConfig( candidateCount: 2, stopSequences: ["END"], maxOutputTokens: 500, temperature: 10.0, topK: 1, topP: 0.5, ) );

The model can generate different results for different parameter values. You can adjust the configuration parameters below:

- Max Output Tokens: A token roughly equates to four characters. The "Max output tokens" setting defines the upper limit of tokens the response can generate. For instance, a limit of 100 tokens might produce approximately 60-80 words.

- Temperature: This parameter influences the randomness of token selection. A lower temperature setting suits prompts needing more deterministic or specific responses. In contrast, a higher temperature can foster more varied or imaginative outputs.

- TopK: Setting

topKto 1 means the model chooses the most probable token from its vocabulary for the next token (greedy decoding). AtopKof 3, however, allows the model to pick the next token from the three most probable options, based on the temperature setting. - TopP: This parameter enables token selection starting from the most probable, accumulating until the sum of probabilities reaches the

topPthreshold. For example, with tokens A, B, and C having probabilities of 0.3, 0.2, and 0.1, respectively, and atopPvalue of 0.5, the model will choose either A or B for the next token, utilizing the temperature setting, and exclude C from consideration. - Candidate Count: Specifies the maximum number of unique responses to generate. A candidate count of 2 means the model will provide two distinct response options.

- Stop Sequences: You can specify some sequences of characters whose appearance in the output stops the API from generating a further answer. Let’s say we specify that we include the word “END” in the stop sequences. If the hypothetical result generated by the LLM would be “START MIDDLE END TERMINATE” without the sequence, after adding the sequence, it would turn to “START MIDDLE.”

You can fine-tune the values of each parameter to tailor the generative model to your specific needs. The LLM Concepts Guide provides a deeper understanding of large language models (LLMs) and their configurable parameters.

Safety Settings

The parameters mentioned in the previous section are to control how the LLM decides the answer. However, it does not block any content that may be inappropriate for the user. For this, the API also lists safety settings that allow you to block certain kinds of content. You can also decide the probability threshold for these being blocked and set strict limits on some while being lenient on others.

Here is an example:

12345678var model = GenerativeModel( model: 'gemini-pro', apiKey: GenAIConfig.geminiApiKey, safetySettings: [ SafetySetting(HarmCategory.dangerousContent, HarmBlockThreshold.medium), SafetySetting(HarmCategory.hateSpeech, HarmBlockThreshold.high), ], );

In this example, we add two safety settings blocking dangerous content and hate speech with different thresholds. The categories you can add for safety settings are: dangerous content, hate speech, harassment, and sexually explicit content.

Text to Text Generation

The majority of users of Generative AI use text to text generation, meaning that they give a prompt as an input and get generated text as a response. For this purpose, you can create a GenerativeModel instance and use a model suited for text-to-text generation such as Gemini Pro.

The prompt is supplied as an instance of the Content class, and you can then use the model.generativeContent() method to query the API for generating a response:

12345678var model = GenerativeModel( model: 'gemini-pro', apiKey: GenAIConfig.geminiApiKey, ); final content = [Content.text("YOUR_PROMPT_HERE")]; final response = await model.generateContent(content);

Note that you can pass in the previously mentioned safety settings and generation configuration to the generateContent() request as well if you only want to use them for the single query:

123456789101112var model = GenerativeModel( model: 'gemini-pro', apiKey: GenAIConfig.geminiApiKey, ); final content = [Content.text("YOUR_PROMPT_HERE")]; final response = await model.generateContent( content, safetySettings: [...], generationConfig: GenerationConfig(...), );

Multimodal Generation

Multimodal generation involves generating outputs that incorporate information from multiple modalities, such as text, images, audio, or other forms of data. In this example, we supply an image and a text prompt to generate a response:

1234567891011121314151617181920212223// File selected from the image_picker plugin XFile? _image; var model = GenerativeModel( model: 'gemini-pro-vision', apiKey: GenAIConfig.geminiApiKey, ); var prompt = 'YOUR_PROMPT_HERE'; var imgBytes = await _image!.readAsBytes(); // Lookup function from the mime plugin var imageMimeType = lookupMimeType(_image!.path); final content = [ Content.multi([ TextPart(prompt), DataPart(imageMimeType, imgBytes), ]), ]; final response = await model.generateContent(content);

For this, we need to provide two or more parts for the content. Here, one is the text part and the other is the image part. We create a similar Content instance but use the Content.multi() constructor to indicate that we have multiple parts to the content. The DataPart class allows us to provide the actual image data to the model.

Creating a Chat Interface

Unlike simple text-to-text generation, chat needs to keep track of earlier messages and use them as context for generating further messages. You can use a Flutter chat SDK to get started. The process is quite similar to text-to-text generation, however, we also need to create a ChatSession instance through model.startChat() which starts a session:

12345678910var model = GenerativeModel( model: 'gemini-pro', apiKey: GenAIConfig.geminiApiKey, ); var chatSession = model.startChat(); final content = Content.text("YOUR_PROMPT_HERE"); var response = await chatSession.sendMessage(content);

Messages can be sent in the chat session with chatSession.sendMessage() .

The chat session also keeps track of the chat history and can be accessed through chatSession.history:

12345678910111213141516var model = GenerativeModel( model: 'gemini-pro', apiKey: GenAIConfig.geminiApiKey, ); var chatSession = model.startChat(); for (var content in chatSession.history) { for (var part in content.parts) { if(part is TextPart) { print(part.text); } else if (part is DataPart) { print('Data Part'); } } }

Using Embeddings

Internally, LLMs store a large map of the meaning, usage, and relationships between many words/bits of text. This also exists in other models, such as Diffusion models for image generation, allowing them to better understand what an image is supposed to depict.

Textual embeddings, in essence, are numerical representations of words, phrases, or entire documents derived from natural language data. These representations store semantic and syntactic properties of text. The concept is grounded in the idea that words with similar meanings or contexts should have similar numerical representations.

LLMs consider the surrounding words when encoding each word's representation. This allows them to capture the nuances of word meaning in different contexts. For example, the word "bank" can refer to a financial institution or the side of a river, and LLMs can differentiate between these meanings based on context.

The embedding model provided by Google gives us this numerical representation for the input bit of data. We can use this data to infer the similarity between any two bits or their relationships.

Using an embedding model is similar to text-to-text generation. The ‘embedding-001’ model, for instance allows us to convert the provided bit of text to the vector representation:

12345678var model = GenerativeModel( model: 'embedding-001', apiKey: GenAIConfig.apiKey, ); final content = Content.text("YOUR_PROMPT_HERE"); final response = await model.embedContent(content);

To learn more about embeddings, try out this course by Google.

Conclusion

You've now explored the principles of Google's Generative AI, the Gemini SDK, and the implementation of AI chatbot systems with text generation and photo reasoning capabilities. Generative AI offers a wide array of applications that can significantly enhance the user experience within your app. For additional insights and examples, be sure to visit the GitHub repositories linked below:

If you have any questions or feedback, you can find the author of this article on Twitter @DevenJoshi7 or GitHub. If you’d like to stay updated with Stream, follow us on X @getstream_io for more great technical content.