In 2024, Facebook deleted 5.8 million posts because of hate speech infractions. This is only one platform and a single violation type.

Every day, platforms and applications built for user-generated content (UGC) receive huge volumes of text, audio, image, and video uploads. Most of it is benign and aligns with company policies, but some may be unsafe or illegal.

To maintain safety and fairness, the companies managing these platforms must establish and enforce guidelines and regulations as defined in a content moderation policy.

In this article, you'll learn the key components of a successful content moderation policy.

What Is a Content Moderation Policy?

A content moderation policy is a comprehensive internal set of rules that defines what is allowed, restricted, or banned on a platform. This policy is far more detailed than what the public sees.

It should not be confused with two related but distinct concepts: community guidelines and enforcement logic.

Community guidelines are a pared-down, public-facing version of the policy. They educate users on what behavior is permitted and prohibited on the platform.

Enforcement logic puts the policy into practice by establishing the processes for flagging, removal, and appeals.

Community guidelines are visible to users, while enforcement logic works behind the scenes to apply the rules at scale. The policy is what defines both.

How to Craft a Content Moderation Policy

Building a content moderation policy starts with understanding your platform, your users, and the types of content you expect. A strong policy balances clarity with flexibility. It should guide moderators, satisfy regulators, and help users feel safe without stifling authentic engagement.

Here's a quick reference to the core components of a strong content moderation policy. Use this as a checklist before diving deeper into each section.

| Step | Focus | Description |
|---|---|---|
| Understand the Platform and Its Users | Audience & Content | Know who will create the content and what that content should look like. |
| Define Non-Permissible Content in Depth | Rules | Lay out what is and isn’t allowed. |
| Address Cultural and Linguistic Sensitivities | Context | Account for differences in culture, language, religion, and more. |
| Refer to Applicable Laws and Compliance Needs | Legal | Ensure your platform complies with relevant laws and regulations. |
| Define Your Moderation Approach | Strategy | Determine the when, who, and how of moderation. |
| Define Enforcement Actions and Escalation Tiers | Consequences | Decide what actions apply to violations and how to escalate repeat offenses. |
| Define User-Driven Reporting Methods | User Input | Provide clear ways for users to report harmful content. |
| Design a Fair and Transparent Appeals Process | Accountability | Inform users how to appeal moderation decisions. |
| Enable Policy Updates, Changelogs, and Scalability | Flexibility | Update rules as needed and log those changes as your product and audience grow. |
| Define Internal Governance and Ownership | Roles | Clarify responsibilities across teams and departments. |
| Take Care of Human Moderators | Well-being | Protect the mental health and safety of moderators. |
| Draft and Publish Community Guidelines | Communication | Share user-friendly rules, enforcement actions, and expectations. |
| Use Data to Improve Your Policy | Iteration | Review moderation metrics and audits to refine your policy. |

Understand the Platform and Its Users

The first step in creating a content moderation policy is to define exactly what kind of UGC your platform will host. Will it be livestreams, social media posts, community discussions, player-uploaded gaming assets, or something else entirely? The format matters because each comes with its own challenges.

A livestream may require rapid response systems, while a news site must focus on moderating comments for violations like spam and hate speech.

You also need to understand your users: their age, region, literacy, and cultural background, as well as why they joined the platform. Are they there to network, learn, be entertained, or express themselves? This information helps you understand what they consider acceptable.

For example, a Gen Z-heavy social app will approach content moderation very differently from a professional forum. A 22-year-old student might want to share silly memes and be comfortable with edgy humor, while a 45-year-old business professional will likely expect a work-centric platform to keep conversations respectful.

Creating audience personas can ground your thinking and give you a clear picture of your users and their expectations.

Define Non-Permissible Content in Full Depth

Most platforms have a basic list of what is not allowed. While details vary, the main categories usually include:

-

Violence: UGC showing physical harm, injury, or death.

-

Bullying and harassment: Actions from individuals or coordinated groups that intend to intimidate, shame, or harm a person.

-

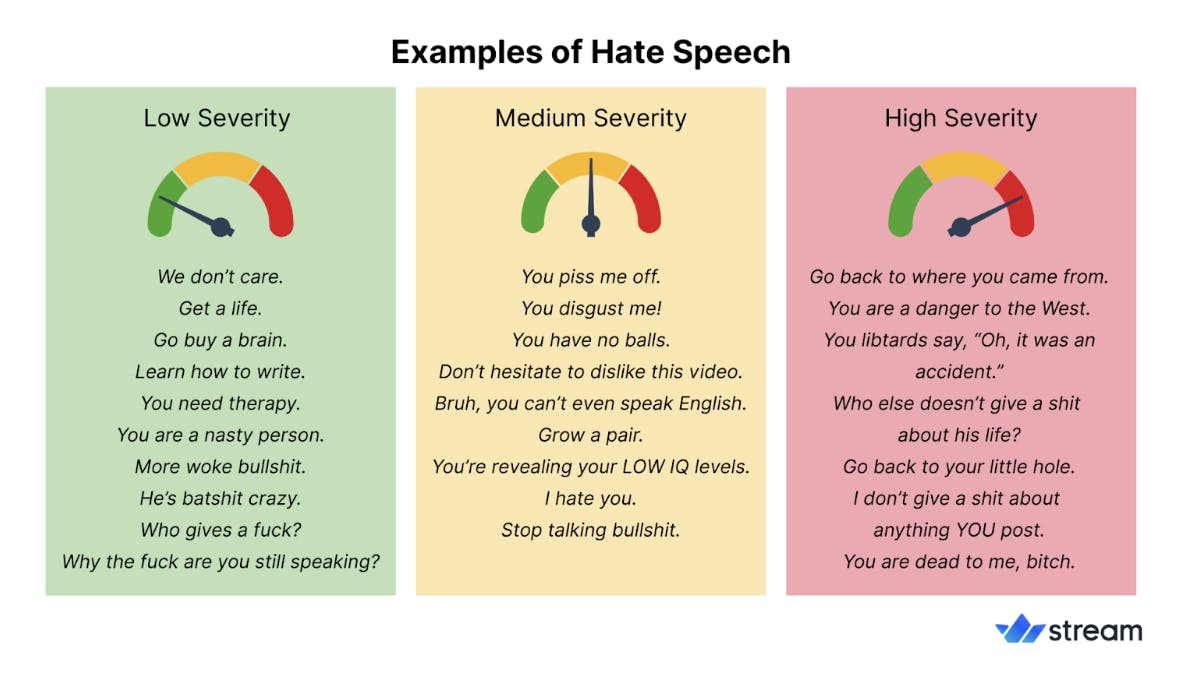

Hate speech: Offensive content meant to attack or dehumanize based on race, religion, gender, or other protected traits (like slurs, coded language, and dog whistles).

-

Misinformation: False or misleading information that can cause harm or confusion. This includes inaccurate claims about public health, political processes, or fabricated news stories.

-

Child sexual abuse material (CSAM): Any sexual or exploitative representation of minors in images, videos, or written descriptions.

-

Self-harm / Suicide: Content that promotes, encourages, or instructs on self-injury or ending one's life.

-

Sexual content: Material depicting sexual acts or explicit nudity.

-

Fraud / Spam / Scams: Attempts to deceive people for financial or personal gain, like unwanted mass messaging and deceptive schemes.

-

Restricted goods: UGC promoting, selling, or distributing banned, illegal, or dangerous products like firearms, narcotics, and fake goods.

-

Intellectual property abuse: Using copyrighted material without permission.

-

Political content: Messages promoting a political agenda on behalf of a government or political group.

However, don't stop at the typical list. Define the context, intent, and thresholds for each category.

Violence in a news documentary is not the same as a livestream of a real attack. Satire, journalism, and educational content may appear to break the rules on paper, yet still be allowed because they serve the public interest.

For these edge cases, moderators can use a decision tree that maps possible scenarios to outcomes, so similar cases are decided consistently.
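As an illustration, here is a minimal Python sketch of such a decision tree for violent content. The signals (`is_real_event`, `is_live`, `context`, `glorifies_violence`) and the outcome labels are hypothetical; a real tree would use your own policy categories and be reviewed by your policy and legal teams.

```python
from dataclasses import dataclass


@dataclass
class ViolentPost:
    # Hypothetical signals, assumed to be set by a reviewer or upstream tool.
    is_real_event: bool       # depicts a real attack, not fiction or gameplay
    is_live: bool             # streamed as it happens
    context: str              # "news", "educational", "satire", or "none"
    glorifies_violence: bool  # praises or encourages the harm shown


def decide(post: ViolentPost) -> str:
    """Walk a small, illustrative decision tree and return an outcome label."""
    if post.is_real_event and post.is_live:
        return "remove_and_escalate"       # a real attack streamed in real time
    if post.glorifies_violence:
        return "remove"                    # harmful intent outweighs claimed context
    if post.context in {"news", "educational"}:
        return "allow_with_warning_label"  # public-interest context
    if post.context == "satire":
        return "human_review"              # satire is easy to misread
    if post.is_real_event:
        return "remove"                    # real harm with no mitigating context
    return "allow"                         # fictional or stylized violence


# Usage: a news report on a past attack stays up behind a warning label.
print(decide(ViolentPost(is_real_event=True, is_live=False,
                         context="news", glorifies_violence=False)))
# prints allow_with_warning_label
```

Ordering matters here: the most severe branches are checked first, so a claimed "news" context can't rescue a live attack or content that glorifies violence.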

And don't forget the risks that are unique to your platform. A gaming forum might get flooded with violent memes or account phishing attempts. A livestream app might see a political scandal spread in real time.

Your policy must match what's actually happening in your space.

Address Cultural and Linguistic Sensitivities

Norms vary by region, religion, language, dialect, and many other factors.

Linguistically, casual banter in one dialect might sound like abuse to speakers of the same language in a different region. What counts as hate speech in one language might have a softer meaning in another.

Translating idioms and phrases from their original language can change the meaning and tone, causing confusion or offense.

Culturally, some topics can be legal yet still spark debates, such as LGBTQ+ rights or religious imagery.

Given these factors, platforms should research these differences and apply region-specific content guidelines. Using culturally aware translation standards can help preserve meaning and reduce disputes. Additionally, offering context labels, content warnings, or optional viewing settings gives users more control over the content they engage with.
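One way to apply region-specific guidelines is a global baseline with per-region overrides. The sketch below assumes that layout; the region keys, categories, and actions are hypothetical placeholders, not recommendations for any real market.

```python
# Hypothetical global baseline: category -> action.
GLOBAL_RULES = {
    "hate_speech": "remove",
    "religious_imagery": "allow",
    "casual_profanity": "allow",
}

# Hypothetical regional overrides layered on top of the baseline.
REGION_OVERRIDES = {
    "region_a": {"religious_imagery": "content_warning"},
    "region_b": {"casual_profanity": "opt_in_view"},
}


def rule_for(category: str, region: str) -> str:
    """Return the action for a category, preferring a regional override."""
    return REGION_OVERRIDES.get(region, {}).get(category, GLOBAL_RULES[category])


print(rule_for("religious_imagery", "region_a"))  # content_warning
print(rule_for("religious_imagery", "region_c"))  # allow (no override)
```

Keeping overrides small and explicit makes it easy to audit exactly where a region departs from the global policy.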

Refer to Applicable Laws and Compliance Needs

Most jurisdictions have laws governing how platforms handle user data and online content. Here are some examples from different regions:

- Children's Online Privacy Protection Act (COPPA): Regulates the online collection and use of personal data from children under 13 in the U.S.
- Digital Services Act (DSA): Sets EU rules for how online platforms handle illegal content, including notice-and-action procedures and transparency obligations.
- IT Rules 2021: Govern online content compliance in India, focusing on grievance redressal and content takedowns.
- General Data Protection Regulation (GDPR): Governs the processing of personal data of people in the EU, which applies whenever moderation involves accessing or processing user data.
- California Consumer Privacy Act (CCPA): Safeguards California consumers' data and gives them the right to access, delete, and opt out of the sale of their personal information.

These laws are designed so that platforms can't:

- Exploit users' personal information without their knowledge.
- Ignore illegal or harmful content when they become aware of it.
- Avoid responsibility for how their algorithms amplify certain content.

Not following these rules can trigger consequences. For instance, under the GDPR, penalties can reach up to €20 million or 4% of a company's annual global turnover, whichever is higher. Apart from fines, violations can lead to lawsuits and loss of user trust.
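The GDPR cap quoted above is simple arithmetic: whichever is larger of €20 million and 4% of annual global turnover. The sketch below computes only that upper bound; actual fines are set case by case by regulators and can be much lower.

```python
def gdpr_max_fine_eur(annual_global_turnover_eur: int) -> float:
    """Upper bound of the GDPR fine tier quoted in the text:
    the greater of EUR 20 million or 4% of annual global turnover."""
    return max(20_000_000, annual_global_turnover_eur * 4 / 100)


print(gdpr_max_fine_eur(100_000_000))    # 20000000 (4% would be only 4 million)
print(gdpr_max_fine_eur(2_000_000_000))  # 80000000.0
```

For smaller companies the fixed €20 million figure dominates; above €500 million in turnover, the 4% share becomes the binding cap.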

Define Your Moderation Approach

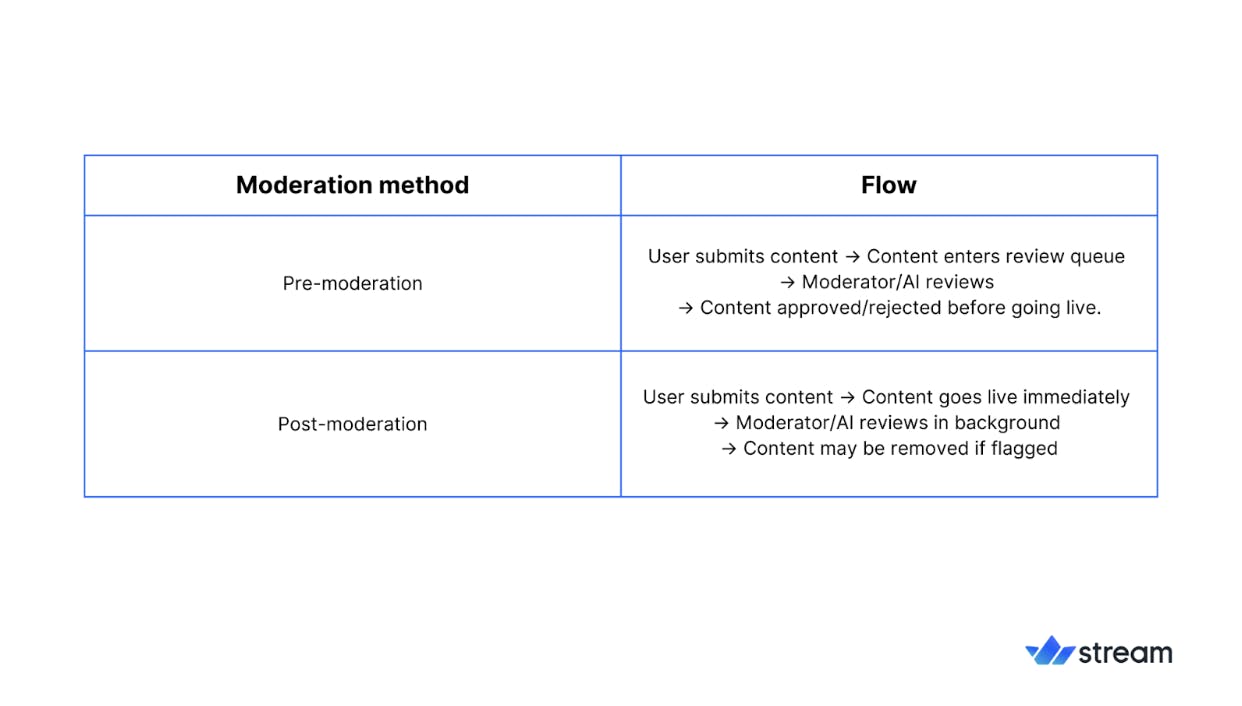

There are two main ways you can moderate UGC online: before or after it's posted.

1. Pre-Emptive Moderation (Before Upload)

This method checks all text, images, or videos before users can post, blocking harmful material before anyone sees it.

It uses filters, artificial intelligence (AI) tools, and keyword checks to detect issues, keeping unsafe content off the site entirely. Many social media platforms also use moderation APIs to scan uploads in real time and flag risky material.

2. Reactive Moderation (After Upload)

UGC is posted without review but can be flagged or removed after users or systems find problems requiring action.

This allows faster sharing, while community reports and automated scanning tools still catch and remove harmful posts.
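To make the difference between the two approaches concrete, here is a minimal Python sketch of both flows. `is_scam` is a hypothetical toy check standing in for whatever filter, AI tool, or keyword check you use, and the in-memory `published` list stands in for the live feed.

```python
from typing import Callable, List

# Any function that returns True when content looks harmful.
Check = Callable[[str], bool]


def pre_moderate(post: str, looks_harmful: Check, published: List[str]) -> bool:
    """Pre-emptive: check first and publish only if the check passes."""
    if looks_harmful(post):
        return False  # blocked before anyone sees it
    published.append(post)
    return True


def post_moderate(post: str, published: List[str]) -> None:
    """Reactive: publish immediately; review happens later."""
    published.append(post)


def handle_report(post: str, looks_harmful: Check, published: List[str]) -> bool:
    """Reactive follow-up: re-check a reported post and remove it if needed."""
    if post in published and looks_harmful(post):
        published.remove(post)
        return True
    return False


def is_scam(text: str) -> bool:
    """Toy check used only for this example."""
    return "scam" in text.lower()


feed: List[str] = []
pre_moderate("Scam link inside", is_scam, feed)    # blocked, never appears
post_moderate("Obvious scam offer", feed)          # visible until reported
handle_report("Obvious scam offer", is_scam, feed)
print(feed)  # []
```

The trade-off shows up in where the check sits: pre-emptive moderation delays every post, while reactive moderation leaves harmful posts visible until someone reports them.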

Your policy should also lay out who and what moderates your users' content.

Automated Moderation

Automated systems use AI to scan content for threats or violations, working quickly across millions of posts daily. They also help to:

- Reduce the workload on human reviewers by handling routine cases
- Detect sentiment to catch early signs of harassment or abuse
- Spot unusual activity patterns through anomaly detection

For example, Gumtree saw a 25% reduction in fraud messages by implementing AI moderation.

This approach offers fast, consistent checks, but might miss complex cases that need human judgment or local cultural understanding.

Human Moderation

Trained moderators review suspicious content to make fair and balanced decisions. They handle sensitive, nuanced situations AI can't fully judge, ensuring context is respected across the platform's communities.

Hybrid Moderation

AI tools filter most UGC automatically, and human moderators step in for uncertain or high-risk cases. This combines machine speed with human judgment, reducing mistakes while keeping moderation efficient even on large platforms.
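One way to implement hybrid moderation is to route each item by the confidence score an AI classifier returns. This sketch assumes a hypothetical score between 0 and 1 and illustrative thresholds (`allow_below`, `remove_at`); real values should be tuned against your own labeled data.

```python
def route(harm_score: float, high_risk: bool = False,
          allow_below: float = 0.30, remove_at: float = 0.95) -> str:
    """Route one item by a hypothetical classifier score in [0, 1].

    Thresholds are illustrative defaults, not recommendations.
    """
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score < allow_below:
        return "publish"        # confidently benign
    if high_risk:
        return "human_review"   # high-risk categories always get a human look
    if harm_score >= remove_at:
        return "auto_remove"    # confidently harmful, routine category
    return "human_review"       # uncertain middle band


for score, risky in [(0.10, False), (0.50, False), (0.99, False), (0.99, True)]:
    print(score, risky, route(score, high_risk=risky))
```

Widening the middle band sends more items to humans (fewer mistakes, more workload); narrowing it does the opposite.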

Community Moderation

Platform members flag, review, or remove content based on the rules, often earning trust or special permissions over time. This works well in active communities where members care about keeping conversations welcoming. For example, volunteer moderators on Reddit set custom rules that fit each community's culture.

Define Enforcement Actions and Escalation Tiers

When someone breaks the rules, you can choose from several enforcement actions, scaled to the severity of the violation and the user's history. Some effective actions are:

- Soft warnings or labels politely tell users about the issue, giving them a clear signal to change their behavior right away.
- Temporary bans block the user for a set period.
- Permanent bans suspend the user's account indefinitely.
- Content removal deletes harmful posts or comments so other users don't see them.
- Shadow banning hides a user's posts from some or all other users without their knowledge, limiting the reach of harmful content.
- Legal escalation involves law enforcement when someone does something illegal.
- Blocklists add violating accounts or phrases to a list, so future posts from those accounts or containing those phrases are stopped automatically before reaching the site.
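Blocklists are usually the simplest automated check. The sketch below normalizes text before matching so trivial changes in case, spacing, or Unicode width don't slip through. The account and phrase entries are hypothetical, and plain substring matching will still miss deliberate misspellings and can flag innocent uses, so treat it as a first-pass filter only.

```python
import re
import unicodedata

BLOCKED_ACCOUNTS = {"spam_bot_42"}          # hypothetical entries
BLOCKED_PHRASES = {"free crypto giveaway"}  # hypothetical entries, stored normalized


def normalize(text: str) -> str:
    """Fold Unicode compatibility forms and case, then collapse whitespace."""
    text = unicodedata.normalize("NFKC", text).casefold()
    return re.sub(r"\s+", " ", text).strip()


def is_blocked(author: str, text: str) -> bool:
    """Stop a post if its author or any blocked phrase is on the blocklist."""
    if author in BLOCKED_ACCOUNTS:
        return True
    cleaned = normalize(text)
    return any(phrase in cleaned for phrase in BLOCKED_PHRASES)


print(is_blocked("alice", "FREE   Crypto\nGiveaway, click now"))  # True
```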

You must also decide how to handle repeat offenders. For example, if a user repeatedly harasses another person, the escalation sequence could be:

- First offense: Soft warning with an explanation of the rules.
- Second offense: 48-hour ban and content removal.
- Third offense: Permanent ban.
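The escalation sequence above maps naturally onto a lookup table indexed by the number of prior offenses. This Python sketch assumes a hypothetical in-memory strike counter and hypothetical action names. It never expires strikes, which is a choice your policy should make explicitly.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# (action, ban length in hours or None), mirroring the example sequence.
LADDER: List[Tuple[str, Optional[int]]] = [
    ("soft_warning", None),
    ("remove_content_and_ban", 48),
    ("permanent_ban", None),
]

# Hypothetical in-memory store: user ID -> number of prior offenses.
strikes: Dict[str, int] = defaultdict(int)


def record_offense(user_id: str) -> Tuple[str, Optional[int]]:
    """Return the action for a new offense, then count it against the user."""
    step = min(strikes[user_id], len(LADDER) - 1)  # stay on the last rung
    strikes[user_id] += 1
    return LADDER[step]


for _ in range(3):
    print(record_offense("user_123"))
# ('soft_warning', None)
# ('remove_content_and_ban', 48)
# ('permanent_ban', None)
```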

Define User-Driven Reporting Methods

Users should also have a way to report bad online behavior. For instance, YouTube gives copyright holders a web form in 80+ languages where they can request the removal of stolen content.

Many platforms let users flag a post or block an account from contacting them, seeing their profile, or commenting on their posts. Users can also mute others, which hides the muted account's activity from their own view without blocking or removing it.

If an account exists only to scam, harass, or spread harmful content, users can report the whole profile. Some platforms let them attach screenshots or other evidence, helping moderators fully understand what happened before making a decision.

When these tools are easy to find and use, people feel safer and more confident using your platform.

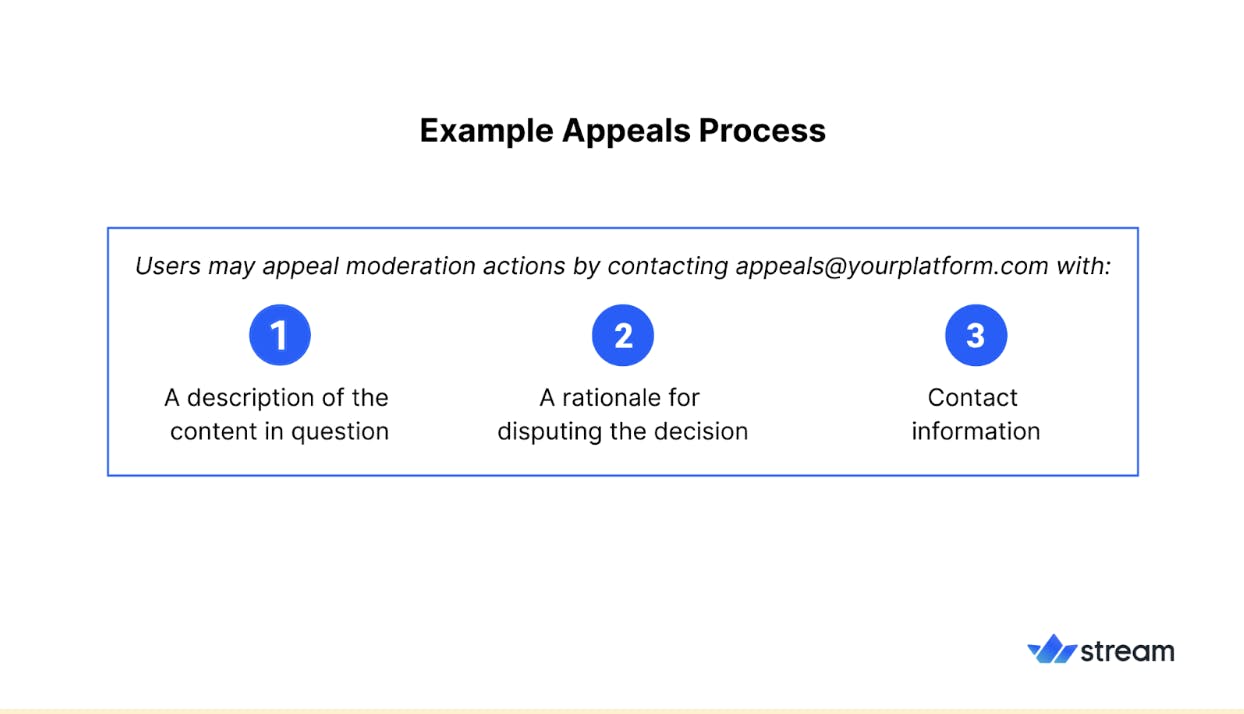

Design a Fair and Transparent Appeals Process

Designing a fair, transparent appeals process is one of the best ways a platform can show it values both users and accountability.

Clear instructions guide users on exactly how to request a review or "second look" at their case.

For example, if you have an appeal form, explicitly tell them what to include: the post ID, why it needs to be evaluated, and any screenshots that support their claim. And don't forget to offer the form in multiple languages and formats, so nobody is excluded.

Once someone submits an appeal, commit to a response time. Aim to reply within 24 to 48 hours whenever possible.

For more complex cases, be honest about delays and explain the reason. Even small status updates matter; a "we've received your request, and it's in review" message can reassure the account holder that you've heard their concerns.
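Tracking each appeal against the response-time target makes it easy to trigger status updates automatically. This sketch assumes the 48-hour upper bound from the text; the status names and the wording of the delay and closing messages are hypothetical placeholder copy.

```python
from datetime import datetime, timedelta, timezone

TARGET = timedelta(hours=48)  # upper end of the 24-48 hour goal

# Placeholder copy for the status updates users receive.
MESSAGES = {
    "in_review": "We've received your request, and it's in review.",
    "delayed": "Your appeal needs more time than usual. We'll update you as soon as we can.",
    "closed": "We've reached a decision on your appeal. The reasoning is below.",
}


def appeal_status(received_at: datetime, now: datetime, resolved: bool) -> str:
    """Classify an appeal so the right status message can be sent."""
    if resolved:
        return "closed"
    if now - received_at > TARGET:
        return "delayed"  # past target: explain the delay honestly
    return "in_review"


received = datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc)
status = appeal_status(received, received + timedelta(hours=50), resolved=False)
print(status, "->", MESSAGES[status])
```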

Also, certain types of UGC deserve a guaranteed human review. Anything tied to cultural nuance, satire, legal disputes, or potential real-world harm should not be left to automated systems alone. In tricky cases, bring in more than one perspective, such as the Trust & Safety team plus a regional expert, so decisions aren't made in isolation.

When you close an appeal, explain the decision and the reasoning behind it, whether it goes in the reporting or the reported party's favor.

Behind the scenes, log every appeal and its result, so your own team can spot patterns and fix underlying issues. If the same types of decisions keep getting overturned, take that as a signal that your rules or enforcement need work.
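A simple way to spot rules that need work is to compute overturn rates per policy category from the appeal log. The threshold (25%) and minimum sample size (20 appeals) below are illustrative assumptions, chosen so that a handful of appeals can't trigger a review on their own; the sample log is hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def overturn_rates(appeals: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of appeals overturned per policy category."""
    total: Counter = Counter()
    overturned: Counter = Counter()
    for category, was_overturned in appeals:
        total[category] += 1
        if was_overturned:
            overturned[category] += 1
    return {cat: overturned[cat] / total[cat] for cat in total}


def categories_to_review(appeals: Iterable[Tuple[str, bool]],
                         threshold: float = 0.25,
                         min_appeals: int = 20) -> List[str]:
    """Categories with enough appeals and an overturn rate above the threshold."""
    appeals = list(appeals)
    counts = Counter(cat for cat, _ in appeals)
    rates = overturn_rates(appeals)
    return sorted(cat for cat, rate in rates.items()
                  if counts[cat] >= min_appeals and rate > threshold)


# Hypothetical log of (category, overturned?) pairs.
log = [("hate_speech", True)] * 10 + [("hate_speech", False)] * 20 + [("spam", False)] * 30
print(categories_to_review(log))  # ['hate_speech']
```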

Enable Policy Updates, Changelogs, and Scalability

Content rules are mutable. They adapt to changes in laws, trends, and online behaviors. This is why you must review policies on a set schedule.

For instance, you might revisit the policy once a quarter to make sure enforcement is consistent, fair, and in line with what's best for the product and its audience.

In addition, keep a public changelog, so users know what's changed, why, and how it will affect them. Also, maintain an internal changelog to help moderators apply the newest rules correctly across the platform.
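One way to keep the public and internal changelogs in sync is to store a single record per change and render two views of it. The field names and the sample entry below are hypothetical; the point is that moderator-only notes never leak into the public entry.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PolicyChange:
    version: str
    effective: date
    summary: str          # public: what changed
    reason: str           # public: why it changed
    user_impact: str      # public: how it affects users
    moderator_notes: str  # internal only: how to apply the new rule

    def public_entry(self) -> str:
        return (f"v{self.version} ({self.effective.isoformat()}): {self.summary} "
                f"Why: {self.reason} What this means for you: {self.user_impact}")

    def internal_entry(self) -> str:
        return f"{self.public_entry()} Moderator notes: {self.moderator_notes}"


change = PolicyChange(
    version="2.1",  # hypothetical example entry
    effective=date(2025, 3, 1),
    summary="Clarified what counts as sharing a personal address.",
    reason="The old wording was applied inconsistently.",
    user_impact="Posts revealing someone's home address will be removed.",
    moderator_notes="Partial addresses that identify a home also qualify.",
)
print(change.public_entry())
```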

Finally, remember that good policies must work at scale. YouTube's copyright rules have to handle millions of uploads every day. Scalable rules often use clear definitions, priority levels, and flexible guidelines so moderators can act quickly without losing fairness as your platform grows.

Define Internal Governance and Ownership

Internal governance is simply about knowing who is responsible when things get challenging. Without that, even the best moderation workflows can feel clumsy.

Every department should understand where it steps in, because clear ownership matters when a case turns urgent.

The legal team's role is to interpret the fine print of applicable laws and map it to the platform's rules. It should also warn you when an action, or inadequate enforcement, could backfire and expose you to high-risk situations, such as failing to address unlawful behavior before it spreads. For example, platforms like Roblox have faced regulatory and public pressure for failing to adequately protect minors, underscoring how quickly legal risks can escalate when enforcement falls short.

The trust and safety team sets the tone for your entire policy. They're the ones watching patterns, rewriting rules when new abuse methods show up, and training the moderation team to stay consistent.

The engineering team connects moderation tools, fixes outages before anyone notices, and builds dashboards that show real-time spikes in risky activity.

The public relations team shapes statements that are factual yet calm, protecting the brand without revealing user details.

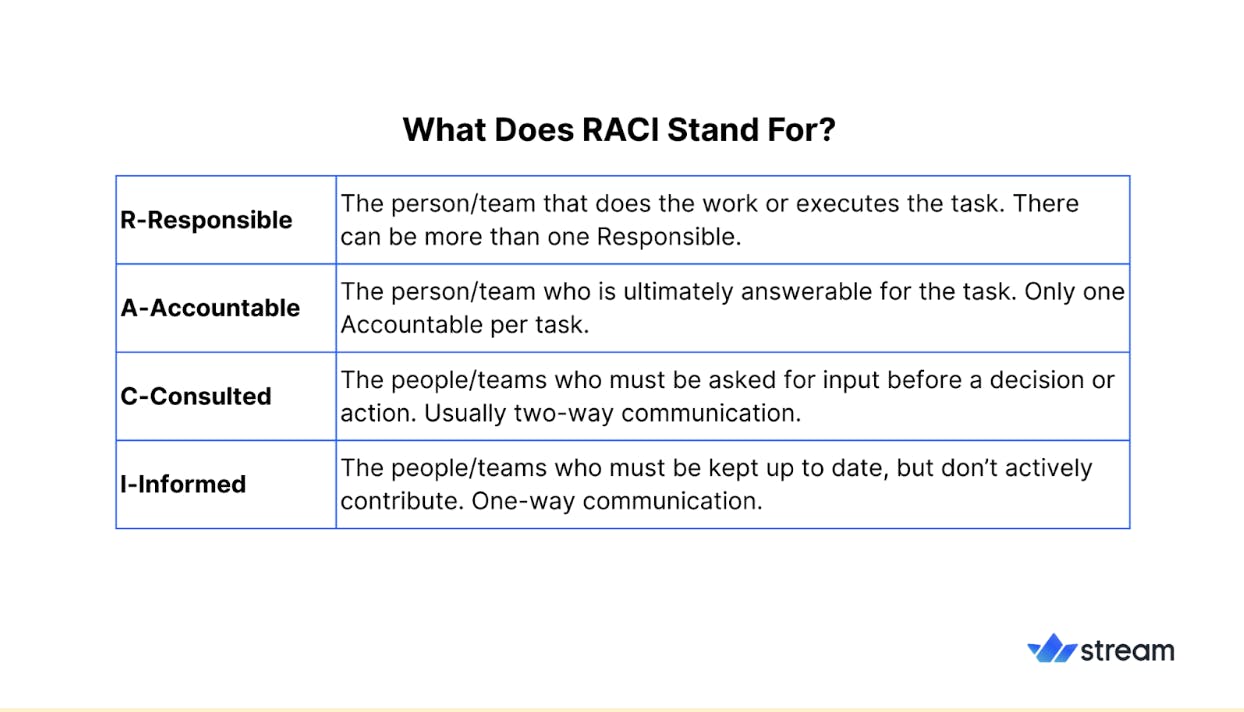

A Responsible, Accountable, Consulted, Informed (RACI) chart can further clarify each team's role in moderating your platform.

A RACI chart is a simple table that shows who plays which role in a process:

- Responsible: The person or people who actually do the work, like the moderator reviewing a flagged post.
- Accountable: The individual or group who has the final say and signs off on the outcome, like a trust and safety lead.
- Consulted: The team members whose input shapes the decision, such as legal for high-risk cases.
- Informed: Those in the organization who just need to know what happened, like the product and PR teams after a major policy change.

The chart clarifies, in advance, who does what for each kind of task or case. Instead of debating ownership during a crisis, you can point to the chart and act quickly to resolve it.
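A RACI chart can also live as data, which lets internal tooling answer "who do I consult?" during an incident and check the chart for gaps. The tasks and role names below are hypothetical. The gap check follows the common RACI convention that each task has at least one Responsible party and exactly one Accountable party (which may be a single group).

```python
from typing import Dict, List

# Hypothetical tasks and roles; letters are Responsible, Accountable, Consulted, Informed.
RACI: Dict[str, Dict[str, List[str]]] = {
    "review_flagged_post": {"R": ["moderator"], "A": ["trust_and_safety_lead"],
                            "C": [], "I": []},
    "high_risk_case": {"R": ["senior_moderator"], "A": ["trust_and_safety_lead"],
                       "C": ["legal"], "I": ["pr"]},
    "major_policy_change": {"R": ["trust_and_safety"], "A": ["head_of_trust_and_safety"],
                            "C": ["legal", "engineering"], "I": ["product", "pr"]},
}


def who(task: str, letter: str) -> List[str]:
    """Look up who holds a RACI role for a task."""
    return RACI[task].get(letter, [])


def find_gaps(chart: Dict[str, Dict[str, List[str]]]) -> List[str]:
    """Flag tasks without a Responsible party or without exactly one Accountable party."""
    gaps = []
    for task, roles in chart.items():
        if not roles.get("R"):
            gaps.append(f"{task}: no Responsible")
        if len(roles.get("A", [])) != 1:
            gaps.append(f"{task}: needs exactly one Accountable")
    return gaps


print(who("high_risk_case", "C"))  # ['legal']
print(find_gaps(RACI))             # []
```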

Take Care of Human Moderators

Moderation can feel emotionally heavy. Removing hate speech, violent images, and other malicious content takes a toll on your mods' well-being.

Consider providing confidential mental health support to moderators, like a certain number of paid counseling sessions per year or access to emergency support after distressing incidents. Lay these resources out clearly in the policy.

Design shift patterns that limit exposure. For example, rotate reviewers out of graphic content queues after two hours or 20 cases, whichever comes first, then move them to low-risk tasks to recover.

Track cumulative exposure per person, so patterns are visible and managers can intervene early. Use automation to remove routine, low-risk items from human queues.
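The rotation rule and cumulative tracking described above can be sketched as a small per-reviewer counter. The two-hour and 20-case limits come from the example in the text; the class, field, and method names are hypothetical.

```python
from dataclasses import dataclass

MAX_MINUTES = 120  # two hours, from the example in the text
MAX_CASES = 20     # 20 cases, from the example in the text


@dataclass
class ReviewerExposure:
    stint_minutes: int = 0     # time in the graphic queue since last rotation
    stint_cases: int = 0       # graphic cases since last rotation
    cumulative_cases: int = 0  # total graphic cases, kept visible for managers

    def log_graphic_case(self, minutes: int) -> None:
        self.stint_minutes += minutes
        self.stint_cases += 1
        self.cumulative_cases += 1

    def should_rotate(self) -> bool:
        """Rotate after two hours or 20 cases, whichever comes first."""
        return self.stint_minutes >= MAX_MINUTES or self.stint_cases >= MAX_CASES

    def rotate_to_low_risk(self) -> None:
        """Reset the current stint; cumulative exposure is kept."""
        self.stint_minutes = 0
        self.stint_cases = 0


reviewer = ReviewerExposure()
for _ in range(20):
    reviewer.log_graphic_case(minutes=4)
print(reviewer.should_rotate())  # True: 20 cases reached after 80 minutes
```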

Use anonymized pulse checks to spot burnout and track retention and overtime as early warning signals. Compensate moderators fairly and offer career paths like transitioning to a senior moderator or a quality reviewer. Respect, pay, and progression matter for quality and institutional memory.

If you outsource some moderation work, use specialist agencies that provide training, local language capacity, and formal well-being programs. Treat outsourced teams as full partners and share playbooks, data, and mental health resources with them.

Draft and Publish Community Guidelines

Community guidelines are the public-facing version of your internal policy. Here are a few things to consider when drafting them:

- Use short sentences and everyday words, and avoid legal terms. Test translations with native-speaking editors so the meaning stays intact.
- Give translators context by adding notes, screenshots, or sample posts, so tone and intent survive localization.
- Match the tone to your audience. A creator community might respond better to constructive examples ("Here's how to credit another artist") than to warnings alone. A professional forum might prefer direct, formal phrasing.
- Pair each major prohibition with an enforcement step and a short reason. Example: "Posting personal addresses results in content removal and account suspension for violating privacy and creating a safety risk."
- Make the appeals process visible on every enforcement notice, so users know their options immediately.
- Keep a public changelog of rule updates, along with a brief explanation. For big changes, invite feedback through public comments.
- Test major rewrites in a few communities first, track appeal rates and sentiment, then expand or adjust before a platform-wide rollout.

Use Data to Improve Your Policy

Reviewing your moderation data regularly shows how well your rules work in the real world.

If several reports come in about something your current policy doesn't cover, the existing rules probably haven't kept up. Similarly, if individual moderators act on the same violation differently and appeals skyrocket, revisit the wording of the moderator handbook or schedule a team-wide training session.

Audits can reveal where teams interpret rules differently or where an automated filter is quietly catching safe posts along with harmful ones.

Patterns matter, too. Maybe certain violations spike every Friday night. The more you dig into these patterns, the easier it is to tweak the policy and improve its accuracy.
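Finding patterns like a Friday-night spike can start with simple counting. This sketch buckets violation timestamps by weekday and hour and flags any bucket with at least twice the average count of the non-empty buckets. The `factor` value and the sample timestamps are illustrative assumptions; a production system would compare against a longer historical baseline.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, List, Tuple

DAYS = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")  # datetime.weekday() order


def spike_slots(timestamps: Iterable[datetime],
                factor: float = 2.0) -> List[Tuple[str, int]]:
    """Return (weekday, hour) buckets with at least `factor` times the mean
    count of the non-empty buckets."""
    counts = Counter((DAYS[ts.weekday()], ts.hour) for ts in timestamps)
    if not counts:
        return []
    mean = sum(counts.values()) / len(counts)
    return sorted(slot for slot, n in counts.items() if n >= factor * mean)


# Hypothetical violation timestamps: a cluster on Friday night, a few stray ones.
events = [datetime(2025, 1, 3, 22, m) for m in range(10)]  # Friday 22:00-22:09
events += [datetime(2025, 1, 6, 10), datetime(2025, 1, 7, 14), datetime(2025, 1, 8, 9)]
print(spike_slots(events))  # [('Fri', 22)]
```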

Finally, if you make visible updates based on the data and explain them in plain language, people notice. It shows your company is paying attention to the community, not just enforcing a set of rules you wrote some time back without a second thought.

Conclusion

An effective content moderation policy is the foundation for building a platform people can rely on. Whether you're running a global social app or a niche community, the policy can shape how moderators act and how users engage.

Remember that no two platforms have the same needs, so your policy must reflect your audience and their risk profile.

Moderation is an ongoing commitment. As your community grows, revisit your policy with fresh insight, real data, and conversations. The right moderation tools and services can support that work.