There was a time when the only weapon in a chat moderator's arsenal was a simple keyword list. You added new words and phrases to your filters as they came up, always in reactive mode. Maybe you had regexes to help. Perhaps you built out a team.

You were always chasing the latest slang, the newest way to spell spam with special characters, or the creative workarounds that spread through bad-actor networks faster than you could update your blocklists. Moderators were always playing defense on their own platforms.

But that was then. The landscape has shifted: moderators can now anticipate and prevent harm, catching coded language and coordinated attacks as they unfold rather than after the damage is done.

Today's chat moderation stack bears little resemblance to those early keyword filters. Modern tools use large language models (LLMs) to understand sarcasm and context. They detect harassment patterns across entire conversations, not just individual messages. They analyze images for embedded text, scan video streams in real time, and predict when a conversation is about to turn toxic before it actually does.

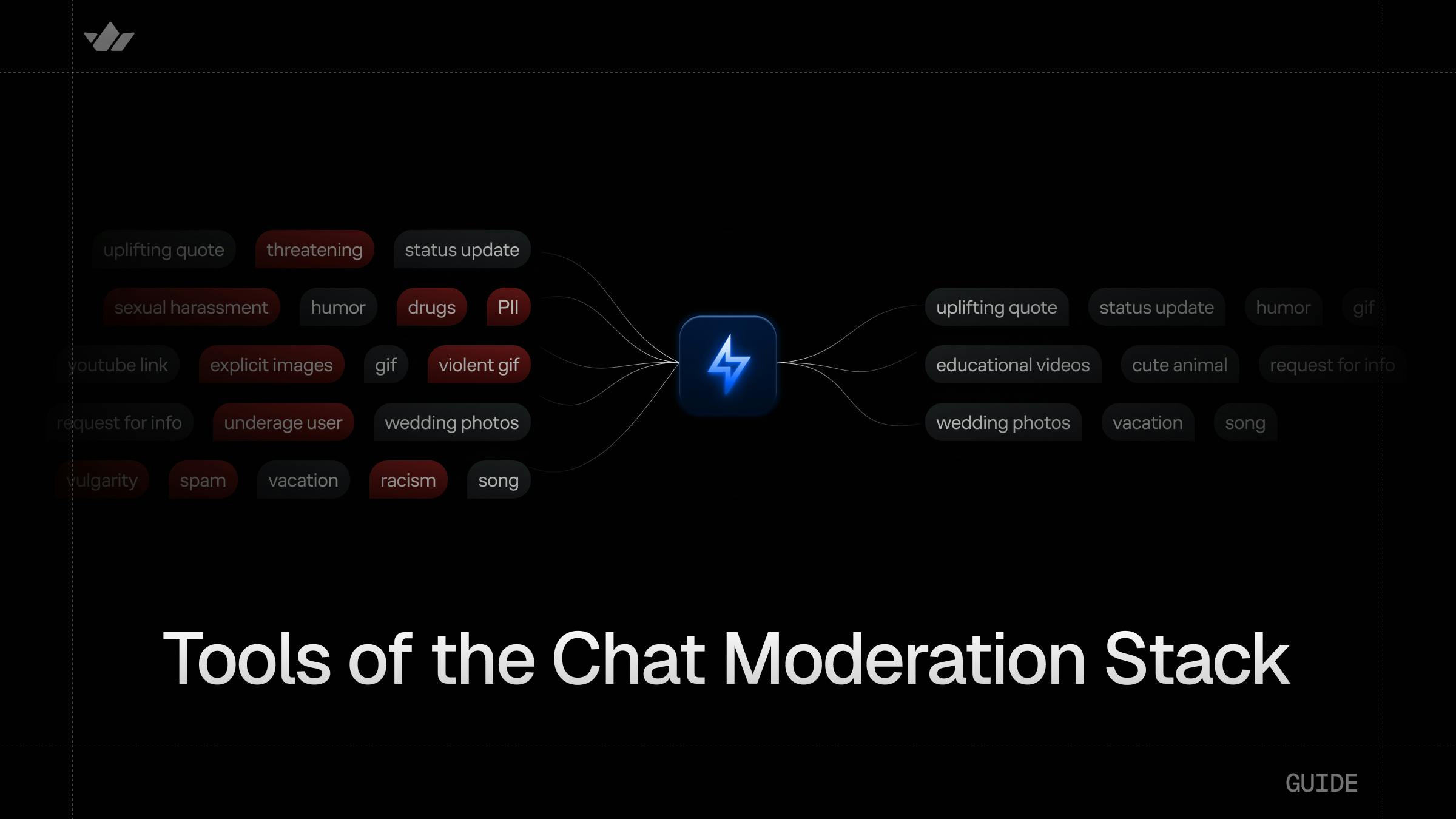

Here, we walk through the 10 essential tools in today's chat moderation stack and show how they work together as a system.

Part 1: The Content Detection Layer

The foundation of any modern moderation system is understanding what's actually being said, shown, or implied across every format bad actors might exploit.

1. LLM-Powered Content Review

LLMs have transformed text moderation. A keyword filter that sees "k1ll" either blocks it (including in "k1ll the enemy in this game") or misses it entirely once it becomes "k¡ll." LLMs, by contrast, understand intent and context.

With LLMs, your moderation system can detect:

- Sarcastic praise that's actually harassment ("Oh great, another brilliant take from our resident genius")
- Context-dependent threats ("I know where you work" vs. "I know where you work, maybe we can grab lunch")
- Coded language and dog whistles that evolve daily
- Grooming patterns that start innocently but follow predictable escalation paths

Modern systems can be configured to detect a broad range of harm types, from hate speech and harassment to scams and attempts to circumvent the platform. Platforms can set severity levels (high, medium, low) for each harm type, allowing graduated responses rather than binary allow/block decisions.
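To make graduated responses concrete, here is a minimal Python sketch of a per-harm-type severity policy. It assumes an upstream classifier (not shown) that returns a harm type and a severity level; the harm-type names, action strings, and the `POLICY` table are hypothetical, not any vendor's schema.

```python
from enum import Enum


class Severity(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical policy table: harm type -> severity -> action.
# Harm-type names and actions are illustrative, not a vendor schema.
POLICY = {
    "harassment": {Severity.LOW: "allow", Severity.MEDIUM: "flag", Severity.HIGH: "block"},
    "hate_speech": {Severity.LOW: "flag", Severity.MEDIUM: "block", Severity.HIGH: "block_and_ban"},
    "scam": {Severity.LOW: "flag", Severity.MEDIUM: "block", Severity.HIGH: "block_and_ban"},
}


def action_for(harm_type: str, severity: Severity) -> str:
    """Return the configured action, defaulting to 'flag' for human review."""
    return POLICY.get(harm_type, {}).get(severity, "flag")


# Usage: the classifier labels a message as medium-severity harassment.
print(action_for("harassment", Severity.MEDIUM))  # flag
```

Keeping the policy as data rather than code means a trust-and-safety team can tighten one harm type (say, moving low-severity harassment from "allow" to "flag") without touching the lookup logic.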

Pro: Dramatically reduces false positives by understanding context, allowing gaming communities to discuss in-game violence without triggering filters.

Con: Costs significantly more per message analyzed than keyword filtering and can introduce unpredictable latency spikes.

2. Multilingual Text Moderation

Multilingual moderation closes another significant gap in traditional systems. Your Korean users could be harassed in Korean while your English-only moderation team slept. Your Spanish-speaking community could develop its own ecosystem of policy violations that your filters never saw.

Modern multilingual systems detect:

- Toxicity and harassment across 50+ languages simultaneously
- Cross-language attacks (English users harassing Spanish speakers)
- Regional slang and cultural context that makes something offensive in Brazil but not in Portugal
- Script-mixing used to evade detection ("थैंक्स for being so स्टुपिड")
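One cheap signal for the script-mixing evasion in the last bullet is to check which Unicode scripts a message uses. The Python sketch below approximates a letter's script by the first word of its Unicode name via the standard `unicodedata` module. This is a heuristic we are assuming for illustration, not how any particular vendor detects script-mixing.

```python
import unicodedata


def scripts_in(text: str) -> set:
    """Approximate which scripts appear in `text`, using the first word of
    each letter's Unicode name (e.g. 'LATIN', 'DEVANAGARI', 'CYRILLIC')."""
    scripts = set()
    for ch in text:
        if ch.isalpha():  # skip spaces, digits, punctuation, combining marks
            name = unicodedata.name(ch, "")
            if name:
                scripts.add(name.split()[0])
    return scripts


def is_script_mixed(text: str) -> bool:
    """True when letters from more than one script appear in the message."""
    return len(scripts_in(text)) > 1


print(sorted(scripts_in("थैंक्स for being so स्टुपिड")))  # ['DEVANAGARI', 'LATIN']
```

The same check also flags look-alike substitutions such as a Cyrillic "а" hidden inside a Latin word. Mixed scripts are normal in some languages (Japanese routinely combines kanji, hiragana, and katakana), so treat this as a feature to pass to a classifier rather than a verdict on its own.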

Pro: Enables consistent safety standards across global communities without hiring native speakers for every language.

Con: Accuracy drops significantly for languages with less training data, especially for dialect-specific harassment and cultural context.

3. AI Image Moderation

Text is just the beginning. Bad actors quickly learn that most platforms check text but ignore everything else. Images have been a massive loophole where harmful content hides in plain sight:

| What It Catches | Why It Matters |
|---|---|
| Text embedded in images (memes with slurs) | Most toxic memes rely on text that keyword filters can't see |
| NSFW content, including explicit and non-explicit nudity | Prevents ambient harassment through avatars and unwanted exposure |
| Violence and visually disturbing content | Stops traumatic content from reaching users |
| Manipulated/deepfake images | Critical for preventing non-consensual intimate images |
| Hate symbols and extremist imagery | Catches visual dog whistles that text analysis misses |
| Drug, tobacco, alcohol, and gambling content | Enables age-appropriate content filtering |

OCR capability is particularly crucial. Bad actors screenshot text to evade filters, create meme templates with embedded hate speech, and share phone numbers or addresses as images to avoid detection. Without image analysis, you're essentially blind to a massive portion of policy violations.

Modern image moderation systems let platforms set a confidence threshold from 1 to 100 for each category. A threshold of 90 flags only high-confidence matches, while a threshold of 60 provides broader coverage in exchange for a higher, though often acceptable, false positive rate.
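Per-category thresholds amount to a simple comparison against each category's score. The Python sketch below assumes the image model returns a confidence score from 1 to 100 per category; the category names and threshold values are hypothetical.

```python
# Hypothetical per-category thresholds on the 1-100 confidence scale.
THRESHOLDS = {
    "explicit_nudity": 90,  # act only on highly confident matches
    "violence": 60,         # broader coverage, more false positives
    "hate_symbols": 60,
}


def flagged_categories(scores: dict, thresholds: dict = THRESHOLDS) -> list:
    """Return the categories whose confidence score meets its threshold.

    `scores` maps category -> model confidence (1-100); categories without
    a configured threshold are ignored.
    """
    return sorted(
        category
        for category, score in scores.items()
        if category in thresholds and score >= thresholds[category]
    )


print(flagged_categories({"explicit_nudity": 75, "violence": 75}))  # ['violence']
```

The same score of 75 is flagged under the looser violence threshold but not under the stricter nudity threshold. This is the coverage-versus-precision trade-off described above, applied one category at a time.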

Pro: Catches the massive blind spot of text-in-images where most toxic memes and screenshot workarounds hide.

Con: Processing delays can create noticeable lag in image-heavy conversations.

4. Live and Recorded Video Moderation

Video is one of the fastest-growing content types, so communities that host it need to moderate both on-demand and live-streamed video. Analyzing video both as it streams and after it is uploaded means:

- Streamers can't flash offensive content for a few seconds to avoid detection
- Recorded videos get scanned frame-by-frame for policy violations
- Audio tracks get transcribed and analyzed for verbal abuse
- Visual and audio analysis work together to catch context (threatening gestures with verbal threats)

Think about what this prevents: A gaming streamer briefly showing extremist content "as a joke." Tutorial videos that suddenly cut to graphic violence. Seemingly innocent kids' content that contains hidden inappropriate material.

Pro: Prevents streamers from flashing offensive content for split seconds to evade detection.

Con: Expensive to run at scale; video often becomes the largest moderation cost despite making up a small share of total content.

Part 2: The Context Layer

Detection is just data. Intelligence is understanding what that data actually means and what's about to happen next.

5. Context-Aware Escalation

Looking at messages in isolation is like judging a movie by random frames.

Consider the message "I'll see you after school." Its meaning depends entirely on the conversation history. In one chat, it follows a discussion about a study group; in another, it follows twenty messages of increasingly aggressive threats. Single-message analysis cannot tell the two apart.

Context-aware systems evaluate patterns across time:

- They catch grooming behaviors that escalate over weeks from friendly to personal to isolated to exploitative.
- They spot coordinated harassment where 50 users each send one "innocent" message that together form an attack.
- They identify brigading campaigns timed across multiple channels.
- They recognize relationship-abuse patterns, such as love-bombing followed by manipulation.

Group dynamics reveal pile-ons, mob formation, and exclusion patterns that individual message review would miss entirely. Platforms can configure detection windows from as short as 30 minutes to as long as 30 days, capturing both immediate harassment spikes and slow-burn campaigns. For example, a system might ban users who send five or more spam messages within one hour, or flag accounts that post three or more hate speech messages within 24 hours.
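Frequency rules like these reduce to a sliding-window counter per user. The Python sketch below assumes an upstream classifier has already labeled each message (as spam or hate speech, for example) and only counts the matches; the `WindowRule` class and its parameters are hypothetical.

```python
from collections import defaultdict, deque


class WindowRule:
    """Fire when one user produces `limit` or more matching events within
    `window_seconds`. Assumes each user's timestamps arrive in order."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window = window_seconds
        self.events = defaultdict(deque)  # user_id -> timestamps inside the window

    def record(self, user_id: str, timestamp: float) -> bool:
        q = self.events[user_id]
        q.append(timestamp)
        # Evict events older than the window that ends at `timestamp`.
        while q and q[0] <= timestamp - self.window:
            q.popleft()
        return len(q) >= self.limit


# "Five or more spam messages within one hour" and
# "three or more hate speech messages within 24 hours".
spam_rule = WindowRule(limit=5, window_seconds=60 * 60)
hate_rule = WindowRule(limit=3, window_seconds=24 * 60 * 60)

hits = [spam_rule.record("user_42", t) for t in (0, 600, 1200, 1800, 2400)]
print(hits)  # [False, False, False, False, True]
```

A deque per user keeps memory proportional to the events inside the window. A 30-day window on a busy platform would need a persistent store instead of in-process state.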

Pro: Identifies grooming and harassment patterns that would look innocent when viewing messages individually.

Con: Requires storing and analyzing conversation history, which raises privacy concerns and complicates data retention.

6. Severity Levels for Early Intervention

Severity scoring uses natural language processing (NLP) to gauge the intensity and urgency of harmful content within conversations. Instead of waiting for an explicit policy violation, it gives moderators a chance to step in before conflicts spiral out of control.

Modern moderation systems continuously analyze message patterns to identify potential escalation based on behavioral indicators such as:

- Frequency and volume of critical-severity messages from a single user
- Targeting patterns, such as one user repeatedly directing messages at the same person
- Repetition of flagged or rule-adjacent terms

When the system detects these patterns, it enables graduated interventions:

| Risk Level | Automated Response |
|---|---|
| Low | Gentle nudge or topic suggestion |
| Medium | Automated cool-down timer |
| High | Priority moderator alert + resource injection |
| Critical | Immediate human intervention required |
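One way to connect the behavioral indicators to the risk levels in this table is a weighted score with cut-offs. The Python sketch below is a minimal illustration. The `UserSignals` fields, the weights, and the cut-off values are all hypothetical; real systems would learn or tune them per community.

```python
from dataclasses import dataclass

# Responses copied from the table above.
RESPONSES = {
    "low": "Gentle nudge or topic suggestion",
    "medium": "Automated cool-down timer",
    "high": "Priority moderator alert + resource injection",
    "critical": "Immediate human intervention required",
}


@dataclass
class UserSignals:
    critical_messages: int       # critical-severity messages in the current window
    messages_at_one_target: int  # messages aimed at the same other user
    flagged_term_repeats: int    # repeats of flagged or rule-adjacent terms


def risk_level(s: UserSignals) -> str:
    # Illustrative weights and cut-offs only; each community must tune its own.
    score = 5 * s.critical_messages + 2 * s.messages_at_one_target + s.flagged_term_repeats
    if s.critical_messages >= 3 or score >= 20:
        return "critical"
    if score >= 10:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


signals = UserSignals(critical_messages=1, messages_at_one_target=2, flagged_term_repeats=1)
print(risk_level(signals), "->", RESPONSES[risk_level(signals)])
# high -> Priority moderator alert + resource injection
```

The hard override for three or more critical messages keeps a burst of severe content from being averaged away by an otherwise low score.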

The challenge is calibration. Different communities tolerate different behaviors: gaming environments, for example, may require higher thresholds than support or education spaces. Dark humor between friends shouldn't trigger the same response as genuine threats. Cultural communication styles vary widely.

Pro: Promotes early intervention and reduces harm before violations occur.

Con: Requires continuous tuning to minimize false positives and adapt to community context.

Part 3: The Operational Control Layer

Intelligence without execution is just an expensive report. Your operational control layer turns insights into consistent, scalable actions that actually protect your community.

7. Custom Rule Builder

Your gaming community needs different rules during tournament streams than during casual play. Your mental health support channels require different thresholds than your meme channels. Every community is different, but engineering resources are finite.

No-code rule builders let non-technical team members create sophisticated policies without touching code. Here's what rule builders enable:

- Conditional Logic: IF user_reputation < 20 AND message contains link AND account_age < 7 days THEN quarantine
- Time-Based Rules: Stricter moderation during school hours, relaxed rules for adult-hours content
- Event Triggers: Auto-escalate moderation during live events when brigading risk peaks
- User Segment Policies: Different rules for subscribers, new users, verified accounts, repeat offenders

Modern rule builders distinguish between user-type rules, which track behavior over time, and content-type rules, which evaluate individual pieces immediately. Platforms can combine multiple condition types, including text analysis, image detection, account age checks, custom user properties, and content frequency thresholds.
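Behind a no-code builder, a rule like the conditional-logic example is typically stored as data: a list of conditions and an action. The Python sketch below shows one way to represent and evaluate such a content-type rule. The field names, operator set, and link regex are hypothetical, not any product's format. A user-type rule would instead evaluate counts tracked over time.

```python
import operator
import re
from dataclasses import dataclass

OPS = {"<": operator.lt, "<=": operator.le, ">": operator.gt,
       ">=": operator.ge, "==": operator.eq}
LINK_RE = re.compile(r"https?://\S+", re.IGNORECASE)


@dataclass
class Condition:
    key: str      # name of a value in the evaluation context
    op: str       # one of the keys in OPS
    value: object


@dataclass
class Rule:
    name: str
    conditions: list  # Condition objects; all must hold (AND)
    action: str


def build_context(message: str, user: dict) -> dict:
    """Derive the fields rules can reference (field names are hypothetical)."""
    return {
        "user_reputation": user["reputation"],
        "account_age_days": user["account_age_days"],
        "contains_link": LINK_RE.search(message) is not None,
    }


def evaluate(rules: list, ctx: dict) -> list:
    """Return the action of every rule whose conditions all match."""
    return [r.action for r in rules
            if all(OPS[c.op](ctx[c.key], c.value) for c in r.conditions)]


# IF user_reputation < 20 AND message contains link AND account_age < 7 days THEN quarantine
quarantine_new_linkers = Rule(
    name="low-reputation new account posting links",
    conditions=[
        Condition("user_reputation", "<", 20),
        Condition("contains_link", "==", True),
        Condition("account_age_days", "<", 7),
    ],
    action="quarantine",
)

ctx = build_context("free skins at https://example.com", {"reputation": 5, "account_age_days": 2})
print(evaluate([quarantine_new_linkers], ctx))  # ['quarantine']
```

Because `evaluate` returns every matching action, two rules that fire on the same message (say, "quarantine" and "allow") surface side by side. That makes the rule conflicts mentioned in the Con below visible instead of silently resolved.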

Pro: Empowers non-technical staff to adjust policies instantly without waiting for engineering sprints.

Con: Rule proliferation can create conflicts and unexpected interactions that become impossible to debug.

8. Moderator Dashboard & Workflow Tools

Effective dashboards organize content into three primary queues:

- Users Queue for account-level issues
- Text Queue for message violations
- Media Queue for image and video content

Moderators can take specific actions, including marking content as reviewed, issuing permanent or temporary bans, deleting users or their content, and unblocking messages that were mistakenly flagged.

Good dashboards surface high-risk situations first, using AI severity scores and user reputation to prioritize queues. They show complete context in one view: the reported message, the whole conversation thread, user history, previous moderator actions, and similar cases for consistency. These workflow improvements can let moderators work up to 25% faster without sacrificing consistency or accuracy.
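Prioritizing each queue by severity and reputation maps naturally onto a priority queue. The Python sketch below uses the standard `heapq` module. The 0.0-1.0 severity scale, the 0-100 reputation scale, and the 80/20 weighting are assumptions made for illustration.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass
class FlaggedItem:
    item_id: str
    queue: str         # "users", "text", or "media"
    severity: float    # AI severity score, assumed 0.0-1.0
    reputation: float  # user reputation, assumed 0-100


def priority(item: FlaggedItem) -> float:
    # Hypothetical weighting: severity dominates, low reputation adds urgency.
    return 0.8 * item.severity + 0.2 * (1 - item.reputation / 100)


class ModerationQueues:
    """Users, Text, and Media queues, each served highest-priority first."""

    def __init__(self) -> None:
        self._heaps = {"users": [], "text": [], "media": []}
        self._tiebreak = itertools.count()  # equal priorities keep arrival order

    def push(self, item: FlaggedItem) -> None:
        # heapq is a min-heap, so negate the priority to pop the largest first.
        heapq.heappush(self._heaps[item.queue], (-priority(item), next(self._tiebreak), item))

    def pop(self, queue: str) -> FlaggedItem:
        return heapq.heappop(self._heaps[queue])[2]


queues = ModerationQueues()
queues.push(FlaggedItem("m1", "text", severity=0.3, reputation=90))
queues.push(FlaggedItem("m2", "text", severity=0.9, reputation=90))
queues.push(FlaggedItem("m3", "text", severity=0.9, reputation=10))
print([queues.pop("text").item_id for _ in range(3)])  # ['m3', 'm2', 'm1']
```

The counter in each heap entry breaks ties, so two items with equal priority never fall through to comparing `FlaggedItem` objects directly.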

Pro: Significantly increases moderator efficiency through better context and batch actions.

Con: Requires significant training investment and can overwhelm new moderators with information density.

9. User & Message Reporting API

Traditional reporting systems fail because they're either too simple (just a "report" button that moderators have to interpret) or too complex (lengthy forms that users abandon).

Reporting APIs should strike a balance with structured categories that guide users while gathering actionable intelligence. These APIs expose methods like flagUser(), flagMessage(), banUser(), and blockUser() that integrate directly with application interfaces, allowing users to report issues without leaving the platform experience.
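The `flagUser()` and `flagMessage()` methods named above belong to vendor SDKs, and we do not reproduce their signatures here. The Python sketch below instead shows the general idea of a structured report: a fixed set of categories plus optional free text. The `ReportReason` values and the payload shape are hypothetical.

```python
from enum import Enum
from typing import Optional


class ReportReason(Enum):
    SPAM = "spam"
    HARASSMENT = "harassment"
    HATE_SPEECH = "hate_speech"
    SEXUAL_CONTENT = "sexual_content"
    OTHER = "other"


def make_report(reporter_id: str, reason: ReportReason,
                message_id: Optional[str] = None,
                user_id: Optional[str] = None,
                details: str = "") -> dict:
    """Build a structured report payload (hypothetical shape, not a vendor schema)."""
    if message_id is None and user_id is None:
        raise ValueError("a report must target a message or a user")
    # Fixed categories keep reports actionable; free text is only required for OTHER.
    if reason is ReportReason.OTHER and not details.strip():
        raise ValueError("'other' reports need a short description")
    return {
        "reporter_id": reporter_id,
        "reason": reason.value,
        "message_id": message_id,
        "user_id": user_id,
        "details": details.strip(),
    }


print(make_report("user_7", ReportReason.HARASSMENT, message_id="msg_123")["reason"])  # harassment
```

Requiring a description only for "other" keeps the common path to a single tap, which helps avoid the form abandonment described earlier.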

Community reporting also trains automated systems. Every confirmed report becomes a labeled training example. Every false report helps reduce the likelihood of future false positives. The community literally teaches your AI what they consider harmful in their specific context.
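Turning moderator decisions into training data can be as simple as mapping each resolved report to a labeled row. The Python sketch below assumes reports carry the reporter's chosen category and a moderator verdict. The field names and verdict strings are hypothetical, and a real pipeline would also track the model version and label provenance.

```python
from dataclasses import dataclass


@dataclass
class ResolvedReport:
    message_text: str
    reason: str   # category the reporter chose, e.g. "spam"
    verdict: str  # moderator decision: "confirmed", "rejected", or "pending"


def to_training_examples(reports: list) -> list:
    """Turn moderator-reviewed reports into (text, category, is_violation) rows.

    Confirmed reports become positive examples; rejected (false) reports become
    negative examples for the same category. Pending reports are skipped, and
    duplicates collapse into one row so a mass-reported message is not over-weighted.
    """
    examples = []
    seen = set()
    for r in reports:
        if r.verdict not in ("confirmed", "rejected"):
            continue
        row = (r.message_text, r.reason, r.verdict == "confirmed")
        if row not in seen:
            seen.add(row)
            examples.append(row)
    return examples


reports = [
    ResolvedReport("cheap followers, DM me", "spam", "confirmed"),
    ResolvedReport("gg, you crushed it", "harassment", "rejected"),
    ResolvedReport("gg, you crushed it", "harassment", "rejected"),
    ResolvedReport("see you later", "harassment", "pending"),
]
print(to_training_examples(reports))
```

Only moderator-reviewed reports become labels, and duplicate reports collapse into one row. Both choices limit how much a coordinated false-reporting campaign can skew the training data.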

Pro: Transforms your community into a detection network while training your AI on real user preferences.

Con: Coordinated false reporting can be weaponized to harass users through the moderation system itself.

Part 4: The Measurement and Compliance Layer

You can't improve what you don't measure, and you can't prove compliance without evidence. Modern moderation requires both operational intelligence and regulatory documentation.

10. Analytics & Compliance Reporting

Basic metrics tell you nothing useful. "We reviewed 1 million messages" doesn't indicate whether you caught the right ones. "99% accuracy" is meaningless if that 1% includes child safety violations. "24-hour response time" doesn't matter if harm spreads in the first 30 seconds.

Modern analytics track patterns and outcomes. You need to understand which harm types are growing or shrinking, when and where violations spike, which user segments face disproportionate abuse, and whether your interventions actually prevent recurrence or merely play a game of whack-a-mole.

| Metric Type | What It Actually Tells You |
|---|---|
| Harm velocity | Whether problems are growing faster than solutions |
| Intervention effectiveness | If your actions prevent repeated violations |
| False positive rates by demographic | Whether you're unfairly impacting certain groups |
| Time-to-detection | How much damage occurs before you respond |
| Recidivism rates | Whether bad actors return with new tactics |
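Two of these metrics, time-to-detection and recidivism, can be computed directly from a violation log. The Python sketch below assumes each logged violation records the user, when it was posted, and when it was detected; the field names are hypothetical. Recidivism is defined here as posting another violation after the user's first detection, which is one of several reasonable definitions.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Violation:
    user_id: str
    posted_at: float    # when the content was posted (seconds)
    detected_at: float  # when automation or a moderator caught it (seconds)


def median_time_to_detection(violations: list) -> float:
    """Median seconds between posting and detection."""
    return median(v.detected_at - v.posted_at for v in violations)


def recidivism_rate(violations: list) -> float:
    """Share of violating users who violated again after their first detection."""
    first_detected = {}
    for v in sorted(violations, key=lambda item: item.detected_at):
        first_detected.setdefault(v.user_id, v.detected_at)
    repeaters = {v.user_id for v in violations if v.posted_at > first_detected[v.user_id]}
    return len(repeaters) / len(first_detected) if first_detected else 0.0


log = [
    Violation("alice", posted_at=0, detected_at=30),
    Violation("alice", posted_at=100, detected_at=160),
    Violation("bob", posted_at=0, detected_at=10),
]
print(median_time_to_detection(log), recidivism_rate(log))  # 30 0.5
```

The median resists the occasional violation that sits unnoticed for days. A tail percentile such as the 95th is worth tracking alongside it, because the slowest detections are often where the most damage occurs.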

Comprehensive dashboards track AI-detected violations, manually flagged content, total moderator actions, and user-specific metrics such as ban counts and appeal rates. Vendor platforms may also hold ISO 27001 and SOC 2 certifications and support GDPR and COPPA compliance, generating the audit trails required for compliance reporting.

Pro: Provides defensible audit trails that satisfy regulators while revealing which interventions actually work.

Con: Can create analysis paralysis where teams spend more time debating metrics than improving safety.

Building Your Moderation Stack

The journey from keyword lists to modern moderation is a journey from reactive defense to proactive protection. These 10 tools function as an integrated system: LLMs and the other detectors feed severity scoring, severity scores trigger custom rules, rules populate moderator dashboards, and dashboard activity generates compliance reports.

The investment encompasses technology, configuration, and training. The future isn't about perfect prevention but systematic protection that evolves with emerging threats. Successful platforms view moderation not as a cost center, but as the foundation that makes genuine community possible.