AI chatbots have become a familiar feature in many modern applications. Yet, practical questions remain: “How should we integrate a chatbot into our service?”

To explore this question, I launched an experimental project: a sommelier chatbot. Wine is a domain rich with variety and unfamiliar terms—perfect for testing how helpful an AI assistant can be in guiding users through complex needs and how naturally it can be integrated into a real application interface.

This project focused on:

- Designing an AI chatbot that recommends wines through real-time conversation

- Embedding the chatbot within an actual chat interface

- Gaining insights on conversational UX—such as message flow, context awareness, and user engagement

From a technical perspective, I used Stream Chat to implement the chat UI. This chat SDK handles the messaging logic out of the box, allowing me to concentrate on AI behaviour and user experience. While Stream is an excellent tool, this post focuses less on the SDK itself and more on how an AI chatbot can be practically integrated into a service.

If you’re considering adding a chatbot to your product or just want to explore what’s possible, this post might offer some useful reference points.

Disclaimer: This project is based on the demo app Wine Butler and AI-generated wine data. Information such as grape varieties, food pairings, and country of origin may not reflect real-world wine knowledge.

Limitations of the Original UX

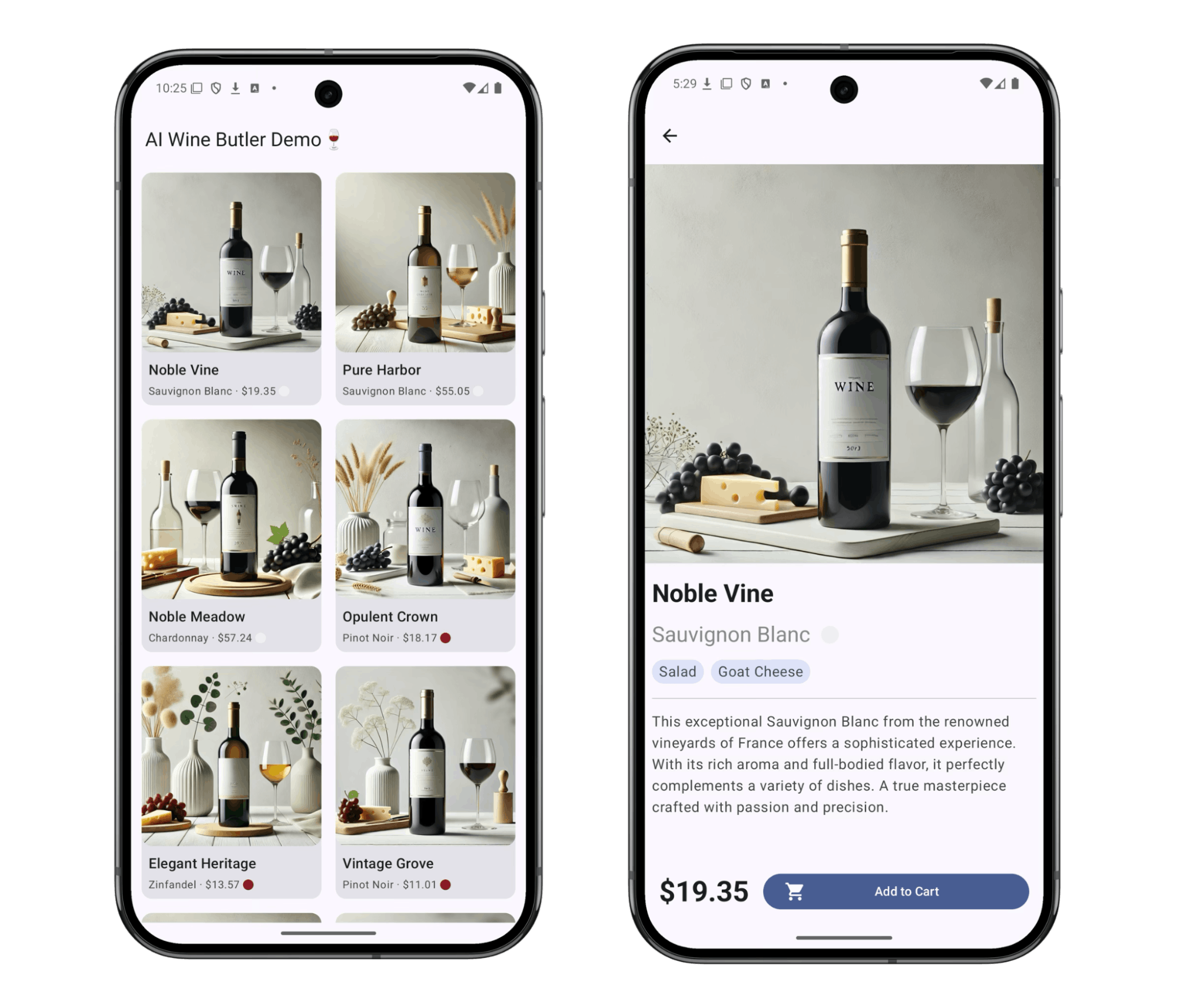

The Wine Butler app includes metadata such as grape varieties, price ranges, and food pairings for each wine. However, on the wine list screen, users can only see basic information such as the name, type, and price. To view detailed information, they must navigate to each wine’s page.

For example, if a user is looking for “a wine under $20 that pairs well with meat,” the current structure forces them to manually browse and filter through the list—a time-consuming and inconvenient process.

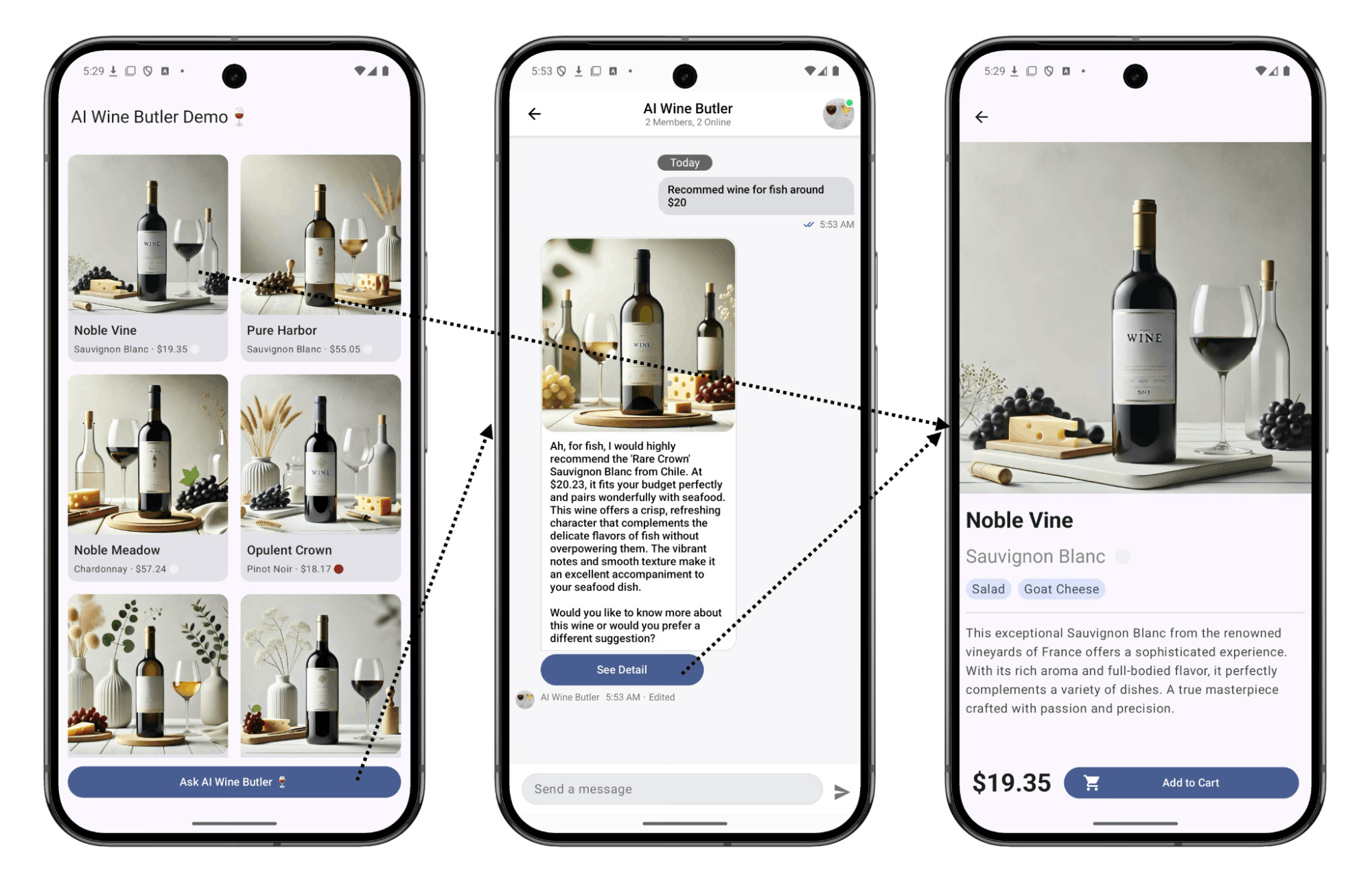

To streamline this experience, we added an AI chatbot and a quick-access button to launch it. Now, users can simply describe their preferences in natural language, and the chatbot will suggest matching wines. From there, they can jump directly to the wine’s detail page.

This approach addresses the limitations of list-based navigation and delivers a more flexible, personalized search experience powered by AI.

Implementation

The project was built using Node.js on the backend, and Android (Kotlin with Jetpack Compose) on the frontend. If you're working in a similar environment, I highly recommend reading Build an AI Assistant for Android Using Compose.

To build the chatbot’s messaging interface, I used the Stream Chat SDK. One of the biggest advantages of Stream is that it provides almost all the essential components for chat functionality out of the box. This allowed me to focus entirely on the interaction logic between the user and the AI, rather than low-level chat infrastructure.

The SDK handled message flow, state synchronization, and UI rendering, freeing me up to concentrate on designing the chatbot’s behavior and user experience.

Message Structure

To effectively deliver wine recommendations, the chatbot needed to send more than plain text—it also had to include structured data such as product IDs, wine images, and message status indicators.

Stream Chat supports adding custom fields to each message via `extraData`, which allowed me to define a custom message format like this:

| Field | Description | Type |
|---|---|---|
| text | Chat message text | Default field |
| attachments | Wine image | Default field |
| wine_id | Recommended wine ID | Custom field |
| ai_generated | Indicates whether the AI generated the message | Custom field |
| generating | Indicates if the message is still being composed | Custom field |

In Kotlin, you can access these custom fields like this:

```kotlin
val Message.wineId: String?
    get() = extraData["wine_id"] as? String
```

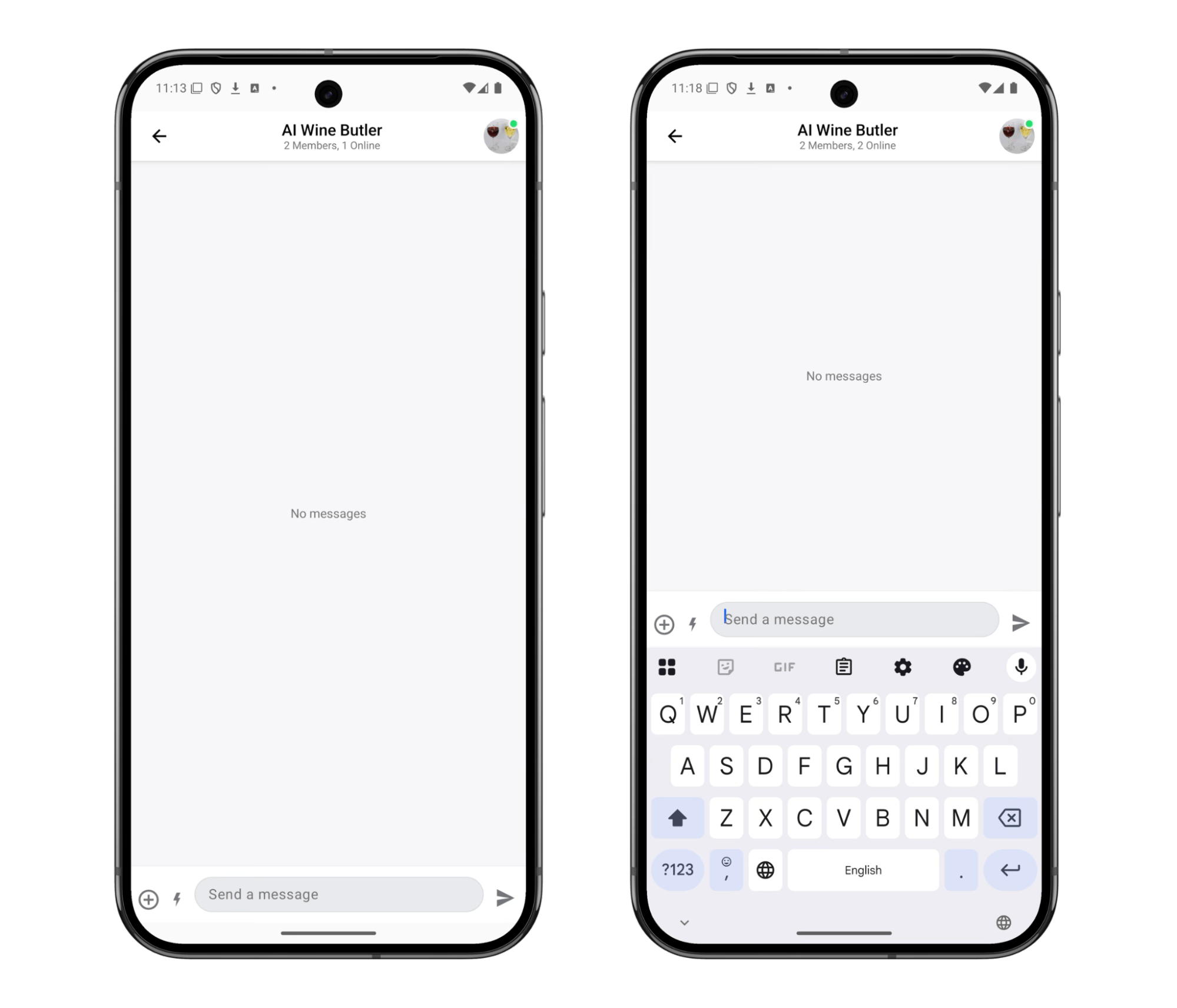
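On the server side, the same fields can be described with a type so that placeholder and final messages are built consistently. The following is a minimal sketch, not the project's actual code: the `WineBotMessage` and `WineAttachment` types and both helper functions are hypothetical names introduced for illustration, and only the field names come from the table above.

```typescript
// Hypothetical types mirroring the custom message format described in the table.
interface WineAttachment {
  type: "image";
  image_url: string;
}

interface WineBotMessage {
  text: string;
  attachments?: WineAttachment[];
  wine_id?: string;
  ai_generated: boolean;
  generating: boolean;
}

// Builds the empty placeholder shown while the AI is still composing.
function makePlaceholder(): WineBotMessage {
  return { text: "", ai_generated: true, generating: true };
}

// Builds the final message; wine_id and attachments are set only when provided.
function makeFinalMessage(text: string, wineId?: string, imageUrl?: string): WineBotMessage {
  const message: WineBotMessage = { text, ai_generated: true, generating: false };
  if (wineId !== undefined) message.wine_id = wineId;
  if (imageUrl !== undefined) message.attachments = [{ type: "image", image_url: imageUrl }];
  return message;
}
```

Keeping `wine_id` and `attachments` optional matches the idea that a reply to a general wine question carries no recommendation.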

Building Chat UI and Customizing Messages

The Stream Chat SDK provides prebuilt UI components that make it easy to render a complete chat interface—just specify the channel ID, and you're ready to go.

```kotlin
@Composable
fun MessageScreen(
    cid: String,
    onBackPressed: () -> Unit
) {
    val context = LocalContext.current
    val viewModelFactory = remember {
        MessagesViewModelFactory(
            context = context,
            channelId = cid,
            messageLimit = 30
        )
    }

    BackHandler(onBack = onBackPressed)

    ChatTheme {
        MessagesScreen(
            viewModelFactory = viewModelFactory,
            onBackPressed = onBackPressed
        )
    }
}
```

Beyond just rendering messages, I used Stream’s ChatComponentFactory to customize how individual messages are displayed.

```kotlin
ChatTheme(
    componentFactory = object : ChatComponentFactory {
        // ...
    }
) {
    // ...
}
```

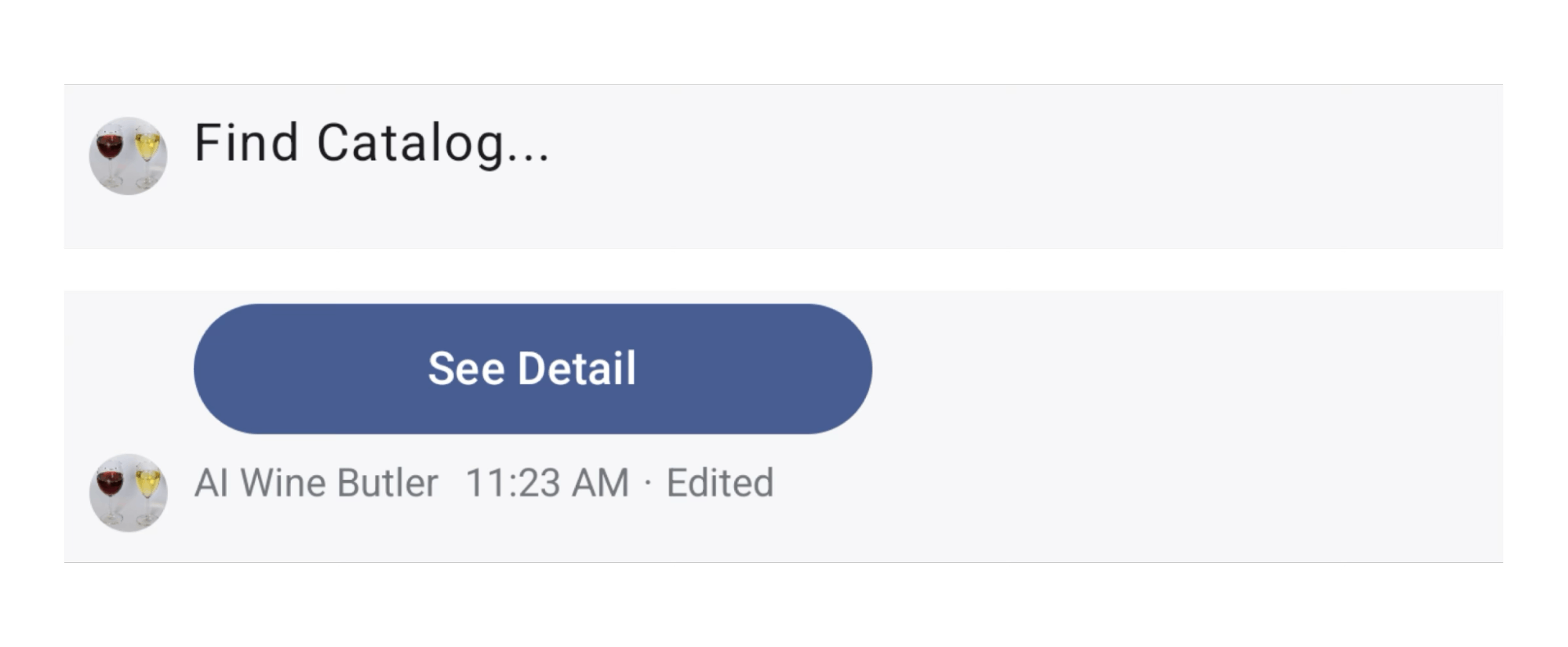

In this project, I implemented two key customizations:

- While the AI is composing a message, show a placeholder like “Finding catalog…”

- When a message includes a wine recommendation, display a “See Detail” button linking to the product page.

To support this, I leveraged the custom message fields (ai_generated, generating, and wine_id) and overrode MessageFooterContent to render different components based on the message state.

```kotlin
// MessageExtension.kt
val Message.wineId: String?
    get() = extraData["wine_id"] as? String

val Message.isAiGenerating: Boolean
    get() = extraData["ai_generated"] as? Boolean == true &&
        extraData["generating"] as? Boolean == true

// MessageScreen.kt
ChatTheme(
    componentFactory = object : ChatComponentFactory {
        @Composable
        override fun MessageFooterContent(messageItem: MessageItemState) {
            if (messageItem.message.isAiGenerating) { // custom field
                Text("Find Catalog...")
            } else {
                Column {
                    messageItem.message.wineId?.let { wineId -> // custom field
                        Button(
                            onClick = { onWineClick(wineId) },
                        ) {
                            Text("See Detail")
                        }
                    }
                    super.MessageFooterContent(messageItem)
                }
            }
        }
    }
) {
    ...
}
```

This setup allowed the UI to adapt in real time based on the chatbot’s status and message context.

Thanks to the flexibility of the Stream SDK, I delivered a tailored user experience—without having to build complex chat logic from scratch.

Setting Up the Backend

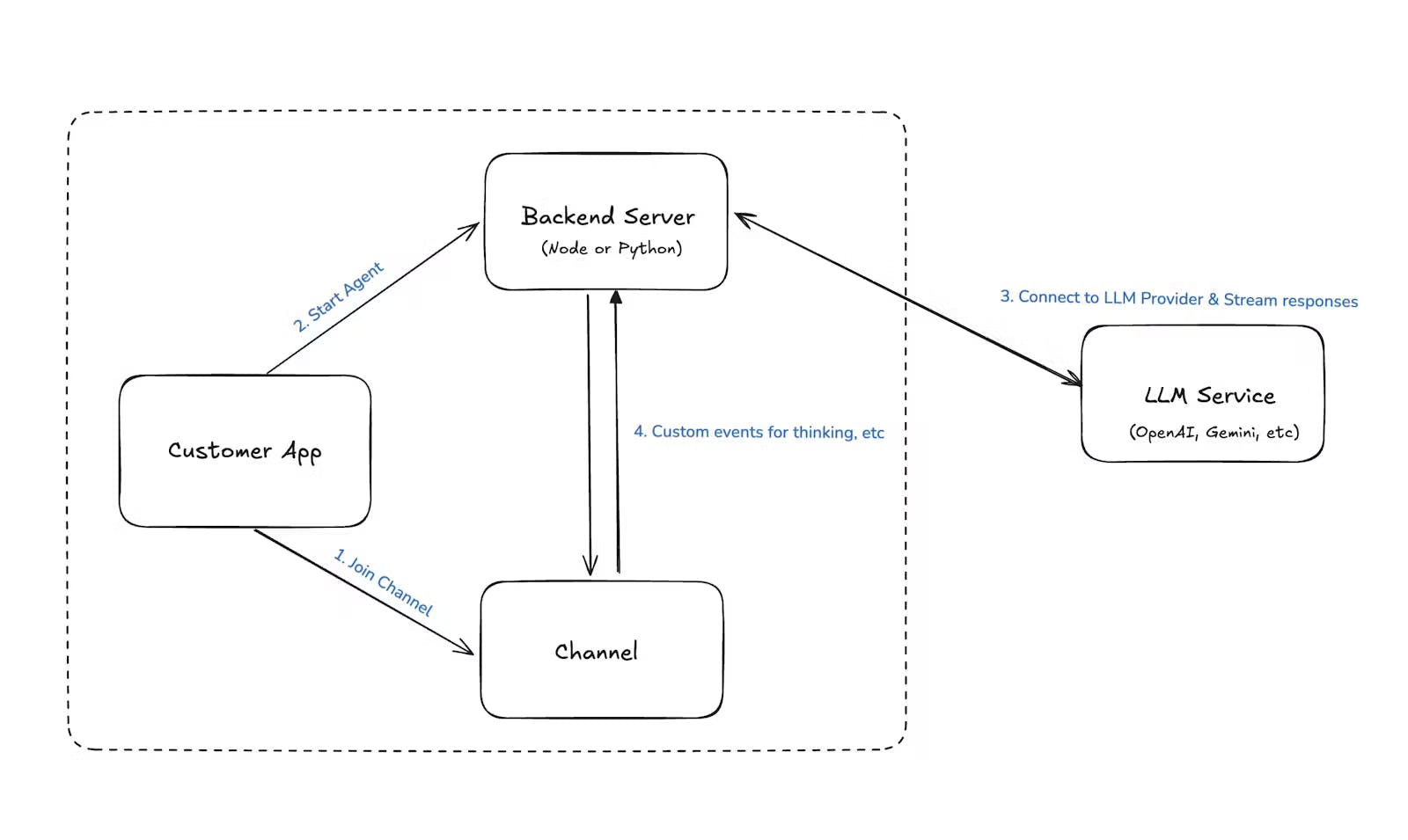

For the backend, I referred to the chat-ai-sample project introduced in Build an AI Assistant for Android Using Compose and adapted it for a Node.js environment.

Whenever a user entered the chat, I initialized a dedicated AI agent instance (`AnthropicAgent`) for that user on the server side.

To detect user messages in real time, I used Stream SDK’s event subscription feature to listen for the `message.new` event, which triggered the AI response generation workflow.

```typescript
// agentController.ts
const agent = await createAgent(user_id, channel_type, channel_id_updated);
await agent.init();

// AnthropicAgent.ts
init = async () => {
  const apiKey = process.env.ANTHROPIC_API_KEY as string | undefined;
  if (!apiKey) {
    throw new Error("Anthropic API key is required");
  }
  this.anthropic = new Anthropic({ apiKey });

  // subscribe new message event
  this.chatClient.on("message.new", this.handleMessage);
};
```

Skip AI Assistant’s Message

Not every message should trigger an AI response.

To avoid responding to messages generated by the chatbot itself, we used the previously defined `ai_generated` flag to filter them out:

```typescript
// AnthropicAgent.ts
private handleMessage = async (e: Event<DefaultGenerics>) => {
  ...
  if (!e.message || e.message.ai_generated) {
    console.log('Skip handling ai generated message');
    return;
  }
  ...
  const message = e.message.text;
  if (!message) return;
}
```
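The same guard can be pulled out into a small pure function, which makes the skip rules easy to unit test. This is only a sketch: `IncomingEvent`, `IncomingMessage`, and `extractUserText` are hypothetical names, the event shape is a simplified stand-in for the real Stream event, and trimming whitespace is an extra assumption not present in the handler above.

```typescript
// Hypothetical, simplified event shape; the real Stream event carries many more fields.
interface IncomingMessage {
  text?: string;
  ai_generated?: boolean;
}

interface IncomingEvent {
  message?: IncomingMessage;
}

// Returns the user text to send to the model, or null when the event should be skipped.
function extractUserText(event: IncomingEvent): string | null {
  const message = event.message;
  // Skip events without a message and messages produced by the bot itself.
  if (!message || message.ai_generated) return null;
  // Assumption: whitespace-only text is treated like an empty message.
  const text = message.text?.trim();
  return text ? text : null;
}
```

A handler could then start with `const text = extractUserText(e); if (text === null) return;`.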

Communicate with AI

Here’s the message flow I implemented to handle AI responses:

- Merge recent messages to build conversation context

- Send a temporary placeholder message indicating the AI is “typing.”

- Request a response from the AI model using the constructed context

- Use `partialUpdate` to replace the placeholder with the final reply once the response is received

```typescript
private handleMessage = async (e: Event<DefaultGenerics>) => {
  ...
  // 1. Merge recent messages to create conversation context
  const messages = this.channel.state.messages
    .slice(-10)
    .filter((msg) => msg.text && msg.text.trim() !== '')
    .map<MessageParam>((message) => ({
      role: message.user?.id.startsWith('ai-wine-butler-from')
        ? 'assistant'
        : 'user',
      content: message.text || '',
    }));

  if (e.message.parent_id !== undefined) {
    messages.push({
      role: 'user',
      content: message,
    });
  }

  // 2. Send a temporary empty message to indicate the AI is “typing”
  const { message: channelMessage } = await this.channel.sendMessage({
    text: '',
    ai_generated: true,
    generating: true,
  });

  // 3. Make an actual request to the AI model and receive a response
  const aiAgentMessage = await this.anthropic.messages.create({
    max_tokens: 1024,
    messages,
    model: 'claude-3-5-sonnet-20241022',
  });

  // 4. Use partialUpdate to replace the placeholder message with the final reply
  await this.chatClient.partialUpdateMessage(channelMessage.id, {
    set: {
      text: aiAgentMessage.content
        .filter((c) => c.type == 'text')
        .map((c) => c.text)
        .join(''),
      generating: false,
    },
  });
  ...
}
```

This setup allowed the chatbot to respond in a natural, real-time flow. By leveraging Stream’s message event system, I could detect user input and dynamically update the chat based on the AI’s responses.
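The context-building step is the part most worth testing in isolation, since it decides what the model actually sees. Below is a minimal standalone sketch of that step. `StoredMessage`, `ContextMessage`, and `buildContext` are hypothetical names, and the message shape is a simplified stand-in for Stream's message type; the ID prefix and the window of 10 messages are taken from the handler above.

```typescript
// Hypothetical, simplified message shape; Stream's real message type has many more fields.
interface StoredMessage {
  text?: string;
  user?: { id: string };
}

interface ContextMessage {
  role: "user" | "assistant";
  content: string;
}

// Messages from users whose ID starts with this prefix are treated as bot replies.
const BOT_ID_PREFIX = "ai-wine-butler-from";

// Keeps the most recent `limit` messages, drops blank ones, and assigns a role to each.
function buildContext(history: StoredMessage[], limit = 10): ContextMessage[] {
  return history
    .slice(-limit)
    .filter((msg) => (msg.text ?? "").trim() !== "")
    .map((msg): ContextMessage => ({
      role: msg.user?.id.startsWith(BOT_ID_PREFIX) ? "assistant" : "user",
      content: msg.text ?? "",
    }));
}
```

Note that the empty placeholder message is filtered out automatically because its text is blank, so it never leaks into the model's context.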

Structuring AI Responses: Prompt Engineering

Just building a chatbot isn’t enough to make it production-ready.

By default, most large language models act as general-purpose assistants—they can answer a wide range of questions but often lack domain-specific knowledge or awareness of business constraints.

For instance, asking the AI to recommend wines based on specific criteria, or limiting suggestions to in-stock items, requires clear, explicit instructions.

That’s where prompt engineering comes in. By crafting well-structured prompts, I guided the AI to adopt a defined role and generate responses in a consistent, structured format.

Defining the Role: Wine Butler

First, I defined a clear persona for the AI:

You are a friendly AI wine butler who answers questions about wine and recommends the perfect wine for any occasion.

Providing Context: Wine Catalog

One of the strengths of large language models is their ability to reason over structured data. To take advantage of this, I passed the entire wine catalog to the AI as a JSON string, so that it recommends only wines from the available inventory.

The wines we have in stock are as follows:

```
${JSON.stringify(wines, null, 2)}
```

Behavioral Guidance: Defining Detailed Instructions

In addition to defining its role, I gave the AI detailed behavioral guidelines to follow across different situations:

- Answer any wine-related questions from the user.

- Recommend wines based on the user’s preferences, occasion, or food pairing, using a courteous and elegant tone.

- Only suggest wines that are currently in stock.

- Provide clear and simple explanations if the user asks about wine knowledge.

- Politely decline to answer if the question is too difficult or inappropriate.

Output Format: Enforcing a JSON Response

I needed the AI to return its responses in a strict JSON format to enable custom rendering on the client side. To enforce this, I added a formatting rule at the end of the prompt:

All responses must be in JSON format, using escape characters like `\n` for line breaks. Do not include markdown or explanations. Only return the JSON.

Required fields:

- text: A conversational message including a recommendation and a follow-up question

- attachments: If a wine is recommended, include an image

- wine_id: The ID of the recommended wine (if any)

```json
{
  "text": "string",
  "attachments": [
    {
      "type": "image (constant)",
      "image_url": "(wine image url)"
    }
  ],
  "wine_id": "string?"
}
```
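Put together, the role, catalog, guidelines, and format rule form a single prompt string. The sketch below shows one way to assemble it. `buildSystemPrompt` and the `Wine` fields are hypothetical (the app's real catalog schema is not shown in this post), and the required-fields section is condensed compared with the full wording above.

```typescript
// Hypothetical catalog entry; field names are illustrative, not the app's actual schema.
interface Wine {
  id: string;
  name: string;
  price: number;
  image_url: string;
}

function buildSystemPrompt(wines: Wine[]): string {
  const role =
    "You are a friendly AI wine butler who answers questions about wine " +
    "and recommends the perfect wine for any occasion.";

  const catalog =
    "The wines we have in stock are as follows:\n" + JSON.stringify(wines, null, 2);

  const guidelines = [
    "Answer any wine-related questions from the user.",
    "Recommend wines based on the user's preferences, occasion, or food pairing, using a courteous and elegant tone.",
    "Only suggest wines that are currently in stock.",
    "Provide clear and simple explanations if the user asks about wine knowledge.",
    "Politely decline to answer if the question is too difficult or inappropriate.",
  ]
    .map((rule) => `- ${rule}`)
    .join("\n");

  // Condensed version of the output-format rule.
  const format =
    "All responses must be in JSON format, using escape characters like \\n for line breaks. " +
    "Do not include markdown or explanations. Only return the JSON.\n" +
    'Required fields: "text", "attachments", "wine_id".';

  // Blank lines separate the four sections of the prompt.
  return [role, catalog, guidelines, format].join("\n\n");
}
```

Because the whole catalog is serialized into the prompt, this approach suits a small demo catalog; a large inventory would inflate every request.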

Providing System Prompt

To ensure consistent behavior from the AI, I added the full prompt as a system-level instruction at the beginning of the conversation context.

In this implementation, the system prompt is injected as the first message in the list:

```typescript
private handleMessage = async (e: Event<DefaultGenerics>) => {
  // ...
  const systemPrompt = "You are a friendly AI wine butler who...";
  const messages = [
    {
      role: 'user', // or 'system' depending on your LLM setup
      content: systemPrompt,
    } as MessageParam,
    ...this.channel.state.messages
    // ...
  ];
}
```
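For Anthropic's Messages API specifically, the system prompt can also be passed through the top-level `system` parameter instead of being sent as a chat message; the `messages` array there accepts only `user` and `assistant` roles. The sketch below builds such a request body. `buildRequest` and the local types are hypothetical helpers, and the model ID and token limit are copied from the earlier snippet.

```typescript
interface ChatTurn {
  role: "user" | "assistant";
  content: string;
}

// Hypothetical local type covering only the request fields used in this post.
interface MessagesRequest {
  model: string;
  max_tokens: number;
  system: string;
  messages: ChatTurn[];
}

function buildRequest(systemPrompt: string, history: ChatTurn[]): MessagesRequest {
  return {
    model: "claude-3-5-sonnet-20241022",
    max_tokens: 1024,
    system: systemPrompt, // kept separate from the conversation turns
    messages: history,
  };
}
```

An object of this shape could then be passed to `this.anthropic.messages.create(...)`, keeping the instructions out of the conversation history.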

Parsing JSON and Updating Messages

The AI's response is returned as a JSON string, so I parsed it and used `partialUpdateMessage` to update the placeholder message in the UI:

```typescript
private handleMessage = async (e: Event<DefaultGenerics>) => {
  // ...
  await this.chatClient.partialUpdateMessage(channelMessage.id, {
    set: {
      ...JSON.parse(
        aiAgentMessage.content
          .filter((c) => c.type == 'text')
          .map((c) => c.text)
          .join('')
      ),
      generating: false,
    },
  });
  // ...
}
```
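A prompt rule cannot fully guarantee valid JSON, so it may be worth guarding the parse. The following is a sketch of a defensive parser that falls back to showing the raw text when the output is not the expected object. `parseAiReply` and `ParsedReply` are hypothetical names, and the fallback behavior is an assumption rather than something the project implements.

```typescript
interface ParsedReply {
  text: string;
  attachments?: { type: "image"; image_url: string }[];
  wine_id?: string;
}

// Parses the model output; degrades to a plain text message if it is not the expected JSON.
function parseAiReply(raw: string): ParsedReply {
  try {
    const data: unknown = JSON.parse(raw);
    if (typeof data !== "object" || data === null) return { text: raw };

    const record = data as Record<string, unknown>;
    const text = record["text"];
    if (typeof text !== "string") return { text: raw };

    const reply: ParsedReply = { text };
    const wineId = record["wine_id"];
    if (typeof wineId === "string") reply.wine_id = wineId;

    const attachments = record["attachments"];
    if (Array.isArray(attachments)) {
      // Keep only entries that carry a string image_url.
      const images = attachments
        .filter(
          (a): a is { image_url: string } =>
            typeof a === "object" &&
            a !== null &&
            typeof (a as Record<string, unknown>)["image_url"] === "string"
        )
        .map((a) => ({ type: "image" as const, image_url: a.image_url }));
      if (images.length > 0) reply.attachments = images;
    }
    return reply;
  } catch {
    return { text: raw }; // invalid JSON
  }
}
```

Spreading the result of `parseAiReply` into the `set` object would then never throw, and unexpected extra keys from the model would not be written to the message.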

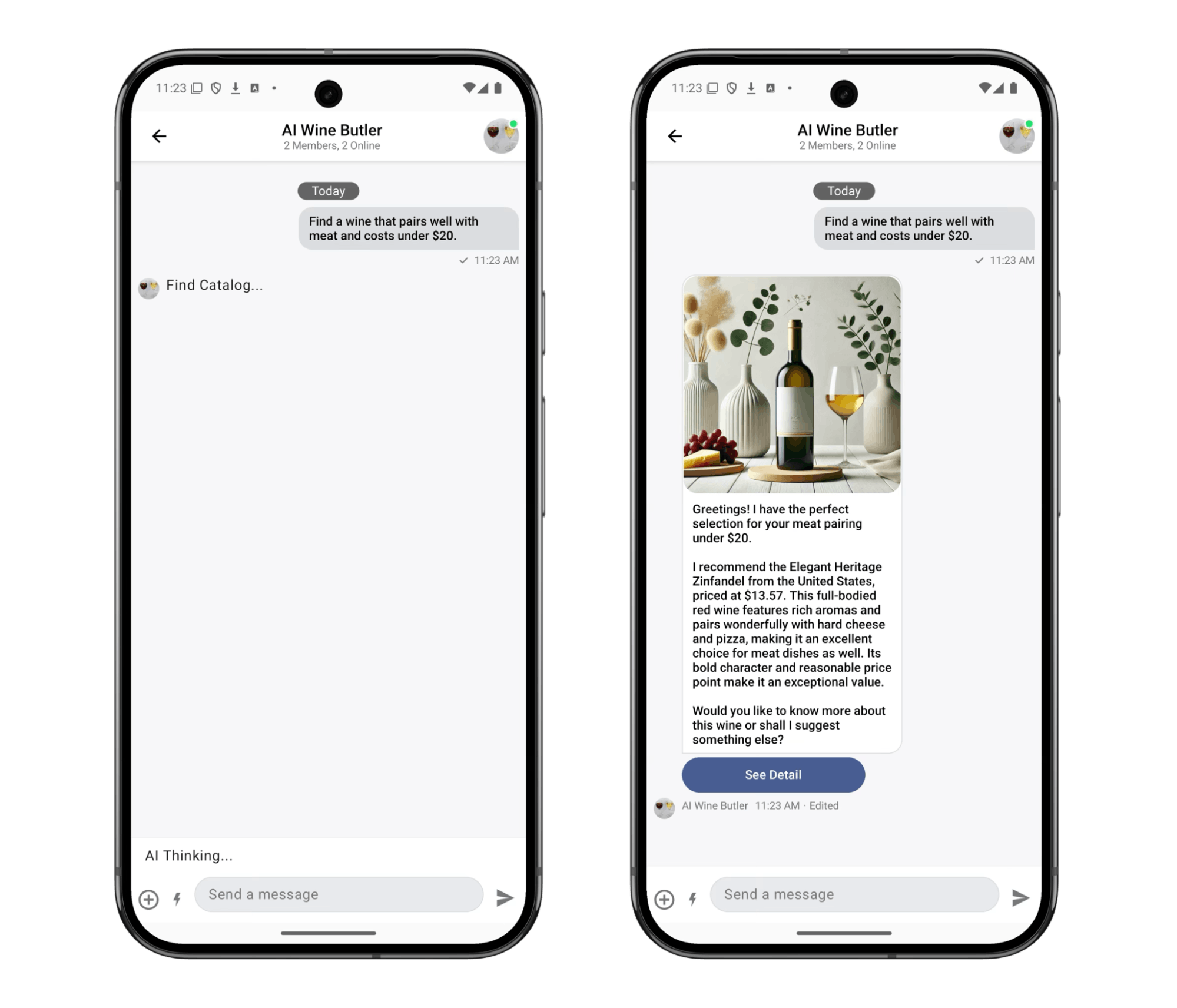

TA-DA!

The AI responded exactly as intended—with a recommendation message, a wine image, and a “See Detail” button linking to the product page. All the components worked together to create a smooth and cohesive user experience.

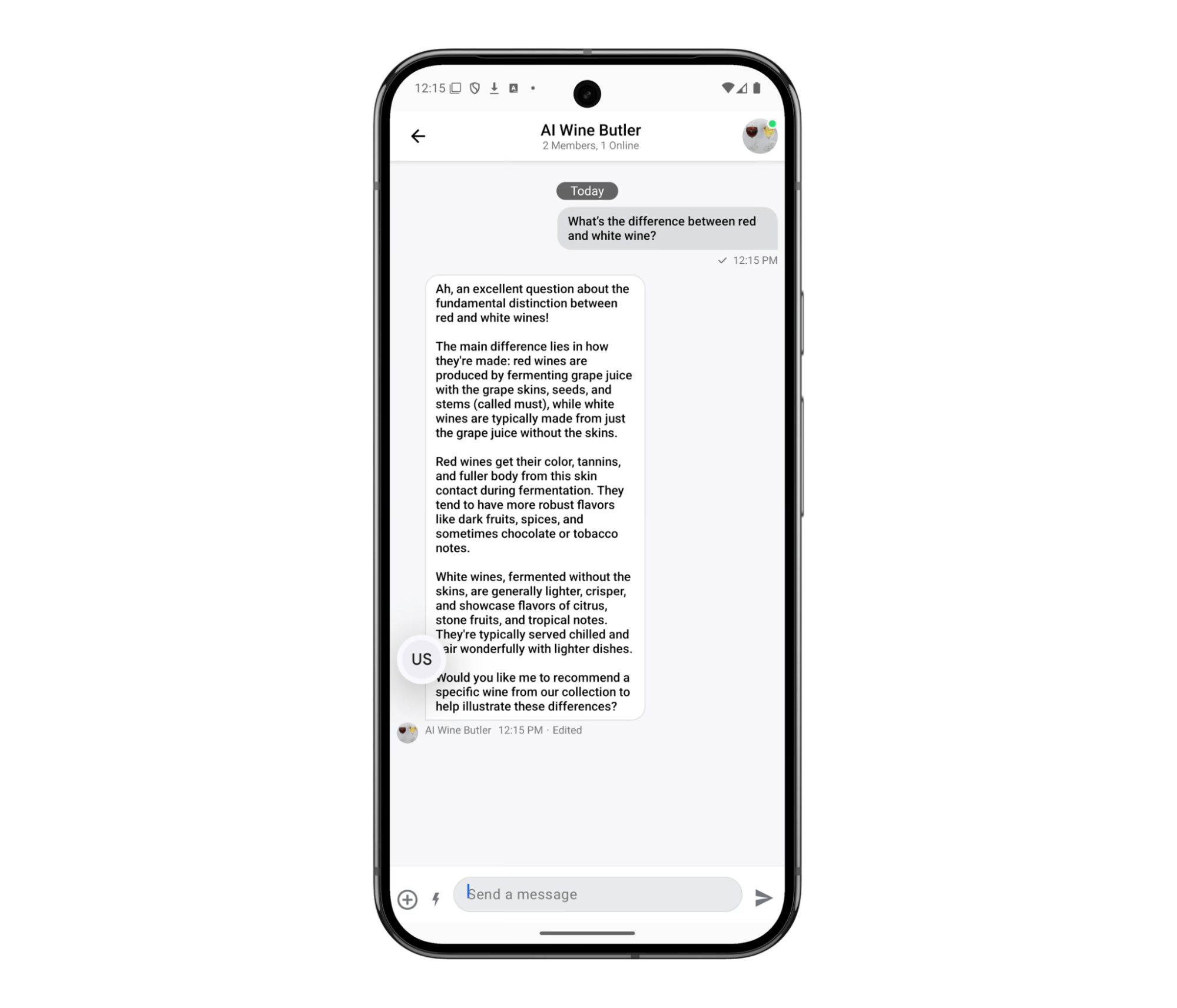

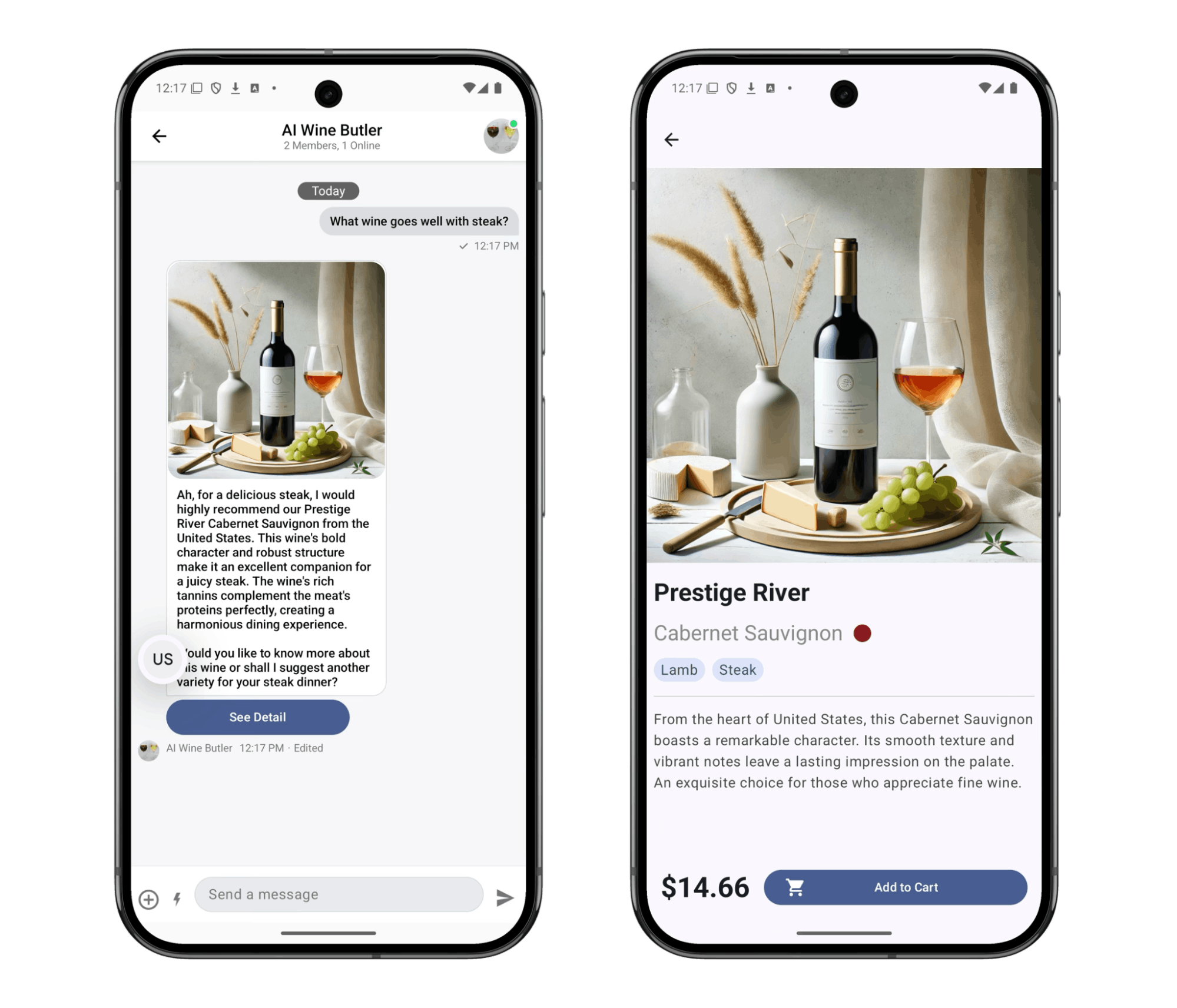

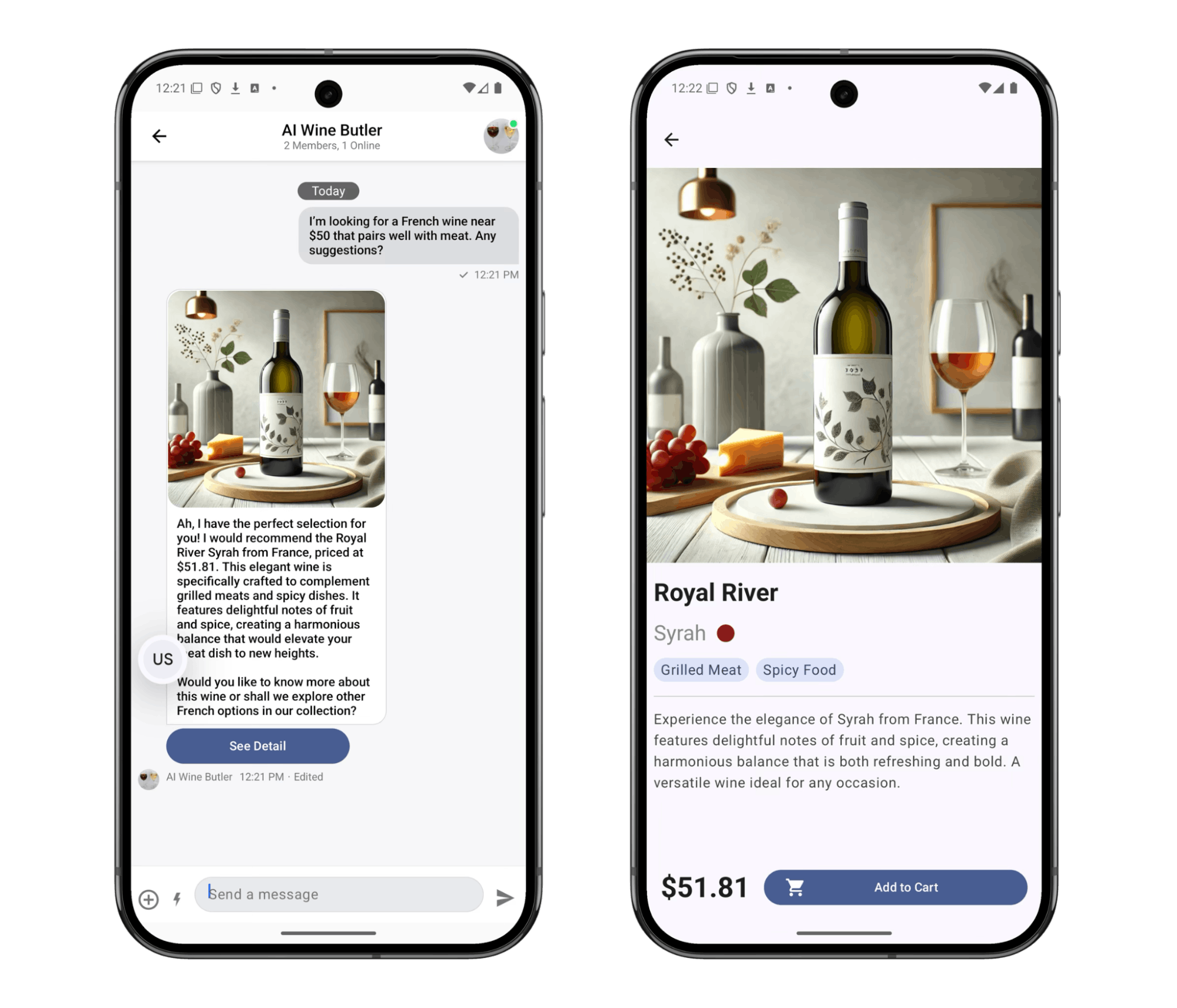

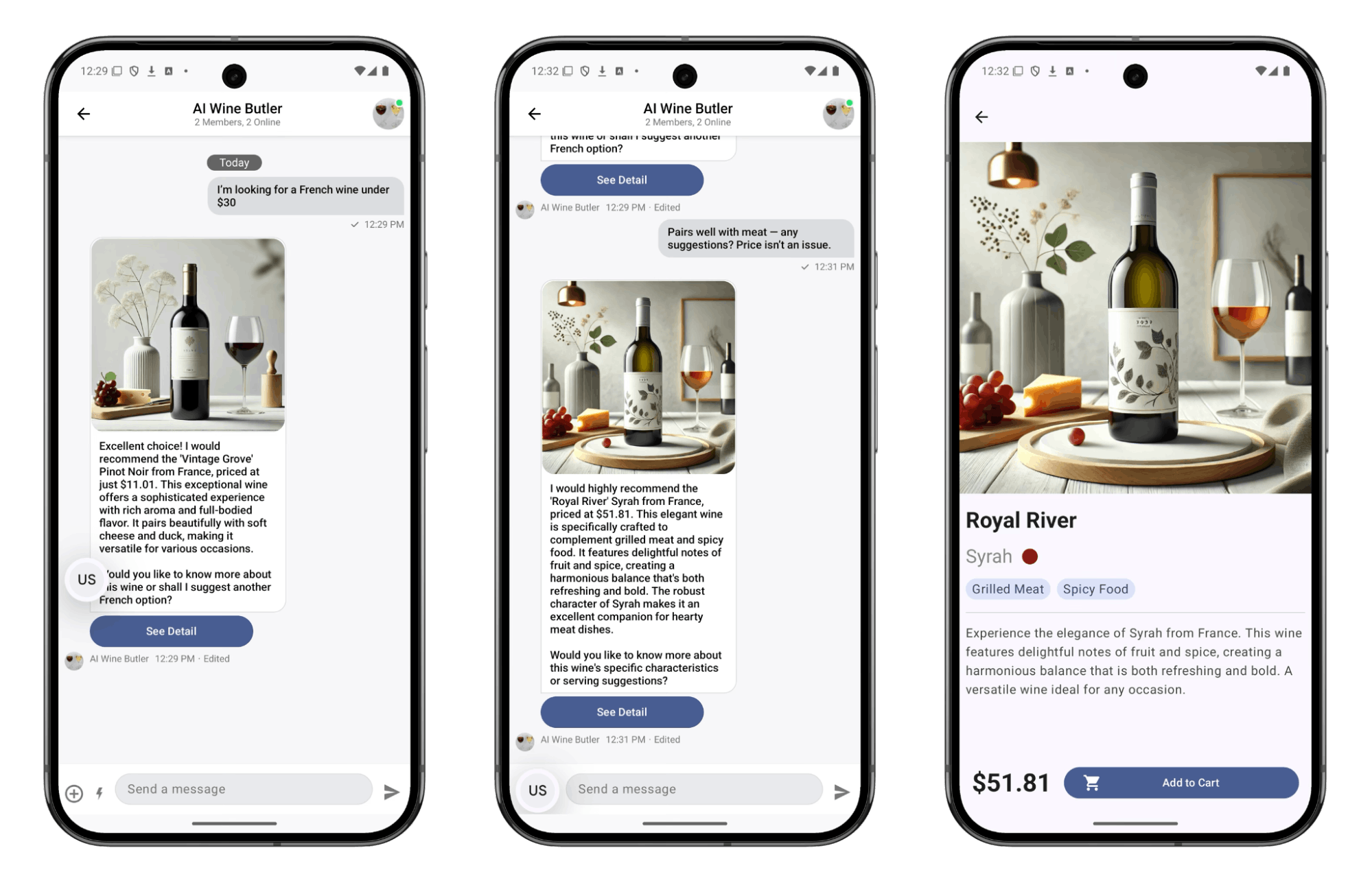

Demo Examples

Here are a few real examples of how the AI Wine Butler responded to user requests in practice.

Beyond basic Q&A, the chatbot successfully handled complex scenarios—including conditional logic, contextual awareness, and multi-turn conversations—with impressive fluidity.

Ask for General Wine Knowledge

Price-Based Recommendations

Food Pairing Suggestions

Multi-Criteria Requests

Context-Aware Follow-Ups

I first asked for a “French wine under $30” and then followed up with: “Actually, forget the price—just make sure it pairs well with meat.”

The chatbot remembered the previous context and adjusted its recommendation accordingly.

Future Improvements

While I successfully built and validated the core chatbot functionality, I’d like to explore several technical enhancements to make the system more production-ready.

Response Streaming

ChatGPT and Claude support streaming, allowing the client to receive a response token by token. This creates a smoother, more natural user experience, with text appearing as if it were typed in real time.

In this project, however, I required the AI to return a fully formatted JSON response. Due to parsing constraints, streaming wasn’t feasible. I’d like to explore ways to preserve the structured format while improving the timing and feel of AI responses.
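One direction that might be worth trying, sketched here under assumptions and not tested in this project: accumulate the streamed chunks in a buffer and pull out whatever part of the `text` field has arrived so far, so the UI can show it before the JSON is complete. `extractPartialText` is a hypothetical helper, and it assumes the first occurrence of `"text"` in the output is the top-level key.

```typescript
const SIMPLE_ESCAPES: Record<string, string> = { n: "\n", t: "\t", r: "\r", b: "\b", f: "\f" };

// Returns the decoded, possibly incomplete value of the "text" field in a partial JSON string.
function extractPartialText(buffer: string): string {
  const keyMatch = /"text"\s*:\s*"/.exec(buffer);
  if (!keyMatch) return ""; // the field has not started streaming yet

  let out = "";
  for (let i = keyMatch.index + keyMatch[0].length; i < buffer.length; i++) {
    const ch = buffer.charAt(i);
    if (ch === '"') break; // closing quote: the field is complete
    if (ch !== "\\") {
      out += ch;
      continue;
    }
    const next = buffer.charAt(i + 1);
    if (next === "") break; // escape split across chunks; wait for more data
    const simple = SIMPLE_ESCAPES[next];
    if (simple !== undefined) {
      out += simple;
    } else if (next === "u") {
      const hex = buffer.slice(i + 2, i + 6);
      if (hex.length < 4) break; // incomplete \uXXXX sequence
      out += String.fromCharCode(parseInt(hex, 16));
      i += 4;
    } else {
      out += next; // covers \" \\ and \/
    }
    i++; // skip the escaped character
  }
  return out;
}
```

Each time a chunk arrives, the handler could call this on the accumulated buffer and update the placeholder's text, then run the full JSON parse once the stream ends to fill in `wine_id` and `attachments`.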

Expanding the AI’s Tool Usage

Modern LLMs support tool use through built-in mechanisms such as function calling, API integration, and data filtering.

Applied to this project, tool use could unlock new capabilities like:

- Triggering a product search API based on user input

- Programmatically interacting with the Stream chat system

With these additions, the chatbot could go beyond static text replies and take actual, dynamic actions based on user intent—making it more powerful and interactive.
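As a rough illustration of the product search idea, a tool can be described to the model with a name, a description, and a JSON schema for its input, which is the general shape Anthropic's tool-use feature expects. Everything specific in the sketch below is hypothetical: the `search_wines` tool, its parameters, the `Wine` fields, and the local `searchWines` handler that would run when the model requests the tool.

```typescript
// Hypothetical catalog entry; field names are illustrative.
interface Wine {
  id: string;
  name: string;
  price: number;
  pairings: string[];
}

// Hypothetical tool definition in the name / description / input_schema style.
const searchWinesTool = {
  name: "search_wines",
  description: "Search the in-stock wine catalog by maximum price and food pairing.",
  input_schema: {
    type: "object",
    properties: {
      max_price: { type: "number", description: "Upper price limit in USD" },
      pairing: { type: "string", description: "Food the wine should pair with" },
    },
    required: [],
  },
};

interface SearchInput {
  max_price?: number;
  pairing?: string;
}

// Local handler that would run when the model asks for the tool; omitted criteria match everything.
function searchWines(catalog: Wine[], input: SearchInput): Wine[] {
  const pairing = input.pairing?.toLowerCase();
  return catalog.filter((wine) => {
    const withinBudget = input.max_price === undefined || wine.price <= input.max_price;
    const pairs = pairing === undefined || wine.pairings.some((p) => p.toLowerCase() === pairing);
    return withinBudget && pairs;
  });
}
```

With a tool like this, the catalog would no longer need to be embedded in the system prompt; the model would request a filtered subset only when it needs one.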

Final Thoughts

AI chatbots are no longer a novelty. But for many teams, the real challenge lies in figuring out how to integrate them practically into a product—and understanding what’s genuinely possible once they’re in place.

This project was my way of exploring that question. By embedding an AI assistant directly into an existing UI—and connecting prompts, data, and chat logic—I was able to run focused experiments and quickly validate what worked.

Tools like Stream Chat helped streamline the infrastructure side, allowing me to focus on AI behavior and the user experience.

I hope this post provides a realistic and practical reference for anyone considering AI chatbot integration—whether you're preparing for a full rollout or just starting with a small prototype.