This segment is the eleventh installment of a tutorial series focused on how to create a full-stack application using Flask and Stream. In this article, we walk through setting up our web app for deployment using Zappa and AWS Lambda. Be sure to check out the GitHub repo to follow along!

While we are using AWS for this deployment, you can easily deploy with Heroku, Docker, or any tool of your choosing.

Getting Started

Zappa is a Python tool that lets you deploy WSGI web apps such as ours as serverless functions on AWS Lambda (fronted by Amazon API Gateway), all from the command line.

What is Serverless?

With a traditional HTTP server, the server stays online 24/7 and processes requests as they come in; if the queue of incoming requests grows too large, some requests time out. With Zappa, each request is given its own virtual HTTP "server" by Amazon API Gateway, and AWS handles the horizontal scaling automatically, so requests aren't left waiting in a single server's queue. Each request then invokes your application, cached in memory in AWS Lambda, and the response is returned through Python's WSGI interface. After your app returns, the "server" goes away. Compared with more conventional deployments, this lets you scale almost without limit without setting up multiple containers or load balancers. Best of all, a low-traffic app can often run within the AWS Free Tier at no cost!
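Zappa's real request handler does a good deal more than this. The core idea can still be sketched with the standard library alone: each incoming event is translated into a WSGI `environ` dictionary, the app is called once through the standard WSGI interface, and the result is packaged back up as a response. This is a minimal illustration, not Zappa's code. `handle_event` and `hello_app` are hypothetical names, and the event keys (`httpMethod`, `path`, `body`) loosely mirror an API Gateway proxy event.

```python
import io
import sys
from wsgiref.util import setup_testing_defaults


def hello_app(environ, start_response):
    """A tiny WSGI app standing in for our Flask `app` object."""
    body = "Hello from {}".format(environ["PATH_INFO"]).encode("utf-8")
    start_response("200 OK", [("Content-Type", "text/plain"),
                              ("Content-Length", str(len(body)))])
    return [body]


def handle_event(app, event):
    """Hypothetical handler (not Zappa's): turn an API Gateway-style event
    into a WSGI environ, call the app once, and return a Lambda-proxy-style
    response dict."""
    environ = {
        "REQUEST_METHOD": event.get("httpMethod", "GET"),
        "SCRIPT_NAME": "",
        "PATH_INFO": event.get("path", "/"),
        "QUERY_STRING": "",
        "wsgi.input": io.BytesIO((event.get("body") or "").encode("utf-8")),
        "wsgi.errors": sys.stderr,
    }
    setup_testing_defaults(environ)  # fills SERVER_NAME, wsgi.version, etc.

    captured = {}
    written = []

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = headers
        return written.append  # legacy write() callable required by PEP 3333

    result = app(environ, start_response)
    try:
        body = b"".join(written) + b"".join(result)
    finally:
        if hasattr(result, "close"):  # WSGI servers must call close() if present
            result.close()

    return {
        "statusCode": int(captured["status"].split()[0]),
        "headers": dict(captured["headers"]),
        "body": body.decode("utf-8"),
    }
```

For example, `handle_event(hello_app, {"httpMethod": "GET", "path": "/feed"})` returns a dict with `statusCode` 200 and body `"Hello from /feed"`. Nothing survives between calls except what Lambda happens to keep warm in memory, which is why state such as our database has to live elsewhere.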

Preliminary Setup

This portion of the tutorial assumes that you already have an Amazon Web Services account, as well as the AWS CLI configured on your development machine. If you have not already done so, you can create an AWS account here, and download and install the AWS CLI here.
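Before moving on, it can help to confirm that `aws configure` actually wrote your credentials. The AWS CLI stores them in an INI-style file at `~/.aws/credentials`, so a minimal check needs only Python's standard `configparser`. This is a sketch under stated assumptions. `credentials_ok` and `has_default_credentials` are hypothetical helpers, and they only look at the `[default]` profile. Credentials supplied through environment variables or other named profiles won't be detected.

```python
import configparser
import os


def credentials_ok(text):
    """Hypothetical helper: return True if INI text has a [default] profile
    with both an access key ID and a secret access key set."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    if not parser.has_section("default"):
        return False
    profile = parser["default"]
    return bool(profile.get("aws_access_key_id")) and \
        bool(profile.get("aws_secret_access_key"))


def has_default_credentials(path=None):
    """Check the AWS CLI credentials file (default: ~/.aws/credentials)."""
    path = path or os.path.expanduser(os.path.join("~", ".aws", "credentials"))
    try:
        with open(path, encoding="utf-8") as handle:
            return credentials_ok(handle.read())
    except FileNotFoundError:
        return False
```

Running `aws sts get-caller-identity` is a more thorough check, since it actually calls AWS with those credentials.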

Out With The Old

To take advantage of this style of deployment, we need to adjust how our application persists data and sends email. Because our app runs in short-lived instances that are torn down when inactive, a SQLite file stored alongside the app can't persist data between sessions. To handle this, we spin up a PostgreSQL instance on AWS RDS to give us persistent data storage. Additionally, our current SMTP-based email method, which sends from a background thread, doesn't work reliably on AWS Lambda. Lambda can freeze the execution environment as soon as a response is returned, which cuts off that kind of background work. We therefore update our code to send email through AWS Simple Email Service (SES) instead.

Multi-Stream

Since we are using a new database, we have to do something with the existing data hosted on Stream. The first option is to delete all of your current data by purging each of your Stream feeds individually. Alternatively, you can set up a new Stream application with the same feed groups and move the credentials into config.py. This gives you two separate Stream instances, one for development and one for production. I have chosen the latter and placed the credentials for each in the DevelopmentConfig and ProductionConfig classes. This way, you can test freely in your development environment without affecting your production application. You can see the changes I have made here (in config.py):

```python
# ... (assumes `os` is imported and `basedir` is defined above)

class Config:
    SECRET_KEY = os.environ.get('SECRET_KEY', 'secret')
    MAIL_SERVER = os.environ.get('MAIL_SERVER', 'smtp.googlemail.com')
    MAIL_PORT = int(os.environ.get('MAIL_PORT', '587'))
    MAIL_USE_TLS = os.environ.get('MAIL_USE_TLS', 'true').lower() in \
        ['true', 'on', '1']
    MAIL_USERNAME = os.environ.get('MAIL_USERNAME')
    MAIL_PASSWORD = os.environ.get('MAIL_PASSWORD')
    OFFBRAND_MAIL_SUBJECT_PREFIX = '[Offbrand]'
    OFFBRAND_MAIL_SENDER = 'Offbrand Admin <admin@offbrand.co>'
    OFFBRAND_ADMIN = os.environ.get('OFFBRAND_ADMIN')
    SQLALCHEMY_TRACK_MODIFICATIONS = False
    SQLALCHEMY_RECORD_QUERIES = True
    OFFBRAND_RESULTS_PER_PAGE = 15

    @staticmethod
    def init_app(app):
        pass


class DevelopmentConfig(Config):
    STREAM_API_KEY = ''  # Your development Stream API key here
    STREAM_SECRET = ''  # Your development Stream secret here
    DEBUG = True
    SQLALCHEMY_DATABASE_URI = os.environ.get('DEV_DATABASE_URL') or \
        'sqlite:///' + os.path.join(basedir, 'data-dev.sqlite')


class TestingConfig(Config):
    STREAM_API_KEY = ''  # Your API key here
    STREAM_SECRET = ''  # Your secret here
    TESTING = True
    SQLALCHEMY_DATABASE_URI = os.environ.get('TEST_DATABASE_URL') or \
        'sqlite://'
    WTF_CSRF_ENABLED = False


class ProductionConfig(Config):
    STREAM_API_KEY = ''  # Your production Stream API key here
    STREAM_SECRET = ''  # Your production Stream secret here
    SQLALCHEMY_DATABASE_URI = os.environ.get('DATABASE_URL') or \
        'sqlite:///' + os.path.join(basedir, 'data.sqlite')

    @classmethod
    def init_app(cls, app):
        Config.init_app(app)

# ...
```

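Be sure to update any views and templates that request data from Stream so they use these config values (for example, `current_app.config['STREAM_API_KEY']`). Only the API key and user tokens should ever reach client-side templates; keep the secret on the server.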
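For reference, here is a minimal sketch of how the right set of Stream credentials can be picked at runtime. It assumes the common Flask pattern where a `config` dictionary maps names to config classes and the app factory chooses one using the `FLASK_CONFIG` environment variable, which we set for Lambda later in this article. The stripped-down classes and the `select_config` helper are hypothetical stand-ins, not the repo's code.

```python
import os


# Stripped-down, hypothetical stand-ins for the classes in config.py.
class Config:
    SQLALCHEMY_TRACK_MODIFICATIONS = False


class DevelopmentConfig(Config):
    DEBUG = True
    STREAM_API_KEY = 'dev-key'    # placeholder, not a real key


class ProductionConfig(Config):
    DEBUG = False
    STREAM_API_KEY = 'prod-key'   # placeholder, not a real key


# Maps the value of FLASK_CONFIG to a config class.
config = {
    'development': DevelopmentConfig,
    'production': ProductionConfig,
    'default': DevelopmentConfig,
}


def select_config(environ=os.environ):
    """Return the config class named by FLASK_CONFIG, or the default one."""
    return config[environ.get('FLASK_CONFIG') or 'default']
```

In an app factory, the chosen class would typically be loaded with Flask's `app.config.from_object(...)`. Each environment then sees only its own Stream application's credentials.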

Installing Packages

To get started, we'll install the packages we need. First is boto3, the AWS SDK for Python, which we use to interact with AWS services; in our case, that's AWS Simple Email Service (SES). In your virtual environment, run `pip install boto3`.

Our next package is psycopg2, a PostgreSQL adapter for Python that SQLAlchemy uses to talk to our database. Newer versions of psycopg2 can be difficult to package for AWS Lambda, because its compiled C extension must match Lambda's Linux environment, so we pin an older version instead. Again in your virtual environment, run `pip install psycopg2==2.7.1`.

The final library we'll install is Zappa, with `pip install zappa`.

Next Steps

Now that our libraries are installed, we need to start up the underlying services, beginning with the database. To keep our data between sessions, we need a persistent database instance; I use AWS Relational Database Service (RDS) with PostgreSQL.

DANGER WILL ROBINSON!

Some choices I make in this tutorial for the sake of simplicity range from inadvisable to downright dangerous for a public deployment, such as making the database instance publicly accessible and allowing all inbound traffic. In your own production applications, you should place your resources in a VPC (with a NAT gateway for outbound internet access) to secure your web app. However, those steps are well beyond the scope of this tutorial.

Back To Your Regularly Scheduled Broadcast

After signing in to the AWS Console, open RDS under 'Services'. Select 'Databases' in the left-hand navigation pane and click 'Create database'.

We use Standard Create with the PostgreSQL engine. Under 'Templates', be sure to select 'Free tier' so the tutorial doesn't incur any charges. Customize the DB instance identifier (I used 'offbrand-tutorial') and create your own master username and master password for the instance. Because the Free tier template already determines the DB instance size, storage should be assigned automatically. If you want a named database rather than the default `postgres` one, set an 'Initial database name' under 'Additional configuration'. Finally, under the 'Additional connectivity configuration' dropdown in the 'Connectivity' section, make the instance publicly accessible. Now you can create the database.

Once the database has finished setting up (you can track its progress on the main RDS Databases screen), we open up network access so our app can reach it. Click the link given by the DB identifier, then scroll down to the security group rules section. Select the security group for inbound traffic, scroll to the bottom of the page, and open the 'Inbound Rules' tab. Then click 'Edit Inbound Rules', enter '0.0.0.0/0' in the 'Source' field, and save the rule. These steps allow incoming connections from any IP address, so anyone with valid credentials can connect.

It is crucial to stress that this setting is very unsafe for real-world builds, especially when dealing with sensitive information such as logins or personally identifiable information. In your own production applications, restrict access to trusted sources, such as specific IP addresses or security groups.
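To see why `0.0.0.0/0` is so permissive, remember that a security group source is a CIDR block: a connection matches when its source address falls inside that block. The small sketch below uses Python's standard `ipaddress` module and ignores port and protocol for simplicity. The `source_allows` helper is hypothetical, and the sample addresses come from ranges reserved for documentation. It shows that `0.0.0.0/0` matches every IPv4 address, while a `/32` matches exactly one.

```python
import ipaddress


def source_allows(cidr, client_ip):
    """Hypothetical helper: return True if an inbound rule whose source is
    `cidr` would match a connection from `client_ip` (port/protocol ignored)."""
    return ipaddress.ip_address(client_ip) in ipaddress.ip_network(cidr)


# 0.0.0.0/0 covers the entire IPv4 address space.
print(source_allows("0.0.0.0/0", "198.51.100.23"))        # True
# A /32 rule admits only the single trusted address.
print(source_allows("203.0.113.10/32", "198.51.100.23"))  # False
print(source_allows("203.0.113.10/32", "203.0.113.10"))   # True
```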

After the security settings are configured, navigate back to the database instance screen and retrieve the endpoint address from the 'Connectivity & Security' tab. Be sure to also have your DB Name, Master Username, and Password handy as we go to set up the connection in our app.

Connecting To The Database

Heading back to our application, we can add the database instance to our production configuration (in config.py):

```python
# ...
class ProductionConfig(Config):
    # ...
    # Replace the placeholders with your RDS values.
    SQLALCHEMY_DATABASE_URI = 'postgresql://MASTER_USERNAME:PASSWORD@ENDPOINT:5432/DBNAME'
# ...
```
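SQLAlchemy expects PostgreSQL URLs in the form `postgresql://USERNAME:PASSWORD@HOST:PORT/DBNAME`. If your master password contains characters that are special in URLs, such as ':', '#' or '?', they must be percent-encoded, and SQLAlchemy's documentation suggests `urllib.parse.quote_plus` for this. Below is a minimal sketch; `build_database_uri` is a hypothetical helper, and 5432 is PostgreSQL's default port.

```python
from urllib.parse import quote_plus


def build_database_uri(username, password, endpoint, dbname, port=5432):
    """Hypothetical helper: assemble postgresql://USERNAME:PASSWORD@HOST:PORT/DBNAME,
    URL-encoding the credentials so special characters don't break parsing."""
    return "postgresql://{}:{}@{}:{}/{}".format(
        quote_plus(username), quote_plus(password), endpoint, port, dbname)
```

In ProductionConfig you could then set `SQLALCHEMY_DATABASE_URI` from this helper. Ideally, read the values from environment variables rather than committing them to config.py.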

Your database is now set up and ready to receive new data in production.

Our next step is to set up email. First, we need to register our email address with AWS SES. Navigate to the SES page under 'Services' in the AWS Console. Under 'Identity Management', select 'Email Addresses', then 'Verify a New Email Address'. Enter the email address you wish to use for your application and click 'Verify This Email Address'. Once you receive the confirmation email, click the confirmation link, and your address is added to your confirmed email addresses.

Finally, to keep accounts from being used for spam, new SES accounts are placed in a sandbox. This means you can only send email to addresses you have also verified. To leave the sandbox, you need to request production access from AWS directly (with steps outlined here).

In With The New

Once your address is confirmed and (hopefully) your account has been moved out of the sandbox, we can update the app's email function to use SES (in app/email.py):

```python
from flask import current_app, render_template
import boto3
from botocore.exceptions import ClientError


def send_email(to, subject, template, **kwargs):
    """Send a user confirmation/update email through AWS SES.

    Renders the HTML and plain-text versions of `template` and sends them
    synchronously; returns True on success and False if SES rejects it.
    """
    client = boto3.client(
        'ses',
        region_name=current_app.config['AWS_REGION_NAME'],
        aws_access_key_id=current_app.config['AWS_ACCESS_KEY_ID'],
        aws_secret_access_key=current_app.config['AWS_SECRET_ACCESS_KEY'],
    )
    try:
        response = client.send_email(
            Destination={
                'ToAddresses': [to],
            },
            Message={
                'Body': {
                    'Html': {
                        'Charset': 'UTF-8',
                        'Data': render_template(template + '.html', **kwargs),
                    },
                    'Text': {
                        'Charset': 'UTF-8',
                        'Data': render_template(template + '.txt', **kwargs),
                    },
                },
                'Subject': {
                    'Charset': 'UTF-8',
                    'Data': subject,
                },
            },
            Source='',  # Your Amazon SES-verified sender address
        )
    except ClientError as e:
        # e.g. an unverified recipient while the account is in the SES sandbox
        current_app.logger.error(e.response['Error']['Message'])
        return False
    current_app.logger.info('Email sent! Message ID: %s', response['MessageId'])
    return True
```
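One design tweak worth considering is to build the `send_email` arguments in a plain function and pass them to the client with `**`. This is a sketch, not the repo's code. It keeps the payload testable without AWS credentials or network access. The `build_ses_message` name is hypothetical. Inside the Flask function, you would pass the two `render_template` results as `html_body` and `text_body`.

```python
def build_ses_message(to, subject, html_body, text_body, source, charset="UTF-8"):
    """Hypothetical helper: return keyword arguments for an SES client's
    send_email() call, with both HTML and plain-text bodies."""
    def part(data):
        # Every SES content block carries its own charset and data.
        return {"Charset": charset, "Data": data}

    return {
        "Source": source,
        "Destination": {"ToAddresses": [to]},
        "Message": {
            "Subject": part(subject),
            "Body": {"Html": part(html_body), "Text": part(text_body)},
        },
    }
```

The call in `send_email` would then become `client.send_email(**build_ses_message(...))`, and a unit test can inspect the returned dict directly.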

This function uses a Boto3 SES client, which needs AWS credentials to access your account programmatically. You can create these credentials through the 'My Security Credentials' link under your username in the top navbar of the AWS Console.

Once there, open the 'Users' page under 'Access Management' in the left-hand navigation bar and click 'Add user' at the top.

I used 'Offbrand' as the username and selected 'Programmatic access' as the access type. Click 'Next: Permissions' to continue. On the permissions screen, choose 'Attach existing policies directly', type 'SES' in the search bar, and select the 'AmazonSESFullAccess' policy before moving on. We won't add any tags to this user, so continue to the 'Review' page and click 'Create user'. You are then shown the new user's Access Key ID and Secret Access Key. Be sure to store these securely, and add them to the app's configuration file (in config.py):

https://gist.github.com/Porter97/66bb1f6b36f57a0653b65628e88292ee

Final Steps

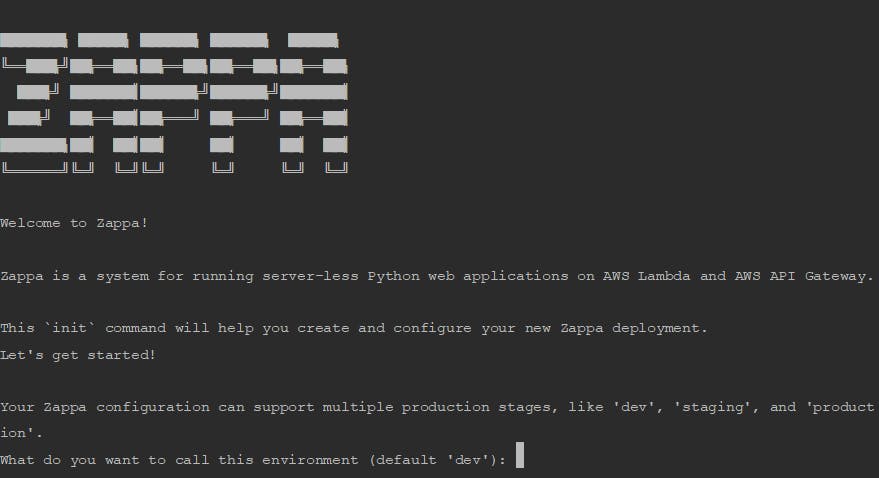

Now that our underlying functions are finished, we can deploy our app. Initialize Zappa by running `zappa init` in the CLI; it then walks you through a few setup steps.

Setting The Stage

Zappa allows you to define multiple deployment stages, such as development, testing, or production. For our version, we use 'production'.

Log Buckets

Next, you are asked for an S3 bucket where Zappa uploads your deployment package. Because S3 bucket names must be globally unique, Zappa suggests a randomly generated name and creates the bucket for you, so we can accept the default.

Frameworks

When you run `zappa init`, Zappa tries to detect which framework you are using (for example, Django or Flask) and to locate your app function automatically. Because our web app uses blueprints, the path Zappa detects is wrong in this case, so we set the app function to 'application.app'.
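The `app_function` value is a dotted path. The part before the last dot names a module importable from your project root, and the last part names the WSGI object inside it, so 'application.app' expects an `application.py` exposing a module-level `app`. The sketch below illustrates that general idea with `importlib`. `resolve_app_function` is a hypothetical helper, not Zappa's internal code.

```python
import importlib


def resolve_app_function(path):
    """Hypothetical helper: resolve a dotted 'module.attribute' path such as
    'application.app' by importing the module and fetching the attribute."""
    module_name, _, attr_name = path.rpartition(".")
    if not module_name:
        raise ValueError("expected 'module.attribute', got {!r}".format(path))
    module = importlib.import_module(module_name)
    return getattr(module, attr_name)
```

Run from the project root, `resolve_app_function('application.app')` should return your Flask app object. An `ImportError` or `AttributeError` suggests the setting points at the wrong place.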

Thinking Globally

Finally, you are asked whether you would like to deploy the app globally, to all AWS regions, to improve response times by serving users from regions closer to them. Given that this is our first deployment and that this is a tutorial, we accept the default, 'n' (no). Afterwards, double-check the zappa_settings.json file that Zappa generates.

Now that the project is initialized and zappa_settings.json has been created, we can deploy the package by running `zappa deploy production` in the CLI.

As a final step, we add an environment variable, FLASK_CONFIG, that tells the app running on AWS Lambda to use the production configuration. Add it to the newly created zappa_settings.json, which should then look something like this, and push the change with `zappa update production`:

```json
{
    "production": {
        "app_function": "application.app",
        "aws_region": "your-region",
        "profile_name": "default",
        "project_name": "offbrand-tutori",
        "runtime": "python3.6",
        "s3_bucket": "",
        "environment_variables": {
            "FLASK_CONFIG": "production"
        }
    }
}
```
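A single missing comma is enough to make zappa_settings.json invalid JSON, and that is an easy mistake to make when hand-editing the file. A quick sanity check before running `zappa update` can catch it. The sketch below is hypothetical: `check_zappa_settings` and `EXPECTED_KEYS` mirror the keys in this article's file and are not Zappa's own validation or full schema.

```python
import json

# Keys taken from this article's zappa_settings.json; a hypothetical
# checklist, not Zappa's official schema.
EXPECTED_KEYS = ("app_function", "aws_region", "project_name", "runtime", "s3_bucket")


def check_zappa_settings(text, stage="production", flask_config="production"):
    """Return a list of problems found in zappa_settings.json text for
    `stage`; an empty list means these basic checks passed."""
    try:
        settings = json.loads(text)
    except json.JSONDecodeError as exc:
        return ["invalid JSON: {}".format(exc)]

    if stage not in settings:
        return ["missing stage {!r}".format(stage)]

    stage_settings = settings[stage]
    problems = ["missing or empty {!r}".format(key)
                for key in EXPECTED_KEYS if not stage_settings.get(key)]

    # The app relies on FLASK_CONFIG to pick ProductionConfig on Lambda.
    env = stage_settings.get("environment_variables") or {}
    if env.get("FLASK_CONFIG") != flask_config:
        problems.append("FLASK_CONFIG is not {!r}".format(flask_config))
    return problems
```

Pass it the contents of the file, for example `check_zappa_settings(open("zappa_settings.json").read())`. With `s3_bucket` left empty as above, it reports that key until you fill in the bucket name Zappa generated.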

Once the deployment is updated, Zappa prints the URL where the app is hosted in the command line. You can also set up a custom domain using API Gateway and Route 53.

Final Thoughts

You have now finished building and deploying a full web application using Flask and Stream! Your app is complete with a persistent database on AWS, client-side data retrieval from Stream, in-depth user authentication and account functions, styling, and content upload. From here, you can test the functionality of your site, update the structure of your content and collections, and build out almost anything else you can imagine!

If you have made it this far, take some time to congratulate yourself for the hard work that you have put in. In the next series, I show you how to take things to the next level using React to make a Single Page Application integrating all of the functionality you have learned here, plus some incredibly cool stuff involving Stream Chat!

As always, thanks for reading, and happy coding!

Note: The next post in this series can be found here