In this guide, we'll create a basic psychotherapy app for iOS with Stream Chat, for its fully featured chat components, and Dolby.io, for its excellent audio and video capabilities. Both offerings are HIPAA compliant. When you finish following the steps, you'll have an app like the one in the image below. It will also be compatible with iOS's light and dark modes.

If you get lost while following this guide, you can get the completed Xcode project in this GitHub repo: psychotherapy-app-ios. Let's get started with our teletherapy app development!

What is Stream Chat?

Build real-time chat in less time. Rapidly ship in-app messaging with our highly reliable chat infrastructure. Drive in-app conversion, engagement, and retention with the Stream Chat messaging platform API & SDKs.

What is Dolby.io's Client SDK?

Dolby Interactivity APIs provide a platform for unified communications and collaboration. In-flow communications refers to the combination of voice, video, and messaging integrated into your application in a way that is cohesive for your end-users. This is in contrast to out-of-app communications where users must stop using your application and instead turn to third-party tools.

Requirements

Set Up Project

Create the Xcode Project

First, we open Xcode and create an iOS App project.

Make sure to select 'Storyboard' for the User Interface.

Install Dependencies

To install the Stream Chat and Dolby.io's Client SDK dependencies, we'll use CocoaPods. If you prefer Carthage, both frameworks support it as well.

In the folder where you saved the project, run pod init and add StreamChat and VoxeetUXKit to the Podfile. It should look similar to this:

https://gist.github.com/cardoso/dc73028434d48b005d50e91f67a7dda3

After you do that, run pod install, wait a bit for it to finish, and open the project via the .xcworkspace that was created.

Configure the Stream Chat Dashboard

Sign up at GetStream.io, create the application, and make sure to select development instead of production.

To make things simple for now, let's disable both auth checks and permission checks. Make sure to hit save. When your app is in production, you should keep these enabled.

For more information, visit the documentation about authentication and permissions.

Now, save your Stream credentials, as we'll need them to configure the chat in the app. Since we disabled auth and permissions, we'll only really need the key for now, but in production, you'll use the secret in your backend to implement proper authentication to issue user tokens for Stream Chat, so users can interact with your app securely.
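For production, the client side of that flow could look roughly like the sketch below. It assumes a hypothetical endpoint on your own backend (`https://example.com/chat/token`) that signs a user token with your Stream secret and returns it as JSON in a `token` field. The URL, the `userId` query parameter, and the response shape are all assumptions for illustration, not part of Stream's API.

```swift
import Foundation

// Hypothetical response shape from your own backend; not defined by Stream.
struct TokenResponse: Decodable {
    let token: String
}

// Builds the URL for a hypothetical token endpoint on your backend.
func tokenURL(forUserId userId: String) -> URL? {
    var components = URLComponents(string: "https://example.com/chat/token")
    components?.queryItems = [URLQueryItem(name: "userId", value: userId)]
    return components?.url
}

// Decodes the token string out of the backend's JSON response body.
func decodeToken(from data: Data) throws -> String {
    try JSONDecoder().decode(TokenResponse.self, from: data).token
}
```

In the app, you would request that URL (for example with URLSession), decode the response body, and use the resulting token where this tutorial uses the development token.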

As you can see, I've censored my keys. You should make sure to keep your credentials secure.

Configure the Dolby.io Dashboard

Configuring the Dolby.io dashboard is simpler. Just create an account there, and it should already set up an initial application for you.

Now, save your credentials, as we'll need them to power the audio and video streaming in the app. As with the Stream credentials, you use these for development. In production, you'll need to set up proper authentication. It's described in detail here.

Configure Stream Chat and Dolby.io's SDKs

The first step with code is to configure the Stream and Dolby SDK with the credentials from the dashboards. Open the AppDelegate.swift file and modify it, so it looks similar to this:

import UIKit
import StreamChatClient
import VoxeetSDK
import VoxeetUXKit

@UIApplicationMain
class AppDelegate: UIResponder, UIApplicationDelegate {
    ...

    func application(_ application: UIApplication, didFinishLaunchingWithOptions launchOptions: [UIApplication.LaunchOptionsKey: Any]?) -> Bool {
        // Override point for customization after application launch.

        // Configure Stream Chat with the API key from the Stream dashboard.
        Client.configureShared(.init(apiKey: "[api_key]", logOptions: .info))

        // Initialize the Dolby.io SDK and UI kit with the Dolby.io dashboard credentials.
        VoxeetSDK.shared.initialize(consumerKey: "[consumer_key]", consumerSecret: "[consumer_secret]")
        VoxeetUXKit.shared.initialize()

        return true
    }

    ...
}

That code initializes the Dolby.io and Stream Chat SDKs with credentials you got in the previous two steps.

Create the Join Screen

Let's start building the "Join" screen. This screen consists of two UIButton instances: one to join as the Patient and the other to join as the Therapist. This is, of course, an oversimplification to keep this tutorial short and get to the chat, audio, and video features faster. In a complete app, you'll need proper registration, a database, and so on. For this tutorial, the screen will look similar to the screenshot below.

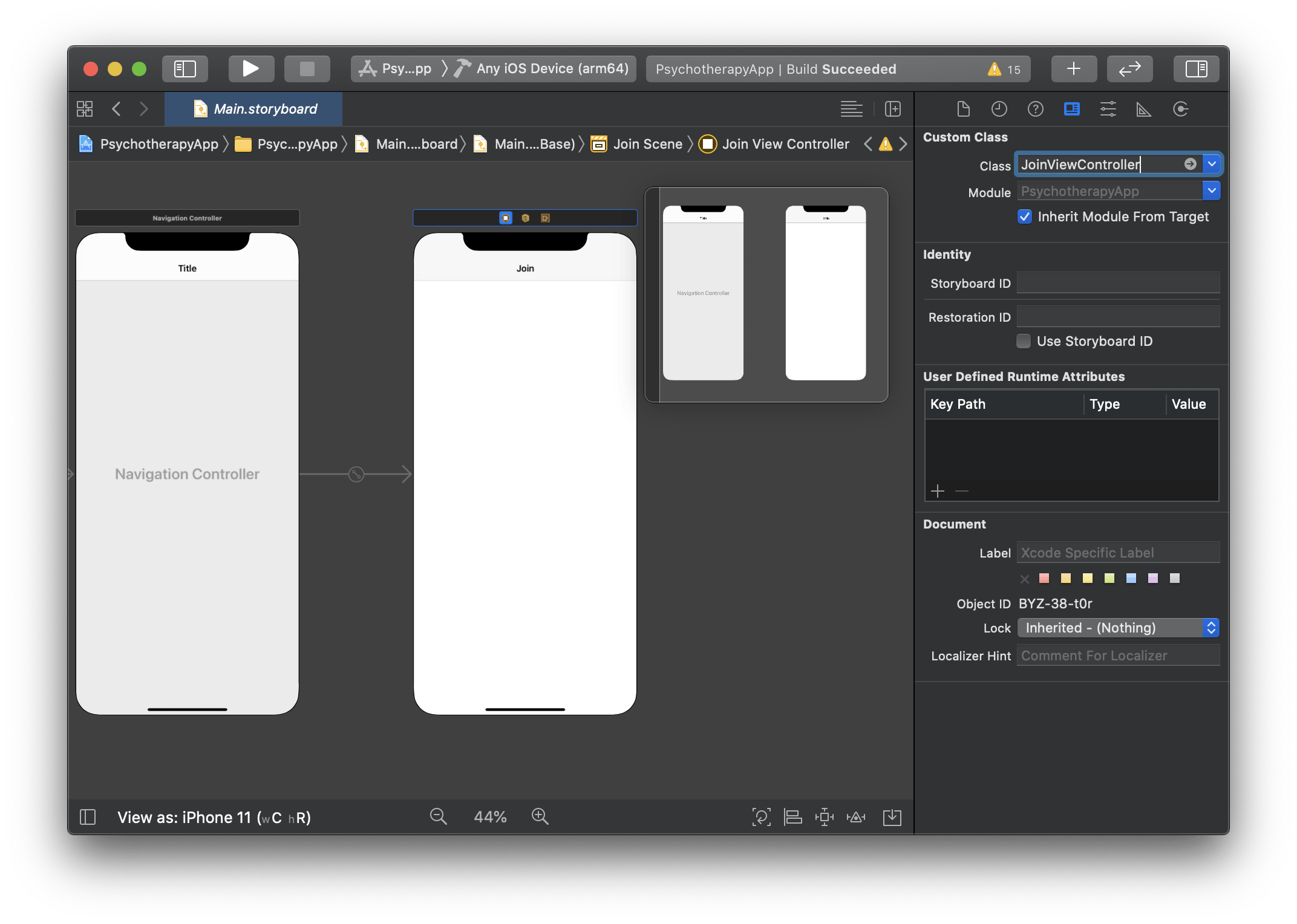

Go to the storyboard, select the default view controller, and click Editor > Embed In > Navigation Controller. That will place it under a navigation controller, which we'll use to navigate to the channel screen.

Make sure to rename ViewController to JoinViewController, so you don't get confused later on. You can do this easily by right-clicking on ViewController in ViewController.swift and selecting Refactor > Rename.

To make things simple, let's leave the storyboard like this and use only code from now on. To set up the two buttons, we need the following code in JoinViewController.swift:

import UIKit

class JoinViewController: UIViewController {
    let patientButton = UIButton()
    let therapistButton = UIButton()

    override func viewDidLoad() {
        super.viewDidLoad()
        title = "Join"

        setupViews()
        setupConstraints()
        setupHandlers()
    }
}

That code sets up the views, the constraints, and the handlers we need. Let's start by extending JoinViewController to define setupViews:

extension JoinViewController {
    func setupViews() {
        setupPatientButton()
        setupTherapistButton()
    }

    func setupPatientButton() {
        patientButton.translatesAutoresizingMaskIntoConstraints = false
        patientButton.setTitleColor(.systemBlue, for: .normal)
        patientButton.setTitle("Patient / Client 🙍‍♂️", for: .normal)
        patientButton.titleLabel?.font = .systemFont(ofSize: 32)

        view.addSubview(patientButton)
    }

    func setupTherapistButton() {
        therapistButton.translatesAutoresizingMaskIntoConstraints = false
        therapistButton.setTitleColor(.systemBlue, for: .normal)
        therapistButton.setTitle("Therapist 👩‍💼", for: .normal)
        therapistButton.titleLabel?.font = .systemFont(ofSize: 32)

        view.addSubview(therapistButton)
    }
}

That code will create the buttons and add them to the controller's view. Next, we need to define constraints between the three. Let's do this by extending JoinViewController to define setupConstraints:

extension JoinViewController {
    func setupConstraints() {
        NSLayoutConstraint.activate([
            patientButton.centerXAnchor.constraint(equalTo: view.centerXAnchor),
            patientButton.centerYAnchor.constraint(equalTo: view.safeAreaLayoutGuide.centerYAnchor, constant: -100),
            therapistButton.centerXAnchor.constraint(equalTo: view.centerXAnchor),
            therapistButton.centerYAnchor.constraint(equalTo: patientButton.centerYAnchor, constant: 100)
        ])
    }
}
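To see where those constants place each button vertically, here is a small sketch of the arithmetic in plain Swift. The `ButtonCenters` type and the sample value of 400 points for the safe area's vertical center are made up for illustration; Auto Layout performs the equivalent calculation for you.

```swift
// Illustrative only: mirrors the two centerY constraints above.
struct ButtonCenters {
    let patientY: Double
    let therapistY: Double
}

func buttonCenters(safeAreaCenterY: Double) -> ButtonCenters {
    // patientButton.centerY = safeArea.centerY - 100
    let patientY = safeAreaCenterY - 100
    // therapistButton.centerY = patientButton.centerY + 100
    let therapistY = patientY + 100
    return ButtonCenters(patientY: patientY, therapistY: therapistY)
}

let centers = buttonCenters(safeAreaCenterY: 400)
print(centers.patientY, centers.therapistY) // prints "300.0 400.0"
```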

That code centers both buttons horizontally, places the patientButton 100 points above the vertical center of the safe area, and places the therapistButton 100 points below the patientButton. Now we need to set up the handlers for when the user presses the buttons. Let's do this again by extending the controller to define setupHandlers:

import StreamChat

extension JoinViewController {
    func setupHandlers() {
        patientButton.addTarget(self, action: #selector(handlePatientButtonPress), for: .touchUpInside)
        therapistButton.addTarget(self, action: #selector(handleTherapistButtonPress), for: .touchUpInside)
    }

    @objc func handlePatientButtonPress() {
        let therapyVC = TherapyViewController()
        therapyVC.setupPatient()

        navigationController?.pushViewController(therapyVC, animated: true)
    }

    @objc func handleTherapistButtonPress() {
        let therapyVC = TherapyViewController()
        therapyVC.setupTherapist()

        navigationController?.pushViewController(therapyVC, animated: true)
    }
}

That code will make it so when the user presses the button a TherapyViewController is created and set up for the therapist or patient, depending on which button was pressed. We'll create TherapyViewController in the next step.
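The two handlers differ only in which user they set up. One way to remove that duplication, sketched here with a hypothetical `Role` enum that is not part of the original project, is to model the choice as a value and derive the user ID from it. The IDs are assumed to match the "Patient" and "Therapist" users that this tutorial uses.

```swift
// Hypothetical helper: models which side of the session the user joined as.
enum Role: String, CaseIterable {
    case patient = "Patient"
    case therapist = "Therapist"

    // The Stream Chat user ID this role maps to in this tutorial.
    var userId: String { rawValue }

    // The role of the other participant in the session.
    var counterpart: Role {
        switch self {
        case .patient: return .therapist
        case .therapist: return .patient
        }
    }
}
```

A single button handler could then take a `Role` and call the matching setup function on TherapyViewController.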

Create the Therapy Screen

Now, let's create the screen where the patient and therapist will talk via chat and where they can begin a video call. We'll start by defining TherapyViewController. It will look similar to the screenshots below.

The first step is to create a TherapyViewController.swift file and paste the code below.

import StreamChat
import StreamChatClient

class TherapyViewController: ChatViewController {
    let patient = User(id: "Patient")
    let therapist = User(id: "Therapist")

    lazy var channel = Client.shared.channel(members: [patient, therapist])

    override func viewDidLoad() {
        super.viewDidLoad()

        setupViews()
        setupHandlers()
    }
}

That code defines a subclass of ChatViewController, which provides most of the chat behavior and UI we need. It also defines the patient and therapist User objects and a Channel object between the two. These objects will be used to interact with the Stream API. On viewDidLoad, we also call setupViews and setupHandlers to set up the views and handlers needed. We'll define those functions next.

But, let's first define the setupPatient function that sets the current Stream Chat user as the patient, and the setupTherapist function that sets it as the therapist.

import StreamChatCore
import StreamChatClient

extension TherapyViewController {
    func setupPatient() {
        Client.shared.set(user: patient, token: .development)
        self.presenter = .init(channel: channel)
    }

    func setupTherapist() {
        Client.shared.set(user: therapist, token: .development)
        self.presenter = .init(channel: channel)
    }
}

Now we define setupViews to set up the views we need.

import UIKit

extension TherapyViewController {
    func setupViews() {
        setupCallButton()
    }

    func setupCallButton() {
        let button = UIBarButtonItem()
        button.image = UIImage(systemName: "phone")

        navigationItem.rightBarButtonItem = button
    }
}

Those functions will display a button which starts a call. For it to work, we'll need to define setupHandlers as well.

import Foundation

extension TherapyViewController {
    func setupHandlers() {
        setupCallButtonHandler()
    }

    func setupCallButtonHandler() {
        navigationItem.rightBarButtonItem?.target = self
        navigationItem.rightBarButtonItem?.action = #selector(callButtonPressed)
    }

    @objc func callButtonPressed() {
        startCall()
    }
}

Those functions set callButtonPressed as the function to be called when the call button is pressed, which in turn calls startCall, which we define next.

import VoxeetSDK
import VoxeetUXKit

extension TherapyViewController {
    func startCall() {
        // The alias identifies the conference both participants will join.
        let options = VTConferenceOptions()
        options.alias = "patient+therapist"

        // Create the conference, then join it once creation succeeds.
        VoxeetSDK.shared.conference.create(options: options, success: { conf in
            VoxeetSDK.shared.conference.join(conference: conf)
        }, fail: { error in
            print(error)
        })
    }
}

Finally, that function uses the Dolby.io SDK to start a conference call.
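The alias in startCall is hard-coded, which is fine for a single patient and therapist but would presumably put every pair into the same conference in a larger app. A minimal sketch of deriving the alias from the participants' IDs instead is shown here; `conferenceAlias(for:)` is a hypothetical helper, not part of the Dolby.io SDK, and the naming scheme is an assumption.

```swift
// Hypothetical helper: builds a stable conference alias from participant IDs.
// Sorting makes the result independent of who starts the call.
func conferenceAlias(for userIds: [String]) -> String {
    userIds
        .map { $0.lowercased() }
        .sorted()
        .joined(separator: "+")
}

print(conferenceAlias(for: ["Therapist", "Patient"])) // prints "patient+therapist"
```

The result could then be assigned to `options.alias` in place of the string literal.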

Configure Usage Descriptions

If you use the app now, you'll be able to chat, but pressing the call button will crash the application. That happens because we need to configure the usage descriptions for the microphone and camera in the Info.plist file. To do this, open Info.plist and set the NSMicrophoneUsageDescription and NSCameraUsageDescription keys as pictured below.

Finally, we open the app on two devices, and, from the chat, we can start a call.
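Because a missing usage description only shows up as a crash when the call starts, it can help to check for both keys during development. The sketch below takes a dictionary shaped like `Bundle.main.infoDictionary` and reports which usage descriptions are absent or empty. `missingUsageDescriptions(in:)` is a hypothetical helper, and the sample description string is a placeholder.

```swift
import Foundation

// Keys iOS requires before the app may access the microphone and camera.
let requiredUsageKeys = ["NSMicrophoneUsageDescription", "NSCameraUsageDescription"]

// Hypothetical helper: returns the required keys that are missing or empty.
func missingUsageDescriptions(in info: [String: Any]) -> [String] {
    requiredUsageKeys.filter { key in
        guard let text = info[key] as? String else { return true }
        return text.trimmingCharacters(in: .whitespaces).isEmpty
    }
}

// Sample dictionary with only the microphone description set.
let sample: [String: Any] = ["NSMicrophoneUsageDescription": "Used for therapy calls."]
print(missingUsageDescriptions(in: sample)) // prints ["NSCameraUsageDescription"]
```

In the app, you could call it with `Bundle.main.infoDictionary ?? [:]` in a debug build and log the result.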

Next Steps with the Psychotherapy App

Congratulations! You've built the basis of a functioning psychotherapy app with Stream Chat and Dolby.io. I encourage you to browse through Stream Chat's docs, Dolby.io's docs, and experiment with the project you just built. If you're interested in the business side of things, read about how Stream Chat provides HIPAA compliance and how Dolby.io provides HIPAA compliance. Good luck on your teletherapy app development!