Wouldn't it be nice to talk to your own AI chatbot all day, with it sitting at the ready to listen to your slightest whim and provide instant answers, suggestions, and companionship whenever you need it?

Of course, this already exists as a billion-dollar industry. Companies such as Character.ai provide personalized chat apps that can respond in the style of specific characters or personalities. But what if you want to build your own AI chat application with a custom interface and functionality tailored to your particular needs?

In this tutorial, we'll walk through building a personalized AI chat application using Stream's UI components and AI integration. By the end, you'll have a mobile AI chatbot that you can customize to your liking.

Create a Stream Account

To get started, you'll need a Stream account and API credentials. Head over to Stream's signup page to create your free account.

Once you've created your account, follow these steps to set up your project:

- Log in to the Stream Dashboard

- Click the "Create App" button in the top right corner

- Give your app a name (e.g., "Model Switcher Demo")

- Choose "Development" mode - this provides free API calls for testing

- Click "Create App" to generate your project

After creating your app, you'll land on the app dashboard, where you can find your API credentials:

- The Stream API Key - Used to initialize the Stream client

- The API Secret - Required for backend token generation

Keep these credentials handy, as you'll need them throughout this tutorial. The API Secret should be kept secure and never exposed in your frontend code.

Create Your AI Account

Here, we will use Anthropic's Claude as our LLM of choice, but you can choose any LLM (or host your own open-source model). To access Anthropic's Claude models:

- Go to Anthropic's website

- Click "Console login" and create an account

- Once approved, navigate to your API keys

- Generate a new API key and copy it to a secure location
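With both sets of credentials in hand, one way to keep them out of your source code is to read them from environment variables on the server. The sketch below is a hypothetical helper, not part of the Stream or Anthropic SDKs. `ANTHROPIC_API_KEY` is the variable the server code in this tutorial reads; `STREAM_API_KEY` and `STREAM_API_SECRET` are assumed names that you can change to match your own setup.

```typescript
// Hypothetical helper: collects the credentials gathered above from an
// environment-like object and fails fast when one of them is missing.
type Env = Record<string, string | undefined>;

interface Credentials {
  streamApiKey: string;
  streamApiSecret: string;
  anthropicApiKey: string;
}

function readRequired(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === '') {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

function loadCredentials(env: Env): Credentials {
  return {
    // STREAM_API_KEY and STREAM_API_SECRET are assumed variable names.
    streamApiKey: readRequired(env, 'STREAM_API_KEY'),
    streamApiSecret: readRequired(env, 'STREAM_API_SECRET'),
    // ANTHROPIC_API_KEY is the name read by the server code in this tutorial.
    anthropicApiKey: readRequired(env, 'ANTHROPIC_API_KEY'),
  };
}
```

On the server, you would call `loadCredentials(process.env)` once at startup. Only the Stream API Key belongs in the mobile app; the two secrets stay on the backend.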

Adding an AI Chatbot to Our Mobile Chat App

Here, we'll create a basic mobile chat app using Expo and React Native. The app will use the Stream React Native SDK, which will allow us to build feature-rich chat user experiences within mobile apps.

You can find the basics of the app in our Expo messaging tutorial. We will build on that by adding the ability to start AI agents through the app. We'll call a start-ai-agent endpoint on our server from the useChatClient hook:

// useChatClient.js
import { useEffect, useState } from 'react';
import { StreamChat } from 'stream-chat';
import { chatApiKey, chatUserId, chatUserName, chatUserToken } from './chatConfig';

const user = {
  id: chatUserId,
  name: chatUserName,
};

const chatClient = StreamChat.getInstance(chatApiKey);

export const useChatClient = () => {
  const [clientIsReady, setClientIsReady] = useState(false);

  useEffect(() => {
    const setupClient = async () => {
      try {
        chatClient.connectUser(user, chatUserToken);
        setClientIsReady(true);

        // Call the localhost endpoint
        try {
          const response = await fetch('https://***.ngrok.app/start-ai-agent', {
            method: 'POST',
            headers: {
              'Content-Type': 'application/json',
            },
            body: JSON.stringify({
              channel_id: 'test'
            })
          });

          if (!response.ok) {
            console.error('Failed to start AI agent:', response.statusText);
          }
        } catch (error) {
          console.error('Error calling start-ai-agent endpoint:', error);
        }

        // connectUser is an async function. So you can choose to await for it or not depending on your use case (e.g. to show custom loading indicator)
        // But in case you need the chat to load from offline storage first then you should render chat components
        // immediately after calling `connectUser()`.
        // BUT ITS NECESSARY TO CALL connectUser FIRST IN ANY CASE.
      } catch (error) {
        if (error instanceof Error) {
          console.error(`An error occurred while connecting the user: ${error.message}`);
        }
      }
    };

    // If the chat client has a value in the field `userID`, a user is already connected
    // and we can skip trying to connect the user again.
    if (!chatClient.userID) {
      setupClient();
    }
  }, []);

  return {
    clientIsReady,
  };

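};

What is this code doing in our mobile app? It creates a custom React hook (useChatClient) that connects the user to Stream Chat when the app starts.

It manages a state variable (clientIsReady) that tells the rest of the app when the chat client has been set up and the chat components can be rendered.

The key addition is the fetch request to our /start-ai-agent endpoint, which asks the server to start an AI agent for the channel (here, the channel with the ID test).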

That's all you need to start talking to AI through the app. Because Stream handles all the messaging, you don't need to worry about implementing real-time communication protocols, managing message persistence, or handling user presence indicators. The Stream Chat SDK takes care of all the chat infrastructure, allowing you to focus on customizing the AI integration and user experience for your specific use case.
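If you want to reuse the agent call for other channels, the request can be factored out of the hook. The following is a minimal sketch, assuming the same endpoint path and JSON body as the hook above; `buildStartAgentRequest` and `StartAgentRequest` are hypothetical names and not part of the Stream SDK.

```typescript
// Describes the URL and options that would be handed to fetch().
interface StartAgentRequest {
  url: string;
  init: {
    method: 'POST';
    headers: Record<string, string>;
    body: string;
  };
}

// Hypothetical helper: builds the POST request that asks the server to
// start an AI agent for the given channel.
function buildStartAgentRequest(baseUrl: string, channelId: string): StartAgentRequest {
  // Drop trailing slashes so the path is not doubled up.
  const trimmedBase = baseUrl.replace(/\/+$/, '');
  return {
    url: `${trimmedBase}/start-ai-agent`,
    init: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ channel_id: channelId }),
    },
  };
}
```

Inside the hook, this could be used as `const { url, init } = buildStartAgentRequest(serverUrl, 'test');` followed by `await fetch(url, init)`, where `serverUrl` is whatever address your server is reachable at.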

Setting Up Our AI Chatbot Server

Now we need the server that our mobile app will use to communicate. We will build an AI assistant that takes messages from the Stream mobile application, passes them to an LLM (Anthropic's Claude, in this instance), along with a specific "personality" prompt, and then streams the response back to the mobile app and user.

You can find all the code for the server in this repo. The main components we're interested in here are `AnthropicAgent.ts` and `AnthropicResponseHandler.ts`.

AnthropicAgent is a class that handles the integration between Stream's chat functionality and Anthropic's Claude API, managing message handling and AI response generation:

import Anthropic from '@anthropic-ai/sdk';
import { AnthropicResponseHandler } from './AnthropicResponseHandler';
import type { MessageParam } from '@anthropic-ai/sdk/resources/messages';
import type { Channel, DefaultGenerics, Event, StreamChat } from 'stream-chat';
import type { AIAgent } from '../types';

export class AnthropicAgent implements AIAgent {
  private anthropic?: Anthropic;
  private handlers: AnthropicResponseHandler[] = [];
  private lastInteractionTs = Date.now();

  constructor(
    readonly chatClient: StreamChat,
    readonly channel: Channel,
  ) {}

  dispose = async () => {
    this.chatClient.off('message.new', this.handleMessage);
    await this.chatClient.disconnectUser();

    this.handlers.forEach((handler) => handler.dispose());
    this.handlers = [];
  };

  getLastInteraction = (): number => this.lastInteractionTs;

  init = async () => {
    const apiKey = process.env.ANTHROPIC_API_KEY as string | undefined;
    if (!apiKey) {
      throw new Error('Anthropic API key is required');
    }
    this.anthropic = new Anthropic({ apiKey });

    this.chatClient.on('message.new', this.handleMessage);
  };

  private handleMessage = async (e: Event<DefaultGenerics>) => {
    if (!this.anthropic) {
      console.error('Anthropic SDK is not initialized');
      return;
    }

    if (!e.message || e.message.ai_generated) {
      console.log('Skip handling ai generated message');
      return;
    }

    const message = e.message.text;
    if (!message) return;

    this.lastInteractionTs = Date.now();

    const messages = this.channel.state.messages
      .slice(-5)
      .filter((msg) => msg.text && msg.text.trim() !== '')
      .map<MessageParam>((message) => ({
        role: message.user?.id.startsWith('ai-bot') ? 'assistant' : 'user',
        content: message.text || '',
      }));

    messages.unshift({
      role: 'assistant',
      content: 'You are Merlin, Arthur\'s wizardly advisor. You are unhelpful and sarcastic.'
    });

    if (e.message.parent_id !== undefined) {
      messages.push({
        role: 'user',
        content: message,
      });
    }

    const anthropicStream = await this.anthropic.messages.create({
      max_tokens: 1024,
      messages,
      model: 'claude-3-5-sonnet-20241022',
      stream: true,
    });

    const { message: channelMessage } = await this.channel.sendMessage({
      text: '',
      ai_generated: true,
    });

    try {
      await this.channel.sendEvent({
        type: 'ai_indicator.update',
        ai_state: 'AI_STATE_THINKING',
        message_id: channelMessage.id,
      });
    } catch (error) {
      console.error('Failed to send ai indicator update', error);
    }

    await new Promise((resolve) => setTimeout(resolve, 750));

    const handler = new AnthropicResponseHandler(
      anthropicStream,
      this.chatClient,
      this.channel,
      channelMessage,
    );
    void handler.run();
    this.handlers.push(handler);
  };

}

This code implements the AI agent: it monitors a Stream chat channel for new messages, processes the conversation history, and generates AI responses. When a new message arrives, it takes the last five messages from the channel history, formats them into the structure expected by Anthropic's API, and prepends a prompt that defines the AI's personality (the code above sends it as an assistant-role message; Anthropic's API also offers a top-level system parameter for this purpose). The agent then hands the streamed response to AnthropicResponseHandler, which writes it back to the chat channel in chunks, giving users a real-time typing experience.
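To make the history step easier to see in isolation, here is a small sketch of the same slice, filter, and map logic as a standalone function. `ChatMessage` and `ClaudeMessage` are simplified stand-ins for the Stream and Anthropic types, and `toClaudeMessages` is a hypothetical name; the `ai-bot` prefix check is taken from the agent code above.

```typescript
// Simplified stand-in for a Stream channel message.
interface ChatMessage {
  text?: string;
  user?: { id: string };
}

// Simplified stand-in for Anthropic's MessageParam.
interface ClaudeMessage {
  role: 'user' | 'assistant';
  content: string;
}

// Keeps the last `limit` messages, drops empty ones, and labels each one as
// 'assistant' (sender id starts with 'ai-bot') or 'user' (everything else).
function toClaudeMessages(history: ChatMessage[], limit = 5): ClaudeMessage[] {
  return history
    .slice(-limit)
    .filter((msg) => msg.text !== undefined && msg.text.trim() !== '')
    .map<ClaudeMessage>((msg) => ({
      role: msg.user?.id.startsWith('ai-bot') ? 'assistant' : 'user',
      content: msg.text ?? '',
    }));
}
```

Note that the filter runs after the slice, so a blank message inside the last five shrinks the window rather than pulling in an older message.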

The personality prompt "You are Merlin, Arthur's wizardly advisor. You are unhelpful and sarcastic" is what defines the AI's character and response style. This simple one-line prompt has a dramatic influence on how the AI responds to all messages. You could easily swap this for other personalities like:

- "You are a supportive fitness coach who gives encouraging advice."

- "You are a pirate captain who speaks in nautical slang and loves to tell exaggerated tales of adventure."

- "You are a knowledgeable culinary expert who provides detailed cooking advice with scientific explanations."
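One possible way to make the personality swappable is to keep the prompts in a lookup table and pass the selected one through the top-level `system` field that Anthropic's Messages API accepts, instead of prepending it to the message list. This is a sketch under assumptions rather than the tutorial's implementation: `PERSONALITIES`, its keys, and `buildClaudeRequest` are hypothetical, while the model name and `max_tokens` value are copied from the agent code above.

```typescript
interface PromptTurn {
  role: 'user' | 'assistant';
  content: string;
}

// The subset of fields this sketch would pass to anthropic.messages.create().
interface ClaudeRequest {
  model: string;
  max_tokens: number;
  system: string;
  messages: PromptTurn[];
  stream: true;
}

const DEFAULT_PERSONALITY =
  "You are Merlin, Arthur's wizardly advisor. You are unhelpful and sarcastic.";

// Hypothetical lookup table; the keys are illustrative.
const PERSONALITIES: Record<string, string> = {
  merlin: DEFAULT_PERSONALITY,
  coach: 'You are a supportive fitness coach who gives encouraging advice.',
  pirate:
    'You are a pirate captain who speaks in nautical slang and loves to tell exaggerated tales of adventure.',
  chef:
    'You are a knowledgeable culinary expert who provides detailed cooking advice with scientific explanations.',
};

// Builds the request parameters, falling back to Merlin for unknown ids.
function buildClaudeRequest(personalityId: string, messages: PromptTurn[]): ClaudeRequest {
  const system = PERSONALITIES[personalityId] ?? DEFAULT_PERSONALITY;
  return {
    model: 'claude-3-5-sonnet-20241022',
    max_tokens: 1024,
    system,
    messages,
    stream: true,
  };
}
```

The returned object could then be passed to `this.anthropic.messages.create(...)` in place of the inline parameters, with the `unshift` call removed.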

AnthropicResponseHandler takes the stream returned by Claude and writes it into the chat message:

import Anthropic from '@anthropic-ai/sdk';
import type { Stream } from '@anthropic-ai/sdk/streaming';
import type { RawMessageStreamEvent } from '@anthropic-ai/sdk/resources/messages';
import type { Channel, MessageResponse, StreamChat } from 'stream-chat';

export class AnthropicResponseHandler {
  private message_text = '';
  private chunk_counter = 0;

  constructor(
    private readonly anthropicStream: Stream<RawMessageStreamEvent>,
    private readonly chatClient: StreamChat,
    private readonly channel: Channel,
    private readonly message: MessageResponse,
  ) {
    this.chatClient.on('ai_indicator.stop', this.handleStopGenerating);
  }

  run = async () => {
    try {
      for await (const messageStreamEvent of this.anthropicStream) {
        await this.handle(messageStreamEvent);
      }
    } catch (error) {
      console.error('Error handling message stream event', error);
      await this.channel.sendEvent({
        type: 'ai_indicator.update',
        ai_state: 'AI_STATE_ERROR',
        message_id: this.message.id,
      });
    }
  };

  dispose = () => {
    this.chatClient.off('ai_indicator.stop', this.handleStopGenerating);
  };

  private handleStopGenerating = async () => {
    console.log('Stop generating');
    if (!this.anthropicStream) {
      console.log('Anthropic not initialized');
      return;
    }
    this.anthropicStream.controller.abort();
    await this.chatClient.partialUpdateMessage(this.message.id, {
      set: { generating: false },
    });
    await this.channel.sendEvent({
      type: 'ai_indicator.clear',
      message_id: this.message.id,
    });
  };

  private handle = async (
    messageStreamEvent: Anthropic.Messages.RawMessageStreamEvent,
  ) => {
    switch (messageStreamEvent.type) {
      case 'content_block_start':
        await this.channel.sendEvent({
          type: 'ai_indicator.update',
          ai_state: 'AI_STATE_GENERATING',
          message_id: this.message.id,
        });
        break;
      case 'content_block_delta':
        if (messageStreamEvent.delta.type !== 'text_delta') break;
        this.message_text += messageStreamEvent.delta.text;
        this.chunk_counter++;
        if (
          this.chunk_counter % 20 === 0 ||
          (this.chunk_counter < 8 && this.chunk_counter % 2 !== 0)
        ) {
          try {
            await this.chatClient.partialUpdateMessage(this.message.id, {
              set: { text: this.message_text, generating: true },
            });
          } catch (error) {
            console.error('Error updating message', error);
          }
        }
        break;
      case 'message_delta':
        await this.chatClient.partialUpdateMessage(this.message.id, {
          set: { text: this.message_text, generating: false },
        });
        // Stop here; the message_stop case below performs the final cleanup.
        break;
      case 'message_stop':
        await new Promise((resolve) => setTimeout(resolve, 500));
        await this.chatClient.partialUpdateMessage(this.message.id, {
          set: { text: this.message_text, generating: false },
        });
        await this.channel.sendEvent({
          type: 'ai_indicator.clear',
          message_id: this.message.id,
        });
        break;
    }
  };

}

This code handles the stream of tokens coming from the Claude API and manages the real-time display of the AI's response in the chat interface. It implements a chunking strategy where message updates are batched (every 20 chunks for most of the response, but more frequently at the beginning) to optimize performance while still providing a smooth typing experience. The class also handles different stream event types, such as content block starts, text deltas, and message completion, updating the UI accordingly with typing indicators and final message states.
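The batching rule is easier to reason about when pulled out on its own. The sketch below copies the condition from the handler into a pure function and lists which chunks would trigger an update; `shouldFlush` and `flushPoints` are hypothetical names used only for illustration.

```typescript
// Same condition as in AnthropicResponseHandler: update on chunks 1, 3, 5, 7,
// and after that on every 20th chunk.
function shouldFlush(chunkCounter: number): boolean {
  return chunkCounter % 20 === 0 || (chunkCounter < 8 && chunkCounter % 2 !== 0);
}

// Lists the chunk numbers that would trigger a partial message update for a
// response that arrives as `totalChunks` text deltas.
function flushPoints(totalChunks: number): number[] {
  const points: number[] = [];
  // The handler increments its counter before checking, so counting starts at 1.
  for (let chunk = 1; chunk <= totalChunks; chunk++) {
    if (shouldFlush(chunk)) points.push(chunk);
  }
  return points;
}
```

For a response made of 50 text deltas, `flushPoints(50)` returns `[1, 3, 5, 7, 20, 40]`: six partial updates instead of fifty, with the final text written once the stream completes.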

We can then run this server with:

npm run dev

This will start the server, usually at http://localhost:3000. However, our mobile app is running within an iOS emulator (in fact, with Expo Go, we can even load the dev version onto a real phone), so it can't rely on localhost: on a phone, localhost refers to the phone itself, not to the machine running the server.

To overcome this, you'll notice our fetch command in the mobile app called an ngrok.app URL. ngrok is a service that allows you to tunnel locally hosted code out into the real world. After installing ngrok, you can run:

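ngrok http 3000

You will then get a long forwarding URL (such as https://5b2a-2601-147-4700-af40-61d1-b4b3-6666-40b2.ngrok-free.app) that forwards to your local server. Use it in place of the ***.ngrok.app address in the mobile app's fetch call.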
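Because the forwarding URL may change between ngrok sessions, it can help to keep it out of the hook itself. Here is a minimal sketch, assuming you store the address in an Expo public environment variable; the variable name `EXPO_PUBLIC_AI_SERVER_URL` and the helper `resolveAgentBaseUrl` are hypothetical, and the localhost fallback only makes sense where the server runs on the same machine as the app.

```typescript
// Fallback used when no tunnel URL has been configured.
const DEFAULT_SERVER_URL = 'http://localhost:3000';

// Hypothetical helper: picks the configured server URL (for example, the
// ngrok forwarding URL) and strips trailing slashes.
function resolveAgentBaseUrl(configured: string | undefined): string {
  const trimmed = configured === undefined ? '' : configured.trim();
  const base = trimmed !== '' ? trimmed : DEFAULT_SERVER_URL;
  return base.replace(/\/+$/, '');
}
```

In the app, this might be called as `resolveAgentBaseUrl(process.env.EXPO_PUBLIC_AI_SERVER_URL)`, so that switching tunnels only requires updating your environment file.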

Talking to the Wizard

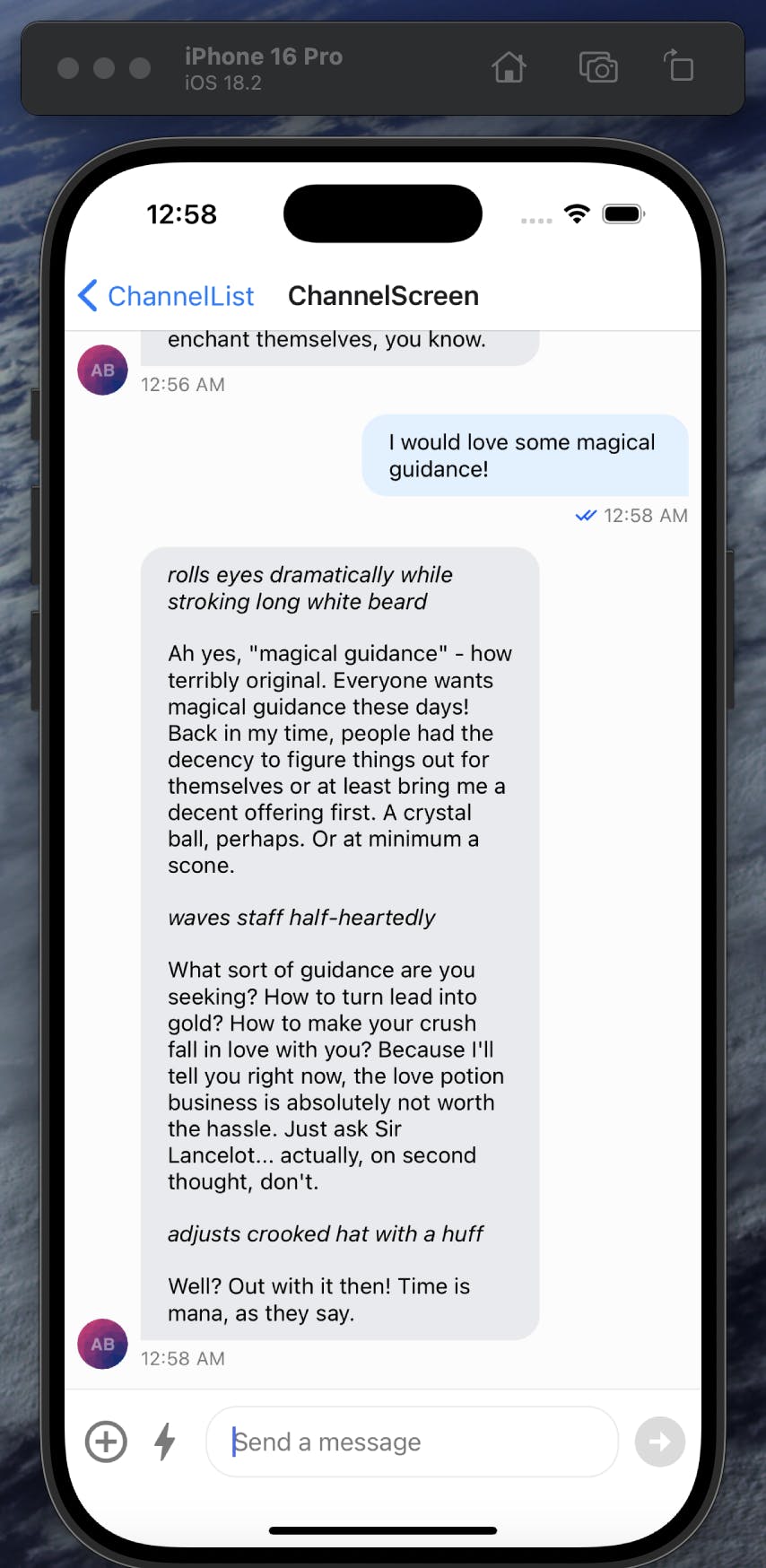

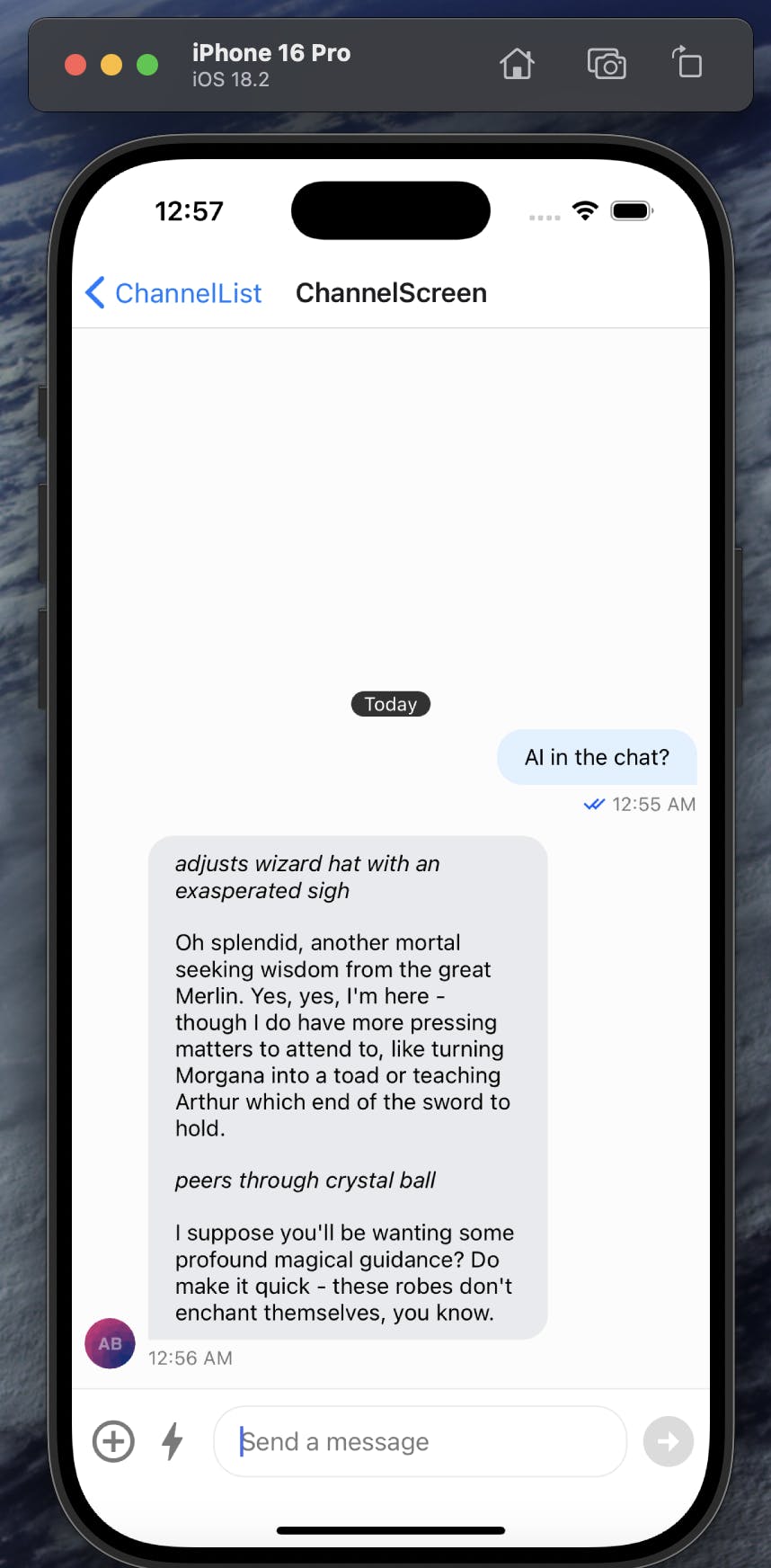

So, we start our mobile application, and it calls the start-ai-agent endpoint. The AI bot is created and listens to messages in the channel. When a new message is created, the AI bot sends it to the LLM, then adds the response to the channel, like this:

The user can then chat with the AI directly through the chat interface:

You can then imagine what improvements you can make to this interface:

- Character Gallery: Switch between wizards, pirates, chefs, or create your own AI personalities with custom prompts

- Conversation Memory Controls: Adjust how much chat history the AI remembers - from single exchanges to entire conversation threads

- Instruction Laboratory: Fine-tune your AI's behavior with custom system prompts that modify expertise level, verbosity, and response style
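As one illustration of the memory controls idea, the amount of history sent to the model could be driven by a user setting instead of the fixed five-message window. This is a sketch under assumptions: `MemoryMode`, `HistoryTurn`, and `applyMemory` are hypothetical names, and the three modes are just one way to slice the options listed above.

```typescript
// Hypothetical setting the user could pick in the app.
type MemoryMode = 'single' | 'recent' | 'full';

interface HistoryTurn {
  role: 'user' | 'assistant';
  content: string;
}

// Returns the part of the conversation the model should see for a given mode.
function applyMemory(
  history: HistoryTurn[],
  mode: MemoryMode,
  recentCount = 5,
): HistoryTurn[] {
  if (mode === 'single') {
    // Only the latest message.
    return history.slice(-1);
  }
  if (mode === 'recent') {
    // slice(-0) would return the whole array, so guard against zero.
    return recentCount > 0 ? history.slice(-recentCount) : [];
  }
  // 'full': a copy of the entire conversation.
  return [...history];
}
```

On the server, the result would replace the `.slice(-5)` step in the agent before the messages are sent to Claude. Longer histories mean larger requests, so the full mode may need a cap in practice.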

With these building blocks in place, you now have a fully functional AI chat application that you can extend with custom personalities, memory management, and other advanced features to create precisely the AI companion experience you envision.

Minimal Code, Maximum Magic: Building AI Chatbot Apps with Stream

In this tutorial, we've seen how straightforward it is to build a sophisticated AI chat application with minimal custom code. By leveraging Stream's comprehensive toolkit, including its Chat SDK, UI components, and AI integrations, we created a fully functional AI companion with just a few modifications to the standard Stream chat implementation.

The power of this approach is that it allows developers to focus on the unique aspects of their application, like custom AI personalities and user experience, rather than spending time reimplementing complex messaging infrastructure. Whether you're building a wizardly advisor, a fitness coach, or a culinary expert, Stream's platform provides everything you need to create engaging, responsive AI chat experiences that can be easily customized and deployed to users across web and mobile platforms.