Content moderation has undergone a major shift in recent years.

What once felt like an opaque black box to users has steadily moved toward openness, clarity, and accountability. Historically, a user who had content removed or an account restricted rarely received meaningful information about why the decision happened, who made it, or whether it could be challenged. This lack of clarity left people frustrated, confused, and sometimes unfairly penalized.

Today, the expectations are different. Regulatory frameworks such as the EU Digital Services Act, along with emerging regulations in the United States and elsewhere, increasingly push platforms to offer clear reasoning, consistent explanations, and accessible appeal routes.

But even beyond legal pressure, users now assume that platforms will provide fairness and transparency in the same way that they expect reliability and uptime.

The result? Product teams are balancing two essential goals. They must keep communities safe by detecting and removing harmful content, while also maintaining user trust through clarity and predictable processes.

Understanding Moderation Mistakes and Their Impact

Before discussing appeals and transparency, let's look at both why moderation mistakes occur and why acknowledging them matters.

The Reality of AI and Human Error

No matter how advanced a system becomes, mistakes remain inevitable. AI models can misinterpret intent, struggle with sarcasm, and fail to understand reclaimed slurs or evolving slang.

Cultural context adds further complexity since language varies across regions and communities. Even human reviewers are not immune to misinterpretation, especially when they must process content quickly or lack sufficient context.

Harmful content also evolves faster than moderation rules and models can adapt. Scammers change tactics, new symbols appear, and coded language shifts. This constant evolution requires ongoing attention and updates, and each update introduces new opportunities for error.

Why Acknowledging Mistakes Builds Credibility

Users care far more about fairness than perfection. When a platform acknowledges that errors can happen and provides a clear path to challenge them, it builds credibility. Conversely, a system that silently removes content or issues penalties with vague explanations appears arbitrary, even if its decisions are accurate most of the time.

Modern apps operate more like public utilities. They are expected to be stable, consistent, and understandable. When platforms take responsibility for mistakes and explain how decisions are made, they demonstrate respect for their users. This openness also provides internal value by highlighting patterns that can help teams refine models, strengthen reviewer guidelines, and improve policy language.

Real World Examples

Across the industry, high-profile mistakes have highlighted the need for clear appeals and explanations.

YouTube creators have experienced demonetization on safe videos when certain keywords were mistakenly tied to harmful themes. During the early months of the COVID-19 pandemic, Instagram struggled with false flags on medical discussions and updates. The company regained trust by quickly acknowledging the issue, publishing guidance, and adjusting its models.

Each case illustrates that mistakes inevitably happen, and transparency is a necessary part of maintaining credibility.

What Is a Moderation Appeal?

A moderation appeal is a formal request from a user asking a platform to review a moderation action that they believe may be incorrect. This request can apply to a removed message, a blocked image, a flagged video, a ranking downgrade, or even an account restriction.

Appeals are the primary safeguard that ensures users are not unfairly penalized because of an algorithmic error, a misunderstanding, or a lack of context.

Designing a Fair and Efficient Appeals Flow

In a well-designed appeals system, users can easily contest decisions, and moderators can review cases efficiently and consistently.

What an Effective Appeals Process Looks Like

A good appeals flow should feel intuitive and supportive rather than bureaucratic or intimidating. Users should immediately understand why content was flagged and what steps they can take to challenge the decision. The process should be accessible directly within the app, not buried behind email forms or customer support tickets.

Clear expectations also matter. Users appreciate knowing how long a review might take and whether it will be handled by an automated check or a human reviewer. Streamlining the experience through inline prompts or in-app safety centers reduces friction and frustration. When platforms make it easy for users to appeal decisions, they signal a commitment to fairness.

When Appeals Should Trigger Human Review

Some appeals require human judgment, especially when they involve nuance or have a high impact. Account suspensions, repeat violations, or any enforcement that could meaningfully change a user's ability to participate in the community should be reviewed by a trained moderator. Cases that involve cultural nuance, ambiguous language, or low AI confidence scores also benefit from human insight.

Establishing consistent reviewer guidelines helps keep human decisions stable across reviewers and reduces the influence of individual bias.
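The routing criteria above can be expressed as a simple rule that runs before any appeal is assigned. The sketch below is a minimal illustration, not a prescribed policy: the `Appeal` fields, the action names, and the 0.8 confidence cutoff are hypothetical assumptions.

```python
from dataclasses import dataclass

# Hypothetical action names treated as high impact: they change a user's
# ability to participate in the community.
HIGH_IMPACT_ACTIONS = {"account_suspension", "account_ban", "feature_restriction"}

# Hypothetical cutoff: decisions the model made with less confidence than
# this are routed to a person.
LOW_CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Appeal:
    action: str              # enforcement being appealed, e.g. "message_removal"
    model_confidence: float  # AI confidence in the original decision, 0.0 to 1.0
    prior_violations: int    # earlier confirmed violations on the account
    needs_context: bool      # tagged as culturally nuanced or ambiguous


def requires_human_review(appeal: Appeal) -> bool:
    """Return True when an appeal should be reviewed by a trained moderator."""
    if appeal.action in HIGH_IMPACT_ACTIONS:
        return True
    if appeal.prior_violations > 0:  # this is a repeat violation
        return True
    if appeal.needs_context:
        return True
    return appeal.model_confidence < LOW_CONFIDENCE_THRESHOLD


print(requires_human_review(Appeal("message_removal", 0.97, 0, False)))     # False
print(requires_human_review(Appeal("account_suspension", 0.99, 0, False)))  # True
```

Any single condition is enough to send a case to a person, so the order of the checks does not change the result. Appeals that return `False` can go through an automated check first.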

Automating Parts of Appeals Without Losing Humanity

Automation can help moderation teams stay efficient while maintaining a fair process. Automatic notifications, system-generated appeal records, and periodic re-evaluation of decisions whenever AI models are updated all reduce manual workload.

However, automation should complement human judgment, not replace it. Hybrid moderation workflows that combine automated triage with human evaluation in complex cases create a balanced and scalable approach.
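One way to combine periodic re-evaluation with hybrid triage is to re-score open appeals whenever a new model version ships: clear reversals are resolved automatically, and everything else stays in the human queue. This is a sketch under assumptions: `score_fn` stands in for whatever classifier a team runs, and the 0.2 reversal threshold and record fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class PendingAppeal:
    appeal_id: str
    content: str  # the text that was originally actioned


def reevaluate(
    appeals: List[PendingAppeal],
    score_fn: Callable[[str], float],
    reverse_below: float = 0.2,
) -> Tuple[List[str], List[str]]:
    """Re-score open appeals with an updated model.

    Returns (auto_reversed_ids, human_queue_ids). Automation only resolves
    appeals in the user's favor; it never denies one, so every case that is
    not a clear reversal stays with a human reviewer.
    """
    auto_reversed: List[str] = []
    human_queue: List[str] = []
    for appeal in appeals:
        # Hypothetical rule: a very low violation score from the new model
        # is treated as a clear false positive of the old one.
        if score_fn(appeal.content) < reverse_below:
            auto_reversed.append(appeal.appeal_id)
        else:
            human_queue.append(appeal.appeal_id)
    return auto_reversed, human_queue


# Toy stand-in for the updated model: flags only one phrase.
def new_model(text: str) -> float:
    return 0.9 if "buy followers" in text else 0.05


appeals = [
    PendingAppeal("a1", "that joke absolutely killed me"),
    PendingAppeal("a2", "buy followers cheap, link in bio"),
]
print(reevaluate(appeals, new_model))  # (['a1'], ['a2'])
```

Keeping denials out of the automated path is a deliberate choice here: automation can only decide in the user's favor, which keeps it in a supporting role rather than replacing human judgment.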

The Importance of a Statement of Reasons

After establishing how users can challenge decisions through appeals, the next step is ensuring they understand those decisions in the first place.

That's where a clear Statement of Reasons becomes essential.

What It Is and Why It Matters

A Statement of Reasons is an explanation provided to the user describing why a moderation action occurred. It includes the rule that was violated, how the decision was made, and what impact the action has on the user.

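Under Article 17 of the Digital Services Act, hosting services, including online platforms, must provide this explanation to affected users in the EU when they remove or restrict content or accounts. Increasingly, it is viewed as a global standard for fair and transparent moderation.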

What a Good Statement of Reasons Includes

Clear Statements of Reasons provide specifics rather than vague generalities.

They:

- Explain exactly which rule was broken
- Categorize the violation
- Tie it to the community guidelines
- Indicate whether the decision came from AI or a human reviewer
- Describe how the violation affects the user's content or account

They also offer a clear path for submitting an appeal. When users understand exactly what happened, they are more likely to accept the decision or provide helpful context in their appeal.
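A Statement of Reasons maps naturally onto a small record with one field per element listed above, plus the appeal link. The sketch below is illustrative only: the field names, the example.com URLs, and the plain-text layout are assumptions, and it does not claim to cover everything the DSA formally requires.

```python
from dataclasses import dataclass


@dataclass
class StatementOfReasons:
    rule: str           # the specific rule that was broken
    category: str       # violation category, e.g. "Spam"
    guideline_url: str  # link to the matching community guideline section
    automated: bool     # True if AI made the decision, False if a human did
    impact: str         # how the action affects the user's content or account
    appeal_url: str     # where the user can contest the decision

    def render(self) -> str:
        """Format the statement as a plain-language notice, one element per line."""
        decided_by = "an automated system" if self.automated else "a human reviewer"
        return "\n".join([
            f"Rule broken: {self.rule}",
            f"Category: {self.category}",
            f"Guideline: {self.guideline_url}",
            f"Decided by: {decided_by}",
            f"Impact: {self.impact}",
            f"Appeal: {self.appeal_url}",
        ])


notice = StatementOfReasons(
    rule="No unsolicited promotional links",
    category="Spam",
    guideline_url="https://example.com/guidelines#spam",
    automated=True,
    impact="The message is hidden from other users.",
    appeal_url="https://example.com/appeals/123",
)
print(notice.render())
```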

How This Improves User Outcomes

Providing clear explanations reduces confusion and empowers users to correct mistakes, adjust their behavior, or challenge the decision when needed. It helps them understand what a platform expects and prevents repeat issues by replacing guesswork with clarity. Ultimately, transparency strengthens trust and leads to healthier long-term community dynamics.

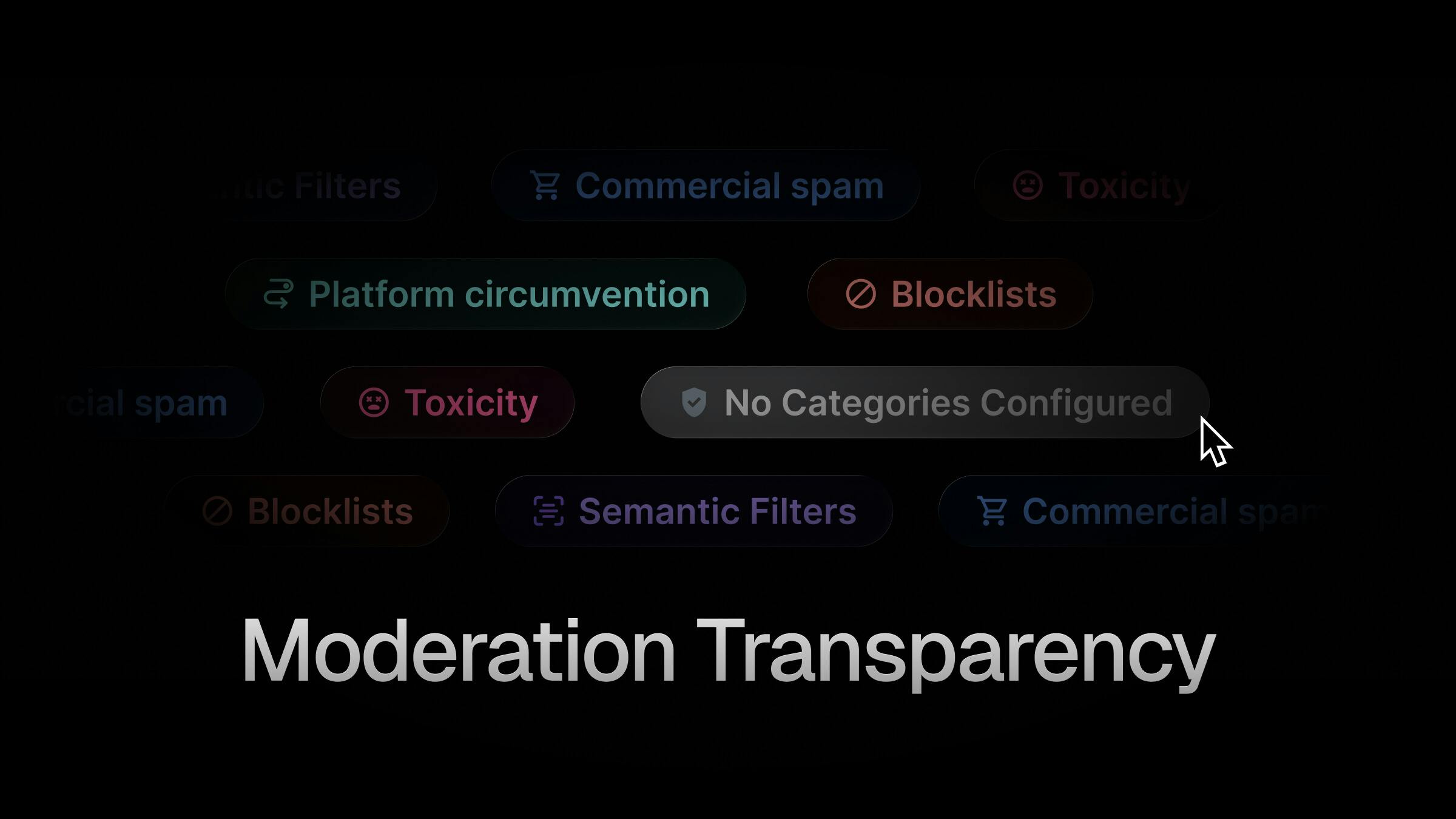

Implementing User Transparency

Once your appeals flow and explanations are in place, it's time to build broader transparency practices that help users understand how moderation works across the entire platform.

Transparency Before Enforcement

Effective transparency begins before any enforcement action occurs. Community guidelines should be written in plain language and include concrete examples of what is allowed and what is not. When users can clearly see how rules apply to real scenarios, they are more likely to follow them. Severity levels and consequences should also be explained so users understand what to expect at each step.
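Severity levels and their consequences can be published as a simple ladder. The sketch below assumes three hypothetical tiers and made-up penalties; the point is that keeping the ladder as data lets the same table drive both the public guidelines page and the enforcement code, so what users read matches what the system does.

```python
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1     # e.g. mild spam
    MEDIUM = 2  # e.g. targeted insults
    HIGH = 3    # e.g. credible threats


# Hypothetical ladder: consequence for the 1st, 2nd, 3rd+ violation per tier.
LADDER = {
    Severity.LOW: ["warning", "content removal", "24-hour posting limit"],
    Severity.MEDIUM: ["content removal", "7-day posting limit", "account review"],
    Severity.HIGH: ["account suspension"],
}


def consequence(severity: Severity, prior_violations: int) -> str:
    """Return the published consequence for the next violation at this tier."""
    steps = LADDER[severity]
    # Past the end of the ladder, the last (strictest) step keeps applying.
    return steps[min(prior_violations, len(steps) - 1)]


print(consequence(Severity.LOW, 0))  # warning
print(consequence(Severity.LOW, 5))  # 24-hour posting limit
```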

Transparency During Enforcement

When a moderation action occurs, timing and clarity are crucial. Users should receive immediate notifications with consistent language across emails, alerts, and in-app messages. Avoiding vague statements and providing specific explanations helps reduce confusion and encourages cooperation.
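One way to keep wording consistent across channels is to generate every message from a single core sentence and let each channel add only its own framing. The channel names, message templates, and fields below are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class EnforcementNotice:
    rule: str        # the rule that was broken
    action: str      # what happened, phrased for the user, e.g. "hid your message"
    appeal_url: str  # where to contest the decision

    def core_text(self) -> str:
        """The single shared wording that every channel must include."""
        return f"We {self.action} because it broke this rule: {self.rule}."


def render_all(notice: EnforcementNotice) -> Dict[str, str]:
    """Build the email, push alert, and in-app message from the same core text."""
    core = notice.core_text()
    return {
        "email": f"Subject: Moderation notice\n\n{core}\nYou can appeal here: {notice.appeal_url}",
        "push": core,  # alerts are short, so they carry the core text only
        "in_app": f"{core} Tap to appeal: {notice.appeal_url}",
    }


messages = render_all(EnforcementNotice(
    rule="No unsolicited promotional links",
    action="hid your message",
    appeal_url="https://example.com/appeals/123",
))
print(messages["push"])
# We hid your message because it broke this rule: No unsolicited promotional links.
```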

Transparency After Enforcement

Users benefit from having access to a history of their moderation events. This includes past violations, explanations of how penalties affect ranking or visibility, and even access to aggregate transparency reports that show broader platform trends. This level of openness creates a predictable environment where users feel informed rather than surprised.
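A user-facing moderation history can be built from a plain event log, with each entry labeled as active, expired, or overturned on appeal. The sketch below is a minimal example; the event fields and the status labels are assumptions, not a standard schema.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class ModerationEvent:
    occurred_at: datetime
    rule: str
    action: str                     # e.g. "content removal", "reduced visibility"
    expires_at: Optional[datetime]  # None means the penalty does not expire
    overturned: bool = False        # True if an appeal reversed the decision


def status(event: ModerationEvent, now: datetime) -> str:
    """Classify an event as overturned, active, or expired at time `now`."""
    if event.overturned:
        return "overturned on appeal"
    if event.expires_at is None or event.expires_at > now:
        return "active"
    return "expired"


def history_view(events: List[ModerationEvent], now: datetime) -> List[str]:
    """Render a user's moderation history, newest first."""
    ordered = sorted(events, key=lambda e: e.occurred_at, reverse=True)
    return [
        f"{e.occurred_at:%Y-%m-%d} {e.action} ({e.rule}): {status(e, now)}"
        for e in ordered
    ]


now = datetime(2024, 6, 1)
events = [
    ModerationEvent(datetime(2024, 5, 1), "Spam", "reduced visibility", datetime(2024, 5, 8)),
    ModerationEvent(datetime(2024, 5, 20), "Harassment", "content removal", None),
    ModerationEvent(datetime(2024, 5, 25), "Spam", "24-hour posting limit",
                    datetime(2024, 5, 26), overturned=True),
]
for line in history_view(events, now):
    print(line)
```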

Best Practices for Moderation Transparency

To build a transparent moderation system:

- Write readable, example-based policies that use plain language, concrete examples, and clear definitions so users understand what's allowed and what triggers enforcement.
- Establish structured violation categories that group offenses into predictable tiers, helping users and reviewers understand how decisions map to rule severity.
- Provide clear context in every notification by explaining which rule was violated, why the decision was made, and how it affects the user's content or account, avoiding vague, biased, or generic messaging.
- Review appeals data regularly to identify patterns in overturned decisions, refine model thresholds, update policy language, and strengthen reviewer training.
- Maintain detailed, up-to-date internal reviewer guidelines so human reviewers handle edge cases consistently and without bias.
- Use transparency to reinforce trust by sharing aggregated insights, such as transparency reports or user safety metrics, that show users how moderation works across your platform.
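Reviewing appeals data can start with something as simple as an overturn rate per violation category. The sketch below assumes each resolved appeal is reduced to a hypothetical `(category, overturned)` pair; the 30% threshold and the 20-appeal minimum are illustrative values, not recommendations.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def overturn_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, Tuple[int, float]]:
    """Map each violation category to (appeal count, share overturned)."""
    totals: Counter = Counter()
    overturned: Counter = Counter()
    for category, was_overturned in records:
        totals[category] += 1
        overturned[category] += int(was_overturned)
    return {c: (n, overturned[c] / n) for c, n in totals.items()}


def categories_to_review(
    records: Iterable[Tuple[str, bool]],
    threshold: float = 0.3,
    min_appeals: int = 20,
) -> List[str]:
    """Categories overturned often enough to suggest a miscalibrated model
    threshold, unclear policy text, or inconsistent reviewer guidance."""
    return sorted(
        c for c, (n, rate) in overturn_rates(records).items()
        if n >= min_appeals and rate >= threshold
    )


records = (
    [("spam", False)] * 30
    + [("satire", True)] * 12 + [("satire", False)] * 13
    + [("threats", True)] * 5
)
print(categories_to_review(records))  # ['satire']
```

The `min_appeals` floor keeps a handful of appeals from triggering a review, which is why `threats` is not flagged here despite every one of its five toy appeals being overturned.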

How Stream Makes This Easy for Product Teams

Stream provides a complete appeals workflow that can be integrated directly into your app.

When a user disagrees with a moderation action, they can submit an appeal with a single API call. Each appeal is automatically linked to the original violation, so moderators can review it with full context. Developers can offer appeal options directly through inline prompts or build a dedicated user safety center without needing to create custom backend tools.

What Users Experience

For users, the result is a simple and transparent process. Appeals are submitted directly within the app and do not require email threads or guesswork. Users can provide additional context, clarify misunderstandings, or explain how content was intended. This direct and respectful process encourages fairness and reduces frustration.

What Moderators See

For moderators, Stream's dashboard provides instant visibility into appeals. Each case includes the flagged content, the violation details, and the user's message. Moderators can approve, deny, or escalate appeals, and they can add internal notes for auditing and training. Once a decision is made, the outcome is automatically synced back to the user through the app.
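The moderator actions described above map onto a small state machine. The sketch below is a generic illustration and not Stream's SDK or dashboard API: the class, the status names, and the `notify` callback are hypothetical, with `notify` standing in for whatever mechanism pushes the outcome back to the user.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class Status(Enum):
    PENDING = "pending"
    ESCALATED = "escalated"
    APPROVED = "approved"  # appeal upheld: the original action is reversed
    DENIED = "denied"      # the original action stands


# Allowed transitions; final decisions cannot be changed silently.
TRANSITIONS = {
    Status.PENDING: {Status.APPROVED, Status.DENIED, Status.ESCALATED},
    Status.ESCALATED: {Status.APPROVED, Status.DENIED},
    Status.APPROVED: set(),
    Status.DENIED: set(),
}


@dataclass
class AppealCase:
    appeal_id: str
    violation_id: str  # link back to the original moderation action
    user_message: str  # the user's explanation or added context
    status: Status = Status.PENDING
    notes: List[str] = field(default_factory=list)  # internal, for audit and training

    def decide(self, new_status: Status, note: str,
               notify: Callable[[str, Status], None]) -> None:
        """Apply a moderator action, record a note, and sync final outcomes."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot move from {self.status.value} to {new_status.value}")
        self.status = new_status
        self.notes.append(note)
        if new_status in (Status.APPROVED, Status.DENIED):
            notify(self.appeal_id, new_status)  # push the outcome back to the user


sent = []
case = AppealCase("ap-1", "v-42", "I was quoting a news headline, not making a threat.")
case.decide(Status.ESCALATED, "Ambiguous; needs policy lead", lambda i, s: sent.append((i, s)))
case.decide(Status.APPROVED, "Quoted news coverage; reverse removal", lambda i, s: sent.append((i, s)))
```

Escalation records a note without notifying the user, because only a final decision changes what the user sees.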

Why This Matters

Appeals create a safety net that corrects false positives and misinterpretations. Built-in tools reduce operational overhead, help teams comply with regulations such as the DSA, and increase user trust by showing that moderation decisions are neither arbitrary nor permanent. Users who see fairness in action are more likely to remain loyal and engaged over the long term.

Transparency Is No Longer Optional

Modern content moderation depends on clarity, fairness, and collaboration between AI systems and human reviewers. Trust and Safety is now a central product priority rather than a back-office function. A well-built appeals process paired with open communication strengthens community trust, reduces user churn, and helps platforms create safer and more welcoming environments.