Note: This guide was originally published when Stream relied on third-party services for content moderation. Stream now offers its own moderation AI platform—including AI-powered message review, profanity filtering, and moderation dashboards. While the Hive AI integration below remains a valid reference, you may find it faster and easier to get started with Stream's native moderation tools.

Billions of users access mobile and web applications every day and generate countless hours of content, from live-stream videos to real-time messages and more. For the teams that build and maintain these services, enforcing some level of content moderation is a must.

But how can you keep up with the constant influx of user-generated content? That’s where Hive, a provider of enterprise cloud-based AI models, can help. Their cloud-hosted deep learning models enable companies to automate the interpretation of video, images, audio, and text, making content moderation scalable, secure, and quicker to deploy than an in-house solution.

Hive’s content moderation models are also easy to integrate and compatible with services like Stream Chat and Feeds. To show you what an integration with Hive might look like for your organization, we’ve created a quick-and-easy integration guide using Hive’s Text and Visual Moderation APIs and Stream Chat.

What Is Content Moderation?

Content moderation is the process of screening user-generated online content for material that violates community standards and taking enforcement actions like removing the content or banning the user who posted it. Platforms of all types rely on some form of moderation to protect themselves and their users from harmful or inappropriate online content.

Beyond a basic obligation to provide a safe and trustworthy online environment, there are three major benefits to content moderation:

- Retain and grow your user base: users who come across unsavory content or get bombarded with spam are unlikely to stick around. Content moderation helps users have the best possible experience, making them less likely to leave.

- Protect your brand: users who encounter harmful, gross, or otherwise undesirable content may pass that negative association to your platform’s brand.

- Protect the brands of your advertisers: users are less likely to engage with an ad positioned near harmful content. As a result, most brands will avoid advertising on poorly moderated platforms.

Historically, content moderation systems have relied on human moderators to review content and make enforcement decisions. But these systems are slow to react and scale poorly with large volumes of content. On the other hand, automated content moderation enables proactive screening of user-generated content in real time. These systems are also highly scalable and cost-effective. The catch? Automated moderation (usually) falls behind manual approaches in accuracy and nuance.

Today, though, artificial intelligence is changing the game. New deep learning models can rival the performance of human moderators in content classification tasks. Hive is leading the charge in the content moderation domain, making predictions from its portfolio of cloud-based AI models available as a service. With Hive, platforms can access these models at a fraction of the cost and effort needed to develop internal moderation tools.

Integrating Stream and Hive’s Services

This guide will show you how to build a robust automated moderation system into Stream Chat using Stream Chat SDKs and Hive’s cloud-based AI models. Specifically, we’ll cover how to:

- Create your Stream client/server and initialize Stream Chat

- Extract text and image-based content from Stream Chat messages as they’re sent

- Pass message contents to Hive’s Text Moderation and Visual Moderation APIs

- Interpret Hive’s model classifications and process the API response object

- Leverage Stream Chat SDKs to automate enforcement actions on inappropriate messages based on Hive’s response.

Prerequisites

To be able to follow along with this integration guide, you’ll need:

- A Stream Account and Stream Application

- A Hive project and credentials

- Ngrok

- Some familiarity with Python (or another scripting language)

- Familiarity with React and webhooks

Python Dependencies

- Stream Chat Python SDK

- Requests to query Hive APIs for model classifications

- JSON to enable us to parse the Hive API response object

Create a Stream Application

To create an application with Stream, you first need to sign up for a free Stream account. Once you’re registered, go to your Stream Dashboard and follow the steps below:

- Select Create App

- Enter your App Name (for example, hive-ai-integration)

- Select your Feeds and Chat server locations

- Set your Environment to Development

- Select Create App

On your application’s Overview page, scroll down to App Access Keys. Here, you’ll find the Key and Secret you need to instantiate Stream Chat in your project.

Create a Hive Project

To integrate Hive moderation into Stream Chat, you’ll need a Hive Text Moderation project and/or a Hive Visual Moderation project. Please reach out to sales@thehive.ai to create a project for your organization.

Once you’ve received access credentials, use these to log in to your Project Dashboard on thehive.ai. There, you can access your Hive API Key.

Your API Key is a unique token for your project used to authenticate all requests to the Hive API – protect it like a password! If you plan to use both the Visual Moderation API and the Text Moderation API, you’ll have different keys for each project.

Create Your Front-End Client

Now that you have everything you need to integrate Hive moderation and Stream Chat, you can create a front-end client. For this integration, we will use the Stream React Chat SDK, but any of our front-end chat SDKs will do.

To create a React project, follow the steps in the React Chat Tutorial. Once you’ve created a project, open it in your preferred IDE.

In App.js, insert the following code:

```jsx
import React from 'react';
import { StreamChat } from 'stream-chat';
import { Chat, Channel, ChannelHeader, MessageInput, MessageList, Thread, Window } from 'stream-chat-react';

import 'stream-chat-react/dist/css/index.css';

const chatClient = StreamChat.getInstance('paacs4xnrdtn');
const userToken = 'eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJ1c2VyX2lkIjoid2l0aGVyZWQtcmVjaXBlLTYifQ.Um30Vi75pWoqSMgDuc8r7R_hwP_FHdApX63xLzWhhfA';

chatClient.connectUser(
  {
    id: 'withered-recipe-6',
    name: 'withered-recipe-6',
    image: 'https://getstream.io/random_png/?id=withered-recipe-6&name=withered-recipe-6',
  },
  userToken,
);

const channel = chatClient.channel('messaging', 'custom_channel_id', {
  // add as many custom fields as you'd like
  image: 'https://www.drupal.org/files/project-images/react.png',
  name: 'Talk about React',
  members: ['withered-recipe-6'],
});

const App = () => (
  <Chat client={chatClient} theme='messaging light'>
    <Channel channel={channel}>
      <Window>
        <ChannelHeader />
        <MessageList />
        <MessageInput />
      </Window>
      <Thread />
    </Channel>
  </Chat>
);

export default App;
```

Note: In the example above, we’ve hardcoded the userToken and our project’s API Key for the purposes of this tutorial. In a production application, tokens should be generated server-side and protected along with your API Key.
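To make the server-side token idea concrete, here is a minimal standard-library sketch of what such a token contains. It assumes the token is an HS256-signed JWT whose payload carries a user_id claim, which is what the hardcoded userToken above decodes to. The function name create_user_token is hypothetical; in a real backend you would more likely call the token helper in Stream’s server SDK (create_token in the Python SDK) rather than hand-rolling this.

```python
import base64
import hashlib
import hmac
import json


def _b64url(raw: bytes) -> str:
    """Base64url-encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def create_user_token(user_id: str, api_secret: str) -> str:
    """Hypothetical helper: build an HS256 JWT with a user_id claim.

    Assumption: this mirrors the structure of the tutorial's hardcoded
    token; it is an illustration, not Stream's actual implementation.
    """
    header = {"typ": "JWT", "alg": "HS256"}
    payload = {"user_id": user_id}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    # Sign header.payload with the API secret, which never leaves the server
    signature = hmac.new(
        api_secret.encode("utf-8"),
        signing_input.encode("ascii"),
        hashlib.sha256,
    ).digest()
    return f"{signing_input}.{_b64url(signature)}"


if __name__ == "__main__":
    print(create_user_token("withered-recipe-6", "your-api-secret"))
```

Because the signature depends on the API Secret, only your server can mint valid tokens; the front end just receives the finished string and passes it to connectUser.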

Run your project with the following command:

```shell
$ npm start
# or, if you use Yarn
$ yarn start
```

You should have a 1-to-1 chat app up and running:

Set Up a Webhook in Your Stream Dashboard

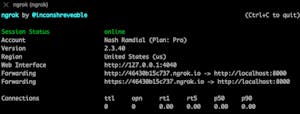

Now, you can set up a webhook in your Stream Dashboard that listens for incoming message events. To create a webhook, you’ll need a public URL that can receive requests. For that, we will use ngrok.

Run the following command to expose a localhost port on your machine:

```shell
$ ngrok http [YOURPORT]
```

In your terminal, you should get a response like this:

Copy the second HTTPS Forwarding URL and paste it into the Webhook URL field in your Stream Dashboard. After pasting your URL, add the /chatEvent path to the end of it.

It should look similar to the URL below:

https://YOUR-NGROK-URL.ngrok.io/chatEvent

Create Your Python Server

Next, you need to create your Python server. Clone this backend GitHub repo to access the project locally and install the dependencies by running the following command:

```shell
$ pip install -r requirements.txt
```

To fire up your server, run the following command:

```shell
$ python main.py
```

Integrating Hive’s Text Moderation API With Stream Chat

With your server and front-end client running, you’re ready to receive live messages and send them to Hive’s Text Moderation endpoint.

Before we do, though, it’s important to understand that Hive does not block content or ban users on its own. Every platform has different policies and sensitivities around which types of content are allowed or prohibited. So instead, the Hive API returns scores for a set of moderation classes that describe any potentially inappropriate material (for example, sexual content, bullying/toxicity, threats, etc.). We can use these scores however we’d like when flagging particular types of content we want to take action on.

This gives us a lot of flexibility in designing and implementing a targeted moderation policy. For the purposes of this guide, though, we’ll stick to a broad approach that flags anything Hive identifies as sensitive for moderator review. Further, any messages that Hive scores as maximum severity trigger an automatic ban on the senders.

Let’s look at the code to see the implementation in detail.

First, go to main.py:

```python
import os
from decimal import Decimal

import uvicorn
from starlette.applications import Starlette
from starlette.responses import JSONResponse
from stream_chat import StreamChat

from hive import get_hive_response

# Visual moderation settings (used for image attachments)
visual_threshold = Decimal("0.9")
banned_classes = ['general_nsfw', 'general_suggestive']

# Text moderation severity thresholds (Hive scores text from 0 to 3)
text_ban_threshold = 3
text_flag_threshold = 1

hive_api_key = os.environ.get('HIVE_API_KEY')
your_api_key = os.environ.get('api_key')
your_api_secret = os.environ.get('api_secret')

chat = StreamChat(api_key=your_api_key, api_secret=your_api_secret)
# The bot user needs the admin role so it can ban other users
chat.upsert_user({"id": "hive-bot", "role": "admin"})

app = Starlette(debug=True)


@app.route('/chatEvent', methods=['POST'])
async def chatEvent(request):
    data = await request.json()
    if data["type"] == "message.new":
        text = data["message"]["text"]
        user = data["message"]["user"]
        banned = False
        reason = ""

        hive_response = get_hive_response(text, hive_api_key)
        response = hive_response["status"][0]["response"]

        # Any match returned in text_filters flags the message
        filters = response["text_filters"]
        for text_filter in filters:
            print("moderated word: " + text_filter["value"])
            print("filtered : " + text_filter["type"])
        flagged = len(filters) > 0

        # Compare every class score against the flag and ban thresholds
        for output in response["output"]:
            for class_ in output["classes"]:
                print(class_["class"])
                print(class_["score"])
                score = class_["score"]
                if score >= text_flag_threshold:
                    print("FLAG")
                    flagged = True
                if score >= text_ban_threshold:
                    banned = True
                    reason = class_["class"]
                    print("BAN")

        if flagged:
            chat.flag_message(data["message"]["id"], user_id="hive-bot")
        if banned:
            print(banned)
            # timeout is in minutes: 24 * 60 is a 24-hour ban
            chat.ban_user(user["id"], banned_by_id="hive-bot", timeout=24*60, reason=reason)
    return JSONResponse({"received": data})


if __name__ == "__main__":
    uvicorn.run(app, host='0.0.0.0', port=8000)
```

Let’s explain what’s happening in this snippet:

- You instantiate your StreamChat client with your Stream API key and secret.

- You define the flag and ban thresholds for text content and specify which visual moderation classes you want to restrict outright on your platform ('general_nsfw', 'general_suggestive').

- You also call the upsert_user method, which creates or updates the user specified in the payload. Notice that we’re specifying the user by their "id" and granting them the "admin" role. You can find all of your app’s users, their IDs, and their roles in our Chat Explorer.

Note: Admin privileges are required to ban users, but any user in a chat can flag and mute other users. Check out our Permissions docs to learn more.

The chatEvent route handler listens for incoming messages, extracts the raw text from the message object, and passes the ”text” and Hive API key into the imported get_hive_response function.

Our get_hive_response function (imported from hive.py) is fairly simple.

The get_hive_response function takes in two arguments: the input_text from a new message and our Text Moderation API key.

It constructs a POST request containing the synchronous (real-time) Hive endpoint, an Authorization header signed with our API key, and the request body data. We then convert the Hive response object into JSON format and return it.
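The description above can be sketched roughly as follows. This is not the hive.py from the repo: it swaps the Requests library for Python’s standard library so it runs without extra dependencies, and the endpoint URL, the "Token" authorization scheme, and the text_data form field are assumptions based on Hive’s synchronous API that you should check against your project’s documentation. The build_hive_request helper and the opener parameter are hypothetical names added to keep the sketch easy to test.

```python
import json
import urllib.parse
import urllib.request

# Assumed synchronous (real-time) Hive endpoint; confirm it in your Hive docs
HIVE_SYNC_URL = "https://api.thehive.ai/api/v2/task/sync"


def build_hive_request(input_text, api_key):
    """Build the POST request: endpoint, Authorization header, form body."""
    headers = {
        "Accept": "application/json",
        "Authorization": f"Token {api_key}",  # assumed auth scheme
    }
    # Assumed form field name for text submissions
    body = urllib.parse.urlencode({"text_data": input_text}).encode("utf-8")
    return urllib.request.Request(
        HIVE_SYNC_URL, data=body, headers=headers, method="POST"
    )


def get_hive_response(input_text, api_key, opener=urllib.request.urlopen):
    """Send the text to Hive and return the parsed JSON response as a dict."""
    request = build_hive_request(input_text, api_key)
    with opener(request, timeout=10) as response:
        return json.load(response)
```

Passing the opener as a parameter is purely a design convenience: in tests you can substitute a stub that returns a canned response instead of calling the network.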

Back in main.py:

Our function loops through the response fields and checks severity scores generated by Hive’s text classification model.

If a score meets or exceeds our flag threshold (1), we set flagged to True and call Stream’s flag_message method, which requires the message ID.

If a score meets or exceeds our ban threshold (3), we also set banned to True, record the offending class as the reason, and call the ban_user method, which requires "admin" permissions.
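The threshold logic is easier to reason about (and to unit test) when it is pulled out of the route handler. Here is a minimal sketch of that idea; evaluate_text_scores is a hypothetical helper, not part of the repo, and its defaults simply mirror the thresholds used in this guide.

```python
def evaluate_text_scores(classes, flag_threshold=1, ban_threshold=3):
    """Return (flagged, banned, reason) for a list of class/score dicts.

    `classes` is one "classes" list from Hive's text response, e.g.
    [{"class": "bullying", "score": 1}, ...].
    """
    flagged = False
    banned = False
    reason = ""
    for entry in classes:
        score = entry["score"]
        if score >= flag_threshold:
            flagged = True
        if score >= ban_threshold:
            banned = True
            reason = entry["class"]  # remember which class triggered the ban
    return flagged, banned, reason


if __name__ == "__main__":
    print(evaluate_text_scores([{"class": "bullying", "score": 1}]))
    # (True, False, '')
    print(evaluate_text_scores([{"class": "violence", "score": 3}]))
    # (True, True, 'violence')
```

Note that a ban always implies a flag here, because the ban threshold is higher than the flag threshold.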

Understanding the Text Moderation API’s JSON Response Object

To get a better understanding of Hive’s model classifications and the API response object, we’ll look at the response object for an example Stream Chat message. Hive’s Text Moderation defines several independent moderation classes: ”sexual”, ”hate”, ”violence”, ”bullying”, and ”child_exploitation” capture most harmful and inappropriate content, while other classes like ”spam” and ”promotions” flag suspicious links, soliciting follows, etc.

Hive’s model may classify certain text into one or more of these categories based on its understanding of full phrases and sentences in context (including emojis and leetspeak).

To illustrate, let’s see what kind of response we get for an example message.

In your chat app, send this message: “I hate your guts.” This should give you the following JSON response from Hive’s Text Moderation API:

```json
"output": [
  {
    "time": 0,
    "start_char_index": 0,
    "end_char_index": 16,
    "classes": [
      {
        "class": "spam",
        "score": 0
      },
      {
        "class": "sexual",
        "score": 0
      },
      {
        "class": "hate",
        "score": 0
      },
      {
        "class": "violence",
        "score": 0
      },
      {
        "class": "bullying",
        "score": 1
      },
      {
        "class": "promotions",
        "score": 0
      },
      {
        "class": "child_exploitation",
        "score": 0
      },
      {
        "class": "phone_number",
        "score": 0
      }
    ]
  }
]
```

Hive’s Text Moderation classified "I hate your guts" as bullying with a severity score of 1. For context, Hive scores text on an integer scale from 0 (clean) to 3 (most severe), and this message is relatively tame as far as bullying and toxicity go. Scores of 2 and 3 in bullying are given for more threatening or extreme language, encouraging self-harm, etc.
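To see how that response is navigated in code, the sketch below wraps the "output" array in the status/response envelope that main.py indexes into and collects every class with a non-zero score. The sample is abbreviated to three classes, and nonzero_classes is a hypothetical helper used only for illustration.

```python
# Abbreviated sample, wrapped in the envelope main.py expects:
# hive_response["status"][0]["response"]["output"]
sample_response = {
    "status": [
        {
            "response": {
                "text_filters": [],
                "output": [
                    {
                        "time": 0,
                        "start_char_index": 0,
                        "end_char_index": 16,
                        "classes": [
                            {"class": "spam", "score": 0},
                            {"class": "hate", "score": 0},
                            {"class": "bullying", "score": 1},
                        ],
                    }
                ],
            }
        }
    ]
}


def nonzero_classes(hive_response):
    """Map each class with a score above 0 to its highest score."""
    found = {}
    for output in hive_response["status"][0]["response"]["output"]:
        for entry in output["classes"]:
            if entry["score"] > 0:
                name = entry["class"]
                found[name] = max(entry["score"], found.get(name, 0))
    return found


if __name__ == "__main__":
    print(nonzero_classes(sample_response))  # {'bullying': 1}
```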

Still, if this message gets sent to Stream Chat, our chatEvent handler will flag it, since the score that get_hive_response returns for the bullying class meets the flag threshold we set (greater than or equal to 1).

Then, our main chatEvent function sends the message to the Chat Moderation dashboard for an allow/remove decision by a moderator.

Since the /chatEvent route handler listens for new message events and calls get_hive_response on the text contents, we should now be able to flag all sensitive messages and pass them to the Chat Moderation feed!

Integrating the Visual Moderation API and Banning Users

What happens if you need to enforce a more serious action, like automatically removing a message or banning a user? Let’s see how we can do this with built-in Stream Chat capabilities, this time using an example with Hive’s Visual Moderation API.

Now we’ll send a new message with an image attachment. Then, if Hive returns a high confidence score in restricted visual moderation classes, we will ban the user who sent it.

Here’s the example implementation from main.py that moderates "general_nsfw" (explicit images) and "general_suggestive" (milder suggestive content). This logic looks similar to our implementation for the Text Moderation API:

```python
import os
from decimal import Decimal

import uvicorn
from starlette.responses import JSONResponse

# get_visual_hive_response is assumed to live in hive.py next to get_hive_response
from hive import get_hive_response, get_visual_hive_response

visual_threshold = Decimal("0.9")
banned_classes = ['general_nsfw', 'general_suggestive']
# Assumed environment variable name for the Visual Moderation project's key
hive_visual_api_key = os.environ.get('HIVE_VISUAL_API_KEY')

# `app` (Starlette) and `chat` (StreamChat) are created as in the first main.py listing


@app.route('/chatEvent', methods=['POST'])
async def chatEvent(request):
    data = await request.json()
    if data["type"] == "message.new":
        text = data["message"]["text"]
        attachments = data["message"].get("attachments", [])
        user = data["message"]["user"]
        banned = False
        flagged = False
        reason = ""

        for attachment in attachments:
            print(attachment["type"])
            if attachment["type"] == "image":
                visual_hive_response = get_visual_hive_response(
                    attachment["image_url"], hive_visual_api_key)
                for output in visual_hive_response["status"][0]["response"]["output"]:
                    for class_ in output["classes"]:
                        decimal_score = Decimal(class_["score"])
                        # Only act on scores above the confidence threshold
                        if decimal_score > visual_threshold:
                            print(class_["class"])
                            print(class_["score"])
                            if class_["class"] in banned_classes:
                                reason = class_["class"]
                                banned = True

        if banned:
            print(banned)
            chat.ban_user(user["id"], banned_by_id="hive-bot", timeout=24*60, reason=reason)
    return JSONResponse({"received": data})


if __name__ == "__main__":
    uvicorn.run(app, host='0.0.0.0', port=8000)
```

Let’s explain what this code is doing:

The /chatEvent route handler listens for new messages with attachments. If the attachment is of the type ”image”, we send the image URL and API key to our get_visual_hive_response function, which sends a POST request to Hive’s Visual Moderation endpoint and retrieves the response.

Then, like our Text Moderation implementation, if the image attachment receives a confidence score that exceeds our threshold (0.9) in any banned_classes (in this case we’ve chosen general_nsfw and general_suggestive in main.py), we call the ban_user method to ban the sender.

Understanding the Visual Moderation API’s JSON Response Object

Let’s see how this works in practice. Using the implementation above, we will send an image attachment that shows a person wearing a cosplay outfit that might be considered inappropriate on some platforms.

After sending the image attachment to Hive, we receive the JSON response below. To keep things focused, we’ve truncated other classes in the typical response for the purposes of this tutorial:

```json
[
  {
    "time": 0,
    "classes": [
      {
        "class": "general_not_nsfw_not_suggestive",
        "score": 8.432921102897108e-7
      },
      {
        "class": "general_nsfw",
        "score": 0.0000030202454240811114
      },
      {
        "class": "general_suggestive",
        "score": 0.9999961364624657
      }
    ]
  }
]
```

Hive’s visual models return a confidence score between 0 and 1 for each class, reflecting the model’s certainty that the image belongs in that class. In our moderation function, we set a threshold of 0.9, so the model needs to be very confident in a classification before we act on it. For this image, Hive’s visual model returns a confidence score in "general_suggestive" of more than 0.9999, well above our threshold.

We can be very confident that the image meets Hive’s definition for the “Suggestive” class (e.g., revealing or racy but not explicit). Since our moderation function checks the "general_suggestive" score against the threshold, we set banned to True and then ban the user using Stream Chat’s ban_user function.
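The same check can be expressed as a small standalone function and run against the sample response. This is a sketch for illustration: find_banned_class is a hypothetical helper, and it compares plain floats rather than Decimal values, which is sufficient for a threshold test like this one.

```python
def find_banned_class(classes, banned_classes, threshold=0.9):
    """Return the highest-scoring restricted class above threshold, or None."""
    best = None
    for entry in classes:
        if entry["class"] in banned_classes and entry["score"] > threshold:
            if best is None or entry["score"] > best["score"]:
                best = entry
    return best["class"] if best else None


# Class scores copied from the sample Visual Moderation response
sample_classes = [
    {"class": "general_not_nsfw_not_suggestive", "score": 8.432921102897108e-7},
    {"class": "general_nsfw", "score": 0.0000030202454240811114},
    {"class": "general_suggestive", "score": 0.9999961364624657},
]

if __name__ == "__main__":
    restricted = ["general_nsfw", "general_suggestive"]
    print(find_banned_class(sample_classes, restricted))  # general_suggestive
```

If the function returns a class name, that name can be passed as the reason when banning the sender; if it returns None, no action is needed.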

Then, you can see the user’s status updated in your Stream Dashboard:

In your chat app, you’ll also notice their previous messages are deleted and they can no longer send new messages within the channel.

If you need to moderate other types of images, simply add any of Hive’s other supported visual moderation classes to banned_classes in main.py. Then, the chatEvent function will check scores in those classes against our threshold and take moderation actions as needed!

Conclusion

We decided to keep things simple here by showing a broad approach to text moderation and a small subset of Hive’s visual moderation classes. In building a real implementation for your platform, though, you’re free to change which moderation classes are monitored (and at what threshold) to align Stream Chat moderation with your platform’s needs.

Based on the implementation we showed, you can add or remove classes from the banned_classes list to monitor different types of content, moderate at different threshold values (e.g., only flag messages at severity scores of 2 or 3), or take different enforcement actions supported by Stream Chat such as blocking or deleting a message. The same logic shown here can be adapted to a wide variety of content moderation policies in order to better protect your users.
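One way to adapt the logic is to keep thresholds in a per-class policy table instead of two global constants. The sketch below is only an illustration: the ClassPolicy type, the POLICY values, and the actions_for helper are all hypothetical, and the returned action names would still need to be mapped onto the Stream Chat calls you choose (for example, flag_message and ban_user).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ClassPolicy:
    flag_at: Optional[int] = 1  # severity at which to flag (None = never)
    ban_at: Optional[int] = 3   # severity at which to ban (None = never)


DEFAULT_POLICY = ClassPolicy()

# Hypothetical example values; tune these to your platform's rules
POLICY = {
    "bullying": ClassPolicy(flag_at=2, ban_at=3),
    "spam": ClassPolicy(flag_at=1, ban_at=None),
    "child_exploitation": ClassPolicy(flag_at=1, ban_at=1),
}


def actions_for(classes, policy=POLICY, default=DEFAULT_POLICY):
    """Return the set of actions ("flag", "ban") triggered by the scores."""
    actions = set()
    for entry in classes:
        rule = policy.get(entry["class"], default)
        score = entry["score"]
        if rule.flag_at is not None and score >= rule.flag_at:
            actions.add("flag")
        if rule.ban_at is not None and score >= rule.ban_at:
            actions.add("ban")
    return actions


if __name__ == "__main__":
    print(actions_for([{"class": "bullying", "score": 1}]))  # set()
    print(actions_for([{"class": "spam", "score": 3}]))      # {'flag'}
```

With this layout, changing your moderation policy means editing the table rather than the handler code.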