For teams looking to outsource their moderation needs, there's no shortage of services available today. But with so many options and endless promises of "real-time" user-generated content (UGC) moderation and compliance-ready reporting, sorting through providers to find the best fit can be overwhelming.

Some teams choose to integrate a content moderation solution directly into their platform to maintain full control over safety workflows and performance. Others prefer to outsource moderation entirely to dedicated service providers. Deciding which path to take depends on your team's goals, resources, and the scale of your community.

Regardless, the best tools are the ones that consistently detect violations, scale under load, and help maintain safe, thriving communities.

Let's look at the best outsourced content moderation services on the market, then break down how to pick the right vendor, from industry fit and modality coverage to how well they balance human review with automation.

What Are Content Moderation Services?

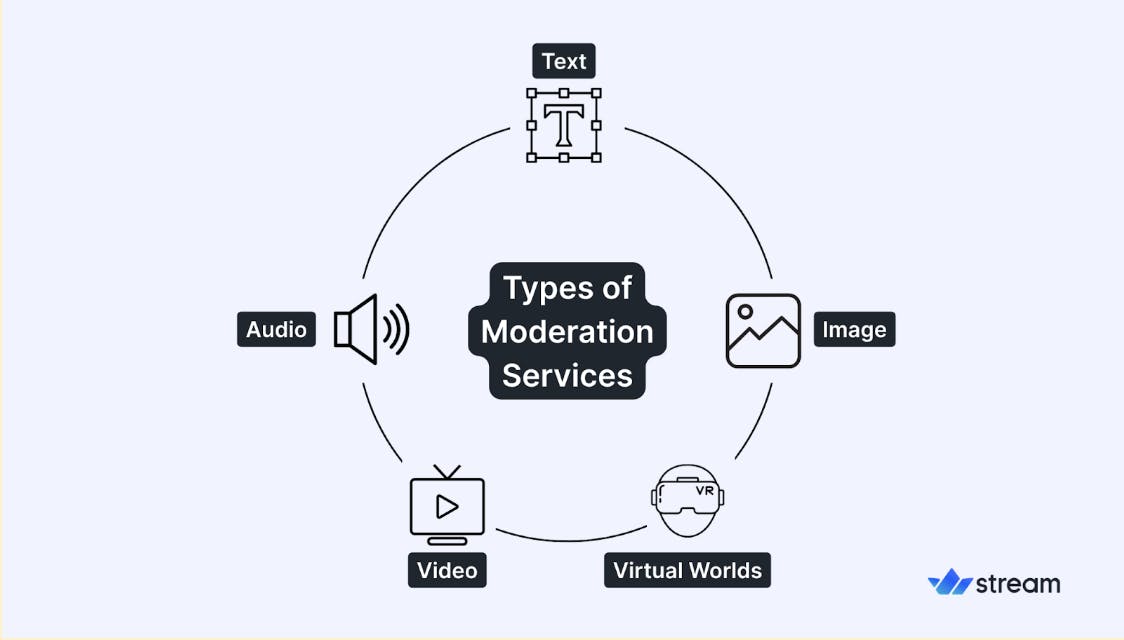

Content moderation services are outsourced teams that mix automated tools with human review to check, filter, and take action on UGC, like text, images, videos, audio, and live streams. Their goal is to spot and handle violations, such as hate speech, harassment, sexual content, or graphic violence.

Ultimately, their role is to take on some of the work of making sure content follows the platform's community guidelines and legal requirements.

Let's look at the top eight moderation service providers in 2025.

Top 8 Content Moderation Services Explained

Some vendors stand out in particular areas, but every provider had to offer the following to make our list:

- Multilingual and international coverage 24/7/365
- Multimodal protection for text, image, audio, and video
- Hybrid AI and human moderation
- Robust compliance and security
- Strong customer support

ModSquad

ModSquad is a global digital engagement services provider with a network of over 10,000 moderators. Its "ModSourcing" approach combines experienced human moderators, customer support agents, and community managers.

It also uses AI, automation tools, and a proprietary behavior matrix to maintain clean content and a healthy community.

With nearly two decades in the industry, ModSquad works with global brands (like the NFL and gaming giant Electronic Arts) to manage online interactions, safeguard platforms, and strengthen online communities. Its services are flexible, scalable, and tailored to brand safety, compliance, and user experience needs.

Key Features

- Global Reach and Multilingual Support: Service coverage in 70+ countries with 50+ languages and dialects supported.
- Secure CX Platform: Flexible and modular systems that meet specific data security and privacy requirements.
- Social Media Expertise: Full-service support for public-facing interactions, like strategy creation, campaign execution, influencer management, and crisis handling.

Best For

- Blending moderation with CX and community management
- Fan-driven brands in niches like gaming, sports, and publishing

Enshored

Enshored provides outsourced content moderation, customer experience, and back-office services for fast-growing companies across sectors like traveltech, healthtech, fintech, eCommerce, and SaaS.

With a team of over 2,000 global members, Enshored deploys trained, multilingual moderators within 30 days. It delivers scalable, secure, and high-quality support for platforms handling large volumes of UGC.

Key Features

- Rapid Team Scaling: Its working model lets the company grow teams quickly (from a few agents to many), while maintaining quality and cohesion.
- Full-Spectrum Moderation: It handles text, image, video, and audio moderation across memes, ads, user profiles, comments, DMs, livestreams, and more.
- Community Safety Focus: Employs proactive screening to keep communities inclusive, tackling trolling, harmful content, and irrelevant clutter before it impacts engagement.

Best For

- High-growth companies scaling fast
- Traveltech, fintech, and healthtech companies with a global presence

LiveWorld

LiveWorld is a social-first digital agency with over 27 years in content moderation, community engagement, and compliance management. Services are available in 70+ countries and language combinations. They serve sectors including healthcare and pharma, offering multilingual human moderation supported by proprietary tools.

The platform's services extend from managing influencer programs to monitoring third-party platforms, with a focus on safeguarding brand reputation, building loyal communities, and improving UGC quality.

Key Features

- Pharma Influencer Moderation and Compliance: Monitors influencer content to meet brand guidelines, manages adverse event reporting, and maintains compliance with promotional regulations.
- Crisis Mitigation: Detects potential issues early on social channels and responds promptly to protect brand reputation.
- Third-Party and Competitive Audits: Monitors ratings, reviews, and competitor platforms to deliver actionable insights.

Best For

- UGC compliance in healthcare and pharmaceuticals
- Preventing and resolving UGC-driven brand crises

Horatio

Horatio provides outsourced content moderation services for brands across retail, finance, healthcare, SaaS, edtech, social media, travel, and gaming.

Beyond handling multimodal in-app communication, Horatio continuously and actively monitors for harmful interpersonal content, like harassment, hate speech, misinformation, and spam.

With dedicated backup staffing, low agent turnover, and secure handling of sensitive workflows, Horatio positions itself as an extension of its clients' internal teams.

Key Features

- Custom Workflow Integration: Moderation processes align with client-specific escalation paths, review handling procedures, and customer experience goals.
- Certified Compliance: SOC 2 Type II, PCI DSS, and HIPAA compliance, with restricted-access zones and strong data protection for sensitive workflows.
- Advanced Quality Assurance: A 98% QA score, maintained through structured audits, calibrated reviews, policy-aligned scorecards, and continuous feedback loops for moderation consistency.

Best For

- Regulated industries with strict compliance needs
- Real-time responses in high-traffic or high-risk scenarios

ICUC Social

ICUC Social has over two decades of experience managing enterprise-level online communities and shaping brand presence across global markets in 52+ languages.

It serves hundreds of large brands (like Starbucks, Chevron, and Sony) and provides trained teams of moderators, community managers, strategists, and analysts. And it handles social interactions of all types, from public comments to private messages.

The platform's work goes beyond moderation to cultivating brand identity, driving loyalty, and aligning engagement with each client's overall social media strategy.

Key Features

- Social Listening and Sentiment Analysis: Tracks brand mentions and audience sentiment to detect risks, identify trends, and gather insights for content planning.
- Crisis Management: Proactively identifies and mitigates potential issues before they escalate, which limits reputational impact.
- Customized Reporting and Feedback Integration: Delivers detailed reports on moderation activity, engagement patterns, and user feedback to inform strategy, product improvements, and marketing decisions.

Best For

- Global enterprises with high-volume social content
- Teams needing a mix of moderation and sentiment tracking

Anolytics

Anolytics is best known for its high-accuracy data annotation services, which power AI and machine learning models in industries like self-driving vehicles, retail, healthcare, and security. It applies this expertise to end-to-end content moderation services for multimodal platforms.

It has over 15 years of experience and a workforce of 1,500+ trained annotators operating 24/7. The platform blends human expertise with advanced moderation tools to establish brand safety, compliance, and user trust. The balance of technology and human review helps with accurate, context-aware content filtering to protect users and brands.

Key Features

- Human-in-the-Loop (HITL): Integrates skilled moderators into AI-assisted workflows for better context recognition and decision accuracy.
- Security and Compliance: Services align with GDPR and SOC 2 Type I standards, offering secure, scalable, and policy-specific workflows.
- Real-Time Content Review: Enables rapid detection and removal of harmful or non-compliant content, supporting continuous platform safety.

Best For

- Protecting multimodal, high-volume platforms
- Companies outsourcing both annotation and moderation

SupportYourApp

SupportYourApp delivers full-scale content moderation services globally in 60+ languages. The platform reviews and verifies UGC against legal standards. Its service covers fraud detection, abusive content identification, data validation, and community guideline enforcement.

Using AI-powered tools alongside skilled human reviewers, SupportYourApp manages large content volumes without compromising turnaround time or security. It helps clients avoid legal challenges under local laws and the Digital Services Act (DSA), creating a safer and more compliant online environment.

Key Features

- Know Your Customer (KYC) Verification: Verifies user identities to prevent impersonation, build trust, and comply with sector-specific rules.
- Regulatory Coverage: Aligns with the DSA, GDPR, CCPA, PCI DSS Level 1, and ISO requirements.
- Customizable Moderation Policies: Tailors moderation workflows to match each client's community guidelines and operational needs.

Best For

- Industries with KYC requirements, like marketplaces, fintech, and dating
- Fraud protection and compliance across a vast range of languages

TaskUs

TaskUs delivers comprehensive Trust & Safety operations with AI-driven tools, human expertise, and clinically supported moderator care. It protects digital platforms across social networks, gaming, marketplaces, streaming, and GenAI environments.

Its services include harmful content removal, identity verification, fraud prevention, and moderation policy enforcement.

The approach blends technology with real-time human oversight to address emerging threats like deepfakes and misinformation.

TaskUs integrates advanced analytics, policy consulting, and compliance alignment to help platforms meet evolving regulations.

Key Features

- Custom AI Lexicons: Builds contextual language models for higher detection accuracy in specialized topics or sensitive categories.
- GenAI and Synthetic Threat Defense: HITL enforcement, deepfake detection tools, and contextual AI models with reported F1 scores above 90%.
- Data-Driven Insights: Toxicity scorecards, content trend tracking, and standardized reporting for actionable safety decisions.

Best For

- High-risk gaming, social, and livestreaming platforms
- Companies that need robust deepfake and fraud protection

Core Evaluation Criteria

Modalities Supported

Mixed-media environments have been the norm for some time now. It's rare for a company to build an app that only deals with one type of UGC.

Let's say you have a dating app with voice note features; you must account for image, text, and audio modalities. If you run a Twitch-like platform, you'll need real-time moderation for livestream chats on top of everything else.

Many providers claim to offer full support across all content modalities, but that isn't always the case on closer inspection. Some services outsource specific modalities or struggle with latency, making them poorly suited for high-volume or real-time situations.

Users expect safety regardless of format. In one survey, 37% of respondents said they worry that digital activity could expose them to harmful content or people. Your dating app might catch every unsolicited nude image before it causes damage, but users will still churn if your voice note feature is poorly moderated.

When picking a vendor, choose one that:

- Handles all relevant UGC types effectively in-house
- Presents reports and actions in a unified dashboard instead of per-type silos
- Can guarantee low-latency performance across modalities, even during traffic spikes

AI Capabilities and Accuracy

Not all AI moderation tools perform at the same level. A vendor may describe its workflow as "AI-powered" when it relies only on rudimentary keyword matching, while others use large language models (LLMs) that can parse nuance and scale more effectively.

Acceptable rates of false positives and false negatives depend heavily on context. Different apps have different tolerance levels: flirty language (in the right context) is expected on a dating app, but it has no place on a K-12 tutoring platform. That makes it critical to investigate how a potential provider tunes and refines its AI models.

When picking a vendor, you should consider:

- Methods: Find out whether they rely on static blocklists or on more advanced machine learning methods, such as transformer-based models (whether third-party LLMs like GPT or proprietary ones).
- Edge cases: Assess how well their models understand intent and context. This can impact the handling of sarcasm, coded language, and more.
- Trade-off management: Find out how they balance false positive and false negative rates, and whether that balance matches your own internal content moderation policy targets.
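
To make the trade-off concrete, here is a minimal Python sketch, assuming the vendor returns a per-item violation score between 0 and 1 and that you have a small sample labeled by your own policy team. It measures false positive and false negative rates at a given threshold, then picks the lowest threshold that keeps false positives within your tolerance. The `Sample` class, the scores, and the candidate thresholds are all hypothetical, not any vendor's API.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    score: float        # vendor model's violation score, assumed to be 0.0-1.0
    is_violation: bool  # ground-truth label from your own policy reviewers


def error_rates(samples, threshold):
    """Return (false_positive_rate, false_negative_rate) when every item
    scoring at or above `threshold` is flagged."""
    fp = sum(1 for s in samples if s.score >= threshold and not s.is_violation)
    fn = sum(1 for s in samples if s.score < threshold and s.is_violation)
    negatives = sum(1 for s in samples if not s.is_violation)
    positives = len(samples) - negatives
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr


def pick_threshold(samples, max_fpr, candidates):
    """Return the lowest candidate threshold whose false positive rate is
    within `max_fpr`. Raising the threshold never adds false positives but
    can add false negatives, so the lowest acceptable threshold misses the
    fewest violations. Returns None if no candidate qualifies."""
    for t in sorted(candidates):
        fpr, _ = error_rates(samples, t)
        if fpr <= max_fpr:
            return t
    return None


# Hypothetical labeled pilot sample.
samples = [
    Sample(0.95, True), Sample(0.80, True), Sample(0.60, False),
    Sample(0.55, True), Sample(0.30, False), Sample(0.10, False),
]
candidates = [0.3, 0.5, 0.7, 0.9]

print(error_rates(samples, 0.5))                 # (0.3333333333333333, 0.0)
print(pick_threshold(samples, 0.0, candidates))  # 0.7: no false positives tolerated
print(pick_threshold(samples, 0.5, candidates))  # 0.5: some false positives tolerated
```

A dating app that can't afford to block ordinary flirting might set a tight false positive cap, while a K-12 tutoring platform might accept more false positives in exchange for fewer missed violations. Running the same calculation on a vendor's pilot output shows whether their default tuning matches your targets.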

Human-in-the-Loop Moderation

AI can have a massive, positive impact on the safety of your app and its users, but no automated system is perfect. That's where human moderation still plays a hugely important role. It's even a compliance requirement in some industries.

YouTube removed 8.4 million videos for guideline violations in Q2 2024, and 3.6% of those (roughly 300,000 videos) were flagged by people rather than automated systems. Even one of the world's largest platforms still relies on humans for hundreds of thousands of moderation decisions.

Even though all of the vendors above have some form of HITL element, it's still critical to ask these questions:

- Who handles human moderation? Is it their team, your team, or another vendor's?
- If they do provide human moderators, what are their standard service level agreements (SLAs) for manual reviews?
- Does their moderation system support queue routing? What about escalations and appeals?
- Are quality controls in place, like sector-specific training or QA processes for moderation decisions?

If a provider outsources their HITL moderation, ask for the same details on how and when the team conducts moderation, including SLAs. If you've got an app where time-sensitive reviews are important, an offshore vendor with long turnaround times may not work for you.
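
Queue routing and SLAs are easier to compare across vendors when you write down what you expect. The sketch below is a hypothetical routing scheme, not any provider's system: the queue names, severity categories, and SLA targets are assumptions to replace with your own policy and contract terms. It sends each flagged item to a queue, computes its review deadline, and checks whether the human decision met the SLA.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical queues and SLA targets; replace them with your contract's figures.
QUEUE_SLAS = {
    "urgent": timedelta(minutes=15),
    "standard": timedelta(hours=4),
    "appeals": timedelta(hours=24),
}

# Hypothetical category names for the highest-severity violations.
URGENT_CATEGORIES = {"self_harm", "violent_threat", "child_safety"}


@dataclass
class ReviewItem:
    item_id: str
    category: str
    is_appeal: bool
    flagged_at: datetime


def route(item):
    """Return (queue_name, review_deadline) for an item awaiting human review."""
    if item.category in URGENT_CATEGORIES:
        queue = "urgent"  # severity wins, even for appeals
    elif item.is_appeal:
        queue = "appeals"
    else:
        queue = "standard"
    return queue, item.flagged_at + QUEUE_SLAS[queue]


def sla_breached(deadline, reviewed_at):
    """True if the human decision arrived after the SLA deadline."""
    return reviewed_at > deadline


item = ReviewItem("msg-123", "violent_threat", False, datetime(2025, 1, 6, 12, 0))
queue, deadline = route(item)
print(queue, deadline)                                       # urgent 2025-01-06 12:15:00
print(sla_breached(deadline, datetime(2025, 1, 6, 12, 40)))  # True
```

Checking urgent categories first means an appeal involving, say, a violent threat still lands in the fastest queue; that ordering is a design choice, not a standard.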

Real-Time vs. Asynchronous Review

Real-time reviews are critical for scenarios like live chat or livestreaming. Asynchronous reviews can be suitable for lower-stakes environments, such as forums or product reviews. Some apps will implement both.

If your product features live elements, ask the service provider:

- How their automated systems can immediately catch inappropriate comments or messages
- What their typical latency is, backed by benchmarks
- What role their moderators play in catching and addressing live rule violations

Some vendors promise "real-time" moderation, but their actual latency is measured in seconds or tens of seconds, when your app may need sub-second decisions. They might also support true real-time moderation for only one content modality, such as text.
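
During a pilot, you can check a vendor's latency claims yourself. This minimal sketch assumes you have logged the time, in milliseconds, from submitting each item to receiving a decision; the sample values are made up. It uses the nearest-rank percentile method and compares the 95th percentile to a target, because an average can hide a slow tail.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    `pct` percent of all samples are at or below it."""
    if not values:
        raise ValueError("no latency samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_target(latencies_ms, target_ms, pct=95):
    """True if the pct-th percentile latency is within the target."""
    return percentile(latencies_ms, pct) <= target_ms


# Hypothetical pilot measurements: milliseconds from submission to decision.
latencies_ms = [120, 180, 250, 300, 420, 510, 640, 800, 950, 2300]

print(percentile(latencies_ms, 50))      # 420
print(percentile(latencies_ms, 95))      # 2300
print(meets_target(latencies_ms, 1000))  # False: the median looks fine, the tail does not
```

Here the mean is 647 ms, which looks comfortable against a one-second target, yet one in ten decisions took 2.3 seconds.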

For asynchronous moderation, make sure that they support batch processing or review queues for lower-risk content.

Your app might be better suited for a hybrid method using both approaches. Some providers can apply both by segmenting modalities and users and applying the most appropriate review method.
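
A hybrid setup boils down to a routing rule. The following sketch segments by surface (a proxy for modality) and by user risk; the surface names, the seven-day new-account window, and the trust-score cutoff are hypothetical values chosen for illustration.

```python
from enum import Enum


class ReviewMode(Enum):
    REAL_TIME = "real_time"  # decision required before content reaches other users
    ASYNC = "async"          # content can be queued and reviewed after posting


# Hypothetical segmentation rules; the surfaces and cutoffs are illustrative only.
LIVE_SURFACES = {"livestream_chat", "live_audio"}
NEW_ACCOUNT_DAYS = 7
MIN_TRUST_SCORE = 0.3


def review_mode(surface, account_age_days, trust_score):
    """Pick a review path by segmenting on surface (modality) and on user risk."""
    if surface in LIVE_SURFACES:
        return ReviewMode.REAL_TIME
    # Newer or low-trust accounts get pre-publication checks even on async surfaces.
    if account_age_days < NEW_ACCOUNT_DAYS or trust_score < MIN_TRUST_SCORE:
        return ReviewMode.REAL_TIME
    return ReviewMode.ASYNC


print(review_mode("livestream_chat", 400, 0.9))  # ReviewMode.REAL_TIME
print(review_mode("product_review", 400, 0.9))   # ReviewMode.ASYNC
print(review_mode("forum_post", 2, 0.9))         # ReviewMode.REAL_TIME
```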

Customization and Control

Although pretrained models are a solid starting point, content moderation on modern platforms is not static and rarely one-size-fits-all. Slang evolves, and formats like memes make violations harder to detect. For instance, trolls in your gaming community may repurpose in-game terms as hateful dog whistles to sidestep default filters.

Your app will have its own unique moderation requirements. So, rather than being squeezed into pre-defined packages, check if the provider allows you to:

- Define your own rule configurations, like thresholds and blocklists
- Fine-tune their models to your app's vertical-specific needs with your own training data and edge cases
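
Here is one way custom rules could be expressed, as a minimal sketch. `PolicyConfig` and `evaluate` are hypothetical and not any vendor's configuration schema; the category scores stand in for whatever your provider's model returns, and the blocklist entry is a placeholder for a community-specific term.

```python
from dataclasses import dataclass, field


@dataclass
class PolicyConfig:
    # Per-category score thresholds; content at or above a threshold is flagged.
    thresholds: dict = field(default_factory=dict)
    # Community-specific terms that default filters miss, e.g. in-game slang
    # that trolls have turned into dog whistles.
    blocklist: set = field(default_factory=set)


def evaluate(text, category_scores, config):
    """Return a list of reasons to flag `text` (an empty list means no flag).

    `category_scores` stands in for the per-category scores a vendor's
    model might return for this text."""
    reasons = []
    words = {w.strip(".,!?").lower() for w in text.split()}
    hits = words & {term.lower() for term in config.blocklist}
    if hits:
        reasons.append("blocklist:" + ",".join(sorted(hits)))
    for category, score in category_scores.items():
        limit = config.thresholds.get(category)
        if limit is not None and score >= limit:
            reasons.append(f"{category}>={limit}")
    return reasons


# Hypothetical configuration for a gaming community.
gaming = PolicyConfig(
    thresholds={"harassment": 0.7, "sexual": 0.5},
    blocklist={"exampleterm"},  # placeholder for a community-specific dog whistle
)

print(evaluate("gg, ExampleTerm!", {"harassment": 0.2}, gaming))  # ['blocklist:exampleterm']
print(evaluate("uninstall now", {"harassment": 0.75}, gaming))    # ['harassment>=0.7']
print(evaluate("nice match", {"harassment": 0.1}, gaming))        # []
```

Word-level blocklist matching like this is easy to evade with spacing or misspellings, which is one reason to ask whether a vendor can fine-tune its models on your examples rather than relying on lists alone.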

Reporting, Audit Trails, and Transparency

Whether your moderation partner removes harmful UGC is only half the equation. Users and auditors alike want to know why removals happen and whether they align with platform policies.

You cannot wait for an audit (either internal or external) to discover that you've outsourced to a company that couldn't maintain compliance, especially in highly regulated industries and regions.

Check whether any prospective vendor can give you:

- Exports of decision logs and reviewer notes
- Reporting that already supports important legislation like GDPR or DSA
- Data on human reviewer decisions

You may need this data to show timestamps and decision histories to auditors or to report internally to stakeholders.
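
If you're specifying what an export should contain, one record per decision in JSON Lines format is an easy-to-audit shape. The field names below are a hypothetical sketch, not a schema any vendor or regulation mandates; the point is that each record carries a timestamp, the cited policy, whether a human or automation decided, and the reviewer's note.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DecisionRecord:
    content_id: str
    action: str         # e.g. "removed", "approved", "escalated"
    policy_rule: str    # the guideline the decision cites
    decided_by: str     # "automated" or a reviewer ID
    reviewer_note: str
    decided_at: str     # ISO 8601 timestamp in UTC


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)


def human_decision_share(records):
    """Fraction of decisions made by a human reviewer rather than automation."""
    if not records:
        return 0.0
    return sum(r.decided_by != "automated" for r in records) / len(records)


# Hypothetical sample records.
records = [
    DecisionRecord("img-881", "removed", "graphic-violence", "automated", "",
                   "2025-03-04T10:15:02Z"),
    DecisionRecord("cmt-412", "approved", "harassment", "reviewer-17",
                   "Quoted in a news context; allowed.",
                   "2025-03-04T10:21:47Z"),
]

print(to_jsonl(records))
print(human_decision_share(records))  # 0.5
```

The same log lets you report how many decisions involved human reviewers, which also helps when you compare vendors' HITL claims.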

Cost and Scalability

Pricing models for moderation services are often complicated and may be shaped by factors like:

- Language, region, and modality coverage
- Access to advanced tools and customized workflows
- Service level agreements
- Fixed- versus flex-contracts
- Additional services, like crisis management or training set annotation

For example, what happens if you expand into a new market after signing your contract? Will you be able to add coverage without fees? Similarly, if you agree to a fixed rate but experience a sudden surge in popularity, will you be able to renegotiate or face steep overage charges?

Don't shy away from asking tough pricing questions. Costs can look affordable at a glance, but as your app scales and real-world usage comes into play, they can lead to some nasty surprises.
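
A quick calculation can expose how a contract behaves as volume changes. All prices and volumes below are hypothetical; real contracts may add minimum commitments, tiered rates, or per-language and per-modality surcharges. The sketch compares a fixed monthly plan with overage charges against a pay-as-you-go rate.

```python
def fixed_plan_cost(items, monthly_fee, included_items, overage_rate):
    """Flat monthly fee covering `included_items`, plus a per-item overage charge."""
    overage_items = max(0, items - included_items)
    return monthly_fee + overage_items * overage_rate


def flex_plan_cost(items, per_item_rate):
    """Pure pay-as-you-go pricing."""
    return items * per_item_rate


# Hypothetical terms for illustration only.
FIXED = dict(monthly_fee=20_000, included_items=2_000_000, overage_rate=0.02)
FLEX_RATE = 0.012

for monthly_items in (1_500_000, 2_000_000, 3_000_000):
    fixed = fixed_plan_cost(monthly_items, **FIXED)
    flex = flex_plan_cost(monthly_items, FLEX_RATE)
    print(f"{monthly_items:,} items: fixed ${fixed:,.0f} vs flex ${flex:,.0f}")
```

With these made-up numbers, the fixed plan is cheaper only near its included volume of 2 million items. Below it you pay for unused capacity, and after a surge to 3 million items the overage rate makes it $40,000 against $36,000 for flex pricing.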

Language and Cultural Coverage

If your audience spans the globe, your chosen provider must support the required languages and understand their cultural contexts.

To ensure that your platform guidelines are enforced without misinterpretations, the moderators must be able to recognize region-specific sensitivities, slang, and context.

If your app requires multilingual, multicultural moderation, ask your potential service provider:

- How many languages they cover
- Whether their moderators are trained to understand cultural context in those languages
- The ratio of human to automated decisions by language and region
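
If a vendor can share pilot decision logs, you can compute that ratio yourself. This sketch assumes each decision has been reduced to a (language code, decided by a human) pair; the sample data is invented.

```python
from collections import defaultdict


def human_share_by_language(decisions):
    """`decisions` is an iterable of (language_code, decided_by_human) pairs.
    Returns {language_code: fraction of decisions made by human reviewers}."""
    totals = defaultdict(int)
    human = defaultdict(int)
    for lang, by_human in decisions:
        totals[lang] += 1
        human[lang] += int(by_human)
    return {lang: human[lang] / totals[lang] for lang in totals}


# Hypothetical pilot decisions.
pilot = [("en", False), ("en", False), ("en", True), ("en", False),
         ("tl", True), ("tl", True), ("tl", False)]

print(human_share_by_language(pilot))
```

A share near zero in a language may mean coverage there is effectively automated-only, which is worth raising with the vendor if that market matters to you.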

How to Choose a Service

So, what should you do to choose the right provider? Apart from weighing the criteria and potential providers above, there are a few steps to remember.

First, identify your specific risk zones. This goes beyond high-priority modalities and should answer which types of community guideline violations are:

- Most harmful to your users
- Currently causing the most bottlenecks for your Trust & Safety team
- Most likely to cause legal risks or harm to your brand reputation

Next, implement some form of evaluation scorecard while you work through potential providers.

Make sure every vendor answers every question in enough detail that you can weigh your options on equal footing. Polished pitch decks can easily distract from technical depth, but a scorecard helps you stay objective in finding your best fit.
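
A scorecard can be as simple as weighted ratings. In this sketch the criteria mirror the evaluation sections above, the weights and 1-5 ratings are hypothetical (derive your weights from the risk zones you identified), and the vendor names are placeholders. Scoring refuses to run if any criterion is unrated, which enforces answering every question for every vendor.

```python
# Hypothetical criteria weights (they sum to 1.0).
WEIGHTS = {
    "modalities": 0.25,
    "ai_accuracy": 0.20,
    "human_review_sla": 0.20,
    "reporting": 0.15,
    "cost": 0.10,
    "languages": 0.10,
}


def weighted_score(ratings, weights=WEIGHTS):
    """Combine 1-5 ratings into one weighted score. Raises if any criterion
    is unrated, so every vendor is judged on the same questions."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    return sum(weights[c] * ratings[c] for c in weights)


def rank_vendors(vendor_ratings):
    """Return (vendor, score) pairs, highest score first."""
    scores = {name: weighted_score(r) for name, r in vendor_ratings.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical ratings for two placeholder vendors.
vendors = {
    "Vendor A": {c: 4 for c in WEIGHTS},
    "Vendor B": {"modalities": 5, "ai_accuracy": 3, "human_review_sla": 5,
                 "reporting": 3, "cost": 2, "languages": 4},
}

for name, score in rank_vendors(vendors):
    print(f"{name}: {score:.2f}")  # Vendor A: 4.00, then Vendor B: 3.90
```

Vendor B's perfect marks on modalities and SLAs don't outweigh its weaker cost and reporting scores here; changing the weights is how you encode which trade-offs your team will accept.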

Finally, aim to run a pilot with your top choices. If you can submit real UGC and simulate genuine moderation scenarios, this helps to validate your decision with data and results. In particular, you can get a true sense of how workflows will look in practice and identify any immediate bottlenecks that might sway your decision.

Outsourced vs. In-House Moderation Tools

Choosing how to moderate user-generated content comes down to one core decision: whether to outsource moderation to a third-party service or integrate an in-house moderation solution directly into your product.

Both approaches can keep your community safe, but the right fit depends on how much control, customization, and scalability your team needs. Outsourced services take moderation off your plate, while in-house solutions give you deeper integration and flexibility within your existing stack.

| Category | Outsourced Moderation | In-House / Integrated Moderation |
|---|---|---|
| Setup Speed | Fast to deploy. Vendors can start moderating within days. | Requires initial setup and integration time, but once live, scales automatically. |
| Control & Customization | Limited control; policies and workflows often follow vendor standards. | Full control over filters, thresholds, and model tuning to match your community guidelines. |
| Scalability | Easily scales with external teams and 24/7 global coverage. | Scales programmatically with traffic; ideal for apps with dynamic workloads. |
| Accuracy & Context | Strong human review for nuanced decisions but may vary by team or language. | AI models and configurable pipelines can adapt to your specific domain and tone. |
| Data Privacy & Security | User data passes through third-party systems. Requires trust and compliance checks. | Keeps moderation data within your infrastructure for stronger privacy and compliance control. |
| Cost Model | Recurring service fees based on volume, region, and modality coverage. | Upfront investment in tools and integration; lower ongoing cost as usage scales. |
| Best For | Platforms without internal Trust & Safety resources or needing quick coverage across languages. | Teams that want seamless integration, consistent accuracy, and an easy-to-manage dashboard without heavy engineering lift. |

Final Thoughts

It's unlikely that a single content moderation service provider will tick every box on your criteria list. That's why it's important to know what is essential to your app, audience, and Trust & Safety workflows before evaluating the options.

If you begin by prioritizing the factors your team and brand care about the most and implement practical tools like scorecards and pilots to help you through the process, you'll be in a much better position to make the final call.

You may also find that your product would be better off with in-house moderation, either with a custom-built solution or by integrating a moderation API.