OpenAI just launched GPT-4.5. Here’s a snippet from their release blog post:

Early testing shows that interacting with GPT‑4.5 feels more natural. Its broader knowledge base, improved ability to follow user intent, and greater “EQ” make it useful for tasks like improving writing, programming, and solving practical problems. We also expect it to hallucinate less.

In short, it is perhaps an ideal chatbot. Most LLMs can power a chatbot, but not all of them excel in this role. Some, perhaps like GPT-4.5, have a greater emotional quotient (EQ), others have better research and analytical skills, and others still excel at specialized domains.

When selecting an AI model for your chatbot, it's crucial to consider your specific needs. Here, we will walk you through a decision framework for making the right choice.

Step 1: Identify Your Use Case and Objectives

Start here. Building a customer support chatbot is different from building a coding chatbot, an AI assistant, or a medical assistant. Clearly define your chatbot’s primary goal so you can select the most appropriate AI model.

Here are some typical chatbot scenarios and what to look for in AI models to determine which aligns best with your intended use:

- Customer Support: Resolving user inquiries, providing technical support, and handling FAQs. Look for a model with high EQ, strong problem-solving skills, and low hallucination rates.

- Sales Assistance: Guiding users through purchasing decisions, recommending products, upselling, and cross-selling. For sales assistance, select a model that excels in persuasive communication, conversational empathy, and personalized recommendations.

- Content Moderation: Streamlining content moderation workflows and review processes for trust and safety teams.

- Content Generation: Assisting with creative tasks like writing, brainstorming ideas, or generating engaging content. Seek a model known for creativity, linguistic fluency, and the ability to adapt to diverse styles and tones.

- Personal Assistant: Organizing schedules, setting reminders, managing emails, and offering personalized recommendations. Prioritize models with strong organizational skills, task execution capability, and a high degree of personalization.

- Coding: Diagnosing issues, providing step-by-step instructions, and resolving complex technical problems. Choose a model with advanced analytical abilities, technical precision, and reliable accuracy.

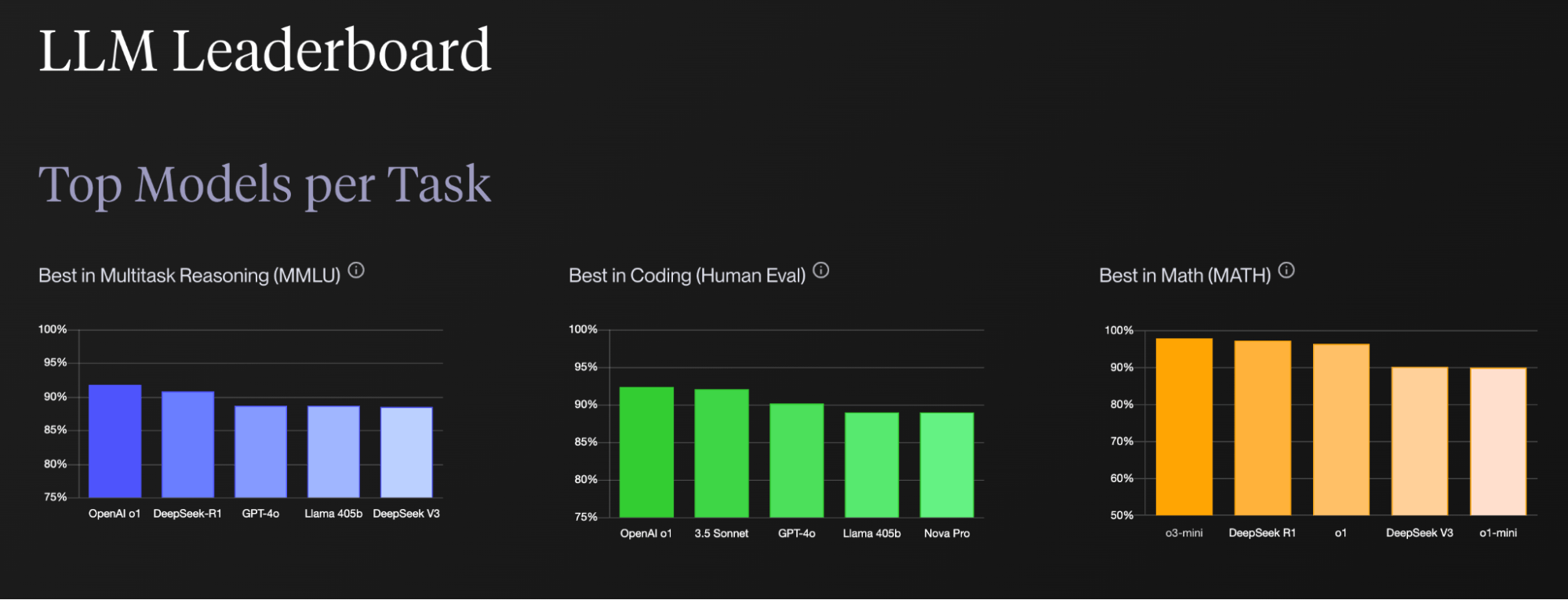

Related to this is knowing your benchmarks. Most LLMs are released alongside benchmarks for how they perform on specific tests and tasks:

- Accuracy and Reasoning: Tests like MMLU (Massive Multitask Language Understanding) or HumanEval (coding/problem-solving capabilities) provide quantitative comparisons among models.

- Language and Comprehension Skills: Evaluations like SuperGLUE, GSM8K, and ARC gauge natural language understanding, reasoning, and question-answering capabilities.

(source: vellum.ai)

You don’t always have to choose the model with the highest benchmark, but these give you a good understanding of the relative capabilities of current AI models. Defining a clear primary use case and understanding how this relates to the capabilities of different models will help streamline the evaluation of potential AI models and ensure alignment between your chatbot’s capabilities and your objectives.

Step 2: Define Strategic Constraints

Before you choose a specific model, you might need to determine whether you have any deployment constraints that will narrow your choices.

The most common are data privacy and security. These considerations are paramount when selecting an AI model for your chatbot. Many organizations handle sensitive customer information or proprietary business data that must be protected according to industry regulations and company policies.

The chosen AI model's approach to data handling directly impacts compliance with regulations like GDPR, HIPAA, or CCPA, which can carry significant penalties for violations. Additionally, customer trust depends heavily on responsible data practices, making this a business-critical decision beyond mere technical considerations.

Other constraints might be:

- Integration Complexity: Assess how the model integrates into your existing technology stack.

- Infrastructure Needs: Evaluate the computing resources necessary for deployment.

- Customization/Fine-Tuning: Evaluate whether extensive fine-tuning or custom adaptations are needed.

These constraints all feed into one of your most significant choices: proprietary vs. open-source models.

Proprietary Models

Proprietary models (GPT-4, Claude, Gemini) are developed by companies that maintain exclusive control over model weights and architecture. They offer high performance and regular updates but require API access, incur usage-based costs, and limit customization.

These offer several technical advantages:

- Robust API Infrastructure: These models typically come with well-documented, production-ready APIs with high uptime guarantees (often 99.9%+) and comprehensive monitoring tools.

- Continuous Improvement: Vendors regularly update models with performance improvements and new capabilities without requiring client-side changes.

- Rate Limiting and Scaling: Most proprietary solutions offer tiered access with varying rate limits (from 10 requests per minute to thousands) and automatic scaling options.

- Developer Tools: Comprehensive SDKs for multiple programming languages (Python, JavaScript, Java, Go, etc.) simplify chatbot integration.

Technical limitations include:

- API Latency: Network round trips typically add overhead (on the order of 100-500ms) compared to local deployment.

- Parameter Limits: Fixed context windows (typically 8K-128K tokens) and generation limits restrict certain applications.

- Black-Box Architecture: Limited visibility into model operation makes debugging and performance optimization challenging.

- Vendor Lock-in: Dependency on specific API structures and response formats can create technical debt.
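One common way to limit the vendor lock-in described above is to keep vendor-specific calls behind a small interface of your own. The sketch below is illustrative only: `ChatBackend`, `ChatReply`, `EchoBackend`, and `answer` are hypothetical names, and the stand-in backend does not call any real vendor API.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class ChatReply:
    """Vendor-neutral reply shape used by the rest of the application."""
    text: str
    model: str


class ChatBackend(Protocol):
    """Hypothetical interface; each vendor gets its own adapter class."""
    def reply(self, messages: list[dict[str, str]]) -> ChatReply: ...


class EchoBackend:
    """Stand-in backend for local testing. A real adapter would call a vendor SDK here."""
    def reply(self, messages: list[dict[str, str]]) -> ChatReply:
        last = messages[-1]["content"]
        return ChatReply(text=f"You said: {last}", model="echo-test")


def answer(backend: ChatBackend, user_message: str) -> str:
    # Application code depends only on ChatBackend, never on a vendor's response format.
    messages = [{"role": "user", "content": user_message}]
    return backend.reply(messages).text


print(answer(EchoBackend(), "hi"))
```

With this shape, swapping providers means writing one new adapter class rather than touching every call site.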

Open-Source Models

Open-source models (LLaMA 3, DeepSeek R1, Mixtral) provide downloadable weights for self-hosting. They offer cost predictability, full customization, and data privacy but require technical expertise and computational resources to deploy. Performance historically lagged behind proprietary models, though this gap is narrowing, with models like LLaMA 3 and DeepSeek R1 approaching or surpassing GPT-4 quality in specific tasks.

Recent advancements in open-source models have significantly closed the performance gap with proprietary alternatives:

- Quantization Options: Models like LLaMA 3 support various precision levels (4-bit, 8-bit) that reduce memory requirements with minimal performance degradation.

- Architectural Innovations: Mixture-of-Experts (MoE) architectures in models like Mixtral enable larger total parameter counts (46.7B for Mixtral 8x7B) while activating only a fraction of those parameters per token (about 12.9B), so the compute per token is closer to that of a much smaller dense model.

- Specialized Variants: Domain-adapted versions optimized for specific tasks (e.g., coding, medical, and legal) often outperform general-purpose proprietary models in their specialty.

- Fine-Tuning Flexibility: Complete access to model weights enables parameter-efficient tuning techniques like LoRA, QLoRA, and adapter-based approaches.

Technical requirements include:

- Hardware Specifications: High-end consumer GPUs (RTX 4090: 24GB VRAM) can run 7B parameter models, while larger 70B+ models need one or more server-class GPUs (A100: 80GB), depending on how aggressively the weights are quantized.

- Inference Optimization: Frameworks like vLLM, TensorRT-LLM, or CTransformers can improve throughput by 2-5x through KV caching, tensor parallelism, and optimized kernels.

- Deployment Complexity: To ensure reliability and scaling, container orchestration (Kubernetes, Docker Swarm) is typically required for production deployments.
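The hardware figures above follow from simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter. The sketch below covers weights only; it ignores the KV cache, activations, and framework overhead, so real requirements will be higher. The function name is illustrative.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    # billions of parameters * bytes per parameter = gigabytes
    return params_billions * bytes_per_param


for name, size in [("7B", 7), ("70B", 70)]:
    for bits in (16, 8, 4):
        print(f"{name} @ {bits}-bit: ~{weight_memory_gb(size, bits):.1f} GB")
```

By this estimate a 7B model at 16-bit needs about 14 GB for weights, which fits in 24GB of VRAM, while a 70B model needs about 140 GB at 16-bit and about 35 GB at 4-bit.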

Cloud-Based vs. Self-Hosted Deployment

If you choose open-source, you must decide whether you’ll use cloud-based or self-hosted deployment.

Cloud-based deployment leverages provider infrastructure through APIs. These might be large cloud companies such as AWS (through AWS SageMaker or Bedrock) or AI-specific cloud companies such as TensorFuse or LlamaIndex. Benefits include zero hardware investment, automatic scaling, and minimal technical overhead. Drawbacks include per-token costs, potential latency, dependency on external services, and data leaving your environment.

Self-hosted deployment runs models on your infrastructure. Advantages include fixed costs (independent of usage volume), complete data control, and customization flexibility. Disadvantages include significant hardware requirements (e.g., LLaMA 2 70B needs ~140GB of GPU memory for its weights at 16-bit precision), engineering complexity, and maintenance responsibility.
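To make the per-token vs. fixed-cost trade-off concrete, here is a rough break-even sketch. Every number in it is a hypothetical placeholder, not a real price; substitute your provider's rates and your own infrastructure costs.

```python
def cost_per_interaction(tokens_per_interaction: int, usd_per_million_tokens: float) -> float:
    """Usage-based cost of one chatbot interaction on a per-token plan."""
    return tokens_per_interaction * usd_per_million_tokens / 1_000_000


def break_even_interactions(
    fixed_monthly_usd: float,
    tokens_per_interaction: int,
    usd_per_million_tokens: float,
) -> float:
    """Monthly volume at which a fixed self-hosting bill equals the per-token bill."""
    return fixed_monthly_usd / cost_per_interaction(
        tokens_per_interaction, usd_per_million_tokens
    )


# Hypothetical inputs, chosen only for illustration.
FIXED_MONTHLY_USD = 3000.0      # assumed GPU server + maintenance
TOKENS_PER_INTERACTION = 1500   # assumed prompt + response size
USD_PER_MILLION_TOKENS = 10.0   # assumed blended token price

volume = break_even_interactions(
    FIXED_MONTHLY_USD, TOKENS_PER_INTERACTION, USD_PER_MILLION_TOKENS
)
print(f"Break-even at roughly {volume:,.0f} interactions per month")
```

Below the break-even volume the per-token option is cheaper under these assumptions; above it, the fixed-cost option wins, before accounting for engineering time.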

Step 3: Core Capabilities Evaluation

Once you have your use case and know whether you are using a proprietary or an open-source model, you should start understanding each model's core capabilities and assessing its relative strengths.

Here’s a way to consider the critical dimensions:

| Capability | Importance for Chatbots | Proprietary Model Examples | Open-source Model Examples |
|---|---|---|---|
| Conversational EQ | Empathy, emotional nuance, conversational warmth | GPT-4.5, Claude | LLaMA, Mixtral (with fine-tuning) |
| Accuracy & Hallucination Resistance | Reliable and accurate responses, fewer errors | GPT-4.5, Claude | Falcon, GPT-NeoX, DeepSeek (with verification) |
| Domain Expertise | Specialized knowledge in medical, legal, and technical fields | GPT-4 variants, Claude variants | GPT-NeoX, Falcon, DeepSeek (with targeted fine-tuning) |
| Reasoning & Analytical Skills | Complex logic and problem-solving ability | GPT-4, Claude, Gemini | GPT-NeoX, DeepSeek (enhanced with prompt engineering) |
| Creativity & Writing Quality | High-quality creative writing and content generation | GPT-4.5, Claude | LLaMA, Falcon, Mixtral (with diverse training data) |
| Multimodal Support | Handling multiple media types like text, images, audio | GPT-4 Vision, Gemini | Emerging OS multimodal models (additional development required) |

After identifying your use case and evaluating core capabilities, you can consider how each candidate AI model performs. The benchmarks above provide a helpful starting point, but real-world performance can differ significantly.

Implement pilot tests and limited deployments to gather feedback:

- A/B Testing: Compare two or more candidate models in real-time interactions with actual users to measure differences in effectiveness and satisfaction.

- Controlled Experiments: Conduct structured experiments on your chatbot's specific task scenarios. Analyze factors such as response relevance, error rates, and user engagement.
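When an A/B test compares a binary outcome such as "user marked the conversation as resolved", one standard way to check whether the difference is more than noise is a two-proportion z-test. The sketch below uses only the standard library; the counts are invented for illustration, and the test assumes independent users and reasonably large samples.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two success rates."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled rate under the null hypothesis that both models perform the same.
    p_pool = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical pilot: model A resolved 420 of 500 chats, model B 380 of 500.
z, p = two_proportion_z_test(420, 500, 380, 500)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the gap between the two models is unlikely to be chance alone, though it says nothing about whether the gap is large enough to matter for your use case.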

You should gather direct feedback from users interacting with your chatbot. Qualitative feedback provides insights that benchmarks alone may not capture. Survey users on their satisfaction and comfort level with the chatbot. You can also analyze chat logs to identify patterns, common user issues, and areas where the chatbot consistently excels or struggles. Keep the privacy implications of log analysis in mind: no PII should be shared.
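As a first pass at keeping PII out of chat log analysis, obvious identifiers can be masked before logs reach analysts. The sketch below is a minimal, assumption-heavy example: the two regular expressions catch only common email and phone formats, will miss names and addresses, and may also mask long numeric strings such as order IDs. It is not a substitute for a proper PII detection process.

```python
import re

# Simple illustrative patterns; real-world PII detection needs far more coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


log_line = "Reach me at jane.doe@example.com or +1 (555) 123-4567."
print(redact_pii(log_line))
```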

Finally, there are the actual performance characteristics of each model:

- Response Time (Latency): Faster models enhance user experience, particularly for customer support or sales.

- Cost per Interaction: Analyze how pricing and resource requirements scale with usage.

- Scalability: Ensure the chosen model can efficiently scale as your chatbot grows in user volume and complexity.

The interplay between these performance factors will significantly impact both user satisfaction and operational costs, making them critical considerations in your final decision.
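Latency and cost are easiest to compare across candidates when they are reduced to a few numbers. The sketch below summarizes measured response times as median and 95th-percentile latency and estimates cost per interaction from token counts. The sample latencies and prices are made up, and the function names are illustrative.

```python
import statistics


def latency_summary(latencies_ms: list[float]) -> dict[str, float]:
    """Median and 95th-percentile latency from a list of measured response times."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50": statistics.median(latencies_ms), "p95": cuts[94]}


def interaction_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_usd_per_million: float,
    output_usd_per_million: float,
) -> float:
    """Cost of one interaction when input and output tokens are priced separately."""
    return (
        input_tokens * input_usd_per_million
        + output_tokens * output_usd_per_million
    ) / 1_000_000


# Hypothetical measurements: 20 response times from 100 ms to 2000 ms.
sample = [float(ms) for ms in range(100, 2100, 100)]
summary = latency_summary(sample)
print(summary)

# Hypothetical prices in USD per million tokens.
print(interaction_cost_usd(1000, 500, 2.0, 8.0))
```

Tracking the 95th percentile alongside the median matters because a model with a good average can still leave a noticeable share of users waiting.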

Step 4: Decision Matrix & Scoring

How can you wrap all this together? A decision matrix like this allows you to compare candidate models by assigning importance weights to each criterion based on your specific chatbot use case, then score each model accordingly:

| Criteria | Importance (1–5) | GPT-4.5 | Claude | LLaMA (fine-tuned) | DeepSeek (fine-tuned) |
|---|---|---|---|---|---|
| Conversational EQ | 5 | 5 | 4 | 3 | 3 |
| Accuracy & Hallucination | 4 | 5 | 4 | 3 | 4 |
| Domain Expertise | 4 | 4 | 4 | 3 | 4 |
| Reasoning & Analytical Skills | 4 | 5 | 4 | 3 | 4 |
| Creativity & Writing Quality | 3 | 5 | 5 | 4 | 3 |
| Multimodal Support | 2 | 4 | 3 | 2 | 2 |
| Cost and Scalability | 3 | 3 | 3 | 4 | 5 |

For each criterion, multiply the model's score by the importance value, then sum the results across all criteria. The model with the highest cumulative score is your strongest candidate under the weights you chose.
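The weighted-sum calculation can be sketched in a few lines. The weights and scores below are copied from the example matrix; the variable and function names are illustrative, and the scores themselves are sample values rather than measured results.

```python
# Importance weights (1-5) per criterion, taken from the example matrix.
weights = {
    "Conversational EQ": 5,
    "Accuracy & Hallucination": 4,
    "Domain Expertise": 4,
    "Reasoning & Analytical Skills": 4,
    "Creativity & Writing Quality": 3,
    "Multimodal Support": 2,
    "Cost and Scalability": 3,
}

# Per-model scores, listed in the same criterion order as `weights`.
scores = {
    "GPT-4.5": [5, 5, 4, 5, 5, 4, 3],
    "Claude": [4, 4, 4, 4, 5, 3, 3],
    "LLaMA (fine-tuned)": [3, 3, 3, 3, 4, 2, 4],
    "DeepSeek (fine-tuned)": [3, 4, 4, 4, 3, 2, 5],
}


def weighted_total(model_scores: list[int]) -> int:
    """Sum of score * importance across all criteria."""
    return sum(w * s for w, s in zip(weights.values(), model_scores))


totals = {model: weighted_total(s) for model, s in scores.items()}
for model, total in sorted(totals.items(), key=lambda item: item[1], reverse=True):
    print(f"{model}: {total}")
# GPT-4.5: 113
# Claude: 98
# DeepSeek (fine-tuned): 91
# LLaMA (fine-tuned): 79
```

Changing the weights changes the ranking. For example, raising the importance of Cost and Scalability narrows the gap between the open-source and proprietary candidates, which is why the weights should reflect your own use case rather than these sample values.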

Balancing Capabilities, Constraints, and Costs

The ideal LLM for your chatbot isn't necessarily the most powerful or highest-ranked on benchmarks—it's the one that aligns with your specific needs, constraints, and objectives. Whether you choose the turnkey reliability of proprietary models or the flexibility and control of open-source alternatives, success comes from thoughtful evaluation and testing with your actual use cases.

As the capabilities gap between model types continues to narrow, focus less on chasing the latest breakthrough and more on building a solution that can evolve alongside both your requirements and the rapidly advancing technology landscape. The most effective implementations often combine multiple approaches, leveraging each model's strengths while mitigating limitations.

By applying the decision framework outlined here, you'll be well-positioned to make an informed choice that delivers exceptional experiences for your users while meeting your organization's technical and business requirements.