We've all been there: you step away from your chat application for a bit, and when you return, you're greeted by a mountain of unread messages across multiple channels.

Catching up can be a daunting and time-consuming task. But what if AI could lend a hand?

In this tutorial, we'll show you how to supercharge your Next.js chat application by integrating AI-generated summaries for unread messages. Users will be able to click a "What did I miss?" button and instantly get concise summaries for each channel they haven't caught up on, all powered by a local Large Language Model (LLM) for optimal performance and user privacy.

Here's a sneak peek of what we'll build:

We'll be using Next.js as our React framework, Shadcn UI for sleek UI components, Stream for the robust chat backend, and we'll run an LLM locally using LM Studio. This approach ensures your users' data stays private and the summarization is snappy.

Plus, this build allows easy swapping to cloud-based LLM providers like OpenAI, Gemini, or Anthropic if your needs change.

Let's dive in.

Set Up the Project

To get up and running quickly, we've prepared a starter project.

- The Starter Repository:

You can find the complete code for this project on GitHub, including a starter branch.

The starter branch (conveniently called starter) contains the basic chat application built with Next.js and Stream Chat, without the AI summary feature we're about to add.

- Cloning the Project:

Open your terminal and clone the starter branch:

```bash
git clone -b starter --single-branch git@github.com:GetStream/nextjs-elevenlabs-chat-summaries.git
cd nextjs-elevenlabs-chat-summaries
```

- Environment Variables:

Our chat application uses Stream Chat. You'll need API keys from Stream.

- Create a new file named .env.local in the root of your project.

- Add your Stream API key and secret:

```
NEXT_PUBLIC_STREAM_API_KEY=YOUR_STREAM_API_KEY
STREAM_SECRET=YOUR_STREAM_SECRET
```

- If you don't have a Stream account, you can sign up for a free account (as shown at 02:31 in the video). Once signed in, navigate to your app in the Stream Dashboard to find your Key and Secret.

- Installing Dependencies and Running:

With your environment variables in place, install the project dependencies and start the development server:

```bash
yarn install
yarn dev
```

Open your browser, and navigate to http://localhost:3000. You should see the basic chat application running.

Run Your Local LLM with LM Studio

For this tutorial, we'll use a Large Language Model (LLM) running locally on your machine. This is great for development as it keeps data private and avoids API costs. We'll use LM Studio.

- Introducing LM Studio:

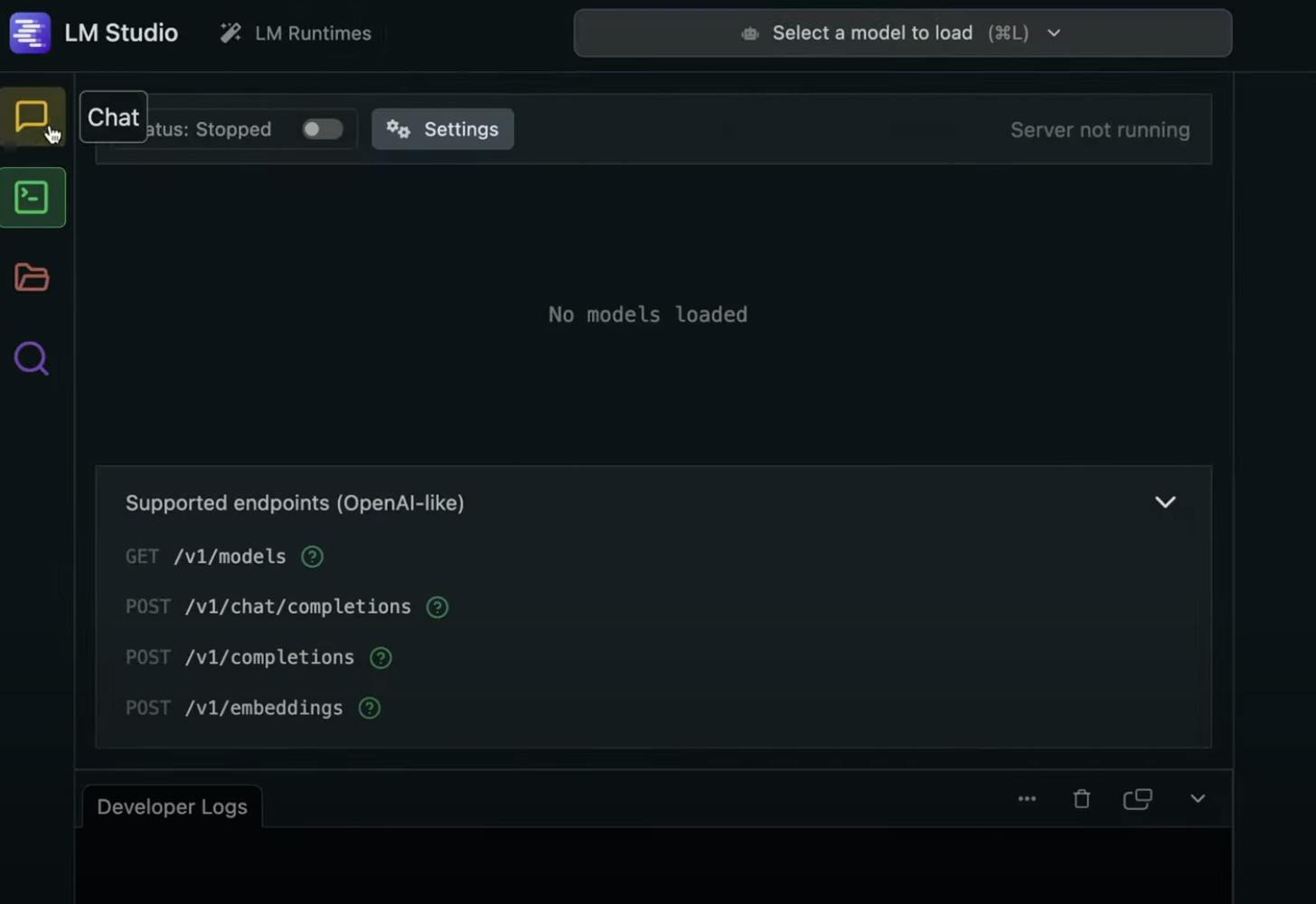

LM Studio makes it easy to discover, download, and run various open-source LLMs on your computer. It also provides an OpenAI-compatible local server.

- Setting up LM Studio:

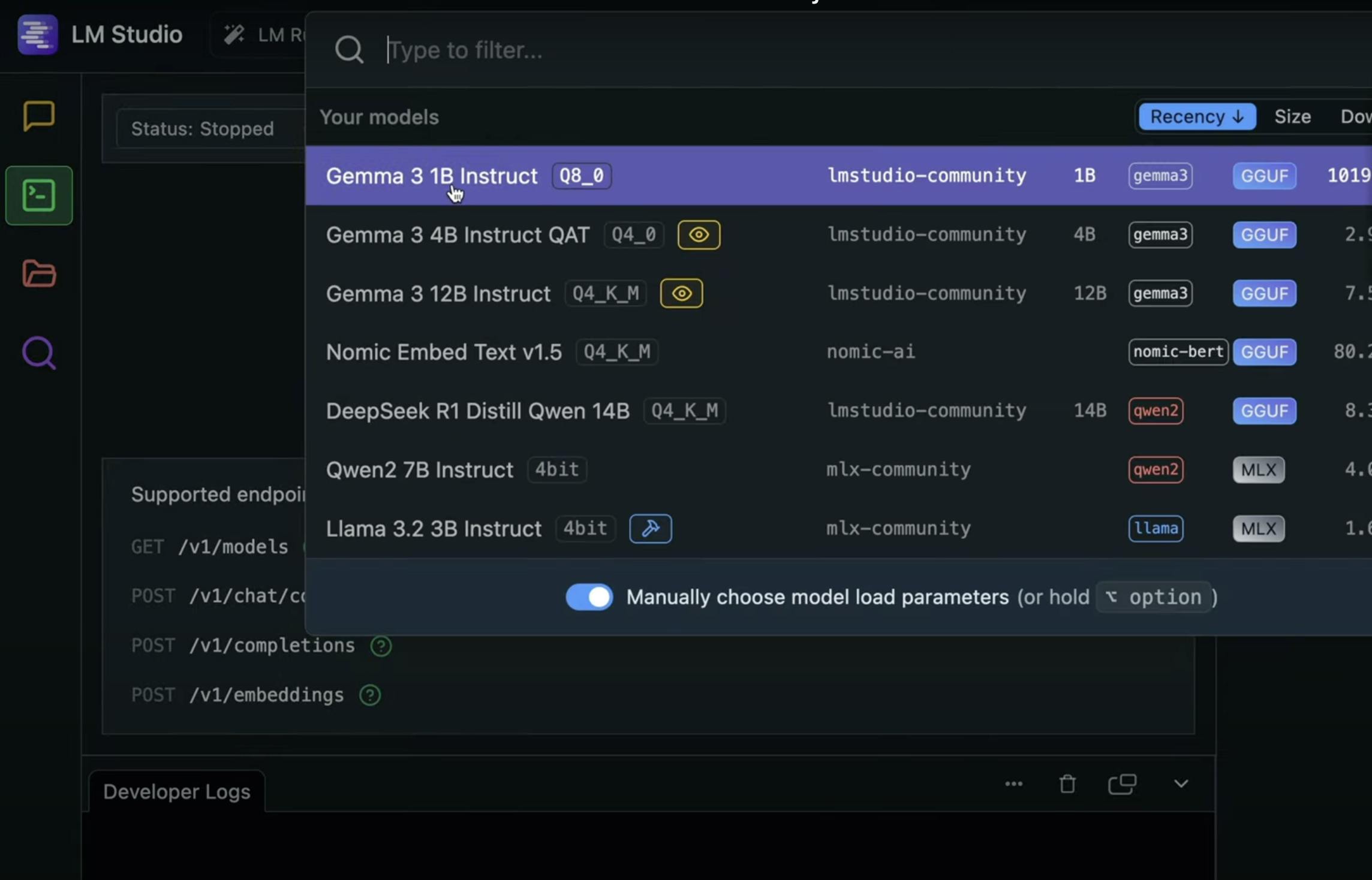

- In the search tab (magnifying glass icon), search for a model. The video uses a "Gemma 3 1B Instruct" model (e.g., gemma-3-1b-it-q8_0.gguf by lmstudio-community). Download a suitable quantization (QAT versions are often good for local use).

- Once downloaded, go to the local server tab (icon that looks like </>).

- Select the model you just downloaded from the dropdown at the top.

- Click "Start Server". LM Studio will typically start the server on http://127.0.0.1:1234.

- You'll see a list of supported OpenAI-like API endpoints, including /v1/chat/completions, which we'll use.
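Before wiring the server into the app, it can help to confirm that it is reachable. The following is a minimal sketch, assuming the default address from the step above and the OpenAI-style `GET /v1/models` listing; the helper names (`modelsUrl`, `parseModelIds`, `listLocalModels`) are made up for this example and are not part of the starter project.

```typescript
// Hypothetical helpers for checking that the local LM Studio server is up.
// Assumes the default address from the step above; adjust if yours differs.
const LM_STUDIO_BASE_URL = 'http://127.0.0.1:1234';

// Shape of an OpenAI-style model listing: { data: [{ id: "..." }, ...] }.
type ModelList = { data?: { id?: unknown }[] };

// Build the URL of the model-listing endpoint, tolerating a trailing slash.
function modelsUrl(baseUrl: string): string {
  return `${baseUrl.replace(/\/+$/, '')}/v1/models`;
}

// Pull the model identifiers out of the response body, skipping malformed entries.
function parseModelIds(body: ModelList): string[] {
  return (body.data ?? [])
    .map((model) => model.id)
    .filter((id): id is string => typeof id === 'string');
}

// Ask the server which models it exposes. Rejects if the server is not running.
async function listLocalModels(
  baseUrl: string = LM_STUDIO_BASE_URL
): Promise<string[]> {
  const response = await fetch(modelsUrl(baseUrl));
  if (!response.ok) {
    throw new Error(`LM Studio responded with status ${response.status}`);
  }
  return parseModelIds((await response.json()) as ModelList);
}
```

If `listLocalModels()` rejects, the server is most likely not started or is listening on a different port.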

With your local LLM server running, we're ready to build the UI for our summaries.

Build the "What Did I Miss?" UI

We'll create a modal dialog that appears when the user clicks a "What did I miss?" button. This dialog will display a table of channels and their AI-generated summaries.

- Create UnreadMessageSummaries.tsx:

In your components folder, create a new file named UnreadMessageSummaries.tsx.

Start with a basic component structure (find the full file here):

```tsx
// Import paths below assume the default Shadcn UI alias (@/components/ui);
// adjust them if your project is configured differently.
import { useState } from 'react';
import type { Channel, OwnUserResponse, UserResponse } from 'stream-chat';
import type { DefaultStreamChatGenerics } from 'stream-chat-react';
import {
  AlertDialog,
  AlertDialogCancel,
  AlertDialogContent,
  AlertDialogDescription,
  AlertDialogFooter,
  AlertDialogHeader,
  AlertDialogTitle,
  AlertDialogTrigger,
} from '@/components/ui/alert-dialog';
import { Button } from '@/components/ui/button';
import { Skeleton } from '@/components/ui/skeleton';
import {
  Table,
  TableBody,
  TableCell,
  TableHead,
  TableHeader,
  TableRow,
} from '@/components/ui/table';

type UnreadMessageSummariesProps = {
  loadedChannels: Channel[];
  user:
    | OwnUserResponse<DefaultStreamChatGenerics>
    | UserResponse<DefaultStreamChatGenerics>
    | undefined;
};

export default function UnreadMessageSummaries({
  loadedChannels,
  user,
}: UnreadMessageSummariesProps) {
  const [channelSummaries, setChannelSummaries] = useState<
    { channelName: string; summary: string }[]
  >([]);

  return (
    <section className='flex items-center justify-center px-4 py-4 w-full'>
      <AlertDialog>
        <AlertDialogTrigger asChild>
          <Button
            variant='default'
            className='cursor-pointer'
            onClick={() => {
              const fetchChannelSummaries = async () => {
                try {
                  // Get channels with unread messages
                  const channelsWithUnread = loadedChannels?.filter(
                    (channel) =>
                      channel.state.read[user?.id as string]?.unread_messages > 0
                  );
                  if (!channelsWithUnread?.length) return;

                  // Show one skeleton row per channel while the summaries load
                  const placeHolderSummaries = channelsWithUnread.map(
                    (channel) => ({
                      channelName: channel.data?.name || 'Unnamed Channel',
                      summary: '',
                    })
                  );
                  setChannelSummaries(placeHolderSummaries);

                  // Fetch summaries for each channel
                  const summaries = await Promise.all(
                    channelsWithUnread.map(async (channel) => {
                      return getSummaryForChannel(channel);
                    })
                  );

                  // Update state with new summaries
                  setChannelSummaries(summaries);
                } catch (error) {
                  console.error('Error fetching channel summaries:', error);
                }
              };
              fetchChannelSummaries();
            }}
          >
            What did I miss?
          </Button>
        </AlertDialogTrigger>
        <AlertDialogContent className='min-w-[70%] max-w-3xl w-full'>
          <AlertDialogHeader>
            <AlertDialogTitle>Unread Message Summaries</AlertDialogTitle>
            <AlertDialogDescription>
              Here are AI-generated summaries for channels with unread messages:
              <Table className='w-full mt-4'>
                <TableHeader>
                  <TableRow>
                    <TableHead>Channel</TableHead>
                    <TableHead>Summary</TableHead>
                  </TableRow>
                </TableHeader>
                <TableBody>
                  {channelSummaries.map((item, index) => (
                    <TableRow key={index}>
                      <TableCell className='font-medium text-foreground'>
                        {item.channelName}
                      </TableCell>
                      <TableCell className='w-full text-muted-foreground whitespace-pre-line break-words space-y-2'>
                        {item.summary === '' && (
                          <>
                            <Skeleton className='w-full h-4' />
                            <Skeleton className='w-full h-4' />
                            <Skeleton className='w-full h-4' />
                          </>
                        )}
                        {item.summary !== '' && <>{item.summary}</>}
                      </TableCell>
                    </TableRow>
                  ))}
                </TableBody>
              </Table>
            </AlertDialogDescription>
          </AlertDialogHeader>
          <AlertDialogFooter>
            <AlertDialogCancel className='cursor-pointer'>
              Close
            </AlertDialogCancel>
            {/* Optional: Add an action like "Mark as Read" or similar */}
            {/* <AlertDialogAction>Mark All Read</AlertDialogAction> */}
          </AlertDialogFooter>
        </AlertDialogContent>
      </AlertDialog>
    </section>
  );

  async function getSummaryForChannel(channel: Channel): Promise<{
    channelName: string;
    summary: string;
  }> {
    // Build the request body once so the debug log shows exactly what is sent
    const requestBody = JSON.stringify({
      messages: [
        {
          role: 'system',
          content:
            'You are handed messages from a chat channel. Please summarize them in 2 sentences and ensure there is no missing information. Respond only with the summary that is relevant to the user and no boilerplate.',
        },
        {
          role: 'user',
          content: `Unread messages: ${getUnreadMessages(channel)}`,
        },
      ],
      response_format: {
        type: 'json_schema',
        json_schema: {
          name: 'summary_response',
          strict: 'true',
          schema: {
            type: 'object',
            properties: {
              summary: {
                type: 'string',
              },
            },
            required: ['summary'],
          },
        },
      },
    });
    console.log(
      `Getting summary for ${channel.data?.name} with body: ${requestBody}`
    );

    const response = await fetch('http://127.0.0.1:1234/v1/chat/completions', {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
      },
      body: requestBody,
    });

    if (!response.ok) {
      throw new Error(`Failed to fetch summary for ${channel.data?.name}`);
    }

    const data = await response.json();
    // The message content is itself a JSON string, so it is parsed a second time
    const content = JSON.parse(data.choices[0].message.content);
    console.log('content: ', content);

    return {
      channelName: channel.data?.name || 'Unnamed Channel',
      summary: content['summary'],
    };
  }

  function getUnreadMessages(channel: Channel): string[] {
    const messages = channel.state.messages;
    const numberOfUnreadMessages =
      channel.state.read[user?.id as string].unread_messages;
    return messages
      .slice(-numberOfUnreadMessages)
      .map((message) => `${message.user?.name}: ${message.text}`);
  }
}
```

- We import the necessary UI components from Shadcn UI (`AlertDialog`, `Button`, `Table`, `Skeleton`) and types from `stream-chat`.

- The component accepts `loadedChannels` and `user` as props.

- We use `useState` for `channelSummaries`, which holds the data for our table. The dialog's open state is managed by `AlertDialog` itself.

- The `AlertDialogTrigger` wraps our "What did I miss?" button.

- Inside `AlertDialogContent`, we set up a `Table` with "Channel" and "Summary" headers.

- The `TableBody` maps over `channelSummaries`. If a summary is empty (our initial loading state), we display three `Skeleton` components. Otherwise, we show the actual summary.

Implement the Summarization Logic

Now for the core AI part! We'll create functions to fetch unread messages, send them to our local LLM, and get back summaries.

Modify UnreadMessageSummaries.tsx:

```tsx
// Both functions live inside the component, so they can read the `user` prop.
function getUnreadMessages(channel: Channel): string[] {
  const messages = channel.state.messages;
  const numberOfUnreadMessages =
    channel.state.read[user?.id as string].unread_messages;
  return messages
    .slice(-numberOfUnreadMessages)
    .map((message) => `${message.user?.name}: ${message.text}`);
}

async function getSummaryForChannel(channel: Channel): Promise<{
  channelName: string;
  summary: string;
}> {
  const response = await fetch('http://127.0.0.1:1234/v1/chat/completions', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
    },
    body: JSON.stringify({
      messages: [
        {
          role: 'system',
          content:
            'You are handed messages from a chat channel. Please summarize them in 2 sentences and ensure there is no missing information. Respond only with the summary that is relevant to the user and no boilerplate.',
        },
        {
          role: 'user',
          content: `Unread messages: ${getUnreadMessages(channel)}`,
        },
      ],
      response_format: {
        type: 'json_schema',
        json_schema: {
          name: 'summary_response',
          strict: 'true',
          schema: {
            type: 'object',
            properties: {
              summary: {
                type: 'string',
              },
            },
            required: ['summary'],
          },
        },
      },
    }),
  });

  if (!response.ok) {
    throw new Error(`Failed to fetch summary for ${channel.data?.name}`);
  }

  const data = await response.json();
  const content = JSON.parse(data.choices[0].message.content);

  return {
    channelName: channel.data?.name || 'Unnamed Channel',
    summary: content['summary'],
  };
}
```

Key Changes and Explanations:

- getUnreadMessages(channel):

This helper function takes a Stream channel object and accesses channel.state.messages to get all messages in the channel.

channel.state.read[user.id as string]?.unread_messages gives us the count of unread messages for the current user.

With this unread message count, the function slices the messages array to isolate only the unread messages.

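Finally, we map these messages into a simple string format: "User Name: Message text". The function returns them as an array; when that array is interpolated into the prompt's template literal, JavaScript joins the entries with commas.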
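Two details of this helper are easy to miss: an array interpolated into a template literal is rendered comma-separated, and `slice(-0)` returns the whole array rather than nothing. The sketch below is one possible variant, not the tutorial's code, that joins with newlines and handles a zero count explicitly. The `ChatMessage` type and the `formatUnreadMessages` name are stand-ins invented for this example so that it runs without the Stream SDK.

```typescript
// Minimal stand-in for the fields of a Stream message that the summary needs.
// (Hypothetical type for this sketch; the real app uses the stream-chat types.)
type ChatMessage = { user?: { name?: string } | null; text?: string };

// Return the last `unreadCount` messages as one newline-separated transcript.
function formatUnreadMessages(
  messages: ChatMessage[],
  unreadCount: number
): string {
  // slice(-0) is the same as slice(0) and would return every message,
  // so handle "nothing unread" explicitly.
  if (unreadCount <= 0) return '';
  return messages
    .slice(-unreadCount)
    .map(
      (message) =>
        `${message.user?.name ?? 'Unknown user'}: ${message.text ?? ''}`
    )
    .join('\n');
}

const transcript = formatUnreadMessages(
  [
    { user: { name: 'Ana' }, text: 'Old message' },
    { user: { name: 'Ben' }, text: 'Is the release still on Friday?' },
    { user: { name: 'Ana' }, text: 'Yes, at 10am.' },
  ],
  2
);
console.log(transcript);
// Ben: Is the release still on Friday?
// Ana: Yes, at 10am.
```

A newline-separated transcript tends to be easier for a model to read than a comma-separated one, since commas also appear inside the messages themselves.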

- getSummaryForChannel(channel):

This is an async function that gets the summary for a single channel. It starts by calling the getUnreadMessages function to retrieve the relevant messages.

Next, it constructs a fetch request to our local LM Studio endpoint (http://127.0.0.1:1234/v1/chat/completions). The body of this request is particularly important due to prompt engineering. It includes two messages: a system message instructing the AI on how to perform the task (e.g., "summarize in 2 sentences, be relevant, no boilerplate") and a user message containing the actual unread messages.

One of the key features used here is response_format. By setting this to { type: "json_schema" } and supplying a schema, the function instructs the language model to return its summary in a structured JSON format like { "summary": "The AI's summary here." }, which greatly improves the reliability of parsing the output.

After fetching, we parse the response. In the OpenAI-style response format, the summary text is nested at data.choices[0].message.content. Since we requested JSON, this content is itself a JSON string, which we parse again to get the summary field.

It returns an object { channelName, summary }.
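The double parse described above throws if the model returns something other than the requested JSON, which can happen with small local models. A defensive sketch of that step follows; `extractSummary` is a hypothetical helper name, and the response shape is the OpenAI-style one the tutorial already relies on.

```typescript
// Hypothetical helper: pull the summary string out of a chat-completions
// response without assuming the model returned well-formed JSON.
function extractSummary(data: unknown): string | null {
  // The OpenAI-style shape is { choices: [{ message: { content: "<string>" } }] }.
  const content = (
    data as { choices?: { message?: { content?: unknown } }[] } | null
  )?.choices?.[0]?.message?.content;
  if (typeof content !== 'string') return null;

  try {
    // Because of response_format, content should itself be JSON: {"summary": "..."}.
    const parsed: unknown = JSON.parse(content);
    const summary = (parsed as { summary?: unknown } | null)?.summary;
    return typeof summary === 'string' ? summary : null;
  } catch {
    // The model produced something that is not valid JSON.
    return null;
  }
}

const ok = extractSummary({
  choices: [
    { message: { content: '{"summary":"Ben asked about the release."}' } },
  ],
});
const broken = extractSummary({
  choices: [{ message: { content: 'not json' } }],
});
console.log(ok); // Ben asked about the release.
console.log(broken); // null
```

Returning `null` instead of throwing lets the caller decide what to show for that channel, for example a short "Summary unavailable" message.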

- fetchChannelSummaries():

This async function is called when the "What did I miss?" button is clicked.

It first filters loadedChannels to find only those with unread messages for the current user.

Then, it creates placeHolderSummaries (with empty summary strings) and updates the channelSummaries state. This will make our UI show the skeleton loaders.

The function then uses Promise.all to call getSummaryForChannel for every channel that has unread messages. This allows all summarization requests to happen concurrently.

Once all promises resolve, it updates the channelSummaries state with the actual fetched summaries, replacing the skeletons in the UI.
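One property of Promise.all is worth keeping in mind here: it rejects as soon as any single request fails, so one failing channel would leave every row on its skeleton. The sketch below shows one way around that by catching errors per channel. It is an alternative under stated assumptions, not the tutorial's implementation: `summarizeAll` and the `Summarize` type are hypothetical, and the summarizer is passed in as a parameter so the example runs without a model server.

```typescript
type ChannelSummary = { channelName: string; summary: string };

// Any function that turns a channel name into a summary, e.g. a wrapper around
// getSummaryForChannel. It is passed in so the sketch stays self-contained.
type Summarize = (channelName: string) => Promise<string>;

// Summarize all channels concurrently; a failure in one channel becomes a
// fallback message in its row instead of rejecting the whole batch.
async function summarizeAll(
  channelNames: string[],
  summarize: Summarize
): Promise<ChannelSummary[]> {
  return Promise.all(
    channelNames.map(async (channelName) => {
      try {
        return { channelName, summary: await summarize(channelName) };
      } catch (error) {
        console.error(`Summary failed for ${channelName}:`, error);
        return { channelName, summary: 'Summary unavailable.' };
      }
    })
  );
}

// Example with a fake summarizer that fails for one channel.
const demoSummaries = summarizeAll(['general', 'random'], async (name) => {
  if (name === 'random') throw new Error('model timed out');
  return `Summary of #${name}`;
});
demoSummaries.then((rows) => console.log(rows));
```

The requests still run concurrently; only the error handling moves from the batch to the individual channel.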

- Updating onClick:

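Modify the onClick handler of the Button inside AlertDialogTrigger to call fetchChannelSummaries. The trigger opens the dialog on its own, so the handler only needs to start the fetch. This shorter form assumes fetchChannelSummaries is defined in the component body rather than inline in the handler:

```tsx
<AlertDialogTrigger asChild>
  <Button
    variant="default"
    className="cursor-pointer"
    onClick={async () => {
      await fetchChannelSummaries();
    }}
  >
    What did I miss?
  </Button>
</AlertDialogTrigger>
```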

Integrate the Feature into the Chat Application

Finally, let's add our new UnreadMessageSummaries component to the main chat interface. We'll put it in the CustomChannelList.tsx component, which is responsible for rendering the list of channels on the left.

Open components/CustomChannelList.tsx:

- Import the component:

```tsx
import UnreadMessageSummaries from './UnreadMessageSummaries';
```

- Determine if unread messages exist:

Inside the CustomListContainer component, check whether any loaded channel has unread messages for the current user:

```tsx
const unreadMessagesExist =
  loadedChannels?.some(
    (loadedChannel) =>
      loadedChannel.state.read[user?.id as string]?.unread_messages > 0
  ) ?? false;
```

- Render the component conditionally:

In the JSX returned by CustomListContainer, find the Channels heading. Just below it (but still within the main fragment), add the UnreadMessageSummaries component:

```tsx
<h2 className='px-4 py-2 text-lg font-semibold tracking-tight w-full'>
  Channels
</h2>
{unreadMessagesExist && loadedChannels && (
  <UnreadMessageSummaries loadedChannels={loadedChannels} user={user} />
)}
<ScrollArea className='h-full w-full'>{children}</ScrollArea>
```

We only render our summary component if `unreadMessagesExist` is true and `loadedChannels` is defined.
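The unread check in CustomChannelList.tsx and the filter in UnreadMessageSummaries.tsx both read the same `unread_messages` field through an `as string` cast. One option is a small shared helper that handles a missing user or missing read state in one place. This is a sketch only: `ChannelLike`, `unreadCountFor`, and `hasUnreadMessages` are hypothetical names, and the type is a minimal stand-in for the Stream channel object.

```typescript
// Minimal stand-in for the part of a Stream channel used here
// (hypothetical type; the real object comes from stream-chat).
type ChannelLike = {
  state: { read: Record<string, { unread_messages: number } | undefined> };
};

// Number of unread messages for a user, or 0 if the user is unknown
// or has no read state on this channel.
function unreadCountFor(
  channel: ChannelLike,
  userId: string | undefined
): number {
  if (!userId) return 0;
  return channel.state.read[userId]?.unread_messages ?? 0;
}

// True if at least one loaded channel has unread messages for the user.
function hasUnreadMessages(
  channels: ChannelLike[] | undefined,
  userId: string | undefined
): boolean {
  return (
    channels?.some((channel) => unreadCountFor(channel, userId) > 0) ?? false
  );
}
```

With a helper like this, the conditional render could read `hasUnreadMessages(loadedChannels, user?.id)`, and the filter in the summary component could reuse `unreadCountFor`.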

Demo of the Final Feature

Save all your files. Your Next.js app should hot-reload.

Now, if you have channels with unread messages:

- The "What did I miss?" button should appear above your channel list.

- Clicking it will open the modal.

- You'll briefly see the skeleton loaders.

- Then, the AI-generated summaries will populate the table!

Success! You've successfully integrated AI-powered summaries into your chat application.

What's Next?

In this tutorial, we've taken an existing Next.js and Stream Chat application and enhanced it with a powerful AI feature: summaries of unread messages. We utilized Shadcn UI for a clean interface and LM Studio to run a Gemma LLM locally, ensuring user privacy and good performance during development. The use of json_schema with the LLM API call was key to getting reliable, structured output.

Now what? Try adding:

- More AI chat features, like AI-generated channel titles.

- Group chat functionality to support multiple participants in a conversation.

- Livestreaming to your chat app to engage viewers in real time.

Also, this setup is quite flexible. If you wanted to switch to a cloud-based LLM like OpenAI's GPT models or Google's Gemini, you'd primarily need to change the API endpoint URL in getSummaryForChannel and supply that provider's API key and model name with the request.
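To make that switch less invasive, the endpoint details could be pulled out of getSummaryForChannel into a small configuration object. The sketch below assumes an OpenAI-compatible `/chat/completions` endpoint and Bearer-token authentication; `LlmProviderConfig` and `buildChatRequest` are hypothetical names, and any model name you pass in is your own choice rather than something the tutorial prescribes.

```typescript
// Hypothetical configuration type; none of these names come from the tutorial code.
type LlmProviderConfig = {
  baseUrl: string; // e.g. 'http://127.0.0.1:1234/v1' for LM Studio
  model?: string; // cloud providers generally require a model name
  apiKey?: string; // sent as a Bearer token when present
};

type ChatMessage = { role: 'system' | 'user'; content: string };

// Build the URL and fetch options for an OpenAI-compatible chat completion call.
function buildChatRequest(
  config: LlmProviderConfig,
  messages: ChatMessage[]
): {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
} {
  const headers: Record<string, string> = {
    'Content-Type': 'application/json',
  };
  if (config.apiKey) {
    headers['Authorization'] = `Bearer ${config.apiKey}`;
  }
  return {
    url: `${config.baseUrl.replace(/\/+$/, '')}/chat/completions`,
    init: {
      method: 'POST',
      headers,
      body: JSON.stringify({
        // Only include the model field when one is configured.
        ...(config.model ? { model: config.model } : {}),
        messages,
      }),
    },
  };
}

const localRequest = buildChatRequest({ baseUrl: 'http://127.0.0.1:1234/v1' }, [
  { role: 'user', content: 'Unread messages: ...' },
]);
console.log(localRequest.url); // http://127.0.0.1:1234/v1/chat/completions
```

The result can be passed to `fetch(localRequest.url, localRequest.init)`, and the `response_format` block from the tutorial would sit alongside `messages` in the body. Because the tutorial calls the endpoint from a client component, a cloud API key should stay on the server, for example behind a Next.js route handler, rather than being shipped to the browser.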

Thanks for following along, and happy coding!