Creating AI agents can be tricky. Users today expect a high level of polish from their apps, and features like streaming responses, table components, thinking indicators, charts, code and file components, etc. can be hard to implement across different platforms.

To simplify this process for developers, we're introducing new components and libraries to make your AI integration with Stream Chat easier than ever.

As part of this release, we are exposing:

New UI Components

Available on iOS, Android, React, and React Native, these components work well with Stream Chat but can also be used standalone. They facilitate the development of AI assistants similar to ChatGPT, Grok, Gemini, and others.

New NodeJS Packages

These include stream-chat-js-ai-sdk and stream-chat-langchain, which provide seamless integrations with Vercel's AI SDK and LangChain, respectively.

Stream Chat delivers the realtime layer and conversational memory, while the AI SDK and LangChain each offer a clean interface to multiple LLMs and support more advanced AI workflows.
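To make the "conversational memory" idea concrete, here is a minimal sketch of how stored chat history might be turned into the role/content list that most LLM chat APIs accept. The `ChatMessage` class, the `to_llm_messages` helper, and the `bot_user_id` parameter are hypothetical names used for illustration only; they are not part of Stream's SDKs or the packages above.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ChatMessage:
    """Hypothetical, simplified stand-in for a message stored in a channel."""
    user_id: str
    text: str


def to_llm_messages(
    history: List[ChatMessage],
    bot_user_id: str,
    max_messages: int = 20,
) -> List[Dict[str, str]]:
    """Convert the most recent chat messages into role/content pairs."""
    if max_messages <= 0:
        return []
    recent = history[-max_messages:]  # keep only the tail of the conversation
    result: List[Dict[str, str]] = []
    for msg in recent:
        if not msg.text.strip():
            continue  # skip messages without text (e.g. attachment-only)
        # Messages written by the bot user become "assistant" turns.
        role = "assistant" if msg.user_id == bot_user_id else "user"
        result.append({"role": role, "content": msg.text})
    return result
```

Capping the history with `max_messages` is one simple way to keep the prompt within the model's context window; a real integration might summarize older turns instead.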

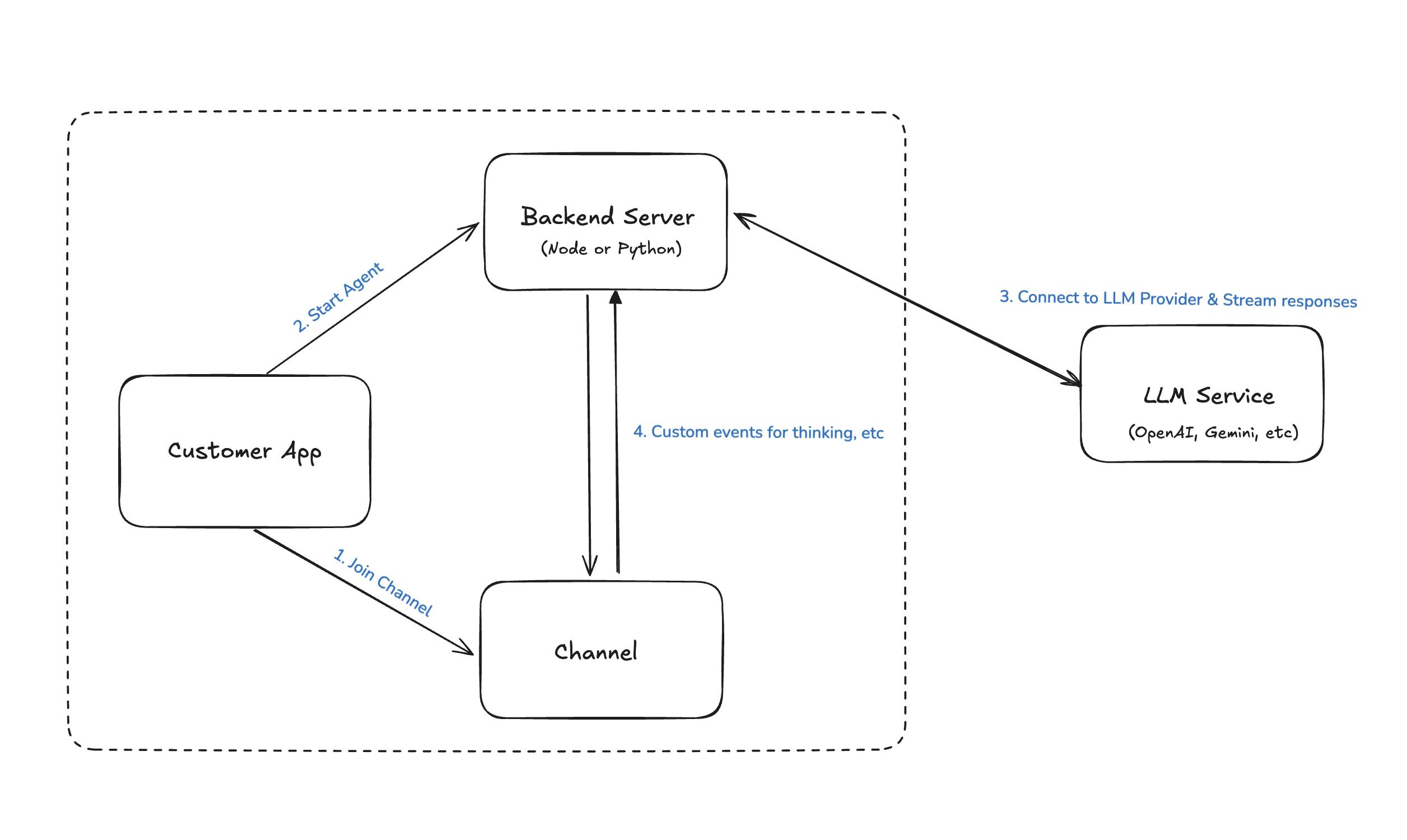

Additionally, we provide an example that uses a single backend server (NodeJS or Python) to manage the lifecycle of agents and handle the connection to external providers.
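As a rough illustration of what "managing the lifecycle of agents" can involve, the sketch below tracks one agent per channel and stops agents that have been idle for too long. It is an assumption-laden, in-memory outline rather than the code from the example server: `AgentManager`, its method names, and the idle timeout are all hypothetical, and a real server would also connect the bot user and the LLM provider in `start` and disconnect them in `stop`.

```python
import time
from typing import Callable, Dict, List


class AgentManager:
    """Hypothetical in-memory registry: one agent per channel, with idle cleanup."""

    def __init__(
        self,
        idle_timeout: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._idle_timeout = idle_timeout
        self._clock = clock  # injectable so the logic can be tested without sleeping
        self._last_seen: Dict[str, float] = {}

    def start(self, channel_id: str) -> bool:
        """Register an agent for the channel; return False if one already exists."""
        is_new = channel_id not in self._last_seen
        # A real server would connect the bot user and LLM client here when is_new.
        self._last_seen[channel_id] = self._clock()
        return is_new

    def stop(self, channel_id: str) -> bool:
        """Remove the channel's agent; return False if none was running."""
        # A real server would disconnect the bot user and release resources here.
        return self._last_seen.pop(channel_id, None) is not None

    def active(self) -> List[str]:
        """Return the channel IDs that currently have an agent, sorted."""
        return sorted(self._last_seen)

    def reap_idle(self) -> List[str]:
        """Stop every agent that has been idle longer than the timeout."""
        now = self._clock()
        idle = [
            channel_id
            for channel_id, seen in self._last_seen.items()
            if now - seen > self._idle_timeout
        ]
        for channel_id in idle:
            self.stop(channel_id)
        return sorted(idle)
```

Calling `reap_idle()` on a timer keeps abandoned conversations from holding open connections to the LLM provider.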

Here's an overview of the general approach you can take to integrate Stream Chat with different LLMs.

Your app can use our AI components to build the chatbot assistant’s UI faster. We offer components such as a streaming message view with advanced rendering support, a composer, a speech-to-text component, conversation suggestions, and more.

Your backend can use one of the provided packages to facilitate the integration with popular AI SDKs, such as LangChain and Vercel’s AI SDK.

Or, if you don’t want to depend on these packages, you can use our standalone NodeJS and Python examples, which show how to integrate Stream Chat’s server-side client with various LLMs (OpenAI and Anthropic are provided as examples).
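A standalone integration usually boils down to relaying an LLM's streamed output into a chat message while keeping the UI informed of progress. The sketch below shows that loop in isolation, under stated assumptions: `stream_reply`, the `set_state` and `update_text` callbacks, and the state strings `"thinking"`, `"generating"`, and `"done"` are hypothetical placeholders, not the names used by Stream's server-side client or the sample projects. In a real backend, the callbacks would wrap the calls that send an AI state event and update the message text.

```python
from typing import Callable, Iterable


def stream_reply(
    chunks: Iterable[str],
    set_state: Callable[[str], None],
    update_text: Callable[[str], None],
    flush_every: int = 5,
) -> str:
    """Relay streamed LLM chunks to a chat message, batching the updates."""
    set_state("thinking")  # shown while waiting for the first chunk
    text = ""
    pending = 0
    started = False
    for chunk in chunks:
        if not started:
            set_state("generating")  # first chunk arrived
            started = True
        text += chunk
        pending += 1
        # Push an update every `flush_every` chunks instead of on each chunk,
        # which limits the number of message-update calls.
        if pending >= flush_every:
            update_text(text)
            pending = 0
    if pending:
        update_text(text)  # flush whatever is left after the stream ends
    set_state("done")
    return text
```

For example, with `flush_every=2` and the chunks `["He", "llo", ", ", "wor", "ld", "!"]`, the message is updated three times and ends as `"Hello, world!"`. Batching is a design choice: it trades a little latency for fewer update calls.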

Choose Your Platform

To get started building with AI and Stream, simply pick the framework most suited to your application and follow the step-by-step instructions outlined in each:

- iOS: Learn how to create an assistant for iOS apps with Stream's iOS chat SDK.

- Android: Build powerful and responsive assistants for Android applications with Stream's Android chat SDK.

- React: Develop chat assistants for web applications with Stream's React Chat SDK.

- React Native: Step into the world of AI-enhanced chat apps with Stream's React Native SDK and our tutorial.

For your backend integration, you can try out one of these options:

- AI SDK Integration: Integrate Stream Chat server-side with the popular AI SDK from Vercel.

- LangChain Integration: Integrate Stream Chat server-side with LangChain, the popular agentic framework.

- Standalone Samples: If you prefer not to use additional dependencies, kick-start your integration with our sample projects in Python and NodeJS.

Start Building Today

Ready to take it for a spin? Choose your platform and give building an assistant a shot! Our team also created a helpful repo with examples for each SDK, including a sample backend implementation. Check it out and leave us a ⭐ if you find it helpful.

Our team is always active on different social channels, so once you're finished with your app, feel free to tag us on X or LinkedIn and let us know how it went!