Two buzzwords circulating in the developer ecosystem today are MCP and A2A.

Anthropic introduced the Model Context Protocol (MCP) in November 2024, and Google released Agent2Agent (A2A) in April 2025 with an extensive list of technology partners. Developers can use the MCP and A2A open standards to provide context to models when building AI applications. MCP helps agents talk to servers that expose tools, solving problems through instruction-oriented tasks. A2A, on the other hand, establishes connections between two or more agents to perform goal-oriented tasks.

This tutorial discusses A2A in more detail and shows practical implementations of both protocols. Since developers are already happy with MCP, does A2A replace or complement it? Continue reading to find out.

Why Use LLM Context Protocols

Standard protocols are essential when building AI agents and applications that must connect to, communicate with, and work seamlessly alongside external services. Emerging standards such as MCP and A2A give agents a universal way to talk to external tools and to each other, which can lead to more accurate responses.

The Evolution of LLM Tool-Use and Context Protocols

Over the past few years, AI applications have relied on function calling for tool integration. Function calling allows AI models to communicate with the outside world by running code to access external services. All leading LLM providers, such as OpenAI, Anthropic, xAI, Google, and Mistral, support function calling in their APIs.

In 2023, OpenAI introduced ChatGPT plugins, expanding their integration by allowing ChatGPT to connect to services such as Klarna Shopping, Instacart, Slack, Zapier, OpenTable, Wolfram, and more.

Together, function calling and ChatGPT plugins marked the first wave of AI app integration with third-party services.

Function Calling: A Real-World Use Case

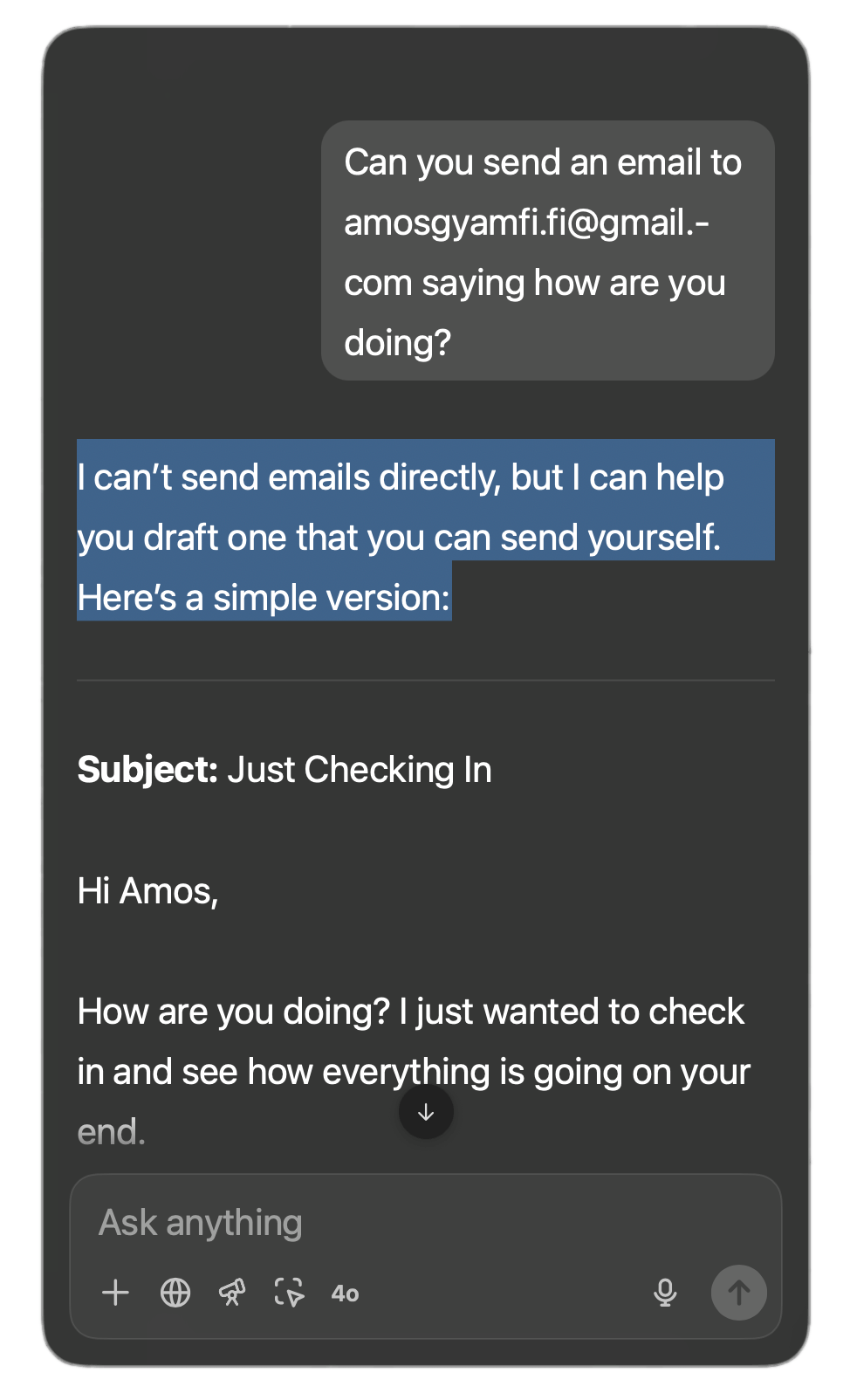

Let’s experiment quickly by asking ChatGPT to email a specified recipient. As seen in the image below, that isn't feasible because ChatGPT does not have the required built-in tools for sending emails.

We can solve the problem by arming ChatGPT's underlying language model with our defined Python tool using function calling via the OpenAI API.

    from openai import OpenAI

    client = OpenAI()

    tools = [{
        "type": "function",
        "name": "send_email",
        "description": "Send an email to a given recipient with a subject and message.",
        "parameters": {
            "type": "object",
            "properties": {
                "to": {
                    "type": "string",
                    "description": "The recipient email address."
                },
                "subject": {
                    "type": "string",
                    "description": "Email subject line."
                },
                "body": {
                    "type": "string",
                    "description": "Body of the email message."
                }
            },
            "required": [
                "to",
                "subject",
                "body"
            ],
            "additionalProperties": False
        }
    }]

    response = client.responses.create(
        model="gpt-4.1",
        input=[{"role": "user", "content": "Can you send an email to amosgyamfi.fi@gmail.com saying how are you doing?"}],
        tools=tools
    )

    print(response.output)

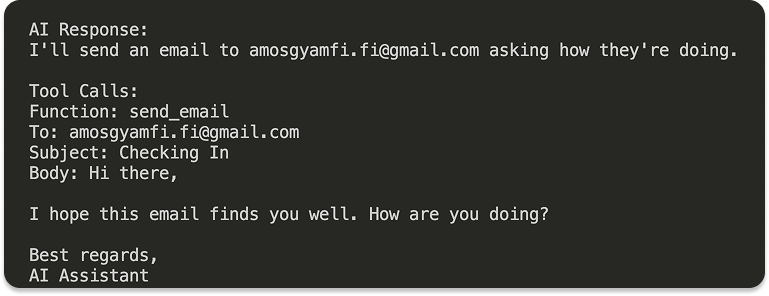

Running the above sample code will generate an output like this.

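You’ll notice that we now have a JSON output containing the model's response to the email request. The model did not send anything itself: it returned a function call that names the send_email tool and supplies the arguments to pass, and our application is responsible for executing that call.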
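
The step that follows a function call can be sketched as below: the application looks up the requested tool, runs it, and packages the result for the model. To keep the sketch runnable without an API key, `fake_output` is a hypothetical stand-in shaped like the `function_call` items in `response.output` (with `type`, `name`, `call_id`, and a JSON-encoded `arguments` string). `send_email`, `SENT`, `TOOL_REGISTRY`, and `run_tool_calls` are illustrative names, and the placeholder tool only records the message instead of sending real mail.

```python
import json
from types import SimpleNamespace

SENT = []  # stands in for a real mail transport


def send_email(to: str, subject: str, body: str) -> str:
    """Placeholder tool: records the email instead of sending it."""
    SENT.append({"to": to, "subject": subject, "body": body})
    return f"Email queued for {to}"


# Map tool names the model may request to local Python callables.
TOOL_REGISTRY = {"send_email": send_email}


def run_tool_calls(output_items):
    """Execute every function_call item and collect results keyed by call_id."""
    results = []
    for item in output_items:
        if getattr(item, "type", None) != "function_call":
            continue  # skip plain messages and other item types
        args = json.loads(item.arguments)  # arguments arrive as a JSON string
        result = TOOL_REGISTRY[item.name](**args)
        results.append({
            "type": "function_call_output",
            "call_id": item.call_id,
            "output": result,
        })
    return results


# Hypothetical stand-in for response.output from the earlier example.
fake_output = [
    SimpleNamespace(
        type="function_call",
        name="send_email",
        call_id="call_123",
        arguments=json.dumps({
            "to": "recipient@example.com",
            "subject": "Hello",
            "body": "How are you doing?",
        }),
    )
]

tool_results = run_tool_calls(fake_output)
print(tool_results)
```

In a real application, the collected `function_call_output` items would be appended to the conversation input and sent back to the model so it can write the final reply to the user.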

Function calling is powerful and gets the job done. However, there is no standard way to make a function call. In the above email-sending example, which uses the OpenAI API, the parameters for implementing the function calling differ from those used by Anthropic. This lack of standardization is a limitation because each LLM provider implements it differently. As a result, the email tool we defined here will also be incompatible with Anthropic or other LLM providers.
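
To make the incompatibility concrete, here is a small sketch that wraps the same email schema in two provider-specific shapes. The OpenAI shape matches the example above; the Anthropic shape uses an `input_schema` key and no `type` field. The helper names `to_openai_tool` and `to_anthropic_tool` are hypothetical and exist only for this illustration.

```python
import json

# JSON Schema for the tool's arguments; this part is shared by both providers.
EMAIL_PARAMETERS = {
    "type": "object",
    "properties": {
        "to": {"type": "string", "description": "The recipient email address."},
        "subject": {"type": "string", "description": "Email subject line."},
        "body": {"type": "string", "description": "Body of the email message."},
    },
    "required": ["to", "subject", "body"],
}


def to_openai_tool(name, description, parameters):
    """Wrap a schema in the shape used by the OpenAI Responses API."""
    return {
        "type": "function",
        "name": name,
        "description": description,
        "parameters": parameters,
    }


def to_anthropic_tool(name, description, parameters):
    """Wrap the same schema in the shape used by the Anthropic Messages API."""
    return {
        "name": name,
        "description": description,
        "input_schema": parameters,
    }


description = "Send an email to a given recipient with a subject and message."
openai_tool = to_openai_tool("send_email", description, EMAIL_PARAMETERS)
anthropic_tool = to_anthropic_tool("send_email", description, EMAIL_PARAMETERS)

print(json.dumps(anthropic_tool, indent=2))
```

The argument schema is identical in both cases, yet the wrapper differs, so a tool definition written for one provider has to be translated before another provider will accept it.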

Similarly, ChatGPT plugins are only accessible on the OpenAI platform. MCP was introduced to fix these limitations, and A2A promises to fix them differently. All aim at a universal approach to help agents solve user problems through different interaction and communication channels.

The Evolution of Agent-to-Agent Interactions

Agent-building frameworks such as Agno, CrewAI, LangChain, and OpenAI Agents SDK improve agent interactions by enabling handoffs and using agents as tools. These are essential features for all agentic applications, allowing some form of collaboration.

Agent Handoffs: Task Delegation

In a real-world scenario, think of this feature as a manager delegating specific tasks to specialized team members, who then complete them independently. The following code snippet represents a basic handoff using the OpenAI Agents SDK.

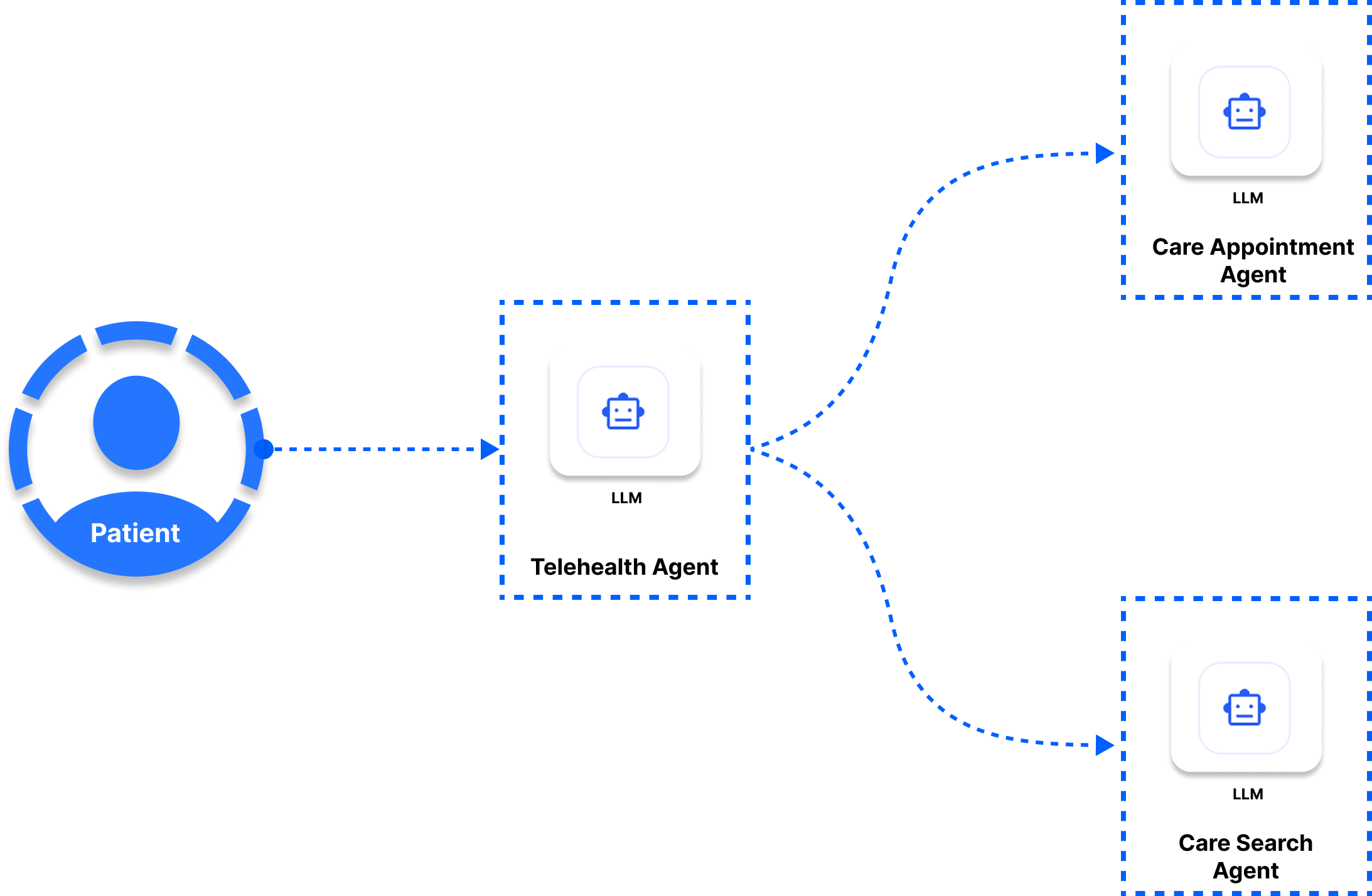

    from agents import Agent, handoff

    care_appointment_agent = Agent(name="Booking and scheduling agent")
    care_search_agent = Agent(name="Nearby search agent")
    telehealth_agent = Agent(name="Triage agent", handoffs=[care_appointment_agent, handoff(care_search_agent)])

In this code snippet, the telehealth_agent takes the patient's initial request, analyzes it, and delegates it to either the care_appointment_agent or the care_search_agent for further action. However, the care_appointment_agent and care_search_agent cannot collaborate directly. This is one of the limitations of current agentic frameworks that A2A promises to fix.

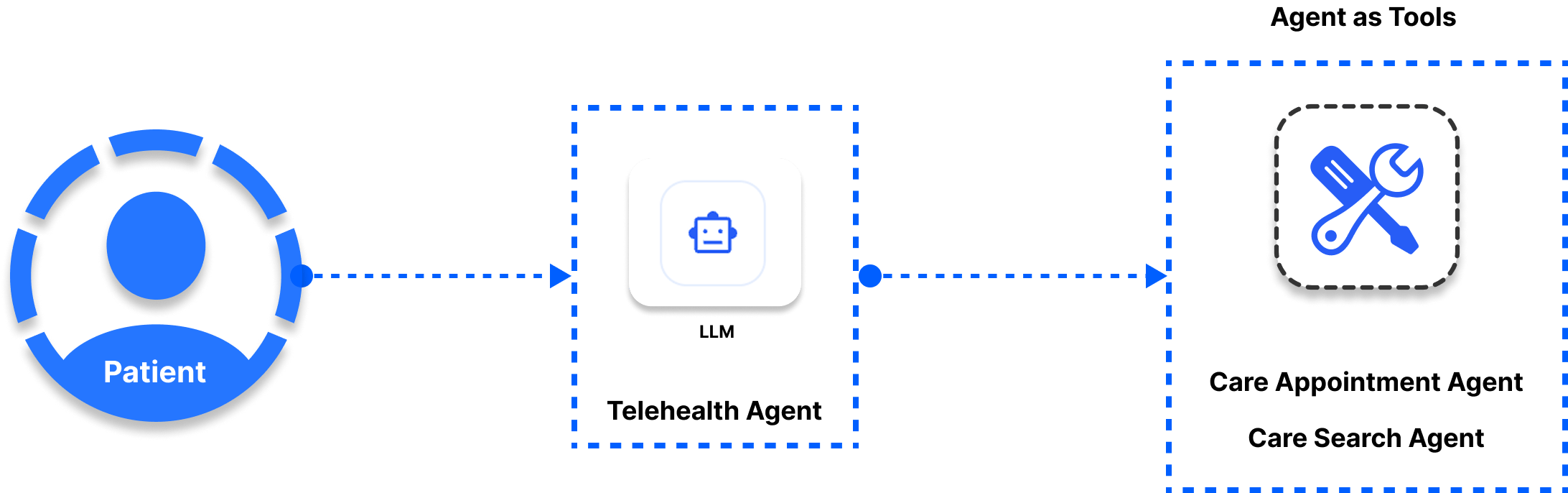

Agents as Tools

In the previous section, the telehealth_agent hands execution control to the care_appointment_agent and care_search_agent. However, in some workflows, we can model the telehealth_agent to use the other two agents as tools instead of giving them control to execute specific tasks.

    # Reuses care_appointment_agent and care_search_agent from the handoff example
    telehealth_agent = Agent(
        name="Triage agent",
        tools=[
            care_appointment_agent.as_tool(
                tool_name="schedule_book_appointments",
                tool_description="Book or schedule telehealth appointments based on the user's request",
            ),
            care_search_agent.as_tool(
                tool_name="search_doctors",
                tool_description="Search nearby doctors",
            ),
        ],
    )

When we define the telehealth_agent in the OpenAI Agents SDK, we can pass the tools parameter and list all the agents we want to use as tools.

The handoff and agents-as-tools features work well in multi-agent systems. However, A2A aims to go beyond that, allowing agents to find and negotiate with others easily.
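
The practical difference between the two patterns is who owns the final reply. The sketch below illustrates that in plain Python with no SDK involved. `MiniAgent`, `handoff_to`, and `coordinate_with_tools` are hypothetical names, and the handlers are canned functions standing in for LLM calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class MiniAgent:
    """Toy agent: a name plus a function that turns a request into a reply."""
    name: str
    handle: Callable[[str], str]

    def as_tool(self) -> Callable[[str], str]:
        # Expose the agent as a plain callable that another agent can invoke.
        return self.handle


def handoff_to(agent: MiniAgent, request: str) -> tuple:
    """Handoff: control moves to the specialist, which answers the user itself."""
    return agent.name, agent.handle(request)


def coordinate_with_tools(coordinator: str, tools: dict, request: str) -> tuple:
    """Agents as tools: the coordinator calls specialists and keeps control."""
    findings = [f"{name}: {tool(request)}" for name, tool in tools.items()]
    return coordinator, " | ".join(findings)


care_search = MiniAgent("Nearby search agent", lambda r: "2 dermatologists nearby")
care_booking = MiniAgent("Booking and scheduling agent", lambda r: "slot held for 10:00")

request = "Book a dermatologist near me"

# Handoff: the reply is attributed to the specialist.
print(handoff_to(care_search, request))

# Agents as tools: the reply is attributed to the coordinator.
tools = {
    "search_doctors": care_search.as_tool(),
    "schedule_book_appointments": care_booking.as_tool(),
}
print(coordinate_with_tools("Triage agent", tools, request))
```

In both patterns the specialists never talk to each other directly; everything flows through the coordinator, which is the gap A2A targets.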

What is A2A Protocol?

MCP is an excellent choice for providing context to AI, but what happens when a task is too complex for a single agent? Tools can solve many complicated problems. However, in an agentic workflow with several steps and many moving parts, MCP tools alone may not be enough for a chain of complex planning processes. Specialized agents must collaborate on planning and execution to address this limitation.

That’s exactly the kind of solution A2A offers for AI systems.

A2A is an emerging standard that allows agents in multi-agent systems to communicate with each other to solve problems. As with MCP, developers have been discussing A2A on social platforms such as X, Reddit, LinkedIn, and YouTube since Google announced it. A2A and MCP are different, complementary technologies that address different use cases in AI application development rather than competing. Currently, A2A has more than 50 technology partners contributing to it, including Accenture, Atlassian, Cohere, Salesforce, and many others.

How Does A2A Protocol Work?

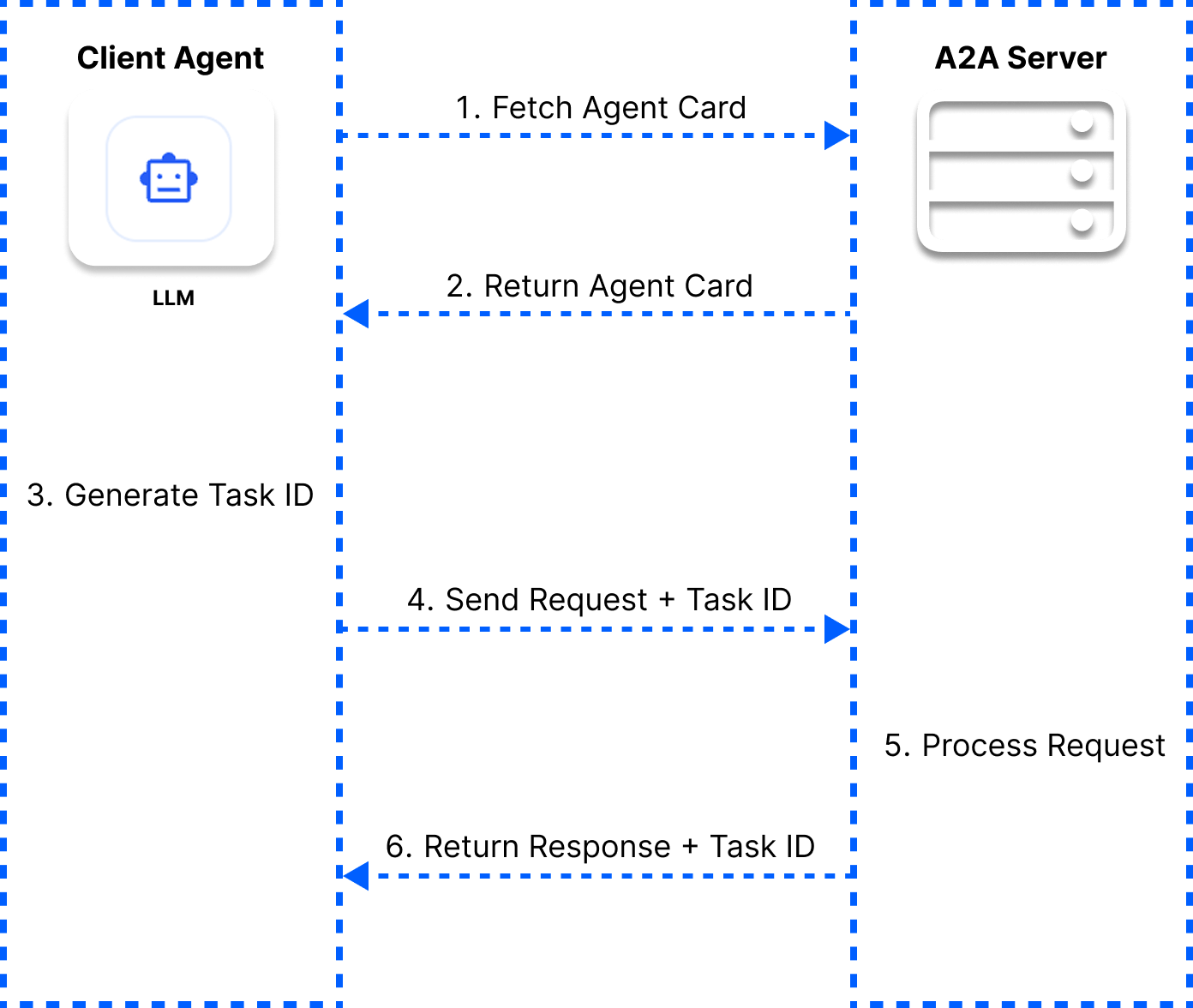

A2A is built on a few core components: agent card, server, client, task, message, and streaming. Agents use the card to interact with one another through a server that exposes an HTTP endpoint. The A2A client can be another agent or any AI application that consumes the A2A service. To communicate, the client initiates a task with a unique identifier by sending a message. You can think of messages as the back-and-forth communication between a client and the A2A server. As with a typical API, once a message is sent, the server returns a response to the client, and that response can be streamed.

Agents Configuration and Discovery

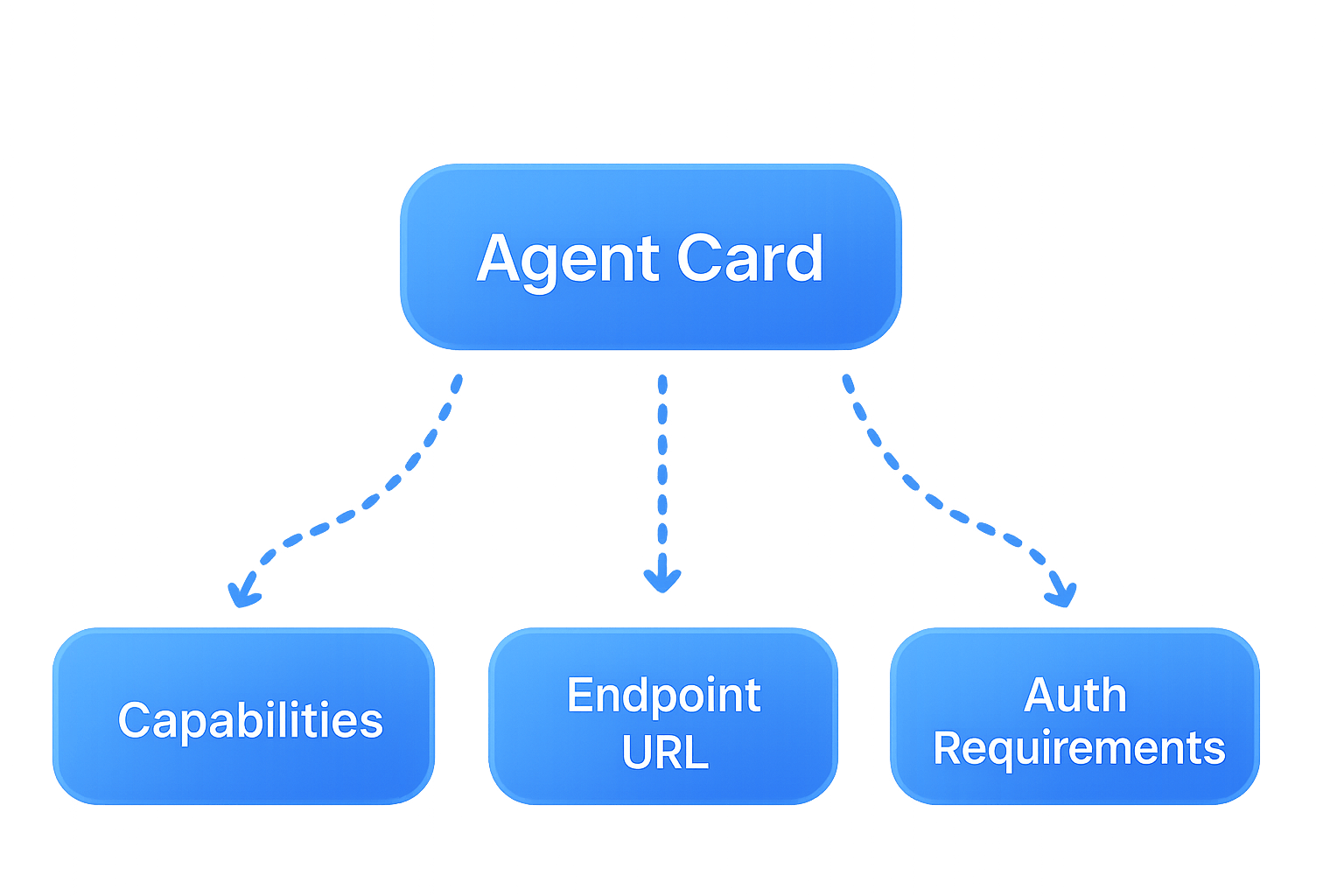

In A2A, agents can discover others via HTTP by their public agent cards. A2A uses a concept called an agent card to enable agents to know what they can do together through collaboration.

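The code snippet below sketches agent discovery in its basic form: a resolver looks up the agent card for each capability. Treat the class and method names as illustrative rather than exact SDK signatures.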

    # Agent discovery in A2A
    from a2a.client import A2AClient, A2ACardResolver

    # Initialize client & card resolver
    client = A2AClient()
    card_resolver = A2ACardResolver()

    # Find agents with their capabilities
    telehealth_agent_card = card_resolver.resolve_card("telehealth_planning")
    care_appointment_agent_card = card_resolver.resolve_card("schedule_book_appointment")
    care_search_agent_card = card_resolver.resolve_card("find_doctors")

Consider an agent card as a resume, which recruiters typically use to discover a potential candidate's skills, capabilities, and location. The main components of an agent card include the following.

- Agent capabilities: Describes the skills of a particular agent.

- Endpoint URL: A web-hosted URL.

- Authentication requirements: Implementations for securing agent cards.
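
To make these three components concrete, here is a hedged sketch of what an agent card might contain, written as a Python dictionary and serialized to JSON. The field names (`name`, `url`, `capabilities`, `authentication`, `skills`) follow the general shape of the A2A specification as published at launch, but the spec is evolving. Treat the exact keys, the example URL, the `REQUIRED_FIELDS` list, and the helper functions as assumptions made for this sketch, not a definitive schema.

```python
import json

# Illustrative agent card; the URL and skill details are made up for this example.
care_search_card = {
    "name": "Care Search Agent",
    "description": "Finds nearby doctors by specialty.",
    "url": "https://agents.example.com/care-search",  # endpoint URL
    "version": "1.0.0",
    "capabilities": {"streaming": True},
    "authentication": {"schemes": ["bearer"]},  # authentication requirements
    "skills": [  # agent capabilities
        {
            "id": "find_doctors",
            "name": "Find doctors",
            "description": "Search for doctors near a location.",
        }
    ],
}

# Fields this sketch treats as mandatory (an assumption, not the official list).
REQUIRED_FIELDS = ("name", "url", "capabilities", "authentication", "skills")


def missing_card_fields(card: dict) -> list:
    """Return the required top-level fields that are absent from a card."""
    return [field for field in REQUIRED_FIELDS if field not in card]


def skill_ids(card: dict) -> list:
    """List the skill identifiers a card advertises."""
    return [skill["id"] for skill in card.get("skills", [])]


print(json.dumps(care_search_card, indent=2))
print("Missing fields:", missing_card_fields(care_search_card))
print("Skills:", skill_ids(care_search_card))
```

A client that receives a card like this has what it needs to decide whether the agent is useful (skills), where to send requests (URL), and how to authenticate.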

A Typical Architecture of A2A

The A2A architecture comprises three main components: user (someone who uses the agentic system), client (sends user requests to the server), and server (hosts agents and interacts with the client back and forth).

A typical A2A exchange follows a six-step process. First, the client requests an agent card from the A2A server. Second, the server returns the card's content. Third, the client uses that information to generate a unique identifier for a new task (the request to be made). Fourth, the client attaches the identifier to the request, which takes the form of a JSON payload, and sends it to the server. Fifth, the A2A server processes the request. Finally, the server returns the response to the client.
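
The exchange described above can be sketched with an in-memory stand-in for the server, so it runs without any network access. `FakeA2AServer`, its methods, `build_task_request`, and the payload layout are hypothetical. The JSON-RPC style envelope and the `tasks/send` method name mirror the early A2A specification, but they are assumptions here and should be checked against the current version before use.

```python
import json
import uuid


class FakeA2AServer:
    """In-memory stand-in for an A2A server (hypothetical, for illustration)."""

    def get_agent_card(self) -> dict:
        # The client asks for the card and the server returns its content.
        return {"name": "Care Search Agent", "url": "https://agents.example.com/care-search"}

    def handle(self, raw_request: str) -> str:
        # The server processes the JSON request.
        request = json.loads(raw_request)
        task_id = request["params"]["id"]
        text = request["params"]["message"]["parts"][0]["text"]
        # The response, tagged with the same task id, goes back to the client.
        return json.dumps({
            "jsonrpc": "2.0",
            "id": request["id"],
            "result": {"id": task_id, "status": {"state": "completed"}, "echo": text},
        })


def build_task_request(text: str) -> dict:
    """Create a unique task id and attach it to a JSON payload."""
    return {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tasks/send",  # assumed method name
        "params": {
            "id": str(uuid.uuid4()),  # unique task identifier
            "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
        },
    }


server = FakeA2AServer()
card = server.get_agent_card()
target_url = card["url"]  # where a real client would POST the payload
request = build_task_request("Find a dermatologist near me")
response = json.loads(server.handle(json.dumps(request)))

print(target_url)
print(response["result"]["status"]["state"])
```

The task identifier generated on the client side is what lets both parties correlate later messages and status updates with the same piece of work.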

Differences Between A2A and MCP

In the official announcement of A2A, Google highlights that A2A complements MCP (A2A ❤️ MCP). As a developer, why should you even care about both protocols? Let’s look at the key differences.

Specification of Capabilities

In A2A, agents can easily discover other agents and what they can do in a multi-agent system. That is not the case for an MCP-supported agentic system. You can build a multi-agent MCP system in which one agent is designed and instructed to assign tasks to others. However, only that handoff agent knows about the others; the remaining agents cannot discover one another.

Task Handling

In A2A, an agent responsible for planning can delegate tasks to other agents and work with them as a team, which supports multi-stage interactions. MCP, on the other hand, lets agents use specialized tools for single-stage interactions through function calling, as seen in the code snippet below.

    # An MCP tool call
    try:
        result = client.call_tool("get_appointment", {"time": "10:00 AM"})
        # Handle result
    except Exception as e:
        # Handle error
        print(f"Failed tool call: {e}")

Flexibility

A2A is platform-independent. The agents can come from different vendors, be hosted on different platforms, or be built with frameworks like Agno, CrewAI, LangChain, OpenAI Agents SDK, and Agent Development Kit. This independence provides communication flexibility, allowing agents to communicate seamlessly with one another regardless of their underlying architecture.

Communication Approach

MCP uses precise low-level tools or function specifications through a structured schema to define all required data types, while A2A uses a high-level description for agents (agent card). The code snippet below gives you an overview of such a schema. Here, we define the tool parameters with their matching types.

    # MCP example: Book Telehealth appointment (Structured schema)
    result = client.appointment_tool(
        "book_telehealth_appointment",
        {
            "type": "object",
            "properties": {
                "patient_name": {"type": "string"},
                "appointment_date": {"type": "string", "format": "date"},
                "appointment_time": {"type": "string", "format": "time"},
                "doctor_specialty": {"type": "string"},
                "reason": {"type": "string"}
            },
            "required": ["patient_name", "appointment_date", "appointment_time", "doctor_specialty", "reason"],
            "example": {
                "patient_name": "John Doe",
                "appointment_date": "2025-05-05",
                "appointment_time": "10:00",
                "doctor_specialty": "Dermatology",
                "reason": "Skin rash consultation"
            }
        }
    )

The above snippet's schema ensures structured data in and out of our AI system, making it highly reliable and strict.
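
In practice, "strict" means arguments can be checked against the schema before a tool ever runs. Below is a minimal, dependency-free sketch of such a check. It covers only the `required` list and the `string` type, `validate_arguments` is a hypothetical helper, and a production system would more likely rely on a full JSON Schema validator.

```python
APPOINTMENT_SCHEMA = {
    "type": "object",
    "properties": {
        "patient_name": {"type": "string"},
        "appointment_date": {"type": "string", "format": "date"},
        "appointment_time": {"type": "string", "format": "time"},
        "doctor_specialty": {"type": "string"},
        "reason": {"type": "string"},
    },
    "required": ["patient_name", "appointment_date", "appointment_time", "doctor_specialty", "reason"],
}

# Only the JSON Schema types this sketch understands.
PYTHON_TYPES = {"string": str}


def validate_arguments(schema: dict, arguments: dict) -> list:
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []
    # Every field listed under "required" must be present.
    for field in schema.get("required", []):
        if field not in arguments:
            problems.append(f"missing required field: {field}")
    # Every supplied field must be declared and have the declared type.
    for field, value in arguments.items():
        spec = schema["properties"].get(field)
        if spec is None:
            problems.append(f"unexpected field: {field}")
            continue
        expected = PYTHON_TYPES.get(spec["type"])
        if expected is not None and not isinstance(value, expected):
            problems.append(f"{field} should be of type {spec['type']}")
    return problems


good = {
    "patient_name": "John Doe",
    "appointment_date": "2025-05-05",
    "appointment_time": "10:00",
    "doctor_specialty": "Dermatology",
    "reason": "Skin rash consultation",
}
bad = {"patient_name": "John Doe", "appointment_time": 10}

print(validate_arguments(APPOINTMENT_SCHEMA, good))
print(validate_arguments(APPOINTMENT_SCHEMA, bad))
```

Note that this sketch ignores the `format` hints, so a malformed date string would still pass.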

On the other hand, A2A enables interaction between agents using natural language. In the sample code snippet below, we initialize a task object and send a message in natural language. This provides a more conversational approach to solving a task.

    # A2A example: Telehealth appointment booking task
    telehealth_task = Task(
        id="task-123",
        messages=[
            Message(
                role="user",
                parts=[
                    TextPart(
                        text="I’d like to book a telehealth appointment with a dermatologist on May 5th at 10:00 AM for a skin rash."
                    )
                ]
            )
        ]
    )

From A2A vs MCP to A2A ❤️ MCP

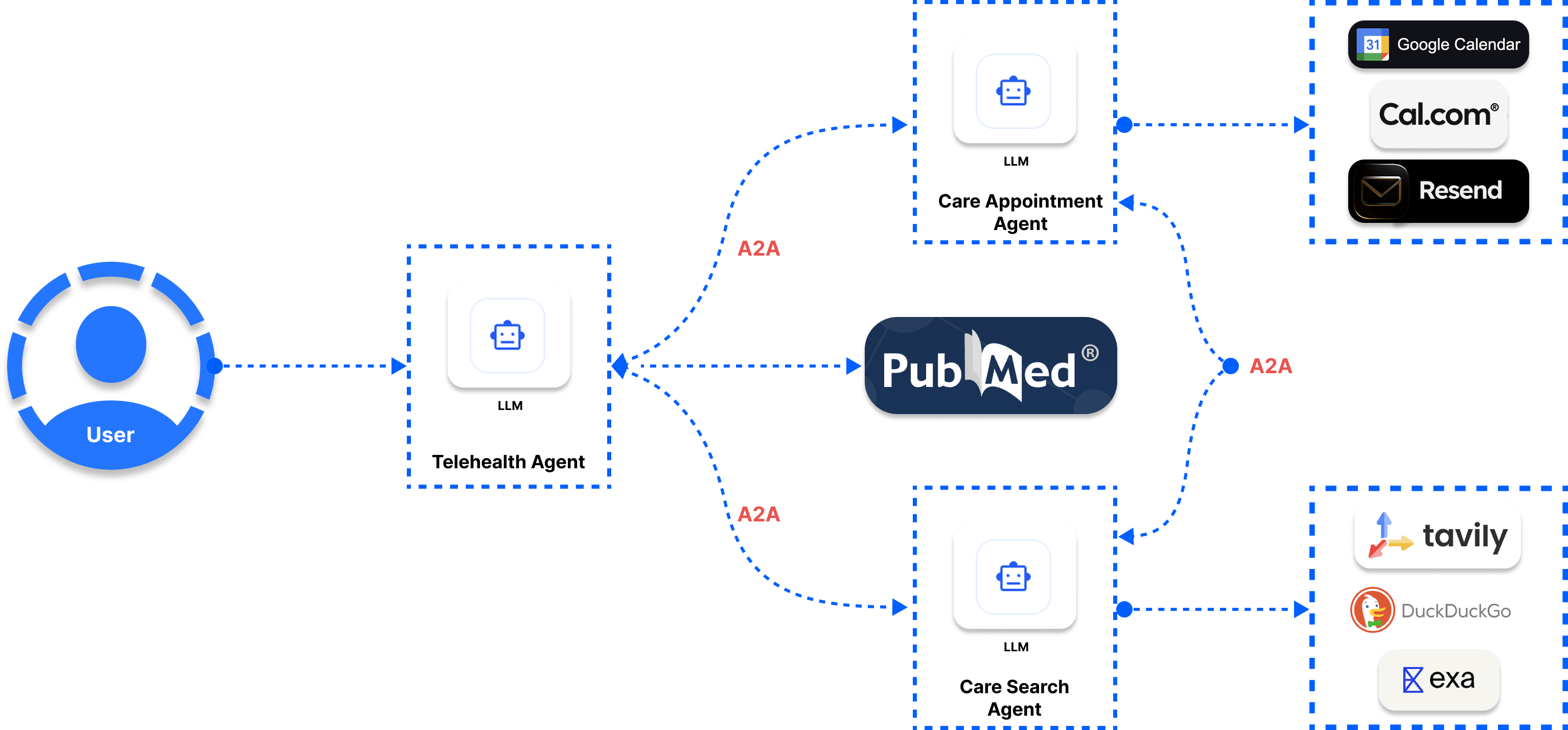

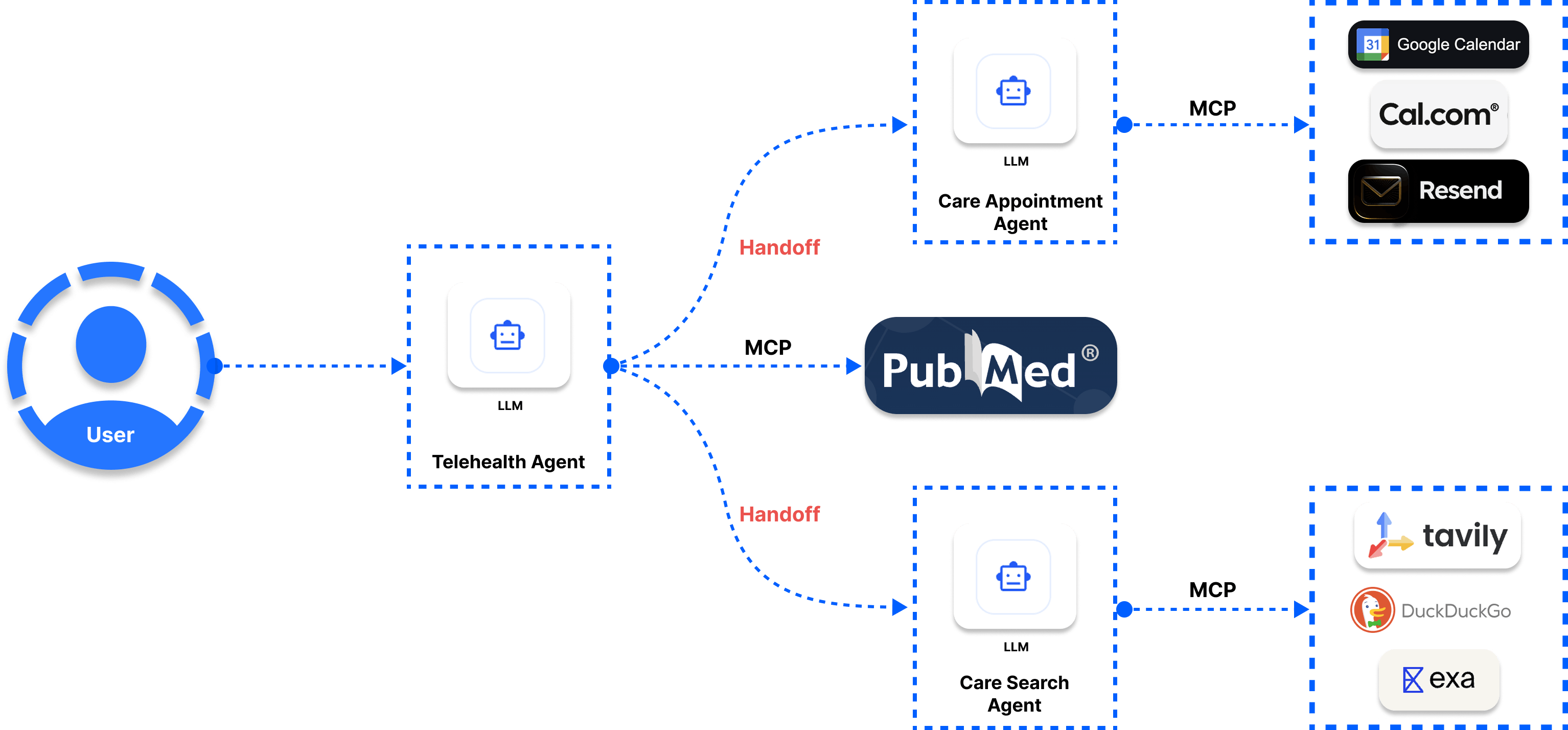

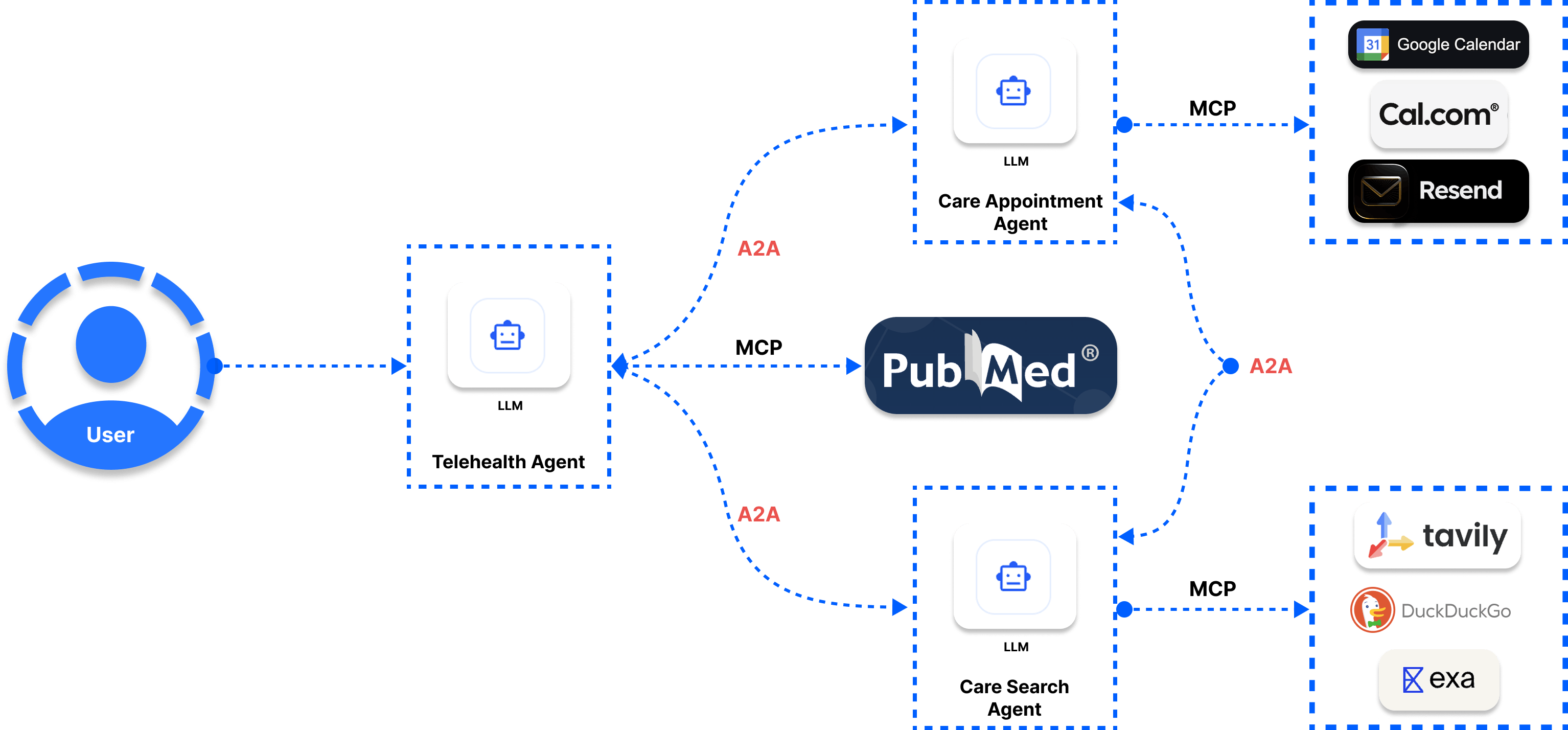

In our example of the telehealth agentic system above, handling too many tools for individual agents can overwhelm them. Therefore, the Telehealth Agent acts as a coordinator to hand off tasks to the Care Appointment Agent and Care Search Agent. As the diagram shows, each team agent can collaborate and delegate tasks to the other instead of depending on a particular agent to solve a task with its tools. Each agent can also use the MCP tools assigned to it to accomplish health tasks.

In the future, developers will need both MCP tool connections and A2A interactions to build enterprise AI applications, where agents can use their assigned tools, share their capabilities, and delegate when required.

A Demo MCP Server and Tool Usage in Python

We can build MCP servers in several ways and use them in client applications such as Cline, Cursor, and Windsurf. For simplicity and ease of use, let's implement a basic MCP server with the latest version of Gradio and use it in Cursor. You may know that Gradio is one of the leading tools for creating the front-end of AI applications. Its recent release also allows developers to turn any Python function of a Gradio app into an MCP server.

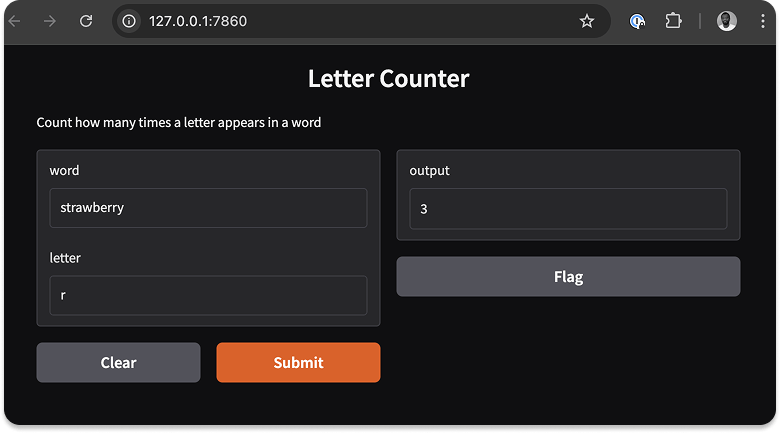

We all know LLMs are not good at counting letters. We want to build a letter counter using the following sample code to demonstrate our basic MCP server and tool usage example.

    # pip install "gradio[mcp]"
    # pip install --upgrade gradio
    # pip install mcp

    # MCP support
    # demo.launch(mcp_server=True)
    # export GRADIO_MCP_SERVER=True

    import gradio as gr

    def letter_counter(word, letter):
        """Count the occurrences of a specific letter in a word.

        Args:
            word: The word or phrase to analyze
            letter: The letter to count occurrences of

        Returns:
            The number of times the letter appears in the word
        """
        return word.lower().count(letter.lower())

    demo = gr.Interface(
        fn=letter_counter,
        inputs=["text", "text"],
        outputs="number",
        title="Letter Counter",
        description="Count how many times a letter appears in a word"
    )

    demo.launch(mcp_server=True)

Before running this code, install Gradio and MCP.

pip install "gradio[mcp]"

Next, define any Python function you want to convert into an MCP tool. As shown in the sample code above, to provide MCP server support for the Gradio app, update the app's demo.launch method as follows.

demo.launch(mcp_server=True)

Setting mcp_server=True is all you need to use the letter_counter function as an MCP tool in Cursor or Windsurf.

With a few lines of Python, you now have a Gradio app as an MCP server. When you run the app, you should see a link to view the Gradio UI, which allows you to input any word or phrase and find how many times a letter appears in the given word.

You will also see information about the MCP server.

- Running on local URL: http://127.0.0.1:7860

- MCP server (using SSE) running at: http://127.0.0.1:7860/gradio_api/mcp/sse

To use the letter counter MCP tool in Cursor, click Use via API in the Gradio UI and navigate to the MCP category on the page that appears. Copy the MCP configuration and add it to the Cursor settings as shown below.

    {
      "mcpServers": {
        "gradio": {
          "url": "http://127.0.0.1:7860/gradio_api/mcp/sse"
        }
      }
    }

The MCP tool should be invoked automatically when you ask any model how often a letter occurs in a word or phrase in the Cursor Chat (command + L).
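
Because the tool is an ordinary Python function, you can confirm what it should return by calling it directly, independent of Gradio or Cursor. The snippet below repeats the function from the sample so that it runs on its own; the test words are arbitrary choices.

```python
def letter_counter(word, letter):
    """Count the occurrences of a specific letter in a word (case-insensitive)."""
    return word.lower().count(letter.lower())


# Direct calls, useful for comparing against what the MCP tool reports in chat.
print(letter_counter("strawberry", "r"))   # 3
print(letter_counter("Mississippi", "S"))  # 4
print(letter_counter("hello world", "z"))  # 0
```

If the answer in the chat differs from these values, the model likely answered from memory instead of invoking the tool.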

A2A Implementation Demo With CrewAI

To experience how A2A works and explore its server and client implementations, Google provides several code samples in JavaScript and Python on GitHub. The example in this section integrates CrewAI with A2A to build an image generator. You can find it in the CrewAI sample repo.

Follow the steps below to run the A2A/CrewAI sample app.

Step 1: Clone the A2A Repo

git clone https://github.com/google/A2A

Step 2: Configure Your Environment

    cd samples/python/agents/crewai
    echo "GOOGLE_API_KEY=your_api_key_here" > .env

    pip install uv
    uv python pin 3.12

    # Create a virtual environment and activate it
    uv venv
    source .venv/bin/activate

Step 3: Run the Host

    # Use the default port
    uv run .

    # Use a custom host/port
    uv run . --host 0.0.0.0 --port 8080

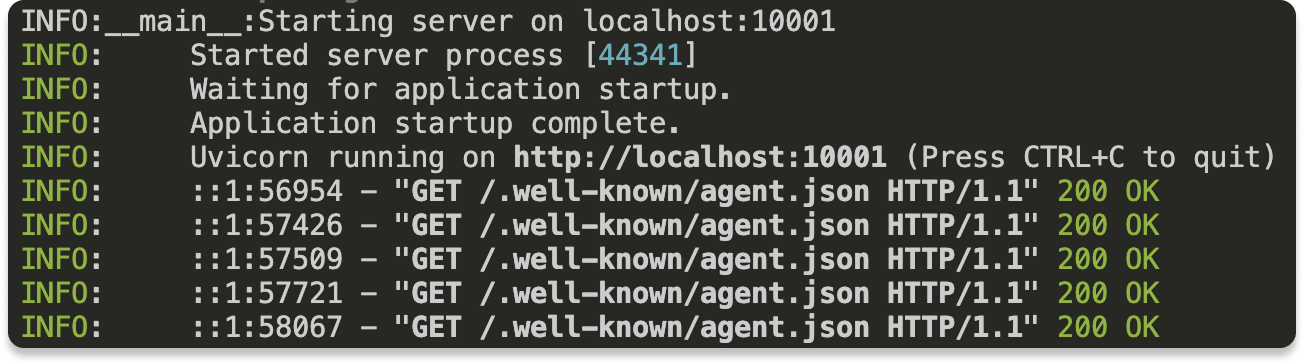

After running uv run ., you should see an output similar to the image below.

Step 4: Run the Client in a Separate Terminal

    # Connect to the agent (specify the agent URL with the correct port)
    cd samples/python/hosts/cli
    uv run . --agent http://localhost:10001

    # If you changed the port when starting the agent, use that port instead
    # uv run . --agent http://localhost:YOUR_PORT

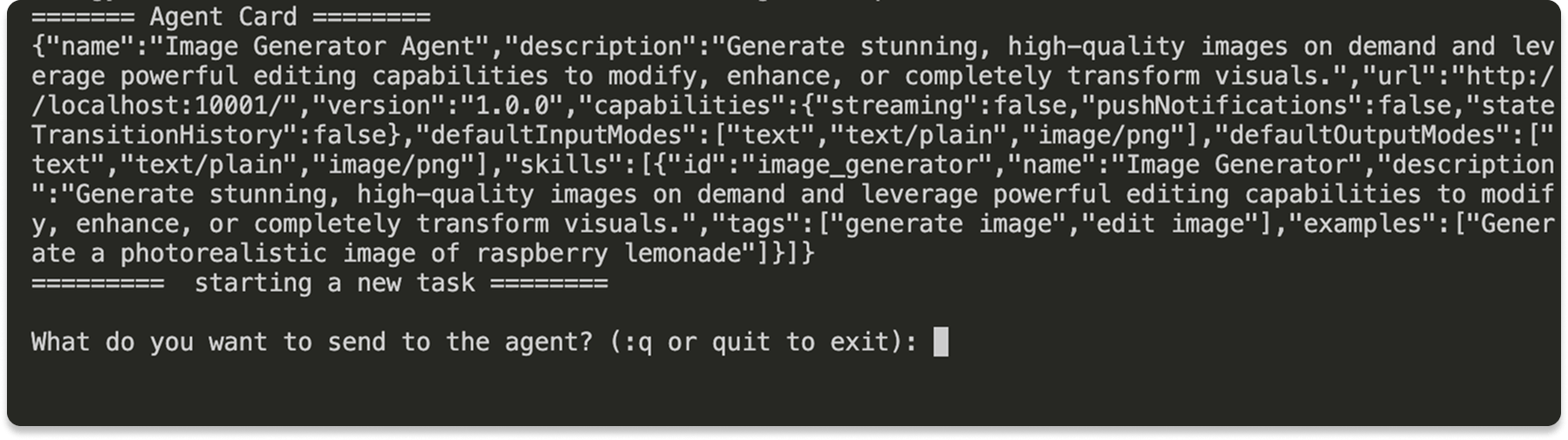

When you open a separate Terminal window and run uv run . --agent http://localhost:10001 in the cli directory, the agent should be ready to chat with you as seen in the image below.

Challenges and Limitations of A2A Protocol

A2A looks promising in its sample implementations on GitHub. However, it involves many integrations, so debugging can be complicated when problems occur, since the agents involved may run on different servers and cloud services. Because it connects several nodes and components, an A2A architecture may also present a larger attack surface. There can be data privacy concerns as well, because your data may be sent to the providers of the various components A2A operates on.

Future Thoughts on LLM Context Protocols

Some developers see A2A and MCP as competing solutions for the same challenges in AI applications. However, as the sections and diagrams in this tutorial show, the two protocols can be adopted together and complement each other. AI agents should be able to coordinate with others when needed. Similarly, agents should always have access to tools so they can produce accurate and reliable responses.

Currently, MCP is purely an agent-to-tool protocol. However, Anthropic may develop MCP to support an agent-to-agent relationship and collaboration in the future. A2A could also allow agents built in a programming language like Python to interact with other agents in, for example, TypeScript.

If we fast forward, building robust agentic systems won’t rely on choosing one protocol over the other. Instead, it’s about leveraging the strengths of both A2A and MCP to create AI applications that are more adaptable, collaborative, and capable.