This tutorial shows you how to quickly build a production-ready voice AI agent with the OpenAI Realtime API, Stream’s video edge network, React, and Node.js.

- The instructions to the agent are sent server-side (Node.js), so you can do function calling or RAG

- The integration uses Stream’s video edge network (for low latency) and WebRTC (so it works under slow/unreliable network conditions)

- You have full control over the AI setup and visualization

The end result will look something like this:

Step 1 - Credentials and Backend setup

First, we are going to set up the Node.js backend and get Stream and OpenAI credentials.

Step 1.1 - OpenAI and Stream credentials

To get started, you need an OpenAI account and an API key. Please note that the OpenAI credentials are never shared client-side; they are only exchanged between your servers and Stream's.

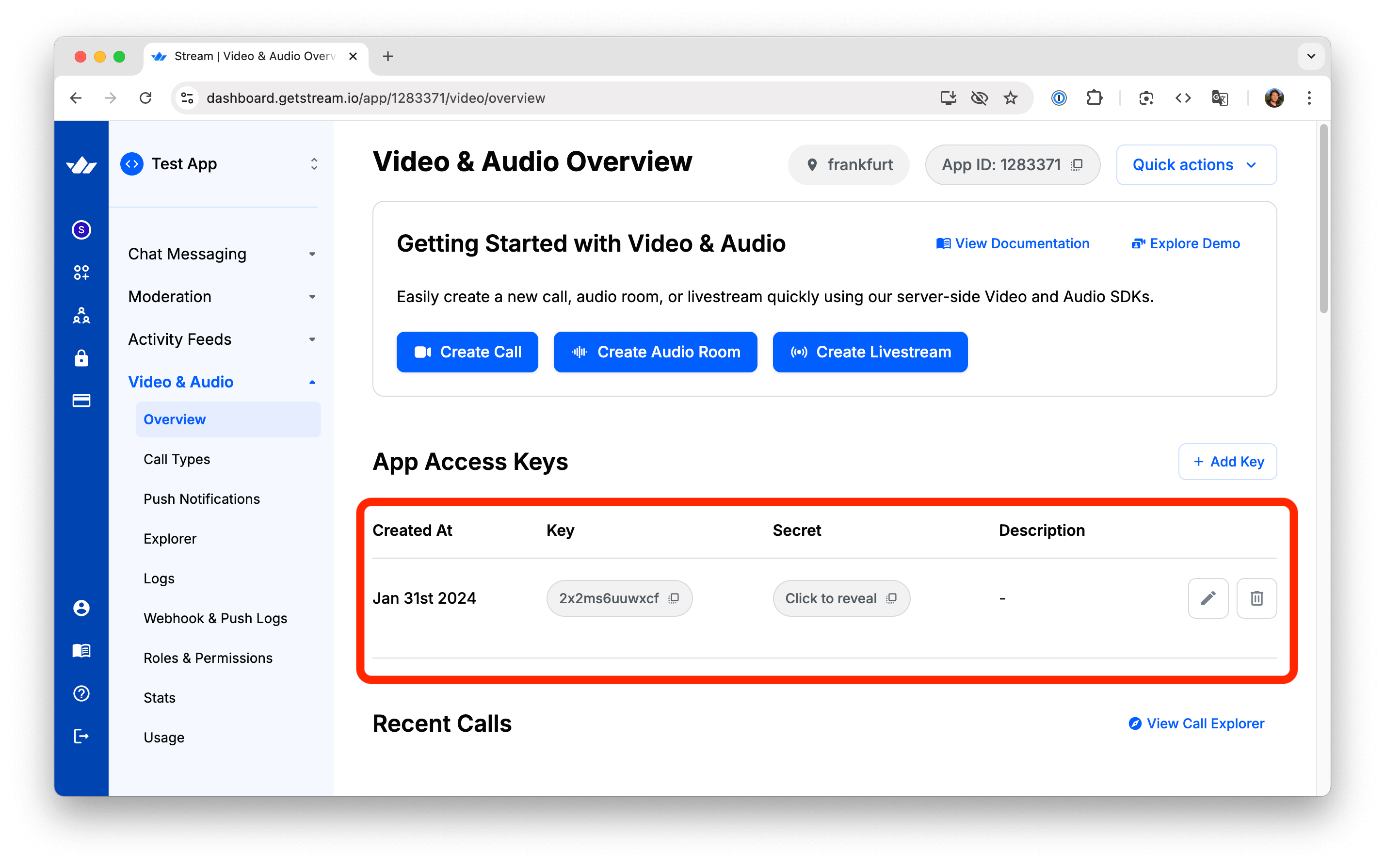

Additionally, you will need a Stream account. Use the API key and secret from the Stream dashboard.

Step 1.2 - Create the Node.js project

Make sure that you are using a recent version of Node.js, such as 22 or later. You can check your version with node -v.
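
If you would like a script to enforce this requirement, a minimal sketch could parse the version string that Node.js reports. The helper name isSupportedNode and the default minimum of 22 are assumptions based on the sentence above; they are not part of Stream's tooling.

```javascript
// Hypothetical helper: checks that a Node.js version string such as "v22.3.0"
// meets a minimum major version.
function isSupportedNode(version, minMajor = 22) {
  const match = /^v?(\d+)\./.exec(version);
  if (!match) return false;
  return Number(match[1]) >= minMajor;
}

// process.version holds the running version, for example "v22.3.0".
if (!isSupportedNode(process.version)) {
  console.warn(`Node.js ${process.version} is older than the recommended 22`);
}
```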

First, let’s create a new folder called “openai-audio-tutorial”. From the terminal, go to the folder, and run the following command:

    npm init -y

This command generates a package.json file with default settings.

Step 1.3 - Installing the dependencies

Next, let’s update the generated package.json with the following content:

    {
      "name": "@stream-io/video-ai-demo-server",
      "type": "module",
      "dependencies": {
        "@hono/node-server": "^1.13.8",
        "@stream-io/node-sdk": "^0.4.17",
        "@stream-io/openai-realtime-api": "^0.1.0",
        "dotenv": "^16.3.1",
        "hono": "^4.7.4",
        "open": "^10.1.0"
      },
      "scripts": {
        "server": "node ./server.mjs",
        "standalone-ui": "node ./standalone.mjs"
      }
    }

Then, run the following command to install the dependencies:

    npm install

Step 1.4 - Set up the credentials

Create a .env file in the project root with the following variables:

    # Stream API credentials
    STREAM_API_KEY=your_stream_api_key
    STREAM_API_SECRET=your_stream_api_secret

    # OpenAI API key
    OPENAI_API_KEY=your_openai_api_key

Then edit the .env file with your actual API keys from Step 1.1. You can find the Stream key and secret on your Stream Dashboard.
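
The scripts in the next steps exit early when one of these variables is missing. As a rough sketch of that check, the hypothetical helper below (missingEnvVars is not part of any SDK) reports exactly which names are absent or empty, which can make a misconfigured .env file easier to diagnose.

```javascript
// Hypothetical helper: returns the names of required variables that are
// missing or blank in the given environment object.
function missingEnvVars(env, required) {
  return required.filter((name) => !env[name] || env[name].trim() === "");
}

const required = ["STREAM_API_KEY", "STREAM_API_SECRET", "OPENAI_API_KEY"];

// Example environment with one blank value and one missing value.
const exampleEnv = { STREAM_API_KEY: "abc", STREAM_API_SECRET: "" };
console.log(missingEnvVars(exampleEnv, required));
```

In a real script you would pass process.env instead of the example object.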

Step 1.5 - Implement the standalone-ui script

Before diving into the React integration, we will build a simple server script that shows how to connect the AI agent to a call and then join that call from a simple web app.

Create a file called standalone.mjs and paste in the following content:

    import { config } from 'dotenv';
    import { StreamClient } from '@stream-io/node-sdk';
    import open from 'open';
    import crypto from 'crypto';

    // load config from dotenv
    config();

    async function main() {
      // Get environment variables
      const streamApiKey = process.env.STREAM_API_KEY;
      const streamApiSecret = process.env.STREAM_API_SECRET;
      const openAiApiKey = process.env.OPENAI_API_KEY;

      // Check if all required environment variables are set
      if (!streamApiKey || !streamApiSecret || !openAiApiKey) {
        console.error("Error: Missing required environment variables, make sure to have a .env file in the project root, check .env.example for reference");
        process.exit(1);
      }

      const streamClient = new StreamClient(streamApiKey, streamApiSecret);
      const call = streamClient.video.call("default", crypto.randomUUID());

      // realtimeClient is an instance of https://github.com/openai/openai-realtime-api-beta
      const realtimeClient = await streamClient.video.connectOpenAi({
        call,
        openAiApiKey,
        agentUserId: "lucy",
      });

      // Set up event handling, all events from openai realtime api are available here
      // see: https://platform.openai.com/docs/api-reference/realtime-server-events
      realtimeClient.on('realtime.event', ({ time, source, event }) => {
        console.log(`got an event from OpenAI ${event.type}`);
        if (event.type === 'response.audio_transcript.done') {
          console.log(`got a transcript from OpenAI ${event.transcript}`);
        }
      });

      realtimeClient.updateSession({
        instructions: "You are a helpful assistant that can answer questions and help with tasks.",
      });

      // Get token for the call
      const token = streamClient.generateUserToken({ user_id: "theodore" });
      const callUrl = `https://pronto.getstream.io/join/${call.id}?type=default&api_key=${streamClient.apiKey}&token=${token}&skip_lobby=true`;

      // Open the browser
      console.log(`Opening browser to join the call... \n${callUrl}`);
      await open(callUrl);
    }

    main().catch(error => {
      console.error("Error:", error);
      process.exit(1);
    });

Step 1.6 - Running the sample

At this point, we can run the script with this command:

    npm run standalone-ui

This will open your browser and connect you to a call where you can talk to the OpenAI agent. As you talk to the agent, your shell will show a log line for each event that OpenAI sends.

Let’s take a quick look at what is happening in the server-side code we just added:

- Here we instantiate the Stream Node SDK with the API credentials and then use it to create a new call object. That call will host the conversation between the user and the AI agent.

    const streamClient = new StreamClient(streamApiKey, streamApiSecret);
    const call = streamClient.video.call("default", crypto.randomUUID());

- The next step is to have the agent connect to the call and obtain an OpenAI Realtime API client. The connectOpenAi function does the following: it instantiates the Realtime API client and then uses the Stream API to connect the agent to the call. The agent will connect to the call as a user with ID "lucy".

    const realtimeClient = await streamClient.video.connectOpenAi({
      call,
      openAiApiKey,
      agentUserId: "lucy",
    });

- We then use the realtimeClient object to pass instructions to OpenAI and to listen to events emitted by OpenAI. The interesting bit here is that realtimeClient is an instance of OpenAI’s official API client. This gives you full control over what you can do with OpenAI.

    realtimeClient.on('realtime.event', ({ time, source, event }) => {
      console.log(`got an event from OpenAI ${event.type}`);
      if (event.type === 'response.audio_transcript.done') {
        console.log(`got a transcript from OpenAI ${event.transcript}`);
      }
    });

    realtimeClient.updateSession({
      instructions: "You are a helpful assistant that can answer questions and help with tasks.",
    });
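
Beyond logging, the same event callback can accumulate data. The sketch below keeps every finished transcript in an array. The name createTranscriptCollector is hypothetical, and the events in the usage lines are hand-written objects shaped like the ones logged above, not real API output.

```javascript
// Hypothetical helper: builds an event handler that stores finished transcripts.
function createTranscriptCollector() {
  const transcripts = [];
  const onEvent = ({ event }) => {
    // Only completed agent transcripts are kept; other event types are ignored.
    if (event.type === "response.audio_transcript.done") {
      transcripts.push(event.transcript);
    }
  };
  return { onEvent, transcripts };
}

// Usage with hand-written sample events.
const collector = createTranscriptCollector();
collector.onEvent({ event: { type: "response.created" } });
collector.onEvent({
  event: { type: "response.audio_transcript.done", transcript: "Hello!" },
});
console.log(collector.transcripts);
```

In the script, collector.onEvent could be passed as the callback for the 'realtime.event' listener in place of the inline function.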

Step 2 - Set up your server-side integration

This example was simple to set up and shows how easy it is to add an AI bot to a Stream call. When building a real application, you will need your backend to handle authentication for your clients and to send instructions to OpenAI (in most applications, RAG and function calling need to run on your backend).

So the backend we are going to build will take care of two things:

- Generate a valid token that the React app uses to join the call running on Stream

- Use Stream APIs to join the same call with the AI agent and set it up with instructions

Step 2.1 - Implement the server.mjs

Create a new file in the same project, called server.mjs, and add the following code:

    import { serve } from "@hono/node-server";
    import { StreamClient } from "@stream-io/node-sdk";
    import { Hono } from "hono";
    import { cors } from "hono/cors";
    import crypto from 'crypto';
    import { config } from 'dotenv';

    // load config from dotenv
    config();

    // Get environment variables
    const streamApiKey = process.env.STREAM_API_KEY;
    const streamApiSecret = process.env.STREAM_API_SECRET;
    const openAiApiKey = process.env.OPENAI_API_KEY;

    // Check if all required environment variables are set
    if (!streamApiKey || !streamApiSecret || !openAiApiKey) {
      console.error("Error: Missing required environment variables, make sure to have a .env file in the project root, check .env.example for reference");
      process.exit(1);
    }

    const app = new Hono();
    app.use(cors());
    const streamClient = new StreamClient(streamApiKey, streamApiSecret);

    /**
     * Endpoint to generate credentials for a new video call.
     * Creates a unique call ID, generates a token, and returns necessary connection details.
     */
    app.get("/credentials", (c) => {
      console.log("got a request for credentials");
      // Generate a shorter UUID for callId (first 12 chars)
      const callId = crypto.randomUUID().replace(/-/g, '').substring(0, 12);
      // Generate a shorter UUID for userId (first 8 chars with prefix)
      const userId = `user-${crypto.randomUUID().replace(/-/g, '').substring(0, 8)}`;
      const callType = "default";
      const token = streamClient.generateUserToken({
        user_id: userId,
      });

      return c.json({ apiKey: streamApiKey, token, callType, callId, userId });
    });

    /**
     * Endpoint to connect an AI agent to an existing video call.
     * Takes call type and ID parameters, connects the OpenAI agent to the call,
     * sets up the realtime client with event handlers and tools,
     * and returns a success response when complete.
     */
    app.post("/:callType/:callId/connect", async (c) => {
      console.log("got a request for connect");
      const callType = c.req.param("callType");
      const callId = c.req.param("callId");

      const call = streamClient.video.call(callType, callId);
      const realtimeClient = await streamClient.video.connectOpenAi({
        call,
        openAiApiKey,
        agentUserId: "lucy",
      });

      await setupRealtimeClient(realtimeClient);
      console.log("agent is connected now");

      return c.json({ ok: true });
    });

    async function setupRealtimeClient(realtimeClient) {
      realtimeClient.on("error", (event) => {
        console.error("Error:", event);
      });

      realtimeClient.on("session.update", (event) => {
        console.log("Realtime session update:", event);
      });

      realtimeClient.updateSession({
        instructions: "You are a helpful assistant that can answer questions and help with tasks.",
        turn_detection: { type: "semantic_vad" },
        input_audio_transcription: { model: "gpt-4o-transcribe" },
        input_audio_noise_reduction: { type: "near_field" },
      });

      realtimeClient.addTool(
        {
          name: "get_weather",
          description:
            "Call this function to retrieve current weather information for a specific location. Provide the city name.",
          parameters: {
            type: "object",
            properties: {
              city: {
                type: "string",
                description: "The name of the city to get weather information for",
              },
            },
            required: ["city"],
          },
        },
        async ({ city, country, units = "metric" }) => {
          console.log("get_weather request", { city, country, units });
          try {
            // This is a placeholder for actual weather API implementation
            // In a real implementation, you would call a weather API service here
            const weatherData = {
              location: country ? `${city}, ${country}` : city,
              temperature: 22,
              units: units === "imperial" ? "°F" : "°C",
              condition: "Partly Cloudy",
              humidity: 65,
              windSpeed: 10
            };

            return weatherData;
          } catch (error) {
            console.error("Error fetching weather data:", error);
            return { error: "Failed to retrieve weather information" };
          }
        },
      );

      return realtimeClient;
    }

    // Start the server
    serve({
      fetch: app.fetch,
      hostname: "0.0.0.0",
      port: 3000,
    });

    console.log(`Server started on :3000`);

In the code above, we set up two endpoints: /credentials, which generates a unique call ID and authentication token, and /:callType/:callId/connect, which connects the AI agent (that we call “lucy”) to a specific video call.
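
The /credentials endpoint shortens a random UUID to build the call and user IDs. Isolated as a function, the technique looks roughly like the sketch below. The name shortId and the sample UUID are illustrative only. Note that 12 hexadecimal characters carry 48 bits of randomness and 8 characters carry 32 bits, which is fine for a demo but leaves less collision headroom than a full UUID.

```javascript
// Hypothetical helper: strips the dashes from a UUID and keeps the first
// `length` hexadecimal characters, mirroring the ID logic in server.mjs.
function shortId(uuid, length) {
  return uuid.replace(/-/g, "").substring(0, length);
}

// A fixed sample UUID keeps the example deterministic.
const sampleUuid = "123e4567-e89b-12d3-a456-426614174000";
console.log(shortId(sampleUuid, 12)); // prints "123e4567e89b"
console.log(`user-${shortId(sampleUuid, 8)}`); // prints "user-123e4567"
```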

The assistant follows predefined instructions, in this case trying to be helpful with tasks. Based on the purpose of your AI bot, you should update these instructions accordingly. In the same updateSession call we instruct OpenAI to use the semantic classifier for voice activity detection to detect when the user has finished speaking, a GPT-4o based model for transcriptions, and near-field noise reduction for audio.
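
To adapt the agent to your own use case, one option is to build the session options in a small function so that only the instructions change. This is a sketch: buildSessionConfig is a hypothetical helper, the bike shop prompt is made up, and the remaining option values are the ones used in server.mjs.

```javascript
// Hypothetical helper: returns the session options from server.mjs with
// custom instructions swapped in.
function buildSessionConfig(instructions) {
  if (typeof instructions !== "string" || instructions.trim() === "") {
    throw new Error("instructions must be a non-empty string");
  }
  return {
    instructions: instructions.trim(),
    turn_detection: { type: "semantic_vad" },
    input_audio_transcription: { model: "gpt-4o-transcribe" },
    input_audio_noise_reduction: { type: "near_field" },
  };
}

const sessionConfig = buildSessionConfig(
  "You are a concise support agent for a bike shop.",
);
console.log(sessionConfig.instructions);
```

The resulting object could then be passed to realtimeClient.updateSession in place of the inline literal.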

We also show an example of a function call, using the get_weather tool.
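
One detail worth noting in the get_weather tool: the handler reads country and units, but the parameter schema only declares city, so the model can only be expected to send city. A defensive pattern is to validate arguments against the schema's required list before running the handler. The helpers below (missingRequiredArgs and withArgCheck) are hypothetical and not part of the Stream or OpenAI SDKs.

```javascript
// Parameter schema copied from the get_weather tool in server.mjs.
const weatherParameters = {
  type: "object",
  properties: {
    city: {
      type: "string",
      description: "The name of the city to get weather information for",
    },
  },
  required: ["city"],
};

// Hypothetical helper: lists required arguments that were not supplied.
function missingRequiredArgs(schema, args) {
  const required = schema.required ?? [];
  return required.filter(
    (key) => args?.[key] === undefined || args?.[key] === null,
  );
}

// Hypothetical wrapper: returns an error object instead of running the
// handler when required arguments are missing.
function withArgCheck(schema, handler) {
  return async (args) => {
    const missing = missingRequiredArgs(schema, args);
    if (missing.length > 0) {
      return { error: `Missing required arguments: ${missing.join(", ")}` };
    }
    return handler(args);
  };
}

console.log(missingRequiredArgs(weatherParameters, { country: "NL" }));
```

The wrapped handler could be passed as the second argument to addTool, so the model receives a descriptive error rather than a weather report for an undefined city.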

Step 2.2 - Running the server

We can now run the server. The following command launches it and listens on port 3000:

    npm run server

To make sure everything is working as expected, you can run a curl GET request from your terminal.

    curl -X GET http://localhost:3000/credentials

As a result, you should see the credentials required to join the call. With that, we’re all set up server-side!
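
The response is a JSON object with the five fields returned by the /credentials handler. If you want to check the shape programmatically, a minimal sketch could look like this. The function name isCallCredentials and the sample values are made up for illustration.

```javascript
// Hypothetical helper: checks that a parsed response has the five string
// fields that the /credentials endpoint returns.
function isCallCredentials(value) {
  const fields = ["apiKey", "token", "callType", "callId", "userId"];
  return (
    typeof value === "object" &&
    value !== null &&
    fields.every((f) => typeof value[f] === "string" && value[f].length > 0)
  );
}

// Sample object with placeholder values, not real credentials.
const sample = {
  apiKey: "your_stream_api_key",
  token: "example-token",
  callType: "default",
  callId: "123e4567e89b",
  userId: "user-123e4567",
};
console.log(isCallCredentials(sample)); // prints true
```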

Step 3 - Setting up the React project

Now that we have a backend that handles connecting the AI agent to the Stream call, we can switch to React and the front end.

Step 3.1 - Bootstrap your React project

We are going to use Vite to bootstrap our project. Execute the following command to create a React project with TypeScript support (as usual, feel free to use the package manager of your choice):

    npm create vite@latest ai-video-demo -- --template react-ts
    cd ai-video-demo

Step 3.2 - Adding the Stream Video dependency

Next, add the Stream Video React SDK as a dependency to your project. For the best experience, we recommend using version 1.12.6 or above:

    npm install @stream-io/video-react-sdk

Step 4 - Stream Video setup

To connect to the call, we must first fetch the credentials (GET /credentials) from the server we implemented in the previous steps.

Then, we need to use the POST /:callType/:callId/connect endpoint to add the AI agent to the call.

Step 4.1 - Fetching the credentials

Let’s create a method to fetch the credentials from our server. Create a new file src/join.ts and add the following code:

    import { Call, StreamVideoClient } from "@stream-io/video-react-sdk";

    interface CallCredentials {
      apiKey: string;
      token: string;
      callType: string;
      callId: string;
      userId: string;
    }

    const baseUrl = "http://localhost:3000";

    export async function fetchCallCredentials(): Promise<CallCredentials> {
      const res = await fetch(`${baseUrl}/credentials`);

      if (res.status !== 200) {
        throw new Error("Could not fetch call credentials");
      }

      return (await res.json()) as CallCredentials;
    }

This method sends a GET request to fetch the credentials, which we'll use to set up the StreamVideoClient and join the call.

Note that we're using http://localhost:3000 as the base URL, because for the purposes of this tutorial we'll be running the server that we implemented in the previous steps locally.
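
When you deploy the server somewhere else, the base URL has to change. One possible approach, sketched here under assumptions, is to resolve it from a configured value with a local fallback. The helper resolveBaseUrl is hypothetical; in a Vite app the configured value could come from an environment variable such as import.meta.env.VITE_API_BASE_URL, where the variable name is also an assumption.

```javascript
// Hypothetical helper: picks the configured URL when present, otherwise the
// local development server, and removes any trailing slashes.
function resolveBaseUrl(configured, fallback = "http://localhost:3000") {
  const value = (configured ?? "").trim() || fallback;
  return value.replace(/\/+$/, "");
}

console.log(resolveBaseUrl(undefined)); // prints "http://localhost:3000"
console.log(resolveBaseUrl("https://api.example.com/")); // prints "https://api.example.com"
```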

Step 4.2 - Connecting to Stream Video

We now have the credentials, and we can connect to Stream Video. To do this, add the following code to src/join.ts:

    export async function joinCall(
      credentials: CallCredentials
    ): Promise<[client: StreamVideoClient, call: Call]> {
      const client = new StreamVideoClient({
        apiKey: credentials.apiKey,
        user: { id: credentials.userId },
        token: credentials.token,
      });
      const call = client.call(credentials.callType, credentials.callId);
      await call.camera.disable();

      try {
        await Promise.all([connectAgent(call), call.join({ create: true })]);
      } catch (err) {
        await call.leave();
        await client.disconnectUser();
        throw err;
      }

      return [client, call];
    }

    async function connectAgent(call: Call) {
      const res = await fetch(`${baseUrl}/${call.type}/${call.id}/connect`, {
        method: "POST",
      });

      if (res.status !== 200) {
        throw new Error("Could not connect agent");
      }
    }

We are doing several things at once in this function:

- Creating and authenticating the StreamVideoClient with the credentials.

- Using the /:callType/:callId/connect endpoint to add the AI agent to the call.

- Using the call.join() method to add the user to the call.

We will call this method from our UI in the next steps.
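
The connect request interpolates the call type and ID directly into the URL. That works for the IDs our server generates, which are plain hexadecimal strings. If your IDs may contain other characters, a slightly more defensive sketch would encode each path segment. The helper connectUrl is hypothetical.

```javascript
// Hypothetical helper: builds the agent connect URL and encodes each path
// segment so unusual characters cannot change the route.
function connectUrl(baseUrl, callType, callId) {
  const type = encodeURIComponent(callType);
  const id = encodeURIComponent(callId);
  return `${baseUrl}/${type}/${id}/connect`;
}

console.log(connectUrl("http://localhost:3000", "default", "123e4567e89b"));
// prints "http://localhost:3000/default/123e4567e89b/connect"
```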

Step 5 - Building the UI

Let’s start building our UI from top to bottom. Replace the contents of the src/App.tsx file with the following code:

    import {
      Call,
      CancelCallButton,
      ParticipantsAudio,
      StreamCall,
      StreamTheme,
      StreamVideo,
      StreamVideoClient,
      ToggleAudioPublishingButton,
      useCall,
      useCallStateHooks,
    } from "@stream-io/video-react-sdk";
    import { useState } from "react";
    import { fetchCallCredentials, joinCall } from "./join";

    import "@stream-io/video-react-sdk/dist/css/styles.css";

    const credentialsPromise = fetchCallCredentials();

    export default function App() {
      const [client, setClient] = useState<StreamVideoClient | null>(null);
      const [call, setCall] = useState<Call | null>(null);
      const [status, setStatus] = useState<"start" | "joining" | "joined">("start");

      const handleJoin = () => {
        setStatus("joining");
        credentialsPromise
          .then((credentials) => joinCall(credentials))
          .then(([client, call]) => {
            setClient(client);
            setCall(call);
            setStatus("joined");
          })
          .catch((err) => {
            console.error("Could not join call", err);
            setStatus("start");
          });
      };

      const handleLeave = () => {
        setClient(null);
        setCall(null);
        setStatus("start");
      };

      return (
        <>
          {status === "start" && (
            <button onClick={handleJoin}>
              Click to Talk to AI
            </button>
          )}
          {status === "joining" && <>Waiting for agent to join...</>}
          {client && call && (
            <StreamVideo client={client}>
              <StreamCall call={call}>
                <CallLayout onLeave={handleLeave} />
              </StreamCall>
            </StreamVideo>
          )}
        </>
      );
    }

    function CallLayout(props: { onLeave?: () => void }) {
      const call = useCall();
      const { useParticipants } = useCallStateHooks();
      const participants = useParticipants();

      return (
        <>
          <StreamTheme>
            <ParticipantsAudio participants={participants} />
            <div className="call-controls">
              <ToggleAudioPublishingButton menuPlacement="bottom-end" />
              <CancelCallButton
                onClick={() => {
                  call?.endCall();
                  props.onLeave?.();
                }}
              />
            </div>
          </StreamTheme>
        </>
      );
    }

The CallLayout component renders the ParticipantsAudio component, so that we can hear incoming audio, and two buttons to toggle the microphone and leave the call.

You can add the following styles to index.css so that the page looks a bit nicer:

    *,
    *::before,
    *::after {
      box-sizing: border-box;
    }

    body {
      font: 18px/20px monospace;
      margin: 0;
      background: #00080f;
      color: #fff;
    }

    #root {
      display: flex;
      align-items: center;
      justify-content: center;
      height: 100vh;
      position: relative;
      overflow: hidden;
    }

    .call-controls {
      position: fixed;
      top: 20px;
      right: 20px;
      display: flex;
      gap: 10px;
    }

    .str-video__device-settings__option {
      white-space: nowrap;
    }

We can now start the app and see how it looks in a browser. The app should be available on http://localhost:5173/

    npm run dev

As you can see, as soon as the app loads we fetch credentials from our server. These credentials are used when the user presses the “Click to Talk to AI” button.

We then show different UI parts based on the status.
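
The status values form a small state machine: start leads to joining, joining leads to joined or back to start on failure, and leaving returns to start. As a sketch, the transitions can be written as a lookup table. The event names (join, success, failure, leave) and the function nextStatus are hypothetical labels for what handleJoin and handleLeave do.

```javascript
// Allowed transitions, keyed by current status and then by event name.
// The event names are illustrative labels, not part of the SDK.
const transitions = {
  start: { join: "joining" },
  joining: { success: "joined", failure: "start" },
  joined: { leave: "start" },
};

// Returns the next status, or the current one when the event does not apply.
function nextStatus(status, event) {
  return transitions[status]?.[event] ?? status;
}

console.log(nextStatus("start", "join")); // prints "joining"
console.log(nextStatus("joining", "failure")); // prints "start"
console.log(nextStatus("joined", "join")); // prints "joined"
```

Making the transitions explicit like this can help prevent states such as joining twice when the button is clicked repeatedly.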

At this point, you can run the app, join a call, and converse with the AI agent. However, we can take this step further and show nice visualizations based on the audio levels of the participants.

Step 6 - Visualizing the audio levels

Let’s implement the AudioVisualizer next. This component will listen to the audio levels provided by the call state and visualize them with a nice glowing animation that expands and contracts based on the user's voice amplitude. To make the visualization more lifelike, we'll add a subtle breathing effect to it. Let’s start by creating a new src/AudioVisualizer.tsx file.

Step 6.1 - Calculating MediaStream volume

The Stream Video SDK exposes each participant's audio as a MediaStream object. We can use the browser's Web Audio API to calculate a participant's audio volume. Add the following hook to src/AudioVisualizer.tsx:

    function useMediaStreamVolume(mediaStream: MediaStream | null) {
      const [volume, setVolume] = useState(0);

      useEffect(() => {
        if (!mediaStream) {
          setVolume(0);
          return;
        }

        const audioContext = new AudioContext();
        const source = audioContext.createMediaStreamSource(mediaStream);
        const analyser = audioContext.createAnalyser();
        const data = new Float32Array(analyser.fftSize);
        source.connect(analyser);

        // Keep the latest frame handle so that cleanup cancels the pending
        // frame, not just the first one that was scheduled
        let frameHandle = 0;

        const updateVolume = () => {
          analyser.getFloatTimeDomainData(data);
          // Root mean square of the time-domain samples
          const volume = Math.sqrt(
            data.reduce((acc, amp) => acc + (amp * amp) / data.length, 0)
          );
          setVolume(volume);
          frameHandle = requestAnimationFrame(updateVolume);
        };

        updateVolume();

        return () => {
          cancelAnimationFrame(frameHandle);
          audioContext.close();
        };
      }, [mediaStream]);

      return volume;
    }

This hook takes a MediaStream and updates the volume state on every animation frame, based on the stream’s audio volume.
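
The volume here is the root mean square (RMS) of the time-domain samples: square each sample, average the squares, and take the square root. Outside of React and the Web Audio API, the calculation can be sketched as a plain function. The name rootMeanSquare and the sample arrays are illustrative.

```javascript
// Root mean square of audio samples in the range [-1, 1].
function rootMeanSquare(samples) {
  if (samples.length === 0) return 0;
  let sumOfSquares = 0;
  for (const amp of samples) {
    sumOfSquares += amp * amp;
  }
  return Math.sqrt(sumOfSquares / samples.length);
}

// Silence has zero volume.
console.log(rootMeanSquare(new Float32Array([0, 0, 0, 0]))); // prints 0
// The sign of each sample does not matter, only its magnitude.
console.log(rootMeanSquare(new Float32Array([0.5, -0.5, 0.5, -0.5]))); // prints 0.5
```

Speech usually yields RMS values well below 1, which is why the visualizer adds the volume to 1 and clamps the result instead of using it as a scale factor directly.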

Now let’s use this hook for our visualization.

Step 6.2 - AudioVisualizer

Our AudioVisualizer component first determines which participant is currently speaking and calculates the volume for that participant. (We apply a one-second cooldown to the current speaker state to make the transition less jarring.) Add the following code to the same file, with the imports at the top:

    import { CSSProperties, useEffect, useState } from "react";
    import { StreamVideoParticipant, useCallStateHooks } from "@stream-io/video-react-sdk";

    const listeningCooldownMs = 1000;

    export function AudioVisualizer() {
      const { useParticipants } = useCallStateHooks();
      const participants = useParticipants();
      const [activity, setActivity] = useState<"listening" | "speaking">(
        "speaking"
      );
      const speaker = participants.find((p) => p.isSpeaking);
      const agent = useAgentParticipant();
      const mediaStream =
        activity === "listening"
          ? participants.find((p) => p.isLocalParticipant)?.audioStream
          : agent?.audioStream;
      const volume = useMediaStreamVolume(mediaStream ?? null);

      useEffect(() => {
        if (!speaker && activity === "listening") {
          const timeout = setTimeout(
            () => setActivity("speaking"),
            listeningCooldownMs
          );
          return () => clearTimeout(timeout);
        }

        const isUserSpeaking = speaker?.isLocalParticipant;
        setActivity(isUserSpeaking ? "listening" : "speaking");
      }, [speaker, activity]);

      return (
        <div
          className="audio-visualizer"
          style={
            {
              "--volumeter-scale": Math.min(1 + volume, 1.1),
              "--volumeter-brightness": Math.max(Math.min(1 + volume, 1.2), 1),
            } as CSSProperties
          }
        >
          <div
            className={`audio-visualizer__aura audio-visualizer__aura_${activity}`}
          />
        </div>
      );
    }

    function useAgentParticipant(): StreamVideoParticipant | null {
      const { useParticipants } = useCallStateHooks();
      const participants = useParticipants();
      const agent = participants.find((p) => p.userId === "lucy") ?? null;
      return agent;
    }

We call our agent “lucy”, and we use that user ID to tell the agent apart from the current user. We show a different color depending on who is speaking. If the AI is speaking, we show a blue color with different gradients. When the current user is speaking, we use a red color instead.
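
The switching logic inside the effect can be easier to follow as a pure function. The sketch below returns the next activity together with the delay before it should apply. The function nextActivity and its return shape are hypothetical; the rules mirror the effect in AudioVisualizer.

```javascript
const listeningCooldownMs = 1000;

// Hypothetical pure version of the effect's decision:
// - nobody speaks while "listening": fall back to "speaking" after the cooldown
// - the local user speaks: switch to "listening" immediately
// - anyone else speaks, or nobody speaks while "speaking": stay "speaking"
function nextActivity(speaker, activity) {
  if (!speaker && activity === "listening") {
    return { activity: "speaking", delayMs: listeningCooldownMs };
  }
  const isUserSpeaking = Boolean(speaker && speaker.isLocalParticipant);
  return { activity: isUserSpeaking ? "listening" : "speaking", delayMs: 0 };
}

console.log(nextActivity({ isLocalParticipant: true }, "speaking"));
console.log(nextActivity(null, "listening"));
```

The cooldown only applies in one direction: the visualizer turns to the user's color as soon as they speak, but waits a second of silence before returning to the agent's color.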

The secret ingredient here is CSS. Copy and paste the following styles into your index.css file:

    .audio-visualizer {
      transform: scale(var(--volumeter-scale));
      filter: brightness(var(--volumeter-brightness));
    }

    .audio-visualizer__aura {
      display: flex;
      align-items: center;
      justify-content: center;
    }

    .audio-visualizer__aura::before,
    .audio-visualizer__aura::after {
      content: "";
      position: fixed;
      z-index: -1;
      border-radius: 50%;
      mix-blend-mode: plus-lighter;
      animation: aura-pulse alternate ease-in-out infinite;
    }

    .audio-visualizer__aura::before {
      width: 60vmin;
      height: 60vmin;
      background: #0055fff0;
      animation-delay: -1s;
      animation-duration: 2s;
    }

    .audio-visualizer__aura_listening::before {
      background: #f759dbf0;
    }

    .audio-visualizer__aura::after {
      width: 40vmin;
      height: 40vmin;
      background: #1af0fff0;
      animation-duration: 5s;
    }

    .audio-visualizer__aura_listening::after {
      background: #f74069f0;
    }

    @keyframes aura-pulse {
      from {
        transform: scale(0.99);
        filter: brightness(0.85) blur(50px);
      }
      to {
        transform: scale(1);
        filter: brightness(1) blur(50px);
      }
    }

As you can see, we slightly increase the brightness and scale of our visualization when a participant is speaking. We also use a breathing effect to make the visualization more lifelike.
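
The scale and brightness values come from clamping 1 + volume, so loud audio can grow the aura by at most 10% and brighten it by at most 20%. Extracted into a function (visualizerVars is a hypothetical name), the mapping used in the component's style prop looks like this:

```javascript
// Maps an RMS volume to the two CSS custom properties used by the visualizer.
function visualizerVars(volume) {
  return {
    // Scale grows with volume but is capped at 1.1
    "--volumeter-scale": Math.min(1 + volume, 1.1),
    // Brightness stays within the range [1, 1.2]
    "--volumeter-brightness": Math.max(Math.min(1 + volume, 1.2), 1),
  };
}

console.log(visualizerVars(0)); // scale 1, brightness 1
console.log(visualizerVars(0.0625)); // scale 1.0625, brightness 1.0625
console.log(visualizerVars(0.5)); // scale 1.1, brightness 1.2
```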

Let’s now update the CallLayout component inside App.tsx:

    // imports...
    import { AudioVisualizer } from "./AudioVisualizer.tsx";

    // ... the rest of the code

    function CallLayout(props: { onLeave?: () => void }) {
      const call = useCall();
      const { useParticipants } = useCallStateHooks();
      const participants = useParticipants();

      return (
        <>
          <StreamTheme>
            <ParticipantsAudio participants={participants} />
            <div className="call-controls">
              <ToggleAudioPublishingButton menuPlacement="bottom-end" />
              <CancelCallButton
                onClick={() => {
                  call?.endCall();
                  props.onLeave?.();
                }}
              />
            </div>
          </StreamTheme>
          <AudioVisualizer /> {/* Add the AudioVisualizer component */}
        </>
      );
    }

Now, you can run the app, talk to the AI, and see beautiful visualizations while the participants speak.

You can find the source code of the Node.js backend here, while the completed React tutorial can be found on the following page.

Recap

In this tutorial, we have built an example of an app that lets you talk with an AI bot using OpenAI Realtime and Stream’s video edge infrastructure. The integration uses WebRTC for the best latency and quality even with poor connections.

We have shown you how to use OpenAI’s Realtime API and provide the agent with custom instructions and function calls. On the React side, we have shown you how to join the call and build an animation using the audio levels.

Both the video SDK for React and the API have plenty more features available to support more advanced use-cases.

Next Steps

- Explore the tutorials for other platforms: iOS, Android, Flutter, React Native.

- Check the Backend documentation with more examples in JS and Python.

- Read the React SDK documentation to learn about additional features.