In this tutorial, we will learn how to build an audio room experience similar to Twitter Spaces or Clubhouse using Stream Video. The end result will support the following features:

- Backstage mode. You can start the call with your co-hosts and chat a bit before going live.

- Calls run on Stream's global edge network for optimal latency and scalability.

- There is no cap to how many listeners you can have in a room.

- Listeners can raise their hand and be invited to speak by the host.

- Audio tracks are sent multiple times for optimal reliability.

- UI components are fully customizable, as demonstrated in the Flutter Video Cookbook.

You can find the full code for the audio room tutorial on the Flutter Video Tutorials repository.

Let's dive in! If you have any questions or feedback along the way, don't hesitate to reach out; we're here to help!

Step 1 - Create a new project and add configuration

Let's begin by creating a new Flutter project and adding the required dependencies.

You can use the following commands to achieve this:

```shell
flutter create audioroom_tutorial --empty
cd audioroom_tutorial
flutter pub add stream_video stream_video_flutter
```

You should now have the dependencies in your pubspec.yaml file with their latest versions:

```yaml
dependencies:
  flutter:
    sdk: flutter
  stream_video: ^latest
  stream_video_flutter: ^latest
```

Stream offers several packages for integrating video capabilities into your application:

- stream_video_flutter: Contains pre-built UI components for quick implementation

- stream_video: The core client SDK for direct API access

- stream_video_push_notification: Provides push notification support and CallKit integration. We won't be using it in this tutorial.

Setting up Required Permissions

Before proceeding, you need to add the required permissions for audio functionality to your app.

For Android, update your AndroidManifest.xml file by adding these permissions:

```xml
<manifest xmlns:android="http://schemas.android.com/apk/res/android">
    <uses-permission android:name="android.permission.INTERNET"/>
    <uses-feature android:name="android.hardware.camera"/>
    <uses-feature android:name="android.hardware.camera.autofocus"/>
    <uses-permission android:name="android.permission.CAMERA"/>
    <uses-permission android:name="android.permission.RECORD_AUDIO"/>
    <uses-permission android:name="android.permission.ACCESS_NETWORK_STATE"/>
    <uses-permission android:name="android.permission.CHANGE_NETWORK_STATE"/>
    <uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS"/>
    <uses-permission android:name="android.permission.BLUETOOTH" android:maxSdkVersion="30"/>
    <uses-permission android:name="android.permission.BLUETOOTH_ADMIN" android:maxSdkVersion="30"/>
    <uses-permission android:name="android.permission.BLUETOOTH_CONNECT"/>
    ...
</manifest>
```

For iOS, open your Info.plist file and add:

```xml
<key>NSMicrophoneUsageDescription</key>
<string>Microphone access is needed to speak in audio rooms</string>
<key>UIApplicationSupportsIndirectInputEvents</key>
<true/>
<key>UIBackgroundModes</key>
<array>
    <string>audio</string>
    <string>fetch</string>
    <string>processing</string>
    <string>remote-notification</string>
    <string>voip</string>
</array>
```

Finally, you need to set the platform to iOS 14.0 or higher in your Podfile:

```ruby
platform :ios, '14.0'
```

Step 2 - Setting up the Stream Video client

To run the application, we need a valid user token. In a production app, this token would typically be generated by your backend API when a user logs in.

For simplicity, this tutorial uses pre-generated credentials instead of a backend. Use the credentials generated for you on the tutorial page and substitute them for the placeholders in the code samples:

| Property | Value |
|---|---|
| API Key | `REPLACE_WITH_API_KEY` |
| Token | `REPLACE_WITH_TOKEN` |
| User ID | `REPLACE_WITH_USER_ID` |
| Call ID | `REPLACE_WITH_CALL_ID` |

Now, let's import the package and initialize the Stream client with your credentials:

```dart
import 'package:flutter/material.dart';
import 'package:stream_video_flutter/stream_video_flutter.dart';

Future<void> main() async {
  // Ensure Flutter is able to communicate with plugins.
  WidgetsFlutterBinding.ensureInitialized();

  // Initialize Stream Video and set the API key for our app.
  StreamVideo(
    'REPLACE_WITH_API_KEY',
    user: const User(
      info: UserInfo(
        name: 'John Doe',
        id: 'REPLACE_WITH_USER_ID',
      ),
    ),
    userToken: 'REPLACE_WITH_TOKEN',
  );

  runApp(
    const MaterialApp(
      home: HomeScreen(),
    ),
  );
}
```
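Connection errors often come from a token that was issued for a different user than the one passed to `UserInfo`. Stream user tokens are JWTs whose payload carries a `user_id` claim, so you can compare the two locally. The following pure-Dart sketch uses a hypothetical `tokenUserId` helper, which is not part of the Stream SDK. It only decodes the payload and does not verify the signature.

```dart
import 'dart:convert';

/// Hypothetical debugging helper (not part of the Stream SDK): returns the
/// `user_id` claim of a JWT user token, or null if it cannot be read.
/// The signature is NOT verified; only the payload segment is decoded.
String? tokenUserId(String token) {
  final parts = token.split('.');
  // A JWT has three dot-separated segments: header.payload.signature.
  if (parts.length != 3) return null;

  try {
    // JWT segments are unpadded base64url; normalize() restores the padding.
    final payloadJson =
        utf8.decode(base64Url.decode(base64Url.normalize(parts[1])));
    final payload = jsonDecode(payloadJson);
    if (payload is Map<String, dynamic>) {
      final userId = payload['user_id'];
      return userId is String ? userId : null;
    }
    return null;
  } on FormatException {
    // Invalid base64, UTF-8 or JSON in the payload segment.
    return null;
  }
}
```

In debug builds, you could then add something like `assert(tokenUserId(userToken) == userId);` before calling `StreamVideo(...)`. Here `userToken` and `userId` stand for the same values you pass to the client.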

Step 3 - Building the home screen

Our application will consist of two main screens:

- A home screen with options to create or join audio rooms

- The audio room screen to interact with other participants

Let's create the home screen with a button to create an audio room:

```dart
class HomeScreen extends StatefulWidget {
  const HomeScreen({super.key});

  @override
  State<HomeScreen> createState() => _HomeScreenState();
}

class _HomeScreenState extends State<HomeScreen> {
  @override
  Widget build(BuildContext context) {
    return Scaffold(
      body: Center(
        child: ElevatedButton(
          onPressed: () => _createAudioRoom(),
          child: const Text('Create an Audio Room'),
        ),
      ),
    );
  }

  Future<void> _createAudioRoom() async {}
}
```

Now, we can fill in the functionality to create an audio room whenever the button is pressed.

To do this, we have to do a few things:

- Create a call with a type of `audio_room` and pass in an ID for the call.

- Create the call on Stream's servers using `call.getOrCreate()`, adding the current user as a host.

- Configure the microphone settings to use when joining the call.

- If the call is successfully created, join it and use `call.goLive()` to start the audio room immediately.

- Navigate to the page for displaying the audio room once everything is created properly.

⚠️ If you do not call call.goLive(), an audio_room call will be started in backstage mode, meaning the call hosts can join and see each other but the call will be invisible to others.

Here is what all of the above looks like in code:

```dart
Future<void> _createAudioRoom() async {
  // Set up our call object.
  final call = StreamVideo.instance.makeCall(
    callType: StreamCallType.audioRoom(),
    id: 'REPLACE_WITH_CALL_ID',
  );

  // Create the call and set the current user as a host.
  final result = await call.getOrCreate(
    members: [
      MemberRequest(
        userId: StreamVideo.instance.currentUser.id,
        role: 'host',
      ),
    ],
  );

  if (result.isSuccess) {
    // Set some default behaviour for how our devices should be configured once we join a call.
    // Note that the camera will be disabled by default because of the `audio_room` call type configuration.
    final connectOptions = CallConnectOptions(
      microphone: TrackOption.enabled(),
    );

    await call.join(connectOptions: connectOptions);

    // Allow others to see and join the call (exit backstage mode).
    await call.goLive();

    // The widget may have been disposed while we were awaiting the calls above.
    if (!mounted) return;

    Navigator.of(context).push(
      MaterialPageRoute(
        builder: (context) => AudioRoomScreen(
          audioRoomCall: call,
        ),
      ),
    );
  } else {
    debugPrint('Not able to create a call.');
  }
}
```
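The snippet above uses a fixed `'REPLACE_WITH_CALL_ID'`. Because `getOrCreate()` returns an existing call with that ID, every press of the button reuses the same room. If you want a new room each time, generate the ID instead. The following is a minimal sketch with a hypothetical `generateCallId` helper. It assumes lowercase letters and digits are valid characters for a Stream call ID, so confirm that against the Stream documentation.

```dart
import 'dart:math';

/// Characters used for generated IDs (assumed valid for Stream call IDs).
const _callIdAlphabet = 'abcdefghijklmnopqrstuvwxyz0123456789';

/// Hypothetical helper: returns a random call ID such as "q7k2m9x0ab3c".
/// Pass a seeded [random] in tests to get reproducible IDs.
String generateCallId({int length = 12, Random? random}) {
  // Random.secure() draws from the platform's cryptographic source.
  final rng = random ?? Random.secure();
  return List.generate(
    length,
    (_) => _callIdAlphabet[rng.nextInt(_callIdAlphabet.length)],
  ).join();
}
```

In `_createAudioRoom`, you would then pass `id: generateCallId()` to `makeCall`. Twelve characters from a 36-symbol alphabet give 36^12 (about 4.7 × 10^18) possible IDs, so accidental collisions are unlikely at tutorial scale.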

Step 4 - Building the audio room screen

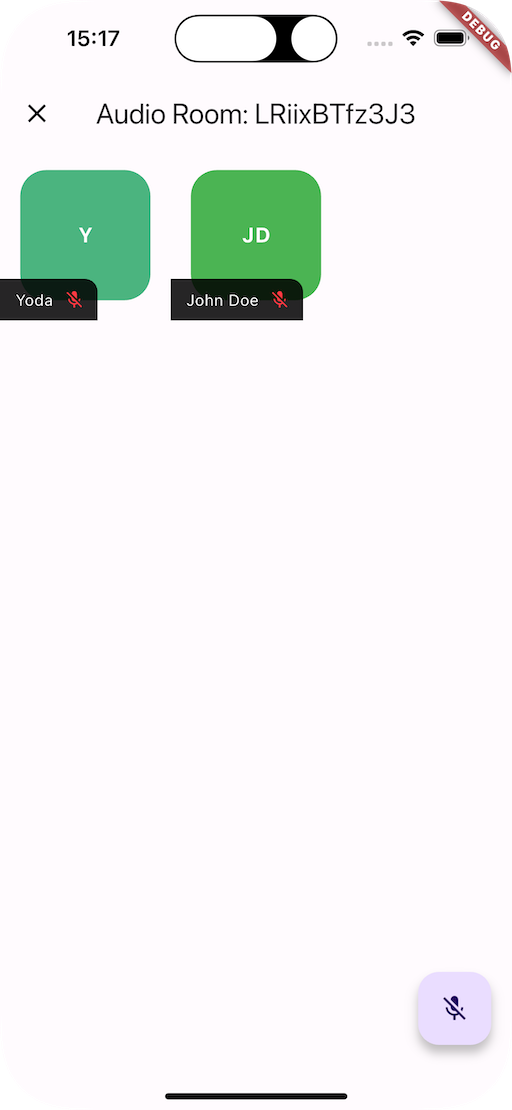

In this example, we'll create an audio room screen that shows all current participants in the room. The screen will include functionality for users to leave the audio room, toggle their microphone on/off, transition the call between live and backstage modes, and manage permission requests from other users.

Let's start by creating a basic audio room screen widget that takes the call object as a parameter.

This widget will listen to the call's state changes through call.state.valueStream, allowing us to react to any updates in the audio room in real-time.

Here's the implementation of our screen:

```dart
class AudioRoomScreen extends StatefulWidget {
  const AudioRoomScreen({
    super.key,
    required this.audioRoomCall,
  });

  final Call audioRoomCall;

  @override
  State<AudioRoomScreen> createState() => _AudioRoomScreenState();
}

class _AudioRoomScreenState extends State<AudioRoomScreen> {
  late CallState _callState;

  @override
  void initState() {
    super.initState();
    _callState = widget.audioRoomCall.state.value;
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        title: Text('Audio Room: ${_callState.callId}'),
        leading: IconButton(
          onPressed: () async {
            await widget.audioRoomCall.leave();

            if (context.mounted) {
              Navigator.of(context).pop();
            }
          },
          icon: const Icon(
            Icons.close,
          ),
        ),
      ),
      body: StreamBuilder<CallState>(
        initialData: _callState,
        stream: widget.audioRoomCall.state.valueStream,
        builder: (context, snapshot) {
          // ...
        },
      ),
    );
  }
}
```

In this code sample, we display the ID of the call using the existing CallState in an AppBar at the top of the Scaffold.

There is also a leading close action on the AppBar that leaves the audio room and then closes the screen.

Next, inside the StreamBuilder, we can display the grid of participants if the state is retrieved correctly.

If retrieval fails or is still in progress, we can display a failure message or a loading indicator respectively.

```dart
StreamBuilder<CallState>(
  initialData: _callState,
  stream: widget.audioRoomCall.state.valueStream,
  builder: (context, snapshot) {
    if (snapshot.hasError) {
      return const Center(
        child: Text('Cannot fetch call state.'),
      );
    }

    if (snapshot.hasData) {
      final callState = snapshot.data!;

      return GridView.builder(
        itemBuilder: (BuildContext context, int index) {
          return Align(
            widthFactor: 0.8,
            child: ParticipantAvatar(
              participantState: callState.callParticipants[index],
            ),
          );
        },
        gridDelegate: const SliverGridDelegateWithFixedCrossAxisCount(
          crossAxisCount: 3,
        ),
        itemCount: callState.callParticipants.length,
      );
    }

    return const Center(
      child: CircularProgressIndicator(),
    );
  },
),
```

For displaying the participants, we are creating a custom ParticipantAvatar widget that takes the participantState as a parameter:

```dart
class ParticipantAvatar extends StatelessWidget {
  const ParticipantAvatar({
    required this.participantState,
    super.key,
  });

  final CallParticipantState participantState;

  @override
  Widget build(BuildContext context) {
    final imageUrl = participantState.image ?? '';
    final hasImage = imageUrl.isNotEmpty;
    final name = participantState.name;

    return AnimatedContainer(
      duration: const Duration(milliseconds: 300),
      curve: Curves.linear,
      decoration: BoxDecoration(
        border: Border.all(
          // Highlight the border while the participant is speaking.
          color: participantState.isSpeaking ? Colors.green : Colors.white,
          width: 2,
        ),
        shape: BoxShape.circle,
      ),
      padding: const EdgeInsets.all(2),
      child: CircleAvatar(
        radius: 40,
        backgroundImage: hasImage ? NetworkImage(imageUrl) : null,
        child: hasImage
            ? null
            : Text(
                // Guard against empty names: substring(0, 1) would throw a RangeError.
                name.isNotEmpty ? name[0].toUpperCase() : '?',
                style: const TextStyle(
                  color: Colors.white,
                  fontSize: 20,
                ),
              ),
      ),
    );
  }
}
```

The ParticipantAvatar widget displays a circular avatar with a border that turns green while the participant is speaking.

If no image is available, it shows the first letter of the participant's name instead.
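Picking the initial looks trivial, but `String.substring(0, 1)` throws a `RangeError` on an empty name. Indexing the first UTF-16 code unit also splits emoji that are stored as surrogate pairs. The following pure-Dart sketch shows a hypothetical `avatarInitial` helper that `ParticipantAvatar` could call instead.

```dart
/// Hypothetical helper: the upper-cased first character of [name], or
/// [fallback] when the name is empty or only whitespace.
String avatarInitial(String name, {String fallback = '?'}) {
  final trimmed = name.trim();
  if (trimmed.isEmpty) return fallback;
  // Take the first Unicode code point (rune) rather than the first UTF-16
  // code unit, so a leading emoji is not cut in half.
  return String.fromCharCode(trimmed.runes.first).toUpperCase();
}
```

Code points are still not full grapheme clusters (flags, skin-tone modifiers). If you need those, the `characters` package (`name.characters.first`) handles them.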

Step 5 - Adding a floating action button to control the microphone and backstage mode

Let's add floating action buttons to control the microphone and backstage mode by setting the floatingActionButton of the Scaffold in AudioRoomScreen:

```dart
floatingActionButton: AudioRoomActions(
  audioRoomCall: widget.audioRoomCall,
),
```

The AudioRoomActions widget is a custom widget that takes the audioRoomCall as a parameter.

It contains a FloatingActionButton for controlling the microphone and a FloatingActionButton.extended for controlling the backstage mode.

```dart
// Add this import at the top of your file.
import 'dart:async';

class AudioRoomActions extends StatefulWidget {
  const AudioRoomActions({required this.audioRoomCall, super.key});

  final Call audioRoomCall;

  @override
  State<AudioRoomActions> createState() => _AudioRoomActionsState();
}

class _AudioRoomActionsState extends State<AudioRoomActions> {
  var _microphoneEnabled = false;
  var _waitingForPermission = false;
  StreamSubscription? _callEventsSubscription;

  @override
  void initState() {
    super.initState();

    _microphoneEnabled =
        widget.audioRoomCall.connectOptions.microphone.isEnabled;

    // Listen for permission updates so we know when our request to speak is granted.
    _callEventsSubscription = widget.audioRoomCall.callEvents
        .on<StreamCallPermissionsUpdatedEvent>((event) {
      // Ignore updates for other users, or events arriving after dispose.
      if (!mounted || event.user.id != StreamVideo.instance.currentUser.id) {
        return;
      }

      if (_waitingForPermission &&
          event.ownCapabilities.contains(CallPermission.sendAudio)) {
        setState(() {
          _waitingForPermission = false;
        });

        ScaffoldMessenger.of(context).showSnackBar(
          const SnackBar(
            content: Text(
              'Permission to speak granted. You can now enable your microphone.',
            ),
          ),
        );
      }
    });
  }

  @override
  Widget build(BuildContext context) {
    return StreamBuilder<CallState>(
      initialData: widget.audioRoomCall.state.value,
      stream: widget.audioRoomCall.state.valueStream,
      builder: (context, snapshot) {
        final callState = snapshot.data;
        if (callState == null) {
          return const SizedBox.shrink();
        }

        return Row(
          mainAxisAlignment: MainAxisAlignment.end,
          spacing: 20,
          children: [
            // Only the call's creator can switch between live and backstage.
            if (callState.createdByMe)
              FloatingActionButton.extended(
                heroTag: 'go-live',
                label: callState.isBackstage
                    ? const Text('Go Live')
                    : const Text('Stop Live'),
                icon: callState.isBackstage
                    ? const Icon(
                        Icons.play_arrow,
                        color: Colors.green,
                      )
                    : const Icon(
                        Icons.stop,
                        color: Colors.red,
                      ),
                onPressed: () {
                  if (callState.isBackstage) {
                    widget.audioRoomCall.goLive();
                  } else {
                    widget.audioRoomCall.stopLive();
                  }
                },
              ),
            FloatingActionButton(
              heroTag: 'microphone',
              child: _microphoneEnabled
                  ? const Icon(Icons.mic)
                  : const Icon(Icons.mic_off),
              onPressed: () {
                if (_microphoneEnabled) {
                  widget.audioRoomCall.setMicrophoneEnabled(enabled: false);
                  setState(() {
                    _microphoneEnabled = false;
                  });
                } else {
                  if (!widget.audioRoomCall.hasPermission(
                    CallPermission.sendAudio,
                  )) {
                    // Listeners can't speak yet: ask the host for permission.
                    widget.audioRoomCall.requestPermissions(
                      [CallPermission.sendAudio],
                    );
                    setState(() {
                      _waitingForPermission = true;
                    });

                    ScaffoldMessenger.of(context).showSnackBar(
                      const SnackBar(
                        content: Text('Permission to speak requested'),
                      ),
                    );
                  } else {
                    widget.audioRoomCall.setMicrophoneEnabled(enabled: true);
                    setState(() {
                      _microphoneEnabled = true;
                    });
                  }
                }
              },
            ),
          ],
        );
      },
    );
  }

  @override
  void dispose() {
    _callEventsSubscription?.cancel();
    super.dispose();
  }
}
```

Audio rooms start in backstage mode by default, requiring us to call call.goLive() to make them publicly accessible. We've already implemented this when creating the call in the home screen.

We can also switch between live and backstage mode at any time using call.goLive() and call.stopLive() respectively.

Regular users can only join an audio room while it is live, so only the call's creator (callState.createdByMe) sees this button.

In the code above, you'll notice our microphone toggle implementation includes a permission check with call.hasPermission(). This is crucial since most audio room participants are typically listeners without speaking privileges.

If a user lacks speaking permission, we can request it programmatically using the call.requestPermissions() method.

After sending the request, we show a snackbar to let the user know that permission has been requested.

We also subscribe to the call.callEvents stream to be notified when the user's permission has been granted.
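The decision the microphone button makes can be separated from the widget and tested on its own. The following pure-Dart sketch makes a few assumptions. `MicAction`, `onMicrophoneTap` and `grantEndsWait` are hypothetical names. Capabilities are modeled as plain strings, and `'send-audio'` is assumed to be the capability that `CallPermission.sendAudio` represents. Unlike the widget above, this version also avoids sending a second request while one is still pending.

```dart
/// Capability string assumed to correspond to CallPermission.sendAudio.
const sendAudio = 'send-audio';

/// What a tap on the microphone button should do.
enum MicAction { disable, enable, requestPermission, keepWaiting }

/// Hypothetical pure function mirroring the onPressed logic of the mic button.
MicAction onMicrophoneTap({
  required bool micEnabled,
  required Set<String> capabilities,
  required bool waitingForPermission,
}) {
  if (micEnabled) return MicAction.disable;
  if (capabilities.contains(sendAudio)) return MicAction.enable;
  // No permission yet: ask once, then wait for the host instead of re-asking.
  return waitingForPermission
      ? MicAction.keepWaiting
      : MicAction.requestPermission;
}

/// Hypothetical filter mirroring the StreamCallPermissionsUpdatedEvent handler:
/// true when a pending request is answered for the current user with send-audio.
bool grantEndsWait({
  required String eventUserId,
  required String currentUserId,
  required Set<String> ownCapabilities,
  required bool waitingForPermission,
}) =>
    waitingForPermission &&
    eventUserId == currentUserId &&
    ownCapabilities.contains(sendAudio);
```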

Step 6 - Handling permission requests

By default, the audio_room call type only allows speaker, admin and host roles to speak. Regular participants can request permission.

If different defaults make sense for your app, you can edit the call type in the dashboard or create your own.

Let's wrap the StreamBuilder in a Stack widget and add a PermissionRequests widget to the bottom of the screen.

```dart
body: Stack(
  children: [
    StreamBuilder<CallState>(
      ...
    ),
    if (widget.audioRoomCall.state.value.createdByMe)
      Positioned(
        bottom: 0,
        left: 0,
        right: 0,
        child: SafeArea(
          top: false,
          child: Padding(
            padding: const EdgeInsets.only(bottom: 80),
            child: PermissionRequests(
              audioRoomCall: widget.audioRoomCall,
            ),
          ),
        ),
      ),
  ],
),
```

We only show the PermissionRequests widget to the call owner.

PermissionRequests is a custom widget that takes the audioRoomCall as a parameter and shows pending permission requests to the call owner one at a time.

With this simple UI component, the call owner can grant or deny each request.

```dart
class PermissionRequests extends StatefulWidget {
  const PermissionRequests({required this.audioRoomCall, super.key});

  final Call audioRoomCall;

  @override
  State<PermissionRequests> createState() => _PermissionRequestsState();
}

class _PermissionRequestsState extends State<PermissionRequests> {
  final List<StreamCallPermissionRequestEvent> _permissionRequests = [];

  @override
  void initState() {
    super.initState();

    // Queue incoming requests; they are shown one at a time, oldest first.
    widget.audioRoomCall.onPermissionRequest = (permissionRequest) {
      // The callback can still fire after this widget has been disposed.
      if (!mounted) return;

      setState(() {
        _permissionRequests.add(permissionRequest);
      });
    };
  }

  @override
  Widget build(BuildContext context) {
    if (_permissionRequests.isEmpty) {
      return const SizedBox.shrink();
    }

    final request = _permissionRequests.first;
    final displayName =
        request.user.name.isNotEmpty ? request.user.name : request.user.id;
    final permissions = request.permissions.join(', ');

    return Padding(
      padding: const EdgeInsets.all(16),
      child: Card(
        elevation: 6,
        shape: RoundedRectangleBorder(
          borderRadius: BorderRadius.circular(12),
        ),
        child: Column(
          children: [
            ListTile(
              dense: true,
              leading: CircleAvatar(
                radius: 18,
                child: Text(
                  displayName.isNotEmpty ? displayName[0].toUpperCase() : '?',
                ),
              ),
              title: Text('$displayName requests'),
              subtitle: Text(permissions),
            ),
            Align(
              alignment: Alignment.centerRight,
              child: Padding(
                padding: const EdgeInsets.all(8.0),
                child: Row(
                  mainAxisSize: MainAxisSize.min,
                  children: [
                    TextButton(
                      // Deny only dismisses the request in this UI.
                      onPressed: () {
                        setState(() {
                          _permissionRequests.removeAt(0);
                        });
                      },
                      child: const Text(
                        'Deny',
                        style: TextStyle(color: Colors.red),
                      ),
                    ),
                    const SizedBox(width: 8),
                    ElevatedButton(
                      onPressed: () async {
                        await widget.audioRoomCall.grantPermissions(
                          userId: request.user.id,
                          permissions: request.permissions.toList(),
                        );

                        if (mounted) {
                          setState(() {
                            _permissionRequests.removeAt(0);
                          });
                        }
                      },
                      child: const Text('Allow'),
                    ),
                  ],
                ),
              ),
            ),
          ],
        ),
      ),
    );
  }
}
```
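The widget above keeps requests in a plain list and always shows the oldest one. Because the microphone button in Step 5 sends a new request on every tap, the same listener can appear in that list several times. The following pure-Dart sketch shows a first-in, first-out queue that keeps only each user's latest request. `PendingRequest` and `PermissionRequestQueue` are hypothetical names, not SDK types.

```dart
import 'dart:collection';

/// Hypothetical stand-in for StreamCallPermissionRequestEvent.
class PendingRequest {
  const PendingRequest(this.userId, this.permissions);

  final String userId;
  final List<String> permissions;
}

/// First-in, first-out queue that keeps at most one request per user.
class PermissionRequestQueue {
  final Queue<PendingRequest> _pending = Queue<PendingRequest>();

  /// The request currently shown to the host, or null if none is pending.
  PendingRequest? get current => _pending.isEmpty ? null : _pending.first;

  int get length => _pending.length;

  void add(PendingRequest request) {
    // Drop an older request from the same user; the new one joins the back.
    _pending.removeWhere((r) => r.userId == request.userId);
    _pending.addLast(request);
  }

  /// Removes and returns the current request once the host allows or denies it.
  PendingRequest? resolveCurrent() =>
      _pending.isEmpty ? null : _pending.removeFirst();
}
```

Moving a repeated request to the back of the queue is a fairness choice. If you would rather keep the user's original place, replace the existing entry in place instead of removing it.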

Step 7 - Testing Your Audio Room

To make this a little more interactive, let's join the audio room from your browser.

If all works as intended, you will see an audio room with two participants.

You can request permission to speak by pressing the hand icon in the browser.

By default, the audio_room call type has backstage mode enabled, which creates a private space where hosts can prepare before making the room public. This is particularly useful for testing audio quality, discussing topics in advance, or coordinating with co-hosts before allowing audience members to join.

In this tutorial, we called call.goLive() right after joining the call, before navigating to the audio room screen, so the room switches from backstage to live mode immediately. This means any user can see and join your room right away.

You can customize this behavior through Stream's dashboard, where you can configure default settings for backstage mode, control who can transition calls between states, and set up other call-specific permissions to match your app's requirements.

Other built-in features

There are a few more exciting features that you can use to build audio rooms:

- Requesting Permissions: Participants can ask the host for permission to speak, share video, etc.

- Query Calls: You can query calls to easily show upcoming calls, calls that recently finished, etc.

- Call Previews: Before you join the call, you can observe it and show a preview, e.g. "John, Sarah and 3 others are on this call."

- Reactions & Custom events: Reactions and custom events are supported

- Recording & Broadcasting: You can record your calls, or broadcast them to HLS

- Chat: Stream's chat SDKs are fully featured and you can integrate them in the call

- Moderation: Moderation capabilities are built into the product

- Transcriptions: Transcriptions aren't available yet, but are coming soon
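Once you have the participant names for a call, the preview line mentioned under Call Previews is ordinary string formatting. The following pure-Dart sketch shows a hypothetical `callPreviewText` helper. How you obtain the names (for example from the call state) is left out here.

```dart
/// Hypothetical helper: builds a preview line such as
/// "John, Sarah and 3 others are on this call."
String callPreviewText(List<String> names, {int maxNamed = 2}) {
  assert(maxNamed >= 1, 'maxNamed must be at least 1');
  if (names.isEmpty) return 'Nobody is on this call yet.';

  final named = names.take(maxNamed).toList();
  final others = names.length - named.length;

  if (others > 0) {
    final noun = others == 1 ? 'other' : 'others';
    return '${named.join(', ')} and $others $noun are on this call.';
  }

  // Everyone fits: "John is ..." or "John, Sarah and Ann are ...".
  if (named.length == 1) return '${named.first} is on this call.';
  final allButLast = named.sublist(0, named.length - 1).join(', ');
  return '$allButLast and ${named.last} are on this call.';
}
```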

Recap

Find the complete code for this tutorial on the Flutter Video Tutorials Repository.

Stream Video allows you to quickly build a scalable audio-room experience for your app. Please do let us know if you run into any issues while following this tutorial. Our team is also happy to review your UI designs and offer recommendations on how to achieve them with Stream.

To recap what we've learned:

- You set up a call with `final call = StreamVideo.instance.makeCall(callType: StreamCallType.audioRoom(), id: 'CALL_ID');`.

- The call type `audio_room` controls which features are enabled and how permissions are set up.

- The `audio_room` call type enables backstage mode by default and only allows admins to join before the call goes live.

- When you join a call with `call.join()`, real-time communication is set up for audio and video calling.

- Data in `call.state` and `call.state.value.callParticipants` makes it easy to build your own UI.

Calls run on Stream's global edge network of video servers. Being closer to your users improves the latency and reliability of calls. For audio rooms we use Opus RED and Opus DTX for optimal audio quality.
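The idea behind Opus RED (redundant audio data, specified for RTP in RFC 2198) is that each packet also carries a copy of an earlier frame. A single lost packet can therefore be reconstructed from the next one. The following toy model in pure Dart illustrates that idea with a redundancy distance of one frame. It is not how WebRTC or the Stream SDK actually implement RED, and all names are hypothetical.

```dart
/// Toy packet: the primary frame plus an optional copy of the previous frame.
class RedPacket {
  const RedPacket(this.frame, this.redundantFrame);

  final int frame; // index of the frame this packet is mainly for
  final int? redundantFrame; // previous frame, carried again as a backup
}

/// Builds one packet per frame; every packet after the first also repeats
/// the frame before it (a redundancy distance of one).
List<RedPacket> packetize(int frameCount) => [
      for (var i = 0; i < frameCount; i++) RedPacket(i, i == 0 ? null : i - 1),
    ];

/// Returns the frame indices the receiver can still play when the packets
/// whose indices are in [lostPackets] never arrive.
Set<int> playableFrames(List<RedPacket> packets, Set<int> lostPackets) {
  final frames = <int>{};
  for (var i = 0; i < packets.length; i++) {
    if (lostPackets.contains(i)) continue;
    final packet = packets[i];
    frames.add(packet.frame);
    final backup = packet.redundantFrame;
    if (backup != null) frames.add(backup);
  }
  return frames;
}
```

With one redundant copy, a frame is only lost when both its own packet and the packet after it are dropped. That extra reliability costs additional bandwidth for every packet.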

The SDKs enable you to build audio rooms, video calling and livestreaming in days.

We hope you've enjoyed this tutorial, and please do feel free to reach out if you have any suggestions or questions.

Final Thoughts

In this video app tutorial we built a fully functioning Flutter audio room app with our Flutter SDK component library. We also showed how easy it is to customize the behavior and the style of the Flutter video app components with minimal code changes.

Both the video SDK for Flutter and the API have plenty more features available to support more advanced use-cases.