In this tutorial, we will cover the steps to quickly build a low-latency live-streaming experience in Flutter using Stream's Video SDK. The livestream is broadcast using Stream's edge network of servers around the world. We will show you how to implement common livestream features, such as displaying the number of watchers, allowing users to wait before the livestream starts, handling different states and much more.

You can find a working project that uses the examples below here.

Overview

This guide will walk you through implementing livestreaming in your Flutter app using three key streaming technologies:

- WebRTC provides ultra-low latency streaming (sub-second) - perfect for interactive experiences like live auctions or Q&As where real-time engagement is critical

- HLS (HTTP Live Streaming) enables reliable large-scale broadcasting with broad device compatibility and adaptive quality. While it has higher latency (5-30 seconds), it excels at reaching large audiences with stable playback

- RTMP (Real-Time Messaging Protocol) bridges professional broadcasting tools like OBS to your app with low latency (2-5 seconds). While it’s being phased out in favor of newer protocols, it’s still commonly used for ingesting streams due to its reliability and low latency

We'll focus primarily on WebRTC streaming, while also covering HLS and RTMP integration. For quick implementation, we provide a ready-to-use LivestreamPlayer component with a polished default UI.

Ready to build your livestreaming experience? Let's get started! Feel free to use the feedback button if you have any questions - we're here to help make your implementation successful.

Step 1 - Create a new project and add configuration

Let's begin by creating a new Flutter project and adding the required dependencies.

You can use the following command to achieve this:

```bash
flutter create livestream_tutorial --empty
cd livestream_tutorial
flutter pub add stream_video stream_video_flutter collection intl
```

You should now have the dependencies in your pubspec.yaml file with the latest version:

```yaml
dependencies:
  flutter:
    sdk: flutter

  stream_video: ^latest
  stream_video_flutter: ^latest
  collection: ^latest
  intl: ^latest
```

Stream has several packages that you can use to integrate video into your application.

In this tutorial, we will use the stream_video_flutter package which contains pre-built UI elements for you to use.

You can also use the stream_video package directly if you need direct access to the low-level client.

Before you go ahead, you need to add the required permissions for video calling to your app.

In your AndroidManifest.xml file, add these permissions:

```xml
<manifest xmlns:android="http://schemas.android.com/apk/res/android">

    <uses-permission android:name="android.permission.INTERNET"/>
    <uses-feature android:name="android.hardware.camera"/>
    <uses-feature android:name="android.hardware.camera.autofocus"/>
    <uses-permission android:name="android.permission.CAMERA"/>
    <uses-permission android:name="android.permission.RECORD_AUDIO"/>
    <uses-permission android:name="android.permission.ACCESS_NETWORK_STATE"/>
    <uses-permission android:name="android.permission.CHANGE_NETWORK_STATE"/>
    <uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS"/>
    <uses-permission android:name="android.permission.BLUETOOTH" android:maxSdkVersion="30"/>
    <uses-permission android:name="android.permission.BLUETOOTH_ADMIN" android:maxSdkVersion="30"/>
    <uses-permission android:name="android.permission.BLUETOOTH_CONNECT"/>
    ...
</manifest>
```

For the corresponding iOS permissions, open the Info.plist file and add:

```xml
<key>NSCameraUsageDescription</key>
<string>$(PRODUCT_NAME) needs access to your camera for video calls.</string>
<key>NSMicrophoneUsageDescription</key>
<string>$(PRODUCT_NAME) needs access to your microphone for voice and video calls.</string>
<key>UIApplicationSupportsIndirectInputEvents</key>
<true/>
<key>UIBackgroundModes</key>
<array>
    <string>audio</string>
    <string>fetch</string>
    <string>processing</string>
    <string>remote-notification</string>
    <string>voip</string>
</array>
```

Finally, you need to set the platform to iOS 14.0 or higher in your Podfile:

```ruby
platform :ios, '14.0'
```

Step 2 - Setting up the Stream Video client

To actually run this sample we need a valid user token. The user token is typically generated by your server side API. When a user logs in to your app you return the user token that gives them access to the call. To make this tutorial easier to follow we'll generate a user token for you:

Please update REPLACE_WITH_API_KEY, REPLACE_WITH_USER_ID, REPLACE_WITH_TOKEN, and REPLACE_WITH_CALL_ID with the actual values:

Here are credentials to try out the app with:

| Property | Value |
|---|---|
| API Key | Waiting for an API key ... |
| Token | Token is generated ... |
| User ID | Loading ... |
| Call ID | Creating random call ID ... |

First, let's import the package into the project and then initialise the client with the credentials you received.

Replace the content of the main.dart file with the following code:

```dart
import 'package:flutter/material.dart';
import 'package:stream_video_flutter/stream_video_flutter.dart';

import 'home_screen.dart'; // Created in Step 3.

Future<void> main() async {
  // Ensure Flutter is able to communicate with Plugins
  WidgetsFlutterBinding.ensureInitialized();

  // Initialize Stream video and set the API key for our app.
  StreamVideo(
    'REPLACE_WITH_API_KEY',
    user: const User(
      info: UserInfo(
        name: 'John Doe',
        id: 'REPLACE_WITH_USER_ID',
      ),
    ),
    userToken: 'REPLACE_WITH_TOKEN',
  );

  runApp(
    const MaterialApp(
      home: HomeScreen(),
    ),
  );
}
```

Step 3 - Building the home screen

To keep things simple, our sample application will consist of only two screens: a landing page where users can create a livestream, and another page to view and control the livestream.

Let's start by creating a new file called home_screen.dart. We'll implement a simple home screen that displays a button in the center - when pressed, this button will create and start a new livestream. While the livestream is being created the button will be disabled:

```dart
import 'package:flutter/material.dart';

class HomeScreen extends StatefulWidget {
  const HomeScreen({
    super.key,
  });

  @override
  State<HomeScreen> createState() => _HomeScreenState();
}

class _HomeScreenState extends State<HomeScreen> {
  String? createLoadingText;

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      body: Center(
        child: ElevatedButton(
          onPressed: createLoadingText == null
              ? () async {
                  setState(() => createLoadingText = 'Creating Livestream...');
                  await _createLivestream();
                  setState(() => createLoadingText = null);
                }
              : null,
          child: Text(createLoadingText ?? 'Create a Livestream'),
        ),
      ),
    );
  }

  Future<void> _createLivestream() async {}
}
```

Now, we can fill in the functionality to create a livestream whenever the button is pressed.

To create and start a livestream, we need to:

- Initialize a call instance with type `livestream` and a unique ID.

- Create the call on Stream's servers using `call.getOrCreate()`, adding the current user as a host.

- Update the call with backstage settings so viewers can see when the livestream will start.

- Configure and join the call with camera/microphone settings.

- Display the livestream UI by navigating to a new screen that will handle the video feed and controls.

Here is what all of the above looks like in code:

```dart
import 'package:stream_video/stream_video.dart';

import 'livestream_screen.dart'; // Created in Step 4.

// Replaces the empty _createLivestream stub inside _HomeScreenState.
Future<void> _createLivestream() async {
  // Set up our call object
  final call = StreamVideo.instance.makeCall(
    callType: StreamCallType.liveStream(),
    id: 'REPLACE_WITH_CALL_ID',
  );

  // Create the call and set the current user as a host
  final result = await call.getOrCreate(
    members: [
      MemberRequest(
        userId: StreamVideo.instance.currentUser.id,
        role: 'host',
      ),
    ],
  );

  if (result.isFailure) {
    debugPrint('Not able to create a call.');
    return;
  }

  // Configure the call to allow users to join before it starts by setting a future start time
  // and specifying how many seconds in advance they can join via `joinAheadTimeSeconds`
  final updateResult = await call.update(
    startsAt: DateTime.now().toUtc().add(const Duration(seconds: 120)),
    backstage: const StreamBackstageSettings(
      enabled: true,
      joinAheadTimeSeconds: 120,
    ),
  );

  if (updateResult.isFailure) {
    debugPrint('Not able to update the call.');
    return;
  }

  // Set some default behaviour for how our devices should be configured once we join a call
  final connectOptions = CallConnectOptions(
    camera: TrackOption.enabled(),
    microphone: TrackOption.enabled(),
  );

  // Our local app user can join and receive events
  await call.join(connectOptions: connectOptions);

  // Skip navigation if this widget was disposed while awaiting.
  if (!mounted) return;

  Navigator.of(context).push(
    MaterialPageRoute(
      builder: (context) => LiveStreamScreen(livestreamCall: call),
    ),
  );
}
```

For livestream calls the backstage mode is enabled by default. You can change it in the Stream Video Dashboard or by updating the call settings in the code.

```dart
await call.update(
  backstage: const StreamBackstageSettings(
    enabled: false,
  ),
);
```

If backstage mode is enabled, the call hosts can join and see each other but the call will be invisible to others until `call.goLive()` is called.

Step 4 - Building the livestream screen

Now let's build the livestream screen, which shows the live video feed and tracks the viewer count in real time. We will also create UI elements for the possible states of the livestream: when it has not started yet and is in backstage mode, when it is live, and when it has ended.

To implement this, we'll create a widget that takes a livestream call object as a parameter.

By using the PartialCallStateBuilder, our widget can react to relevant changes in the livestream state, such as the livestream being in backstage or ended.

Let's create a new file called livestream_screen.dart and add the following code to implement our livestream screen:

```dart
import 'package:flutter/material.dart';
import 'package:stream_video_flutter/stream_video_flutter.dart';

class LiveStreamScreen extends StatefulWidget {
  const LiveStreamScreen({
    super.key,
    required this.livestreamCall,
  });

  final Call livestreamCall;

  @override
  State<LiveStreamScreen> createState() => _LiveStreamScreenState();
}

class _LiveStreamScreenState extends State<LiveStreamScreen> {
  @override
  Widget build(BuildContext context) {
    return PartialCallStateBuilder(
      call: widget.livestreamCall,
      selector: (state) =>
          (isBackstage: state.isBackstage, endedAt: state.endedAt),
      builder: (context, callState) {
        return Scaffold(
          body: Builder(
            builder: (context) {
              if (callState.isBackstage) {
                return BackstageWidget(
                  call: widget.livestreamCall,
                );
              }

              if (callState.endedAt != null) {
                return LivestreamEndedWidget(
                  call: widget.livestreamCall,
                );
              }

              return LivestreamLiveWidget(
                call: widget.livestreamCall,
              );
            },
          ),
        );
      },
    );
  }
}
```

This screen uses a PartialCallStateBuilder to reactively update the UI based on changes in the livestream state. The next step is to implement the different elements of the screen, which will display the backstage environment and the livestream video feed.

We'll start with the BackstageWidget which will display a countdown timer and a message indicating that the livestream is starting soon and the number of participants waiting to join.

We'll also add a button to transition the call from backstage mode to live mode or to leave the call.

By calling call.goLive() the call will transition from backstage mode to live mode and allow other participants to join the call.

By default users can only join live calls. If you want to allow users to join before the livestream starts, you can set the joinAheadTimeSeconds parameter when creating the call.

All permissions can also be adjusted in the Stream Video Dashboard.

```dart
import 'package:intl/intl.dart';

class BackstageWidget extends StatelessWidget {
  const BackstageWidget({
    super.key,
    required this.call,
  });

  final Call call;

  @override
  Widget build(BuildContext context) {
    return PartialCallStateBuilder(
      call: call,
      // Count everyone in the call who is not a host.
      selector: (state) =>
          state.callParticipants.where((p) => !p.roles.contains('host')).length,
      builder: (context, waitingParticipantsCount) {
        return Center(
          child: Column(
            spacing: 8,
            mainAxisAlignment: MainAxisAlignment.center,
            children: [
              PartialCallStateBuilder(
                  call: call,
                  selector: (state) => state.startsAt,
                  builder: (context, startsAt) {
                    return Text(
                      startsAt != null
                          ? 'Livestream starting at ${DateFormat('HH:mm').format(startsAt.toLocal())}'
                          : 'Livestream starting soon',
                      style: Theme.of(context).textTheme.titleLarge,
                    );
                  }),
              if (waitingParticipantsCount > 0)
                Text('$waitingParticipantsCount participants waiting'),
              ElevatedButton(
                onPressed: () {
                  call.goLive();
                },
                child: const Text('Go Live'),
              ),
              ElevatedButton(
                onPressed: () {
                  call.leave();
                  Navigator.pop(context);
                },
                child: const Text('Leave Livestream'),
              ),
            ],
          ),
        );
      },
    );
  }
}
```
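To make the `joinAheadTimeSeconds` setting more concrete, here is a minimal plain-Dart sketch of the timing arithmetic, assuming the join window simply opens `joinAheadTimeSeconds` before `startsAt`. The helper name `canJoinAhead` is hypothetical and not part of the Stream SDK; it only illustrates the timing and is not how the SDK or backend enforces the rule.

```dart
/// Hypothetical helper (not part of the Stream SDK): returns true once `now`
/// falls inside the join-ahead window that precedes `startsAt`.
bool canJoinAhead({
  required DateTime now,
  required DateTime startsAt,
  required int joinAheadTimeSeconds,
}) {
  // The window opens `joinAheadTimeSeconds` before the scheduled start.
  final windowOpensAt =
      startsAt.subtract(Duration(seconds: joinAheadTimeSeconds));

  // Joining is allowed at, or any time after, the moment the window opens.
  return !now.isBefore(windowOpensAt);
}
```

With the values used in Step 3 (a start time 120 seconds in the future and a 120-second join-ahead window), the window would open as soon as the call is updated.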

Now, let's implement the LivestreamEndedWidget, which will display a message indicating that the livestream has ended, along with a list of recordings. Please note that we did not add a way to end the livestream, only to leave it. To end the livestream, use `call.end()`.

```dart
class LivestreamEndedWidget extends StatefulWidget {
  const LivestreamEndedWidget({
    super.key,
    required this.call,
  });

  final Call call;

  @override
  State<LivestreamEndedWidget> createState() => _LivestreamEndedWidgetState();
}

class _LivestreamEndedWidgetState extends State<LivestreamEndedWidget> {
  late Future<Result<List<CallRecording>>> _recordingsFuture;

  @override
  void initState() {
    super.initState();
    // Fetch the recordings once, when the widget is first created.
    _recordingsFuture = widget.call.listRecordings();
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        automaticallyImplyLeading: false,
        leading: IconButton(
          icon: const Icon(Icons.arrow_back),
          onPressed: () {
            widget.call.leave();
            Navigator.pop(context);
          },
        ),
      ),
      body: Center(
        child: Column(
          mainAxisAlignment: MainAxisAlignment.center,
          children: [
            const Text('Livestream has ended'),
            FutureBuilder(
              future: _recordingsFuture,
              builder: (context, snapshot) {
                if (snapshot.hasData && snapshot.data!.isSuccess) {
                  final recordings = snapshot.requireData.getDataOrNull();

                  if (recordings == null || recordings.isEmpty) {
                    return const Text('No recordings found');
                  }

                  return Column(
                    children: [
                      const Text('Watch recordings'),
                      ListView.builder(
                        shrinkWrap: true,
                        itemCount: recordings.length,
                        itemBuilder: (context, index) {
                          final recording = recordings[index];
                          return ListTile(
                            title: Text(recording.url),
                            onTap: () {
                              // open
                            },
                          );
                        },
                      ),
                    ],
                  );
                }

                return const SizedBox.shrink();
              },
            ),
          ],
        ),
      ),
    );
  }
}
```

Make sure the host role has permissions to list the recordings in Stream Dashboard if you want to display them in the app.

To start recording during the call, you can use the call.startRecording() method.

Alternatively you can set the recordings mode to auto in the Stream Video Dashboard, or by updating the call settings in the code.

Finally, let's implement the LivestreamLiveWidget which will display the host's livestream video feed and controls.

```dart
class LivestreamLiveWidget extends StatelessWidget {
  const LivestreamLiveWidget({super.key, required this.call});

  final Call call;

  @override
  Widget build(BuildContext context) {
    return StreamCallContainer(
      call: call,
      callContentWidgetBuilder: (context, call) {
        return PartialCallStateBuilder(
          call: call,
          // Only the hosts' video is shown to viewers.
          selector: (state) => state.callParticipants
              .where((e) => e.roles.contains('host'))
              .toList(),
          builder: (context, hosts) {
            if (hosts.isEmpty) {
              return const Center(
                child: Text("The host's video is not available"),
              );
            }

            return StreamCallContent(
              call: call,
              callAppBarWidgetBuilder: (context, call) => CallAppBar(
                call: call,
                showBackButton: false,
                title: PartialCallStateBuilder(
                  call: call,
                  selector: (state) => state.callParticipants.length,
                  builder: (context, count) => Text(
                    'Viewers: $count',
                  ),
                ),
                onLeaveCallTap: () {
                  call.stopLive();
                },
              ),
              callParticipantsWidgetBuilder: (context, call) {
                return StreamCallParticipants(
                  call: call,
                  participants: hosts,
                );
              },
            );
          },
        );
      },
    );
  }
}
```
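The viewer count in the app bar above counts every participant in the call, hosts included. If you would rather exclude hosts, the same role filter used elsewhere in this tutorial can be applied to the count. The sketch below shows the idea in plain Dart; the `Participant` class and `viewerCount` function are hypothetical stand-ins for illustration, not SDK types.

```dart
/// Hypothetical stand-in for a call participant; the real SDK type carries
/// more fields. Only the role list matters for this example.
class Participant {
  const Participant(this.userId, this.roles);

  final String userId;
  final List<String> roles;
}

/// Counts the participants that do not have the 'host' role.
int viewerCount(Iterable<Participant> participants) =>
    participants.where((p) => !p.roles.contains('host')).length;
```

In the widget, the equivalent selector would filter `state.callParticipants` by role before taking the length, mirroring the selector used in the BackstageWidget.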

If all works as intended, we will be able to create a livestream from the first device.

Error Handling

Livestreaming depends on many factors, such as the network conditions of both the user publishing the stream and the viewers.

Proper error handling is needed so that you can be transparent about the issues the user might be facing.

When the network drops, the SDK tries to reconnect the user to the call. However, if it fails to do that, the status in the CallState becomes disconnected. This gives you the chance to show an alert to the user and provide some custom handling (e.g. a message to check the network connection and try again).

Here's an example of how to do that, by listening to the status change in initState of the LiveStreamScreen widget:

```dart
import 'dart:async';

class _LiveStreamScreenState extends State<LiveStreamScreen> {
  late StreamSubscription<CallState> _callStateSubscription;

  @override
  void initState() {
    super.initState();

    _callStateSubscription = widget.livestreamCall.state.valueStream
        // `distinct` drops an event when the callback returns true, so
        // consecutive states with the same status are skipped.
        .distinct((previous, current) => previous.status == current.status)
        .listen((event) {
      if (event.status is CallStatusDisconnected) {
        // Prompt the user to check their internet connection
      }
    });
  }

  @override
  void dispose() {
    _callStateSubscription.cancel();
    super.dispose();
  }

  @override
  Widget build(BuildContext context) {
    // ...
  }
}
```
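Filtering a stream down to status transitions relies on Dart's `Stream.distinct`, whose optional callback should return true when two consecutive events count as equal (and the later one should therefore be dropped). The following plain-Dart sketch isolates that behaviour; the `ConnectionStatus` enum and `statusTransitions` function are hypothetical names used for illustration and stand in for the SDK's call status types.

```dart
/// Hypothetical status values standing in for the SDK's call status types.
enum ConnectionStatus { connected, reconnecting, disconnected }

/// Collapses consecutive duplicate statuses so that listeners only see
/// actual transitions from one status to another.
Stream<ConnectionStatus> statusTransitions(Stream<ConnectionStatus> statuses) {
  // The callback answers "are these two events the same?".
  // Returning true suppresses the later event.
  return statuses.distinct((previous, next) => previous == next);
}
```

Feeding this function a stream that emits connected, connected, reconnecting, reconnecting, disconnected would yield only the three distinct transitions, so a disconnect alert is shown once rather than on every state update.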

Step 5 - Viewing a livestream (WebRTC)

Stream uses a technology called SFU cascading to replicate your livestream over different SFUs around the world. This makes it possible to reach a large audience in realtime.

To view the livestream for testing, click Create livestream in the Flutter app and click the link below to watch the video in your browser:

This will work if you used the token snippet above. You might need to update the url with the call id you used in your code.

Viewing a livestream in a Flutter application

If you want to view the livestream through a Flutter application, you can use the LivestreamPlayer widget that is built into the Flutter SDK.

Let's add a second button to the home screen to allow users to view the livestream:

```dart
// Declare this field next to createLoadingText in _HomeScreenState:
// String? viewLoadingText;

@override
Widget build(BuildContext context) {
  return Scaffold(
    body: Center(
      child: Column(
        mainAxisAlignment: MainAxisAlignment.center,
        spacing: 16,
        children: [
          ElevatedButton(
            onPressed: createLoadingText == null
                ? () async {
                    setState(
                      () => createLoadingText = 'Creating Livestream...',
                    );
                    await _createLivestream();
                    setState(() => createLoadingText = null);
                  }
                : null,
            child: Text(createLoadingText ?? 'Create a Livestream'),
          ),
          ElevatedButton(
            onPressed: viewLoadingText == null
                ? () async {
                    setState(
                      () => viewLoadingText = 'Joining Livestream...',
                    );
                    // Await the join so the loading text stays visible
                    // until the viewer screen has been pushed.
                    await _viewLivestream();
                    setState(() => viewLoadingText = null);
                  }
                : null,
            child: Text(viewLoadingText ?? 'View a Livestream'),
          ),
        ],
      ),
    ),
  );
}
```

And implement the _viewLivestream method:

```dart
Future<void> _viewLivestream() async {
  // Set up our call object
  final call = StreamVideo.instance.makeCall(
    callType: StreamCallType.liveStream(),
    id: 'REPLACE_WITH_CALL_ID',
  );

  final result = await call.getOrCreate(); // Call object is created

  if (result.isSuccess) {
    // Set default behaviour for a livestream viewer
    final connectOptions = CallConnectOptions(
      camera: TrackOption.disabled(),
      microphone: TrackOption.disabled(),
    );

    // Our local app user can join and receive events
    final joinResult = await call.join(connectOptions: connectOptions);
    if (joinResult case Failure failure) {
      debugPrint('Not able to join the call: ${failure.error}');
      return;
    }

    // Skip navigation if this widget was disposed while awaiting.
    if (!mounted) return;

    Navigator.of(context).push(
      MaterialPageRoute(
        builder: (context) => Scaffold(
          appBar: AppBar(
            title: const Text('Livestream'),
            leading: IconButton(
              icon: const Icon(Icons.arrow_back),
              onPressed: () {
                call.leave();
                Navigator.of(context).pop();
              },
            ),
          ),
          body: LivestreamPlayer(call: call),
        ),
      ),
    );
  } else {
    debugPrint('Not able to create a call.');
  }
}
```

With this implementation the user will see our default UI for a livestream viewer with a back button to go back to the home screen.

The LivestreamPlayer widget includes most of the controls and information needed for viewing a livestream, so building a viewing interface takes very little effort.

To test this you should create 2 different users for the host and the viewer.

Step 6 (Optional) - Start HLS stream

Stream offers two flavors of livestreaming, WebRTC-based livestreaming and RTMP-based livestreaming. WebRTC based livestreaming allows users to easily start a livestream directly from their phone and benefit from ultra low latency.

The final piece of livestreaming using Stream is support for HLS or HTTP Live Streaming. HLS, unlike WebRTC based streaming, tends to have a 10 to 20 second delay but offers video buffering under poor network conditions.

To enable HLS support, your call must first be placed into "broadcasting" mode using the call.startHLS() method.

We can then obtain the HLS URL by reading hlsPlaylistUrl from call.state:

```dart
final result = await call.startHLS();
if (result.isSuccess) {
  final url = call.state.value.egress.hlsPlaylistUrl;

  //...
}
```

With the HLS URL, your call can be broadcast to most livestreaming platforms.

RTMP Livestreaming

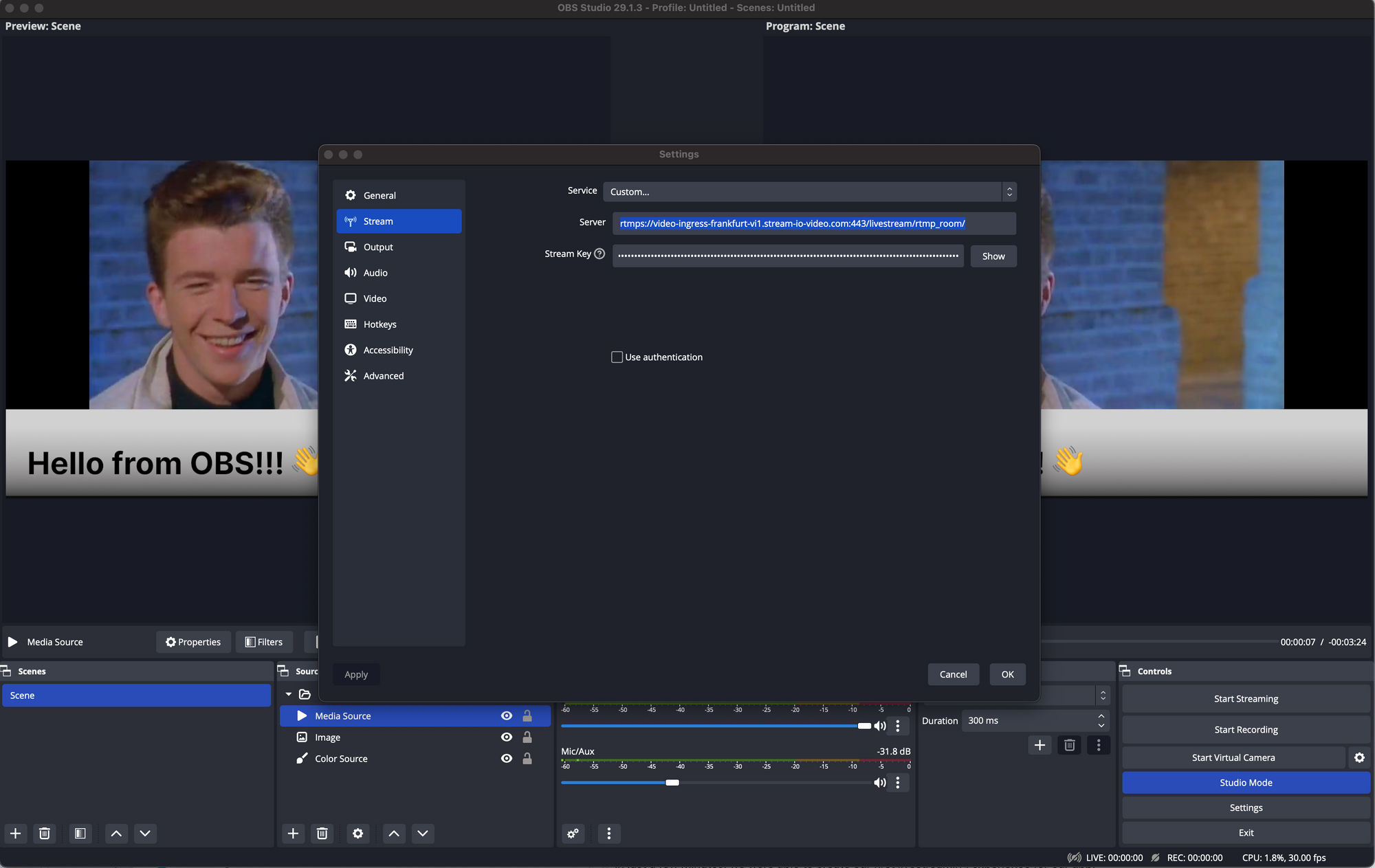

For more advanced livestreaming configurations such as cases where multiple cameras may be required or different scenes and animations, streaming tools like OBS can be used together with Stream video using RTMP (Real Time Messaging Protocol).

By default, when a call is created, it is given a dedicated RTMP URL which can be used by most common streaming platforms to inject video into the call. To configure RTMP and OBS with Stream, two things are required:

- The RTMP URL of the call

- A "streaming key" comprised of your application's API Key and User Token in the format `apikey/usertoken`

With these two pieces of information, we can update the settings in OBS then select the "Start Streaming" option to view our livestream in the application.

⚠️ A user with the name and user token provided to OBS will appear in the call. It is worth creating a dedicated user object for OBS streaming.
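As a small illustration of the `apikey/usertoken` format described above, the following plain-Dart sketch assembles the streaming key from its two parts. The `buildStreamingKey` helper is hypothetical and not part of the Stream SDK; the empty-value check is an added assumption to catch unfilled credentials early.

```dart
/// Hypothetical helper (not part of the Stream SDK): joins the API key and
/// the user token into the `apikey/usertoken` streaming key format.
String buildStreamingKey({
  required String apiKey,
  required String userToken,
}) {
  // Assumption: neither part may be empty, otherwise the key is unusable.
  if (apiKey.isEmpty || userToken.isEmpty) {
    throw ArgumentError('apiKey and userToken must not be empty');
  }
  return '$apiKey/$userToken';
}
```

The resulting string is what you would paste into the stream key field in OBS, ideally using the token of the dedicated OBS user mentioned above.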

Recap

Find the complete code for this tutorial on the Flutter Video Tutorials Repository.

Stream Video allows you to quickly build in-app low-latency livestreaming in Flutter. Our team is happy to review your UI designs and offer recommendations on how to achieve it with the Stream SDKs.

To recap what we've learned:

- WebRTC is optimal for latency, while HLS is slower, but buffers better for users with poor connections.

- You set up a call with `final call = client.makeCall(callType: StreamCallType.liveStream(), id: callID)`.

- The call type `livestream` controls which features are enabled and how permissions are set up.

- The livestream call has backstage mode enabled by default. This allows you and your co-hosts to set up your mic and camera before allowing people in.

- When you join a call with `call.join()`, realtime communication is set up for audio & video. Remember to set the `connectOptions` appropriately.

- Data in `call.state` and `call.state.value.callParticipants` makes it easy to build your own UI.

Calls run on Stream's global edge network of video servers. Being closer to your users improves the latency and reliability of calls. The SDKs enable you to build livestreaming, audio rooms and video calling in days.

We hope you've enjoyed this tutorial and please feel free to reach out if you have any suggestions or questions.

Final Thoughts

In this video app tutorial we built a fully functioning Flutter livestreaming app with our Flutter SDK component library. We also showed how easy it is to customize the behavior and the style of the Flutter video app components with minimal code changes.

Both the video SDK for Flutter and the API have plenty more features available to support more advanced use-cases.