It's been just over a month since we released the first version of Vision Agents, our new open-source framework designed to help developers quickly build video AI applications using their favourite AI tool and Stream. Since the initial release, we've been hard at work adding new plugins, simplifying the code, and working with the community to find and squash bugs.

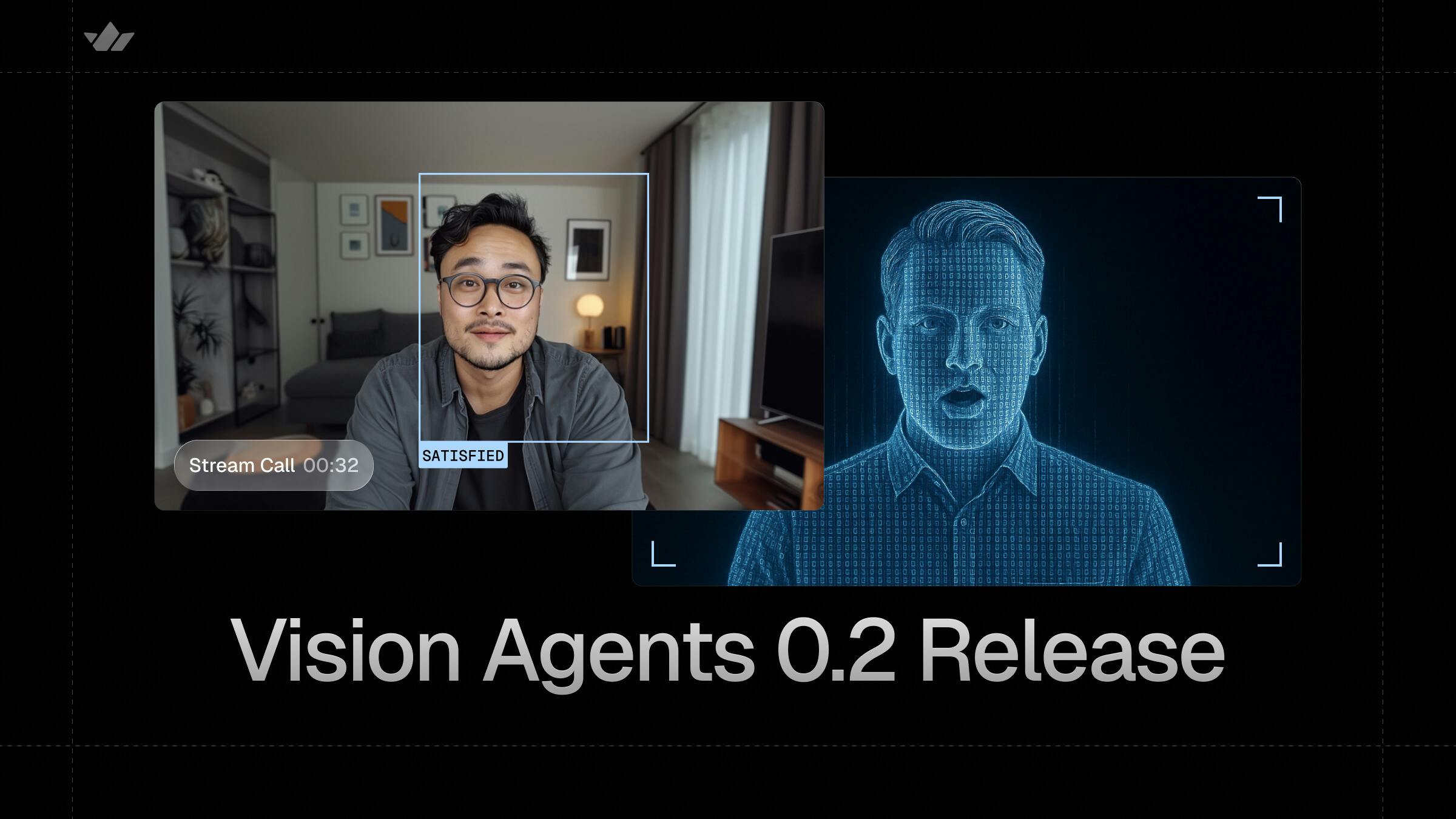

Today, we're excited to release v0.2 of Vision Agents, which brings seven new plugins across avatars, text-to-speech, turn-taking, VLMs and more.

Here's a quick look at Vision Agents in action, counting squats in real-time using video input and your preferred LLM/VLM stack.

What's New in v0.2

Moondream VLM: On Device, Cloud, Caption, VQA, and Detect

We've added full support for Moondream, a compact vision-language model optimized for edge devices and low-latency video processing.

This addition enables agents to perform real-time visual tasks, such as captioning, object recognition, or interactive scene analysis, with minimal resource demands. It's plug-and-play: update to the latest release and configure it in your LLM pipeline for instant use.

1234567891011121314processor = moondream.CloudDetectionProcessor( api_key="your-api-key", # or set MOONDREAM_API_KEY env var detect_objects="person", # or ["person", "car", "dog"] for multiple fps=30 ) # Use in an agent agent = Agent( edge=getstream.Edge(), agent_user=User(name="Vision Assistant"), instructions="You are a helpful vision assistant.", llm=gemini.Realtime(fps=10), processors=[processor], )

HeyGen Avatars

HeyGen enables developers to integrate high-quality, lifelike avatars into their applications with just a few lines of code.

Using the HeyGen plugin with Vision Agents, developers can integrate real-time streaming avatars directly into their pipeline with just a single line of code. Vision Agents will automatically handle the coordination between speech, text, and LLM logic behind the scenes.

123456789101112131415agent = Agent( edge=getstream.Edge(), agent_user=User(name="Avatar Assistant"), instructions="You're a friendly AI assistant.", llm=gemini.LLM("gemini-2.0-flash-exp"), stt=fast_whisper.STT(), tts=inworld.TTS() processors=[ heygen.AvatarPublisher( avatar_id="default", quality=heygen.VideoQuality.HIGH ) ] )

LLM and VLM Improvements: Gemini, OpenAI, Baseten — Pick Your Poison

Whether you're planning to use Gemini, OpenAI, or a model API like Baseten together with the latest version of Qwen 3-VL, this release brings major improvements to the latency and audio-video handling under the hood.

The framework will intelligently detect and switch between different modes, ranging from a traditional LLM like Gemini-2 to the latest version of Qwen's VLM model, while managing the required services (TTS, STT, Realtime) for each.

1234567891011# Initialize the Baseten VLM llm = openai.ChatCompletionsVLM(model="qwen3vl") # Create an agent with video understanding capabilities agent = Agent( edge=getstream.Edge(), agent_user=User(name="Video Assistant", id="agent"), instructions="You're a helpful video AI assistant. Analyze the video frames and respond to user questions about what you see.", llm=llm, stt=deepgram.STT(), tts=elevenlabs.TTS(),

Looking Ahead

It's been great working with the community on the 0.2 milestone, whether it's issues developers are raising, the many AI companies reaching out, or feedback directly on X. A great example of this has been collaborating with the awesome team at Inworld AI to bring the state of the art TTS models to Vision Agents as an our of the box plugin for our community.

Our goal remains the same: help developers bring video AI capabilities to their apps in a fraction of the time and code. Our focus for 0.3 and 0.4 will be on continuing to improve the API surface area, further reducing latency and perceived latency times, and providing guides and helpers to enable developers to quickly deploy Vision Agents to their preferred hosting provider.