Building a video SDK is an interesting engineering challenge: a geek’s dream of low-level concurrency primitives, synchronization mechanisms, and intelligent throttling, all working in tight loops. On top of that, you’re negotiating codecs (H.264, VP8/9, AV1), managing lock-free queues to maintain frame flow, and performing bandwidth estimation to ensure video remains smooth and audio stays in sync.

Video Challenges

It’s all fun and exciting until you face the reality of what it means to ship something that has to “just work” across thousands of devices, networks, and edge cases. Unlike a typical mobile SDK, where errors might result in a missing button or delayed animation, video SDKs thrive or fail based on their resilience and the quality of the experience. If the video drops, the audio desyncs, or the frames stutter, the user notices it instantly.

Many things can go wrong with video calls:

- Edge cases: Devices with unusual hardware codecs, OS-level quirks, or vendor-specific modifications.

- Network issues: Bandwidth fluctuations, packet loss, and jitter, all of which need to be handled efficiently in real time. Organizations also put firewalls in place that block various ports, which can prevent the real-time connection a call needs from being established.

- Platform divergences: What works on iOS may behave differently on Android, and even two Android devices from the same brand can exhibit inconsistent behaviour. Add to this the different browsers and the subtle differences in their WebRTC implementations, and you get even more complexity to handle.

- Many other issues: From CallKit to permission management, numerous problems can arise during a call.

Our Approach to Testing

To ensure the quality of our SDKs and reduce regressions, we run the usual types of tests:

- Unit tests ensure that the building blocks of our SDK, such as signalling, state machines, and reconnection logic, work as expected in isolation.

- Snapshot tests help us ensure UI consistency, as rendering controls and overlays across different screen densities and orientations can introduce subtle bugs.

- Integration tests simulate end-to-end flows, ensuring that when a user joins a call, negotiates media, and streams video, all components work together seamlessly.

We have significant test coverage, and we run the test suites on each PR.

However, given the nature of video, none of these tests guarantees that things will run smoothly all the time.

TestDevLab Collaboration

To tackle this challenge, we need to test across a wide range of devices, including flagship phones, budget models, and tablets, with different hardware encoders, screen resolutions, and OS versions.

And devices are only half the story: video quality changes dramatically when you factor in network conditions. A stream that looks great on Wi-Fi can degrade quickly on congested 4G or under heavy packet loss.

To obtain objective and reproducible results, we partnered with TestDevLab, a well-established company specialising in media quality assurance. Their tooling and expertise enable us to simulate diverse network conditions, measure video/audio quality using standardised metrics, and run repeatable scenarios across a large device lab.

We also ran comparisons with Google Meet under the same testing conditions, and we share those results in this post.

This collaboration provided us with both the breadth of coverage and the scientific rigour we needed to transform the subjective “it looks good” impression into data-driven improvements.

Testing Plan

To make testing systematic and repeatable, we structured our plan around a few scenarios:

- Run on a wide range of Android devices to account for the vast ecosystem fragmentation.

- Define a cross-platform call matrix covering iOS, Android, mobile browsers, macOS, and Windows.

- Vary network bandwidth and packet loss across thresholds to observe how call quality degrades and recovers.

- Simulate distant SFU (Selective Forwarding Unit) servers to measure the impact of latency and routing on call experience.

MOS Score

We use the Mean Opinion Score (MOS) to rate video and audio call quality. MOS is a standardised method for measuring the perceived quality of audio or video as judged by human listeners or viewers.

Test participants rate the quality on a scale from 1 (bad) to 5 (excellent), and the average becomes the MOS. While the scale theoretically ranges up to 5.0, in practice, even reference-quality audio or video rarely reaches that level due to variations in human perception and bias.

As a result, the practical upper bound is around 4.5, which is generally considered the best achievable score in real-world systems. MOS for audio reflects clarity, distortion, and naturalness of speech, while for video, it captures resolution, smoothness, and the presence of visual artifacts.
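To make the arithmetic concrete, here is a minimal Python sketch of how individual ratings turn into a MOS. The function name and the sample ratings are hypothetical and only illustrate the averaging step; they are not taken from the test data.

```python
def mean_opinion_score(ratings):
    """Average a list of 1-5 ratings into a MOS, rounded to two decimals."""
    if not ratings:
        raise ValueError("at least one rating is required")
    for rating in ratings:
        if not 1 <= rating <= 5:
            raise ValueError(f"rating out of range: {rating}")
    return round(sum(ratings) / len(ratings), 2)


# Hypothetical ratings from five viewers of the same clip.
sample_ratings = [4, 5, 4, 3, 4]
print(mean_opinion_score(sample_ratings))
```

Because every rater would have to give a perfect 5 for the average to reach 5.0, a single lower rating is enough to pull the score below the theoretical maximum.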

Here’s an example of the MOS score values:

Android Testing

For the Android compatibility tests, we used the following methodology:

- Initiate the call on the receiver device, a MacBook Pro.

- Join the call through the test device.

- Point the test device at a media source: an external device playing a video with sound on a loop.

- After joining, switch to the back camera and record the call for 1 minute.

- Test functionality of UI elements.

- After the 2nd minute, leave the call.

- After the call, test if the speaker works.

Testing Results

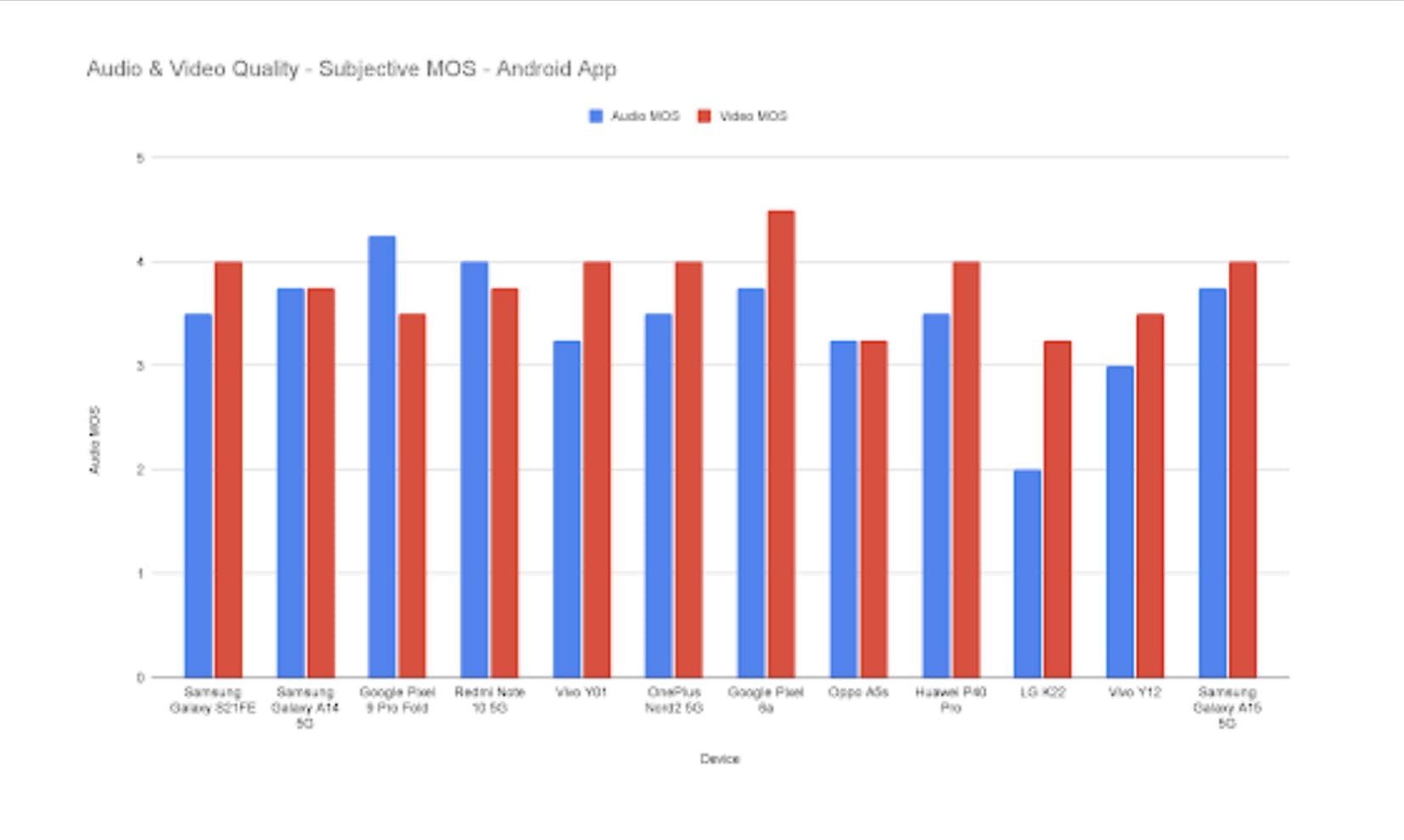

Here’s a chart with the results of MOS scores from the Android testing.

Some highlights from the audio testing:

- Google Pixel 9 Pro Fold (4.25): Clear, artefact-free sound.

- Redmi Note 10 5G (4): Smooth adjustment, mild noise cancelling.

- Noise cancellation often introduces artefacts or unnatural emphasis (especially on sibilants like "s", "z", "c").

- Several devices had unstable loudness or muffling (e.g., Vivo Y01, Galaxy A15 5G).

Some highlights from the video testing:

- Google Pixel 6a (4.5): High clarity, minimal issues.

- Vivo Y01 (4): Despite audio issues, the back camera output was solid when focused.

- Freezes after contrast changes or camera switching.

- Autofocus problems (e.g., Vivo Y01, Vivo Y12).

In a nutshell, the experience was mostly positive, with a few things that we needed to improve.

Network Tests

For the network tests, the following setup was used:

- An iOS device is set up with a mobile hotspot, Network Link Conditioner, and the required custom network profiles.

- The sender test device is connected to a regular, unlimited Wi-Fi network.

- The receiver test device is connected to the mobile hotspot of the iOS network device, which is initially set to the uncapped network profile.

- Both devices are pointed towards a dynamic media source (a Desktop monitor with video playing).

- Default app settings are enabled throughout the call.

With this setup, we were able to utilise the iOS device’s custom network profile to adjust the packet loss and network bandwidth.

The calls were six minutes long, and after each minute, there was a change in the network capabilities.
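As a rough illustration, the per-minute changes can be described as a schedule of network profiles. The sketch below is an assumption about how such a schedule could be modelled in Python; the `NetworkProfile` type and the `profile_at` helper are hypothetical names, and the steps mirror the bandwidth and packet-loss values reported in the results later in this post.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NetworkProfile:
    label: str
    bandwidth_kbps: Optional[int] = None  # None means uncapped
    packet_loss_percent: int = 0


# One profile per minute of a six-minute call.
BANDWIDTH_SCHEDULE = [
    NetworkProfile("Uncapped"),
    NetworkProfile("1 Mbps", bandwidth_kbps=1000),
    NetworkProfile("500 kbps", bandwidth_kbps=500),
    NetworkProfile("250 kbps", bandwidth_kbps=250),
    NetworkProfile("100 kbps", bandwidth_kbps=100),
    NetworkProfile("Uncapped"),
]

PACKET_LOSS_SCHEDULE = [
    NetworkProfile("Uncapped"),
    NetworkProfile("5% loss", packet_loss_percent=5),
    NetworkProfile("10% loss", packet_loss_percent=10),
    NetworkProfile("20% loss", packet_loss_percent=20),
    NetworkProfile("40% loss", packet_loss_percent=40),
    NetworkProfile("Uncapped"),
]

STEP_SECONDS = 60


def profile_at(elapsed_seconds, schedule):
    """Return the profile that is active at a given time into the call."""
    if elapsed_seconds < 0:
        raise ValueError("elapsed_seconds must be non-negative")
    # Clamp to the last step so times past the end reuse the final profile.
    index = min(int(elapsed_seconds // STEP_SECONDS), len(schedule) - 1)
    return schedule[index]


print(profile_at(130, BANDWIDTH_SCHEDULE).label)
```

Ending each schedule on an uncapped step is what makes it possible to measure recovery, not just degradation.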

Device Matrix

Since there are many different platforms with various codecs and other video/audio capabilities, we used a device matrix for testing.

We used both high-end and low-end devices across iOS, Android, macOS, and Windows. All the different combinations added up to a total of 496 video calls.
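A call matrix like this is easy to enumerate in code. The Python sketch below is purely illustrative: the endpoint list, the scenario names, and the `build_call_matrix` helper are assumptions, and it does not reproduce the exact 496-call matrix used in the tests.

```python
from itertools import product

# Hypothetical endpoints: every platform in a high-end and a low-end variant.
PLATFORMS = ["iOS", "Android", "macOS", "Windows"]
TIERS = ["high-end", "low-end"]
ENDPOINTS = [f"{platform} {tier}" for platform, tier in product(PLATFORMS, TIERS)]

SCENARIOS = ["bandwidth change", "packet loss"]
APPS = ["Stream Video", "Google Meet"]


def build_call_matrix(endpoints, scenarios, apps):
    """Enumerate every (app, sender, receiver, scenario) combination."""
    return [
        (app, sender, receiver, scenario)
        for app in apps
        for sender, receiver in product(endpoints, repeat=2)
        for scenario in scenarios
    ]


matrix = build_call_matrix(ENDPOINTS, SCENARIOS, APPS)
print(len(matrix), "calls to schedule")
```

Generating the matrix instead of maintaining it by hand makes it harder to skip a sender and receiver pairing by accident.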

In parallel, under the same conditions, we tested the Google Meet app and compared it to Stream Video on the same platform.

Testing Results

High-level summary of the testing:

- Stream Video quality was on a similar level to Google Meet.

- On uncapped bandwidth, it sometimes even performed better.

- On lower bandwidths / higher packet loss, Google Meet adjusted better.

- When there was not enough bandwidth, Google Meet prioritized audio, while Stream attempted to support both, resulting in poor audio and video quality.

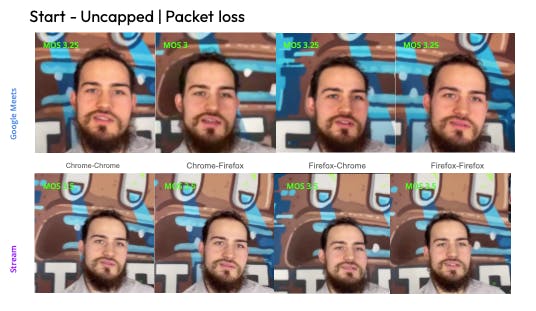

Here’s an example visual comparison of how the MOS value changes over time when we shift the packet loss, for both Stream and Google Meet.

iOS Example

To illustrate the results, here’s a more detailed example for iOS. The same patterns are observed in tests on all platforms.

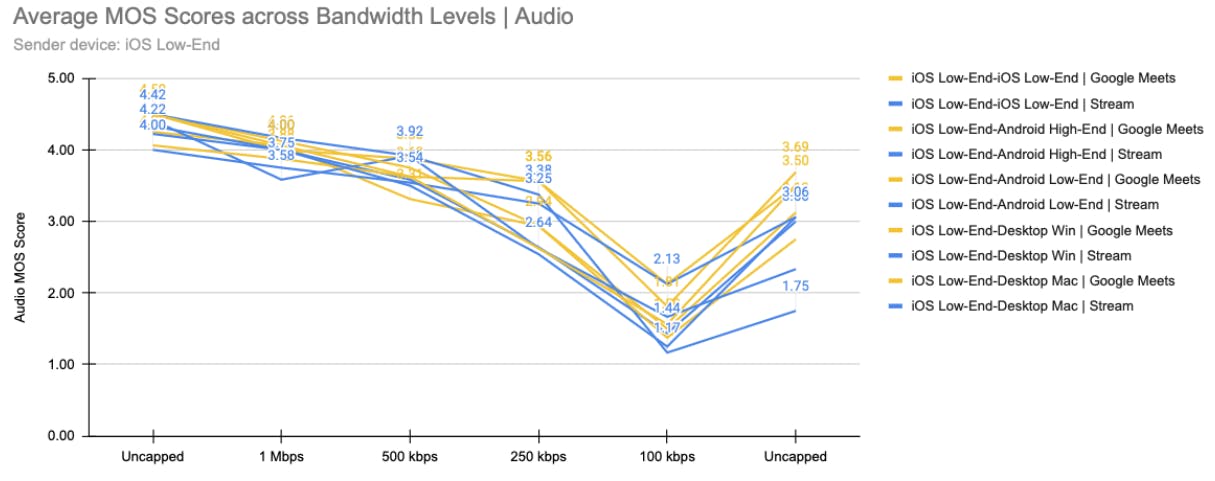

Audio Results Bandwidth Change

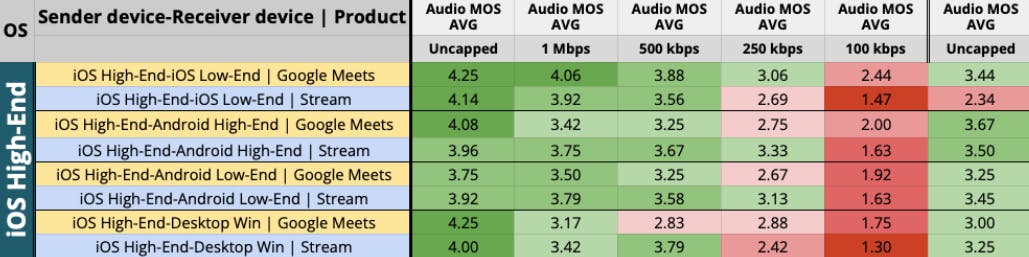

First, let’s see the MOS scores for audio when changing the bandwidth:

In the first column, you can see the different combinations of iOS devices as senders, and different receiving devices. The yellow rows show values for Google Meet, while the blue ones display values for Stream Video.

The remaining columns represent the MOS values based on the bandwidth:

- Uncapped - with no restrictions on the bandwidth, the values are similar. In some cases, Stream Video is preferable, while in others, Google Meet is the better option. However, the differences are minor in both cases, which means audio works well on both.

- 1 Mbps - The results are similar to the uncapped test, but with lower MOS values, which is expected and still acceptable.

- 500 kbps - Here, we also obtain similar results, but the Google Meet call between iOS and desktop Windows yields a poor value of 2.83, indicating signs of degradation in the call experience.

- 250 kbps - At this point, Stream Video starts to show lower quality compared to Google Meet on more devices.

- 100 kbps - At the lowest bandwidth we used for testing, both MOS scores are low, but Google Meet has higher values across devices.

- Uncapped - We recover the good connection again, and this column shows how fast we recover the audio from the lowest to uncapped bandwidth.

In conclusion, Stream Video performs as well as Google Meet (and sometimes better) in good bandwidth conditions, but worse when bandwidth is severely constrained.

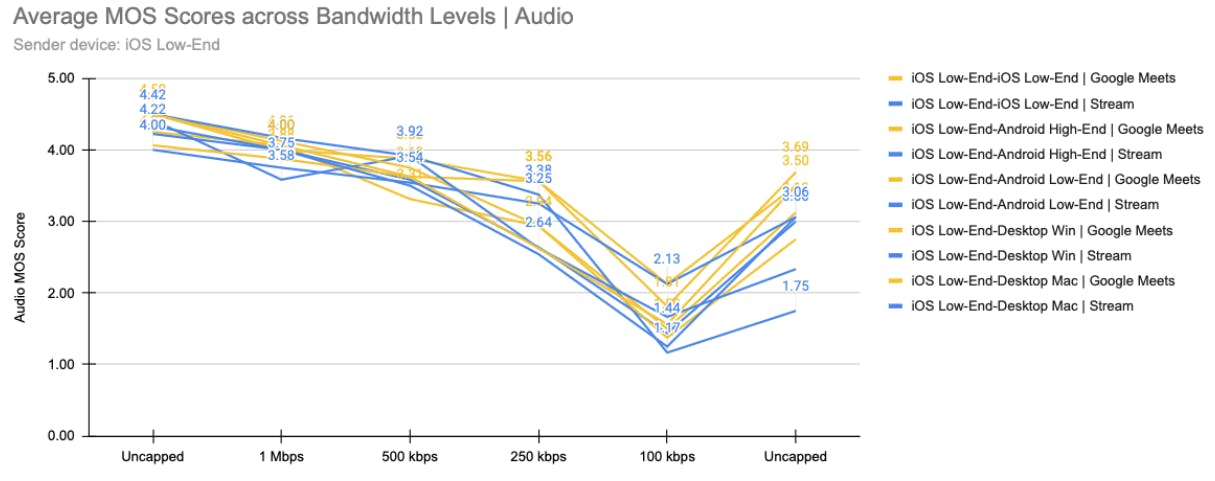

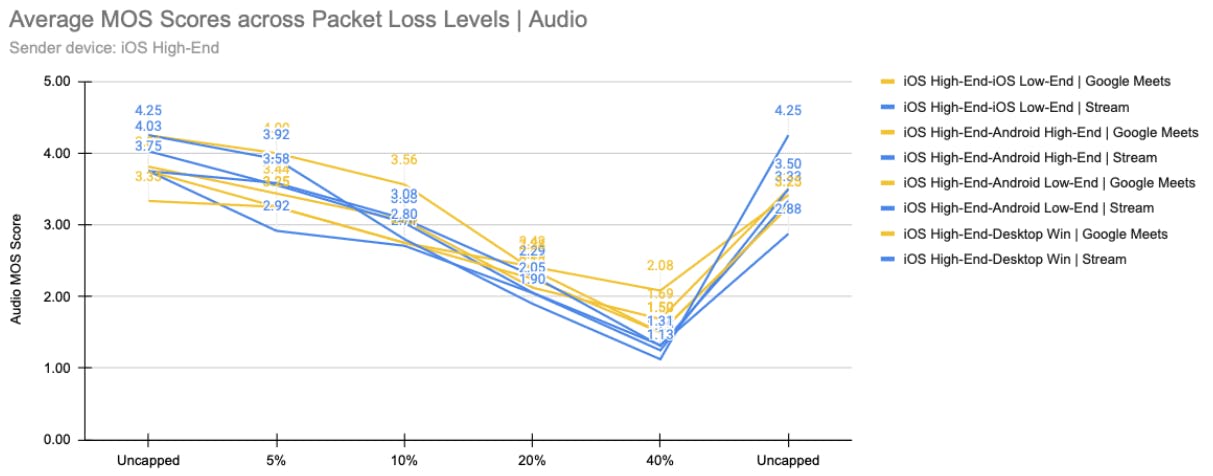

Audio Results Packet Loss

Next, let’s examine the MOS scores for audio when varying the packet loss.

The results are similar to the ones for changing the bandwidth.

- Uncapped - both solutions perform similarly, with Stream Video scoring better most of the time.

- 5% and 10% packet loss - similar performance, with lower MOS scores.

- 20% and 40% packet loss - Stream Video’s quality degrades faster.

- Uncapped again - both recover, although the iOS high-end to low-end call still has a bad MOS value.

The conclusion here is similar to the bandwidth change tests: Google Meet handles degraded network conditions better because it prioritizes audio.

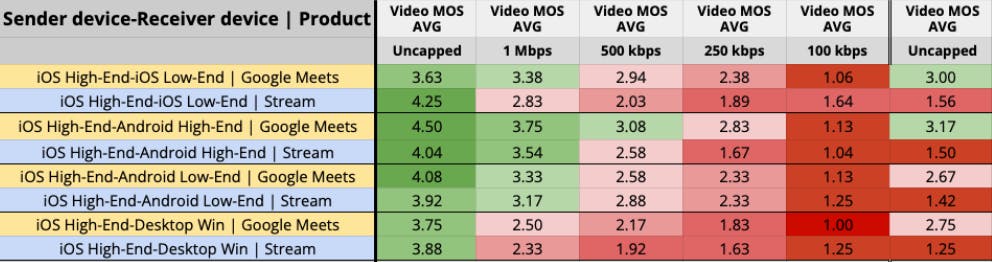

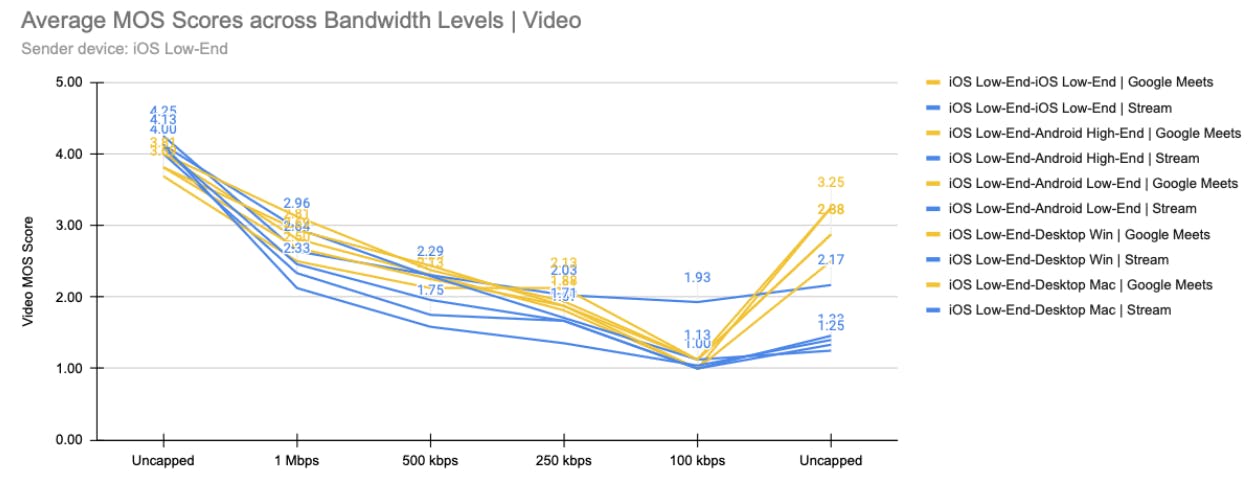

Video Results Bandwidth Change

Here are the MOS scores for Video calls with Bandwidth change:

The scores are similar to those of the audio tests. However, at the lowest bandwidth of 100 kbps, Google Meet’s scores are mostly 1.0, which means there’s no video at all. By dropping video at that point, Google Meet leaves the available bandwidth to audio, which is how it achieved better audio scores.

Another interesting thing is that Google Meet recovers the video more quickly when the uncapped bandwidth is restored.

Video Results Packet Loss

Finally, here are the results of the video performance with packet loss applied.

Again, the results were similar to those of the other tests. It’s worth mentioning that at a 40% packet loss rate, the video is practically unusable on both platforms. iOS high-end to iOS low-end also had lower scores here on Stream Video.

SFU Tests

We also tested how video performs if you are assigned a distant SFU (Selective Forwarding Unit) for a call, compared to one that is close to you.

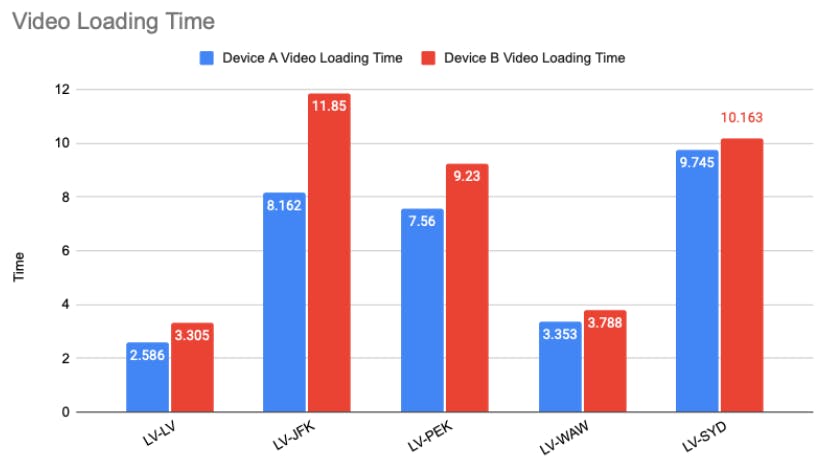

The tests were performed in Latvia. Using some custom code, we forced the selection of a distant SFU in other locations, such as New York and Sydney, to measure the potential impact of a routing algorithm that picks the wrong SFU on the video experience.

In the initial tests, when both SFUs are located in Latvia, the video loads relatively quickly. However, if one of them is in New York, for example, the second device can take over 10 seconds to load the video. Quality is also lower in those cases, since the data has farther to travel.

Fortunately, our edge network covers most of the world, so these situations should be rare in practice.
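To show why routing matters, here is a minimal Python sketch of latency-based selection: probe each candidate SFU a few times and pick the one with the lowest median round-trip time. This is an assumption for illustration only, not our actual routing algorithm, and the region names and RTT samples are made up.

```python
from statistics import median


def pick_nearest_sfu(rtt_samples_ms):
    """Return the region whose median round-trip time is lowest.

    rtt_samples_ms maps a region name to a list of RTT probes in milliseconds.
    """
    if not rtt_samples_ms:
        raise ValueError("no SFU candidates provided")
    # Ignore candidates that did not answer any probe.
    reachable = {region: samples for region, samples in rtt_samples_ms.items() if samples}
    if not reachable:
        raise ValueError("no SFU candidate returned any samples")
    return min(reachable, key=lambda region: median(reachable[region]))


# Hypothetical probes taken from a client in Latvia.
probes = {
    "riga": [12, 15, 11],
    "new-york": [110, 105, 120],
    "sydney": [290, 310, 300],
}
print(pick_nearest_sfu(probes))
```

Using the median instead of a single probe keeps one slow or lost packet from sending a user to a far-away server.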

Steps Taken

After the thorough testing with TestDevLab, we reached two key conclusions:

- Performance parity with Google Meet – in most cases, our SDK matched (and sometimes exceeded) Google Meet, which is a significant milestone.

- Room for improvement on poor networks – we needed to deliver a more resilient experience for users in challenging conditions, such as making calls on trains or in areas with unstable connectivity.

Based on these findings, we implemented several improvements:

- Automatic video track pausing – when bandwidth drops, our SDK now prioritizes audio by pausing video, keeping conversations smooth even on bad connections.

- Smarter bandwidth estimation – we refined our algorithm to measure available bandwidth more accurately, resulting in more adaptive quality adjustments.

- Targeted bug fixes and optimizations – testing surfaced issues across both UI components and low-level clients, which we systematically resolved.
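The first two improvements can be pictured with a small Python sketch: smooth the bandwidth measurements, then pause video when the estimate falls below one threshold and resume it only once the estimate climbs back above a higher one. This is a simplified illustration under assumed values, not the SDK’s implementation; the class name, the thresholds, and the smoothing factor are all hypothetical.

```python
class AudioFirstPolicy:
    """Pause video on low bandwidth so that audio keeps flowing."""

    def __init__(self, pause_below_kbps=150, resume_above_kbps=300, smoothing=0.3):
        if pause_below_kbps >= resume_above_kbps:
            raise ValueError("pause threshold must be below resume threshold")
        if not 0 < smoothing <= 1:
            raise ValueError("smoothing must be in (0, 1]")
        self.pause_below_kbps = pause_below_kbps
        self.resume_above_kbps = resume_above_kbps
        self.smoothing = smoothing
        self.estimate_kbps = None
        self.video_paused = False

    def on_sample(self, measured_kbps):
        """Feed one bandwidth measurement; return True while video is paused."""
        if self.estimate_kbps is None:
            self.estimate_kbps = float(measured_kbps)
        else:
            # Exponentially weighted moving average of the measurements.
            self.estimate_kbps = (
                self.smoothing * measured_kbps
                + (1 - self.smoothing) * self.estimate_kbps
            )
        # Two thresholds (hysteresis) prevent rapid pause/resume flapping.
        if not self.video_paused and self.estimate_kbps < self.pause_below_kbps:
            self.video_paused = True
        elif self.video_paused and self.estimate_kbps > self.resume_above_kbps:
            self.video_paused = False
        return self.video_paused


policy = AudioFirstPolicy()
for sample_kbps in [1000, 100, 100, 100, 100, 100, 100, 1000, 1000]:
    state = "paused" if policy.on_sample(sample_kbps) else "playing"
    print(sample_kbps, round(policy.estimate_kbps), state)
```

The gap between the two thresholds is the design choice that matters here: without it, an estimate hovering around a single cut-off would toggle video on and off repeatedly.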

Conclusion

Partnering with an independent, well-established testing company provided us with unbiased insights and helped surface issues that we might have otherwise missed internally. This collaboration directly improved the reliability and quality of our video SDK.

If you’d like to see the results in action, explore our video SDK docs and try Stream Video in your own app.