Redis is one of those remarkable tools that seem able to do everything. Need a lightning-fast in-memory cache? Redis. Need a straightforward database ideal for key-value pairs? Redis. Need a message broker for building out a publish/subscribe chat system?

There’s Redis again, and that is how we’re going to use it today. Redis gives us real-time pub/sub capabilities well suited to chat applications, with very low latency and room to scale. Here, we will pair it with Next.js 15, the latest major release of the framework, and take advantage of its performance improvements.

Understanding Redis and Its Pub/Sub Mechanism

Before we get into the build, let’s take two minutes to understand what will be happening under the hood of our application.

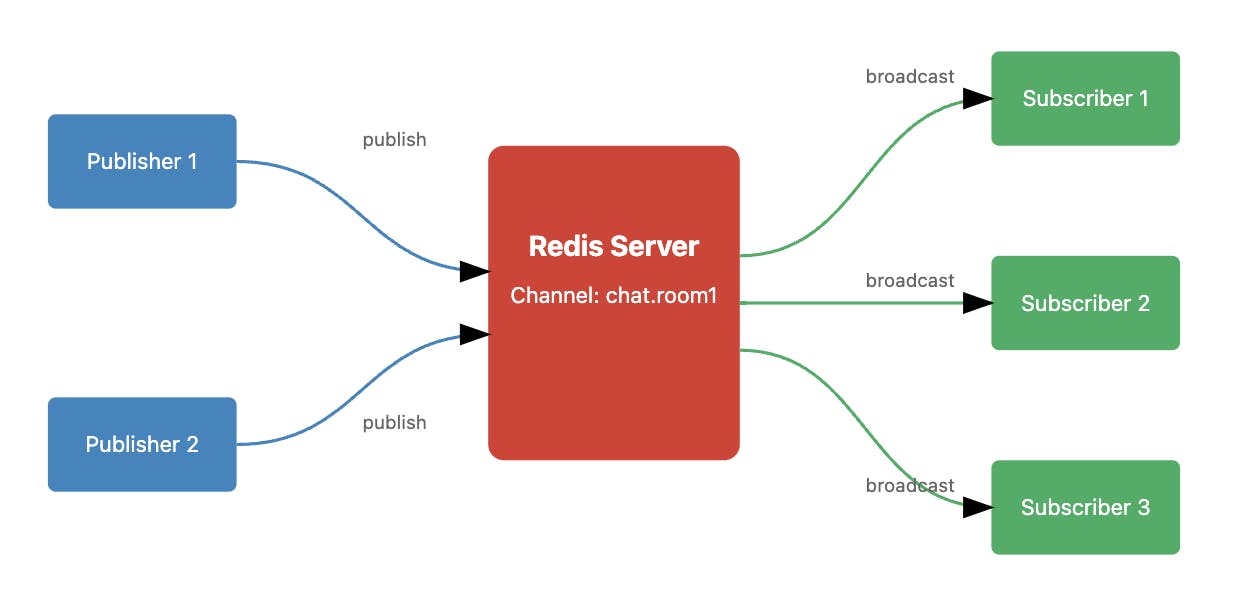

At its core, Redis Pub/Sub uses channel-based messaging: publishers send messages to channels without knowing which subscribers, if any, will receive them. When a client publishes a message to a channel, Redis pushes it to every client currently subscribed to that channel in real time, with very low latency (often well under a millisecond inside Redis itself, before network time is added).

- Publishing in Redis is sending a message to a specific channel, similar to broadcasting on a radio frequency. Any client can publish to any channel and immediately distribute the message to all current subscribers.

- Subscribing is the process of listening to one or more channels for messages. When a client subscribes to a channel, they automatically receive all messages published to that channel after their subscription begins. Multiple clients can subscribe to the same channel, enabling one-to-many communication patterns perfect for chat applications.
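To make the publish/subscribe semantics concrete, here is a toy, in-process model. The `ToyPubSub` class is hypothetical and is not part of Redis or any client library; it only mimics the behaviour described above: fan-out to every current subscriber, and no delivery to anyone who subscribes later.

```typescript
// A toy, in-process model of Redis Pub/Sub semantics (not a Redis client).
type Listener = (message: string) => void;

class ToyPubSub {
  private channels = new Map<string, Set<Listener>>();

  // Register a listener on a channel; returns a function that unsubscribes it.
  subscribe(channel: string, listener: Listener): () => void {
    if (!this.channels.has(channel)) this.channels.set(channel, new Set());
    this.channels.get(channel)!.add(listener);
    return () => {
      this.channels.get(channel)?.delete(listener);
    };
  }

  // Like Redis PUBLISH: deliver to the current subscribers and return how many got it.
  // Nothing is stored, so a client that subscribes later never sees this message.
  publish(channel: string, message: string): number {
    const listeners = this.channels.get(channel);
    if (!listeners) return 0;
    listeners.forEach((listener) => listener(message));
    return listeners.size;
  }
}

const bus = new ToyPubSub();
const received: string[] = [];
bus.publish("lobby", "nobody hears this"); // no subscribers yet: the message is dropped
const unsubscribe = bus.subscribe("lobby", (m) => received.push(`alice got ${m}`));
bus.subscribe("lobby", (m) => received.push(`bob got ${m}`));
bus.publish("lobby", "hello"); // one-to-many: both subscribers receive it
unsubscribe();
bus.publish("lobby", "bye"); // only bob is still subscribed
console.log(received);
```

The first publish reaches nobody, which is the fire-and-forget behaviour discussed next.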

There are a few important considerations when working with Redis Pub/Sub:

- Message Persistence: Redis Pub/Sub works in a fire-and-forget mode, so messages are not stored and cannot be retrieved later. If a subscriber is offline when a message is published, it won't receive that message when it reconnects. This is why we'll implement additional message storage in our chat application.

- Pattern Matching: Redis supports pattern-based subscriptions (the PSUBSCRIBE command) using glob-style patterns (e.g., 'chat.*' would match 'chat.room1', 'chat.room2', etc.), enabling flexible channel organization schemes.

- Performance: Redis Pub/Sub is highly efficient and can fan messages out to large numbers of subscribers with very low latency. However, each subscriber keeps an open connection with state on the Redis server, so it's crucial to manage connections properly in production environments.
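The pattern-matching idea can be sketched by translating a glob into a regular expression. `globToRegExp` and `matchingChannels` are hypothetical helpers, not Redis code, and they deliberately support only `*` (any run of characters) and `?` (exactly one character); real Redis patterns also accept `[...]` character classes and `\` escapes.

```typescript
// Hypothetical helper: convert a simplified Redis-style glob into an anchored RegExp.
function globToRegExp(pattern: string): RegExp {
  const body = pattern
    .split("")
    .map((ch) => {
      if (ch === "*") return ".*"; // any run of characters
      if (ch === "?") return "."; // exactly one character
      // Escape regex metacharacters so characters like "." match literally
      return ch.replace(/[.+^${}()|[\]\\]/g, "\\$&");
    })
    .join("");
  return new RegExp(`^${body}$`);
}

// Return the channels a pattern subscription would cover.
function matchingChannels(pattern: string, channels: string[]): string[] {
  const re = globToRegExp(pattern);
  return channels.filter((channel) => re.test(channel));
}

const channels = ["chat.room1", "chat.room2", "chatXroom3", "news.sports"];
console.log(matchingChannels("chat.*", channels));
```

Because the dot is escaped, `chat.*` covers `chat.room1` and `chat.room2` but not `chatXroom3`.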

This understanding of Redis Pub/Sub's capabilities and limitations will inform our architectural decisions as we build our chat application.

Setting Up Our Next.js 15 Project

Let’s start by setting up Next.js and installing the dependencies we’ll need to run our application. To create a new Next.js project, you can use:

npx create-next-app@latest [project-name]

This will take you through the setup process, asking you questions to determine how you want to use the framework. Here’s how we set this project up:

Would you like to use TypeScript? … No / Yes

Would you like to use ESLint? … No / Yes

Would you like to use Tailwind CSS? … No / Yes

Would you like your code inside a `src/` directory? … No / Yes

Would you like to use App Router? (recommended) … No / Yes

Would you like to use Turbopack for next dev? … No / Yes

Would you like to customize the import alias (@/* by default)? … No / Yes

After that, we can cd into our project directory and install the Upstash library:

npm install @upstash/redis

Upstash is a serverless Redis provider that integrates well with Next.js and edge functions. Its @upstash/redis client sends each command over HTTP instead of holding a persistent TCP connection, which suits serverless environments, and it ships with TypeScript types for Redis operations.

To make our app look a little nicer, we’ll also set up the shadcn UI component library:

npx shadcn@latest init
npx shadcn@latest add button input card

shadcn/ui provides accessible components, many built on Radix UI primitives and styled with Tailwind CSS. Their source is copied into your project, so we can customize them freely, which makes the library a good fit for a modern chat interface without starting from scratch.

To start our app, we can then run:

npm run dev

This will only load the boilerplate code from the Next.js setup. Now let’s start building out our chat application.

Designing a Scalable Group Chat Architecture

Within our root directory, we want to create a lib directory with a redis.ts file inside:

```typescript
// lib/redis.ts
import { Redis } from "@upstash/redis";

const redis = new Redis({
  url: process.env.UPSTASH_REDIS_URL!,
  token: process.env.UPSTASH_REDIS_TOKEN!,
});

interface Message {
  sender: string;
  message: string;
  timestamp: number;
}

export async function publishMessage(room: string, message: Message) {
  await redis.publish(room, JSON.stringify(message));
}

export async function storeMessage(room: string, message: Message) {
  await redis.lpush(`chat:${room}`, JSON.stringify(message));
  await redis.ltrim(`chat:${room}`, 0, 99); // Keep only the last 100 messages
}

export async function getMessages(room: string): Promise<Message[]> {
  // @upstash/redis deserializes stored JSON automatically, so entries arrive as objects
  const entries = await redis.lrange<unknown>(`chat:${room}`, 0, -1);
  return entries
    .filter((entry): entry is Partial<Message> => {
      const isObject = typeof entry === "object" && entry !== null;
      if (!isObject) console.error("Skipping malformed message:", entry);
      return isObject;
    })
    .map((msg) => ({
      sender: msg.sender || "Unknown",
      message: msg.message || "",
      timestamp: msg.timestamp || Date.now(),
    }))
    .reverse(); // LPUSH stores newest first; return oldest first for display
}

export async function getLatestMessages(
  room: string,
  lastTimestamp: number
): Promise<Message[]> {
  const messages = await getMessages(room);
  return messages.filter((msg) => msg.timestamp > lastTimestamp);
}

export async function setUsername(
  room: string,
  userId: string,
  username: string
) {
  await redis.hset(`users:${room}`, { [userId]: username });
}

export async function getUsername(
  room: string,
  userId: string
): Promise<string> {
  const username = await redis.hget<string>(`users:${room}`, userId);
  return username || "Anonymous";
}
```

What is happening here? Let’s break it down. First, we have the basic setup and types:

```typescript
import { Redis } from "@upstash/redis";

// Initialize Redis client with Upstash credentials
const redis = new Redis({
  url: process.env.UPSTASH_REDIS_URL!,
  token: process.env.UPSTASH_REDIS_TOKEN!,
});

// Define message structure
interface Message {
  sender: string;
  message: string;
  timestamp: number;
}
```

This establishes our Redis connection and defines the shape of a chat message: a sender, the message text, and a timestamp. UPSTASH_REDIS_URL and UPSTASH_REDIS_TOKEN hold the database’s REST URL and token from our Upstash account, which we’ll save in a .env.local file.
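The `!` non-null assertions only silence the type checker: if a variable is missing, `undefined` is passed to the client and the failure surfaces later as a confusing request error. A minimal sketch of a fail-fast alternative follows; `requireEnv` is a hypothetical helper, and the environment object is passed in explicitly so the function is easy to test.

```typescript
// Hypothetical helper: return a required environment variable or throw a clear error.
// An empty string is treated as missing, too.
function requireEnv(
  name: string,
  env: Record<string, string | undefined>
): string {
  const value = env[name];
  if (!value) {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Possible use in lib/redis.ts (sketch):
// const redis = new Redis({
//   url: requireEnv("UPSTASH_REDIS_URL", process.env),
//   token: requireEnv("UPSTASH_REDIS_TOKEN", process.env),
// });
```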

Then, we have the core message functions. First, the function to publish messages:

```typescript
export async function publishMessage(room: string, message: Message) {
  await redis.publish(room, JSON.stringify(message));
}
```

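This broadcasts a message to every client subscribed to the room’s channel, using Redis pub/sub for real-time delivery. Note that nothing in this tutorial subscribes to the channel: the chat page polls the stored history instead, so publishMessage only has an effect once you add a subscriber (for example, a server process that pushes updates to browsers). With no subscribers, Redis simply drops the message.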

Then we have our function for message storage:

```typescript
export async function storeMessage(room: string, message: Message) {
  await redis.lpush(`chat:${room}`, JSON.stringify(message));
  await redis.ltrim(`chat:${room}`, 0, 99); // Keep only last 100 messages
}
```

This saves messages to persistent storage using Redis lists. It prepends new messages using lpush and maintains a rolling history of 100 messages per room using ltrim. We use a chat: prefix for room-specific message storage.
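The list ordering that LPUSH and LTRIM produce is easy to get backwards, so here is an in-memory model of the pattern. `lpushAndTrim` is a hypothetical helper operating on a plain array, not a Redis call. Because LPUSH inserts at the head, the list is ordered newest first, and a view that shows messages oldest first has to reverse what LRANGE returns.

```typescript
// In-memory model of the LPUSH + LTRIM pattern used by storeMessage (not a Redis client).
// LPUSH inserts at index 0, so the list is ordered newest first;
// LTRIM key 0 N-1 keeps indexes 0..N-1, i.e. the N newest entries.
function lpushAndTrim<T>(list: T[], value: T, maxLength: number): T[] {
  const pushed = [value, ...list]; // LPUSH
  return pushed.slice(0, maxLength); // LTRIM key 0 maxLength-1
}

let history: string[] = [];
for (const text of ["m1", "m2", "m3", "m4"]) {
  history = lpushAndTrim(history, text, 3);
}
console.log(history); // ["m4", "m3", "m2"] (newest first; m1 was trimmed)
console.log([...history].reverse()); // ["m2", "m3", "m4"] (oldest first, for display)
```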

Next, our message retrieval functions. We can get all messages with getMessages:

```typescript
export async function getMessages(room: string): Promise<Message[]> {
  // @upstash/redis deserializes stored JSON automatically, so entries arrive as objects
  const entries = await redis.lrange<unknown>(`chat:${room}`, 0, -1);
  return entries
    .filter((entry): entry is Partial<Message> => {
      const isObject = typeof entry === "object" && entry !== null;
      if (!isObject) console.error("Skipping malformed message:", entry);
      return isObject;
    })
    .map((msg) => ({
      sender: msg.sender || "Unknown",
      message: msg.message || "",
      timestamp: msg.timestamp || Date.now(),
    }))
    .reverse(); // LPUSH stores newest first; return oldest first for display
}
```

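This function retrieves the entire message history for a room. Because @upstash/redis deserializes stored JSON automatically, each entry comes back as an object; the function guards against badly formatted entries and fills in defaults for missing fields. getLatestMessages then builds on getMessages to return only recent messages: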

```typescript
export async function getLatestMessages(
  room: string,
  lastTimestamp: number
): Promise<Message[]> {
  const messages = await getMessages(room);
  return messages.filter((msg) => msg.timestamp > lastTimestamp);
}
```

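This returns only the messages newer than a given timestamp. It still reads the whole list and filters in application code, which stays cheap because storeMessage caps each room at 100 messages. Finally, we have a function to set a username: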

```typescript
export async function setUsername(
  room: string,
  userId: string,
  username: string
) {
  await redis.hset(`users:${room}`, { [userId]: username });
}
```

We’re using some key design patterns such as:

- Namespace prefixing (chat:, users:)

- Bounded history (message trimming with LTRIM)

- Error handling and data validation

- Default values for resilience

- Type safety with TypeScript

- Separation of concerns (publishing vs storage)

This file creates a robust foundation for real-time message broadcasting and history persistence, forming the backbone of our scalable architecture.

Implementing Basic Group Chat Functionality

With our basic functions set up, we can turn our attention to our API routes that will handle message retrieval, message sending, and username management within our real-time group chat system.

Here’s our messages route:

```typescript
// app/api/messages/route.ts
import { NextResponse } from "next/server";
import { getLatestMessages } from "@/lib/redis";

export async function GET(req: Request) {
  const { searchParams } = new URL(req.url);
  const room = searchParams.get("room");
  // Missing or non-numeric values fall back to 0 (return the full history)
  const lastTimestamp =
    parseInt(searchParams.get("lastTimestamp") || "0", 10) || 0;

  if (!room) {
    return NextResponse.json({ error: "Room is required" }, { status: 400 });
  }

  const messages = await getLatestMessages(room, lastTimestamp);
  return NextResponse.json(messages);
}
```

This GET endpoint manages message retrieval. It accepts a room identifier and an optional lastTimestamp parameter to implement incremental message loading. It fetches the recent messages using the getLatestMessages function from our redis.ts file.
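The parameter handling in this endpoint can be pulled out into a pure function, which makes the edge cases (missing room, missing or non-numeric cursor, URL-encoded room names) easy to check. `parseMessagesQuery` and the `MessagesQuery` type are hypothetical names used only for this sketch.

```typescript
// Hypothetical helper mirroring the GET handler's parameter handling as a pure function.
type MessagesQuery =
  | { ok: true; room: string; lastTimestamp: number }
  | { ok: false; error: string };

function parseMessagesQuery(requestUrl: string): MessagesQuery {
  const { searchParams } = new URL(requestUrl);
  const room = searchParams.get("room"); // URLSearchParams decodes %XX escapes
  if (!room) return { ok: false, error: "Room is required" };
  // Missing or non-numeric values fall back to 0, meaning "send the full history"
  const parsed = parseInt(searchParams.get("lastTimestamp") ?? "0", 10);
  return { ok: true, room, lastTimestamp: Number.isNaN(parsed) ? 0 : parsed };
}
```

Inside the route, `const q = parseMessagesQuery(req.url)` followed by `if (!q.ok) return NextResponse.json({ error: q.error }, { status: 400 })` would reproduce the existing 400 response.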

Then we have a route to send a message:

```typescript
// app/api/send-message/route.ts
import { NextResponse } from "next/server";
import { publishMessage, storeMessage } from "@/lib/redis";

export async function POST(req: Request) {
  const { room, message, sender } = await req.json();

  const fullMessage = {
    sender,
    message,
    timestamp: Date.now(),
  };

  await publishMessage(room, fullMessage);
  await storeMessage(room, fullMessage);

  return NextResponse.json({ success: true });
}
```

The message-sending endpoint implements a dual-action approach to handle real-time message distribution.

- First, it constructs a message object with the sender's information and a timestamp in milliseconds since the Unix epoch (Date.now()), then calls publishMessage for immediate real-time delivery to active subscribers.

- After publishing, it persists the same message via storeMessage so the history is available for later retrieval.

This architecture ensures both real-time functionality and message persistence.
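To exercise this dual-action flow without a live Redis instance, the two Redis calls can be injected. The sketch below is an assumption-laden illustration: `ChatStore` and `handleSend` are hypothetical names, and the body validation is an addition that the route above does not perform. It keeps the route's order: publish first, then store.

```typescript
// Hypothetical sketch: the send-message flow with its Redis calls injected.
interface Message {
  sender: string;
  message: string;
  timestamp: number;
}

interface ChatStore {
  publishMessage(room: string, message: Message): Promise<void>;
  storeMessage(room: string, message: Message): Promise<void>;
}

async function handleSend(
  store: ChatStore,
  body: { room?: unknown; message?: unknown; sender?: unknown },
  now: () => number = Date.now
): Promise<{ success: boolean; error?: string }> {
  const { room, message, sender } = body;
  // Reject bodies that are missing fields or carry a blank message
  if (
    typeof room !== "string" ||
    typeof message !== "string" ||
    typeof sender !== "string" ||
    !message.trim()
  ) {
    return { success: false, error: "room, message and sender are required" };
  }
  const fullMessage: Message = { sender, message, timestamp: now() };
  await store.publishMessage(room, fullMessage); // real-time fan-out
  await store.storeMessage(room, fullMessage); // persistence for history
  return { success: true };
}
```

In the route, the real `publishMessage` and `storeMessage` from `@/lib/redis` could be passed as the store, returning a 400 status when `success` is false.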

Finally, we need a route to set each username:

```typescript
// app/api/set-username/route.ts
import { NextResponse } from "next/server";
import { setUsername } from "@/lib/redis";

export async function POST(req: Request) {
  const { room, username } = await req.json();
  // In a real application, you'd want to generate or retrieve a unique user ID
  const userId = Math.random().toString(36).substring(7);

  await setUsername(room, userId, username);

  return NextResponse.json({ success: true, userId });
}
```

The username management endpoint implements a simplified identity system with temporary user IDs. It generates a pseudo-random identifier by converting Math.random() to a base-36 string and keeping the characters from index 7 onward. It then stores the username under that ID in a per-room Redis hash (users:{room}) via setUsername, which gives a starting point for features such as presence tracking.
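One caveat with the base-36 trick: `Math.random().toString(36)` has a variable number of digits, so `.substring(7)` can return a very short or even empty ID. A sketch of a sturdier option is a random version 4 UUID via Node's `randomUUID` from `node:crypto`, which works in route handlers on the default Node.js runtime; on the edge runtime, the Web Crypto `crypto.randomUUID()` would be the equivalent. `legacyUserId` and `generateUserId` are hypothetical names for this illustration.

```typescript
import { randomUUID } from "node:crypto";

// The original expression, with the random number passed in so it can be inspected.
// (0.5).toString(36) is "0.i", so substring(7) leaves nothing.
function legacyUserId(random: number): string {
  return random.toString(36).substring(7);
}

// Sturdier alternative: a random version 4 UUID (122 random bits).
function generateUserId(): string {
  return randomUUID();
}

console.log(JSON.stringify(legacyUserId(0.5))); // ""
console.log(generateUserId()); // e.g. "3b241101-e2bb-4255-8caf-4136c566a962"
```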

This implementation provides a foundation for user management while maintaining stateless API design principles.

Managing Chat Rooms

The final piece of the puzzle is the chat room itself. With a Next.js dynamic route, a single file handles every chat room, whatever its name:

```tsx
// app/chat/[room]/page.tsx
"use client";

import { useState, useEffect, useRef } from "react";
import { useParams } from "next/navigation";
import { Button } from "@/components/ui/button";
import { Input } from "@/components/ui/input";
import { Card, CardContent, CardHeader, CardTitle } from "@/components/ui/card";

interface Message {
  sender: string;
  message: string;
  timestamp: number;
}

export default function ChatRoom() {
  const [messages, setMessages] = useState<Message[]>([]);
  const [input, setInput] = useState("");
  const [username, setUsername] = useState("");
  const [isUsernameSet, setIsUsernameSet] = useState(false);
  // Typed so that `room` is a string rather than string | string[]
  const { room } = useParams<{ room: string }>();
  const messagesEndRef = useRef<HTMLDivElement>(null);
  const lastTimestampRef = useRef(0);

  // Poll for messages newer than the last one we have
  useEffect(() => {
    const fetchMessages = async () => {
      const response = await fetch(
        `/api/messages?room=${encodeURIComponent(room)}&lastTimestamp=${lastTimestampRef.current}`
      );
      const newMessages: Message[] = await response.json();
      if (newMessages.length > 0) {
        setMessages((prevMessages) => [...prevMessages, ...newMessages]);
        lastTimestampRef.current = Math.max(
          ...newMessages.map((msg) => msg.timestamp)
        );
      }
    };

    fetchMessages();
    const intervalId = setInterval(fetchMessages, 1000); // Poll every second
    return () => clearInterval(intervalId);
  }, [room]);

  // Keep the newest message in view
  useEffect(() => {
    messagesEndRef.current?.scrollIntoView({ behavior: "smooth" });
  }, [messages]);

  const sendMessage = async (e: React.FormEvent) => {
    e.preventDefault();
    if (!input.trim() || !isUsernameSet) return;

    const response = await fetch("/api/send-message", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ room, message: input, sender: username }),
    });

    if (response.ok) {
      setInput("");
    }
  };

  const setUserName = async (e: React.FormEvent) => {
    e.preventDefault();
    if (!username.trim()) return;

    const response = await fetch("/api/set-username", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ room, username }),
    });

    if (response.ok) {
      setIsUsernameSet(true);
    }
  };

  // Username form, shown until a name has been saved
  if (!isUsernameSet) {
    return (
      <Card className="w-full max-w-md mx-auto mt-8">
        <CardHeader>
          <CardTitle>Enter Your Username</CardTitle>
        </CardHeader>
        <CardContent>
          <form onSubmit={setUserName} className="space-y-4">
            <Input
              type="text"
              value={username}
              onChange={(e) => setUsername(e.target.value)}
              placeholder="Your username"
              className="w-full"
            />
            <Button type="submit" className="w-full">
              Set Username
            </Button>
          </form>
        </CardContent>
      </Card>
    );
  }

  // Main chat view
  return (
    <Card className="w-full max-w-2xl mx-auto">
      <CardHeader>
        <CardTitle>Chat Room: {room}</CardTitle>
      </CardHeader>
      <CardContent>
        <div className="h-96 overflow-y-auto mb-4 p-4 border rounded">
          {messages.map((msg, index) => (
            <div key={index} className="mb-2">
              <span className="font-bold">{msg.sender}: </span>
              {msg.message}
            </div>
          ))}
          <div ref={messagesEndRef} />
        </div>
        <form onSubmit={sendMessage} className="flex gap-2">
          <Input
            type="text"
            value={input}
            onChange={(e) => setInput(e.target.value)}
            placeholder="Type a message..."
            className="flex-grow"
          />
          <Button type="submit">Send</Button>
        </form>
      </CardContent>
    </Card>
  );
}
```

This is another long code snippet, so let’s break it down again. First, we have our state and data management:

```tsx
const [messages, setMessages] = useState<Message[]>([]);
const [input, setInput] = useState("");
const [username, setUsername] = useState("");
const [isUsernameSet, setIsUsernameSet] = useState(false);
const lastTimestampRef = useRef(0);
```

We’re using React hooks for state management. The messages array maintains the chat history, while lastTimestampRef uses useRef to persist the latest message timestamp across re-renders without triggering additional renders.

We fetch messages with a useEffect hook:

```tsx
useEffect(() => {
  const fetchMessages = async () => {
    const response = await fetch(
      `/api/messages?room=${encodeURIComponent(room)}&lastTimestamp=${lastTimestampRef.current}`
    );
    const newMessages: Message[] = await response.json();
    if (newMessages.length > 0) {
      setMessages((prevMessages) => [...prevMessages, ...newMessages]);
      lastTimestampRef.current = Math.max(
        ...newMessages.map((msg) => msg.timestamp)
      );
    }
  };

  fetchMessages();
  const intervalId = setInterval(fetchMessages, 1000);
  return () => clearInterval(intervalId);
}, [room]);
```

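This passes lastTimestamp so the server returns only messages newer than the last one we have, then advances lastTimestampRef to the newest timestamp in the batch. The cleanup function calls clearInterval so polling stops when the component unmounts or the room changes. Note that the browser polls every second rather than subscribing to the Redis channel, so new messages appear within about a second.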
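Because fetchMessages runs on a fixed interval, a slow response can overlap the next poll, and both requests may carry the same lastTimestamp, so the same messages could be appended twice. A minimal sketch of a more defensive merge step follows; `mergeBatch` is a hypothetical helper that skips messages already shown (keyed on timestamp, sender, and text, which is an assumption about what counts as a duplicate), sorts the batch oldest first, and advances the cursor.

```typescript
// Hypothetical pure helper for the polling loop.
interface Message {
  sender: string;
  message: string;
  timestamp: number;
}

function mergeBatch(
  current: Message[],
  batch: Message[],
  cursor: number
): { messages: Message[]; cursor: number } {
  const key = (m: Message) => `${m.timestamp}|${m.sender}|${m.message}`;
  const seen = new Set(current.map(key));
  // Drop already-displayed messages, then sort so display order is oldest first
  const fresh = batch
    .filter((m) => !seen.has(key(m)))
    .sort((x, y) => x.timestamp - y.timestamp);
  // The cursor never moves backwards
  const nextCursor = fresh.reduce((max, m) => Math.max(max, m.timestamp), cursor);
  return { messages: [...current, ...fresh], cursor: nextCursor };
}
```

In the component, the `messages` part of the result could be returned from the functional `setMessages` update, with the cursor written to `lastTimestampRef` outside it.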

We implement auto-scrolling using a combination of useRef and useEffect:

```tsx
const messagesEndRef = useRef<HTMLDivElement>(null);

useEffect(() => {
  messagesEndRef.current?.scrollIntoView({ behavior: "smooth" });
}, [messages]);
```

messagesEndRef creates a reference to a dummy div at the bottom of the message list, and the useEffect hook triggers a smooth scroll animation whenever new messages arrive.

Next, our message-sending logic:

```tsx
const sendMessage = async (e: React.FormEvent) => {
  e.preventDefault();
  if (!input.trim() || !isUsernameSet) return;

  const response = await fetch("/api/send-message", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ room, message: input, sender: username }),
  });

  if (response.ok) {
    setInput("");
  }
};
```

This implements several features:

- Input validation to prevent empty messages

- Username verification before sending

- Clearing the input only after the server confirms the send, so the text is kept if the request fails

- Error handling through response status checking

Then, we have username management:

```tsx
const setUserName = async (e: React.FormEvent) => {
  e.preventDefault();
  if (!username.trim()) return;

  const response = await fetch("/api/set-username", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ room, username }),
  });

  if (response.ok) {
    setIsUsernameSet(true);
  }
};
```

This saves the username through the set-username API and, once the request succeeds, switches the view to the chat interface.

Now, we can render our UI. The JSX implementation consists of two main views: the username entry form and the main chat interface.

If no username is set, it shows a card for the user to enter a name:

```tsx
if (!isUsernameSet) {
  return (
    <Card className="w-full max-w-md mx-auto mt-8">
      <CardHeader>
        <CardTitle>Enter Your Username</CardTitle>
      </CardHeader>
      <CardContent>
        <form onSubmit={setUserName} className="space-y-4">
          <Input
            type="text"
            value={username}
            onChange={(e) => setUsername(e.target.value)}
            placeholder="Your username"
            className="w-full"
          />
          <Button type="submit" className="w-full">
            Set Username
          </Button>
        </form>
      </CardContent>
    </Card>
  );
}
```

Once a username is entered, the main chat interface is shown with a scrolling text area for messages and an input area for the user to enter their message:

```tsx
return (
  <Card className="w-full max-w-2xl mx-auto">
    <CardHeader>
      <CardTitle>Chat Room: {room}</CardTitle>
    </CardHeader>
    <CardContent>
      {/* Message Display Area */}
      <div className="h-96 overflow-y-auto mb-4 p-4 border rounded">
        {messages.map((msg, index) => (
          <div key={index} className="mb-2">
            <span className="font-bold">{msg.sender}: </span>
            {msg.message}
          </div>
        ))}
        <div ref={messagesEndRef} />
      </div>

      {/* Message Input Form */}
      <form onSubmit={sendMessage} className="flex gap-2">
        <Input
          type="text"
          value={input}
          onChange={(e) => setInput(e.target.value)}
          placeholder="Type a message..."
          className="flex-grow"
        />
        <Button type="submit">Send</Button>
      </form>
    </CardContent>
  </Card>
);
```

And that’s it: our group chat is ready to go. If you have stopped the application, restart it with npm run dev, then head to http://localhost:3000/chat/lobby (or any other room name).

Easier Group Chat With Stream

Redis provides an excellent foundation for building real-time chat applications with its pub/sub capabilities, low latency, and scalability. This is often how developers start building real-time chat, but they quickly run into its limitations. For one, Redis keeps its data in memory, which can make hosting expensive. And as developers build out their chat interfaces, adding the advanced functionality users now expect takes a lot of additional developer time.

Using Stream's chat API, you can implement the same functionality we built here in just a few lines of code, while gaining access to enterprise-grade features. These include message threading, file uploads, reactions, typing indicators, and presence detection: features that require considerable extra effort to implement with Redis.

If you’re interested in learning how to build a chat app in Next.js with Stream, we have a tutorial on Using Stream to Build a Livestream Chat App in Next.js. If you want to stay with Redis, the above code and explanation can help you build a robust, real-time chat application your users will love.