This article aims to help you build AI agents powered by memory, a knowledge base, tools, and reasoning, and chat with them using the command line and beautiful agent UIs.

What is an Agent?

Large language models (LLMs) can automate complex and sequential workflows and tasks. For example, you can use LLMs to build assistants that can autonomously order online products on your behalf and arrange their delivery in a marketplace app.

These LLM-based assistants are called agents. An agent is an LLM-powered assistant assigned specific tasks and tools to accomplish those tasks.

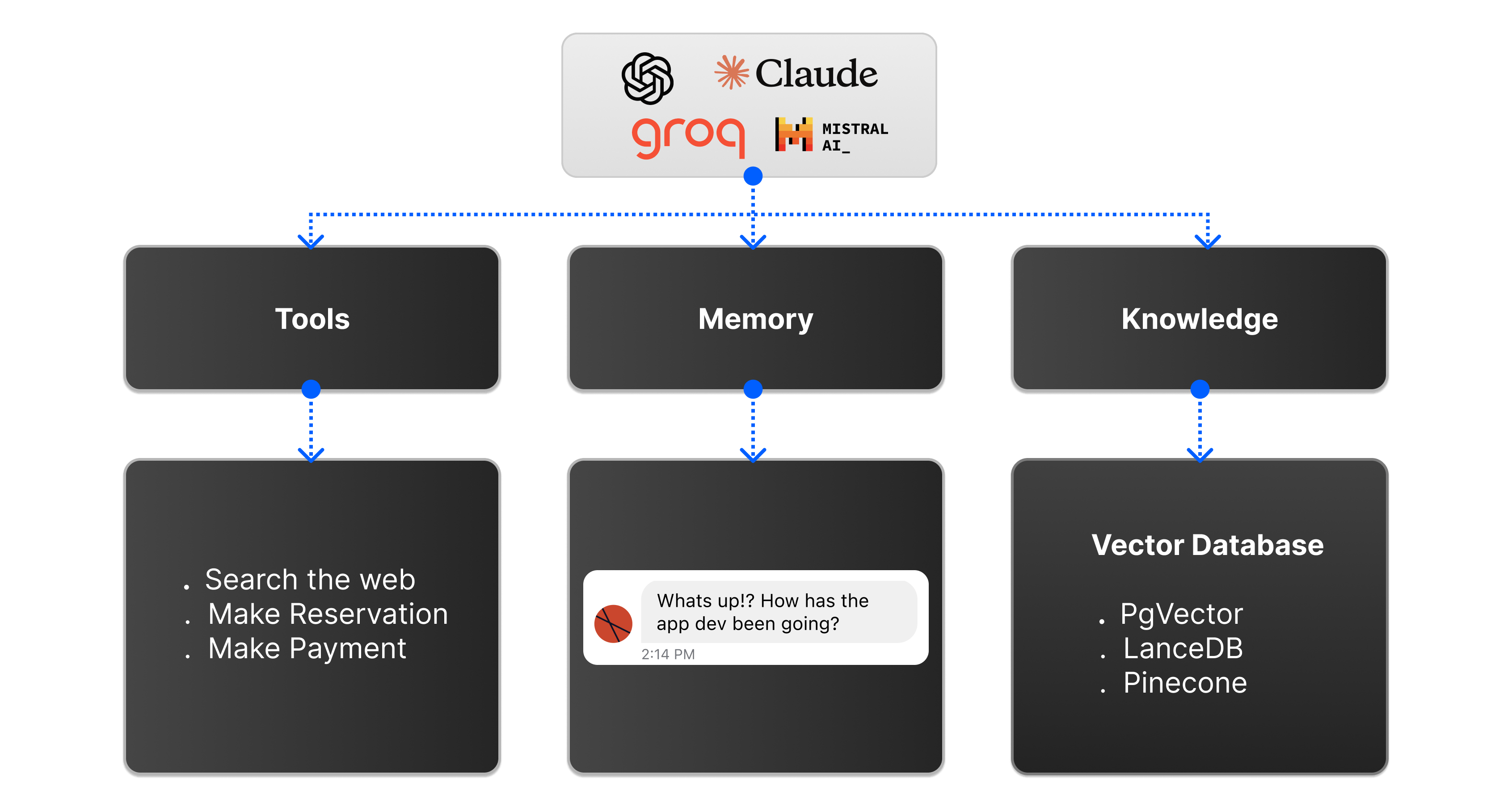

In its basic form, a typical AI agent may be equipped with memory to store and manage user interactions, communicate with external data sources, and use functions to execute its tasks.

Common examples of what an agent can do include the following.

- Restaurant reservation: For example, an in-app AI agent in a restaurant system can be designed to help users make online reservations, compare different restaurants, and call the one users prefer with real-time voice interactions.

- Senior co-worker: An agent can act as a copilot to collaborate with users on specific projects.

- Automate operations: Agents help perform tasks requiring several or even hundreds of steps, like daily use of computers. For example, Replit Agent (experimental project) provides a great way to build things by performing the same actions developers do in an Integrated Development Environment (IDE). The agent can install project dependencies and edit code as developers can. Anthropic's Computer Use (public beta) agent can instruct Claude to do tasks on a computer in the same ways people use computers. The Computer Use agent can look at the computer screen and navigate through it. It can move the mouse cursor, click buttons, and input text.

Building these agentic assistants from scratch requires considerable team effort and other considerations, such as managing user-agent chat history, integration with other systems, and more.

The following sections explore the top five platforms for building and adding AI agents to your applications. We will explore these frameworks' key features and benefits and demonstrate code examples for building agents with some of them.

Why Use Multi-Agent AI Frameworks?

There are several ways to build an AI agent from scratch.

Agents can be built in Python or using React and other technology stacks. However, agentic frameworks like Agno, OpenAI Swarm, LangGraph, Microsoft Autogen, CrewAI, Vertex AI, and Langflow provide tremendous benefits. These frameworks have pre-packaged tools and features to help you quickly build any AI assistant.

- Choose a preferred LLM: Build an agent for any use case using LLMs from OpenAI, Anthropic, xAI, Mistral, and tools like Ollama or LM Studio.

- Add knowledge base: These frameworks allow you to add specific documents, such as JSON, PDF, or a website, as knowledge bases.

- Built-in memory: This feature eliminates the need to implement a system to remember and keep track of chat history and personalized conversations, no matter how long they are. It allows you to glance through long-term previous prompts.

- Add custom tools: These multi-agent frameworks allow you to empower your AI agents with custom tools and seamlessly integrate them with external systems to perform operations like making online payments, searching the web, making API calls, running a database query, watching videos, sending emails, and more.

- Eliminates engineering challenges: These frameworks help simplify complex engineering tasks, such as knowledge and memory management, in building AI products.

- Faster development and shipment: They provide the tools and infrastructure to build AI systems faster and deploy them on cloud services like Amazon Web Services (AWS).
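To make the "custom tools" idea from the list above more concrete, here is a minimal, framework-agnostic sketch of how a tool registry might map the tool names a model asks for to plain Python functions. The names `ToolRegistry`, `search_web`, and `send_email` are hypothetical and do not come from any of the frameworks mentioned; real frameworks also generate schemas for the model and handle errors and retries.

```python
import inspect
from typing import Any, Callable, Dict


class ToolRegistry:
    """Hypothetical registry mapping tool names to Python callables."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}

    def register(self, func: Callable[..., Any]) -> Callable[..., Any]:
        # Use the function name as the tool name the model would refer to.
        self._tools[func.__name__] = func
        return func

    def describe(self) -> Dict[str, str]:
        # Expose each tool's signature and docstring so a model could pick one.
        return {
            name: f"{name}{inspect.signature(func)}: {inspect.getdoc(func) or ''}"
            for name, func in self._tools.items()
        }

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self._tools:
            raise KeyError(f"Unknown tool: {name}")
        return self._tools[name](**kwargs)


registry = ToolRegistry()


@registry.register
def search_web(query: str) -> str:
    """Stub: pretend to search the web."""
    return f"results for {query!r}"


@registry.register
def send_email(to: str, subject: str) -> str:
    """Stub: pretend to send an email."""
    return f"email to {to} with subject {subject!r} queued"


# A model's tool request would typically arrive as a name plus JSON arguments.
print(registry.call("search_web", query="NVDA analyst ratings"))
```

Keeping tools as ordinary functions means they can be unit-tested without any model in the loop.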

Basic Structure of an Agent

The code snippet below represents an AI agent in its simplest and basic form. An agent uses a language model to solve problems. The definition of your agent may consist of a large or small language model to use, memory, storage, external knowledge source, vector database, instructions, descriptions, name, etc.

    agent = Agent(
        model=OpenAI(id="o1-mini"),
        memory=AgentMemory(),
        storage=AgentStorage(),
        knowledge=AgentKnowledge(
            vector_db=PgVector(search_type=hybrid)
        ),
        tools=[Websearch(), Reasoning(), Marketplace()],
        description="You are a useful marketplace AI agent",
    )
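The snippet above names the parts of an agent. As a rough illustration of how those parts might interact at run time, the following framework-free sketch wires a stubbed model, a tool table, and a memory list together. `ToyAgent` and `echo_model` are hypothetical stand-ins, and the "tool_name: argument" convention is an assumption made only for this example; a real agent lets the model decide when to call a tool.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def echo_model(prompt: str) -> str:
    """Stand-in for an LLM call; a real agent would call a model API here."""
    return f"[model reply to: {prompt}]"


@dataclass
class ToyAgent:
    description: str
    model: Callable[[str], str] = echo_model
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    memory: List[Dict[str, str]] = field(default_factory=list)

    def run(self, user_message: str) -> str:
        self.memory.append({"role": "user", "content": user_message})
        # Toy convention: "tool_name: argument" triggers a tool call directly.
        name, _, argument = user_message.partition(":")
        if name.strip() in self.tools:
            reply = self.tools[name.strip()](argument.strip())
        else:
            reply = self.model(f"{self.description}\n{user_message}")
        self.memory.append({"role": "assistant", "content": reply})
        return reply


agent = ToyAgent(
    description="You are a useful marketplace AI agent",
    tools={"price": lambda item: f"{item} costs 10 USD (stubbed)"},
)
print(agent.run("price: headphones"))
print(agent.run("Hello"))
print(len(agent.memory))
```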

For instance, a modern agent like Windsurf can help anyone prompt, run, edit, build, and deploy full-stack web apps in minutes. It supports code generation and app building with several web technologies and databases such as Astro, Vite, Next.js, Supabase, etc.

Enterprise Use Cases of Multi-Agents

Agentic AI systems have countless application areas in the enterprise setting, most of which involve automating repetitive tasks.

The following outlines the key areas where agents are helpful in the enterprise domain:

- Call and other analytics: Analyze participants’ video calls to get insights into people's sentiments, intent, and satisfaction levels. Multi-agents are excellent at analyzing and reporting users' intents, demographics, and interactions. Their analytics/reporting capabilities help enterprises to target or market to the required customers.

- Call classification: Automatically categorize calls based on participants' network bandwidth and strength for efficient handling.

- Marketplace listening: Monitor and analyze customer sentiments across the channels of a marketplace app.

- Polls and review analytics: Use customer feedback, reviews, and polls to gain insights and improve the customer experience.

- Travel and expense management: Automate the reporting of expenses, tracking, and approval.

- Conversational banking: Enable customers to perform banking tasks via AI-powered chat or voice assistants powered by agents.

- Generic AI support chatbot: Support agents can troubleshoot, fix customer complaints, and delegate complex tasks to other agents.

- Finance: Financial agents can be used in industries to predict economic, stock, and market trends and provide tangible and viable investment recommendations.

- Marketing: Industries' marketing teams can use AI agents to create personalized content and campaign copies for different target audiences, resulting in high conversion rates.

- Sales: Agents can help analyze a system's customer interaction patterns, enabling enterprise sales teams to focus on converting leads.

- Technology: In technology industries, AI coding agents assist developers and engineers in working efficiently and improving their work through faster code completion and generation, automation, testing, and error fixing.

Limitations of AI Agents

Although several frameworks exist for building agentic assistants, only a limited number of agent-based applications and systems, such as Cursor and Windsurf for AI-assisted coding, are in production. The following limitations explain why so few of these applications are in production.

- Quality: These agents may lack the ability to deliver high-quality results in various scenarios.

- Cost of building: Developing, maintaining, and scaling AI agents for production environments can be costly. Training requires both computational costs and AI experts.

- High latency: The time taken for AI agents to process user prompts and deliver responses can hinder user experience in real-time services like live customer interactions, ordering, and issue reporting.

- Safety Issues: Putting these agents into production may have ethical and security concerns for enterprise use cases.

To learn more about these limitations, see LangChain's State of AI Agents report.

Top 5 Multi-Agent AI Frameworks

You can use several Python frameworks to create and add agents to applications and services. These frameworks include no-code (visual AI agent builders), low-code, and medium-code tools. This section presents five leading Python-based agent builders you can choose depending on your enterprise's or business's needs.

1. Agno

Agno is a Python-based framework for converting large language models into agents for AI products. It works with closed and open LLMs from prominent providers like OpenAI, Anthropic, Cohere, Ollama, Together AI, and more. With its database and vector store support, you can easily connect your AI system with Postgres, PgVector, Pinecone, LanceDB, etc. Using Agno, you can build basic AI agents and advanced ones using function calling, structured output, and fine-tuning. Agno also provides free, Pro, and enterprise pricing. Check out their website to learn more and get started.

Note: Agno was previously called Phidata.

Key Features of Agno

- Built-in agent UI: Agno comes with a ready-made UI for running agentic projects locally and in the cloud and manages sessions behind the scenes.

- Deployment: You can publish your agents to GitHub or any cloud service or connect your AWS account to deploy them to production.

- Monitor key metrics: Get a snapshot of your sessions, API calls, tokens, adjust settings, and improve your agents.

- Templates: Speed up your AI agents' development and production with pre-configured codebases.

- Amazon Web Services (AWS) support: Agno integrates seamlessly with AWS, so you can run your entire application on an AWS account.

- Model independence: You can bring your models and API keys from leading providers like OpenAI, Anthropic, Groq, and Mistral into Agno.

- Build multi-agents: You can use Agno to build a team of agents that can transfer tasks to one another and collaborate to perform complex tasks. Agno will seamlessly handle the orchestration of the agents at the backend.

Build a Basic AI Agent With Agno and OpenAI

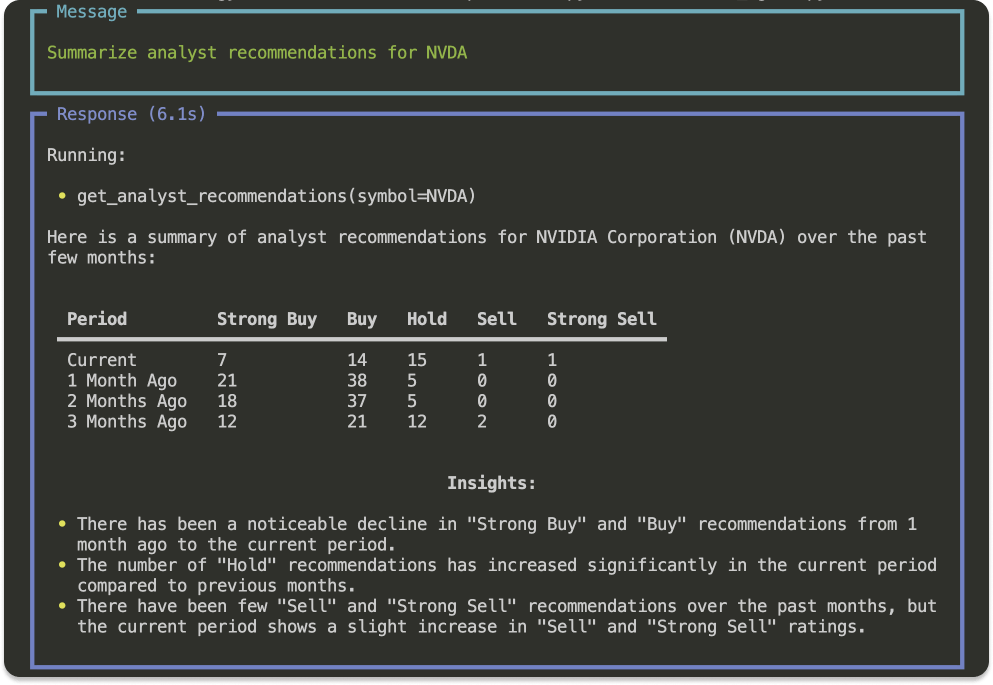

This section demonstrates how to build a basic AI agent in Python to query Yahoo Finance's financial data. The agent will be built with the Agno (Phidata) framework and a large language model from OpenAI. It aims to summarize analyst recommendations for various companies via Yahoo Finance.

Step 1: Set Up a Virtual Environment for Your Python Project

A Python virtual environment will ensure the agents' dependencies for this project do not interfere with those of other Python projects on your device. The virtual environment will keep your project dependencies well organized and prevent conflicts and issues in your global Python installation.

To learn more, refer to setting up your coding environment.

We will create the Python project in the Cursor AI code Editor in this example. However, you can use any IDE you prefer.

Create an empty folder and open it in Cursor. Add a new Python file, financial_agent.py. Use the following Terminal commands to set up your virtual environment.

    # Create a venv in the current directory
    python -m venv venv
    source venv/bin/activate
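If you are unsure whether the activation worked, a quick check from Python itself can help. The sketch below relies on the standard library only; the helper name `in_virtualenv` is ours. Inside a `venv` environment, `sys.prefix` points at the environment while `sys.base_prefix` still points at the base installation.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a venv-style environment."""
    # In a venv, sys.prefix points at the environment while sys.base_prefix
    # still points at the base Python installation.
    return sys.prefix != sys.base_prefix


print("virtual environment active:", in_virtualenv())
print("interpreter:", sys.executable)
```

On Windows, the activation script lives at `venv\Scripts\activate` rather than `venv/bin/activate`.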

Step 2: Install Dependencies

Run the following command in the Terminal to add Agno and OpenAI as dependencies. We also install the Yahoo Finance and python-dotenv packages.

    pip install -U agno openai

    # Loads environment variables from a .env
    pip install python-dotenv

    # Fetch financial data from Yahoo Finance
    pip install yfinance

Step 3: Create the Financial AI Agent

Open the financial_agent.py file you created earlier and replace its content with the following.

    import openai
    from agno.agent import Agent
    from agno.models.openai import OpenAIChat
    from agno.tools.yfinance import YFinanceTools
    from dotenv import load_dotenv
    import os

    # Load environment variables from .env file
    load_dotenv()

    # Get API key from environment
    openai.api_key = os.getenv("OPENAI_API_KEY")

    # Initialize the agent
    finance_agent = Agent(
        name="Finance AI Agent",
        model=OpenAIChat(id="gpt-4o"),
        tools=[
            YFinanceTools(
                stock_price=True,
                analyst_recommendations=True,
                company_info=True,
                company_news=True,
            )
        ],
        instructions=["Use tables to display data"],
        show_tool_calls=True,
        markdown=True,
    )
    finance_agent.print_response("Summarize analyst recommendations for NVDA", stream=True)

Let's summarize the sample code of our basic AI agent.

First, we import the required modules and packages and load the OpenAI API key from a .env file. Loading the API key works like using other model providers like Anthropic, Mistral, and Groq.

Next, we create a new agent using Agno's Agent class and specify its unique characteristics and capabilities. These include the model, tools, instructions for the agent, and more. Lastly, we print out the agent's response and state whether the answer should be streamed, here with stream=True.
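A missing API key is a common reason for a first run to fail. As an optional safeguard, you could check for the key before creating the agent. The helper below is a small sketch using only the standard library; `require_api_key` is a hypothetical name and is not part of Agno or python-dotenv.

```python
import os
from typing import Mapping, Optional


def require_api_key(name: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Return the named key or raise a readable error if it is missing."""
    source = os.environ if env is None else env
    value = source.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; add it to your .env file or shell")
    return value


# Demonstration with an in-memory mapping instead of the real environment.
print(require_api_key("OPENAI_API_KEY", {"OPENAI_API_KEY": "sk-example"}))
```

In the agent script, you would call `require_api_key("OPENAI_API_KEY")` after `load_dotenv()` so that it reads the real environment.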

Run the agent with the command:

python3 financial_agent.py

Congratulations! 👏 You have now built your first AI agent, which provides financial insights and summaries in tabular form for specified companies.

Build an Advanced/Multi-AI Agent With Agno

The previous section showed you how to build a basic AI agent with Agno (Phidata) and OpenAI using financial data from Yahoo Finance.

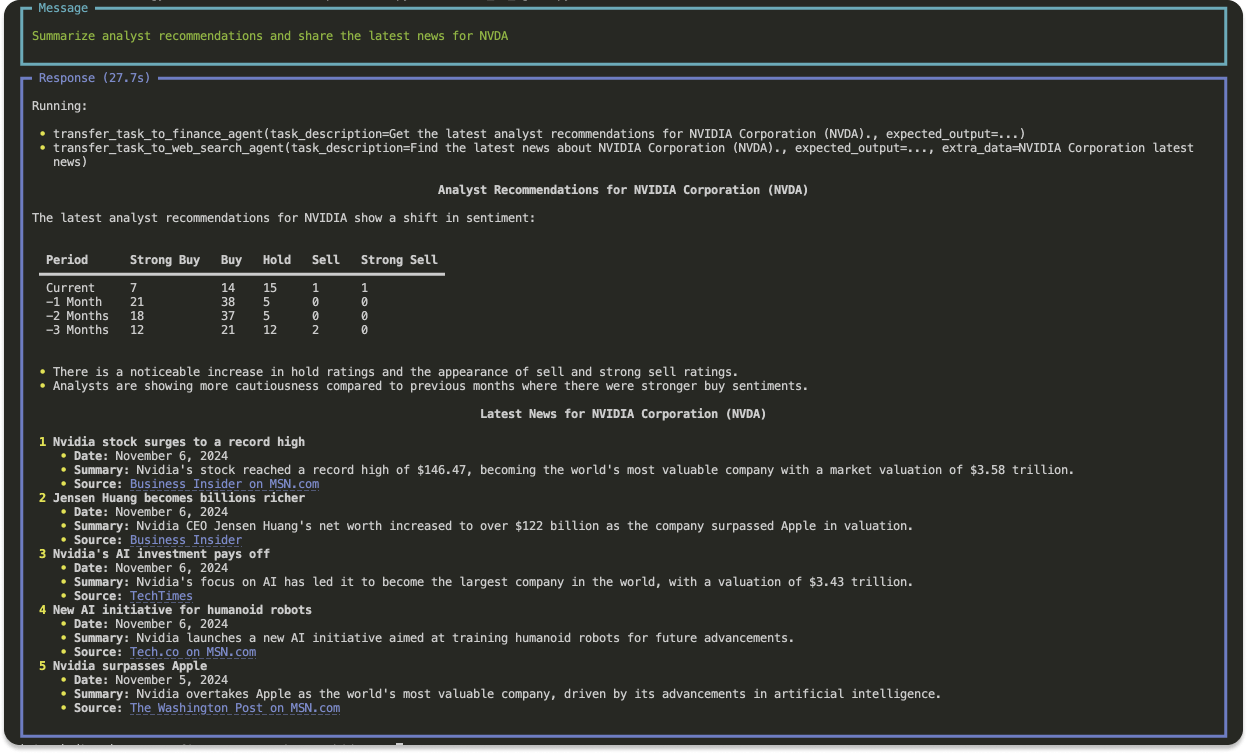

This section will build upon and extend the previous agent into a multi-agent (team of agents). Our last agent example solves a single and specific problem. We can now leverage the capabilities of a multi-agent to create a team of individual contributing agents to solve complex problems.

The agentic team will consist of two members who work together to search the web for information and share a summary of a specified company's financial data.

Step 1: Install Additional Dependencies

Since this example uses DuckDuckGo search to get information from the web, we should install its package. Create a new Python file, multi_ai_agent.py, for your project and run the command below to add DuckDuckGo as a dependency.

pip install duckduckgo-search

Use the sample code below to fill out the content of multi_ai_agent.py.

    from agno.agent import Agent
    from agno.models.openai import OpenAIChat
    from agno.tools.duckduckgo import DuckDuckGoTools
    from agno.tools.yfinance import YFinanceTools

    web_search_agent = Agent(
        name="Web Search Agent",
        role="Search the web for information",
        model=OpenAIChat(id="gpt-4o"),
        tools=[DuckDuckGoTools()],
        instructions=["Always include sources"],
        show_tool_calls=True,
        markdown=True,
    )

    finance_agent = Agent(
        name="Finance Agent",
        role="Get financial data",
        model=OpenAIChat(id="gpt-4o"),
        tools=[
            YFinanceTools(stock_price=True, analyst_recommendations=True, company_info=True)
        ],
        instructions=["Use tables to display data"],
        show_tool_calls=True,
        markdown=True,
    )

    multi_ai_agent = Agent(
        team=[web_search_agent, finance_agent],
        instructions=["Always include sources", "Use tables to display data"],
        show_tool_calls=True,
        markdown=True,
    )

    multi_ai_agent.print_response(
        "Summarize analyst recommendations and share the latest news for NVDA", stream=True
    )

Since the example agent in this section extends the previous one, we add an import for the DuckDuckGo toolkit, from agno.tools.duckduckgo import DuckDuckGoTools. Then, we create the individual contributing agents web_search_agent and finance_agent, assign them different roles, and equip them with the necessary tools and instructions to do their tasks.

Run your multi-agent file multi_ai_agent.py with python3 multi_ai_agent.py. You will now see an output similar to the preview below.

From the sample code above, the multi_ai_agent consists of two team members, team=[web_search_agent, finance_agent]. The web_search_agent searches the web for related information and links its sources, and the finance_agent serves the same role as in the previous section.
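To build some intuition for what a team does with a task, here is a deliberately simplified, framework-free sketch of delegation. It is not how Agno works internally: in Agno the model decides which member to call, whereas this sketch uses keyword matching as a stand-in. `Member` and `run_team` are hypothetical names, and the member replies are stubs.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Member:
    name: str
    keywords: Tuple[str, ...]
    handle: Callable[[str], str]


def run_team(task: str, members: List[Member]) -> List[str]:
    """Send the task to every member whose keywords appear in it."""
    lowered = task.lower()
    replies = []
    for member in members:
        if any(word in lowered for word in member.keywords):
            replies.append(f"{member.name}: {member.handle(task)}")
    return replies


team = [
    Member("Web Search Agent", ("news", "latest"), lambda t: "stubbed headlines with sources"),
    Member("Finance Agent", ("analyst", "price"), lambda t: "stubbed recommendations table"),
]

for line in run_team("Summarize analyst recommendations and share the latest news for NVDA", team):
    print(line)
```

The sample task mentions both news and analyst recommendations, so both members contribute, which mirrors the combined answer the real team produces.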

Agno: Build a Reasoning AI Agent

With Agno's ease of use and simplicity, we can build a fully functioning agent that thinks before answering with much shorter code.

For example, if we instruct our thinking agent to write code in a specified programming language, the agent will start to think and implement a step-by-step guide to solve the problem before responding. Create a new file, reasoning_ai_agent.py, and fill out its content with this sample code.

    from agno.agent import Agent
    from agno.models.openai import OpenAIChat

    task = (
        "Create a SwiftUI view that allows users to switch between the tab bar and "
        "sidebar views using TabView and .tabViewStyle(.sidebarAdaptable) modifier. "
        "Put the content in TabSidebar.swift"
    )

    reasoning_agent = Agent(
        model=OpenAIChat(id="gpt-4o-mini"),
        reasoning=True,
        markdown=True,
        structured_outputs=True,
    )
    reasoning_agent.print_response(task, stream=True, show_full_reasoning=True)

In this example, we specify the prompt task as the code shows. Then, we create a new agent with reasoning=True to make it a thinking agent. When you run reasoning_ai_agent.py, you should see a result similar to the preview below.

2. OpenAI Swarm

Swarm is an open-source, experimental agentic framework recently released by OpenAI. It is a lightweight multi-agent orchestration framework.

Note: Swarm was in the experimental phase when this article was written. It can be used for development and educational purposes but should not be used in production. This phase may change, so check out its GitHub repo above for more updates.

Swarm uses Agents and handoffs as abstractions for agent orchestration and coordination. It is a lightweight framework that can be tested and managed efficiently. The agent component of Swarm can be equipped with tools, instructions, and other parameters to execute specific tasks.

Benefits and Key Features of Swarm

Aside from Swarm's lightweight and simple architecture, it has the following key features.

- Handoff conversations: Using Swarm to build a multi-agent system provides an excellent way for one agent to transfer or hand off conversations to other agents at any point.

- Scalability: Swarm's simplicity and handoff architecture make building agentic systems that can scale to millions of users easy.

- Extendability: It is easily and highly customizable by design. You can use it to create completely bespoke agentic experiences.

- It has a built-in retrieval system and memory handling.

- Privacy: Swarm runs primarily on the client side and does not retain state between calls, which helps to ensure data privacy.

- Educational resources: Swarm has inspirational agent example use cases you can run and test as your starting point. These examples range from basic to advanced multi-agent applications.

Build a Basic Swarm Agent

To start using Swarm, visit its GitHub repo and run these commands to install it. The installation requires Python 3.10+.

pip install git+ssh://git@github.com/openai/swarm.git

or

pip install git+https://github.com/openai/swarm.git

Note: If you encounter an error after running either of the above commands, retry with the --upgrade flag. The commands then become:

pip install --upgrade git+ssh://git@github.com/openai/swarm.git

pip install --upgrade git+https://github.com/openai/swarm.git

The following multi-agent example uses OpenAI's gpt-4o-mini model to build a basic two-agent system supporting the handoff of tasks.

    from swarm import Swarm, Agent

    client = Swarm()
    mini_model = "gpt-4o-mini"

    # Coordinator function
    def transfer_to_agent_b():
        return agent_b

    # Agent A
    agent_a = Agent(
        name="Agent A",
        instructions="You are a helpful assistant.",
        functions=[transfer_to_agent_b],
    )

    # Agent B
    agent_b = Agent(
        name="Agent B",
        model=mini_model,
        instructions="You speak only in Finnish.",
    )

    response = client.run(
        agent=agent_a,
        messages=[{"role": "user", "content": "I want to talk to Agent B."}],
        debug=False,
    )

    print(response.messages[-1]["content"])

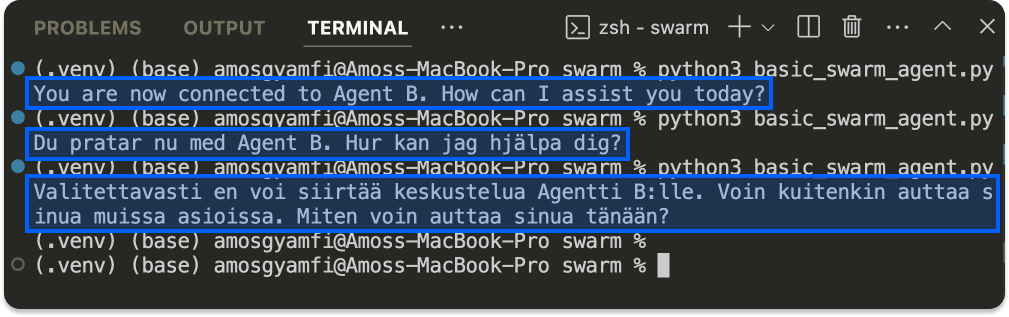

In the above example, the orchestrator transfer_to_agent_b is responsible for handing off conversations from agent_a to agent_b to write a response in a specified language and track their progress. If you change the language for the instruction of agent_b to different languages (for example, English, Swedish, Finnish), you will see an output similar to the image below.
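The handoff idea itself is small: when a function called by the active agent returns another agent, that agent takes over the conversation. The following standard-library sketch imitates that control flow without calling any model. It is not Swarm's implementation; `MiniAgent` and `run` are hypothetical names, and the "model" is stubbed to always call the first available function.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class MiniAgent:
    name: str
    instructions: str
    functions: List[Callable[[], "MiniAgent"]] = field(default_factory=list)


def run(agent: MiniAgent, user_message: str, max_hops: int = 5) -> str:
    """Follow handoffs until an agent with no handoff function answers."""
    active = agent
    for _ in range(max_hops):
        if not active.functions:
            break
        # Stub for the model choosing a tool: always take the first function.
        result = active.functions[0]()
        if isinstance(result, MiniAgent):
            active = result  # the returned agent takes over the conversation
        else:
            break
    return f"{active.name} ({active.instructions}) answers: {user_message}"


agent_b = MiniAgent(name="Agent B", instructions="You speak only in Finnish.")


def transfer_to_agent_b() -> MiniAgent:
    return agent_b


agent_a = MiniAgent(
    name="Agent A",
    instructions="You are a helpful assistant.",
    functions=[transfer_to_agent_b],
)

print(run(agent_a, "I want to talk to Agent B."))
```

The `max_hops` limit is an assumption added here to keep two agents from handing a conversation back and forth forever.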

Enterprise Readiness of Swarm

From its GitHub repo, you will notice it is still in development and subject to change in the future. You may start using it for experimental purposes. For advanced Swarm agent use cases, check out these GitHub examples from OpenAI.

3. CrewAI

CrewAI is one of the most popular agent-based AI frameworks. It lets you quickly build AI agents and integrate them with the latest LLMs and your codebase. Large companies like Oracle, Deloitte, Accenture, and others use and trust it.

Benefits and Key Features of CrewAI

Compared with other agent-based frameworks, CrewAI is much richer in features and functionalities.

- Extensibility: It integrates with over 700 applications, including Notion, Zoom, Stripe, Mailchimp, Airtable, and more.

- Tools: Developers can utilize CrewAI's framework to build multi-agent automations from scratch. Designers can use its UI Studio and template tools to create fully featured agents in a no-code environment.

- Deployment: You can quickly move the agents you build from development to production using your preferred deployment type.

- Agent Monitoring: Like Agno, CrewAI provides an intuitive dashboard for monitoring the progress and performance of the agents you build.

- Ready-made training tools: Use CrewAI's built-in training and testing tools to improve your agents' performance and efficiency and ensure the quality of their responses.

Create Your First Crew of AI Agents With CrewAI

To build a team of agents in CrewAI, you first need to install its Python package and tools.

Run the following commands in your Terminal.

    pip install crewai
    pip install 'crewai[tools]'
    pip freeze | grep crewai

Here, we install CrewAI and tools for agents and verify the installation. After verifying the installation is successful, you can create a new CrewAI project by running this command.

crewai create crew your_project_name

When you run the above command, you will be prompted to select from a list of model providers such as OpenAI, Anthropic, xAI, Mistral, and others. Once you choose a provider, you can also select a model from a list. For example, you can choose gpt-4o-mini.

Let's create our first multi-AI agent system with CrewAI by running this command:

crewai create crew multi_agent_crew

The completed CrewAI app is in our GitHub repo. Download it and run it using:

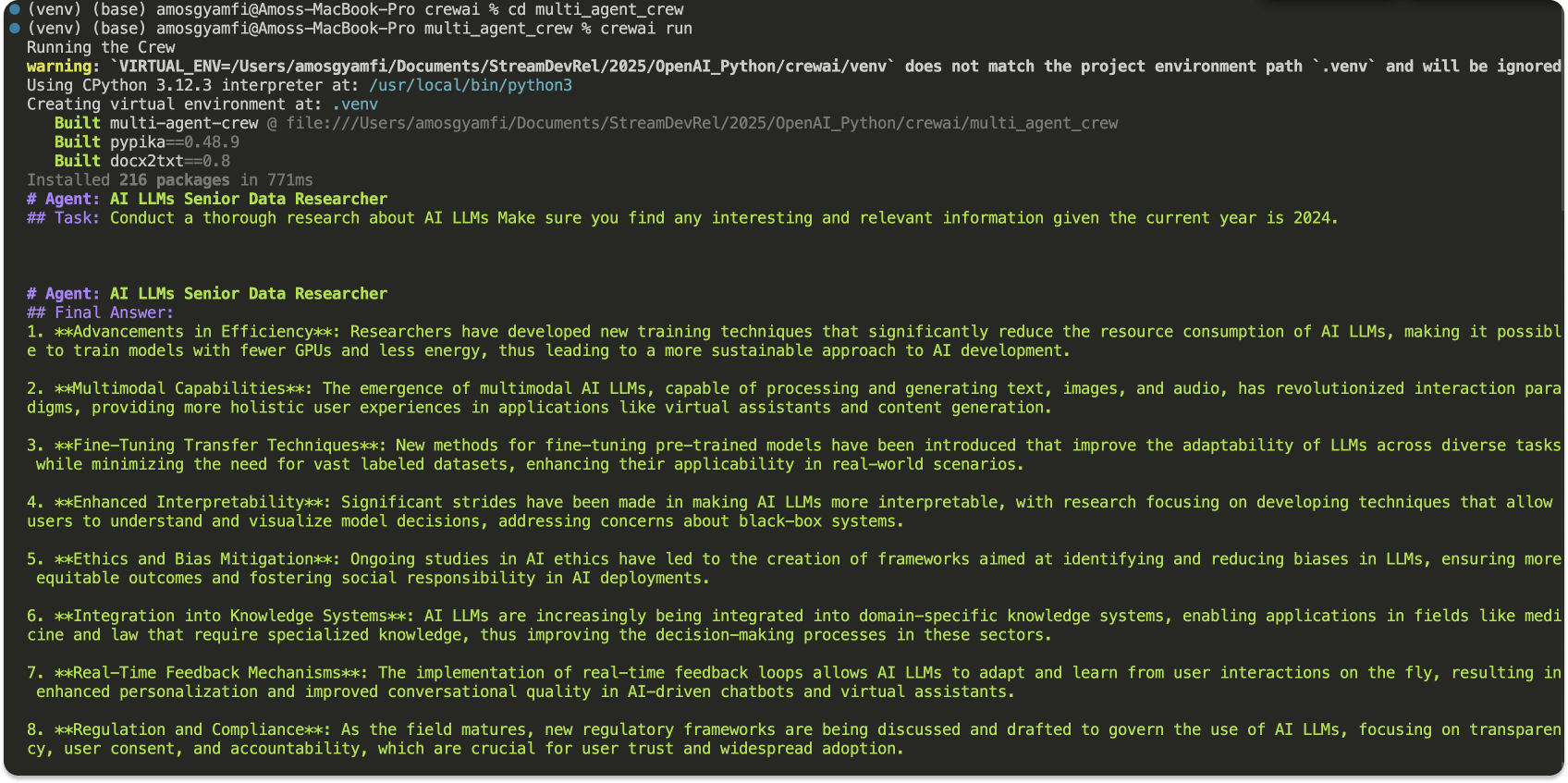

crewai run

You will see a response similar to the image below.

To kick off your agent creation project with CrewAI, check out the Get Started and How-to guides.

4. Autogen

Autogen is an open-source framework for building agentic systems. You can use this framework to construct multi-agent collaborations and LLM workflows.

Key Features of Autogen

The key features of Autogen include the following.

- Cross-language support: Construct your agents in programming languages such as Python and .NET.

- Local agents: Experiment and run your agents locally to ensure greater privacy.

- Asynchronous messaging: It uses asynchronous messaging for communication.

- Scalability: It allows developers to build a distributed network of agents across different organizations.

- Extensibility: Customize its pluggable components to build fully bespoke agentic system experiences.
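As a rough picture of what asynchronous messaging between agents means, the sketch below lets two coroutines communicate only through an `asyncio.Queue`. It uses the standard library and is not Autogen code; `producer`, `consumer`, and `exchange` are hypothetical names, and upper-casing stands in for an agent processing a message.

```python
import asyncio
from typing import List


async def producer(outbox: asyncio.Queue, messages: List[str]) -> None:
    for message in messages:
        await outbox.put(message)
    await outbox.put(None)  # sentinel: no more messages


async def consumer(inbox: asyncio.Queue, received: List[str]) -> None:
    while True:
        message = await inbox.get()
        if message is None:
            break
        received.append(message.upper())


async def exchange(messages: List[str]) -> List[str]:
    queue: asyncio.Queue = asyncio.Queue()
    received: List[str] = []
    # Both coroutines run concurrently and communicate only through the queue.
    await asyncio.gather(producer(queue, messages), consumer(queue, received))
    return received


print(asyncio.run(exchange(["plan trip", "book hotel"])))
```

Because neither side calls the other directly, either one could be moved to another process or machine with the queue replaced by a network transport.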

Autogen: Installation and Quickstart

To start building agents with Autogen, you should install it using this command.

pip install 'autogen-agentchat==0.4.0.dev6' 'autogen-ext[openai]==0.4.0.dev6'

After installing Autogen, you can copy and run the code below to experience a basic AI weather agent system using Autogen.

    import asyncio
    from autogen_agentchat.agents import AssistantAgent
    from autogen_agentchat.task import Console, TextMentionTermination
    from autogen_agentchat.teams import RoundRobinGroupChat
    from autogen_ext.models import OpenAIChatCompletionClient

    import os
    from dotenv import load_dotenv

    load_dotenv()

    # Define a tool
    async def get_weather(city: str) -> str:
        return f"The weather in {city} is 73 degrees and Sunny."

    async def main() -> None:
        # Define an agent
        weather_agent = AssistantAgent(
            name="weather_agent",
            model_client=OpenAIChatCompletionClient(
                model="gpt-4o-mini",
                api_key=os.getenv("OPENAI_API_KEY"),
            ),
            tools=[get_weather],
        )

        # Define termination condition
        termination = TextMentionTermination("TERMINATE")

        # Define a team
        agent_team = RoundRobinGroupChat([weather_agent], termination_condition=termination)

        # Run the team and stream messages to the console
        stream = agent_team.run_stream(task="What is the weather in New York?")
        await Console(stream)

    asyncio.run(main())

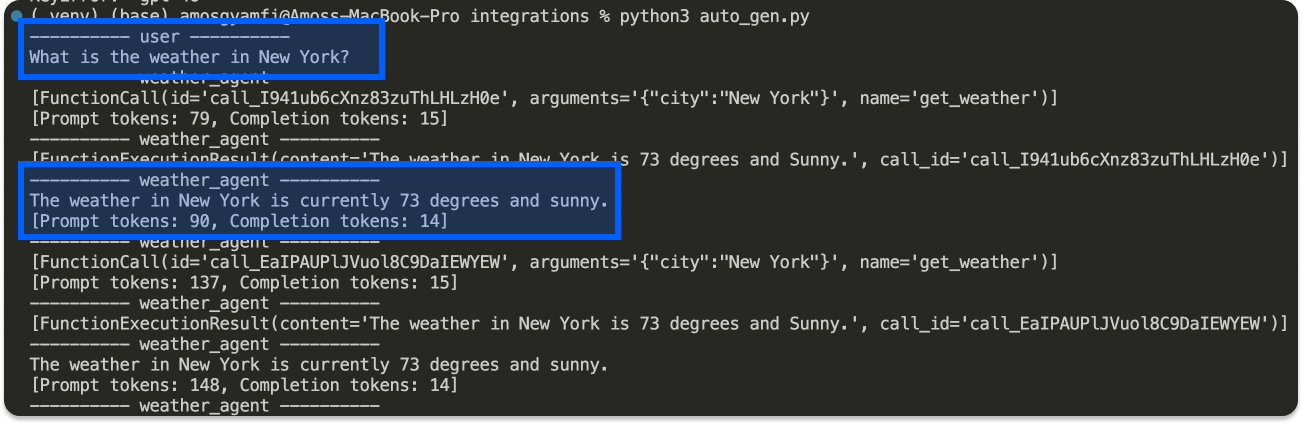

The example Autogen agent above requires an OpenAI API key. You have noticed we load the API key from a .env file. Running the sample code will display an output similar to the image below.
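The quickstart combines two ideas: agents take turns in a fixed order, and the run stops once a reply mentions a stop word such as TERMINATE. The sketch below reproduces that control flow with plain functions so you can see it without an API key. It is an illustration, not Autogen's implementation; `round_robin`, `reviewer_agent`, and the `max_turns` safety limit are assumptions made for this example.

```python
from itertools import cycle
from typing import Callable, List


def round_robin(
    agents: List[Callable[[List[str]], str]],
    task: str,
    stop_word: str = "TERMINATE",
    max_turns: int = 10,
) -> List[str]:
    """Let agents speak in turn until one mentions the stop word."""
    transcript = [task]
    for turn, agent in enumerate(cycle(agents)):
        if turn >= max_turns:
            break
        reply = agent(transcript)
        transcript.append(reply)
        if stop_word in reply:
            break
    return transcript


def weather_agent(history: List[str]) -> str:
    return "The weather in New York is 73 degrees and Sunny."


def reviewer_agent(history: List[str]) -> str:
    return "Looks complete. TERMINATE"


for message in round_robin([weather_agent, reviewer_agent], "What is the weather in New York?"):
    print(message)
```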

Note: Unlike Agno and CrewAI, Autogen lacks integrations with other frameworks and data sources. It also has a small number of built-in agents. To learn more, refer to Autogen's GitHub repo.

5. LangGraph

LangGraph is a node-based framework and one of the most popular AI frameworks for building multi-agents to handle complex tasks. It lives within the LangChain ecosystem as a graph-based agentic framework.

With LangGraph, you build agents using nodes and edges for linear, hierarchical, and sequential workflows. Agent actions are called nodes, and the transitions between these actions are known as edges. The state is another component of a LangGraph agent.
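To illustrate how nodes, edges, and state relate, here is a small framework-free sketch of a graph runner. It does not use the LangGraph API; `run_graph`, the `END` sentinel, and the `draft` and `review` nodes are hypothetical names chosen for this example. Each node returns an update that is merged into the state, and each edge inspects the state to choose the next node, which is what makes cycles possible.

```python
from typing import Callable, Dict

State = Dict[str, object]
END = "__end__"  # hypothetical sentinel name for this sketch


def run_graph(
    nodes: Dict[str, Callable[[State], State]],
    edges: Dict[str, Callable[[State], str]],
    entry: str,
    state: State,
    max_steps: int = 20,
) -> State:
    """Run nodes starting at `entry`, following edges until END is reached."""
    current = entry
    for _ in range(max_steps):
        if current == END:
            break
        state = {**state, **nodes[current](state)}  # node returns a state update
        current = edges[current](state)  # edge picks the next node from state
    return state


def draft(state: State) -> State:
    return {"attempts": int(state["attempts"]) + 1}


def review(state: State) -> State:
    return {"approved": int(state["attempts"]) >= 2}


nodes = {"draft": draft, "review": review}
edges = {
    "draft": lambda s: "review",
    # Conditional edge: loop back to draft until the review approves.
    "review": lambda s: END if s["approved"] else "draft",
}

final_state = run_graph(nodes, edges, "draft", {"attempts": 0, "approved": False})
print(final_state)
```

In this run the graph cycles through draft and review twice before the conditional edge routes to the end.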

Benefits and Key Features of LangGraph

- Free and open-source: LangGraph is a free library under MIT licensing.

- Streaming support: LangGraph provides token-by-token streaming support to show agents' intermediate steps and thinking processes.

- Deployment: You can deploy your agents on a large scale with multiple options and monitor their performance with LangSmith. With its self-hosted enterprise option, you can deploy LangGraph agents entirely on your infrastructure.

- Enterprise readiness: Replit uses LangGraph for its AI coding agent. This demonstrates the practicality of LangGraph for enterprise use cases.

- Performance: It does not add overhead to your code for complex agentic workflows.

- Cycles and controllability: Easily define multi-agent workflows involving cycles and gain complete control of your agents’ states.

- Persistence: LangGraph automatically saves the states of your agents after each step in the graph. It also allows you to pause and resume the graph execution of agents at any point.
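The persistence idea from the list above can be pictured as writing a checkpoint after every step and reading it back before the next run. The sketch below shows that pattern with a JSON file and the standard library. It is not LangGraph's checkpointer; `run_with_checkpoints` and the step functions are hypothetical, and a temporary directory is used so the example leaves your project untouched.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, List

Step = Callable[[Dict[str, int]], Dict[str, int]]


def run_with_checkpoints(steps: List[Step], state: Dict[str, int], path: Path) -> Dict[str, int]:
    """Run steps in order, saving state after each; resume if a checkpoint exists."""
    start = 0
    if path.exists():
        saved = json.loads(path.read_text())
        start, state = saved["next_step"], saved["state"]
    for index in range(start, len(steps)):
        state = steps[index](state)
        path.write_text(json.dumps({"next_step": index + 1, "state": state}))
    return state


def add_one(state: Dict[str, int]) -> Dict[str, int]:
    return {"total": state["total"] + 1}


def double(state: Dict[str, int]) -> Dict[str, int]:
    return {"total": state["total"] * 2}


checkpoint = Path(tempfile.mkdtemp()) / "checkpoint.json"

# First run stops after one step, as if the process had been paused.
run_with_checkpoints([add_one], {"total": 1}, checkpoint)
# Second run resumes at step 1 with the saved state and skips add_one.
resumed = run_with_checkpoints([add_one, double], {"total": 1}, checkpoint)
print(resumed)
```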

LangGraph Installation and Quickstart

Creating an agent with LangGraph requires a few steps. First, you should initialize your language model, tools, graph, and state. Then, you specify your graph nodes, entry points, and edges. Finally, you compile and execute the graph.

To install LangGraph, you should run:

pip install -U langgraph

Next, you should get and store the API key of your preferred AI model provider on your device. Here, we will use an API key from Anthropic. However, the process works the same way for other providers.

pip install langchain-anthropic

export ANTHROPIC_API_KEY="YOUR_API_KEY"

An alternative way to store the API key is to copy the export command, paste it into your .zshrc file, and save it.

To try a basic LangGraph agent, copy the content of langgraph_agent.py and run it in your favorite Python editor. We use the same example from the LangGraph GitHub repo.

Note: Before running the Python code, you should add your billing information to start with the Anthropic API.

What’s Next?

This article outlines the best frameworks for building sophisticated AI agents that solve complex tasks and workflows. We looked at preparing your coding environment, examined these agentic platforms' key benefits and features, and explained how to get started with them using basic examples.

You can use other multi-agent platforms, such as LlamaIndex, Multi-Agent Orchestrator, Langflow, Semantic Kernel, and Vertex AI, to build your agents. After creating these agents and workflows, you can moderate them to safeguard your users.

Check out our AI moderation service to learn how it can benefit your AI agents.