Introduction to Simulcast

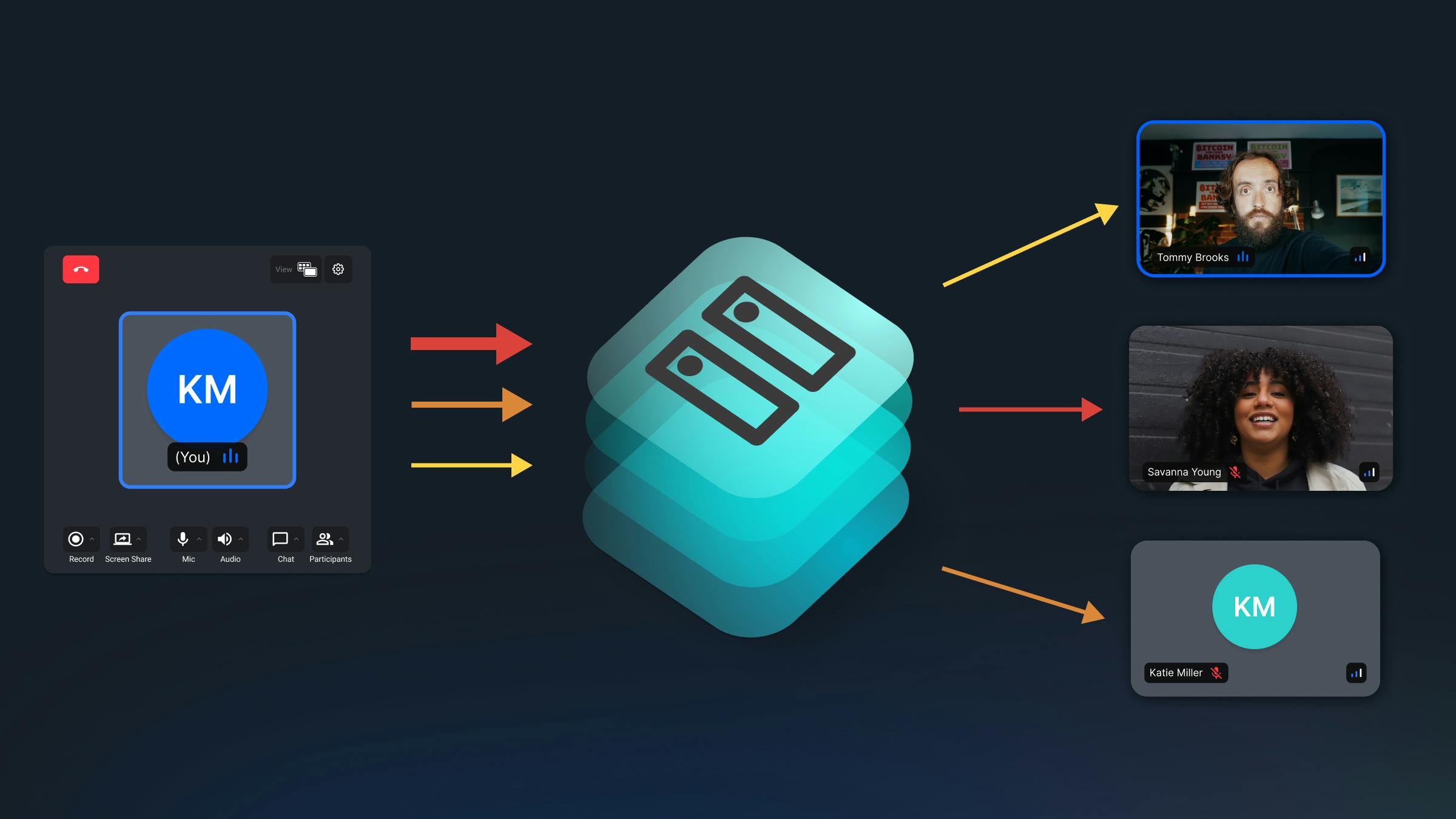

Simulcast represents a crucial optimization technique in WebRTC architecture that solves the heterogeneous network problem. While the basic SFU architecture provides an efficient way to distribute media streams, it faces challenges when participants have varying network capabilities. Simulcast addresses this by allowing each participant to send multiple versions of their video stream at different quality levels, enabling the SFU to forward the most appropriate version to each recipient based on their network conditions.

The Core Concept

Think of simulcast as a multilingual broadcast in which the same content is transmitted in several languages at once. In the same way, a video stream is encoded at multiple quality levels and sent in parallel, allowing the SFU to select the most appropriate version for each recipient. High-bandwidth users get the best quality, while those with limited connectivity still receive a stable stream.

The Technical Problem: Heterogeneous Networks

In real-world video conferencing scenarios, participants join calls with vastly different network capabilities. Some users might have high-speed fiber connections, while others connect through congested mobile networks or unreliable WiFi. This heterogeneity creates a fundamental challenge for video distribution systems.

Understanding the Slow User Problem

In a basic SFU architecture without simulcast, the server forwards a single stream at one quality level from each sender to all receivers. This one-size-fits-all approach leads to several critical issues:

The Lowest Common Denominator Effect

Without simulcast, the system faces a difficult choice. If it sends high-quality video, users with poor connections experience packet loss, frozen frames, and stuttering playback. If it reduces quality to accommodate the weakest connection, users with good bandwidth receive unnecessarily degraded video. In practice, the entire call operates at the level of the participant with the worst connection.
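A small sketch makes the lowest-common-denominator effect concrete. It reuses the example ladder from later in this article (2.5 Mbps, 500 kbps, 150 kbps) and assumes each receiver simply gets the highest tier that fits its link; the receiver capacities and helper names are hypothetical.

```javascript
// Example tier bitrates in bps, highest first (assumed ladder)
const TIERS = [2500000, 500000, 150000];

// Highest tier that fits within a given link capacity (0 if none fits)
function bestTierFor(capacity) {
  return TIERS.find(tier => tier <= capacity) ?? 0;
}

// Without simulcast: one shared stream must fit the weakest receiver
function singleStreamBitrates(capacities) {
  const shared = bestTierFor(Math.min(...capacities));
  return capacities.map(() => shared);
}

// With simulcast: each receiver gets the best tier its own link supports
function simulcastBitrates(capacities) {
  return capacities.map(bestTierFor);
}

const capacities = [5000000, 3000000, 400000]; // fiber, cable, congested mobile
console.log(singleStreamBitrates(capacities)); // [ 150000, 150000, 150000 ]
console.log(simulcastBitrates(capacities));    // [ 2500000, 2500000, 150000 ]
```

In the single-stream case, the one congested mobile user drags both well-connected users down to 150 kbps.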

Network Congestion Cascade

WebRTC includes built-in error recovery mechanisms such as Negative Acknowledgement (NACK), which requests retransmission of lost packets, and Picture Loss Indication (PLI), which asks the sender for a new keyframe when a receiver can no longer decode. Without simulcast, all of this feedback ultimately acts on the single encoding that everyone shares: retransmissions add load to already congested paths, and every PLI from a lossy receiver forces the sender to produce a large keyframe for all participants. When several participants have poor connections, this cascade degrades performance for everyone.

Quality Adaptation Challenges

Traditional systems attempt to solve these problems through dynamic quality adaptation, where the sender reduces quality when network conditions deteriorate. However, this approach affects all recipients equally, even those with excellent connections. The result is a frustrating experience where video quality fluctuates based on the weakest link in the network.

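```javascript
// Without simulcast: a problematic approach
class BasicStreamManager {
  constructor(minimumRequired = 1000000) {
    this.participants = new Map();          // id -> participant
    this.streamQuality = 'high';            // One fixed quality shared by all
    this.minimumRequired = minimumRequired; // bps assumed necessary for 'high'
  }

  async adjustForSlowUser(participant) {
    // A single slow receiver forces the shared stream down for everyone
    if (participant.bandwidth < this.minimumRequired) {
      this.streamQuality = 'low';
      await this.notifyAllParticipants('quality_degraded');
    }
  }

  async notifyAllParticipants(event) {
    // Placeholder delivery; assumes participants may expose a notify() method
    for (const participant of this.participants.values()) {
      participant.notify?.(event);
    }
  }
}
```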

How Simulcast Works

The Fundamental Concept

Simulcast solves the heterogeneous network problem by having each sender encode and transmit multiple versions of their video simultaneously. Instead of sending a single stream that must work for everyone, the sender creates several streams at different quality levels. The SFU then intelligently forwards the most appropriate version to each recipient based on their individual network conditions.

Multiple Encoding Streams

The process begins at the sender's device, which captures video from the camera and creates multiple encoded versions. Each version represents a different quality tier:

- High Quality: Full resolution, high bitrate for users with excellent connections

- Medium Quality: Reduced resolution and bitrate for average connections

- Low Quality: Minimal resolution and bitrate for poor connections

These streams are encoded in parallel, with each lower tier typically halving the resolution in each dimension (a quarter of the pixels) and lowering the bitrate accordingly. For example, if the high-quality stream is 720p at 2.5 Mbps, the medium might be 360p at 500 kbps and the low might be 180p at 150 kbps.

```javascript
class SimulcastEncoder {
  constructor() {
    // One entry per simulcast layer; each rid identifies that RTP stream
    this.encodings = [
      { rid: 'high', maxBitrate: 2500000, scaleResolutionDownBy: 1 },
      { rid: 'medium', maxBitrate: 500000, scaleResolutionDownBy: 2 },
      { rid: 'low', maxBitrate: 150000, scaleResolutionDownBy: 4 }
    ];
  }

  setupSimulcast(pc, videoTrack) {
    // RTCRtpSender has no public constructor, and setParameters() cannot add
    // encodings after negotiation. Simulcast layers must be declared up front
    // through sendEncodings when the transceiver is created.
    const transceiver = pc.addTransceiver(videoTrack, {
      direction: 'sendonly',
      sendEncodings: this.encodings
    });
    return transceiver.sender;
  }
}
```
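Since scaleResolutionDownBy divides both width and height, the 1, 2, and 4 factors above turn a 720p capture into the 720p, 360p, and 180p ladder described earlier. The helper below is a hypothetical sketch that computes these nominal sizes; browsers may round or align the real encoded dimensions differently.

```javascript
// Nominal output size of each simulcast layer (hypothetical helper)
function layerDimensions(width, height, encodings) {
  return encodings.map(({ rid, scaleResolutionDownBy = 1 }) => ({
    rid,
    width: Math.floor(width / scaleResolutionDownBy),
    height: Math.floor(height / scaleResolutionDownBy)
  }));
}

const ladder = layerDimensions(1280, 720, [
  { rid: 'high', scaleResolutionDownBy: 1 },
  { rid: 'medium', scaleResolutionDownBy: 2 },
  { rid: 'low', scaleResolutionDownBy: 4 }
]);
for (const { rid, width, height } of ladder) {
  console.log(`${rid}: ${width}x${height}`);
}
// high: 1280x720
// medium: 640x360
// low: 320x180
```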

SFU Stream Selection

Once the sender has encoded multiple quality tiers, the SFU takes on the crucial role of stream selection. The SFU doesn't simply forward all streams to all participants, because that would consume the very downstream bandwidth simulcast is meant to save. Instead, it decides for each recipient which quality tier to forward.

Selection Criteria

The SFU considers multiple factors when selecting stream quality:

- Available Bandwidth: The recipient's current downlink capacity is the primary factor. The SFU estimates it continuously from RTCP feedback, such as transport-wide congestion control (transport-cc) reports or REMB messages, and from its own network statistics.

- Display Requirements: There's no point sending 1080p video to a participant viewing it in a small thumbnail, so the SFU considers how the video will be displayed.

- Device Capabilities: Some devices may struggle to decode high-resolution video due to CPU or GPU limitations.

- Network Stability: Even if bandwidth is sufficient, unstable connections with high jitter or packet loss may require lower quality streams for smooth playback.

- User Preferences: Some applications allow users to manually select quality preferences, which the SFU must honor.

The Selection Process

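The selection process is dynamic and continuous. As network conditions change, the SFU moves each recipient to a different tier. Because a decoder can only start on a new layer at a keyframe, the SFU makes the switch when the target layer delivers one (requesting it with a PLI if necessary) and rewrites RTP sequence numbers and timestamps so the recipient sees one uninterrupted stream. This adaptability lets each participant receive the best quality their connection can support.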

```javascript
class SimulcastSelector {
  constructor() {
    // Minimum receiver bandwidth (bps) and display height (px) per tier
    this.qualityThresholds = {
      high: { minBandwidth: 1500000, minHeight: 720 },
      medium: { minBandwidth: 500000, minHeight: 480 },
      low: { minBandwidth: 150000, minHeight: 240 }
    };
  }

  selectStreamQuality(participant, availableStreams) {
    // getMetrics() is assumed to return bandwidth (bps),
    // displaySize (rendered height in px) and cpuUsage (percent)
    const { bandwidth, displaySize, cpuUsage } = participant.getMetrics();

    // Consider multiple factors for quality selection
    const factors = {
      networkCapacity: this.evaluateBandwidth(bandwidth),
      displayRequirements: this.evaluateDisplaySize(displaySize),
      processingPower: this.evaluateCPU(cpuUsage)
    };
    return this.determineOptimalQuality(factors, availableStreams);
  }

  // Pass-through evaluators; a real SFU would smooth or normalize these
  evaluateBandwidth(bps) { return bps; }
  evaluateDisplaySize(heightPx) { return heightPx; }
  evaluateCPU(percent) { return percent; }

  determineOptimalQuality(factors, streams) {
    const { high, medium } = this.qualityThresholds;
    // Select the highest tier whose constraints are all met
    if (factors.networkCapacity >= high.minBandwidth &&
        factors.displayRequirements >= high.minHeight &&
        factors.processingPower < 80) { // receiver CPU below 80%
      return streams.high;
    }
    if (factors.networkCapacity >= medium.minBandwidth) {
      return streams.medium;
    }
    return streams.low;
  }
}
```

Implementation Details

Understanding the Architecture

Before diving into the code, it's important to understand how simulcast is implemented in WebRTC. The architecture involves three main components:

- Sender (Client): Captures video and produces multiple encoded streams

- SFU (Server): Receives all streams and forwards appropriate ones to recipients

- Receiver (Client): Receives and decodes the selected stream

The key insight is that all the complexity of managing multiple streams is hidden from the receiver. From the receiver's perspective, it is simply getting a single video stream; the SFU handles all the quality selection behind the scenes.

Client-Side Configuration

Setting up simulcast requires configuration at the moment the track is added to the peer connection, before the SDP offer is created. The sender passes the list of encodings as sendEncodings to addTransceiver(); the RTCPeerConnection constructor does not accept encodings, and setParameters() can only modify encodings that were negotiated, not add new ones.

```javascript
class SimulcastClient {
  async initializeSimulcast() {
    const stream = await navigator.mediaDevices.getUserMedia({
      video: {
        width: { ideal: 1280 },
        height: { ideal: 720 }
      }
    });
    const videoTrack = stream.getVideoTracks()[0];

    // The constructor takes ICE/transport configuration, not encodings
    const pc = new RTCPeerConnection();

    // Declare the simulcast layers when adding the track
    const transceiver = pc.addTransceiver(videoTrack, {
      direction: 'sendonly',
      streams: [stream],
      sendEncodings: [
        { rid: 'high', active: true, maxBitrate: 2500000, scaleResolutionDownBy: 1 },
        { rid: 'medium', active: true, maxBitrate: 500000, scaleResolutionDownBy: 2 },
        { rid: 'low', active: true, maxBitrate: 150000, scaleResolutionDownBy: 4 }
      ]
    });

    return { pc, sender: transceiver.sender };
  }
}
```
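When simulcast is negotiated, each layer appears in the offer's video section as an a=rid line (for example "a=rid:high send"), and an a=simulcast line lists the layers, using the syntax of the simulcast specification listed under Further Reading: ";" separates streams, "," separates alternatives, and "~" marks a stream that starts paused. The parser below is a hypothetical debugging helper, not part of any WebRTC API; it only looks at the first a=simulcast line it finds.

```javascript
// Hypothetical helper: extract send-direction simulcast rids from SDP text.
// Returns one array per simulcast stream (alternatives grouped together).
function parseSimulcastSend(sdp) {
  for (const line of sdp.split(/\r?\n/)) {
    const match = line.match(/^a=simulcast:.*?\bsend (\S+)/);
    if (!match) continue;
    // ';' separates streams, ',' separates alternatives for one stream,
    // and a leading '~' marks a stream that starts paused
    return match[1].split(';').map(stream =>
      stream.split(',').map(rid => rid.replace(/^~/, ''))
    );
  }
  return []; // no send-direction simulcast in this SDP
}

const offerFragment = [
  'm=video 9 UDP/TLS/RTP/SAVPF 96',
  'a=rid:high send',
  'a=rid:medium send',
  'a=rid:low send',
  'a=simulcast:send high;medium;low'
].join('\r\n');
console.log(parseSimulcastSend(offerFragment)); // [ [ 'high' ], [ 'medium' ], [ 'low' ] ]
```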

Server-Side Management

The SFU plays a critical role in simulcast by managing the multiple incoming streams from each sender and making intelligent forwarding decisions. Unlike a simple forwarding server, a simulcast-enabled SFU must maintain detailed state about each participant's capabilities, preferences, and current network conditions.

The server-side management involves several key responsibilities:

- Stream Registry: The SFU must track all available quality tiers for each sender, maintaining a mapping between participants and their streams.

- Subscription Management: It needs to manage which recipients are subscribed to which quality tiers, handling subscription changes dynamically.

- Quality Decision Making: The SFU must continuously evaluate network conditions and make quality decisions for each recipient independently.

- Stream Switching: When quality changes are needed, the SFU must seamlessly switch between streams without causing interruption or artifacts.

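```javascript
class SimulcastManager {
  constructor() {
    this.streamLayers = new Map();           // publisherId -> { high, medium, low }
    this.participantPreferences = new Map(); // subscriberId -> preferred tier
    this.subscribers = new Map();            // subscriberId -> subscriber state
  }

  async handleIncomingStreams(participantId, streams) {
    // Store all quality layers, keyed by their rid
    this.streamLayers.set(participantId, {
      high: streams.find(s => s.rid === 'high'),
      medium: streams.find(s => s.rid === 'medium'),
      low: streams.find(s => s.rid === 'low')
    });

    // Update routing for all subscribers
    await this.updateStreamRouting(participantId);
  }

  async updateStreamRouting(publisherId) {
    const layers = this.streamLayers.get(publisherId);
    if (!layers) return;

    // For each subscriber, select appropriate quality
    for (const [subscriberId, subscriber] of this.subscribers) {
      if (subscriberId === publisherId) continue; // don't echo a publisher's own video
      const quality = this.selectQualityForSubscriber(subscriberId, subscriber);
      // Fall back to the lowest layer if the chosen one was not published
      const selectedStream = layers[quality] ?? layers.low;
      await this.forwardStream(selectedStream, subscriber);
    }
  }

  selectQualityForSubscriber(subscriberId, subscriber) {
    // Placeholder policy: honor an explicit preference, else use bandwidth (bps)
    const preferred = this.participantPreferences.get(subscriberId);
    if (preferred) return preferred;
    if (subscriber.bandwidth >= 1500000) return 'high';
    return subscriber.bandwidth >= 500000 ? 'medium' : 'low';
  }

  async forwardStream(stream, subscriber) {
    // Placeholder: a real SFU would rewrite RTP headers and relay packets
    subscriber.currentStream = stream;
  }
}
```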
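The Stream Switching responsibility hides a detail: a receiver's decoder can only start on a new layer at a keyframe, and it expects RTP sequence numbers to continue without gaps. The sketch below (hypothetical names; it assumes the SFU can tell which packet starts a keyframe) keeps forwarding the old layer until the target layer produces a keyframe, then remaps sequence numbers so the receiver sees one continuous stream. A production SFU also rewrites RTP timestamps and SSRC, copes with loss and reordering, and sends a PLI to the publisher instead of waiting for the next natural keyframe.

```javascript
// Hypothetical per-subscriber layer switcher for one publisher's video
class LayerSwitcher {
  constructor(initialRid) {
    this.targetRid = initialRid; // layer the selection logic wants
    this.activeRid = null;       // layer currently being forwarded
    this.offset = 0;             // source seq + offset = output seq
    this.lastOutSeq = -1;        // last sequence number sent downstream
  }

  requestLayer(rid) {
    this.targetRid = rid; // takes effect at the target layer's next keyframe
  }

  // packet: { rid, seq, keyframe }, where keyframe marks the first packet of
  // a keyframe. Returns the rewritten sequence number, or null to drop it.
  forward(packet) {
    if (packet.rid === this.targetRid && packet.rid !== this.activeRid && packet.keyframe) {
      // Switch layers: continue numbering right after the last packet sent
      this.activeRid = packet.rid;
      this.offset = this.lastOutSeq + 1 - packet.seq;
    }
    if (packet.rid !== this.activeRid) return null; // not the forwarded layer
    const outSeq = (packet.seq + this.offset) & 0xffff; // 16-bit wraparound
    this.lastOutSeq = outSeq;
    return outSeq;
  }
}

const sw = new LayerSwitcher('low');
console.log(sw.forward({ rid: 'low', seq: 100, keyframe: true }));    // 0
console.log(sw.forward({ rid: 'low', seq: 101, keyframe: false }));   // 1
sw.requestLayer('high');
console.log(sw.forward({ rid: 'high', seq: 7000, keyframe: false })); // null (waits for a keyframe)
console.log(sw.forward({ rid: 'low', seq: 102, keyframe: false }));   // 2
console.log(sw.forward({ rid: 'high', seq: 7001, keyframe: true }));  // 3
console.log(sw.forward({ rid: 'low', seq: 103, keyframe: false }));   // null
```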

Bandwidth Adaptation

Understanding Quality Switching

One of the most powerful features of simulcast is its ability to adapt dynamically to changing network conditions. Unlike traditional systems where quality changes affect all participants, simulcast allows per-recipient adaptation. This means that if one participant's network deteriorates, only their received quality is affected—everyone else continues to receive optimal quality.

The Adaptation Process

Quality adaptation in simulcast follows a continuous feedback loop:

- Monitoring: The SFU constantly monitors each recipient's network conditions through RTCP feedback, measuring metrics like packet loss, round-trip time, and jitter.

- Evaluation: Based on these metrics, the SFU evaluates whether the current quality tier is appropriate or if a change is needed.

- Decision: The system decides whether to upgrade to a higher quality tier, downgrade to a lower one, or maintain the current quality.

- Transition: If a change is needed, the SFU seamlessly switches to forwarding a different quality tier without interrupting the stream.

Stability Considerations

A critical aspect of quality switching is avoiding oscillation, because rapid switching between quality levels creates a poor user experience. To prevent it, systems combine hysteresis (separate thresholds for upgrading and downgrading) with a hold time that requires network conditions to remain stable for a period before a change is made.

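```javascript
class DynamicQualityAdapter {
  constructor() {
    this.qualityHistory = new Map(); // participantId -> pending suggestions
    this.switchThreshold = 3;        // Require stable conditions for N seconds
  }

  async monitorAndAdapt(participant) {
    const metrics = await this.collectMetrics(participant);
    const suggestedQuality = this.evaluateQuality(metrics);
    if (suggestedQuality !== participant.currentQuality) {
      await this.handleQualityChange(participant, suggestedQuality);
    } else {
      this.qualityHistory.delete(participant.id); // conditions recovered: reset timer
    }
  }

  async handleQualityChange(participant, newQuality) {
    const now = Date.now();
    let history = this.qualityHistory.get(participant.id) || [];
    // A different suggestion restarts the stability timer
    if (history.length && history[history.length - 1].quality !== newQuality) history = [];
    history.push({ quality: newQuality, timestamp: now });
    // Only switch once the same suggestion has held for switchThreshold seconds
    if (now - history[0].timestamp >= this.switchThreshold * 1000) {
      await this.switchQuality(participant, newQuality);
      this.notifyQualityChange(participant, newQuality);
      history = [];
    }
    this.qualityHistory.set(participant.id, history);
  }

  // Hypothetical hooks; getMetrics() is assumed to report bandwidth in bps
  async collectMetrics(participant) { return participant.getMetrics(); }

  evaluateQuality({ bandwidth }) {
    if (bandwidth >= 1500000) return 'high';
    return bandwidth >= 500000 ? 'medium' : 'low';
  }

  async switchQuality(participant, quality) { participant.currentQuality = quality; }

  notifyQualityChange(participant, quality) {
    console.log(`Participant ${participant.id} switched to ${quality}`);
  }
}
```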

Bandwidth Estimation

Accurate bandwidth estimation is the foundation of effective simulcast operation. Without reliable measurements of each participant's network capacity, the SFU cannot make informed decisions about which quality tier to forward. This process is more complex than simply measuring download speed—it requires continuous monitoring of real-time network conditions.

The estimation process involves several key components:

- Continuous Measurement: Rather than relying on one-time speed tests, the system continuously monitors actual throughput by tracking bytes transferred over time.

- Statistical Analysis: Raw measurements are processed to filter out temporary fluctuations and identify sustained trends.

- Multi-Metric Evaluation: Beyond raw bandwidth, the system considers packet loss, jitter, and round-trip time to form a complete picture of network health.

- Predictive Modeling: Advanced systems may use historical data to predict future bandwidth availability, allowing proactive quality adjustments.

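```javascript
class BandwidthEstimator {
  constructor() {
    this.measurements = new Map(); // participantId -> recent samples
    this.windowSize = 5000;        // 5 second window (ms)
  }

  async estimateBandwidth(participant) {
    // participant.getStats() is assumed to resolve to an RTCStatsReport,
    // as RTCPeerConnection.getStats() does; participant.id is also assumed
    const report = await participant.getStats();
    let bytesReceived = 0;
    let bytesSent = 0;
    // Cumulative byte counters live in 'transport' stats entries
    // (availability varies by browser)
    report.forEach(stat => {
      if (stat.type === 'transport') {
        bytesReceived += stat.bytesReceived ?? 0;
        bytesSent += stat.bytesSent ?? 0;
      }
    });

    const currentTime = Date.now();
    const samples = this.measurements.get(participant.id) || [];
    samples.push({ timestamp: currentTime, bytesReceived, bytesSent });

    // Remove old measurements; one history per participant
    const recent = samples.filter(m => currentTime - m.timestamp < this.windowSize);
    this.measurements.set(participant.id, recent);
    return this.calculateBandwidth(recent);
  }

  calculateBandwidth(samples) {
    if (samples.length < 2) return 0;
    const first = samples[0];
    const last = samples[samples.length - 1];
    const duration = (last.timestamp - first.timestamp) / 1000; // seconds
    if (duration <= 0) return 0;
    const bytesTransferred = (last.bytesReceived - first.bytesReceived) +
      (last.bytesSent - first.bytesSent);
    // Observed throughput in bits per second. It only reflects what was
    // actually sent, so it is a lower bound on capacity, not a probe of it.
    return (bytesTransferred * 8) / duration;
  }
}
```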
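The estimator returns raw throughput, which can jump from one sample to the next. One common way to implement the Statistical Analysis step is an exponentially weighted moving average (EWMA). The hypothetical sketch below uses a larger weight for drops than for rises, so the estimate falls quickly under congestion but recovers cautiously; the weights are illustrative, not tuned values.

```javascript
// Hypothetical smoother for bandwidth samples (bps)
class AsymmetricEwma {
  constructor({ alphaUp = 0.1, alphaDown = 0.5 } = {}) {
    this.alphaUp = alphaUp;     // weight of new samples above the estimate
    this.alphaDown = alphaDown; // weight of new samples below the estimate
    this.value = null;
  }

  update(sample) {
    if (this.value === null) {
      this.value = sample; // the first sample seeds the estimate
    } else {
      const alpha = sample < this.value ? this.alphaDown : this.alphaUp;
      this.value = alpha * sample + (1 - alpha) * this.value;
    }
    return this.value;
  }
}

const smoother = new AsymmetricEwma();
for (const sample of [2000000, 2000000, 500000, 2000000, 2000000]) {
  console.log(Math.round(smoother.update(sample)));
}
// prints 2000000, 2000000, 1250000, 1325000, 1392500 (one per line)
```

A single bad sample halves the gap to it immediately, while recovery back toward 2 Mbps takes several intervals, which pairs naturally with the cautious-upgrade hysteresis discussed later.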

Advanced Simulcast Features

Temporal Scalability

While traditional simulcast focuses on spatial scalability (different resolutions), temporal scalability adds another dimension by varying the frame rate. This creates a more granular quality ladder that can better adapt to varying network conditions.

Temporal scalability works by encoding video frames in a hierarchical structure. The base layer carries a subset of frames at a low frame rate (e.g., 7.5 fps of a 30 fps capture), and those frames reference only other base-layer frames. Enhancement layers add the intermediate frames needed to reach higher frame rates (15 fps, 30 fps). Because no lower layer depends on a higher one, enhancement layers can be dropped without breaking decoding. This approach offers several advantages:

- Finer Control: The SFU can drop enhancement layers to reduce bandwidth while keeping motion smooth at a lower frame rate.

- Graceful Degradation: When bandwidth decreases, the system can reduce frame rate before reducing resolution, which often provides a better user experience.

- CPU Efficiency: Lower-powered devices can decode only the base layer, reducing processing requirements.

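```javascript
class TemporalScalabilityManager {
  constructor() {
    this.temporalLayers = [
      { tid: 0, framerate: 7.5 }, // Base layer
      { tid: 1, framerate: 15 },  // Middle layer
      { tid: 2, framerate: 30 }   // Full framerate
    ];
  }

  configureTemporalLayers(pc, videoTrack) {
    // WebRTC-SVC: scalabilityMode is set per encoding. 'L1T3' means one
    // spatial and three temporal layers inside each simulcast stream.
    // Support depends on the browser and the negotiated codec (e.g. VP8).
    return pc.addTransceiver(videoTrack, {
      direction: 'sendonly',
      sendEncodings: [
        { rid: 'high', scaleResolutionDownBy: 1, scalabilityMode: 'L1T3' },
        { rid: 'medium', scaleResolutionDownBy: 2, scalabilityMode: 'L1T3' },
        { rid: 'low', scaleResolutionDownBy: 4, scalabilityMode: 'L1T3' }
      ]
    });
  }

  selectTemporalLayer(bandwidth, cpuUsage) {
    if (bandwidth < 200000 || cpuUsage > 90) {
      return 0; // Base layer only
    } else if (bandwidth < 500000 || cpuUsage > 70) {
      return 1; // Up to middle layer
    }
    return 2; // All layers
  }
}
```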
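The hierarchical structure can be made concrete. With three temporal layers, a common arrangement (the one L1T3 describes) assigns temporal IDs in the repeating sequence 0, 2, 1, 2. An SFU that forwards only frames with an ID at or below a chosen layer therefore delivers a quarter, half, or all of the frames. The sketch assumes that pattern and a 30 fps capture; the function names are hypothetical.

```javascript
// Temporal layer ID of each frame in the repeating 0-2-1-2 pattern (3 layers)
function temporalLayerOf(frameIndex) {
  if (frameIndex % 4 === 0) return 0; // base layer: every 4th frame
  if (frameIndex % 2 === 0) return 1; // middle layer: frames 2, 6, 10, ...
  return 2;                           // top layer: all odd frames
}

// Frame rate a receiver sees when the SFU forwards layers 0..maxTid only
function forwardedFramerate(captureFps, maxTid, framesToSample = 120) {
  let kept = 0;
  for (let i = 0; i < framesToSample; i++) {
    if (temporalLayerOf(i) <= maxTid) kept++;
  }
  return captureFps * (kept / framesToSample);
}

for (const tid of [0, 1, 2]) {
  console.log(`tid <= ${tid}: ${forwardedFramerate(30, tid)} fps`);
}
// tid <= 0: 7.5 fps
// tid <= 1: 15 fps
// tid <= 2: 30 fps
```

These rates match the 7.5, 15, and 30 fps layers listed in the manager above.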

Screen Share Optimization

Screen sharing presents unique challenges that require different simulcast parameters than regular video. Unlike natural video content, screen content has distinct characteristics that affect how it should be encoded and transmitted.

Key differences in screen content include:

- High Resolution Requirements: Text and UI elements need to remain sharp and readable, making resolution more important than frame rate.

- Motion Characteristics: Screen content typically has less motion than video, with many static periods punctuated by occasional changes.

- Content Type: Screen content often contains text, sharp edges, and solid color areas that compress differently than natural video.

- User Expectations: Viewers expect crystal-clear text and UI elements, even if the frame rate is lower.

These characteristics require a different approach to simulcast configuration. Screen share streams typically use higher bitrates at each quality tier, prioritize resolution over frame rate, and employ different encoding parameters optimized for screen content.

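```javascript
class ScreenShareSimulcast {
  constructor() {
    // Every tier keeps full resolution and trades frame rate and bitrate instead.
    // scaleResolutionDownBy is set explicitly: if no encoding specifies it, the
    // WebRTC spec scales the layers down by 4, 2 and 1 in list order.
    this.screenEncodings = [
      { rid: 'high', maxBitrate: 3000000, maxFramerate: 5, scaleResolutionDownBy: 1 },
      { rid: 'medium', maxBitrate: 1000000, maxFramerate: 3, scaleResolutionDownBy: 1 },
      { rid: 'low', maxBitrate: 500000, maxFramerate: 1, scaleResolutionDownBy: 1 }
    ];
  }

  async setupScreenShare(pc) {
    const displayStream = await navigator.mediaDevices.getDisplayMedia({
      video: {
        cursor: 'always',         // not supported by every browser
        displaySurface: 'monitor' // a hint; the user still chooses what to share
      }
    });
    const videoTrack = displayStream.getVideoTracks()[0];

    // Configure for high quality, low framerate: the 'detail' content hint
    // asks the encoder to maintain resolution and give up frame rate instead
    videoTrack.contentHint = 'detail';

    // Simulcast layers must be declared when the track is added
    const transceiver = pc.addTransceiver(videoTrack, {
      direction: 'sendonly',
      sendEncodings: this.screenEncodings
    });
    return transceiver.sender;
  }
}
```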

Performance Analysis

Bandwidth Savings

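Simulcast can substantially reduce the SFU's downstream traffic. The table compares one publisher's video forwarded to each participant: without simulcast, every participant receives the 2.5 Mbps stream; with simulcast, participants are spread across tiers assumed to run at 2.5, 1.5, and 0.5 Mbps.

| Scenario | Without Simulcast | With Simulcast | Savings |
|---|---|---|---|
| 5 participants (mixed bandwidth) | 5 × 2.5 Mbps = 12.5 Mbps | 2.5 + 1.5 + 3×0.5 = 5.5 Mbps | 56% |
| 10 participants (mixed bandwidth) | 10 × 2.5 Mbps = 25 Mbps | 2.5 + 3×1.5 + 6×0.5 = 10 Mbps | 60% |
| 20 participants (mixed bandwidth) | 20 × 2.5 Mbps = 50 Mbps | 2.5 + 5×1.5 + 14×0.5 = 17 Mbps | 66% |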
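The figures in the table can be reproduced with a few lines. This sketch assumes the same per-participant rates (2.5, 1.5, and 0.5 Mbps) and, like the table, counts one forwarded stream per participant; the helper name and the mix objects are illustrative.

```javascript
// Per-participant rates in Mbps assumed by the table
const RATES = { high: 2.5, medium: 1.5, low: 0.5 };

// mix: how many participants get each tier, e.g. { high: 1, medium: 1, low: 3 }
function egressComparison(mix) {
  const receivers = mix.high + mix.medium + mix.low;
  const without = receivers * RATES.high; // everyone gets the top stream
  const withSimulcast =
    mix.high * RATES.high + mix.medium * RATES.medium + mix.low * RATES.low;
  const savingsPct = Math.round((1 - withSimulcast / without) * 100);
  return { without, withSimulcast, savingsPct };
}

console.log(egressComparison({ high: 1, medium: 1, low: 3 }));
// { without: 12.5, withSimulcast: 5.5, savingsPct: 56 }
```

Changing the mix shows that savings depend entirely on how many participants actually sit on the lower tiers.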

CPU Utilization

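Simulcast shifts CPU cost between the two sides: the sender must encode every layer, while receivers that are forwarded a lower layer decode fewer pixels. The impact can be measured by comparing CPU usage with and without simulcast: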

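```javascript
class CPUMonitor {
  // measureCPUUsage() and enableSimulcast() are application-supplied hooks;
  // measureCPUUsage() is assumed to resolve to { sender, receiver } in percent
  constructor({ measureCPUUsage, enableSimulcast }) {
    this.measureCPUUsage = measureCPUUsage;
    this.enableSimulcast = enableSimulcast;
  }

  async measureSimulcastImpact() {
    const baseline = await this.measureCPUUsage();

    // Enable simulcast
    await this.enableSimulcast();
    const withSimulcast = await this.measureCPUUsage();

    return {
      // Extra sender CPU spent encoding the additional layers
      encodingOverhead: withSimulcast.sender - baseline.sender,
      // Receiver CPU saved by decoding a smaller layer
      decodingSavings: baseline.receiver - withSimulcast.receiver
    };
  }
}
```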
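Before measuring, the encodingOverhead term can be estimated by counting pixels. Assuming encode and decode cost scale roughly with pixels per frame at a shared frame rate (a simplification; codec settings and hardware encoders change the picture), a ladder with scale factors 1, 2, and 4 encodes about 31% more pixels than the top layer alone, while a receiver on the lowest layer decodes only 1/16 of the full-resolution pixels. The helper names are hypothetical.

```javascript
// Pixels encoded across all layers, relative to the top layer alone
function relativeEncodeLoad(scaleFactors) {
  // Each layer is scaled in both dimensions, so its pixel count is 1/s^2
  return scaleFactors.reduce((sum, s) => sum + 1 / (s * s), 0);
}

// Pixels a receiver decodes for one layer, relative to full resolution
function relativeDecodeLoad(scaleFactor) {
  return 1 / (scaleFactor * scaleFactor);
}

console.log(relativeEncodeLoad([1, 2, 4])); // 1.3125
console.log(relativeDecodeLoad(4));         // 0.0625
```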

Best Practices

Encoding Configuration

Choosing the right encoding parameters is crucial for simulcast effectiveness. The optimal configuration depends on various factors including the use case, expected network conditions, and device capabilities. There's no one-size-fits-all solution, but understanding the trade-offs helps in making informed decisions.

Key considerations for encoding configuration include:

- Use Case Requirements: A business conference has different needs than a webinar or mobile chat application.

- Target Audience: Consider the typical devices and network conditions of your users.

- Content Type: Talking heads require different settings than presentations or screen sharing.

- Resource Constraints: Balance quality against CPU usage and bandwidth consumption.

The goal is to create a quality ladder that provides smooth transitions between tiers while ensuring each tier offers meaningful quality improvements over the one below it.

```javascript
class SimulcastConfigurator {
  getOptimalConfig(scenario) {
    switch (scenario) {
      case 'conference':
        return {
          high: { resolution: '720p', bitrate: 2500000, fps: 30 },
          medium: { resolution: '360p', bitrate: 800000, fps: 30 },
          low: { resolution: '180p', bitrate: 200000, fps: 15 }
        };
      case 'webinar':
        return {
          high: { resolution: '1080p', bitrate: 4000000, fps: 30 },
          medium: { resolution: '720p', bitrate: 1500000, fps: 30 },
          low: { resolution: '360p', bitrate: 500000, fps: 15 }
        };
      case 'mobile':
        return {
          high: { resolution: '480p', bitrate: 1000000, fps: 30 },
          medium: { resolution: '360p', bitrate: 500000, fps: 30 },
          low: { resolution: '240p', bitrate: 200000, fps: 15 }
        };
      default:
        // Fail loudly instead of silently returning undefined
        throw new Error(`Unknown scenario: ${scenario}`);
    }
  }
}
```
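The configurator describes tiers by resolution label, bitrate, and frame rate, whereas the WebRTC sendEncodings entries are expressed as scaleResolutionDownBy, maxBitrate, and maxFramerate. A hypothetical converter, assuming the camera is captured at the high tier's height, could look like this:

```javascript
// Convert a { high, medium, low } tier config into sendEncodings entries.
// Assumes capture height equals the high tier's height (e.g. '720p' -> 720).
function toSendEncodings(config) {
  const captureHeight = parseInt(config.high.resolution, 10);
  return ['high', 'medium', 'low'].map(rid => {
    const { resolution, bitrate, fps } = config[rid];
    return {
      rid,
      maxBitrate: bitrate,
      maxFramerate: fps,
      scaleResolutionDownBy: captureHeight / parseInt(resolution, 10)
    };
  });
}

const webinar = {
  high: { resolution: '1080p', bitrate: 4000000, fps: 30 },
  medium: { resolution: '720p', bitrate: 1500000, fps: 30 },
  low: { resolution: '360p', bitrate: 500000, fps: 15 }
};
console.log(toSendEncodings(webinar).map(e => e.scaleResolutionDownBy)); // [ 1, 1.5, 3 ]
```

The resulting array could then be passed as sendEncodings when the camera track is added; if the actual capture height differs from the high tier, the factors would need to be computed from the real track settings instead.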

Quality Switching Logic

Implementing effective quality switching requires more than just monitoring bandwidth; it also requires preventing rapid oscillation between quality levels. This is where hysteresis comes into play: it creates a buffer zone around quality thresholds so that conditions fluctuating near a boundary do not trigger constant switching.

Think of hysteresis like a thermostat: instead of turning the heat on and off every time the temperature crosses exactly 70°F, it might wait until the temperature drops to 68°F before turning on, and not turn off until it reaches 72°F. This prevents constant cycling that would be both inefficient and annoying.

In simulcast quality switching, hysteresis means:

- Upgrade Threshold: Bandwidth must exceed the next higher tier's requirement by a margin (e.g., 20%) for a sustained period before upgrading.

- Downgrade Threshold: Bandwidth must fall below the current tier's requirement by a margin (e.g., 20%) before downgrading.

- Time Requirements: Conditions must remain stable for several seconds before making a change.

This approach ensures a stable viewing experience, as frequent quality changes can be more disruptive than maintaining a slightly suboptimal but consistent quality level.

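```javascript
class QualitySwitchController {
  constructor() {
    this.tiers = ['low', 'medium', 'high'];
    // Minimum bandwidth (bps) each tier needs
    this.qualityThresholds = { low: 150000, medium: 500000, high: 1500000 };
    this.hysteresis = {
      upgradeThreshold: 1.2,  // 20% above the next higher tier's requirement
      downgradeThreshold: 0.8 // 20% below the current tier's requirement
    };
  }

  shouldSwitchQuality(current, metrics) {
    const higher = this.getNextHigherQuality(current);
    const lower = this.getNextLowerQuality(current);
    // Upgrade only with clear headroom above what the higher tier needs
    if (higher !== current &&
        metrics.bandwidth > this.qualityThresholds[higher] * this.hysteresis.upgradeThreshold) {
      return higher;
    }
    // Downgrade only once clearly below what the current tier needs
    if (lower !== current &&
        metrics.bandwidth < this.qualityThresholds[current] * this.hysteresis.downgradeThreshold) {
      return lower;
    }
    return current; // Inside the dead band: no change
  }

  getNextHigherQuality(q) {
    return this.tiers[Math.min(this.tiers.indexOf(q) + 1, this.tiers.length - 1)];
  }

  getNextLowerQuality(q) {
    return this.tiers[Math.max(this.tiers.indexOf(q) - 1, 0)];
  }
}
```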

Limitations and Considerations

While simulcast provides significant benefits, it comes with trade-offs:

- Increased Encoding Load: Sender devices must encode multiple streams simultaneously

- Bandwidth Overhead: Although optimized per recipient, total upstream bandwidth increases

- Complexity: Implementation and debugging become more complex

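- Codec Support: Simulcast support varies by browser and codec; VP8 is the most widely supported, while VP9 and AV1 deployments often use scalable video coding (SVC) instead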
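The upstream cost is easy to bound from the layer bitrate caps. Using the example ladder from earlier in this article (2.5 Mbps, 500 kbps, 150 kbps), the sender uploads at most 3.15 Mbps instead of 2.5 Mbps, roughly 26% more; actual rates depend on how close each encoder gets to its cap. The helper name is illustrative.

```javascript
// Upper bound on the sender's upstream video bitrate with and without simulcast
function upstreamOverhead(maxBitrates) {
  const total = maxBitrates.reduce((sum, b) => sum + b, 0);
  const single = Math.max(...maxBitrates); // only the top layer, no simulcast
  return { total, single, ratio: total / single };
}

console.log(upstreamOverhead([2500000, 500000, 150000]));
// { total: 3150000, single: 2500000, ratio: 1.26 }
```

Because each lower layer carries a fraction of the top layer's bitrate, the overhead stays modest compared with the downstream savings shown in the bandwidth table.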

Conclusion

Simulcast is an essential optimization for modern WebRTC applications, enabling high-quality video conferencing across heterogeneous networks. By letting the SFU select the most appropriate stream for each participant, simulcast gives every user the best quality their own connection can support, rather than the quality the weakest participant can support.

The key to successful simulcast implementation lies in proper configuration of encoding parameters, intelligent quality selection algorithms, and smooth adaptation to changing network conditions. When implemented correctly, simulcast significantly improves the scalability and reliability of WebRTC applications.

Further Reading

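- SDP simulcast specification (draft-ietf-mmusic-sdp-simulcast, published as RFC 8853): https://tools.ietf.org/html/draft-ietf-mmusic-sdp-simulcast

- RTP Payload Format for VP8 Video, including temporal-layer fields (RFC 7741): https://tools.ietf.org/html/rfc7741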