In real-time communication applications powered by WebRTC, the consistency and timing of data delivery are critical for maintaining a seamless user experience. However, network conditions in the real world are rarely ideal, with fluctuations in bandwidth, latency, and packet arrival times. To overcome these challenges, WebRTC implementations leverage various buffer mechanisms that play vital roles in ensuring smooth audio and video communication.

This lesson explores the different types of buffers in WebRTC, their specific purposes, and how they work together to create resilient real-time connections even under challenging network conditions.

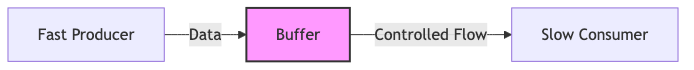

What Are Buffers?

A buffer is a dedicated area of memory used to temporarily store data before it is processed or transmitted. In computing systems, buffers serve several important functions:

- Flow Control: Buffers manage the rate of data transfer between components operating at different speeds

- Decoupling: They allow producers and consumers of data to operate independently

- Smoothing: Buffers compensate for variations in data transfer rates

- Error Resilience: They provide space for error detection and correction mechanisms

In the context of WebRTC, buffers are critical components that handle the unpredictable nature of internet connections, ensuring that audio and video streams remain smooth and synchronized despite network fluctuations.
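As a rough illustration of the flow-control and decoupling roles listed above, here is a minimal bounded FIFO buffer. The class name `BoundedBuffer` and its methods are hypothetical and are not part of any WebRTC API; real media buffers are considerably more involved.

```javascript
// Minimal bounded FIFO buffer (illustrative only, not a WebRTC API).
class BoundedBuffer {
  constructor(capacity) {
    this.capacity = capacity;
    this.items = [];
  }

  // Returns false when the buffer is full so the producer can slow down.
  push(item) {
    if (this.items.length >= this.capacity) return false;
    this.items.push(item);
    return true;
  }

  // Returns undefined when the buffer is empty (the consumer has underrun).
  shift() {
    return this.items.shift();
  }

  get length() {
    return this.items.length;
  }
}
```

The producer and consumer never call each other directly. Each only talks to the buffer, which is what lets them run at different speeds.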

The Jitter Buffer: Smoothing Network Irregularities

Understanding Network Jitter

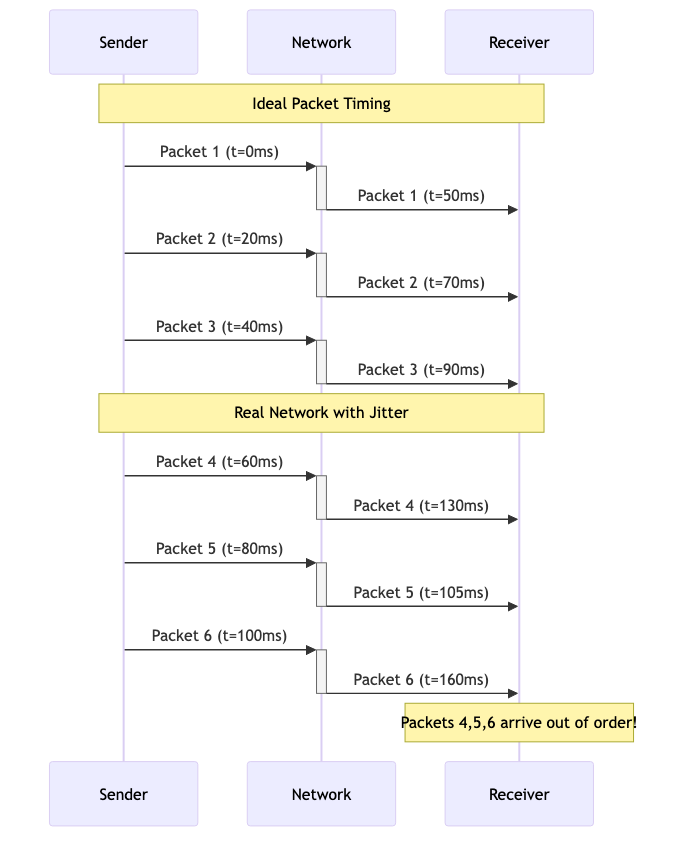

The jitter buffer is perhaps the most crucial buffer in WebRTC applications. To understand its importance, we must first understand network jitter:

Jitter refers to the variation in packet arrival times. In an ideal network, data packets would arrive at precisely consistent intervals. In real networks, however, packets face:

- Variable routing paths

- Congestion at network nodes

- Processing delays at routers

- Queuing at intermediate devices

These factors create unpredictable variations in when packets arrive at the destination, even if they were sent at regular intervals. Without compensation, this variation results in:

- Audio distortion, pops, and clicks

- Video stuttering and freezing

- Misalignment between audio and video
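To make "variation in packet arrival times" concrete, the sketch below keeps a running jitter estimate in the style of the interarrival jitter formula from RFC 3550 (section 6.4.1). It assumes send and arrival times are already available in milliseconds; RTP itself works in timestamp units, and the function name `createJitterEstimator` is made up for this example.

```javascript
// Running interarrival jitter estimate, following the smoothing rule in RFC 3550.
// Both times must use the same unit (milliseconds in this sketch).
function createJitterEstimator() {
  let jitter = 0;
  let prevTransit = null;

  return function update(sendTimeMs, arrivalTimeMs) {
    const transit = arrivalTimeMs - sendTimeMs;
    if (prevTransit !== null) {
      // Difference in transit time between this packet and the previous one.
      const d = Math.abs(transit - prevTransit);
      // Move the estimate 1/16 of the way toward the new observation.
      jitter += (d - jitter) / 16;
    }
    prevTransit = transit;
    return jitter;
  };
}
```

Packets sent every 20 ms that all take 50 ms to arrive produce a jitter of 0. The estimate only rises when the transit time changes from one packet to the next.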

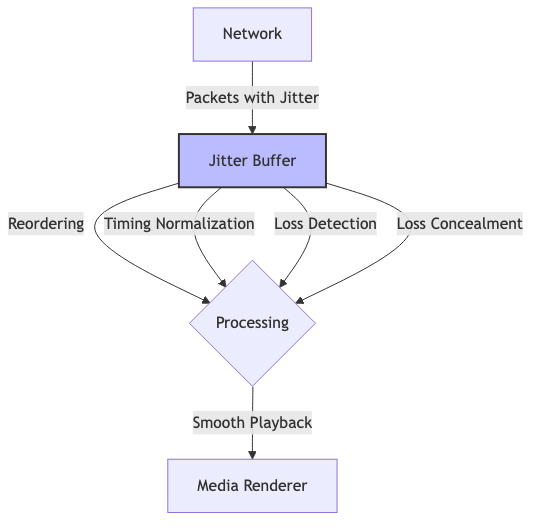

Jitter Buffer Architecture

The jitter buffer sits at the receiving end of a WebRTC connection and performs several critical functions:

- Reordering: Packets that arrive out of sequence are placed in their correct order

- Timing Normalization: Creates a consistent playback timing regardless of arrival intervals

- Loss Detection: Identifies missing packets in the sequence

- Loss Concealment: Implements strategies to minimize the impact of missing data
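Reordering and loss detection can be sketched with a small structure keyed by sequence number. This is a simplified illustration, not how any particular browser implements it: the `ReorderBuffer` class is hypothetical, and it ignores the 16-bit wraparound of real RTP sequence numbers.

```javascript
// Illustrative reorder buffer keyed by sequence number (no wraparound handling).
class ReorderBuffer {
  constructor(firstSeq) {
    this.nextSeq = firstSeq;  // next sequence number owed to the decoder
    this.pending = new Map(); // seq -> payload for packets that arrived early
  }

  insert(seq, payload) {
    // Ignore packets whose slot has already been played out.
    if (seq >= this.nextSeq) this.pending.set(seq, payload);
  }

  // Release the next packet in order. If it never arrived, report a loss so
  // the caller can run concealment, then move on to the following slot.
  pop() {
    const seq = this.nextSeq++;
    if (this.pending.has(seq)) {
      const payload = this.pending.get(seq);
      this.pending.delete(seq);
      return { seq, payload, lost: false };
    }
    return { seq, payload: null, lost: true };
  }
}
```

In practice `pop()` would be driven by a playback clock, so a packet is only declared lost once its playout deadline has passed.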

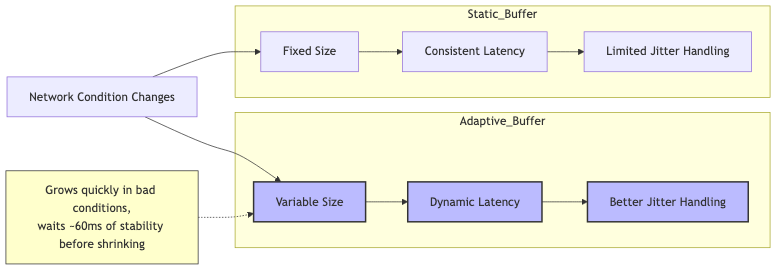

Static vs. Adaptive Jitter Buffers

Jitter buffers come in two primary configurations:

Static Jitter Buffers

- Maintain a fixed size regardless of network conditions

- Provide consistent latency

- Simpler to implement

- May be insufficient during periods of high jitter

- Can be wastefully large during stable network conditions

Adaptive Jitter Buffers

- Dynamically adjust their size based on observed network characteristics

- Expand during periods of high jitter to accommodate greater variability

- Contract during stable network conditions to minimize latency

- Constantly balance the trade-off between smoothness and latency

- More complex to implement but provide better overall experience

- Use hysteresis to avoid oscillation (quick to grow, slow to shrink)

Most modern WebRTC implementations use adaptive jitter buffers, which can respond to changing network conditions automatically. The buffer size typically ranges from 15ms to 120ms for audio (with an initial value around 40ms that can grow on poor connections), with video buffers often allowing for larger values due to the different nature of video perception compared to audio.
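The "quick to grow, slow to shrink" behaviour can be sketched as follows. The 15 ms and 120 ms bounds come from the audio range quoted above; the function name, the `shrinkRate` parameter, and the idea of feeding in a precomputed `desiredMs` (for example, some multiple of the measured jitter) are assumptions made for illustration.

```javascript
const MIN_DELAY_MS = 15;  // lower bound from the audio range quoted in the text
const MAX_DELAY_MS = 120; // upper bound from the audio range quoted in the text

// Returns the next target delay given the current one and the delay the
// network model would like. Growth is immediate; shrinking is gradual.
function nextTargetDelay(currentMs, desiredMs, shrinkRate = 0.05) {
  let next;
  if (desiredMs > currentMs) {
    next = desiredMs; // grow at once to avoid underruns
  } else {
    // Close only a fraction of the gap per update (hypothetical rate).
    next = currentMs - (currentMs - desiredMs) * shrinkRate;
  }
  return Math.min(MAX_DELAY_MS, Math.max(MIN_DELAY_MS, next));
}
```

The asymmetry is the hysteresis: one burst of jitter raises the delay immediately, while a return to calm conditions lowers it over many updates, so the buffer does not oscillate.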

Operating Principles of Jitter Buffers

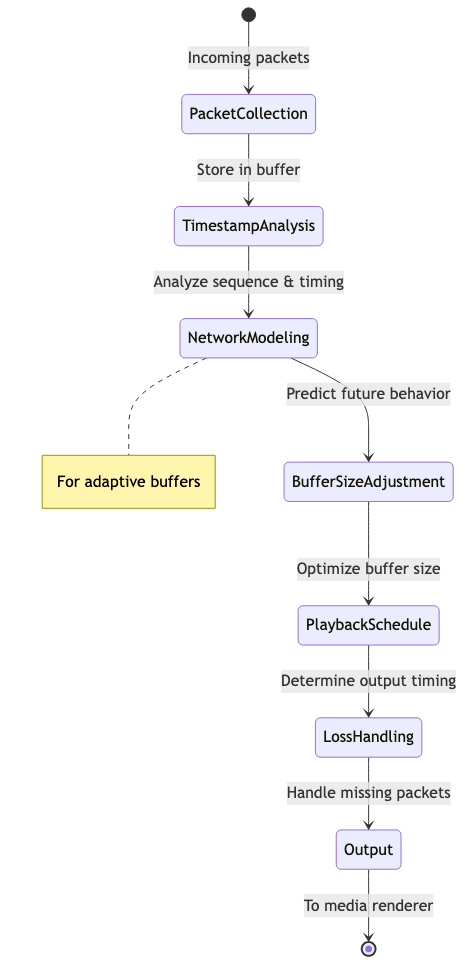

The operation of a jitter buffer involves several steps:

- Packet Collection: Incoming packets are stored in the buffer as they arrive

- Timestamp Analysis: The buffer examines packet timestamps and sequence numbers

- Network Modeling: Statistics are gathered to predict future network behavior

- Buffer Size Adjustment: For adaptive buffers, size is modified based on network models

- Playback Schedule: The buffer determines the optimal timing for releasing packets

- Loss Handling: Missing packets are detected and appropriate concealment is applied

- Output: Data is released to the media renderer (audio or video) at the appropriate time

This process occurs continuously throughout a WebRTC session, with the buffer making micro-adjustments to maintain optimal performance.
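The playback scheduling step can be illustrated with a small calculation: a packet's playout time is the arrival time of the first packet, plus the target buffer delay, plus the media time elapsed according to the RTP timestamps. The sketch below assumes a fixed target delay and ignores timestamp wraparound and clock drift; `createPlayoutScheduler` is a hypothetical helper, not a WebRTC API.

```javascript
// Compute when each packet should be played, based on its RTP timestamp.
function createPlayoutScheduler(clockRateHz, targetDelayMs) {
  let base = null; // RTP timestamp and arrival time of the first packet

  return function playoutTimeMs(rtpTimestamp, arrivalMs) {
    if (base === null) base = { rtpTimestamp, arrivalMs };
    // Media time elapsed since the first packet, converted to milliseconds.
    const mediaOffsetMs = ((rtpTimestamp - base.rtpTimestamp) / clockRateHz) * 1000;
    return base.arrivalMs + targetDelayMs + mediaOffsetMs;
  };
}
```

With a 48 kHz clock and a 40 ms target, a first packet arriving at t = 1000 ms is scheduled for 1040 ms, and a packet 960 timestamp units later is scheduled for 1060 ms, whether it arrived at 1015 ms or 1035 ms. Arrival jitter is absorbed as long as each packet arrives before its slot.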

Audio-Specific Jitter Buffer Techniques

Audio jitter buffers employ specialized techniques:

- Packet Loss Concealment (PLC): Algorithmic generation of plausible replacements for lost audio packets, often combined with comfort noise. For losses shorter than about 120ms, Opus relies on PLC and comfort noise rather than time-stretching.

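- Time-Scale Modification: Subtle stretching or compression of audio to adjust playout delay without audible gaps. This happens in the jitter buffer rather than in the voice codec itself; libwebrtc's NetEq, for example, has accelerate and preemptive-expand operations for this purpose.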

- Forward Error Correction: Using redundant data to reconstruct lost packets (as detailed in the Media Resilience lesson)

- Buffer Flushing: Occasional reset during periods of silence to reduce accumulated latency
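To give a feel for what concealment means, the sketch below implements the crudest possible form: repeat the last good frame and fade it out as consecutive losses accumulate. This is an assumption-laden toy, not how Opus or any browser performs PLC; production concealment models pitch and spectral shape. The function name and the `decay` parameter are hypothetical.

```javascript
// Naive packet loss concealment: replay the last good frame at reduced volume.
// lastGoodFrame is a Float32Array of PCM samples in the range [-1, 1].
function concealLostFrame(lastGoodFrame, consecutiveLosses, decay = 0.5) {
  // Each additional consecutive loss attenuates the output further,
  // so a long outage fades toward silence instead of looping audibly.
  const gain = Math.pow(decay, consecutiveLosses);
  return Float32Array.from(lastGoodFrame, (sample) => sample * gain);
}
```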

Video-Specific Jitter Buffer Techniques

Video streams have different requirements and capabilities:

- Frame Freeze: Holding the last good frame when new frames are delayed

- Decoder Reference Picture Selection (RPS): Skipping frames while maintaining reference integrity

- Resolution Adaptation: Temporarily reducing quality to maintain timing

- Decoder Coordination: Working with the video decoder to handle partial frame data

Note that true frame interpolation (generating synthetic intermediate frames) is still experimental in WebRTC and is not something applications should rely on.

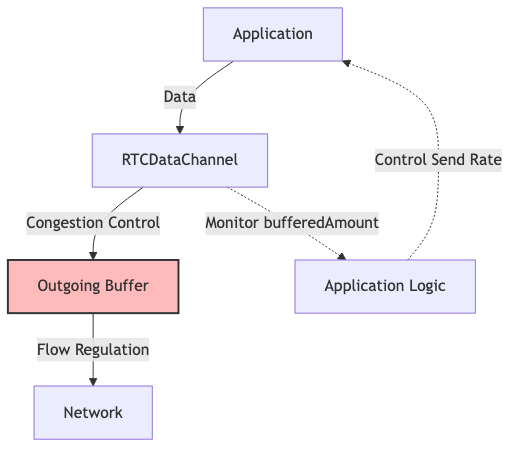

The RTCDataChannel Buffer: Managing Application Data

Beyond audio and video, WebRTC also supports direct data transmission through the RTCDataChannel API. This channel has its own buffering mechanism with distinct characteristics.

The Outgoing Data Buffer

Each RTCDataChannel instance maintains an outgoing data buffer that serves several purposes:

- Congestion Control: Prevents overwhelming the network by regulating data flow

- Backpressure Indication: Provides feedback about network capacity

- Reliability Layer: Supports retransmission of lost packets when reliable mode is enabled

Note that ordered delivery is enforced at a layer above the buffer; the buffer itself stores already-ordered data chunks.

Unlike the jitter buffer, which is primarily concerned with timing, the data channel buffer focuses on reliable delivery and flow control.

Monitoring and Managing the Data Buffer

While developers cannot directly set the size of the outgoing data buffer, WebRTC provides mechanisms to monitor and work with it:

```javascript
// Creating a data channel
const dataChannel = peerConnection.createDataChannel("myChannel");

// Setting a threshold for buffer monitoring
dataChannel.bufferedAmountLowThreshold = 65536; // 64 KB

// Event listener for when buffer drains below the threshold
dataChannel.addEventListener('bufferedamountlow', () => {
  console.log('Buffer has drained below threshold, safe to send more data');
  // Resume sending data
});

// Checking buffer state before sending large data
function sendLargeData(data) {
  if (dataChannel.bufferedAmount > 1048576) { // 1 MB
    console.log('Buffer too full, pausing transmission');
    // Queue data or try again later
    return false;
  }
  dataChannel.send(data);
  return true;
}
```

Best Practices for Data Channel Buffer Management

When working with RTCDataChannel buffers, consider these guidelines:

- Check bufferedAmount before sending large data to prevent buffer overflow

- Set appropriate bufferedAmountLowThreshold values based on your application's needs

- Implement send queues for large file transfers or data streams

- Consider chunking large data into smaller pieces with progress tracking

- Monitor buffer growth as a sign of network congestion or receiver slowdown
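The guidelines above can be combined into a chunked sender that pauses when the outgoing buffer is full and resumes on the `bufferedamountlow` event. This is a minimal sketch: the 16 KiB chunk size and 1 MB high-water mark are assumed values to tune per application, `sendInChunks` is a hypothetical helper, and the caller is expected to have set `bufferedAmountLowThreshold` on the channel.

```javascript
const CHUNK_SIZE = 16 * 1024;        // bytes per send() call (assumed value)
const HIGH_WATER_MARK = 1024 * 1024; // pause above 1 MB buffered (assumed value)

// Sends an ArrayBuffer over a data channel in chunks, respecting backpressure.
// onProgress receives a fraction between 0 and 1 after each chunk.
function sendInChunks(channel, buffer, onProgress = () => {}) {
  return new Promise((resolve, reject) => {
    let offset = 0;

    const pump = () => {
      try {
        while (offset < buffer.byteLength) {
          if (channel.bufferedAmount > HIGH_WATER_MARK) {
            // Buffer is full: wait until it drains, then continue from here.
            channel.addEventListener('bufferedamountlow', pump, { once: true });
            return;
          }
          const end = Math.min(offset + CHUNK_SIZE, buffer.byteLength);
          channel.send(buffer.slice(offset, end));
          offset = end;
          onProgress(offset / buffer.byteLength);
        }
        resolve();
      } catch (err) {
        reject(err);
      }
    };

    pump();
  });
}
```

A call might look like `await sendInChunks(dataChannel, fileBuffer, (p) => console.log(Math.round(p * 100) + '%'))`. The receiving side still needs its own framing to know when the transfer is complete.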

For more detailed information on RTCDataChannel usage, refer to the RTCDataChannel lesson in Module 2 and Mozilla's developer documentation.

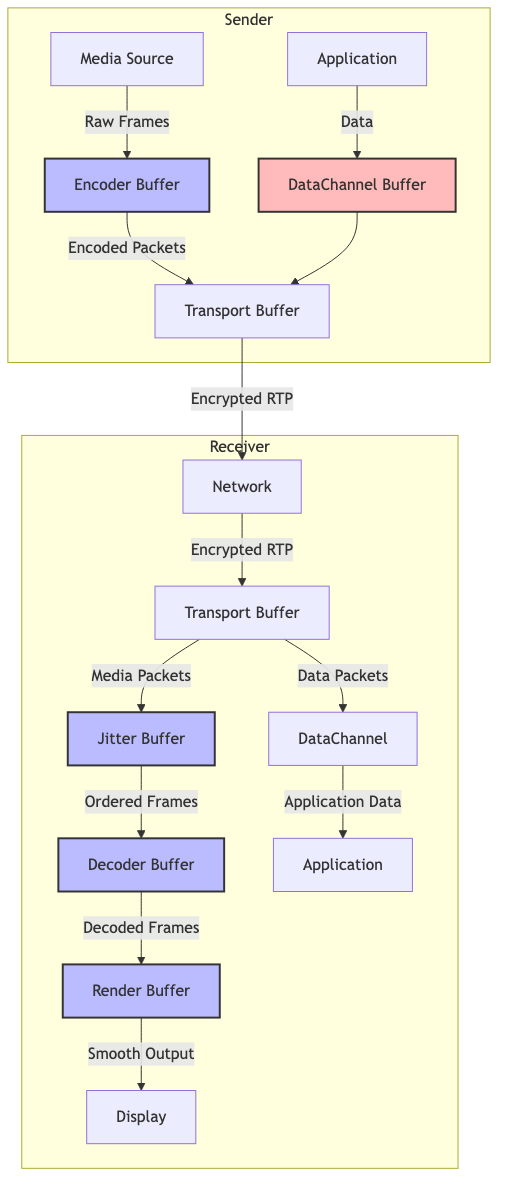

The Complete WebRTC Buffer Ecosystem

WebRTC employs a comprehensive system of buffers that work together to ensure smooth communication:

Encoder Buffers

- Input Frames Buffer: Holds raw frames waiting to be encoded

- Bitrate Adaptation Buffer: Temporarily stores frames during bitrate adjustment

- Reference Frame Buffer: Maintains previously encoded frames that predictive encoding uses as references

Decoder Buffers

- Decode Queue: Holds encoded packets waiting to be processed

- Render Buffer: Stores decoded frames before display

- Frame Prediction Buffer: Contains data needed for reconstructing predicted frames

Network Buffers

- Transport Buffer: Low-level buffer for RTP/RTCP packets

- DTLS/SRTP Buffer: Handles encrypted media before/after encryption processing

- ICE Candidate Buffer: Stores connectivity candidates during connection establishment

These internal buffers are generally not directly accessible to application developers but play crucial roles in WebRTC's overall performance.

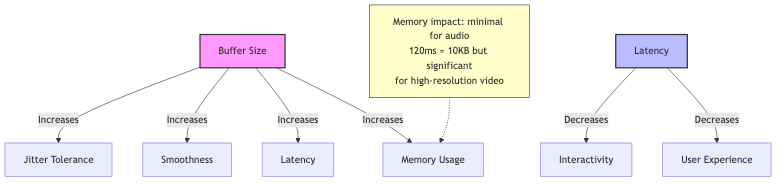

Buffer Size and Latency Trade-offs

One of the most important considerations in WebRTC buffer management is the trade-off between buffer size and latency:

Larger buffers provide more room to handle network variations but introduce more delay. The optimal buffer size depends on:

- Application Requirements: Video conferencing needs lower latency than broadcast streaming

- Network Conditions: More jitter requires larger buffers

- User Expectations: Different use cases have different tolerance for delay

- Device Capabilities: Mobile devices may have memory constraints
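The trade-off can be put in numbers. The sketch below takes a set of observed one-way delays and reports how much buffering would be needed so that a chosen fraction of packets arrive in time. It is a simplified model with a hypothetical `suggestBufferMs` helper; real adaptive buffers use more elaborate statistics.

```javascript
// Extra delay (ms) needed to cover the given percentile of observed delays,
// measured relative to the fastest packet seen.
function suggestBufferMs(delaySamplesMs, percentile = 0.95) {
  if (delaySamplesMs.length === 0) return 0;
  const sorted = [...delaySamplesMs].sort((a, b) => a - b);
  const rank = Math.ceil(percentile * sorted.length) - 1;
  const index = Math.min(sorted.length - 1, Math.max(0, rank));
  return sorted[index] - sorted[0];
}

const observed = [50, 52, 51, 90, 53, 50, 55, 54, 52, 51];
console.log(suggestBufferMs(observed, 0.95)); // 40
console.log(suggestBufferMs(observed, 0.5));  // 2
```

In this sample a single late packet (90 ms) is what drives the difference: covering it costs 40 ms of added latency, while accepting that it will be concealed keeps the buffer at a few milliseconds.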

Troubleshooting Buffer-Related Issues

Common buffer-related problems in WebRTC applications include:

- Buffer Bloat: Excessive buffering leading to high latency
  - Solution: Adjust buffer size limits and improve congestion control

- Buffer Underrun: Buffer emptying before new data arrives
  - Solution: Increase buffer size or implement more aggressive packet loss concealment

- Oscillating Buffer Size: Buffer constantly expanding and contracting
  - Solution: Implement smoothing in buffer size adjustments

- Memory Growth: Unbounded buffer expansion consuming system resources
  - Solution: Implement maximum buffer size limits and monitor memory usage

- Synchronization Drift: Audio and video buffers becoming desynchronized
  - Solution: Implement cross-stream synchronization based on RTP timestamps

Implementing Custom Buffer Logic

While WebRTC handles most buffering automatically, applications with specific requirements may need custom buffer management:

```javascript
// Example: Custom monitoring of jitter buffer health using WebRTC stats API

// jitterBufferDelay is a cumulative total in seconds (not milliseconds), and
// jitterBufferEmittedCount is the number of audio samples or video frames that
// have left the buffer. Their ratio is the average time each one spent in the
// buffer, so no packet-duration factor is needed.
function averageBufferDelayMs(report) {
  const { jitterBufferDelay, jitterBufferEmittedCount } = report;
  if (jitterBufferDelay === undefined || !jitterBufferEmittedCount) {
    return null; // stat not reported, or nothing emitted yet
  }
  return (jitterBufferDelay / jitterBufferEmittedCount) * 1000;
}

async function monitorJitterBuffer(rtcPeerConnection) {
  const stats = await rtcPeerConnection.getStats();
  stats.forEach(report => {
    if (report.type !== 'inbound-rtp') return;

    const bufferDelayMs = averageBufferDelayMs(report);
    if (bufferDelayMs === null) return;

    if (report.kind === 'audio') {
      console.log(`Audio jitter buffer:
- Delay: ${report.jitterBufferDelay.toFixed(3)}s
- Emitted count: ${report.jitterBufferEmittedCount}
- Buffer delay: ${bufferDelayMs.toFixed(1)}ms
- Concealed samples: ${report.concealedSamples ?? 'N/A'}
- Total samples received: ${report.totalSamplesReceived ?? 'N/A'}
`);
    }

    if (report.kind === 'video') {
      console.log(`Video jitter buffer:
- Delay: ${report.jitterBufferDelay.toFixed(3)}s
- Emitted count: ${report.jitterBufferEmittedCount}
- Buffer delay: ${bufferDelayMs.toFixed(1)}ms
`);
    }
  });
}

// Call this function periodically to monitor buffer health
setInterval(() => monitorJitterBuffer(peerConnection), 2000);
```
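Because `jitterBufferDelay`, `jitterBufferEmittedCount`, `concealedSamples`, and `totalSamplesReceived` are cumulative counters, dividing the raw totals gives an average over the whole session. To see how the buffer behaved during the most recent polling interval, subtract the previous snapshot from the current one. The helper below is a sketch of that idea; `intervalStats` is a hypothetical name, and it expects two plain objects holding those four fields from an audio `inbound-rtp` report.

```javascript
// Compare two snapshots of the same audio inbound-rtp report and describe
// what happened between them.
function intervalStats(prev, curr) {
  const emitted = curr.jitterBufferEmittedCount - prev.jitterBufferEmittedCount;
  const delaySeconds = curr.jitterBufferDelay - prev.jitterBufferDelay;
  const samples = curr.totalSamplesReceived - prev.totalSamplesReceived;
  const concealed = curr.concealedSamples - prev.concealedSamples;

  return {
    // Average time a sample spent in the jitter buffer during the interval.
    avgDelayMs: emitted > 0 ? (delaySeconds / emitted) * 1000 : null,
    // Fraction of played-out samples that were synthesized by concealment.
    concealmentRatio: samples > 0 ? concealed / samples : null,
  };
}
```

A rising `concealmentRatio` alongside a low `avgDelayMs` suggests underruns, while a steadily climbing `avgDelayMs` points toward excessive buffering.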

Conclusion

Buffers are essential components in WebRTC that enable smooth real-time communication despite the inherent challenges of network transmission. The jitter buffer compensates for variable packet timing, while the data channel buffer manages application data flow. Together with internal encoder, decoder, and network buffers, they form a comprehensive system that balances latency, quality, and reliability.

Understanding how these buffers work and interoperate allows developers to build more resilient WebRTC applications and troubleshoot issues more effectively when they arise. For best performance in video calling applications, buffer management should be considered as part of the overall application architecture.