Detect harmful text, images, and video in real time and act on it with customizable policies and minimal setup, all backed by powerful NLP and LLMs.

Trusted By

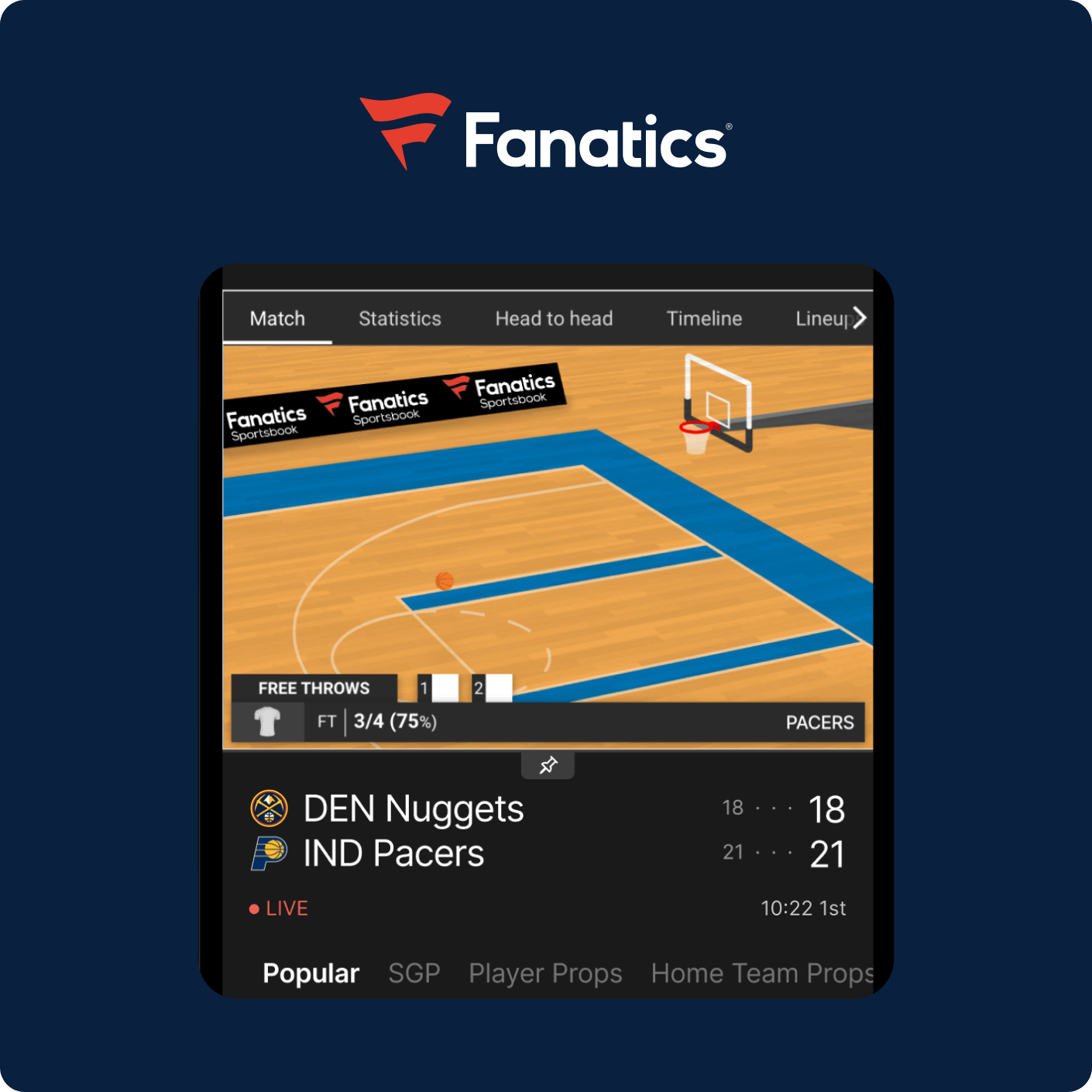

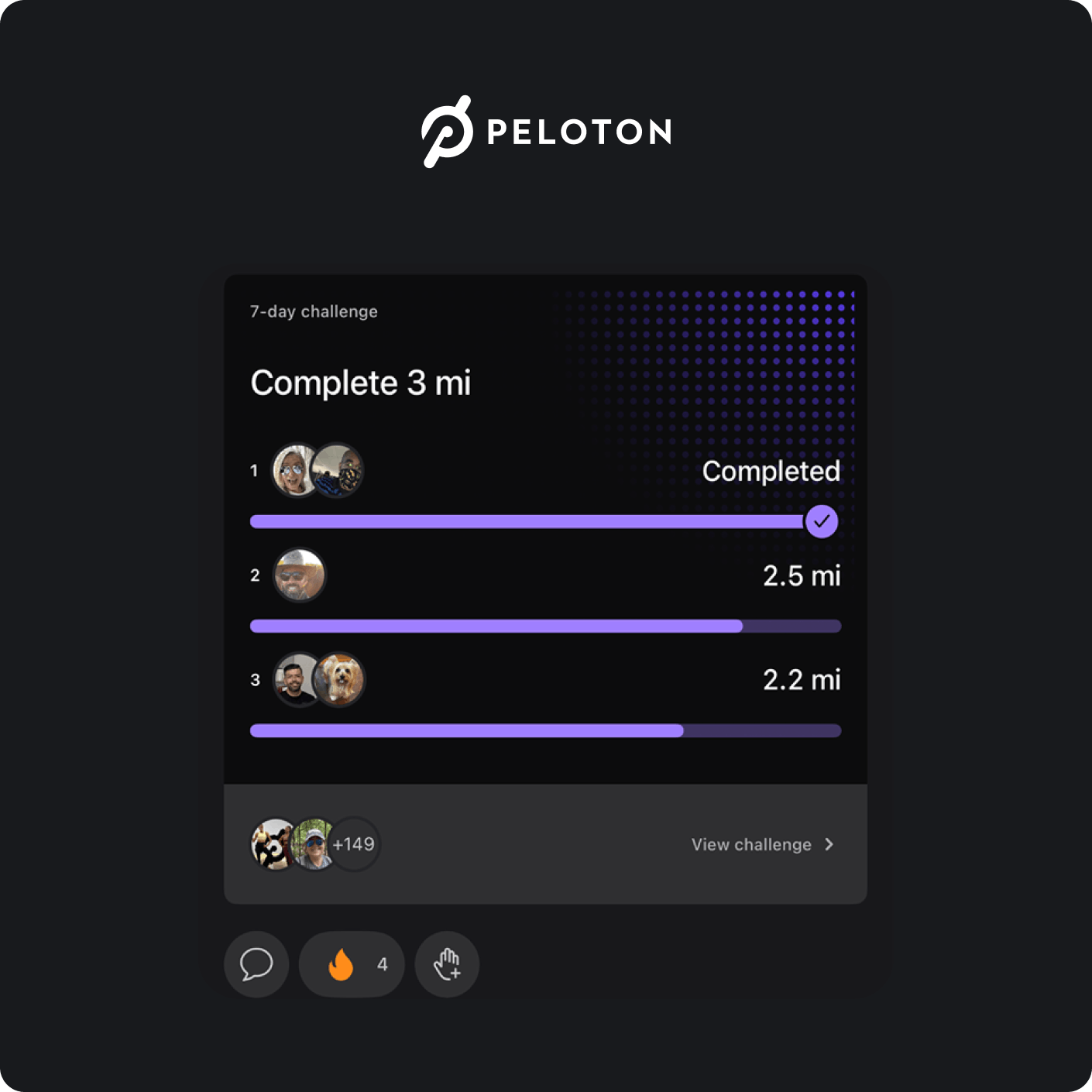

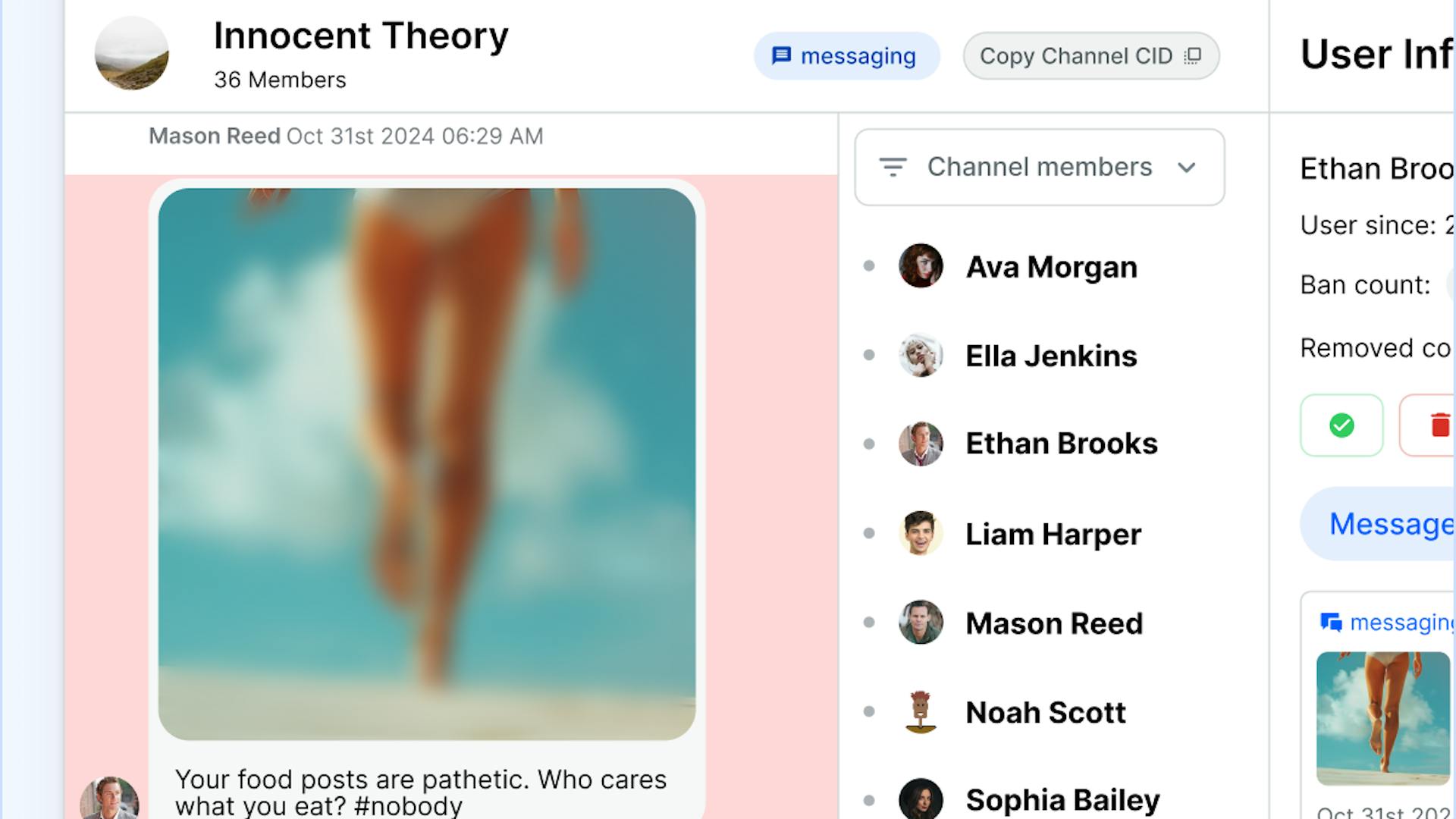

Industry-leading apps protect their brand and communities with Stream AI Moderation, ensuring every message, image, and video shared is appropriate and on-topic.

Meet evolving legal and platform requirements, without the operational burden.

Protect users and reduce legal risk under Europe's strictest digital regulations.

Comply with new safety standards and enforce age-appropriate protections across your platform.

Automatically flag content to protect minors and prevent child exploitation at scale.

Ensure transparency, human oversight, and risk mitigation in line with the latest EU standards for AI deployment.

Go beyond traditional keyword filters and blocklists with LLMs that evaluate flagged content with greater contextual understanding.

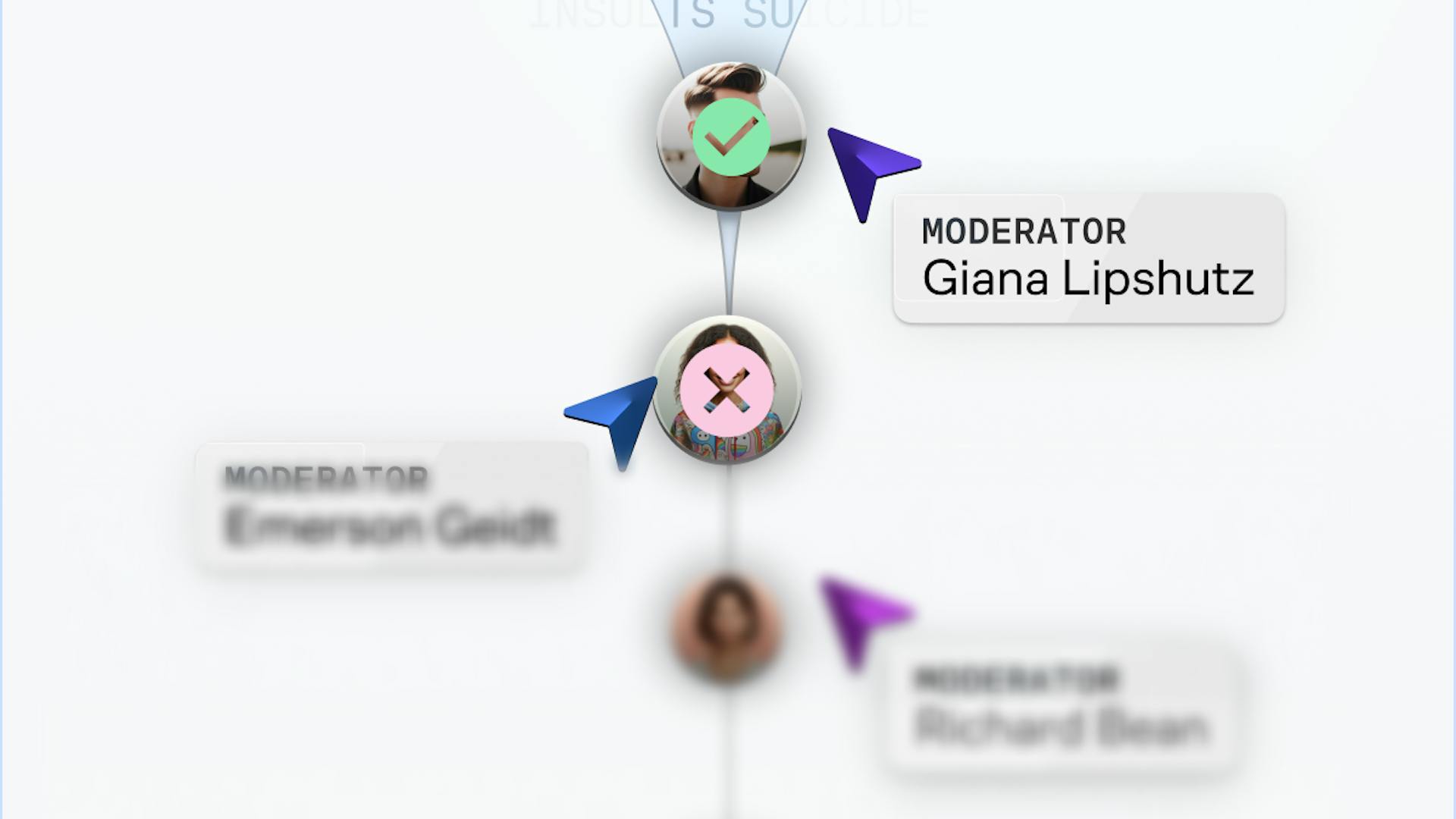

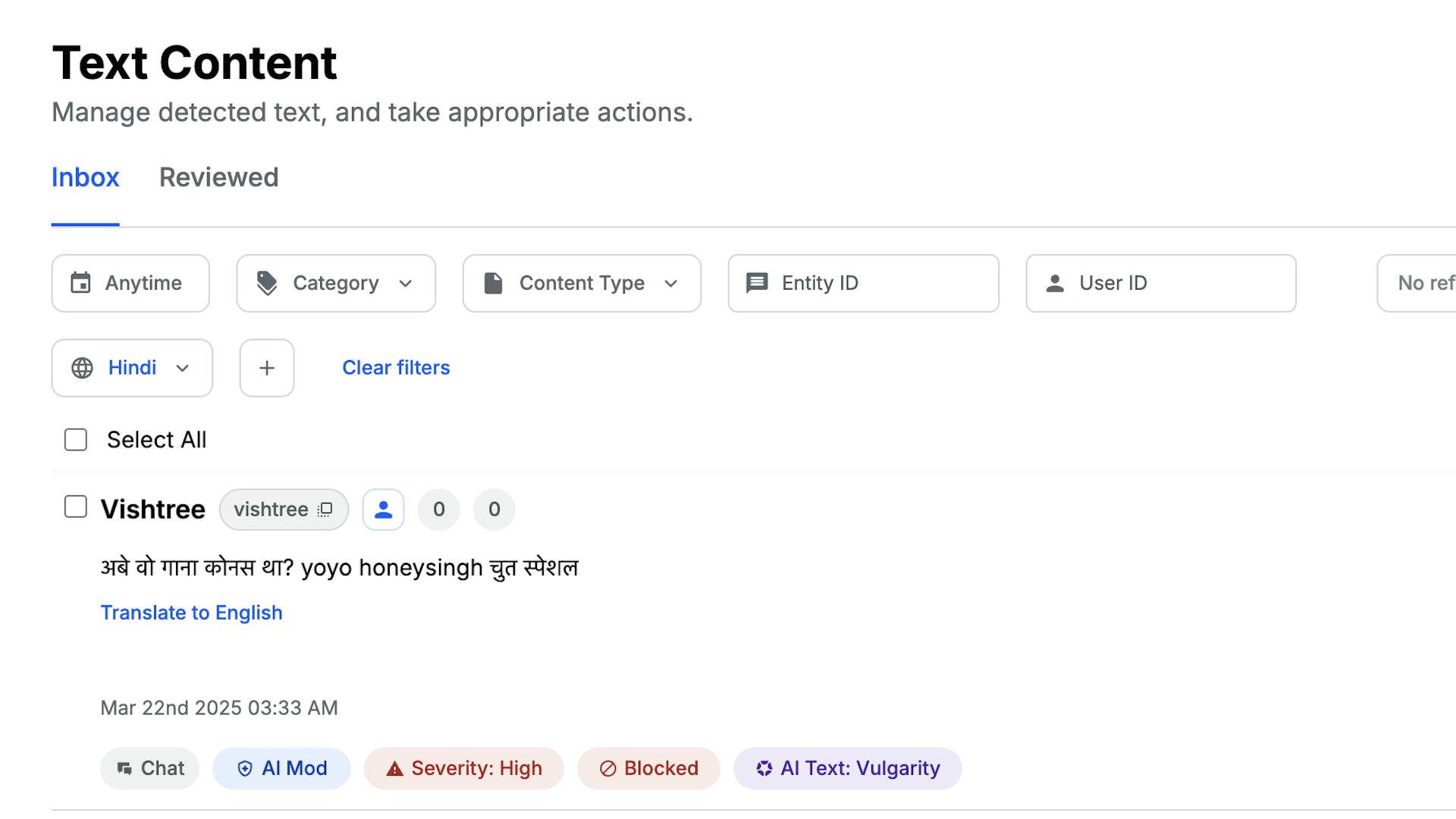

Moderate up to 25% faster with intuitive queues, contextual views, and tools designed to reduce reviewer fatigue and speed up resolution time.

Build your own custom dashboard on top of Stream’s APIs for complete workflow control, or use Stream’s dashboard to review flagged content, manage users, and more.

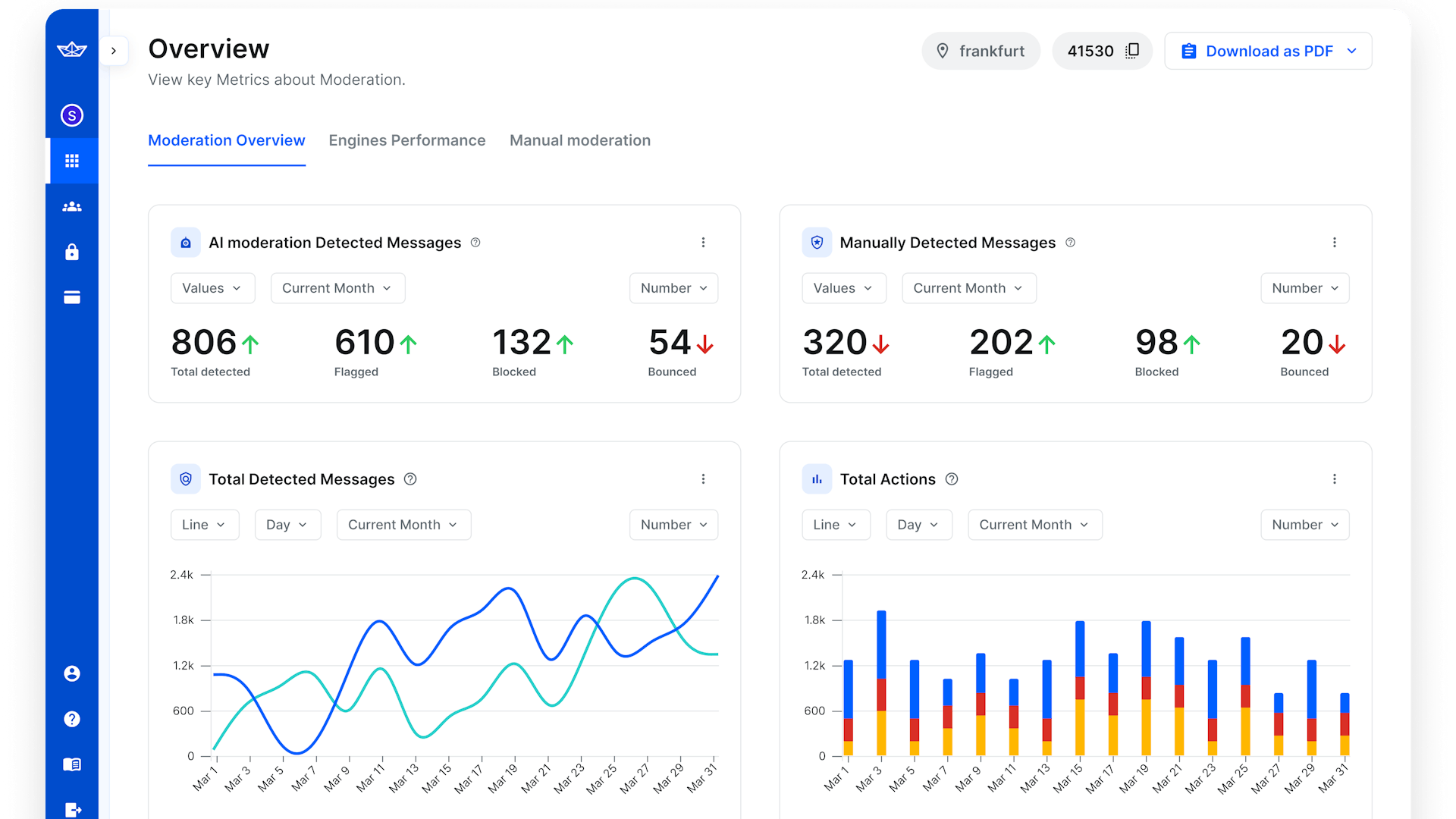

Track monthly trends on detection rates, harm types, and moderator actions to improve your trust & safety strategy.

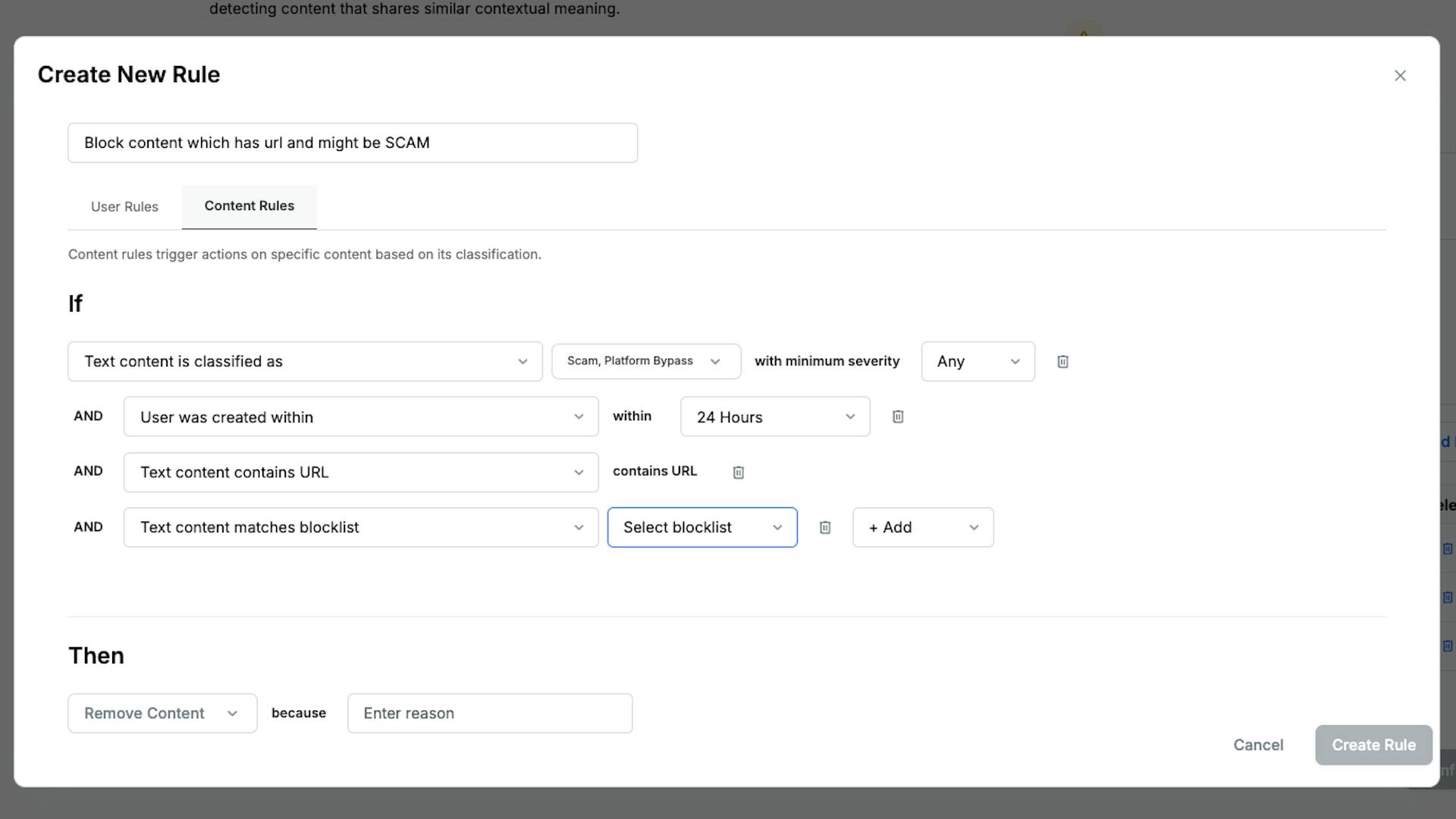

Define custom logic using a no-code interface, set if/then conditions, define actions, and adapt enforcement to match policies.
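For teams that want to reason about what an if/then policy does before building it, the idea can be sketched in a few lines of Python. This is an illustrative sketch only, not Stream's API or rule format: the `Detection` and `Rule` types, the harm-type labels, the action names, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Detection:
    harm_type: str     # hypothetical label, e.g. "hate_speech" or "spam"
    confidence: float  # hypothetical model score in [0, 1]


@dataclass
class Rule:
    name: str
    condition: Callable[[Detection], bool]  # the "if" part
    action: str                             # the "then" part


def evaluate(rules: List[Rule], detection: Detection, default: str = "allow") -> str:
    """Return the action of the first rule whose condition matches, else the default."""
    for rule in rules:
        if rule.condition(detection):
            return rule.action
    return default


# Rules are checked in order, so the strictest condition comes first.
# All thresholds below are made-up values for illustration.
RULES = [
    Rule("block confident hate speech",
         lambda d: d.harm_type == "hate_speech" and d.confidence >= 0.9,
         "block"),
    Rule("review any other hate speech",
         lambda d: d.harm_type == "hate_speech",
         "flag_for_review"),
    Rule("review likely spam",
         lambda d: d.harm_type == "spam" and d.confidence >= 0.5,
         "flag_for_review"),
]

if __name__ == "__main__":
    print(evaluate(RULES, Detection("hate_speech", 0.95)))
    print(evaluate(RULES, Detection("spam", 0.2)))
```

Evaluating rules in order and stopping at the first match keeps the outcome predictable: a reviewer can read the list top to bottom and see which action a given detection will trigger.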

Secure your platform across 50+ languages with Stream’s AI moderation, which automatically blocks harmful user-generated content.

Use Stream’s dashboard or integrate existing tools and reviewer workflows via API to create a seamless, customized experience.

Using NLP, AI can detect emotional tone and subjective intent to support early intervention and promote healthy community interactions.

Instantly flag issues to give your team the speed and control they need to stay on top of high-traffic events.

AI-powered detection for every format of user-generated content.

Detect toxicity, harassment, hate speech, vulgarity, platform circumvention, and more in real time across 50+ languages.

Automatically scan for NSFW, violent, and manipulated images, and more, using AI models and OCR to detect unsafe visual content and embedded text.

Analyze real-time video or files for inappropriate scenes, unsafe content, scams, and more before they reach your community.

Automatically analyze audio files for hate speech, explicit language, threats, and other unsafe content using AI models trained to detect harmful speech patterns and tone.

Learn how AI Moderation can strengthen your trust and safety stack.

Stream AI Moderation:

AI excels at processing large volumes of content within a split second, identifying patterns, and flagging potential issues. Human moderators are essential for nuanced decisions, contextual understanding, and handling complex cases.

AI moderation systems can be tailored to align with your platform’s unique policies and standards. Our flexible rule-setting lets you adapt enforcement to your specific community needs.

AI moderation uses pre-trained Natural Language Processing (NLP) models to detect specific harm types such as profanity, hate speech, or spam. LLM-based moderation goes a step further by using large language models to assess context, tone, and nuance. Stream provides both AI and LLM-based moderation.

Yes, by automating the initial screening process, AI moderation allows human moderators to focus on more complex and nuanced cases, improving overall speed and efficiency.

Yes. AI moderation is designed to scale with platform growth, handling large volumes of content without a proportional increase in moderation resources.

Get started with our AI-powered solutions to protect your platform and moderate user-generated content. No credit card required.