Using the WebRTC MediaStream API

WebRTC MediaStream API Overview

The MediaStream API represents real-time media as a stream object. It lets you access media from local cameras and microphones, and it keeps the video and audio tracks within a stream synchronized.

Each MediaStream instance contains zero or more media tracks. Each track is a MediaStreamTrack object, which represents a single audio or video track within the stream; its kind property is either "audio" or "video".
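To make the stream/track relationship concrete, here is a minimal sketch that lists the tracks of a MediaStream. The helper name summarizeTracks is hypothetical; getTracks() and the kind, label, and enabled properties are standard parts of MediaStream and MediaStreamTrack.

```javascript
// Hypothetical helper: list every track in a MediaStream.
// getTracks() returns all MediaStreamTrack objects, audio and video alike.
function summarizeTracks(stream) {
  return stream.getTracks().map((track) => ({
    kind: track.kind,       // "audio" or "video"
    label: track.label,     // human-readable device name (may be an empty string)
    enabled: track.enabled, // a disabled track renders silence or black frames
  }));
}

// Usage in a page:
// navigator.mediaDevices.getUserMedia({ audio: true, video: true })
//   .then((stream) => console.table(summarizeTracks(stream)));
```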

Using the MediaStream APIs

Let’s create a simple WebRTC application that displays the local video stream from your webcam. To get a MediaStream, call the MediaDevices.getUserMedia() method. It returns a Promise that resolves to a MediaStream containing the requested tracks: video tracks from a camera or another video input device (including virtual video sources), and audio tracks from a microphone or a virtual audio source. Screen sharing is requested separately, with MediaDevices.getDisplayMedia().

The getUserMedia() method takes a MediaStreamConstraints object as its parameter, so we first define the constraints and then request the MediaStream. MediaStreamConstraints is a dictionary rather than a class, so a plain JavaScript object is all you need.

The constraints object specifies which media types you want and, optionally, the properties each track should have, such as the width and height of a video track, the camera to use (facingMode), or audio processing options such as echoCancellation.

In this lesson, we keep the constraints simple and only set the width and height of the video track.
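A constraints object along these lines is one way to express that. This is a sketch: the resolution numbers are illustrative assumptions, not values the API requires.

```javascript
// Hypothetical constraints for this lesson: no audio track, and a video track
// whose resolution should be as close as possible to 1280x720.
const constraints = {
  audio: false, // false (or omitting the member) requests no audio track
  video: {
    // ideal: the browser prefers settings closest to this value.
    // min/max: hard limits; if no camera can meet them, getUserMedia()
    // rejects with an OverconstrainedError.
    width: { min: 640, ideal: 1280, max: 1920 },
    height: { min: 480, ideal: 720, max: 1080 },
  },
};

// A bare number such as { width: 1280 } is treated the same as { ideal: 1280 }.
```

Using ideal with loose min/max bounds lets the browser fall back to a nearby resolution instead of failing outright on cameras that do not support exactly 1280x720.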

A constraints object can contain two members, one for each media type:

- audio: Requests an audio track. The value can be a boolean (true requests an audio track with default settings; false or omitting the member requests none) or an object that defines specific constraints for the audio track.

- video: Requests a video track. Like audio, the value can be a boolean or an object; in this lesson it is an object that specifies the width and height of the video track.

After defining the constraints, pass them to getUserMedia() to obtain a MediaStream.
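The call itself might look like this minimal sketch. The function name getLocalStream is hypothetical, and passing mediaDevices as a parameter (defaulting to navigator.mediaDevices) is a design choice made here so the function can be exercised with a stand-in object.

```javascript
// Request a MediaStream with the given constraints.
// getUserMedia() returns a Promise that resolves with a MediaStream after the
// user grants permission, or rejects with a DOMException such as NotAllowedError.
// navigator.mediaDevices exists only in secure contexts (https:// or localhost).
async function getLocalStream(constraints, mediaDevices = navigator.mediaDevices) {
  const stream = await mediaDevices.getUserMedia(constraints);
  for (const track of stream.getTracks()) {
    console.log(`Using ${track.kind} device: ${track.label}`);
  }
  return stream;
}

// Usage in a page:
// getLocalStream({ audio: false, video: { width: 1280, height: 720 } })
//   .then((stream) => console.log("Stream id:", stream.id))
//   .catch((err) => console.error(err.name, err.message));
```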

MediaStream is an essential concept in WebRTC, and you will use it in most of the following lessons. You can get a stream's video and audio tracks with the MediaStream.getVideoTracks() and MediaStream.getAudioTracks() methods, and you can add other tracks, such as tracks received from a remote peer, with MediaStream.addTrack().
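The following sketch exercises those accessors. The helper names inspectStream and addAllTracks are hypothetical; getVideoTracks(), getAudioTracks(), getTracks(), addTrack(), and MediaStreamTrack.getSettings() are standard methods.

```javascript
// Hypothetical helper: count the tracks of each kind and report the settings
// the browser actually applied to the first video track.
function inspectStream(stream) {
  const videoTracks = stream.getVideoTracks();
  const audioTracks = stream.getAudioTracks();
  return {
    videoCount: videoTracks.length,
    audioCount: audioTracks.length,
    // getSettings() returns the values in effect (e.g. width, height, frameRate),
    // which can differ from the "ideal" values requested in the constraints.
    videoSettings: videoTracks.length > 0 ? videoTracks[0].getSettings() : null,
  };
}

// Hypothetical helper: add every track of `source` to `target`.
// MediaStream.addTrack() ignores a track that is already in the target stream.
function addAllTracks(target, source) {
  for (const track of source.getTracks()) {
    target.addTrack(track);
  }
  return target;
}

// Usage in a page, e.g. combining a camera stream with a separate microphone stream:
// const combined = addAllTracks(new MediaStream(), cameraStream);
// addAllTracks(combined, micStream);
```

Checking getSettings() is a quick way to see whether the camera honored the requested width and height.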

To learn more about the MediaStream API, check out the instance methods of MediaStream.

Create a video stream page

First things first, let’s create an index.html file that displays the real-time video stream from the local camera. When the user clicks a button on the page, the browser asks for permission to use the camera.

Don't forget to add the autoplay attribute to the video element so that the real-time video stream starts playing automatically.

Next, create a main.js file that requests user media and displays the video stream from the local camera.
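A main.js along these lines is one possible sketch, not the lesson's exact file. The element ids (localVideo, openCamera, errorMsg) and the resolution values are hypothetical; match them to your own index.html.

```javascript
// main.js: a minimal sketch. It assumes index.html contains elements like:
//   <video id="localVideo" autoplay playsinline></video>
//   <button id="openCamera">Open camera</button>
//   <p id="errorMsg"></p>
// (playsinline keeps iOS Safari from switching to full-screen playback.)

// Request a video track of roughly 1280x720 and no audio (hypothetical values).
const constraints = {
  audio: false,
  video: { width: { ideal: 1280 }, height: { ideal: 720 } },
};

// Ask for the camera and attach the resulting MediaStream to the video element.
// mediaDevices defaults to the browser's navigator.mediaDevices.
async function openCamera(videoElement, mediaDevices = navigator.mediaDevices) {
  const stream = await mediaDevices.getUserMedia(constraints);
  videoElement.srcObject = stream; // a MediaStream is attached via srcObject, not src
  return stream;
}

// Wire up the button only when this script runs in a page.
if (typeof document !== "undefined") {
  const video = document.getElementById("localVideo");
  const errorMsg = document.getElementById("errorMsg");
  document.getElementById("openCamera").addEventListener("click", async () => {
    try {
      await openCamera(video);
      errorMsg.textContent = "";
    } catch (err) {
      // For example, NotAllowedError when the user blocks or dismisses the prompt.
      errorMsg.textContent = `getUserMedia error: ${err.name}`;
      console.error(err);
    }
  });
}
```

Requesting the camera only inside the click handler means the permission prompt appears in response to a user action rather than as soon as the page loads.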

If you want to style the demo application, download main.css and save it in the css folder.

Note: You can download the full source code of Lesson 1 on GitHub.

Running the WebRTC demo application

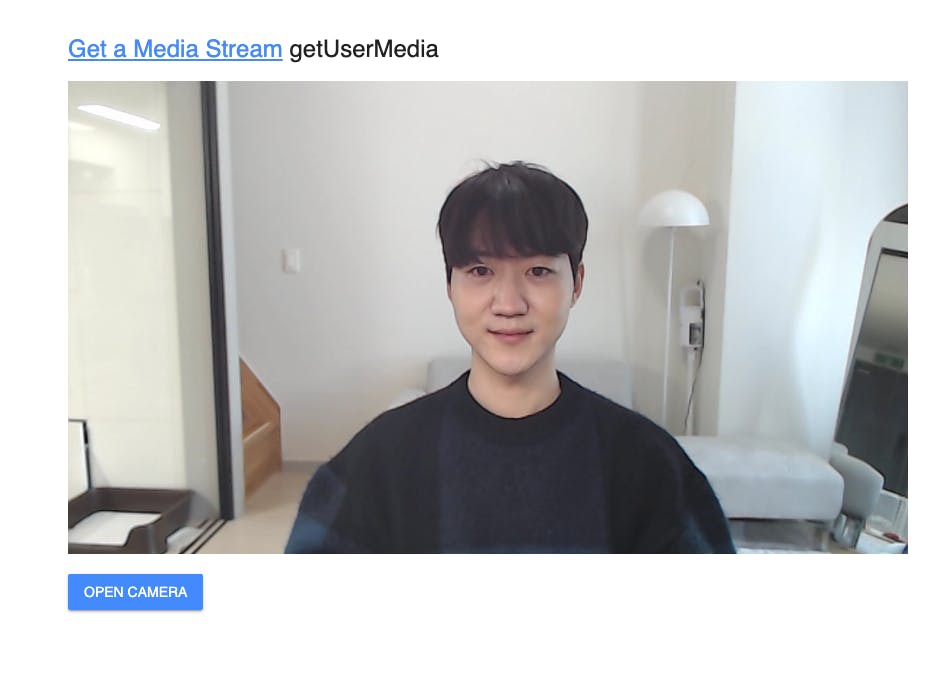

When you open index.html in your browser, you will see the demo below:

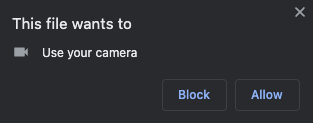

Next, click the Open camera button to display the video stream from the local camera. When you click the button, the browser shows a popup asking for permission to access your camera.

If you click Block or dismiss the popup, you will see an error message like the one in the screenshot below:

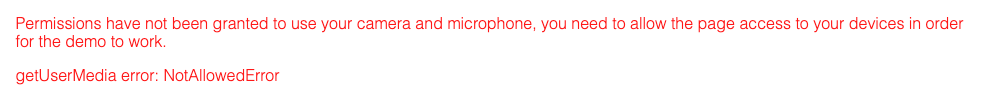
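When the user blocks the request, getUserMedia() rejects its Promise with a NotAllowedError. One way to turn such rejections into friendlier messages is to switch on the DOMException name, as in this sketch; the function name and message wording are assumptions, while the error names are the standard ones.

```javascript
// Hypothetical helper: map common getUserMedia() rejection names to messages.
function describeGetUserMediaError(error) {
  switch (error.name) {
    case "NotAllowedError":
      return "Camera access was blocked. Allow it in the site settings and try again.";
    case "NotFoundError":
      return "No camera matching the request was found.";
    case "NotReadableError":
      return "The camera is in use by another application or could not be started.";
    case "OverconstrainedError":
      // error.constraint names the constraint that could not be satisfied.
      return `No camera supports the requested ${error.constraint}.`;
    default:
      return `getUserMedia error: ${error.name}`;
  }
}
```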

If you click Allow, the real-time video stream from your camera appears on the page.

Note: You can try the Get a Media Stream sample project directly in your web browser without building it.

Debugging WebRTC in Chrome browser

To debug a WebRTC application, you can use chrome://webrtc-internals/, Chrome's built-in WebRTC inspection page.

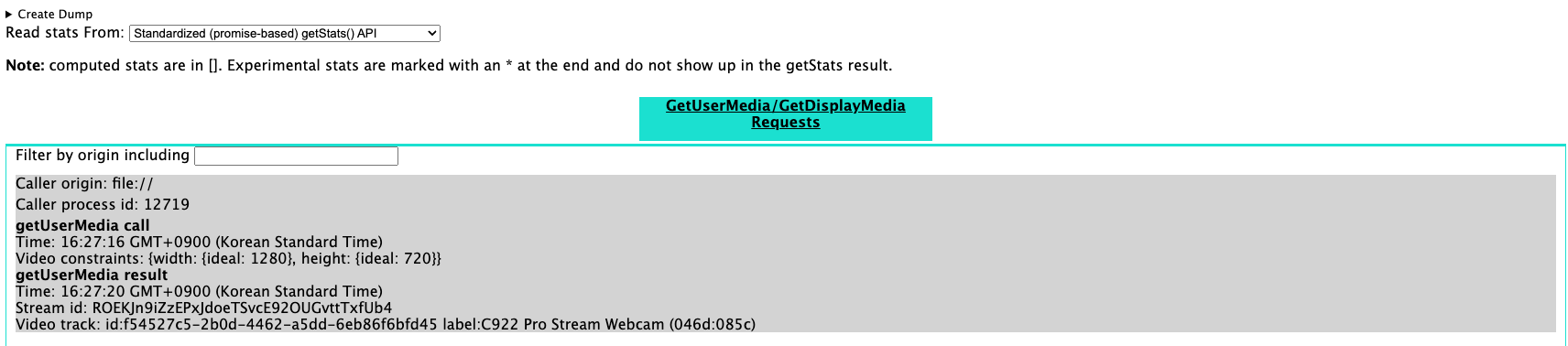

First, run the Get a Media Stream example application, then open chrome://webrtc-internals/ in another tab. You will see an inspection page like the one below:

The page lets you trace the details of WebRTC requests. To learn more about debugging WebRTC, check out WebRTC in Chrome browser.