As discussed in Module 2, "Working With SDP Messages," the Session Description Protocol (SDP) is a fundamental part of real-time communication systems. SDP drives the negotiation between peers, describing parameters such as codecs, media types, and network configuration. In this lesson, we'll explore the structure of SDP in detail and then look at SDP munging, a powerful but risky technique for customizing WebRTC behavior.

Understanding SDP: The Foundation of WebRTC Negotiation

SDP is a text-based protocol standardized by the IETF (Internet Engineering Task Force), originally in RFC 4566 and now in RFC 8866, which obsoletes it. It gives endpoints a common format for describing multimedia sessions for purposes such as session announcement, session invitation, and other forms of multimedia session initiation.

SDP is not a transport protocol; it is a format for describing sessions. In WebRTC, it is the language peers use to tell each other their capabilities and preferences while the connection is being established. The SDP messages themselves travel over whatever signaling channel your application provides.

The Anatomy of an SDP Message

If you've used debugging tools like Chrome's WebRTC internals page (chrome://webrtc-internals/), you've likely encountered SDP messages. At first glance they can look cryptic: a series of letters and values that seem difficult to decipher. Here is the example session description from RFC 4566:

```
v=0
o=jdoe 2890844526 2890842807 IN IP4 10.47.16.5
s=SDP Seminar
i=A Seminar on the session description protocol
u=http://www.example.com/seminars/sdp.pdf
e=j.doe@example.com (Jane Doe)
c=IN IP4 224.2.17.12/127
t=2873397496 2873404696
a=recvonly
m=audio 49170 RTP/AVP 0
m=video 51372 RTP/AVP 99
a=rtpmap:99 h263-1998/90000
```

Each line in an SDP message has the form `<type>=<value>`, where `<type>` is a single character that designates the kind of information the line carries; no whitespace is allowed on either side of the `=`. The line types follow a structured format and must appear in the order defined by the specification.
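Because every line follows the `<type>=<value>` pattern, a few lines of code are enough to split an SDP blob into its session-level lines and its media sections. The sketch below is illustrative rather than a complete parser: `splitSdp`, `lineType`, and `SdpSections` are hypothetical names, and the code assumes well-formed input without validating line order.

```typescript
// Hypothetical helper: split an SDP blob into session-level lines and one
// array of lines per media section (each array starts with its m= line).
interface SdpSections {
  session: string[];
  media: string[][];
}

function splitSdp(sdp: string): SdpSections {
  // SDP lines end in CRLF; also accept bare LF and drop empty lines.
  const lines = sdp.split(/\r?\n/).filter((line) => line.length > 0);
  const result: SdpSections = { session: [], media: [] };
  for (const line of lines) {
    if (line.startsWith('m=')) {
      // Every m= line opens a new media section.
      result.media.push([line]);
    } else if (result.media.length === 0) {
      // Lines before the first m= line describe the whole session.
      result.session.push(line);
    } else {
      // Anything else belongs to the most recent media section.
      result.media[result.media.length - 1].push(line);
    }
  }
  return result;
}

// The line type is the single character in front of "=".
function lineType(line: string): string {
  return line.charAt(0);
}
```

Applied to the example above, `splitSdp` returns nine session-level lines (`v=` through `a=recvonly`) and two media sections, the second holding `m=video 51372 RTP/AVP 99` together with its `a=rtpmap:99` attribute.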

Key SDP Fields Explained

Let's break down the essential components of an SDP message:

Session-Level Fields

These fields describe the session as a whole and appear before the first `m=` line:

- `v=` (Protocol Version): Always set to `0` for the current version of SDP. Example: `v=0`

- `o=` (Origin): Identifies the session originator: username, session ID, session version, network type, address type, and address. Example: `o=jdoe 2890844526 2890842807 IN IP4 10.47.16.5`. Breaking this down:
  - `jdoe`: Username (in WebRTC, browsers typically emit `-` or an auto-generated value)
  - `2890844526`: Session ID (a unique identifier)
  - `2890842807`: Session version (incremented each time the session is modified)
  - `IN`: Network type (Internet)
  - `IP4`: Address type (IPv4)
  - `10.47.16.5`: Address of the originator

- `s=` (Session Name): A human-readable name for the session. Example: `s=SDP Seminar` (WebRTC implementations typically use `s=-`)

- `t=` (Timing): The start and stop times of the session, expressed as Network Time Protocol (NTP) timestamps in seconds. Example: `t=2873397496 2873404696`. In WebRTC both values are typically `0` (`t=0 0`), meaning the session is unbounded.

- `c=` (Connection Information): Network type, address type, and connection address. It can appear at the session level or inside individual media sections. Example: `c=IN IP4 224.2.17.12/127` (a multicast address with a TTL of 127)
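The `o=` breakdown above maps directly onto a space-separated split. The following sketch uses hypothetical names (`parseOrigin`, `Origin`) and keeps the session ID and version as strings, because browser-generated values can exceed JavaScript's safe integer range.

```typescript
// Hypothetical helper: break an o= line into its six named fields.
interface Origin {
  username: string;
  sessionId: string;
  sessionVersion: string;
  netType: string;
  addrType: string;
  address: string;
}

function parseOrigin(line: string): Origin | null {
  if (!line.startsWith('o=')) return null;
  const fields = line.slice(2).split(' ');
  if (fields.length !== 6) return null;
  // Session ID and version stay strings: browser-generated IDs can be larger
  // than Number.MAX_SAFE_INTEGER and would lose precision as numbers.
  const [username, sessionId, sessionVersion, netType, addrType, address] = fields;
  return { username, sessionId, sessionVersion, netType, addrType, address };
}
```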

Optional Session-Level Fields

- `i=` (Session Information): Additional textual information about the session. Example: `i=A Seminar on the session description protocol`

- `u=` (URI): A URI pointing to more information about the session. Example: `u=http://www.example.com/seminars/sdp.pdf`

- `e=` (Email Address): Contact email for the person responsible for the session. Example: `e=j.doe@example.com (Jane Doe)`

Media-Level Fields

After the session-level information, SDP contains one or more media sections, each beginning with an m= line:

- `m=` (Media Description): Specifies the media type, port, transport protocol, and format descriptions. Examples: `m=audio 49170 RTP/AVP 0` and `m=video 51372 RTP/AVP 99`. Each `m=` line follows this format:

  `m=<media type> <port> <protocol> <format/payload type> ...`

  Where:
  - `<media type>`: "audio", "video", "text", "application", or "message"
  - `<port>`: The transport port for the media
  - `<protocol>`: The transport protocol (e.g., "udp", "RTP/AVP", "RTP/SAVP"; WebRTC uses "UDP/TLS/RTP/SAVPF" for audio and video and "UDP/DTLS/SCTP" for data channels)
  - `<format/payload type>`: One or more payload type numbers (codecs) supported for this media

- `a=` (Attribute): Attributes that provide additional information about the session or a media stream. Examples: `a=recvonly` and `a=rtpmap:99 h263-1998/90000`. The `a=rtpmap` attribute maps the payload type number (here, 99) to a codec (h263-1998) with a clock rate (90000 Hz).
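Both the `m=` format and the `a=rtpmap` mapping can be read with one regular expression each. This is a sketch that assumes one line at a time as input; `parseMediaLine`, `parseRtpMap`, and their result types are hypothetical names. The port pattern also accepts the optional `/<number of ports>` suffix that SDP allows.

```typescript
interface MediaLine {
  media: string;
  port: number;
  protocol: string;
  formats: string[]; // payload types, in preference order
}

interface RtpMap {
  payload: number;
  codec: string;
  clockRate: number;
  channels?: number; // present for multi-channel audio such as opus/48000/2
}

// Hypothetical helper: m=<media type> <port>[/<count>] <protocol> <format> ...
function parseMediaLine(line: string): MediaLine | null {
  const match = /^m=(\S+) (\d+)(?:\/\d+)? (\S+)(?: (.*))?$/.exec(line);
  if (!match) return null;
  return {
    media: match[1],
    port: Number(match[2]),
    protocol: match[3],
    formats: match[4] ? match[4].split(' ') : [],
  };
}

// Hypothetical helper: a=rtpmap:<payload> <codec>/<clock rate>[/<channels>]
function parseRtpMap(line: string): RtpMap | null {
  const match = /^a=rtpmap:(\d+) ([^/]+)\/(\d+)(?:\/(\d+))?$/.exec(line);
  if (!match) return null;
  return {
    payload: Number(match[1]),
    codec: match[2],
    clockRate: Number(match[3]),
    channels: match[4] ? Number(match[4]) : undefined,
  };
}
```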

Media Attributes Deep Dive

Attribute (`a=`) lines matter most in WebRTC because they carry the codec, encryption, ICE, and RTP configuration. Here are the attribute types you'll encounter most often:

Codec-Related Attributes

- `a=rtpmap`: Maps a payload type number to a codec name, clock rate, and (for audio) channel count. Examples: `a=rtpmap:96 VP8/90000` and `a=rtpmap:111 opus/48000/2`

- `a=fmtp` (Format Parameters): Provides additional parameters for a specific payload type. Example: `a=fmtp:111 minptime=10;useinbandfec=1;usedtx=0`. This configures the Opus audio codec (payload type 111) with a minimum packet time of 10 ms, enables in-band forward error correction, and disables discontinuous transmission (DTX).
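Because `a=fmtp` parameters are `;`-separated `name=value` pairs, editing them through a small map is less error-prone than patching the raw string. In this sketch (the `parseFmtp` and `writeFmtp` helpers are hypothetical), parameters without `=` are kept with an empty value, and insertion order is preserved so a rewritten line changes only what you touched.

```typescript
// Hypothetical helper: "a=fmtp:111 minptime=10;useinbandfec=1;usedtx=0"
// becomes a payload type plus a Map of parameter name -> value.
function parseFmtp(line: string): { payload: number; params: Map<string, string> } | null {
  const match = /^a=fmtp:(\d+) (.*)$/.exec(line);
  if (!match) return null;
  const params = new Map<string, string>();
  for (const pair of match[2].split(';')) {
    const trimmed = pair.trim();
    if (!trimmed) continue;
    const eq = trimmed.indexOf('=');
    // Parameters without "=" (e.g. RED's "111/111") keep an empty value.
    if (eq === -1) params.set(trimmed, '');
    else params.set(trimmed.slice(0, eq), trimmed.slice(eq + 1));
  }
  return { payload: Number(match[1]), params };
}

// Inverse operation: rebuild the fmtp line in the original parameter order.
function writeFmtp(payload: number, params: Map<string, string>): string {
  const parts: string[] = [];
  params.forEach((value, key) => parts.push(value === '' ? key : `${key}=${value}`));
  return `a=fmtp:${payload} ${parts.join(';')}`;
}
```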

Direction Attributes

These specify the direction of media flow:

- `a=sendrecv`: The endpoint can send and receive this media (the default when no direction is given).
- `a=sendonly`: The endpoint will only send this media.
- `a=recvonly`: The endpoint will only receive this media.
- `a=inactive`: The endpoint will neither send nor receive this media, for example while a call is on hold.
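Direction attributes can appear at the session level as well as in a media section, and a media-level attribute takes precedence over a session-level one. A minimal sketch of that resolution rule, assuming the section lines have already been split out (`findDirection` and `mediaDirection` are hypothetical helpers):

```typescript
type Direction = 'sendrecv' | 'sendonly' | 'recvonly' | 'inactive';
const DIRECTIONS: string[] = ['sendrecv', 'sendonly', 'recvonly', 'inactive'];

// Returns the first direction attribute found in a list of SDP lines.
function findDirection(lines: string[]): Direction | undefined {
  for (const line of lines) {
    if (line.startsWith('a=') && DIRECTIONS.indexOf(line.slice(2)) !== -1) {
      return line.slice(2) as Direction;
    }
  }
  return undefined;
}

// Media-level direction wins, then session-level, then the default sendrecv.
function mediaDirection(sessionLines: string[], mediaLines: string[]): Direction {
  return findDirection(mediaLines) ?? findDirection(sessionLines) ?? 'sendrecv';
}
```

In the RFC 4566 example earlier, `a=recvonly` sits at the session level, so both media sections resolve to `recvonly`.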

ICE-Related Attributes

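- `a=ice-ufrag`: The ICE username fragment. Example: `a=ice-ufrag:F7gI`

- `a=ice-pwd`: The ICE password. Example: `a=ice-pwd:x9cml/YzichV2+XlhiMu8g`

- `a=candidate`: An ICE candidate, a transport address the remote peer can try to reach. Example: `a=candidate:1 1 UDP 2130706431 192.168.1.5 49203 typ host`. The fields are foundation, component ID, transport, priority, connection address, port, and candidate type.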
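Each `a=candidate` value is a fixed sequence of space-separated fields, optionally followed by `name value` extension pairs such as `raddr`/`rport`. The parser below is a sketch (`parseCandidate` and `IceCandidate` are hypothetical names); it also accepts the bare `candidate:...` form found in `RTCIceCandidate.candidate`. The `icePriority` function applies the priority formula from RFC 8445, which explains the example's value of 2130706431: a host type preference of 126, a local preference of 65535, and component 1.

```typescript
interface IceCandidate {
  foundation: string;
  component: number;
  transport: string;
  priority: number;
  address: string;
  port: number;
  type: string; // host, srflx, prflx, or relay
  relatedAddress?: string;
  relatedPort?: number;
}

// Hypothetical helper for lines such as
// "a=candidate:1 1 UDP 2130706431 192.168.1.5 49203 typ host"
function parseCandidate(line: string): IceCandidate | null {
  const body = line.startsWith('a=') ? line.slice(2) : line;
  if (!body.startsWith('candidate:')) return null;
  const parts = body.slice('candidate:'.length).split(' ');
  // Six mandatory fields, the literal "typ", then the candidate type.
  if (parts.length < 8 || parts[6] !== 'typ') return null;
  const candidate: IceCandidate = {
    foundation: parts[0],
    component: Number(parts[1]),
    transport: parts[2],
    priority: Number(parts[3]),
    address: parts[4],
    port: Number(parts[5]),
    type: parts[7],
  };
  // Extensions follow as "name value" pairs; pick out the related address.
  for (let i = 8; i + 1 < parts.length; i += 2) {
    if (parts[i] === 'raddr') candidate.relatedAddress = parts[i + 1];
    if (parts[i] === 'rport') candidate.relatedPort = Number(parts[i + 1]);
  }
  return candidate;
}

// RFC 8445: priority = 2^24 * type pref + 2^8 * local pref + (256 - component)
function icePriority(typePref: number, localPref: number, componentId: number): number {
  return typePref * 2 ** 24 + localPref * 2 ** 8 + (256 - componentId);
}
```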

DTLS-Related Attributes

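- `a=fingerprint`: The hash of the endpoint's DTLS certificate, used to authenticate the DTLS handshake. Example: `a=fingerprint:sha-256 8C:71:B3:...:E2:1B:F7`

- `a=setup`: The DTLS role (`active`, `passive`, or `actpass`). Offers use `actpass`, and the answerer chooses `active` or `passive`. Example: `a=setup:actpass`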

RTP-Related Attributes

- `a=ssrc`: Declares an RTP synchronization source (SSRC) identifier and an attribute attached to it. Example: `a=ssrc:1234 cname:user@example.com`

- `a=rtcp-mux`: Indicates that RTP and RTCP are multiplexed on the same port. Example: `a=rtcp-mux`
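A media section can contain several `a=ssrc` lines for the same SSRC (for example `cname` and `msid`), so it's convenient to group them. A sketch with a hypothetical `parseSsrcLines` helper, assuming attribute names never contain `:`:

```typescript
// Hypothetical helper: group "a=ssrc:<id> <attribute>[:<value>]" lines by SSRC.
function parseSsrcLines(lines: string[]): Map<number, Map<string, string>> {
  const bySsrc = new Map<number, Map<string, string>>();
  for (const line of lines) {
    const match = /^a=ssrc:(\d+) ([^:]+)(?::(.*))?$/.exec(line);
    if (!match) continue; // not an a=ssrc line
    // SSRCs are 32-bit unsigned integers, which fit safely in a number.
    const ssrc = Number(match[1]);
    const attrs = bySsrc.get(ssrc) ?? new Map<string, string>();
    // Attributes without a value (no ":") are stored with an empty string.
    attrs.set(match[2], match[3] ?? '');
    bySsrc.set(ssrc, attrs);
  }
  return bySsrc;
}
```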

The Significance of SDP in WebRTC

Understanding SDP is crucial for WebRTC developers because:

- Negotiation Process: SDP forms the backbone of the WebRTC negotiation process. Peers exchange SDP messages to agree on media formats, codecs, and network parameters.

- Troubleshooting: When WebRTC connections fail, analyzing the SDP messages can often reveal the cause.

- Advanced Configuration: Certain WebRTC features require specific SDP configurations that may not be directly accessible through the standard APIs.

- Interoperability: Ensuring compatibility between different browsers and platforms often involves understanding, and sometimes modifying, SDP messages.

SDP Munging: Customizing WebRTC Behavior

SDP munging refers to the practice of manually modifying SDP messages to achieve specific behaviors that may not be directly accessible through standard WebRTC APIs. While powerful, this technique must be approached with caution.

The Double-Edged Sword of SDP Munging

Before diving into how to perform SDP munging, it's important to understand its advantages and potential pitfalls:

Advantages

- Enhanced Flexibility: Munging allows developers to implement features or behaviors not yet available through standard WebRTC APIs.

- Fine-Grained Control: It provides precise control over codec selection, bitrates, and other media parameters.

- Workarounds for Browser Inconsistencies: It can help overcome differences in WebRTC implementation across browsers.

Risks and Challenges

- Compatibility Issues: Incorrect munging can lead to connection failures, especially across different browsers or WebRTC versions.

- Future Maintenance Challenges: As WebRTC evolves, munged SDP may become incompatible with newer versions, requiring ongoing updates.

- Debugging Complexity: Troubleshooting becomes more complex when SDP has been manually modified.

- Standardization Problems: Munging often works around standards rather than following them, which can lead to non-standard behavior.

Best Practices for SDP Munging

If you need to implement SDP munging, follow these guidelines to minimize risks:

- Use Standard APIs First: Many operations that previously required munging can now be done with standard APIs. For example, instead of munging the `m=` line to change codec order:

```javascript
// Must run before createOffer()/createAnswer() to affect the generated SDP
const transceiver = pc.getTransceivers().find(t => t.receiver.track.kind === 'video');
// setCodecPreferences() expects codecs taken from the receiver capabilities
const { codecs } = RTCRtpReceiver.getCapabilities('video');
// Move VP8 to the front; sort() is stable, so the other codecs keep their order
const preferredCodecs = [...codecs].sort((a, b) =>
  Number(b.mimeType === 'video/VP8') - Number(a.mimeType === 'video/VP8')
);
transceiver?.setCodecPreferences(preferredCodecs);
```

- Document Everything: Keep detailed documentation of all munging operations, including:
  - Why standard APIs were insufficient
  - What specific SDP fields are being modified
  - Expected outcomes and potential side effects
  - Browser compatibility considerations

- Test Thoroughly: Test your munging code across all target browsers and devices, with a comprehensive test matrix.

- Use Parsing Libraries: Instead of regex-based string manipulation, use structured SDP parsing:

```javascript
// Example with the sdp-transform library
import * as sdpTransform from 'sdp-transform';

const parsedSdp = sdpTransform.parse(sdp);
// Modify the parsed object, not raw strings: each media section exposes
// its codecs in media.rtp and their parameters in media.fmtp
const video = parsedSdp.media.find(m => m.type === 'video');
// ... apply modifications to `video` here ...
const modifiedSdp = sdpTransform.write(parsedSdp);
```

- Validate Before Use: Always validate modified SDP before applying it to a connection.

- Implement Strict Error Handling: Catch errors and fall back gracefully to standard behavior.

- Log for Debugging: Keep comprehensive logs of both the original and the modified SDP.

- Stay Updated: Track WebRTC's evolution and adjust your munging strategies as standards change, especially as browsers introduce new APIs that make munging unnecessary.

Real-World SDP Munging Examples

Let's explore some practical examples of SDP munging, drawing from real-world applications like the Stream Video SDK.

Example 1: Prioritizing a Preferred Codec

When peers support multiple codecs, the order of payload types on the `m=` line expresses preference, with the most preferred codec listed first. By reordering codecs in the SDP, you can influence which codec the peers end up using.

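⚠️ Warning: Reorder codecs in the SDP returned by `createOffer()` or `createAnswer()` before passing it to `setLocalDescription()` (SLD). Changing the SDP after SLD, or sending the remote peer SDP that differs from what you applied locally, leaves the two sides with inconsistent views of the session (including its DTLS and BUNDLE parameters) and can break connectivity.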

Before diving into munging, consider using the standard WebRTC API when available:

```javascript
// Modern approach using the standard WebRTC API (preferred when available).
// Call this before createOffer()/createAnswer() so the SDP reflects it.
function setPreferredCodecWithTransceiver(pc, mediaType, preferredCodec) {
  // setCodecPreferences() expects codecs taken from the receiver capabilities
  const capabilities = RTCRtpReceiver.getCapabilities(mediaType);
  if (!capabilities) return; // media type not supported by this browser

  const wanted = preferredCodec.toLowerCase();
  for (const transceiver of pc.getTransceivers()) {
    // receiver.track always exists, even on transceivers with no sending track
    if (transceiver.receiver.track.kind !== mediaType) continue;

    // Move the preferred codec(s) to the front, keeping the rest in order
    const preferred = capabilities.codecs.filter(codec =>
      codec.mimeType.toLowerCase().includes(wanted)
    );
    const others = capabilities.codecs.filter(codec =>
      !codec.mimeType.toLowerCase().includes(wanted)
    );

    // Set codec preferences only if the preferred codec is available
    if (preferred.length > 0) {
      transceiver.setCodecPreferences([...preferred, ...others]);
      console.log(`Set preferred ${mediaType} codec to ${preferredCodec}`);
    }
  }
}
```

When the standard API isn't sufficient or you need cross-browser compatibility, here's how the Stream Video SDK implements codec prioritization through SDP munging:

```typescript
export const setPreferredCodec = (
  sdp: string,
  mediaType: 'video' | 'audio',
  preferredCodec: string,
) => {
  // Note: getMediaSection and moveCodecToFront are helpers that aren't shown.
  // getMediaSection parses the SDP to extract the media section and related
  // attributes; you'll need to implement it or use a library like sdp-transform.
  const section = getMediaSection(sdp, mediaType);
  if (!section) return sdp;

  const rtpMap = section.rtpMap.find(
    (r) => r.codec.toLowerCase() === preferredCodec.toLowerCase(),
  );
  const codecId = rtpMap?.payload;
  // Compare with undefined: payload type 0 (PCMU) is falsy but valid
  if (codecId === undefined) return sdp;

  const newCodecOrder = moveCodecToFront(section.media.codecOrder, codecId);

  // Create the modified SDP by rewriting the m= line
  const result = sdp.replace(
    section.media.original,
    `${section.media.mediaWithPorts} ${newCodecOrder}`,
  );

  // Log for debugging
  console.log('Modified codec order SDP:', result);
  return result;
};
```

Let's break down this process step by step:

- Locate the Media Section: First, find the relevant media section (audio or video) in the SDP.

- Identify the Preferred Codec: Find the payload type ID for the preferred codec by searching the `rtpmap` entries.

- Reorder the Codecs: Move the preferred codec to the front of the list in the media line.

For example, to prioritize VP8 (payload type 96) in a video stream, we might transform this line:

```
m=video 9 UDP/TLS/RTP/SAVPF 100 101 96 97 35 36 102 125 127
```

into this:

```
m=video 9 UDP/TLS/RTP/SAVPF 96 100 101 97 35 36 102 125 127
```

This simple reordering tells the WebRTC engine to prefer VP8 over the other codecs when establishing the connection.
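The `moveCodecToFront` helper used above isn't shown. A self-contained sketch of the same idea that operates on a whole `m=` line (the name `moveFormatToFront` is hypothetical) reproduces the VP8 example exactly:

```typescript
// Hypothetical helper: move one payload type to the front of an m= line's
// format list, keeping the relative order of all the others.
function moveFormatToFront(mLine: string, payload: string): string {
  const parts = mLine.split(' ');
  // The first three tokens are "m=<media>", "<port>" and "<protocol>".
  const header = parts.slice(0, 3);
  const formats = parts.slice(3);
  if (formats.indexOf(payload) === -1) return mLine; // nothing to move
  const reordered = [payload, ...formats.filter((f) => f !== payload)];
  return [...header, ...reordered].join(' ');
}
```

Only the listed payload type moves. Associated entries such as the codec's RTX payload keep their positions, since they are linked to the codec through `apt=` in their `fmtp` line rather than by order.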

Example 2: Toggling Discontinuous Transmission (DTX)

DTX (Discontinuous Transmission) is a feature in audio codecs that reduces bandwidth usage during silence by sending fewer packets when no one is speaking. Enabling or disabling this feature can be achieved through SDP munging:

```typescript
export const toggleDtx = (sdp: string, enable: boolean): string => {
  // getOpusFmtp is a helper (not shown) returning the Opus fmtp line as
  // { original: the full "a=fmtp:..." line, config: its parameter string }
  const opusFmtp = getOpusFmtp(sdp);
  if (!opusFmtp) return sdp;

  const matchDtx = /usedtx=(\d)/.exec(opusFmtp.config);
  const requiredDtxConfig = `usedtx=${enable ? '1' : '0'}`;

  if (matchDtx) {
    // Update the existing usedtx parameter in place
    const newFmtp = opusFmtp.original.replace(/usedtx=(\d)/, requiredDtxConfig);
    return sdp.replace(opusFmtp.original, newFmtp);
  } else {
    // Append usedtx when it isn't present yet
    const newFmtp = `${opusFmtp.original};${requiredDtxConfig}`;
    return sdp.replace(opusFmtp.original, newFmtp);
  }
};
```

Here's the process:

- Find the Opus Codec Configuration: Locate the `fmtp` line for the Opus audio codec.

- Check for an Existing DTX Setting: Determine whether the `usedtx` parameter is already present in the configuration.

- Modify or Add the DTX Setting: Either update the existing `usedtx` value or append it if it doesn't exist.
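The `getOpusFmtp` helper used by `toggleDtx` isn't shown. One way to write it, sketched here under the assumption that its result has the `{ original, config }` shape `toggleDtx` reads, is to look up the payload type that `a=rtpmap` maps to `opus` and then fetch that payload's `a=fmtp` line. `findOpusFmtp` and `setOpusDtx` are hypothetical names.

```typescript
interface OpusFmtp {
  original: string; // the full "a=fmtp:<pt> ..." line, without line ending
  config: string; // everything after "a=fmtp:<pt> "
}

// Hypothetical stand-in for getOpusFmtp: rtpmap lookup, then fmtp lookup.
function findOpusFmtp(sdp: string): OpusFmtp | null {
  const rtpmap = /^a=rtpmap:(\d+) opus\/48000/im.exec(sdp);
  if (!rtpmap) return null;
  // The space after the payload type prevents partial-number matches.
  const fmtp = new RegExp(`^a=fmtp:${rtpmap[1]} ([^\\r\\n]*)`, 'm').exec(sdp);
  if (!fmtp) return null;
  return { original: fmtp[0], config: fmtp[1] };
}

// Same logic as toggleDtx, built on the helper above.
function setOpusDtx(sdp: string, enable: boolean): string {
  const opus = findOpusFmtp(sdp);
  if (!opus) return sdp;
  const wanted = `usedtx=${enable ? 1 : 0}`;
  const updated = /usedtx=\d/.test(opus.config)
    ? opus.original.replace(/usedtx=\d/, wanted)
    : `${opus.original};${wanted}`;
  return sdp.replace(opus.original, updated);
}
```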

For example, to enable DTX on an Opus codec, we might transform:

```
a=fmtp:111 minptime=10;useinbandfec=1;usedtx=0
```

into:

```
a=fmtp:111 minptime=10;useinbandfec=1;usedtx=1
```

Or, if DTX wasn't specified, we might add it, transforming:

```
a=fmtp:111 minptime=10;useinbandfec=1
```

into:

```
a=fmtp:111 minptime=10;useinbandfec=1;usedtx=1
```

Example 3: Modifying Bandwidth Parameters

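Bandwidth limits can also be expressed in the SDP itself, using `b=` lines inside a media section. SDP munging lets you add or replace them:

```typescript
export const setBandwidth = (
  sdp: string,
  mediaType: 'video' | 'audio',
  bandwidthBps: number, // bandwidth in bits per second
): string => {
  const lines = sdp.split('\r\n');

  // Locate the first media section of the requested type
  const start = lines.findIndex((line) => line.startsWith(`m=${mediaType} `));
  if (start === -1) return sdp;
  // The section runs until the next m= line (or the end of the SDP)
  let end = lines.findIndex((line, i) => i > start && line.startsWith('m='));
  if (end === -1) end = lines.length;

  // Remove existing bandwidth lines from this section only
  const section = lines
    .slice(start, end)
    .filter((line) => !line.startsWith('b=AS:') && !line.startsWith('b=TIAS:'));

  // RFC 8866 orders a media description as m=, i=, c=, b=, ..., a=,
  // so insert after any i= and c= lines that follow the m= line
  let insertAt = 1;
  while (insertAt < section.length && /^[ic]=/.test(section[insertAt])) insertAt++;

  // TIAS is in bits per second, AS in kilobits per second
  const asBandwidth = Math.floor(bandwidthBps / 1000);
  section.splice(insertAt, 0, `b=TIAS:${bandwidthBps}`, `b=AS:${asBandwidth}`);

  const result = [...lines.slice(0, start), ...section, ...lines.slice(end)].join('\r\n');

  // Log for debugging
  console.log('Modified bandwidth SDP:', result);
  return result;
};
```

This function:

- Locates the Media Section: Finds the first audio or video section in the SDP.

- Removes Existing Bandwidth Settings: Drops any `b=AS` and `b=TIAS` lines in that section only, leaving other media sections untouched.

- Adds New Bandwidth Limits: Inserts `b=TIAS` and `b=AS` lines after the section's `c=` line (or directly after the `m=` line when there is no `c=` line), which is where RFC 8866 places `b=` lines within a media description.

A `b=` line tells the other side how much bandwidth the author of the SDP would like to receive. To cap what your own browser sends, the limit has to appear in the remote description, which is why bandwidth munging is often applied to the remote SDP just before `setRemoteDescription()`.

Important Note: `b=TIAS` (Transport Independent Application Specific maximum), defined in RFC 3890, is expressed in bits per second and excludes transport overhead, while the older `b=AS` (Application Specific) value is expressed in kilobits per second. Browsers have historically differed in which of the two they honor, so including both provides better compatibility.

For example, to set a 2 Mbps limit for video, the function would add:

```
b=TIAS:2000000
b=AS:2000
```

to the video media section, with TIAS specified in bits per second (2,000,000 bps) and AS in kilobits per second (2,000 kbps).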
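As with codec order, a standard API covers the most common case, capping what your own side sends: `RTCRtpSender.setParameters()` accepts a `maxBitrate` (in bits per second) for each encoding. The sketch below assumes a sender obtained from `pc.getSenders()`; `SenderLike` and the related interfaces are hypothetical structural types (not DOM types) so the logic can be exercised with a stub outside the browser.

```typescript
// Minimal structural types for the parts of RTCRtpSender used here
// (hypothetical names; in a browser, pass a real RTCRtpSender).
interface EncodingLike {
  maxBitrate?: number;
}
interface ParametersLike {
  encodings: EncodingLike[];
}
interface SenderLike {
  getParameters(): ParametersLike;
  setParameters(parameters: ParametersLike): Promise<void>;
}

// Caps every encoding of a sender at maxBitrateBps. setParameters() must be
// given the object returned by getParameters(), modified in place.
async function capSenderBitrate(sender: SenderLike, maxBitrateBps: number): Promise<boolean> {
  const parameters = sender.getParameters();
  // Some browser versions report no encodings before negotiation completes.
  if (!parameters.encodings || parameters.encodings.length === 0) return false;
  for (const encoding of parameters.encodings) {
    encoding.maxBitrate = maxBitrateBps;
  }
  await sender.setParameters(parameters);
  return true;
}
```

Unlike `b=` munging, a `setParameters()` change takes effect without renegotiating the session.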

Example 4: Enabling Stereo Audio

For applications requiring high-quality audio, enabling stereo can be important. This often requires modifying the Opus codec parameters:

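```typescript
export const enableStereo = (sdp: string): string => {
  // getOpusFmtp is the same helper used in Example 2 (not shown)
  const opusFmtp = getOpusFmtp(sdp);
  if (!opusFmtp) return sdp;

  const config = opusFmtp.config;
  // Check whether stereo is already enabled; match "stereo" as a whole
  // parameter so that "sprop-stereo=1" is not mistaken for it
  if (/(^|;)\s*stereo=1\b/.test(config)) {
    return sdp; // Already enabled, no changes needed
  }

  // Flip an existing stereo=0, otherwise append stereo=1
  const newConfig = /(^|;)\s*stereo=\d/.test(config)
    ? config.replace(/((?:^|;)\s*)stereo=\d/, '$1stereo=1')
    : `${config};stereo=1`;
  const newFmtp = opusFmtp.original.replace(config, newConfig);
  return sdp.replace(opusFmtp.original, newFmtp);
};
```

This function:

- Finds the Opus Configuration: Locates the `fmtp` line for the Opus codec.

- Checks if Stereo Is Already Enabled: Avoids making unnecessary changes, matching `stereo` as a whole parameter so that `sprop-stereo=1` is not mistaken for it.

- Adds the Stereo Parameter: Appends `stereo=1`, or switches an existing `stereo=0` to `stereo=1`.

Per RFC 7587, `stereo=1` tells the other side that this endpoint's decoder prefers to receive stereo, while `sprop-stereo=1` signals that the sender is likely to send stereo. To make your own browser send stereo, `stereo=1` therefore has to appear in the SDP you receive from the remote peer.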

The modification would change:

```
a=fmtp:111 minptime=10;useinbandfec=1
```

to:

```
a=fmtp:111 minptime=10;useinbandfec=1;stereo=1
```

Example 5: Removing Unwanted Codecs

Sometimes you want to ensure specific codecs aren't used, either for performance or compatibility reasons:

```typescript
export const removeCodec = (
  sdp: string,
  mediaType: 'video' | 'audio',
  codecToRemove: string,
): string => {
  // getMediaSection is the same helper used in Example 1 (not shown)
  const section = getMediaSection(sdp, mediaType);
  if (!section) return sdp;

  // Find every payload ID mapped to this codec; browsers often offer H.264
  // under several payload types (one per profile/packetization mode)
  const payloadIds = section.rtpMap
    .filter((r) => r.codec.toLowerCase() === codecToRemove.toLowerCase())
    .map((r) => String(r.payload));
  if (payloadIds.length === 0) return sdp; // Codec not found

  // Remove the codec's payload IDs from the m= line
  const filteredCodecs = section.media.codecOrder
    .split(' ')
    .filter((id) => !payloadIds.includes(id))
    .join(' ');
  let result = sdp.replace(
    section.media.original,
    `${section.media.mediaWithPorts} ${filteredCodecs}`,
  );

  // Remove the attribute lines keyed by each payload ID: rtpmap, fmtp, and
  // rtcp-fb (important and often forgotten). The space after the ID stops
  // payload 12 from also matching 125. A generic "a=<anything>:<id>" pattern
  // is avoided because it would also delete lines such as "a=extmap:9 ...".
  for (const id of payloadIds) {
    const attrLine = new RegExp(`^a=(?:rtpmap|fmtp|rtcp-fb):${id} .*\\r\\n`, 'gm');
    result = result.replace(attrLine, '');
  }

  // Log for debugging
  console.log('Modified SDP after codec removal:', result);
  return result;
};
```

This function:

- Identifies the Codec's Payload IDs: Finds the numeric identifier of every payload type mapped to the codec being removed.

- Removes the Codec from the Media Line: Filters those payload IDs out of the list of supported formats.

- Removes the Associated Attributes: Deletes the `rtpmap`, `fmtp`, and `rtcp-fb` lines that reference the removed payload IDs.

⚠️ Important: When removing codecs, you must clean up all associated attribute lines to avoid leaving SDP in an inconsistent state. Missing this step is a common cause of WebRTC connectivity failures after munging.

For instance, to remove H.264 (payload ID 125, assuming it is the only H.264 payload type in the section) from the video options, the function would transform:

```
m=video 9 UDP/TLS/RTP/SAVPF 96 97 98 99 100 101 102 122 123 124 125 127
a=rtpmap:125 H264/90000
a=fmtp:125 level-asymmetry-allowed=1;packetization-mode=1;profile-level-id=42e01f
a=rtcp-fb:125 nack
a=rtcp-fb:125 nack pli
a=rtcp-fb:125 ccm fir
```

into:

```
m=video 9 UDP/TLS/RTP/SAVPF 96 97 98 99 100 101 102 122 123 124 127
```

with all the attribute lines for payload 125 removed, leaving a consistent SDP structure.
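Removing a codec can also leave its retransmission (RTX) payload behind. RTX is advertised as a separate payload type whose `fmtp` line points back at the original codec with `apt=` (associated payload type, RFC 4588), for example `a=fmtp:107 apt=125`. The hypothetical helper below finds those RTX payload types so they can be removed alongside the codec; it is a sketch that only inspects `a=fmtp` lines.

```typescript
// Hypothetical helper: return the RTX payload types whose a=fmtp line
// declares apt=<one of the given payload types>, in order of appearance.
function findRtxPayloads(sdp: string, payloads: string[]): string[] {
  const rtx: string[] = [];
  // In multiline mode, "." stops before \r and "$" matches before it.
  const fmtpLine = /^a=fmtp:(\d+) (.*)$/gm;
  let match: RegExpExecArray | null;
  while ((match = fmtpLine.exec(sdp)) !== null) {
    // fmtp parameters are ";"-separated name=value pairs
    for (const param of match[2].split(';')) {
      const [name, value] = param.trim().split('=');
      if (name === 'apt' && payloads.indexOf(value) !== -1) {
        rtx.push(match[1]);
      }
    }
  }
  return rtx;
}
```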

Advanced Considerations for SDP Munging

As you become more comfortable with SDP munging, there are several advanced aspects to consider:

Timing of SDP Modifications

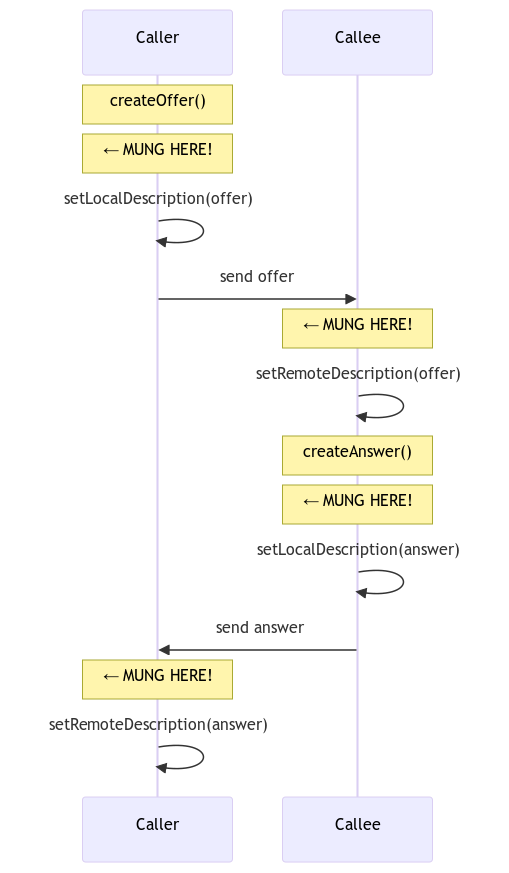

The timing of when you perform SDP munging in the WebRTC connection process is critical. Here's a visualization of the SDP lifecycle and when munging should occur:

The ideal points to munge SDP are:

- After `createOffer()` but before `setLocalDescription()`
- After `createAnswer()` but before `setLocalDescription()`
- After receiving remote SDP but before `setRemoteDescription()`

```javascript
// Example: munging SDP at the right time.
// modifySdp, validateSdp, and sendSignalingMessage are application-specific helpers.
pc.createOffer()
  .then(offer => {
    // 1. Log the original SDP for debugging
    console.log('Original offer SDP:', offer.sdp);

    // 2. Modify the offer SDP before setting it as the local description
    const modifiedSdp = modifySdp(offer.sdp);

    // 3. Validate the modified SDP (ideally using a library like sdp-transform)
    try {
      validateSdp(modifiedSdp);
    } catch (error) {
      console.error('SDP munging produced invalid SDP:', error);
      // Fall back to the original SDP
      return pc.setLocalDescription(offer);
    }

    // 4. Log the modified SDP for debugging
    console.log('Modified offer SDP:', modifiedSdp);

    // setLocalDescription() accepts a plain { type, sdp } object
    return pc.setLocalDescription({ type: 'offer', sdp: modifiedSdp });
  })
  .then(() => {
    // Send exactly what was applied locally to the remote peer
    sendSignalingMessage({
      type: 'offer',
      sdp: pc.localDescription.sdp,
    });
  })
  .catch(error => console.error('Error creating offer:', error));
```

Browser-Specific Considerations

Different browsers may generate slightly different SDP formats, requiring adaptations in your munging code:

```javascript
function mungeBasedOnBrowser(sdp) {
  // detectBrowser and the mungeFor* functions are application-specific
  const browser = detectBrowser();

  if (browser === 'firefox') {
    // Firefox-specific munging
    return mungeForFirefox(sdp);
  } else if (browser === 'safari') {
    // Safari-specific munging
    return mungeForSafari(sdp);
  } else {
    // Default (Chrome) munging
    return mungeForChrome(sdp);
  }
}
```

Regression Testing

Given the complexity of SDP munging, implementing comprehensive testing is crucial:

```javascript
function testSdpMunging() {
  // Test cases for different browsers and scenarios
  // (chromeSdpSample and expectedChromeSdpAfterMunging are captured fixtures)
  const testCases = [
    {
      name: 'Chrome video codec prioritization',
      input: chromeSdpSample,
      expected: expectedChromeSdpAfterMunging,
      operation: sdp => setPreferredCodec(sdp, 'video', 'VP8'),
    },
    // Additional test cases...
  ];

  // Run tests
  testCases.forEach(test => {
    const result = test.operation(test.input);
    if (result === test.expected) {
      console.log(`✅ Test passed: ${test.name}`);
    } else {
      console.error(`❌ Test failed: ${test.name}`);
      console.log('Expected:', test.expected);
      console.log('Got:', result);
    }
  });
}
```

Tools and Validation for Safe SDP Munging

To minimize the risks associated with SDP munging, consider using established libraries and validation techniques:

Using SDP Transform Libraries

Rather than manipulating SDP strings directly with regular expressions, consider using purpose-built libraries:

```javascript
// Example using the sdp-transform library
import * as sdpTransform from 'sdp-transform';

function safelyMungeSdp(sdpString, mediaType, operation) {
  try {
    // Parse SDP into a manipulable object
    const parsedSdp = sdpTransform.parse(sdpString);

    // Find the target media section
    const mediaSection = parsedSdp.media.find(m => m.type === mediaType);
    if (!mediaSection) return sdpString;

    // Apply the operation to the parsed object (safer than string manipulation)
    operation(mediaSection, parsedSdp);

    // Convert back to a string
    const modifiedSdp = sdpTransform.write(parsedSdp);

    // Validate the result before returning (isValidSdp is an application-specific check)
    if (!isValidSdp(modifiedSdp)) {
      console.warn('Munging produced invalid SDP, falling back to original');
      return sdpString;
    }
    return modifiedSdp;
  } catch (error) {
    console.error('Error during SDP munging:', error);
    // Always fall back to the original SDP on error
    return sdpString;
  }
}

// Usage example: move VP8 to the front of the video payload list
const modifiedSdp = safelyMungeSdp(originalSdp, 'video', (mediaSection) => {
  const vp8Codec = mediaSection.rtp?.find(c => c.codec.toLowerCase() === 'vp8');
  if (!vp8Codec) return;
  const pt = vp8Codec.payload; // a number in sdp-transform's output
  // payloads holds the m= line format list; it may be parsed as a number
  // when there is a single entry, so normalize it to a string first
  const others = String(mediaSection.payloads)
    .split(' ')
    .filter(p => Number(p) !== pt);
  mediaSection.payloads = [pt, ...others].join(' ');
});
```

SDP Validation and Fuzzing

Before applying munged SDP to a connection, validate it to catch potential errors:

```javascript
function validateSdp(sdp) {
  // Basic structural validation: a version line first, at least one m= line
  if (!sdp.startsWith('v=0') || !/^m=/m.test(sdp)) {
    throw new Error('SDP missing required sections');
  }

  // Check each media section separately (split before every m= line).
  // This is a heuristic: data channel (m=application) and rejected
  // (port 0) sections may legitimately carry no rtpmap lines.
  const mediaSections = sdp.split(/\r?\n(?=m=)/).slice(1);
  for (const section of mediaSections) {
    const needsRtpmap =
      !section.startsWith('m=application') && !/^m=\S+ 0 /.test(section);
    if (needsRtpmap && !/^a=rtpmap:/m.test(section)) {
      throw new Error('Media section missing rtpmap attributes');
    }
  }

  // Validate DTLS fingerprint format
  if (sdp.includes('a=fingerprint:') &&
      !/a=fingerprint:sha-\d+ [0-9A-F:]+/i.test(sdp)) {
    throw new Error('Invalid DTLS fingerprint format');
  }

  // More validations as needed...
  return true;
}
```

Conclusion: The Balancing Act of SDP Munging

SDP munging represents a powerful technique in the WebRTC developer's toolkit, allowing for customization and optimization beyond what standard APIs provide. However, it comes with significant responsibilities:

- Use Standard APIs First: Before resorting to SDP munging, check whether newer APIs like `RTCRtpTransceiver.setCodecPreferences()` can achieve your goal.

- Use Parsing Libraries: Prefer structured SDP manipulation with libraries like `sdp-transform` over direct string manipulation.

- Stay Informed: Keep up with the evolution of WebRTC standards to ensure your munging remains compatible.

- Test Rigorously: Thoroughly test your munging code across all target platforms and browsers.

- Validate and Log: Always validate munged SDP before use and maintain comprehensive logs for debugging.

- Implement Fallbacks: Fall back gracefully when munging fails or produces invalid SDP.

- Document Clearly: Maintain comprehensive documentation of all munging operations for future maintenance.

- Consider Long-term Maintenance: Be prepared to update your munging code as browsers update their WebRTC implementations.

By understanding the structure of SDP and approaching munging with appropriate caution, you can harness its power to create more flexible, performant, and feature-rich WebRTC applications. The key is striking the right balance between customization and adherence to standards.

Additional Resources

- Stream Video SDK GitHub Repository: Explore real-world implementations of SDP munging in a production library.

- SDP Specification (RFC 8866): The current specification for SDP, which obsoletes RFC 4566.

- WebRTC Samples SDP Section: Example applications demonstrating SDP handling in WebRTC.

- Chrome WebRTC Internals: A built-in Chrome tool for inspecting WebRTC connections and SDP messages.