Optimizing bandwidth usage is essential in real-time communication applications, and striking the right balance between bitrates and traffic priority is key to a good user experience. In this lesson, we will explore practical strategies for tuning bitrates and prioritizing different types of network traffic.

Understanding Bitrates and Traffic Priority

Before exploring balancing strategies, let's define the two core concepts: bitrate and traffic priority.

- Bitrate: The amount of data transferred or processed per unit of time, typically measured in bits per second (bps). In streaming services, a higher bitrate usually translates to better video or audio quality.

- Traffic Priority: Giving certain types of network traffic precedence over others. For example, real-time video conferencing could be prioritized over file downloads to keep calls fluid and uninterrupted.

The Challenge of Balancing Bandwidth

Bandwidth is a limited resource in any network, so managing bitrates and prioritizing different types of traffic is a crucial part of network management. Balancing the two involves a delicate interplay between network capacity, Quality of Service (QoS), and user experience.

- Network Capacity: The cornerstone of any communication system, network capacity is the maximum amount of data that can be transferred over a network per unit of time. The primary challenge is to make full use of the available bandwidth without causing congestion, which requires a thorough understanding of both the network's capabilities and the demands placed on it.

- Quality of Service (QoS): QoS is the management of data traffic to minimize packet loss and latency, giving essential applications precedence over less critical ones. It is especially important on networks where many types of traffic coexist: by prioritizing time-sensitive traffic such as live video, QoS maintains high service quality where it is most needed.

- User Experience: Ultimately, the success of balancing bitrates and traffic priority is measured by what users actually experience. Monitoring user feedback and network performance data is therefore crucial, because this ongoing assessment lets you fine-tune the balance between bitrates and traffic priorities over time.
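As one way to collect the network performance data mentioned above, the sketch below condenses a WebRTC stats report into a few health numbers. It reads standard stats fields (`availableOutgoingBitrate` and `currentRoundTripTime` on the active `candidate-pair`, `packetsLost`, `kind`, and `jitter` on `inbound-rtp`). The `StatsEntry` and `NetworkSnapshot` types and the `summarizeStats` helper are assumptions for illustration, and selecting the pair by `nominated`/`state` is a simplification.

```typescript
// Loose shape of one entry in an RTCStatsReport (a real report maps ids to such objects).
interface StatsEntry {
  type: string;
  [field: string]: unknown;
}

// Hypothetical summary type used by this sketch.
interface NetworkSnapshot {
  availableOutgoingBitrate?: number; // bits per second, estimated by the browser
  roundTripTimeSec?: number;         // seconds
  audioJitterSec?: number;           // seconds, from the inbound audio stream
  packetsLost: number;               // summed over all inbound RTP streams
}

function summarizeStats(entries: readonly StatsEntry[]): NetworkSnapshot {
  const snapshot: NetworkSnapshot = { packetsLost: 0 };
  for (const s of entries) {
    if (s.type === "candidate-pair" && s.nominated === true && s.state === "succeeded") {
      // Simplification: treat the nominated, succeeded pair as the active one.
      if (typeof s.availableOutgoingBitrate === "number") {
        snapshot.availableOutgoingBitrate = s.availableOutgoingBitrate;
      }
      if (typeof s.currentRoundTripTime === "number") {
        snapshot.roundTripTimeSec = s.currentRoundTripTime;
      }
    } else if (s.type === "inbound-rtp") {
      if (typeof s.packetsLost === "number") snapshot.packetsLost += s.packetsLost;
      if (s.kind === "audio" && typeof s.jitter === "number") snapshot.audioJitterSec = s.jitter;
    }
  }
  return snapshot;
}
```

In a browser you might call `summarizeStats(Array.from((await pc.getStats()).values()))` on a timer and log the results alongside user feedback.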

Now, let’s look at concrete strategies, first for balancing bitrates and then for prioritizing traffic types.

Strategies for Balancing Bitrates

As discussed above, seamless streaming and efficient data transfer depend on balancing bitrates well; this is a user-experience concern, not just a technical requirement. Three key strategies help achieve this balance: Adaptive Bitrate Streaming, Quality of Service (QoS) Control, and Traffic Shaping. Each plays a part in keeping network communication efficient, smooth, and high quality.

1. Adaptive Bitrate Streaming (ABR)

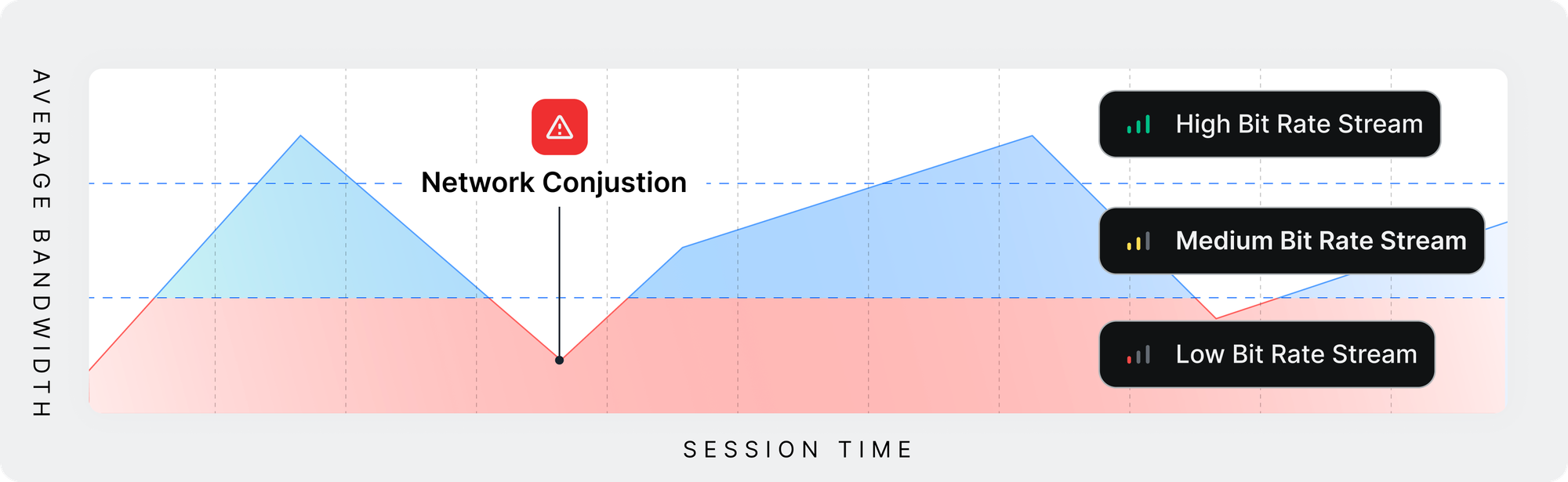

Adaptive Bitrate Streaming, used predominantly in media streaming, dynamically adjusts the quality of a video stream to match current network conditions and the capabilities of the user's device. It continuously measures the user's available bandwidth and CPU capacity and adjusts the stream's quality accordingly, as illustrated in the figure above.

Adopting this strategy enhances user experience. For example, in streaming scenarios, viewers receive video quality tailored to their network's capacity, ensuring optimal usage without overburdening or underutilizing the network. This approach effectively reduces instances of buffering, leading to smoother and more enjoyable streaming experiences for users.
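A common way to pick a quality level is throughput-based selection: choose the highest rendition whose bitrate fits within a safety fraction of the measured throughput. The sketch below is a simplified illustration, not how any particular player or WebRTC stack decides; the four-rung `LADDER`, the 0.8 safety factor, and the `selectRendition` helper are hypothetical, and device (CPU) capability is not modeled.

```typescript
interface Rendition {
  label: string;
  bitrateBps: number; // bits per second
}

// Hypothetical bitrate ladder, ordered from highest to lowest quality.
const LADDER: readonly Rendition[] = [
  { label: "1080p", bitrateBps: 4_500_000 },
  { label: "720p", bitrateBps: 2_500_000 },
  { label: "480p", bitrateBps: 1_000_000 },
  { label: "240p", bitrateBps: 300_000 },
];

// Returns the best rendition that fits within safety * measured throughput,
// falling back to the lowest rung when nothing fits.
function selectRendition(
  measuredBps: number,
  ladder: readonly Rendition[] = LADDER,
  safety = 0.8,
): Rendition {
  const budget = measuredBps * safety;
  for (const r of ladder) {
    if (r.bitrateBps <= budget) return r;
  }
  return ladder[ladder.length - 1];
}
```

The safety factor leaves headroom, so small dips in throughput do not immediately push the stream into buffering.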

In addition, offering an optional setting that lets users select their streaming quality manually can also improve the experience. Some users prefer not to spend more bandwidth or data than necessary, and the highest bitrate is not the best option for everyone, since individual needs and resources vary. Letting users pick a bitrate that suits their circumstances leads to a more personalized and satisfying streaming experience.
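On the sending side of a WebRTC call, one way to honor such a manual choice is to cap the encoder's bitrate through `RTCRtpSender.getParameters()` / `setParameters()` and the `maxBitrate` field of each encoding. The `SenderLike` interface, the preset names, and their bitrate values are assumptions for this sketch; a real `RTCRtpSender` provides the two methods used here.

```typescript
// Minimal subset of RTCRtpSender used here.
interface EncodingParams {
  maxBitrate?: number; // bits per second
}
interface SendParams {
  encodings: EncodingParams[];
}
interface SenderLike {
  getParameters(): SendParams;
  setParameters(params: SendParams): Promise<void>;
}

// Hypothetical user-facing presets and their bitrate caps.
type QualityPreset = "data-saver" | "standard" | "high";
const PRESET_MAX_BITRATE: Record<QualityPreset, number> = {
  "data-saver": 300_000,
  standard: 1_000_000,
  high: 2_500_000,
};

// Writes the cap into every encoding. If `encodings` is empty (which can
// happen on some browsers before negotiation completes), nothing changes.
function capEncodings(params: SendParams, maxBitrate: number): SendParams {
  for (const encoding of params.encodings) encoding.maxBitrate = maxBitrate;
  return params;
}

// setParameters() expects the object returned by the latest getParameters() call.
async function applyQualityPreset(sender: SenderLike, preset: QualityPreset): Promise<void> {
  await sender.setParameters(capEncodings(sender.getParameters(), PRESET_MAX_BITRATE[preset]));
}
```

For example, a settings menu could call `applyQualityPreset(videoSender, "data-saver")` when the user selects the data-saver option.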

2. Quality of Service Control

We’ve already discussed QoS control, which is a network management practice that prioritizes certain types of traffic over others to ensure the performance of critical applications. QoS can prioritize traffic based on various factors, such as the type of service (e.g., video streaming vs file downloading). By prioritizing certain types of traffic, QoS helps efficiently manage the network’s available bandwidth, thus enhancing user experiences.
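Prioritizing by type of service can be pictured as a strict-priority scheduler: each traffic class gets its own FIFO queue, and the scheduler always serves the highest-priority non-empty queue first. The three classes, their ranking, and the `StrictPriorityScheduler` class are illustrative assumptions, not a specific router's or library's implementation.

```typescript
type TrafficClass = "voice" | "video" | "file";

// Lower rank = served first. This ordering is an illustrative assumption.
const CLASS_RANK: Record<TrafficClass, number> = { voice: 0, video: 1, file: 2 };

interface QueuedPacket {
  cls: TrafficClass;
  id: number;
}

class StrictPriorityScheduler {
  // One FIFO queue per class, indexed by rank.
  private queues: QueuedPacket[][] = [[], [], []];

  enqueue(p: QueuedPacket): void {
    this.queues[CLASS_RANK[p.cls]].push(p);
  }

  // Takes the oldest packet from the highest-priority non-empty queue.
  dequeue(): QueuedPacket | undefined {
    for (const q of this.queues) {
      if (q.length > 0) return q.shift();
    }
    return undefined;
  }
}
```

Strict priority can starve low-priority classes when high-priority traffic never lets up, which is why real deployments often cap the priority class or use weighted fair queuing instead.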

QoS can also prioritize traffic by user group. If your service caters to diverse user groups with different requirements, tailoring priorities to their usage patterns or preferences can improve their experience.

For example, suppose user group A mainly streams video. If video streaming traffic is prioritized, they will likely perceive the service as exceptionally smooth and efficient. Conversely, for user group B, which favors downloading or uploading files over streaming, prioritizing file-related traffic will create the perception of superior network management and performance.

Therefore, a deep analysis of your user base and adopting tailored strategies can lead to optimal user experiences. By understanding and catering to the specific needs of different user groups, your service can provide a more personalized and satisfactory experience, demonstrating an adaptive and user-centric approach to network management.
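The group-based policy above can be expressed as a table of per-group priority orderings. In this sketch, `GROUP_PROFILES`, the two groups, and the helper functions are hypothetical; in practice the orderings would come from your own analysis of each group's usage.

```typescript
type TrafficType = "video" | "file";
type UserGroup = "A" | "B";

// Hypothetical per-group priority orderings (first entry = highest priority).
const GROUP_PROFILES: Record<UserGroup, readonly TrafficType[]> = {
  A: ["video", "file"], // streaming-heavy users
  B: ["file", "video"], // transfer-heavy users
};

// Rank of a traffic type within a group's profile (0 = highest).
function priorityRank(group: UserGroup, traffic: TrafficType): number {
  return GROUP_PROFILES[group].indexOf(traffic);
}

// Orders pending items for one group; the sort is stable, so items of the
// same type keep their arrival order.
function orderForGroup<T extends { traffic: TrafficType }>(group: UserGroup, items: readonly T[]): T[] {
  return items.slice().sort((a, b) => priorityRank(group, a.traffic) - priorityRank(group, b.traffic));
}
```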

3. Traffic Shaping

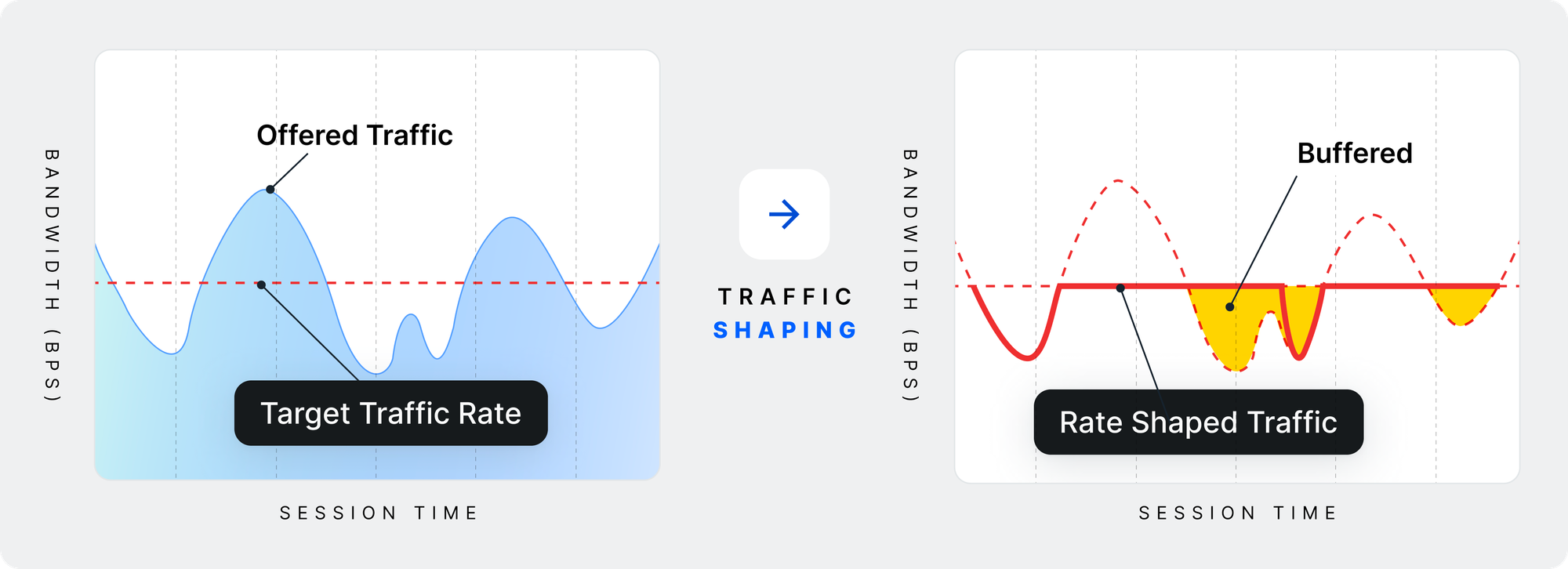

Traffic Shaping, one of the QoS techniques, is a bandwidth management method used to control network data transfer. Its primary goal is a consistent, smooth flow of traffic: it regulates the amount of data entering the network and moderates bursts to prevent congestion. It also aims to optimize or guarantee performance and improve latency, making it a crucial tool for maintaining network efficiency and stability.

This strategy balances the need for prompt data transmission against the network's overall capacity, preventing any single data stream from overwhelming it. When incoming traffic exceeds the configured rate, traffic shaping temporarily holds the excess packets (or cells) in a buffer and releases them at that rate, smoothing out bursts before they can cause congestion.
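A token bucket is a classic way to implement shaping: tokens accumulate at the configured rate up to a bucket size, a packet may leave only when enough tokens are available, and otherwise it waits in the buffer. The sketch below computes departure times rather than sending real packets; the `Packet` type and `shapeDepartures` name are assumptions, and packets are assumed to arrive in time order.

```typescript
interface Packet {
  arrivalSec: number; // when the packet reaches the shaper
  sizeBytes: number;
}

// Token-bucket shaper: excess packets are delayed (buffered), never dropped.
// Returns each packet's departure time in seconds.
function shapeDepartures(packets: readonly Packet[], rateBytesPerSec: number, bucketBytes: number): number[] {
  let tokens = bucketBytes; // start full, which permits an initial burst
  let clock = 0;            // time of the last token update / departure
  const departures: number[] = [];
  for (const p of packets) {
    if (p.sizeBytes > bucketBytes) throw new Error("packet larger than bucket can never be sent");
    // FIFO: a packet cannot leave before it arrives or before its predecessor leaves.
    const t = Math.max(p.arrivalSec, clock);
    tokens = Math.min(bucketBytes, tokens + (t - clock) * rateBytesPerSec);
    let depart = t;
    if (tokens < p.sizeBytes) {
      // Hold the packet until enough tokens have accumulated.
      depart = t + (p.sizeBytes - tokens) / rateBytesPerSec;
      tokens = p.sizeBytes;
    }
    tokens -= p.sizeBytes; // spend the tokens as the packet departs
    clock = depart;
    departures.push(depart);
  }
  return departures;
}
```

With a rate of 1000 bytes/s and a 1500-byte bucket, three 1000-byte packets arriving together leave at 0 s, 0.5 s, and 1.5 s: the burst is spread out instead of being sent (or dropped) all at once.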

Traffic Shaping is often contrasted with another QoS technique, Traffic Policing, which acts immediately by either discarding excess traffic or marking it as non-compliant. If your priority is to deliver all data safely despite congestion, Traffic Shaping is the better choice: it delays excess packets instead of discarding them, at the cost of added latency (packets are dropped only if the shaping buffer itself overflows).

On the other hand, if your network can tolerate losing some data, Traffic Policing might be more suitable. It needs no memory for buffering and adds no queuing delay, because it deals with excess traffic instantly by dropping it or marking it as non-compliant. This makes Traffic Policing simpler, though less conservative, than Traffic Shaping; which one fits depends on your requirements.
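Policing can use the same token-bucket accounting, but a packet that finds too few tokens is dropped on the spot instead of being queued. In this sketch the `Packet` type and `policePackets` name are assumptions, arrivals are assumed to be in time order, and a marking policer would tag the packet rather than return `false`.

```typescript
interface Packet {
  arrivalSec: number;
  sizeBytes: number;
}

// Token-bucket policer: returns true for packets that pass, false for dropped ones.
function policePackets(packets: readonly Packet[], rateBytesPerSec: number, bucketBytes: number): boolean[] {
  let tokens = bucketBytes; // start full
  let clock = 0;
  const verdicts: boolean[] = [];
  for (const p of packets) {
    // Refill for the time elapsed since the previous arrival, capped at the bucket size.
    tokens = Math.min(bucketBytes, tokens + (p.arrivalSec - clock) * rateBytesPerSec);
    clock = p.arrivalSec;
    if (tokens >= p.sizeBytes) {
      tokens -= p.sizeBytes; // conforming: forward immediately
      verdicts.push(true);
    } else {
      verdicts.push(false); // non-conforming: drop, no buffering
    }
  }
  return verdicts;
}
```

Given a three-packet burst followed by a later packet, only the first and the last pass; nothing is delayed and nothing is stored.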

Prioritizing Traffic Types

As discussed earlier, network traffic management is a strategic concern, not just a technical challenge: it underpins efficient communication and data transfer, and ultimately the user experience.

WebRTC applications encompass many use cases, each transmitting different types of network traffic. Understanding and prioritizing these various traffic types according to their specific requirements is key. In this section, we will delve into how distinct forms of network traffic, including voice traffic, video streaming, and data transfer, can be effectively prioritized to optimize both network performance and the overall user experience.

1. Voice Traffic: Ensuring Clarity and Continuity

Voice traffic, particularly in VoIP (Voice over Internet Protocol) services, requires real-time transmission with minimal latency. Many modern applications offer voice calling or group calls, letting users communicate in domains such as social networking, dating, and education.

The foremost priority in managing voice traffic within WebRTC services is delivering voice packets promptly and in the correct order. Careful handling here is crucial to prevent problems such as jitter (variation in packet arrival times), which can severely degrade call quality. Therefore, if your WebRTC service focuses mainly on voice calls, prioritize voice traffic in your network traffic management strategy, for example through Quality of Service (QoS), as discussed in the previous sections.

This prioritization minimizes the delay and loss that voice packets experience, which is essential for clear, continuous, and reliable voice calls in your WebRTC service.
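From inside a WebRTC app, one way to signal this priority is the `priority` and `networkPriority` fields of a sender's encoding parameters, defined in the W3C WebRTC Priority Control extension (`networkPriority` may be translated into DSCP markings on outgoing packets). Browser support for these fields varies, and networks may ignore or strip DSCP markings, so treat this as a hint rather than a guarantee. The `SenderLike` interface and the helper names are assumptions for this sketch.

```typescript
type PriorityLevel = "very-low" | "low" | "medium" | "high";

// Minimal subset of RTCRtpSender and its encoding parameters used here.
interface EncodingParams {
  priority?: PriorityLevel;        // relative share of bandwidth among senders
  networkPriority?: PriorityLevel; // may map to DSCP packet marking
}
interface SendParams {
  encodings: EncodingParams[];
}
interface SenderLike {
  track: { kind: string } | null;
  getParameters(): SendParams;
  setParameters(params: SendParams): Promise<void>;
}

// Marks every encoding as high priority.
function withHighPriority(params: SendParams): SendParams {
  for (const e of params.encodings) {
    e.priority = "high";
    e.networkPriority = "high";
  }
  return params;
}

// Raises the priority of audio senders only; video and other senders are untouched.
async function prioritizeVoice(senders: readonly SenderLike[]): Promise<void> {
  for (const sender of senders) {
    if (sender.track?.kind === "audio") {
      await sender.setParameters(withHighPriority(sender.getParameters()));
    }
  }
}
```

In a browser this might be called as `await prioritizeVoice(pc.getSenders())` once the audio track has been added.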

2. Video Streaming: Balancing Quality and Buffering

There is a critical demand for smooth video playback in industries like entertainment, conferencing, and surveillance that rely heavily on video streaming. This entails achieving a well-balanced compromise between high-quality visuals and minimal buffering. Such video streaming activities typically require more bandwidth than other network traffic types.

Building on the previous section, Adaptive Bitrate Streaming (ABR), which dynamically adjusts the quality of a video stream to match current network conditions and the user's device capabilities, is well suited to real-time communication. In scenarios where every frame matters, such as live game streaming or video calls, ABR plays a vital role in keeping video quality consistent and playback fluid.

When the network becomes congested, ABR automatically lowers the bitrate to deliver a lower quality level, so the video stream keeps playing. Watching continuously at reduced quality is a significantly better experience than having the video repeatedly stop and resume because there is not enough bandwidth.
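The congestion behavior described above is often paired with hysteresis: step down immediately when throughput drops, but step up only after several consecutive good measurements, so quality does not oscillate. The ladder values, the three-sample rule, the 0.8 safety factor, and the `AdaptiveController` class are assumptions for this sketch, not the algorithm of any specific player or browser.

```typescript
// Hypothetical bitrate ladder in bits per second, lowest to highest.
const LADDER_BPS: readonly number[] = [300_000, 1_000_000, 2_500_000, 4_500_000];

class AdaptiveController {
  private level: number;
  private goodSamples = 0;
  private readonly upSwitchSamples: number;
  private readonly safety: number;

  constructor(startLevel = 1, upSwitchSamples = 3, safety = 0.8) {
    this.level = startLevel;
    this.upSwitchSamples = upSwitchSamples;
    this.safety = safety;
  }

  // Feeds one throughput estimate (bps) and returns the bitrate to use next.
  update(measuredBps: number): number {
    const budget = measuredBps * this.safety;
    if (LADDER_BPS[this.level] > budget) {
      // Congestion: step down at once, as far as needed, so playback continues.
      while (this.level > 0 && LADDER_BPS[this.level] > budget) this.level--;
      this.goodSamples = 0;
    } else if (this.level < LADDER_BPS.length - 1 && LADDER_BPS[this.level + 1] <= budget) {
      // Headroom: step up only after several consecutive good samples.
      this.goodSamples++;
      if (this.goodSamples >= this.upSwitchSamples) {
        this.level++;
        this.goodSamples = 0;
      }
    } else {
      this.goodSamples = 0;
    }
    return LADDER_BPS[this.level];
  }
}
```

Starting at 1 Mbps, three measurements of 5 Mbps raise the stream to 2.5 Mbps only on the third sample, while a single 1 Mbps measurement drops it straight to 300 kbps.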

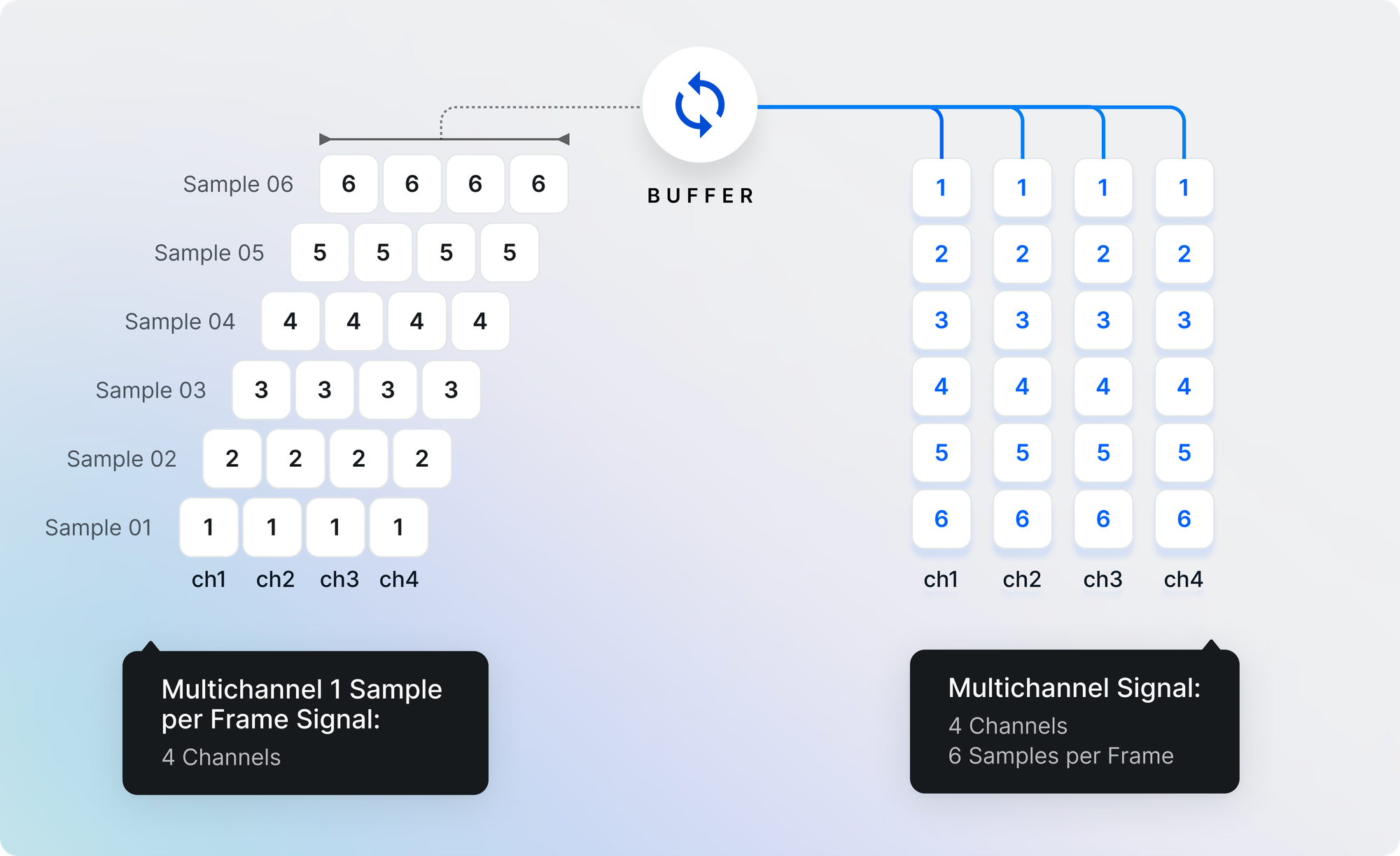

Another key strategy for video streaming is buffer management, because keeping the buffer healthy is vital. By pre-loading (buffering) a sufficient amount of video and keeping packets in the correct order, playback can continue through temporary network issues, as illustrated in the figure above.

You've likely encountered the term "buffering" in many real-world products, and buffer management is exactly that: storing incoming and outgoing data packets in memory. A buffer management system ensures that enough memory is available for these packets, which keeps video playback smooth and continuous.
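A playout buffer can be modeled with two numbers: how many seconds of media are stored, and whether playback is running. Playback starts only after a start threshold is reached (so short network gaps can be absorbed), stalls when the buffer runs dry, and a capacity limit bounds memory use. The `PlayoutBuffer` class, the 2-second threshold, and the 30-second capacity are assumptions for this sketch; in a WebRTC call, the browser's own jitter buffer plays this role automatically.

```typescript
// Hypothetical playout-buffer model, measured in seconds of media.
class PlayoutBuffer {
  private levelSec = 0;
  private playing = false;
  private readonly startThresholdSec: number;
  private readonly capacitySec: number;

  constructor(startThresholdSec = 2, capacitySec = 30) {
    this.startThresholdSec = startThresholdSec;
    this.capacitySec = capacitySec;
  }

  // Stores newly received media, bounded by capacity (memory). Returns the
  // seconds accepted; if less than offered, the caller should pause fetching.
  addMedia(sec: number): number {
    const accepted = Math.min(sec, this.capacitySec - this.levelSec);
    this.levelSec += accepted;
    // Start (or resume) only once enough media is pre-loaded.
    if (!this.playing && this.levelSec >= this.startThresholdSec) this.playing = true;
    return accepted;
  }

  // Advances playback by dtSec. Returns false while stalled or rebuffering.
  tick(dtSec: number): boolean {
    if (!this.playing) return false;
    if (this.levelSec >= dtSec) {
      this.levelSec -= dtSec;
      return true;
    }
    // Not enough media for this step: stall until refilled past the threshold.
    this.playing = false;
    return false;
  }

  get bufferedSec(): number {
    return this.levelSec;
  }
}
```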

3. Data Transfer: Efficiency and Reliability

As discussed in Module One, Real-Time Data Transmitting With WebRTC, WebRTC transfers data such as images, files, and plain text through data channels. These channels are versatile, supporting activities ranging from real-time text exchanges to uploading and downloading files for cloud backup and synchronization.

Although data transfer via these channels may not necessitate the immediacy of real-time transmissions like voice or video, it still requires both efficiency and reliability to function optimally. A crucial method for optimizing data transfer is Traffic Shaping, a key component of Quality of Service (QoS) technology. As previously discussed, this bandwidth management technique is vital in regulating network data transfer, ensuring efficient and smooth data flow across the network.
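On a WebRTC data channel, a simple form of sender-side pacing, closely related to shaping, is flow control based on `RTCDataChannel.bufferedAmount`: split a large payload into chunks, pause whenever too much data is queued, and resume on the `bufferedamountlow` event. The `ChannelLike` interface, the `sendChunked` helper, and the default sizes are assumptions for this sketch; 16 KiB is a commonly used conservative chunk size for cross-browser compatibility.

```typescript
// Minimal subset of RTCDataChannel used here.
interface ChannelLike {
  bufferedAmount: number;
  bufferedAmountLowThreshold: number;
  onbufferedamountlow: (() => void) | null;
  send(data: Uint8Array): void;
}

// Sends `payload` in chunks, pausing while more than `highWaterMark` bytes are queued.
function sendChunked(
  channel: ChannelLike,
  payload: Uint8Array,
  chunkSize = 16 * 1024,
  highWaterMark = 1024 * 1024,
): Promise<void> {
  return new Promise((resolve) => {
    let offset = 0;
    // The browser fires bufferedamountlow when the queue drains to this level.
    channel.bufferedAmountLowThreshold = highWaterMark / 2;
    const pump = () => {
      while (offset < payload.length) {
        if (channel.bufferedAmount > highWaterMark) {
          channel.onbufferedamountlow = pump; // resume once the queue drains
          return;
        }
        const end = Math.min(offset + chunkSize, payload.length);
        channel.send(payload.subarray(offset, end));
        offset = end;
      }
      channel.onbufferedamountlow = null;
      resolve();
    };
    pump();
  });
}
```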

Data transfers vary in their urgency, and network administrators need to recognize this. Appropriate priorities can be assigned by categorizing data transfers according to their criticality. This prioritization should be based on both urgency and user preferences.

For instance, if certain users prioritize text exchanges over downloading media files, it would be more effective to give precedence to handling text-related packets first. Such targeted prioritization can significantly enhance the user experience by aligning network resource allocation with user needs and preferences.

Conclusion

This lesson covered how to optimize live streaming performance by balancing bitrates against available bandwidth and by applying traffic priority strategies. Far from being optional, these techniques are essential in WebRTC if your goal is to deliver a high-quality user experience. Understanding and applying them ensures smooth, high-quality streaming and communication via WebRTC.