Moderating Video Calls

When users interact over the internet, it's important to think about how they can do so safely and be protected from harm. We want to ensure our users have safe, positive experiences on our platforms.

With Stream Video, it's possible to moderate both the audio and the video of a Stream video call to keep users safe.

Moderation Workflow

Stream’s moderation system revolves around three high-level stages that you can access either from the Stream dashboard or directly through the Python SDK:

- Policy: A policy (sometimes called a config) maps ML labels like INSULT or SCAM to actions (keep, flag, remove). Create or update it in the dashboard or with the Python SDK.

- Check: Every piece of content is analysed against a chosen policy. The check call returns a recommended_action in real time so your application can act immediately.

- Review Queue: Items that are flagged land in a review queue for human moderators. Retrieve them in code or manage them in the dashboard UI. A moderator can then decide which action to take (ban user, delete content, mark reviewed, etc.).
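To make the policy idea concrete, here is a minimal sketch in plain Python of a policy that maps labels to actions and picks the strictest one. It does not use the Stream SDK: `POLICY`, `ACTION_RANK`, and `recommended_action` are hypothetical names, and the label-to-action mapping is illustrative rather than Stream's default configuration.

```python
from typing import Dict, Iterable

# Hypothetical policy. The label names come from the text above; the chosen
# actions are illustrative and are not Stream's defaults.
POLICY: Dict[str, str] = {
    "INSULT": "flag",
    "SCAM": "remove",
}

# Rank actions from least to most severe so the strictest one wins.
ACTION_RANK: Dict[str, int] = {"keep": 0, "flag": 1, "remove": 2}


def recommended_action(labels: Iterable[str], policy: Dict[str, str] = POLICY) -> str:
    """Return the strictest action the policy assigns to any detected label."""
    action = "keep"
    for label in labels:
        # Labels missing from the policy are treated as harmless in this sketch.
        candidate = policy.get(label, "keep")
        if ACTION_RANK[candidate] > ACTION_RANK[action]:
            action = candidate
    return action
```

In a real integration the policy lives in Stream and the check call returns the action for you; the sketch only shows the shape of the decision.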

Automating Moderation Actions

The recommended_action returned by the Check call (or stored on a review-queue item) is designed for automation. In your backend you can map each action to a concrete response:

| recommended_action | Typical response |
|---|---|
| keep | Do nothing |
| flag | Leave in review queue or soft-mute the sender |
| remove / block | Delete message, mute/ban or kick user from call |

The actions above can be triggered by the Python SDK so you can integrate moderation into any event-driven workflow. This means you can programmatically mute live audio, display UI warnings, or trigger analytics - all without waiting for human review.
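One way to wire this mapping into a backend is a small dispatch table. The sketch below is plain Python with hypothetical handler names (`on_keep`, `on_flag`, `on_remove`, `apply_action`); the handlers only record what they would do, and in a real application they would call the corresponding SDK or API operations instead. Routing unknown actions to human review is a design assumption, not documented Stream behaviour.

```python
from typing import Callable, Dict, List

Handler = Callable[[str, List[str]], None]


def on_keep(user_id: str, events: List[str]) -> None:
    """Content is fine: do nothing."""


def on_flag(user_id: str, events: List[str]) -> None:
    """Placeholder: leave the item in the review queue for a human."""
    events.append(f"queued_for_review:{user_id}")


def on_remove(user_id: str, events: List[str]) -> None:
    """Placeholder: delete the content and remove the user from the call."""
    events.append(f"removed_from_call:{user_id}")


# "remove" and "block" share a handler, mirroring the table above.
HANDLERS: Dict[str, Handler] = {
    "keep": on_keep,
    "flag": on_flag,
    "remove": on_remove,
    "block": on_remove,
}


def apply_action(action: str, user_id: str, events: List[str]) -> None:
    """Run the handler for an action; unknown actions go to human review."""
    HANDLERS.get(action, on_flag)(user_id, events)
```

Keeping the mapping in one dictionary makes it easy to change a response (for example, soft-muting on `flag`) without touching the code that receives verdicts.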

For the full list of endpoints and payload shapes, see the Moderation API documentation.

Severity Levels

You can configure the API to respond differently depending on the severity level of an infraction. For example, less severe infractions can flag the content, while more severe cases can automatically block the user.
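As a rough illustration, a severity-aware policy can be pictured as a nested lookup from label and severity to action. The severity names (`low`, `medium`, `high`), the table contents, and the `action_for` helper are all assumptions made for this sketch; check the Moderation API documentation for the severity levels Stream actually exposes.

```python
from typing import Dict

# Hypothetical severity table: label -> severity -> action.
SEVERITY_POLICY: Dict[str, Dict[str, str]] = {
    "INSULT": {"low": "keep", "medium": "flag", "high": "remove"},
    "SCAM": {"low": "flag", "medium": "remove", "high": "remove"},
}


def action_for(label: str, severity: str) -> str:
    """Look up the action for a label at a given severity.

    Unknown labels or severities fall back to "flag" so that a human
    moderator sees them (a design choice made for this sketch).
    """
    return SEVERITY_POLICY.get(label, {}).get(severity, "flag")
```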

Moderating Audio

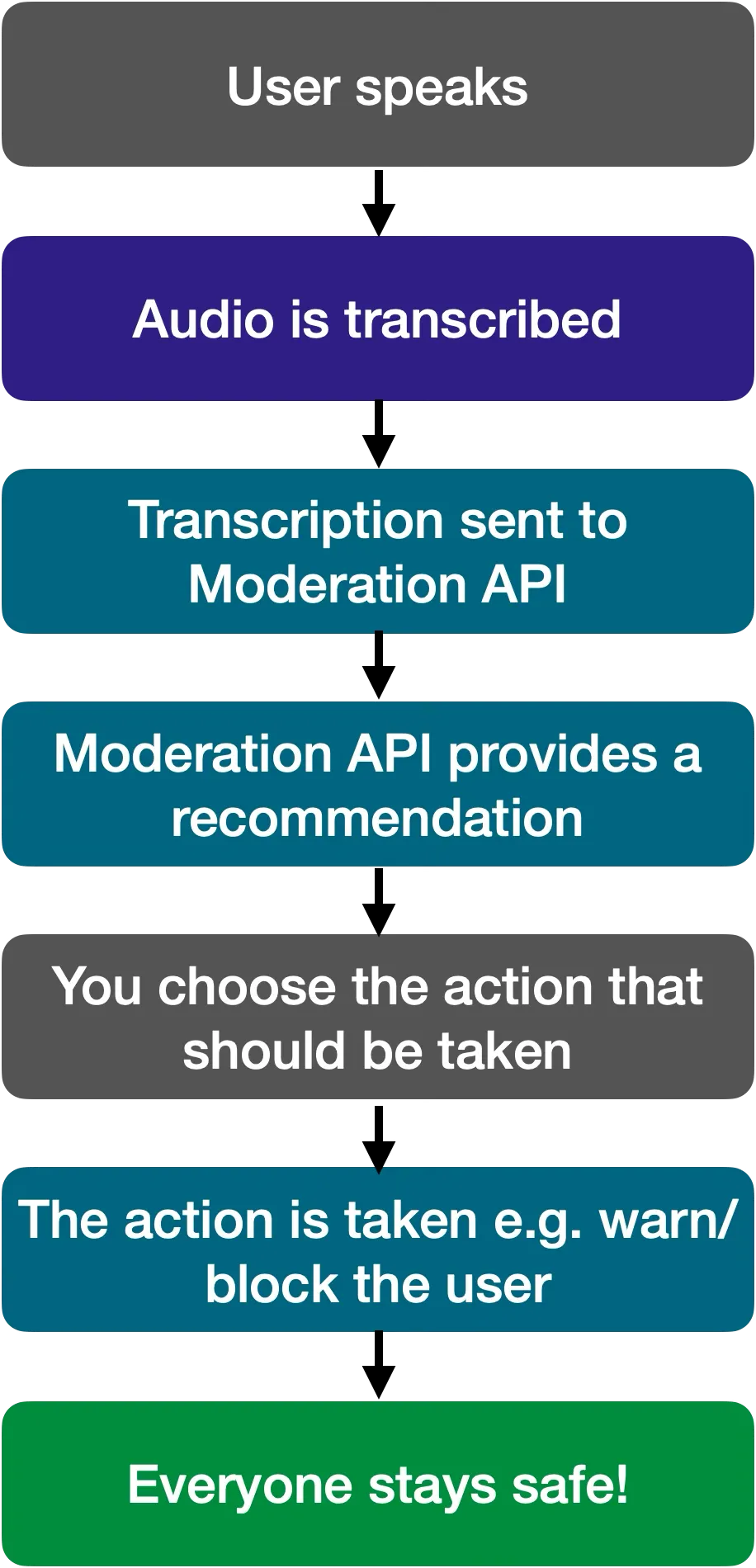

Audio moderation follows this loop:

- Transcribe speech: Use a Speech-to-Text (STT) plugin (e.g. Deepgram, Wizper) to turn raw PCM audio into text in real-time.

- Send transcript to Stream Moderation: Pass each utterance to the Moderation API, which provides a verdict.

- Act on the verdict: Flag, mute, kick or ban the speaker automatically or alert a human moderator.

Because everything happens live, users can be removed from the conversation within seconds of saying something harmful.
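The loop above can be sketched as a small function that takes transcribed utterances, asks a checker for a verdict, and acts on anything that is not `keep`. Everything here is an assumption for illustration: `moderate_utterances`, `keyword_check`, and the callback signatures are hypothetical, the STT step is represented by already-transcribed text, and `keyword_check` is a toy stand-in for a call to the Moderation API.

```python
from typing import Callable, Iterable, List, Tuple

Utterance = Tuple[str, str]  # (speaker_id, transcribed text)


def moderate_utterances(
    utterances: Iterable[Utterance],
    check: Callable[[str], str],
    act: Callable[[str, str], None],
) -> List[Tuple[str, str]]:
    """Check each utterance and act on every verdict other than "keep".

    `check` stands in for the moderation call and returns an action string;
    `act` stands in for muting, kicking, banning, or alerting a moderator.
    Returns the (speaker_id, verdict) pairs that were acted on.
    """
    acted: List[Tuple[str, str]] = []
    for speaker_id, text in utterances:
        if not text.strip():
            continue  # skip empty transcripts such as silence
        verdict = check(text)
        if verdict != "keep":
            act(speaker_id, verdict)
            acted.append((speaker_id, verdict))
    return acted


def keyword_check(text: str) -> str:
    """Toy checker used only to exercise the loop; not a real classifier."""
    return "remove" if "scam" in text.lower() else "keep"
```

Passing `check` and `act` as callables keeps the loop independent of any particular STT plugin or SDK call, so the real moderation check can be swapped in without changing the control flow.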

Moderation in a Stream Video Call

You can interact with Stream's Moderation API endpoints via the Stream Dashboard or via the Stream Python SDK. If you're integrating this into your backend app, it's better to use the SDK to call the API so more of the workflow can be automated. You will find more information on the Moderation API endpoints on this reference page.

Get Started with Audio Moderation

This how-to guide will show you how to use audio moderation in a Stream video call.

You can also adapt the working example on GitHub, on which the guide above is based, for your own application.

Moderation Documentation

See our moderation documentation for the full list of features.