By moderating content and enforcing appropriate consequences for users who post text, images, or video that violate community guidelines, you can keep your users safe, increase trust, and protect your brand's reputation.

Moderating user-generated content (UGC) can be complex because you can combine different tools, methods, and hires into a strategy that fits your platform. Read on to learn how to identify and handle the content types that need moderation, the platforms and technology that can help, best practices, and more.

Defining Sensitive Content

The scope of sensitive content needing moderation is broad because the intent behind it varies, and so do the reasons and methods for removing it. For example, one user might accidentally publish their home address or other personally identifiable information (PII) and ask you to remove it. Another user might dox someone, which would require your team not only to remove the victim's personal information but also to penalize the user who posted it.

Hate speech, graphic videos or images, nudity, spam, and scam content interrupt your user experience, dissolve trust within your community, and pull focus away from the purpose of your business. You can decide which UGC requires moderation based on your audience and its maturity level.

Why is Content Moderation Important?

User engagement drives the performance metrics that mean the most to your business. Your in-app experience must create a safe and enjoyable environment that users will want to return to again and again. If they encounter trolls, bullies, explicit content, spam, or disturbing media, they won't want to stick around and create a community with other users.

Having a content moderation plan and implementation team is vital so your brand can preserve what makes it unique and attractive to downloaders at scale. Establishing guidelines and courses of action to take against those who violate the rules allows your business to protect itself from legal issues, a high user churn rate, and poor public perception.

The 3 Types of Content To Moderate

While the first medium that comes to mind might be written messages, a large share of UGC today consists of images and videos, which require a different moderation approach. Let's explore the three types of sensitive content and their unique moderation solutions.

1. Text

Depending on your platform's media capabilities, written text might be the only way for users to communicate and express themselves. The variety of places text appears (forums, posts, comments, private messages, group channels, and so on) might seem overwhelming. Fortunately, it's a medium that AI and machine learning-based chat moderation tools handle well at scale. Algorithms can scan text of different lengths, languages, and styles for unwanted content according to your specifications and word or topic blocklists. However, some harmful text might not contain any blocked words while still carrying a sentiment that damages your community or reputation.
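As a rough illustration of the blocklist approach and its blind spot, here is a minimal Python sketch. The `BLOCKLIST` entries and the `find_blocked_terms` helper are hypothetical names made up for this example, not part of any tool mentioned in this article.

```python
import re
from typing import List, Set

# Hypothetical blocklist; a real one would come from your moderation policy.
BLOCKLIST: Set[str] = {"spamword", "scamlink"}


def find_blocked_terms(text: str, blocklist: Set[str] = BLOCKLIST) -> List[str]:
    """Return the blocklisted terms that appear as whole words in the text."""
    # Lowercase first so matching is case-insensitive, then split into words.
    words = re.findall(r"[a-z0-9']+", text.lower())
    return sorted(set(words) & blocklist)


# A message containing a blocked term is caught.
print(find_blocked_terms("Click this SCAMLINK now!"))
# A hostile message with no blocked words passes the filter untouched.
print(find_blocked_terms("Nobody here likes you."))
```

The second call shows why word lists alone are not enough: the message is hurtful, yet nothing in it matches the list.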

2. Images

In theory, identifying inappropriate images seems simple, but many nuanced factors are at play. For example, detecting nudity or explicit images in UGC might flag famous, innocuous artwork. Or your image detection might miss dress or subject matter that one region of the world considers inappropriate but the U.S. does not. To moderate images effectively on your platform, pair AI models with human moderators and user reporting for the most complete and contextual coverage.

3. Video

Video is the most difficult of the three content types to moderate because it requires more time to review and assess. Text and images usually need only a glance and the occasional additional context. A video, however, could be hours long and flagged for the content of only a few frames, so your moderation team spends more time on a single case. Video can also require text-based and audio moderation for inappropriate subtitles or recordings. While challenging, you must vigilantly guard your platform against harmful multimedia UGC. Your business will lose user trust and credibility if you tolerate community guideline violations.
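One way to cut review time on long videos is to point moderators at the flagged moments instead of the whole file. The sketch below assumes you already have one score per frame from some image classifier (the scores, the `0.8` threshold, and the `flagged_segments` name are all hypothetical) and groups consecutive high-scoring frames into time ranges.

```python
from typing import List, Tuple


def flagged_segments(
    scores: List[float], fps: float, threshold: float = 0.8
) -> List[Tuple[float, float]]:
    """Group consecutive frames scoring >= threshold into (start_s, end_s) ranges."""
    segments: List[Tuple[float, float]] = []
    start = None  # index of the first frame in the current flagged run
    for i, score in enumerate(scores):
        if score >= threshold and start is None:
            start = i  # a flagged run begins
        elif score < threshold and start is not None:
            segments.append((start / fps, i / fps))  # the run ended before frame i
            start = None
    if start is not None:
        # The video ended while a run was still open.
        segments.append((start / fps, len(scores) / fps))
    return segments


# Five frames sampled at one frame per second.
print(flagged_segments([0.1, 0.9, 0.95, 0.2, 0.85], fps=1.0))
# → [(1.0, 3.0), (4.0, 5.0)]
```

A reviewer can then jump straight to those ranges rather than watching the full video.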

4 Ways to Moderate Unsafe Content

1. Pre-Moderation

This method holds every user submission in a queue for moderation before it is posted. While effective, pre-moderation is time- and labor-intensive. It is best suited to platforms with a vulnerable audience that needs a high level of protection, such as those frequented by minors. Other methods are a better fit for some app types, such as online communities that prioritize barrier-free engagement.

2. Post-Moderation

Post-moderation might be a fit if your audience is more mature and your platform prioritizes user engagement. It lets users publish content immediately while simultaneously adding it to a queue for moderation. This strategy is highly manual and limits scale significantly, because a team member must still review every comment, post, and thread.
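The practical difference between pre-moderation and post-moderation is whether content is visible while it waits in the queue. The following sketch models that with a single flag; the `Post` and `ModerationQueue` classes are hypothetical and exist only for illustration.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque


@dataclass
class Post:
    author: str
    body: str
    visible: bool = False


@dataclass
class ModerationQueue:
    # True models pre-moderation, False models post-moderation.
    hold_until_approved: bool
    pending: Deque[Post] = field(default_factory=deque)

    def submit(self, post: Post) -> Post:
        """Queue the post; it is visible right away only under post-moderation."""
        post.visible = not self.hold_until_approved
        self.pending.append(post)
        return post

    def review_next(self, approve: bool) -> Post:
        """Apply a moderator's decision to the oldest pending post."""
        post = self.pending.popleft()
        post.visible = approve
        return post


pre = ModerationQueue(hold_until_approved=True)
held = pre.submit(Post("ana", "hello"))
print(held.visible)  # False until a moderator approves it

post_mod = ModerationQueue(hold_until_approved=False)
live = post_mod.submit(Post("ben", "hi all"))
print(live.visible)  # True immediately, but still queued for review
```

In both modes every submission lands in `pending`, which is why both methods stay labor-intensive.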

3. Reactive Moderation

This method combines user reporting efforts with moderation team review and assessment. In a typical reactive moderation flow, a user posts content, and another community member flags it for review if they find it offensive or in breach of community guidelines. The primary benefit of this strategy is that your moderators save time by only reviewing content that users have designated as needing moderation instead of having to assess every piece of UGC. The risk of implementing a reactive moderation strategy is that users might fail to flag harmful content and let it remain on your platform, damaging your reputation and eroding users' trust.
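A reactive flow can be sketched as a small report tracker that moves content into a review queue once enough distinct users have flagged it. The `ReportTracker` class and its `report_threshold` parameter are assumptions for this example; the default of one report matches the flow described above, and a higher threshold is just one possible variation.

```python
from collections import defaultdict
from typing import Dict, List, Set


class ReportTracker:
    def __init__(self, report_threshold: int = 1) -> None:
        self.report_threshold = report_threshold
        # Maps each content ID to the set of users who reported it.
        self.reporters: Dict[str, Set[str]] = defaultdict(set)
        self.review_queue: List[str] = []

    def report(self, content_id: str, reporter_id: str) -> bool:
        """Record a report; return True if it pushed the content into review."""
        # Using a set means repeat reports from the same user count once.
        self.reporters[content_id].add(reporter_id)
        ready = len(self.reporters[content_id]) >= self.report_threshold
        if ready and content_id not in self.review_queue:
            self.review_queue.append(content_id)
            return True
        return False


tracker = ReportTracker(report_threshold=2)
tracker.report("post-1", "user-a")
tracker.report("post-1", "user-a")  # duplicate, ignored
tracker.report("post-1", "user-b")  # second distinct reporter
print(tracker.review_queue)
# → ['post-1']
```

Content that nobody reports never reaches `review_queue`, which is exactly the risk this method carries.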

4. Automated Moderation

Automated moderation combines various ML and AI tools to filter, flag, and reject UGC of all types. These solutions range from blocklists and IP address filters to algorithms trained to detect inappropriate images, audio, and video. Automated moderation can adapt to fit any type of use case and streamline your trust and safety process without putting the responsibility of reporting harmful UGC on platform users.
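To make the filter, flag, and reject split concrete, the sketch below routes content based on a harm score between 0 and 1. It assumes the score comes from some upstream model; the `route` function and the `0.5` and `0.9` cutoffs are hypothetical values chosen for illustration, not settings from any specific product.

```python
from enum import Enum


class Action(Enum):
    APPROVE = "approve"  # publish without human involvement
    FLAG = "flag"        # send to a human moderator
    REJECT = "reject"    # block automatically


def route(score: float, flag_at: float = 0.5, reject_at: float = 0.9) -> Action:
    """Map a harm score in [0, 1] to a moderation action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= reject_at:
        return Action.REJECT
    if score >= flag_at:
        return Action.FLAG
    return Action.APPROVE


for score in (0.1, 0.6, 0.95):
    print(score, route(score).value)
```

Keeping a middle band that goes to human review is a design choice: it lets the automated system handle clear cases while people decide the ambiguous ones.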

Top 5 Content Moderation Tools

1. Hive

Hive offers a full suite of audio, visual, text, and AI-generated content detection and moderation services. Its moderation solutions detect bullying, sexual content, hate speech, violence, and spam, and allow moderators to set filters for profanity, noise, and PII usage. Hive integrates with other APIs and supports moderation in seven languages: English, Spanish, Arabic, Hindi, German, Portuguese, and French. Hive's moderation dashboard makes it easy for trust and safety teams to manage multiple solutions across their platform holistically.

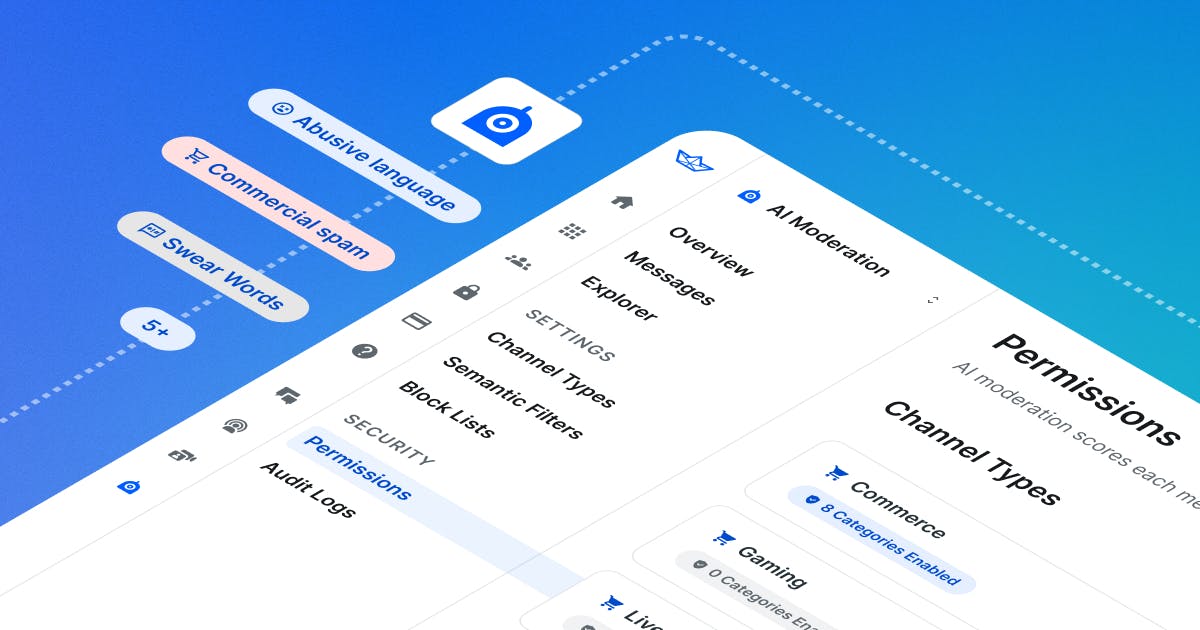

2. Stream Auto Moderation

Stream's Auto Moderation API helps moderation teams find, monitor, and resolve harmful content with minimal effort, maximum coverage, and AI-driven message flagging. It adapts to your community's context and expectations with powerful machine-learning models and configurable policies. Auto Moderation comes with moderator-centric tooling and dashboards and standard content moderation features, plus sentiment analysis and behavioral nudge moderation functionalities that make flagging, reviewing, and censoring content easy for all use cases, from live streaming to gaming.

3. WebPurify

WebPurify is on a mission to make the Internet a safer place for children through moderation of text, video, metaverse, and image content. They offer a unique hybrid moderation solution, combining sophisticated AI with a team of outsourced human moderators to support your business. WebPurify's Automated Intelligent Moderation (AIM) API service offers 24/7/365 protection from the risks associated with having user-generated content on brand channels by detecting and removing offensive content and unwanted images in real time. They cover 15 languages and provide a one-click CMS plug-in, custom block and allow lists, and email, phone, and URL filters.

4. Pattr.io

Pattr.io is a conversational AI platform that empowers brands to have engaging, effective, and safe conversations with their customers at scale. The company provides AI-powered content moderation solutions, including comment moderation, image moderation, outsourced human moderation, and customizable filters brands can leverage when conversing and serving their users online. Pattr supports users with 24/7 online support and easily integrates with Facebook, Twitter, and Instagram so that brands can engage safely within their services and socially with prospects and customers.

5. Sightengine

Sightengine specializes in real-time video and image moderation and anonymization to keep users safe and protected. The company's AI-powered tool detects more than just nudity, gore, and harmful content. It groups those subjects into a "Standard" category but also detects content that isn't optimal for UX, such as photos of people wearing sunglasses, low-quality images, and duplicate content. It is a fast, scalable, and easy-to-integrate solution that prides itself on moderation accuracy and its high security-compliance standards.

Best Practices for Moderating User-Generated Content

Establish & Socialize Community Guidelines

Define the rules of posting UGC on your site to inform users of the environment you want to create for your community. Think about your brand's personality and audience when drafting your rules; the more mature your user base is, the less stringent your guidelines might need to be. Post your content rules somewhere easily accessible within your platform and require new users to read through and agree to follow the guidelines before engaging with others. This will ensure that if a user violates the rules, you have a record of them consenting to follow them and an argument for removing them from your community.

Set Violation Protocols

Defining fair and rational consequences of content violation is a vital step when creating a safe, positive social community. Your protocols on what actions to take when a piece of content requires moderation must be well-defined for transparency's sake among your users and your moderation team. Violation protocols should define moderation methods, how to review content by medium, how to assess user-reported content, when to ban a user, a guide to initiating legal recourse, and what moderation tools are at your team's disposal. Publishing your business's violation protocols is as important as the community guidelines.
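One simple way to encode part of a violation protocol is an escalation ladder that maps a user's number of violations to a consequence. The sketch below is purely illustrative: the `CONSEQUENCES` ladder, its three steps, and the `StrikeLedger` class are hypothetical, and your own protocol may weigh severity, appeals, or legal steps that this example leaves out.

```python
from typing import Dict

# Hypothetical escalation ladder; the actions and their order are illustrative only.
CONSEQUENCES = ["warning", "mute", "ban"]


class StrikeLedger:
    def __init__(self) -> None:
        # Maps each user ID to their number of recorded violations.
        self.strikes: Dict[str, int] = {}

    def record_violation(self, user_id: str) -> str:
        """Add one strike and return the consequence for the new strike count."""
        count = self.strikes.get(user_id, 0) + 1
        self.strikes[user_id] = count
        # Cap at the last rung so repeat offenders stay at the final consequence.
        return CONSEQUENCES[min(count, len(CONSEQUENCES)) - 1]


ledger = StrikeLedger()
for _ in range(4):
    print(ledger.record_violation("user-a"))
```

Writing the ladder down as data, rather than leaving it to each moderator's judgment, is one way to keep enforcement consistent and easy to publish.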

Leverage Moderation Platforms

Automating certain aspects of your moderation strategy can lighten the load for your moderation team and user base while ensuring your community maintains a high level of safety. Most moderation solutions can be easily integrated with your service and won't interrupt performance or user experience. Moderation tools come with varying levels of sophistication and content-medium specialties and fill the gaps in your moderation team's skill set, like assisting in language translation. You must identify the moderation functions you'd like a tool to solve before you can narrow the field to your top choices.

Identify a Community Manager or Moderator

AI-powered moderation tools are integral to creating an efficient strategy but cannot replace human judgment. Assigning a community manager or moderator to your internal team or within your loyal user base can bring context and nuance to moderation. These people can pick up on the sarcasm and context clues that automated moderation tools cannot, creating a less strict and more natural approach to censorship. Community managers who regularly engage with your customers and content offer a unique point of view. They can recognize an appropriate tone of content to match your brand's personality better than anyone.

Understanding AI-Powered Content Moderation

ML algorithms reduce the burden of content moderation on trust and safety teams. They are trained on large datasets of previously flagged content to learn the signs of harmful, inappropriate, or off-topic UGC. Learning these signals enables automated moderation tools to flag, block, mute, ban, and censor multimedia content from users who violate community rules.

Ready to Build Your Trust & Safety Team?

Regardless of your content moderation method, you'll need to consider building a trust and safety team to support your strategy and serve as a resource to your brand's community. The members of this moderation team should be familiar with your community guidelines, understand how to enforce violation consequences correctly, get to know your audience, and feel comfortable managing any automated moderation tools you choose to leverage.