{

"actor": "jimmy",

"verb": "post",

"object": {

"title": "this is the title",

"body": "this is the body..."

},

"image": "https://example.com/post_image.png"

}Feeds v2 Moderation

These docs are for Feeds v2. Quickstart documentation for Feeds v3 will come shortly.

Introduction

Moderation in Feeds is made possible through the work on the new moderation API. Moderation capabilities have been added to the Feeds API with the following features:

- Automatic moderation of new activities

- Automatic moderation of activities on update

- Automatic moderation of new reactions

- Automatic moderation of reactions on update

- Flagging activities

- Flagging reactions

- Flagging users

- Prevent banned users from reading a feed

- Prevent banned users from creating activities/reactions

Prerequisites

- Since moderation in Feeds is behind a feature flag, make sure this is enabled for your app. Get in touch with Stream Support Team for this purpose.

Create Moderation Policy

A moderation policy is a set of rules that define how content should be moderated in your application. We have a detailed guide on What Is Moderation Policy.

Also please follow the following guides on creating moderation policy for feeds:

- Step 1: Creating Moderation Policy For Feeds

- Step 2: Setup Policy for Text Moderation

- Step 3: Setup Policy for Image Moderation

- Step 4: Test Moderation Policy

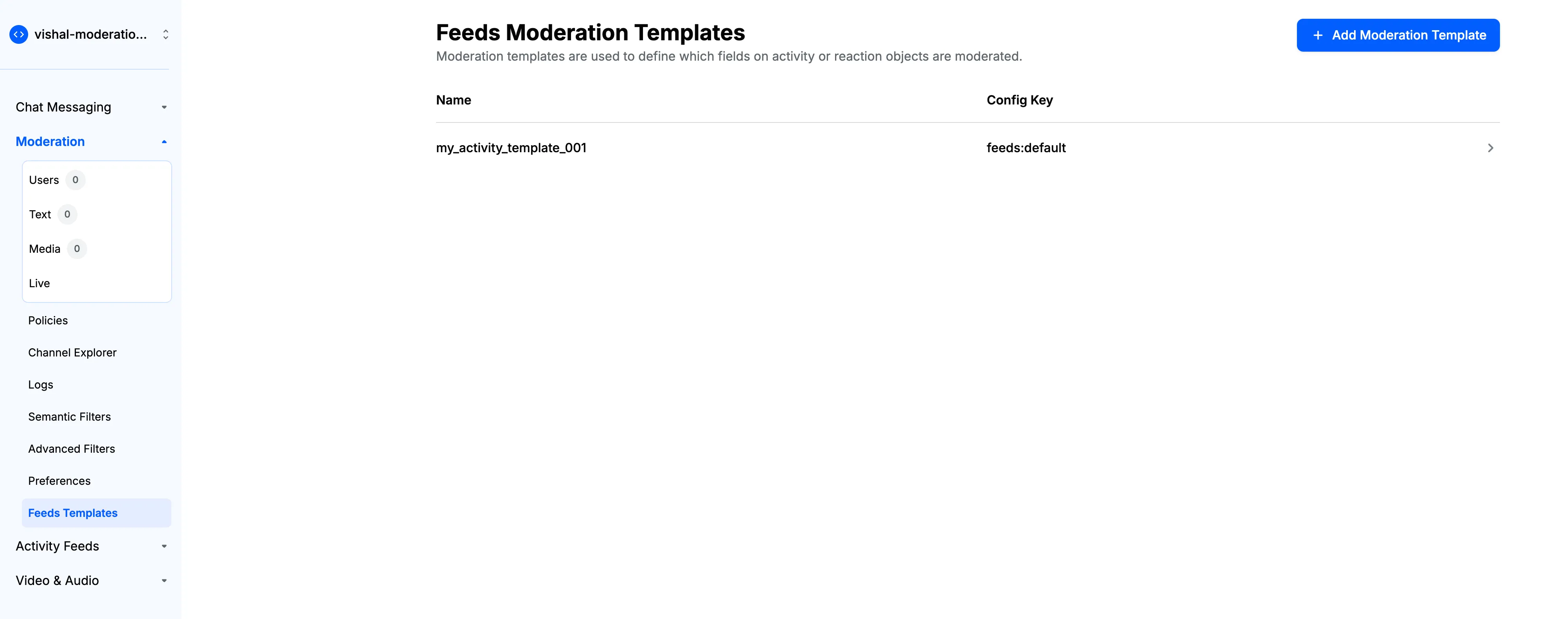

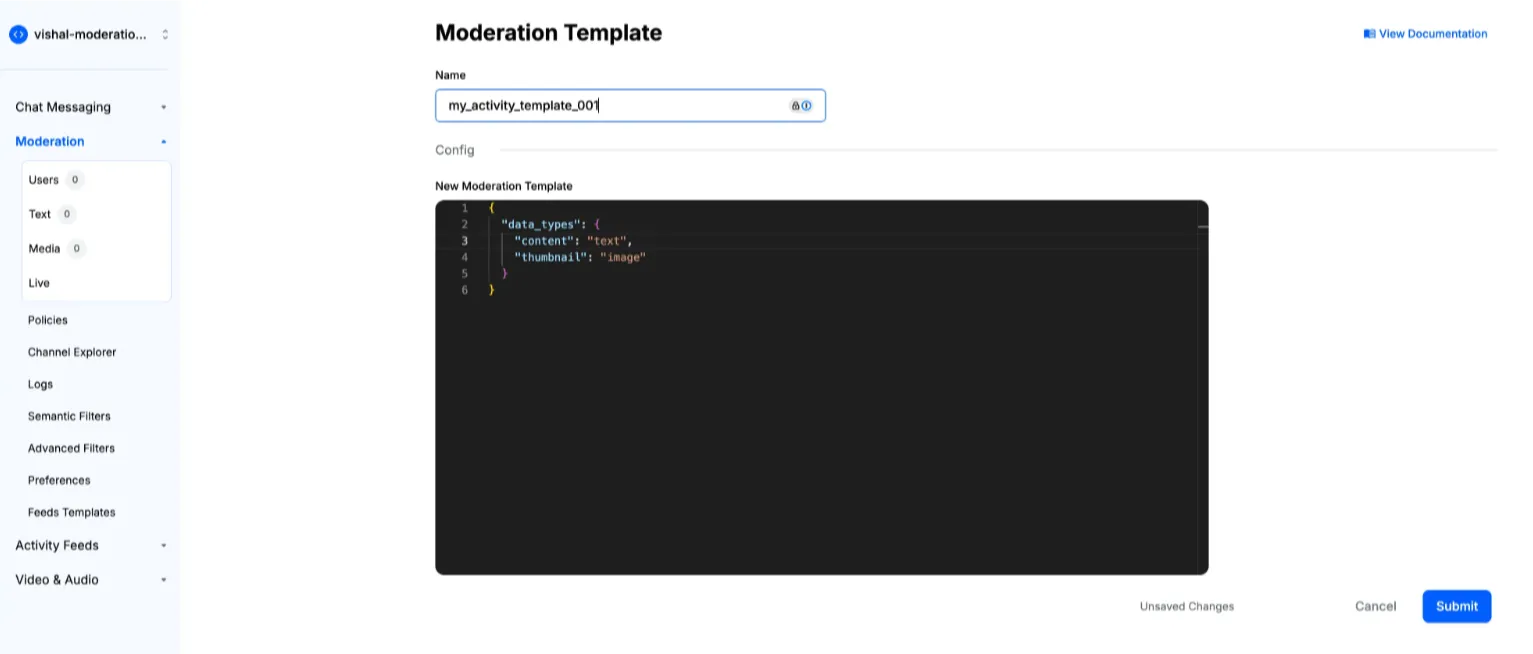

Setup Moderation Templates

Moderation templates are Feeds-specific and are needed because activities (or reactions) are generic in structure. The template is used to tell the API which part(s) of the activity should be subject to moderation and which type they are (text or image). As an example, your activities might look like this:

and you want to moderate the fields object.title, object.body and image you would create a moderation template like this, lets name it mod_tmpl_1

```json
{
  "data_types": {
    "object.title": "text",
    "object.body": "text",
    "image": "image"
  }
}
```

If you want to moderate reactions, you need to create a separate template for them. For example, your reaction might look like this:

```json
{
  "text": "This is the reaction text",
  "attachments": ["https://example.com/post_image.png"]
}
```

To moderate the fields text and attachments, you would create a moderation template like the following; let's name it moderation_template_reaction:

```json
{
  "data_types": {
    "text": "text",
    "attachments": "image"
  }
}
```

If your activities (or reactions) vary in structure, you can create multiple templates, one for each structure.
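To make the role of data_types concrete, here is a minimal JavaScript sketch of how a template's dotted paths could select content from an activity or reaction and group it by type. It only illustrates the idea and is not Stream's implementation; getPath and extractModerationContent are hypothetical helpers, and rejecting any type other than "text" or "image" is an assumption based on the two types described above.

```javascript
// Hypothetical helper: resolves a dotted path such as "object.title"
// against a plain object, returning undefined if any segment is missing.
function getPath(item, path) {
  return path
    .split(".")
    .reduce((value, key) => (value == null ? undefined : value[key]), item);
}

// Groups the values a template selects by content type. Only "text" and
// "image" are accepted (an assumption). Array values, such as an
// attachments list, contribute every element.
function extractModerationContent(item, template) {
  const content = { text: [], image: [] };
  for (const [path, type] of Object.entries(template.data_types)) {
    if (!Object.prototype.hasOwnProperty.call(content, type)) {
      throw new Error(`Unsupported data type "${type}" for "${path}"`);
    }
    const value = getPath(item, path);
    if (value == null) continue; // field not present on this item
    content[type].push(...(Array.isArray(value) ? value : [value]));
  }
  return content;
}

// The example activity and the mod_tmpl_1 template from this section.
const activity = {
  actor: "jimmy",
  verb: "post",
  object: { title: "this is the title", body: "this is the body..." },
  image: "https://example.com/post_image.png",
};
const modTmpl1 = {
  data_types: { "object.title": "text", "object.body": "text", image: "image" },
};

console.log(extractModerationContent(activity, modTmpl1));
```

Running the same function with the reaction example and moderation_template_reaction would collect the reaction text as text and each attachment URL as an image.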

For moderation in Feeds, the user is determined by the actor field on activities and the user_id field on reactions. The Feeds moderation integration automatically creates matching users on the moderation side so that it can track bans, blocks, and similar actions. These moderation users should not be confused with Feeds users, which are a separate entity.

Client-Side Feeds Integration

Auto moderation of activities

To enable moderation of activities created from the client, include the required_moderation_template field (the moderation template you created for activities) in your JWT payload. Any activity created from the client with this token is then automatically moderated according to your policy. Use this token only for activities, not for reactions.

Generate JWT Token

- Navigate to your Stream Dashboard and copy the Secret from the Feeds Overview section

- Go to https://jwt.io to generate your token

- Use this payload template (customize the fields as needed):

```json
{
  "resource": "feed",
  "action": "write",
  "feed_id": "feed123",
  "user_id": "user123",
  "required_moderation_template": "mod_tmpl_1"
}
```

Important: Replace "mod_tmpl_1" with the name of your specific moderation template.

- Enter your secret in the "Verify Signature" section at jwt.io

- Use the generated JWT with your client-side SDK; activities created with it are moderated automatically.
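Instead of pasting the secret into jwt.io, you can generate the same token on your server. The following is a minimal sketch using only Node's built-in crypto module, assuming a standard HS256 JWT signed with the Feeds secret (HS256 is jwt.io's default algorithm). signFeedsToken is a hypothetical helper, and STREAM_API_SECRET is an assumed environment variable holding the secret from the dashboard.

```javascript
const { createHmac } = require("node:crypto");

// Encodes a JSON value as one base64url segment of a JWT.
function base64UrlJson(value) {
  return Buffer.from(JSON.stringify(value)).toString("base64url");
}

// Hypothetical helper: signs a payload as an HS256 JWT with the Feeds secret.
function signFeedsToken(payload, secret) {
  const header = { alg: "HS256", typ: "JWT" };
  const signingInput = `${base64UrlJson(header)}.${base64UrlJson(payload)}`;
  const signature = createHmac("sha256", secret)
    .update(signingInput)
    .digest("base64url");
  return `${signingInput}.${signature}`;
}

// Same payload as in the steps above; replace the values with your own.
const secret = process.env.STREAM_API_SECRET || "your_api_secret";
const activityToken = signFeedsToken(
  {
    resource: "feed",
    action: "write",
    feed_id: "feed123",
    user_id: "user123",
    required_moderation_template: "mod_tmpl_1",
  },
  secret,
);

console.log(activityToken);
```

A maintained JWT library (for example the sign function of the jsonwebtoken package) produces an equivalent token. Either way, keep the secret on your server and hand only the resulting token to the client.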

Auto moderation of reactions

Important: Reactions need a separate JWT for moderation to work. Don't confuse it with the token you generated above for auto moderation of activities.

To enable moderation of reactions created from the client, include the required_moderation_template field (the moderation template you created for reactions) in your JWT payload. Any reaction created from the client with this token is then automatically moderated according to your policy. Use this token only for reactions, not for activities.

Generate JWT Token

- Navigate to your Stream Dashboard and copy the Secret from the Feeds Overview section

- Go to https://jwt.io to generate your token

- Use this payload template (customize the fields as needed):

```json
{
  "resource": "reactions",
  "action": "write",
  "feed_id": "feed123",
  "user_id": "user123",
  "required_moderation_template": "moderation_template_reaction"
}
```

Important: Replace "moderation_template_reaction" with the name of your specific moderation template.

- Enter your secret in the "Verify Signature" section at jwt.io

- Use the generated JWT with your client-side SDK; reactions created with it are moderated automatically.
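Because the activity token and the reaction token differ only in their payload, it is easy to hand the wrong one to the SDK. Below is a minimal JavaScript sketch that decodes a token's payload (without verifying the signature) and checks which resource and moderation template it carries. decodeJwtPayload and assertTokenFor are hypothetical helpers; the field names come from the payload templates above.

```javascript
// Decodes the payload segment of a JWT without verifying the signature.
// Fine for debugging which token is which; never use it for authorization.
function decodeJwtPayload(token) {
  const parts = token.split(".");
  if (parts.length !== 3) {
    throw new Error("Expected a JWT with three dot-separated segments");
  }
  return JSON.parse(Buffer.from(parts[1], "base64url").toString("utf8"));
}

// Hypothetical check: throws unless the token was issued for the expected
// resource and moderation template; returns the decoded payload otherwise.
function assertTokenFor(token, expectedResource, expectedTemplate) {
  const payload = decodeJwtPayload(token);
  const template = payload.required_moderation_template;
  if (payload.resource !== expectedResource || template !== expectedTemplate) {
    throw new Error(
      `Token is for "${payload.resource}" with template "${template}", ` +
        `expected "${expectedResource}" with "${expectedTemplate}"`,
    );
  }
  return payload;
}

// Example, before handing a token to the SDK for reactions:
// assertTokenFor(reactionToken, "reactions", "moderation_template_reaction");
```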

Server-Side Feeds Integration

Auto Moderation of Activities

Moderation is not supported for batch activity inserts: the Feeds API doesn't support partial responses, and the moderation API doesn't yet have a batch endpoint for this.
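Since batch inserts skip moderation, one possible workaround is to send activities that need moderation through single-activity requests and batch only the rest. The sketch below assumes a getstream JavaScript feed object (for example client.feed("user", "jimmy")) with async addActivity and addActivities methods; any object with those two methods works. addActivitiesWithModeration is a hypothetical helper, and the moderation_template field it checks is explained later in this section.

```javascript
// Hypothetical helper: moderation is not applied to batch inserts, so
// activities carrying a moderation_template are added one at a time while
// the rest still go through a single batch request.
async function addActivitiesWithModeration(feed, activities) {
  const moderated = activities.filter((activity) => activity.moderation_template);
  const unmoderated = activities.filter((activity) => !activity.moderation_template);

  // One batch request for activities that do not need moderation.
  const batchResponse =
    unmoderated.length > 0 ? await feed.addActivities(unmoderated) : null;

  // One request per moderated activity, so each response carries its own
  // moderation result. Sent sequentially to keep request volume predictable.
  const moderatedResponses = [];
  for (const activity of moderated) {
    moderatedResponses.push(await feed.addActivity(activity));
  }

  return { batchResponse, moderatedResponses };
}
```

Sending the single requests sequentially is a conservative choice; running them concurrently would be faster but makes rate limits easier to hit.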

Now that you have a moderation config and at least one moderation template, you can start automatically moderating content in your feeds. Auto-moderation allows you to proactively screen activities before they are added to feeds, helping maintain content quality and safety.

When an activity with a moderation template is added, Stream will:

- Extract the content specified in the template (text fields, images, etc.)

- Run it through the configured moderation policy

- Take the appropriate action based on policy rules (block, flag, etc.)
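Beyond the action Stream takes itself, the add-activity response reports a moderation result that your integration can act on, for instance by deleting content the policy recommends removing. A minimal sketch, assuming the response shape used in the JavaScript example later in this section (moderation.status and moderation.recommended_action). applyActivityModeration and its removeActivity callback are hypothetical, and treating any status other than "complete" as "no verdict yet" is an assumption.

```javascript
// Hypothetical helper: acts on the moderation result of an add-activity
// response. `removeActivity` is a callback you supply, for example one that
// calls feed.removeActivity(id) on a getstream feed.
async function applyActivityModeration(response, removeActivity) {
  const moderation = response.moderation;

  // Assumption: any status other than "complete" means there is no final
  // verdict yet, so the activity is left in place for dashboard review.
  if (!moderation || moderation.status !== "complete") {
    return "kept";
  }

  if (moderation.recommended_action === "remove") {
    // Activities are hard deleted, so this cannot be undone.
    await removeActivity(response.id);
    return "removed";
  }

  return "kept";
}
```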

To enable auto-moderation for activities, supply the moderation_template field in your activity object when adding the activity through the Feeds API. Learn how to create moderation templates in the Setup Moderation Templates section. For example:

```json
{
  "actor": "jimmy",
  "verb": "post",
  "object": {
    "title": "this is the title",
    "body": "this is the body..."
  },
  "image": "https://example.com/post_image.png",
  "moderation_template": "mod_tmpl_1"
}
```

Here, moderation_template points to the moderation template to apply. The following examples show how to add an activity with the moderation_template field:

```javascript
const stream = require("getstream");

// Server-side client; use your own API key, secret and app ID
const client = stream.connect("api_key", "api_secret", "app_id");

const response = await client.feed("user", "alice").addActivity({
  actor: "alice",
  verb: "eat",
  object: "object",
  moderation_template: "moderation_template_activity",
  a: "pissoar",
  text: "pissoar",
  attachment: {
    images: ["image1", "image2"],
  },
  foreign_id: "random_foreign_id",
  time: new Date(),
});

// The moderation result is returned with the activity.
// For this content the policy recommends removal.
console.log(response.moderation.status); // "complete"
console.log(response.moderation.recommended_action); // "remove"
```

```csharp
// userFeed is a feed instance, e.g. client.Feed("user", "actor_id")
var activity = new Activity("actor_id", "my_verb", "my_object")
{
    ForeignId = "r-test-2",
    Time = DateTime.Parse("2000-08-17T16:32:32"),
};

activity.SetData("moderation_template", "moderation_template_test_images");
activity.SetData("a", "pissoar");

var attachments = new Dictionary<string, object>();
string[] images = new string[] { "image1", "image2" };
attachments["images"] = images;
activity.SetData("attachment", attachments);

var response = await userFeed.AddActivityAsync(activity);

var modResponse = response.GetData<ModerationResponse>("moderation");
Console.WriteLine(modResponse.Status);
Assert.AreEqual("complete", modResponse.Status);

// At this point, this activity will be available in the dashboard for review.
// If needed, you can also check the RecommendedAction result and choose
// to delete the activity as part of your integration.
// Note that activities are hard deleted and therefore not recoverable.
Assert.AreEqual("remove", modResponse.RecommendedAction);
```

```java
// Attachment payload, mirroring the JavaScript and C# examples
Map<String, Object> images = Map.of("images", List.of("image1", "image2"));

Activity activity =
    Activity.builder()
        .actor("test")
        .verb("test")
        .object("test")
        .moderationTemplate("moderation_template_activity")
        .extraField("text", "pissoar")
        .extraField("attachment", images)
        .foreignID("for")
        .time(new Date())
        .build();

Activity activityResponse = client.flatFeed("user", "1").addActivity(activity).join();
assertNotNull(activityResponse);

ModerationResponse m = activityResponse.getModerationResponse();
assertEquals("complete", m.getStatus());

// At this point, this activity will be available in the dashboard for review.
// If needed, you can also check the RecommendedAction result and choose
// to delete the activity as part of your integration.
// Note that activities are hard deleted and therefore not recoverable.
assertEquals("remove", m.getRecommendedAction());
```

Auto moderation of reactions

To add a reaction with moderation, you need to supply the moderation_template field in the request.
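In the JavaScript examples of this section and the previous one, the reaction's moderation result is nested one level deeper (moderation.response.status) than the activity's (moderation.status). If your code handles both, a small normalizing helper keeps that difference in one place. readModeration is a hypothetical name, and the two shapes are taken from these examples rather than from an API reference.

```javascript
// Returns { status, recommendedAction } from an add-activity or add-reaction
// response, or null when the response carries no moderation result.
function readModeration(result) {
  const moderation = result && result.moderation;
  if (!moderation) return null;
  // Reaction responses nest the details under `response`; activity responses do not.
  const details = moderation.response || moderation;
  return {
    status: details.status,
    recommendedAction: details.recommended_action,
  };
}

// Example shapes mirroring the JavaScript examples in this document
const fromActivity = readModeration({
  moderation: { status: "complete", recommended_action: "remove" },
});
const fromReaction = readModeration({
  moderation: { response: { status: "complete", recommended_action: "remove" } },
});
console.log(fromActivity, fromReaction);
```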

```javascript
// responseActivity is an activity added earlier, e.g. with addActivity
const responseReaction = await client.reactions.add(
  "comment",
  responseActivity.id,
  {
    text: "pissoar",
    moderation_template: "moderation_template_reaction",
  },
);

// For reactions, the result is nested under moderation.response
console.log(responseReaction.moderation.response.status); // "complete"
console.log(responseReaction.moderation.response.recommended_action); // "remove"
```

```csharp
var a = new Activity("user:1", "like", "cake")
{
    ForeignId = "cake:1",
    Time = DateTime.UtcNow,
    Target = "johnny",
};

var activity = await userFeed.AddActivityAsync(a);

var data = new Dictionary<string, object> { { "text", "pissoar" } };

// The last argument is the moderation template created for reactions
var r = await client.Reactions.AddAsync("like", activity.Id, "bobby", data, null, "moderation_template_reaction");
Assert.NotNull(r);
```

```java
Reaction r =
    Reaction.builder()
        .kind("like")
        .activityID(activityResponse.getID())
        .userID("user123")
        .extraField("text", "pissoar")
        .moderationTemplate("moderation_template_reaction")
        .build();

Reaction reactionResponse = client.reactions().add("user", r).join();

// At this point, this reaction will be available in the dashboard for review.
// If needed, you can also check the RecommendedAction result and choose
// to delete the reaction as part of your integration.
ModerationResponse m = reactionResponse.getModerationResponse();
assertEquals("complete", m.getStatus());
assertEquals("remove", m.getRecommendedAction());
```

User-Driven Actions

Stream provides APIs for users to flag content that they find inappropriate. This helps maintain community standards by allowing users to participate in content moderation. When a user flags content, it is sent to the moderation dashboard for review by moderators.

The following sections demonstrate how to implement user-driven flagging for different types of content.

Flag User

To flag a user, you can use the moderation API. This will mark the user for review and prevent them from creating new activities or reactions.

```javascript
// Flag a user using the client method
const response = await client.flagUser("user-id", {
  reason: "spam",
  user_id: "flagging-user-id",
});

// Or flag using the user object
const user = client.user("user-id");
const response2 = await user.flag({
  reason: "inappropriate_content",
  user_id: "flagging-user-id",
});
```

```csharp
var userId = Guid.NewGuid().ToString();
var userData = new Dictionary<string, object>
{
    { "field", "value" },
    { "is_admin", true },
};

var u = await client.Users.AddAsync(userId, userData);
Assert.NotNull(u);
Assert.NotNull(u.CreatedAt);
Assert.NotNull(u.UpdatedAt);

var response = await client.Moderation.FlagUserAsync(userId, "blood");
Assert.NotNull(response);
```

```java
Response flagResponse = client.moderation().flagUser(userId, "<reason>", null).join();
```

Flag Activity

To flag an activity, you can use the moderation API. This will mark the activity for review and prevent it from being displayed.

```csharp
var newActivity = new Activity("vishal", "test", "1");
newActivity.SetData<string>("stringint", "42");
newActivity.SetData<string>("stringdouble", "42.2");
newActivity.SetData<string>("stringcomplex", "{ \"test1\": 1, \"test2\": \"testing\" }");

var response = await userFeed.AddActivityAsync(newActivity);
Assert.IsNotNull(response);

var response1 = await client.Moderation.FlagActivityAsync(response.Id, response.Actor, "blood");
Assert.NotNull(response1);
```

```java
Response flagResponse =
    client.moderation().flagActivity(activityResponse.getID(), "<actor_id>", "<reason>", null).join();
```

Flag Reaction

To flag a reaction, you can use the moderation API. This will mark the reaction for review.

You need to supply the moderation_template field while adding the reaction. Learn how to create moderation templates in the Setup Moderation Templates section.

```csharp
var a = new Activity("user:1", "like", "cake")
{
    ForeignId = "cake:1",
    Time = DateTime.UtcNow,
    Target = "johnny",
};

var activity = await userFeed.AddActivityAsync(a);
Assert.NotNull(activity);

var data = new Dictionary<string, object>() { { "text", "value" }, { "number", 2 }, };

// "default_reactions" is the moderation template created for reactions.
// Don't forget to supply the moderation template; otherwise flagging the reaction won't work.
var r = await client.Reactions.AddAsync("like", activity.Id, "bobby", data, null, "default_reactions");
Assert.NotNull(r);

var response = await client.Moderation.FlagReactionAsync(r.Id, r.UserId, "user-report");
Assert.NotNull(response);
```

Monitoring Moderated Content

This concludes the moderation setup. Try adding an activity or reaction in your application to see how the moderation policy works in real time. Remember to monitor and adjust your policies as needed to maintain a safe and positive environment for your users. You can monitor all flagged or blocked content from the dashboard, which provides three separate queues.

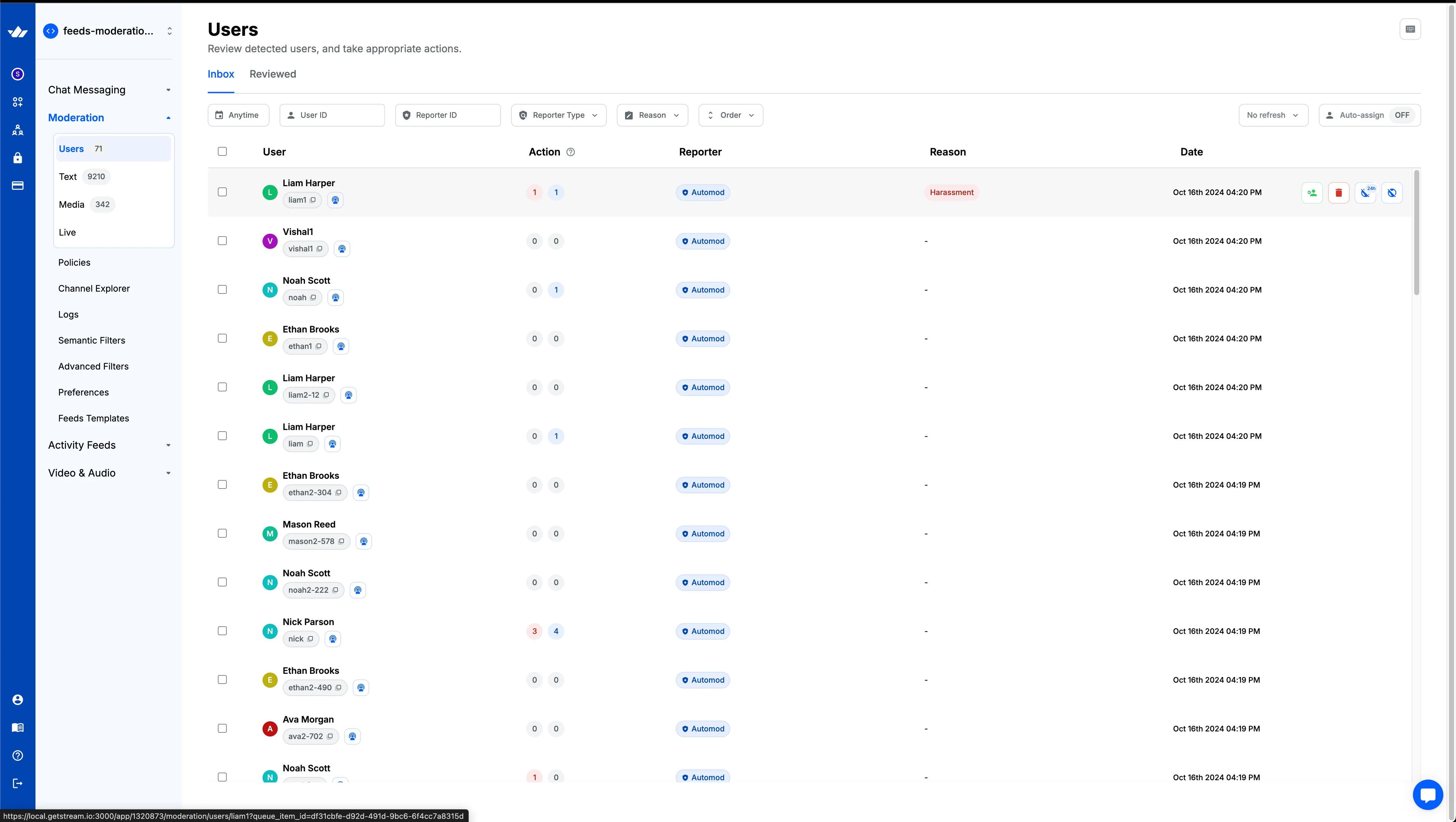

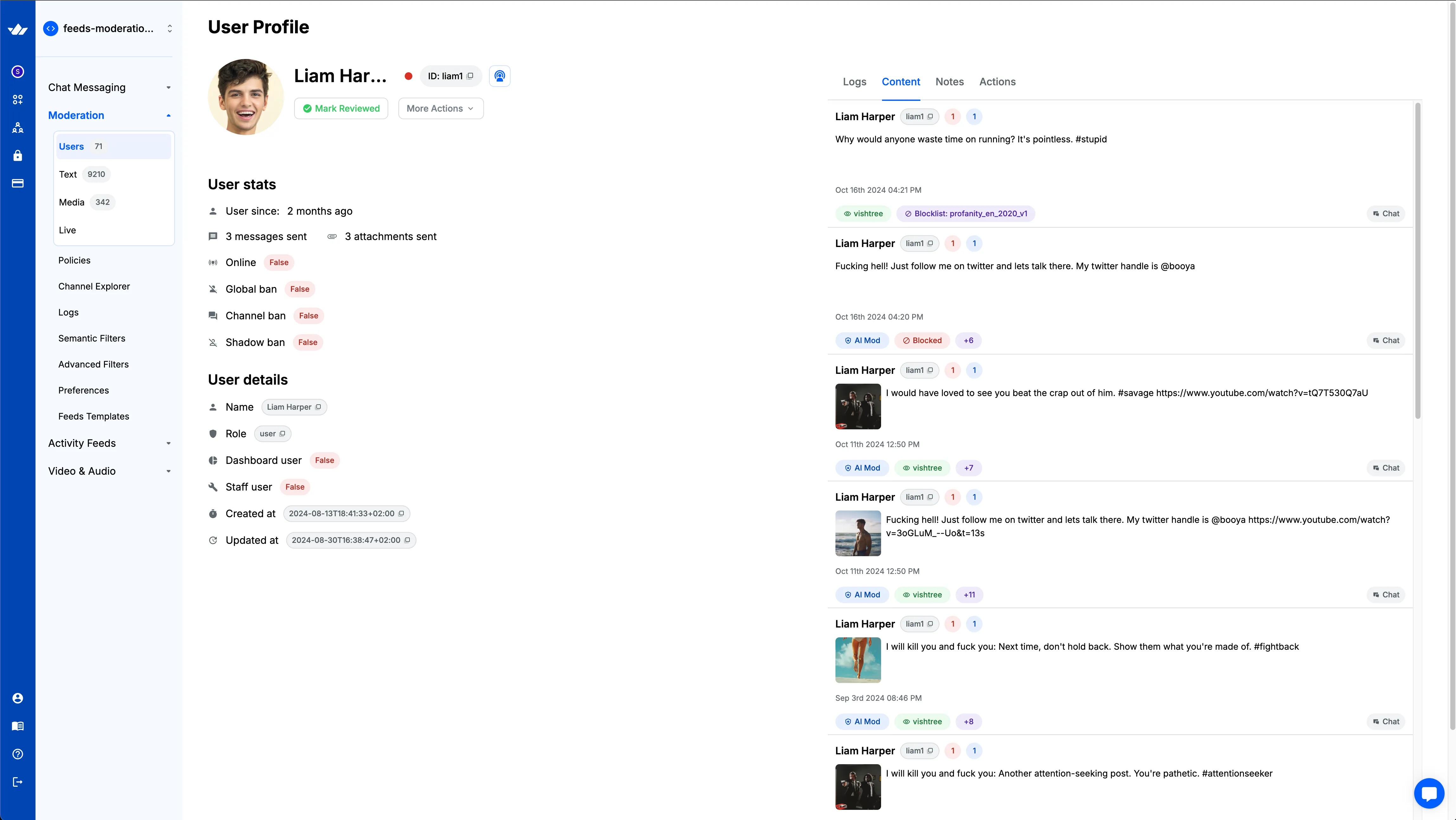

Users Queue

This queue lists all users who were flagged by another user, as well as users who have at least one piece of flagged content. As a moderator, you can act on a user as a whole or on the content posted by that user. All available actions appear when you hover over a user in the list. The available actions on users are as follows:

- Mark Reviewed: This action indicates that you have reviewed the user's profile or content and determined that no further action is needed at this time. It helps keep track of which users have been assessed by moderators, ensuring efficient management of the queue. This will also mark all the content from this user as reviewed.

- Permanently Ban User: This action permanently restricts the user from accessing or participating in the platform. It's typically used for severe or repeated violations of community guidelines. When a user is permanently banned, they are unable to log in, post content, or interact with other users. This action should be used judiciously, as it's a final measure for handling problematic users.

- Temporarily Ban User: This action temporarily restricts a user's access to the platform for a specified period of time. It's often used as a warning or corrective measure for less severe violations. During the ban period, the user cannot log in or interact with the platform. This allows them time to reflect on their behavior while giving moderators a chance to review the situation before deciding on further action.

- Delete User

- Delete All Content From User

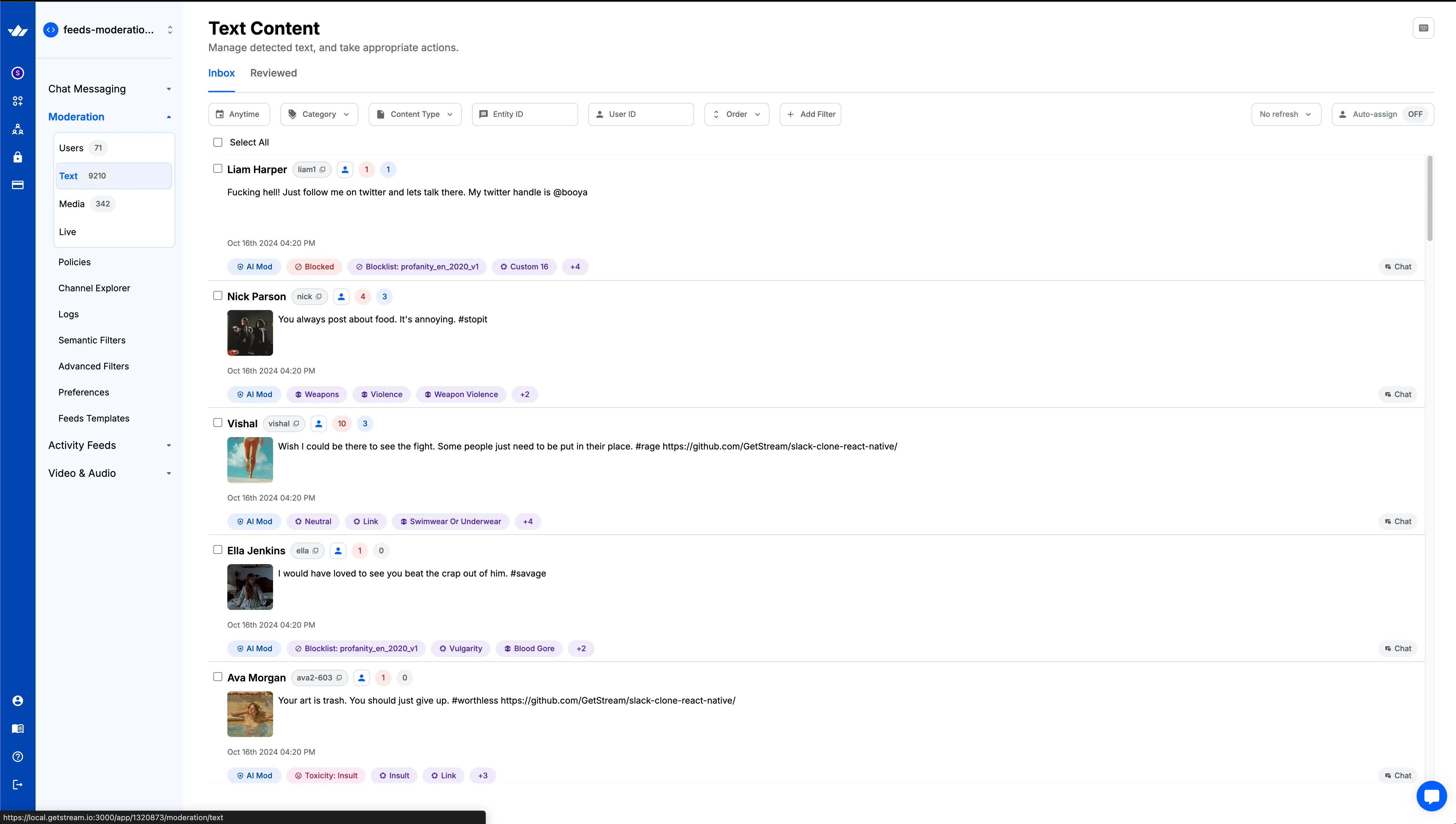

Text Queue

This queue contains all text content that has been flagged or blocked by the moderation system. As a moderator, you can review this content and take appropriate action. The available actions for each item in the Text Queue are:

- Mark Reviewed: This action indicates that you have reviewed the content and determined it doesn't require further action. It helps keep track of which content has been addressed by moderators.

- Delete: This action removes the content entirely from the platform.

- Unblock: If content was automatically blocked by the moderation system but, upon review, you determine it's acceptable, you can use this action to unblock it. This makes the content visible in the application.

These actions provide moderators with the flexibility to handle different situations appropriately, ensuring a fair and safe environment for all users.

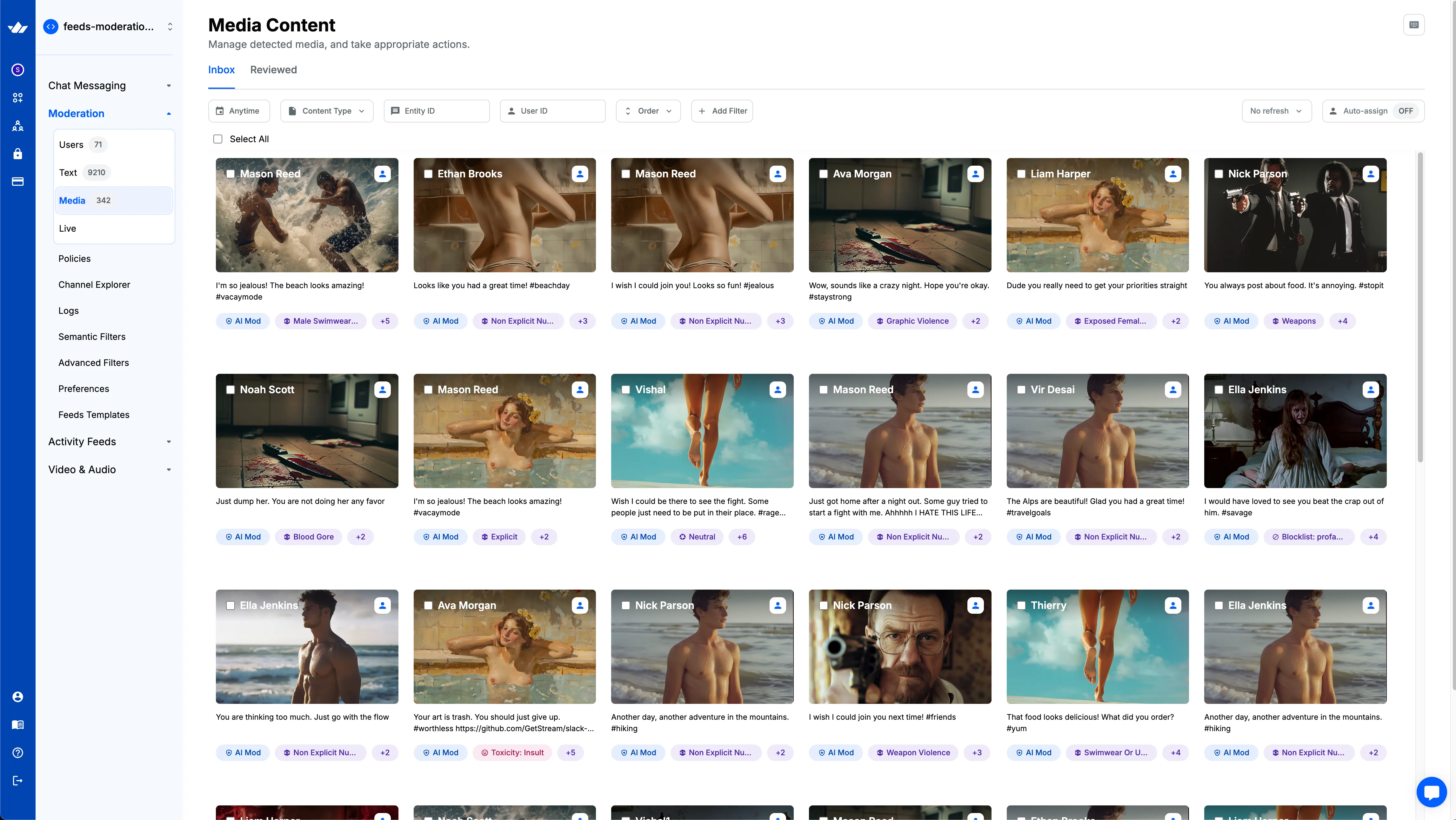

Media Queue

The Media Queue is specifically designed for handling images, videos, and other media content that has been flagged or blocked by the moderation system. This queue allows moderators to review visual content that may violate community guidelines or pose potential risks. Similar to the Text Queue, moderators can take various actions on the items in this queue, such as marking them as reviewed, deleting inappropriate content, or unblocking media that was mistakenly flagged.