flowchart TD

A[Create Moderation Policy] -->|Configure policy settings| B[Submit Content]

B -->|Use Check API| C[Receive Recommendation]

C -->|Analyze result| D{Take Action}

D -->|Flag| E[Review Queue]

D -->|Block| F[Content Blocked]

D -->|Shadow Block| G[Content Hidden]

E --> H[View in Dashboard]

F --> H

G --> H

H -->|Actions taken| I[Webhook Notifications]

style A fill:#e1f3ff

style B fill:#e1f3ff

style C fill:#e1f3ff

style D fill:#ffe1e1

style E fill:#f0e1ff

style F fill:#f0e1ff

style G fill:#f0e1ff

style H fill:#e1ffe4

style I fill:#fff3e1

Custom Content Moderation

Stream's moderation APIs can moderate custom content such as user profiles, avatars, and other content types. This guide walks through how to do that.

The overall process of moderating custom content is as follows:

- Create a moderation policy

- Submit content for moderation

- Process the recommended action

- Monitor results in the Dashboard

- Receive notifications of dashboard actions

Let's go through each step in detail.

Create a moderation policy

A moderation policy is a set of rules that defines how content should be moderated in your application. The What Is Moderation Policy guide covers this in detail.

To create a moderation policy for custom content, follow these guides in order:

- Step 1: Creating Moderation Policy For Custom Content

- Step 2: Setup Policy for Text Moderation

- Step 3: Setup Policy for Image Moderation

- Step 4: Test Moderation Policy

Submit custom content to the moderation Check API

You can use the moderation Check API to submit custom content to the moderation engine and receive a recommended action.

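// This example uses the JavaScript client for Stream Chat.

// https://github.com/GetStream/stream-chat-js

import { StreamChat } from "stream-chat";

// Server-side client: it is created with the API secret, so keep it on your backend.

const client = new StreamChat(apiKey, apiSecret);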

const result = await client.moderation.check(

"entity_type",

"entity_id",

"entity_creator_id",

{

texts: ["this is bullshit", "f*ck you as***le"],

images: ["example.com/test.jpg"],

},

"custom:my-content-platform-1", // unique identifier for the policy

{

force_sync: true,

},

);

console.log(result.item.recommended_action); // flag | remove | shadow_block

In this example:

- entity_type: The type of the entity you are moderating. You can provide any generic identifier for the entity type, e.g., user:profile, user:avatar, user:cover_image, etc.

- entity_id: The unique identifier for the entity you are moderating.

- entity_creator_id: The unique identifier for the creator of the entity you are moderating.

- texts: The array of texts you want to moderate.

- images: The array of images you want to moderate.

- custom:my-content-platform-1: The unique identifier for the moderation policy you created earlier.

- force_sync: An optional parameter that ensures all the moderation engines run synchronously. This is mainly useful if you want to do image moderation synchronously.
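Once the Check API returns, your application still has to act on the recommendation. The following is a minimal sketch of that step, not Stream's own logic: decideOutcome and the fields it returns are hypothetical names, and the meaning given to each action (remove blocks the content, shadow_block hides it from other users, flag publishes it but sends it to review) is an assumption based on the flowchart and the example above. Treating an unknown or missing action as "no action needed" is also an assumption that you may want to tighten.

```javascript
// Hypothetical helper: maps a recommended_action value to an app-level decision.
// The action names come from the Check API example; the returned fields are illustrative.
function decideOutcome(recommendedAction) {
  switch (recommendedAction) {
    case "remove":
      // Blocked: do not publish the content at all.
      return { publish: false, visibleToOthers: false, needsReview: false };
    case "shadow_block":
      // Hidden: store it, but do not show it to other users (assumed semantics).
      return { publish: true, visibleToOthers: false, needsReview: false };
    case "flag":
      // Flagged: publish it, and expect it to show up for moderator review.
      return { publish: true, visibleToOthers: true, needsReview: true };
    default:
      // Assumption: any other value means no moderation action is required.
      return { publish: true, visibleToOthers: true, needsReview: false };
  }
}
```

With the result from the example above, this could be called as decideOutcome(result.item.recommended_action), and the returned flags used to decide whether to save and display the profile, avatar, or other entity.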

Query Flagged Content

You can use the moderation QueryReviewQueue API to query flagged content. This is useful if you want to review the flagged content in your app.

const filters = { entity_type: "entity_type" };

const sort = [{ field: "created_at", direction: -1 }];

const options = { next: null }; // cursor pagination

const { items, next } = await client.moderation.queryReviewQueue(

filters,

sort,

options,

);

Monitoring Moderated Content
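Flagged content can also be monitored from your own backend by paging through the review queue. The sketch below assumes only the response shape shown in the QueryReviewQueue example ({ items, next }) and that an empty next cursor marks the last page. collectReviewQueue, queryPage, and maxPages are hypothetical names introduced for illustration.

```javascript
// Hypothetical helper: collects review queue items by following the cursor.
// `queryPage` is any async function that takes a cursor and resolves to { items, next }.
// `maxPages` is a safety cap so a misbehaving cursor cannot loop forever.
async function collectReviewQueue(queryPage, maxPages = 50) {
  const all = [];
  let cursor = null; // null requests the first page, as in the example above
  for (let page = 0; page < maxPages; page++) {
    const { items, next } = await queryPage(cursor);
    all.push(...items);
    if (!next) break; // assumption: no cursor means the last page was reached
    cursor = next;
  }
  return all;
}

// Possible usage with the client, filters, and sort from the example above:
//   const flagged = await collectReviewQueue((next) =>
//     client.moderation.queryReviewQueue(filters, sort, { next }),
//   );
```

Passing the query in as a function keeps the loop independent of the client, which makes it easy to exercise with a stub before pointing it at real data.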

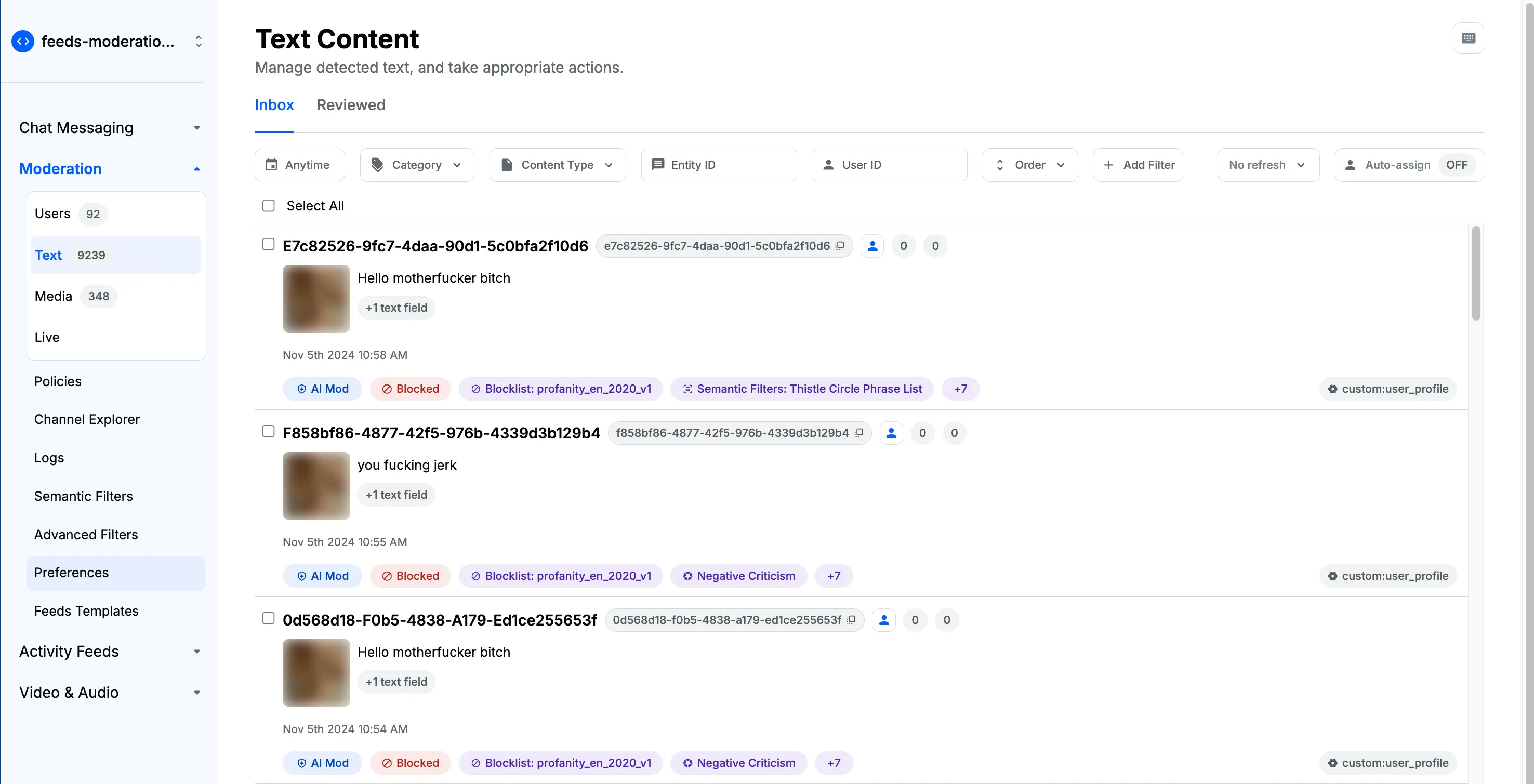

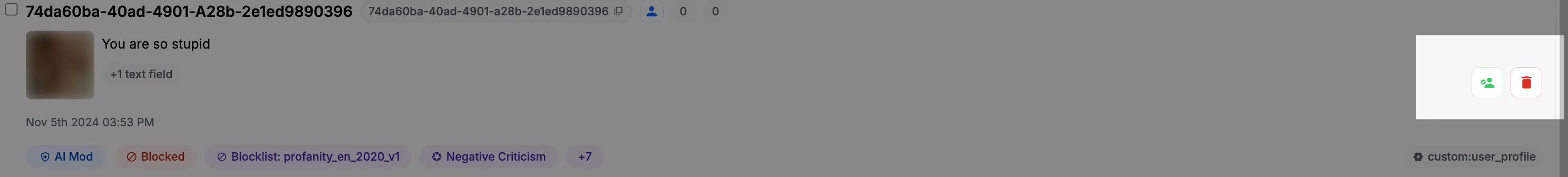

You can monitor all the flagged or blocked content from the dashboard. You will find three separate queues on the dashboard.

- Users Queue: This queue contains all users who were flagged by another user or who have at least one piece of flagged content. As a moderator, you can take action on the user as a whole or on individual content posted by that user.

- Text Queue: This queue contains all the text content that has been flagged or blocked by the moderation system.

- Media Queue: This queue contains all the media content that has been flagged or blocked by the moderation system.

You can take appropriate actions on the content from each queue.

The available actions for each item in the Text and Media Queues are:

- Mark Reviewed: This action indicates that you have reviewed the content and determined that it doesn't require further action. It helps keep track of which items moderators have already addressed.

- Delete: The dashboard provides a Delete button out of the box, but the actual deletion logic must be handled by your backend in response to a webhook.

The Stream Dashboard can also support additional custom actions for your application. Please contact the Stream Support Team if you need this feature.

Webhook Integration

You can receive webhook notifications for actions taken on content from the dashboard. Please refer to the Webhook Integration guide for more details. For example, if a moderator presses the Delete button for a specific piece of content on the dashboard, you will receive a webhook notification with the action details and can then delete that content from your platform.
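The delete flow described above can be sketched as a small handler. This is an illustration only: the payload field names used here (action, entity_type, entity_id) and the "delete" action value are placeholders, not the documented webhook schema, so check the Webhook Integration guide for the real payload. handleDashboardAction and deleteContent are hypothetical names, and signature verification of the incoming request is left out.

```javascript
// Hypothetical handler for a dashboard-action webhook.
// ASSUMPTION: `action`, `entity_type`, and `entity_id` are placeholder field names;
// replace them with the fields from the actual webhook payload.
// `deleteContent` is your own function that removes the entity from your platform.
async function handleDashboardAction(event, deleteContent) {
  if (!event || event.action !== "delete") {
    // Ignore anything that is not a delete action in this sketch.
    return { handled: false };
  }
  await deleteContent(event.entity_type, event.entity_id);
  return { handled: true, entityId: event.entity_id };
}
```

In a real backend this function would be called from the HTTP route that receives the webhook, after the request body has been parsed and authenticated.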