const response = await client.moderation.queryReviewQueue(

{ entity_type: "stream:chat:v1:message", has_text: true },

[{ field: "created_at", direction: -1 }],

{ next: null },

);Build Moderation Dashboard

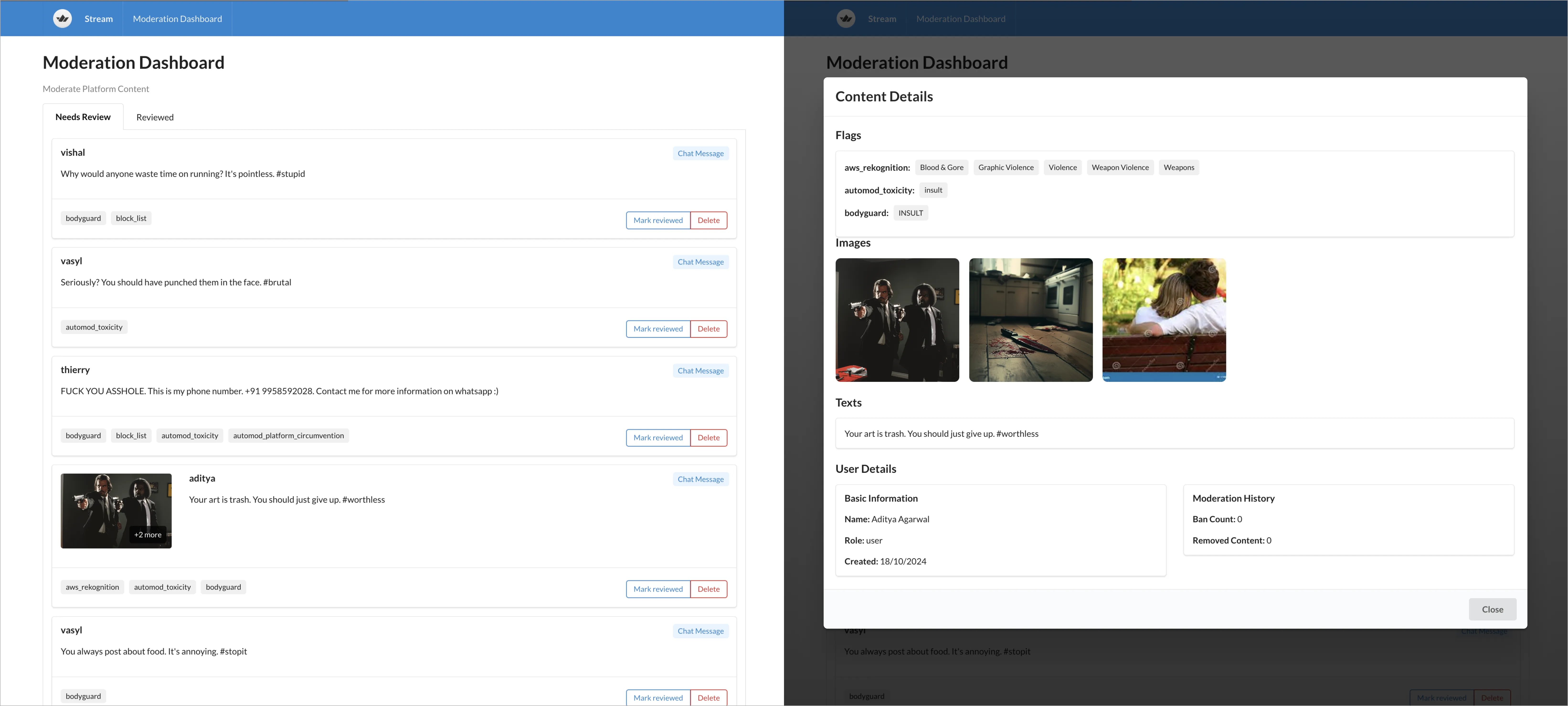

Stream offers a built-in moderation dashboard to assist you in moderating content. However, you also have the option to create a custom moderation dashboard tailored to your product's needs. Stream provides all the necessary APIs that are used to build the moderation dashboard.

We have provided an example application that you can use as a starting point to build your own moderation dashboard. Please check the Moderation Dashboard Example for more details.

This guide will walk you through the process of building your own moderation dashboard using Stream's moderation APIs.

It is recommended to begin by setting up moderation for your specific use case. Please refer to the following guides:

- Quick Start Guide for Chat Moderation

- Quick Start Guide for Feeds Moderation

- Quick Start Guide for Custom Content Moderation

Once you have set up moderation for your use case, you can use the following APIs to build your own moderation dashboard.

There are three types of moderation UI you can build:

- UI to list flagged content

- UI to take action on flagged content

- UI to configure moderation policy

Build UI for Review Queue

Stream provides a QueryReviewQueue API to query flagged content. You can use this API to build your own moderation dashboard to review flagged content. This is the underlying API that powers the moderation dashboard in the Stream dashboard.

Text Review Queue

You can use following code to query all the flagged contents in the review queue.

The `entity_type` is the type of content you are moderating. For example:

- If you are moderating chat messages, the `entity_type` would be `stream:chat:v1:message`.
- If you are moderating feed activities, the `entity_type` would be `stream:feed:v2:activity`.
- If you are moderating feed comments, the `entity_type` would be `stream:feed:v2:reaction`.
- If you are moderating custom content, the `entity_type` would be the type of your custom content.

`response.items` contains the list of flagged items. Each item is a Review Queue Item.
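The third argument to `queryReviewQueue` controls pagination, and `{ next: null }` requests the first page. Here is a minimal sketch that walks the whole queue. It assumes the response also carries a `next` cursor that is empty on the last page, so check the QueryReviewQueue API reference for the exact response fields. `ReviewQueueClient`, `ReviewQueuePage` and `fetchAllReviewQueueItems` are hypothetical names, typed only as far as this example needs.

```typescript
// Minimal subset of a review queue item used in this sketch (assumed shape).
interface ReviewQueueItem {
  id: string;
  entity_type: string;
}

// Assumed response shape: one page of items plus an optional cursor for the next page.
interface ReviewQueuePage {
  items: ReviewQueueItem[];
  next?: string | null;
}

type Filter = Record<string, unknown>;
type Sort = { field: string; direction: 1 | -1 }[];

// Hypothetical interface mirroring the queryReviewQueue(filter, sort, options) call above.
interface ReviewQueueClient {
  moderation: {
    queryReviewQueue(
      filter: Filter,
      sort: Sort,
      options: { next: string | null },
    ): Promise<ReviewQueuePage>;
  };
}

// Follows the `next` cursor until no cursor is returned or `maxPages` pages have been read.
async function fetchAllReviewQueueItems(
  client: ReviewQueueClient,
  filter: Filter,
  sort: Sort = [{ field: "created_at", direction: -1 }],
  maxPages = 10,
): Promise<ReviewQueueItem[]> {
  const all: ReviewQueueItem[] = [];
  let next: string | null = null;
  for (let page = 0; page < maxPages; page++) {
    const response: ReviewQueuePage = await client.moderation.queryReviewQueue(filter, sort, { next });
    all.push(...response.items);
    // An empty or missing cursor means this was the last page.
    if (!response.next) break;
    next = response.next;
  }
  return all;
}
```

The `maxPages` cap keeps a very large queue from being loaded into a dashboard in one go. For an interactive UI you would more likely keep the cursor in state and fetch one page per "Load more" click.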

The following is an example of how to build a UI to list flagged content in the review queue.

```jsx
import React, { useState, useEffect } from "react";
import { StreamChat } from "stream-chat";

// Server-side credentials are shown for brevity only. Never ship your API secret
// in browser code; call the moderation APIs from your backend instead.
const client = new StreamChat("api_key", "api_secret");

const ReviewQueueList = () => {
  const [items, setItems] = useState([]);

  useEffect(() => {
    const fetchData = async () => {
      // Newest flagged chat messages that contain text.
      const response = await client.moderation.queryReviewQueue(
        { entity_type: "stream:chat:v1:message", has_text: true },
        [{ field: "created_at", direction: -1 }],
        { next: null },
      );
      setItems(response.items);
    };

    fetchData().catch(console.error);
  }, []);

  return (
    <ul>
      {items.map((item) => (
        <li key={item.id}>
          <p>
            <strong>ID:</strong> {item.id}
          </p>
          {/* Guard against missing payload fields with optional chaining. */}
          <p>
            <strong>Texts:</strong> {item.moderation_payload?.texts?.join(", ")}
          </p>
          <p>
            <strong>Images:</strong> {item.moderation_payload?.images?.join(", ")}
          </p>
          <p>
            <strong>Videos:</strong> {item.moderation_payload?.videos?.join(", ")}
          </p>
          {/* Assumes `message` and `entity_creator` are objects; React cannot render objects directly. */}
          <p>
            <strong>Message:</strong> {item.message?.text}
          </p>
          <p>
            <strong>Entity Creator:</strong> {item.entity_creator?.id}
          </p>
          <p>
            <strong>Entity ID:</strong> {item.entity_id}
          </p>
          <p>
            <strong>Entity Type:</strong> {item.entity_type}
          </p>
          <p>
            <strong>Flags:</strong>{" "}
            {item.flags.map((flag) => flag.type).join(", ")}
          </p>
          <p>
            <strong>Recommended Action:</strong> {item.recommended_action}
          </p>
          <p>
            <strong>Created At:</strong> {item.created_at}
          </p>
        </li>
      ))}
    </ul>
  );
};

export default ReviewQueueList;
```

You can iterate over `item.flags` to get the list of moderation engines that flagged the content.

```typescript
for (const item of response.items) {
  for (const flag of item.flags) {
    console.log(flag.type); // moderation engine type
    console.log(flag.labels); // list of labels or harm types detected
    console.log(flag.custom); // more details about the flag
  }
}
```

Media Review Queue

You can use the following code to query all flagged messages with images in the review queue.

```typescript
const response = await client.moderation.queryReviewQueue(
  { entity_type: "stream:chat:v1:message", has_image: true },
  [{ field: "created_at", direction: -1 }],
  { next: null },
);
```

Blocked Content

You can use the following code to query all blocked content in the review queue, that is, items whose recommended action is `remove`.

```typescript
const response = await client.moderation.queryReviewQueue(
  { entity_type: "stream:chat:v1:message", recommended_action: "remove" },
  [{ field: "created_at", direction: -1 }],
  { next: null },
);
```

Reviewed Content

You can use the following code to query all reviewed content in the review queue.

```typescript
const response = await client.moderation.queryReviewQueue(
  { entity_type: "stream:chat:v1:message", reviewed: true },
  [{ field: "created_at", direction: -1 }],
  { next: null },
);
```

You can find more details in the QueryReviewQueue API reference.
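A dashboard usually presents the queries above as tabs. Here is a minimal sketch, assuming one tab per query in this section (text, media, blocked, reviewed). The filter keys and `entity_type` strings come from this guide. `ENTITY_TYPES`, `ReviewQueueView`, `VIEW_FILTERS` and `reviewQueueFilter` are hypothetical names used for illustration.

```typescript
// Entity types listed in this guide; custom content uses your own type string.
const ENTITY_TYPES = {
  chatMessage: "stream:chat:v1:message",
  feedActivity: "stream:feed:v2:activity",
  feedComment: "stream:feed:v2:reaction",
} as const;

// One dashboard tab per example query.
type ReviewQueueView = "text" | "media" | "blocked" | "reviewed";

// Extra filter conditions for each tab, copied from the example queries.
const VIEW_FILTERS: Record<ReviewQueueView, Record<string, unknown>> = {
  text: { has_text: true },
  media: { has_image: true },
  blocked: { recommended_action: "remove" },
  reviewed: { reviewed: true },
};

// Builds the filter argument for queryReviewQueue from an entity type and a tab.
function reviewQueueFilter(entityType: string, view: ReviewQueueView): Record<string, unknown> {
  return { entity_type: entityType, ...VIEW_FILTERS[view] };
}
```

For example, `reviewQueueFilter(ENTITY_TYPES.chatMessage, "blocked")` produces the same filter as the Blocked Content query. You would pass it as the first argument to `client.moderation.queryReviewQueue` together with the sort and pagination arguments shown above.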

Build UI to Take Action on Review Queue Items

Stream provides a SubmitAction API to take action on review queue items. You can use this API to build your own moderation dashboard to take action on flagged content. This is the underlying API that powers the moderation dashboard in the Stream dashboard.

Suppose you want a button that marks a flagged item as reviewed:

```tsx
const markAsReviewed = async (itemId: string) => {
  await client.moderation.submitAction("mark_reviewed", itemId);
};

<Button onClick={() => markAsReviewed("item_id")}>Mark as Reviewed</Button>
```

Please refer to the SubmitAction API for more examples, as listed below:

- Ban User
- Delete Chat Message
- Delete Feeds Activity
- Delete User
- Delete Feeds Reaction
- Unban User
- Restore Chat Message, Activity, or Reaction
- Unblock Message
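In a dashboard, an item should also leave the visible queue once the action succeeds. Here is a minimal sketch, assuming `submitAction(actionType, itemId)` resolves on success and rejects on failure, as in the `markAsReviewed` example above. `SubmitActionFn`, `QueueItem` and `reviewAndRemove` are hypothetical names.

```typescript
// Minimal item shape for this sketch.
interface QueueItem {
  id: string;
}

// Hypothetical signature mirroring client.moderation.submitAction(actionType, itemId).
type SubmitActionFn = (actionType: string, itemId: string) => Promise<unknown>;

// Marks one item as reviewed, then returns the list without that item.
// If the request rejects, the error propagates and the caller keeps its current list.
async function reviewAndRemove(
  submitAction: SubmitActionFn,
  items: QueueItem[],
  itemId: string,
): Promise<QueueItem[]> {
  await submitAction("mark_reviewed", itemId);
  return items.filter((item) => item.id !== itemId);
}
```

The list is updated only after the request succeeds, so a failed request leaves the item visible for a retry. In `ReviewQueueList`, a button handler could pass `(type, id) => client.moderation.submitAction(type, id)` and hand the returned array to `setItems`.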

Build UI to Configure Moderation Policy

Stream provides an UpsertConfig API to configure moderation policies. You can use this API to build the policy configuration part of your own moderation dashboard.

Please refer to the UpsertConfig API for more details.