AI Semantic Filters

Semantic Filters offer the ability to create custom filters tailored to your unique needs and based on the meaning of text content rather than exact text string matching. For example, instead of blocking the exact phrase "buy now," a semantic filter can block variations like "purchase immediately" or "acquire right away" that convey the same meaning. Another example could be filtering out a longer statement like "I had a terrible experience with this product and would not recommend it to anyone" and its variations such as "My experience with this item was awful, and I wouldn't suggest it to others" or "I had a bad time using this product, and I don't think anyone should buy it."

Configuration

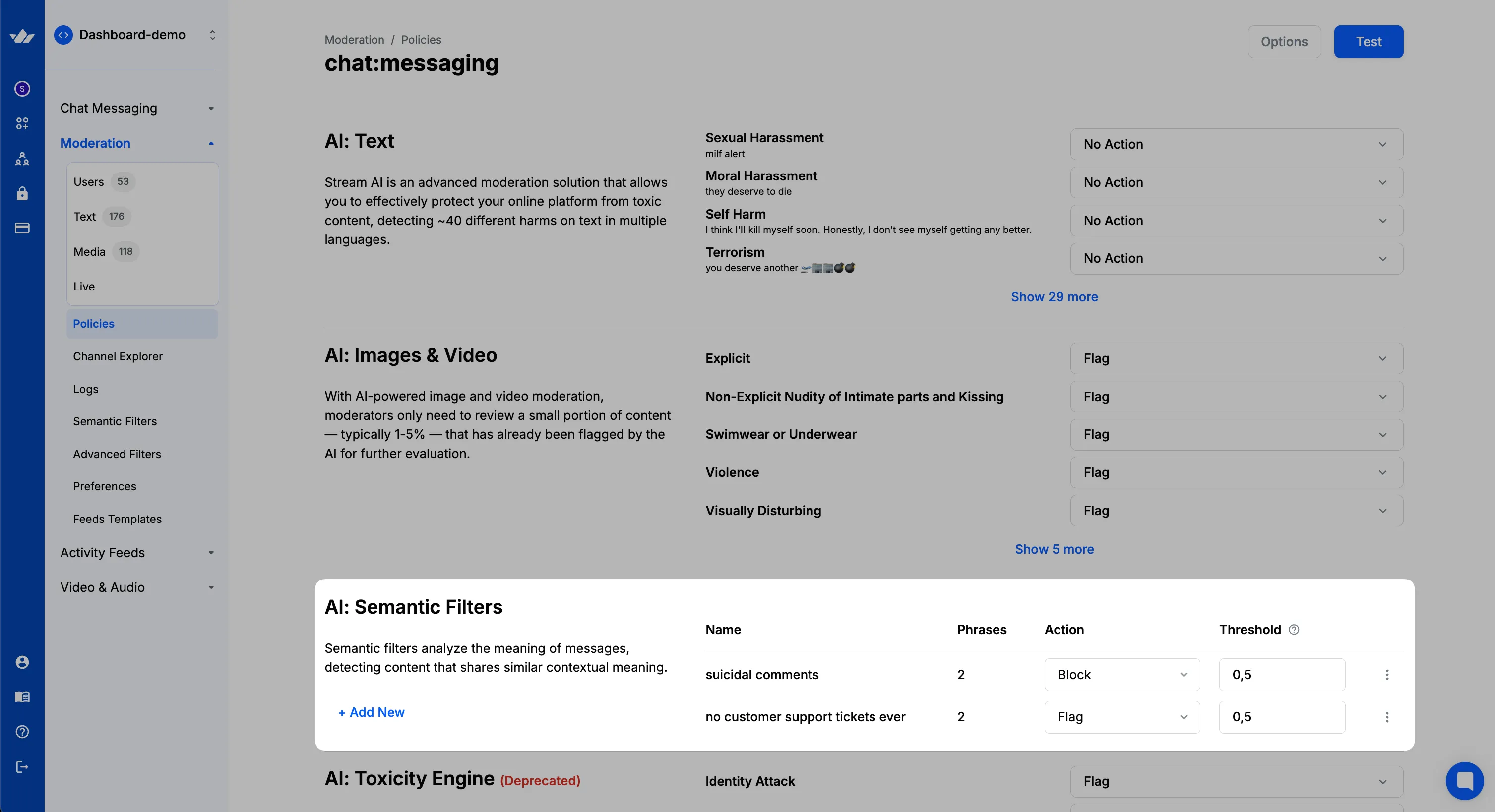

Make sure to go through the Creating A Policy section as a prerequisite.

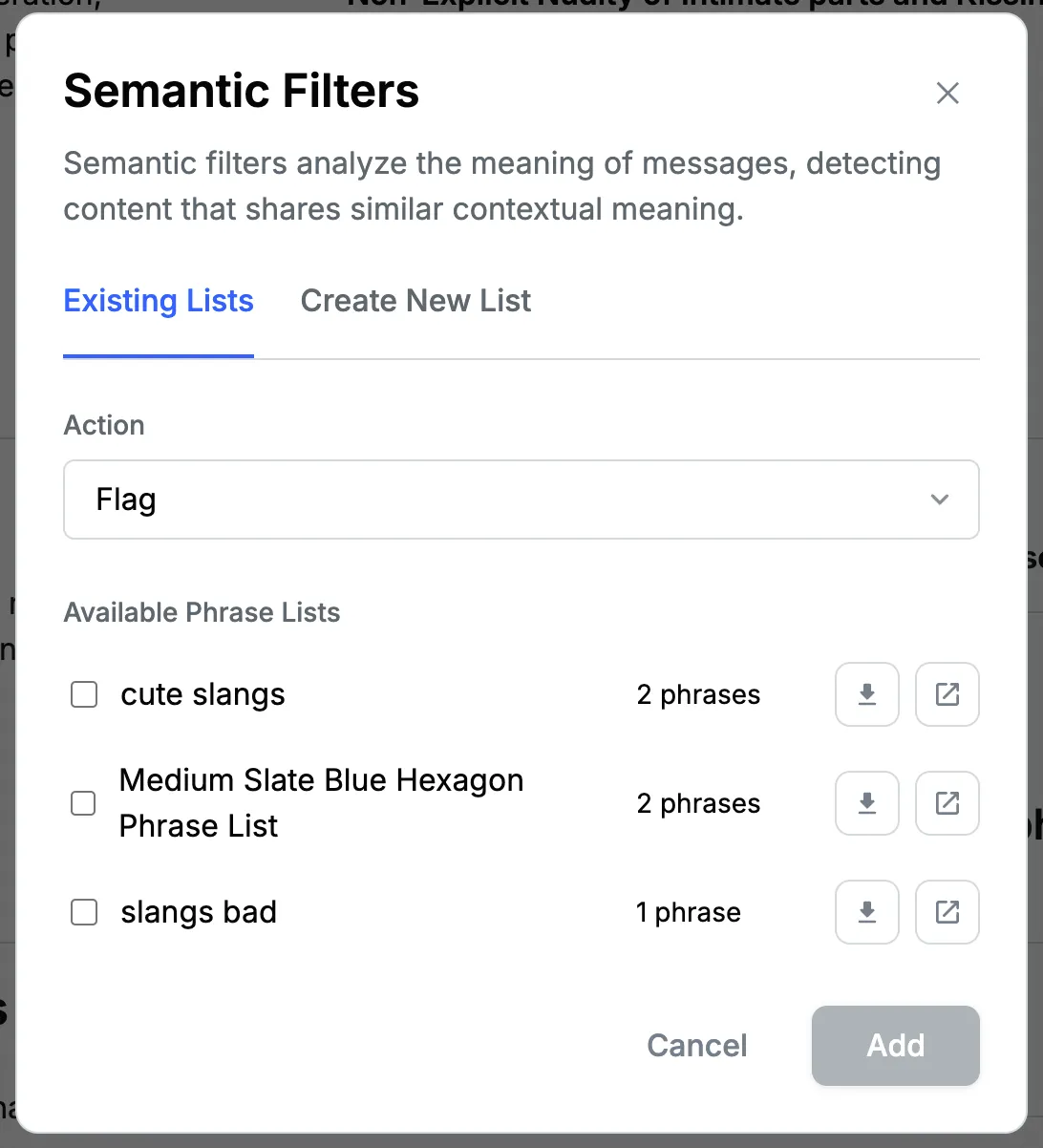

To set up semantic filters, in the "Semantic Filters" section on the policy page, click on the "+ Add New" button. Here you can either choose from existing lists (which you may have created in the past) or quickly create a new semantic filter list.

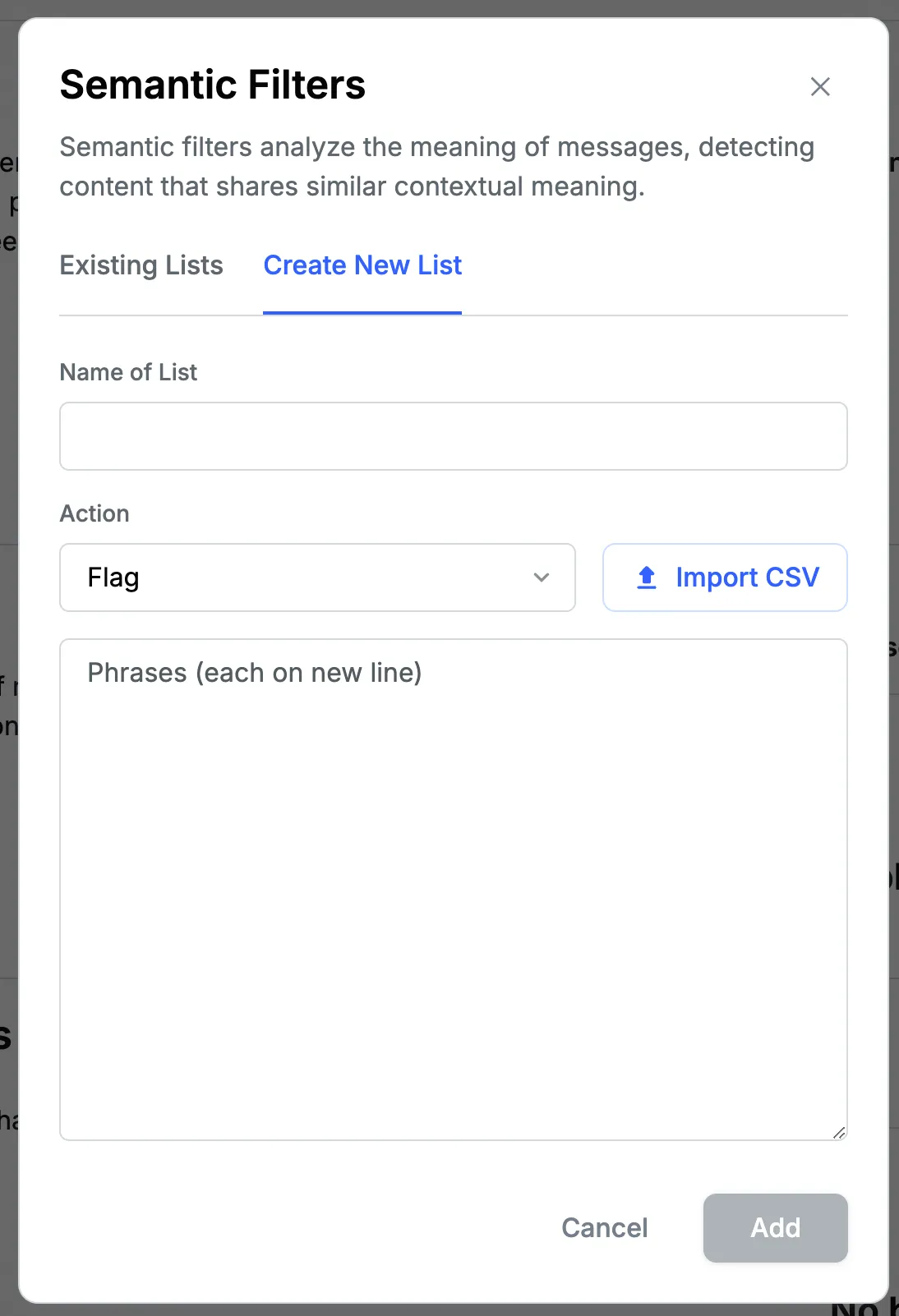

To create a new semantic filter list:

- Give your semantic filter a name and description.

- Add seed phrases that represent the type of content you want to filter. These phrases should capture the essence of the harmful or unwanted content. For example, to filter out content related to hate speech, you might add seed phrases like "I hate [group]", "[group] should die", or "All [group] are [negative attribute]". These seed phrases help the AI understand the type of content you want to moderate.

- Choose the action to be taken when content matches these semantic filters: Flag, Block, Shadow Block.

- Click "Add" to activate your new semantic filter.

Filters Based on Sentence Similarity and Semantic Analysis

Our custom filters go beyond traditional blocklists. They use sentence similarity, which compares the high-level meaning and intent of text content rather than specific words. By analyzing the semantic structure of sentences, the filters can identify content that breaches your community guidelines, even if the wording differs from the phrases you provided.
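As a rough illustration of sentence similarity, the sketch below compares vectors with cosine similarity, a common way to score how close two sentence embeddings are. This is an assumption for illustration only: the source does not state which model or metric the filters use, and the three-dimensional vectors here are hand-made stand-ins for real embeddings.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


# Hand-made toy vectors standing in for model embeddings (assumption).
TOY_EMBEDDINGS = {
    "buy now": [0.9, 0.1, 0.0],
    "purchase immediately": [0.8, 0.2, 0.1],
    "what a lovely day": [0.0, 0.1, 0.9],
}

close = cosine_similarity(
    TOY_EMBEDDINGS["buy now"], TOY_EMBEDDINGS["purchase immediately"]
)
far = cosine_similarity(
    TOY_EMBEDDINGS["buy now"], TOY_EMBEDDINGS["what a lovely day"]
)
print(f"similar meaning: {close:.2f}, unrelated meaning: {far:.2f}")
```

Phrases with similar meaning end up with vectors pointing in nearly the same direction and score close to 1, while unrelated phrases score close to 0.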

While crafting these filters, it's important to take into account some key considerations to maximize effectiveness.

Considerations when building filters

Phrases with Unrelated Meaning:

Text content that combines statements with different meanings may confuse the model, because the actual intent of the text is not clear (e.g., "I just finished baking some cookies. Do you have any movie recommendations?"). This is often the case with long text content that contains multiple sentences with varying intents. Semantic filters work best on single sentences where the meaning is very clear, such as in chat text content.

Handling Short Text Content:

Very short text content, such as single words, might lack context, making it difficult to accurately interpret intent (e.g., "movie"). Blocklists are a better solution in these cases.

Managing Intent Mismatch:

The perceived intent might not always align with the actual content. Be cautious when interpreting user intent based solely on the words used.

Accounting for Uncommon Words:

Certain uncommon words (for instance, mentions of products or companies) might disproportionately influence similarity calculations, potentially causing text content with different intents to be grouped together if one of those words appears in the text content. This can be an advantage when you want to catch mentions of certain words or topics (e.g., writing the name of the movie "Titanic" in a filter will block a lot of text content containing this word, while a more common word such as "day" won't be such a strong filter).

Detecting Opposite Meanings:

Sentence similarity models struggle to distinguish opposite meanings. For instance, "I love this movie" and "I hate this movie" are scored as similar sentences because both are statements about a movie.

Building Effective Custom Filters

Specificity and Context:

- Prioritize specificity when designing your custom filters.

- Tailor filters to target particular types of content or language patterns relevant to your community.

- Select words and phrases likely to appear in content that breaches community guidelines.

- Example: Customize filters to identify specific spoilers from well-known films, such as:

- "Darth Vader is Luke's father."

- "Romeo and Juliet die at the end."

- "The Titanic sinks."

- Incorporate synonyms or alternative expressions of sensitive terms for comprehensive coverage.

Exploring Variations:

- Add as many sentences to your custom filter list as necessary.

- Include text content that has previously been blocked.

- Examples:

- "Make money fast!"

- "Get rich quick schemes."

Precision vs. Recall:

- Adjust filter sensitivity to find the right balance for your community.

- Determine focus:

- Precision: Minimize false positives by creating very specific filters that capture closely resembling text content. Trade-off: some harmful content may go undetected.

- Recall: Minimize false negatives so that as little inappropriate content as possible is overlooked. A more sensitive filter captures a broader range of text content, which may result in some false positives.
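The trade-off can be made concrete with a small calculation. The sketch below assumes you have a handful of test messages with a similarity score and a manual label for each; the scores and labels are made up for illustration, and the `>=` comparison against the threshold is an assumption about how a match is decided.

```python
def precision_recall(samples, threshold):
    """Compute (precision, recall) for (similarity_score, is_harmful) pairs."""
    tp = sum(1 for score, harmful in samples if score >= threshold and harmful)
    fp = sum(1 for score, harmful in samples if score >= threshold and not harmful)
    fn = sum(1 for score, harmful in samples if score < threshold and harmful)
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 1.0
    return precision, recall


# Made-up scores and labels for illustration only.
SAMPLES = [
    (0.95, True),
    (0.85, True),
    (0.75, False),
    (0.65, True),
    (0.55, False),
    (0.30, False),
]

for threshold in (0.6, 0.8):
    p, r = precision_recall(SAMPLES, threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
```

With this sample data, the lower threshold catches every harmful message but also matches one harmless message, while the higher threshold matches only harmful messages and misses one of them.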

Combine with Advanced Filters:

- Strengthen your filtering strategy by integrating semantic filters with Blocklist and Regex Filters.

- This combined approach captures a wider array of content while maintaining precision.

- Example: Add specific words, emails, or domains to the Advanced Filters.
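The layered approach can be pictured as a sequence of checks, from exact to fuzzy. This is a conceptual sketch only, not how the product evaluates filters internally: `BLOCKLIST`, `REGEX_FILTERS`, and `check_message` are hypothetical names, the email pattern is deliberately rough, and `semantic_score` is a placeholder for the similarity score a semantic filter would report.

```python
import re

# Hypothetical exact-match words (assumption for illustration).
BLOCKLIST = {"spamword"}

# Hypothetical regex filters; this email pattern is intentionally simple.
REGEX_FILTERS = [re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")]


def check_message(text, semantic_score, threshold=0.7):
    """Return which layer matched the message, or None if nothing matched.

    semantic_score stands in for the similarity score a semantic filter
    would produce for this text.
    """
    words = set(re.findall(r"[a-z0-9']+", text.lower()))
    if words & BLOCKLIST:
        return "blocklist"
    if any(pattern.search(text) for pattern in REGEX_FILTERS):
        return "regex"
    if semantic_score >= threshold:
        return "semantic"
    return None


print(check_message("mail me at a@example.com", semantic_score=0.10))
print(check_message("purchase immediately", semantic_score=0.82))
```

Exact words and patterns such as emails or domains are handled by the precise layers, which leaves the semantic layer to cover rephrasings that no fixed list could enumerate.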

Tools to help you tune your Semantic Filters

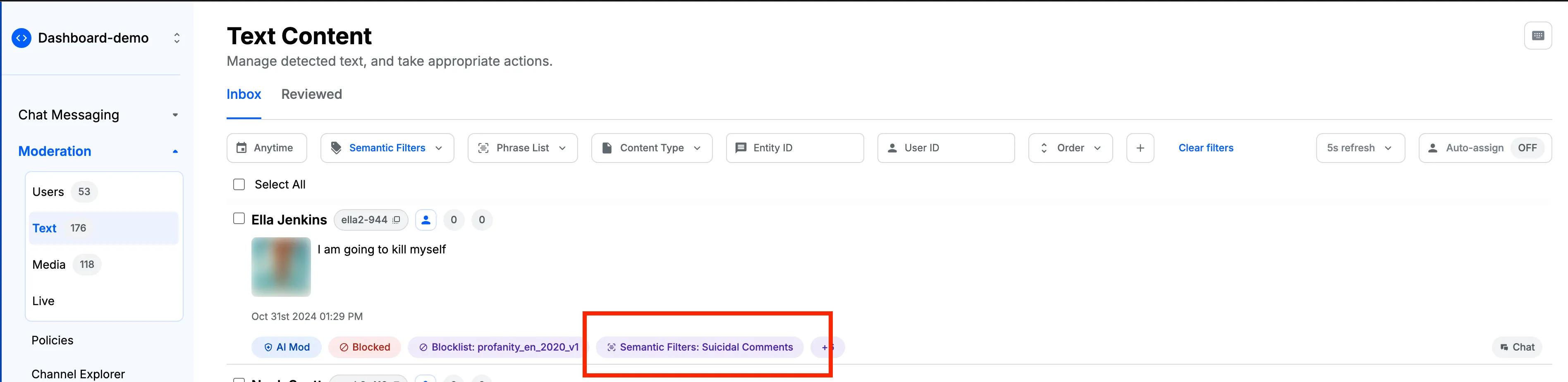

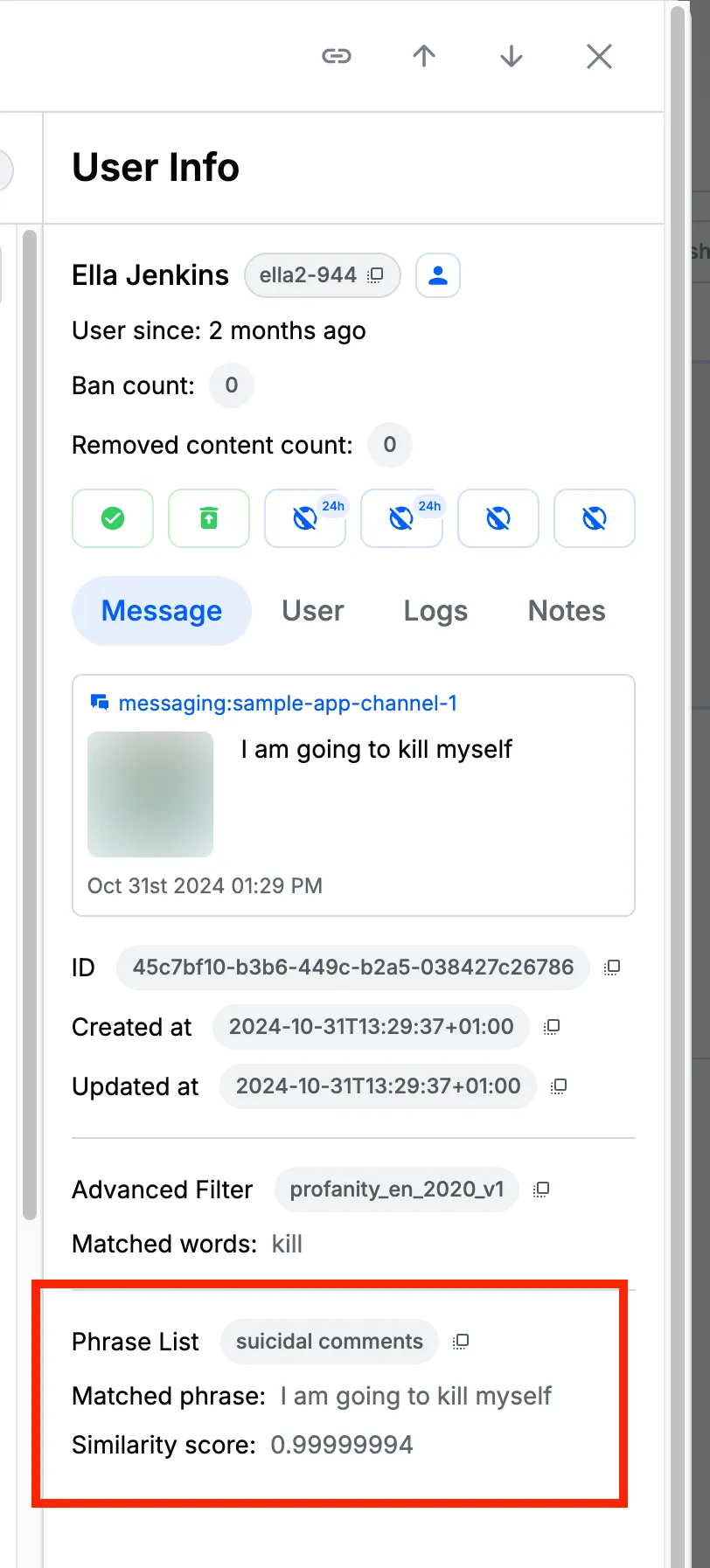

- Matching Phrase: Each time content is flagged by Semantic Filters, you can check which phrase list and which phrase matched the detected text content, along with the similarity score for this top matching phrase. If phrases are overmatching incoming text content, this information helps moderators adjust them.

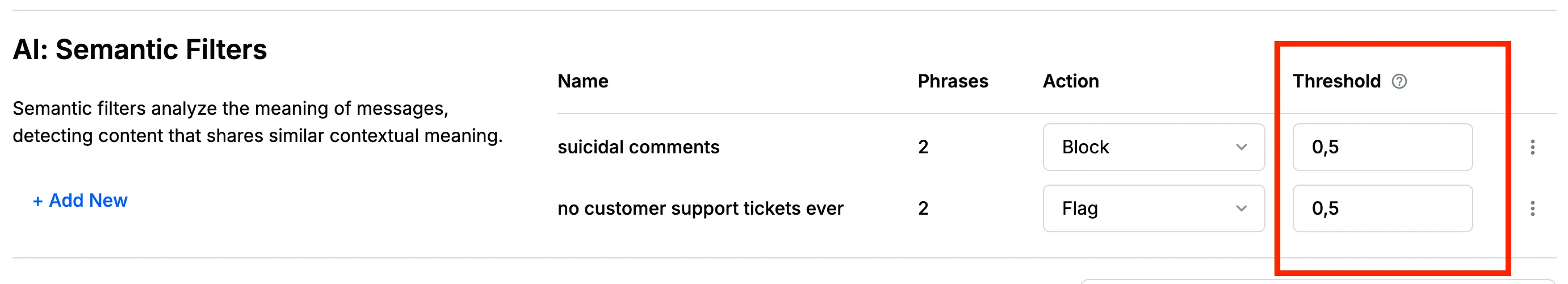

- Similarity Score: For every phrase list within a policy, moderators can set an action as well as a similarity threshold. This threshold determines how similar the detected text content needs to be to the phrase list content in order to trigger the action.

- The action should reflect your strategy. If the harm you intend to detect with this phrase list is severe and follows a clear pattern that the phrase list already captures, consider a stricter action such as Block or Bounce. If the phrase list is still new and experimental, or the target harm is less critical, a more relaxed action such as Flag may be appropriate.

- The threshold is another very important parameter: the higher the threshold, the lower the chance of a match with incoming text content. By default, the value is set to 0.7 (similarity ranges from 0 to 1). If you want to require closer similarity between detected text content and phrase list content, raise the threshold.

- Semantic Filter Tester: To find the best phrase list setup for detecting a certain type of harm, moderators can use the Semantic Filter Tester to manually test a phrase list against the different kinds of text content they want to target.

- The tester returns the matching score, which also helps with setting the similarity threshold, since you develop a better understanding of the scores as you use the tester.

- As of now, the tester is available as a REST API; in the future, it will also be part of the Moderation dashboard. See: Testing Phrase-lists with different input messages using Moderation API
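The matching phrase, similarity score, and threshold described above fit together roughly as follows. This is a minimal sketch under stated assumptions: `evaluate` is a hypothetical helper, the scores are made up, and treating a score equal to the threshold as a match is an assumption the source does not confirm. Only the 0.7 default comes from this page.

```python
def evaluate(scores, threshold=0.7, action="Flag"):
    """Pick the top matching phrase and apply the threshold.

    scores maps each phrase in a phrase list to its similarity score
    (0 to 1) against one incoming message. Returns a dict describing the
    match, or None when the best score is below the threshold.
    """
    if not scores:
        return None
    phrase, score = max(scores.items(), key=lambda item: item[1])
    if score >= threshold:  # assumption: equality counts as a match
        return {"action": action, "matching_phrase": phrase, "similarity": score}
    return None


# Made-up scores for one incoming message (illustration only).
scores = {"Make money fast!": 0.74, "Get rich quick schemes.": 0.61}

print(evaluate(scores))                 # matches at the default threshold
print(evaluate(scores, threshold=0.8))  # a stricter threshold rejects it
```

Running the same message against several thresholds in this way mirrors what the tester is for: seeing how scores behave before committing to a threshold and an action.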

Conclusion

Leverage semantic filtering with AutoMod to craft powerful custom filters based on intent and meaning. By following these user-centric best practices, you can actively shape a respectful and secure text content environment for your community. Feel free to reach out to our support team for any assistance or questions regarding configuring and optimizing semantic filters.