// Create a global rule (applies to all configurations)

await client.moderation.upsertModerationRule({

name: "Spam Detection",

description: "Detects and bans users for spam behavior",

team: "moderation",

config_keys: [], // Empty array makes it global

id: "spam-detection",

rule_type: "user",

enabled: true,

cooldown_period: "24h",

conditions: [

{

type: "text_rule",

text_rule_params: {

threshold: 5,

time_window: "1h",

llm_harm_labels: {

SCAM: "Fraudulent content, phishing attempts, or deceptive practices",

PLATFORM_BYPASS:

"Content that attempts to circumvent platform moderation systems",

},

},

},

],

logic: "AND",

action: {

type: "ban_user",

ban_options: {

duration: 3600,

reason: "Spam behavior detected",

shadow_ban: false,

ip_ban: false,

},

},

});

// Create a config-specific rule (applies only to specific configurations)

await client.moderation.upsertModerationRule({

name: "Chat Specific Rule",

description: "Rule that only applies to chat channels",

team: "moderation",

config_keys: ["chat:messaging", "chat:support"],

id: "chat-toxicity",

rule_type: "user",

enabled: true,

cooldown_period: "12h",

conditions: [

{

type: "text_rule",

text_rule_params: {

threshold: 3,

time_window: "1h",

llm_harm_labels: {

HATE_SPEECH:

"Content that promotes hatred, discrimination, or violence against groups",

HARASSMENT: "Unwanted behavior intended to disturb or upset",

},

},

},

],

logic: "AND",

action: {

type: "flag_user",

flag_user_options: {

reason: "Toxic behavior in chat channels",

},

},

});

Rule Builder

The Rule Builder is a powerful moderation feature that automatically takes action against users based on their behavior patterns. Instead of moderating each message individually, the Rule Builder tracks user violations over time and triggers actions when users reach certain thresholds.

The Rule Builder is part of the AI Moderation offering. Contact support to enable this feature.

How It Works

The Rule Builder monitors user behavior across all your moderation tools and automatically responds when users violate your community guidelines repeatedly. For example:

- Spam Detection: Ban users who send 5+ spam messages within 1 hour

- Toxic Behavior: Flag users who post 3+ hate speech messages within 24 hours

- New User Abuse: Shadow ban new accounts that violate rules within their first day

- Underage Users: Ban underage users who are involved in illegal activities

- Content Frequency: Flag users who post 50+ messages within 1 hour

Key Benefits

- Automated Response: No manual intervention needed for common violation patterns

- Flexible Rules: Create custom rules that match your community's specific needs

- Time-Based Tracking: Consider user behavior over time, not just individual messages

- Multiple Actions: Choose from ban, flag, shadow ban, or other actions

- Real-Time Processing: Rules are evaluated immediately as users post content

Creating Your First Rule

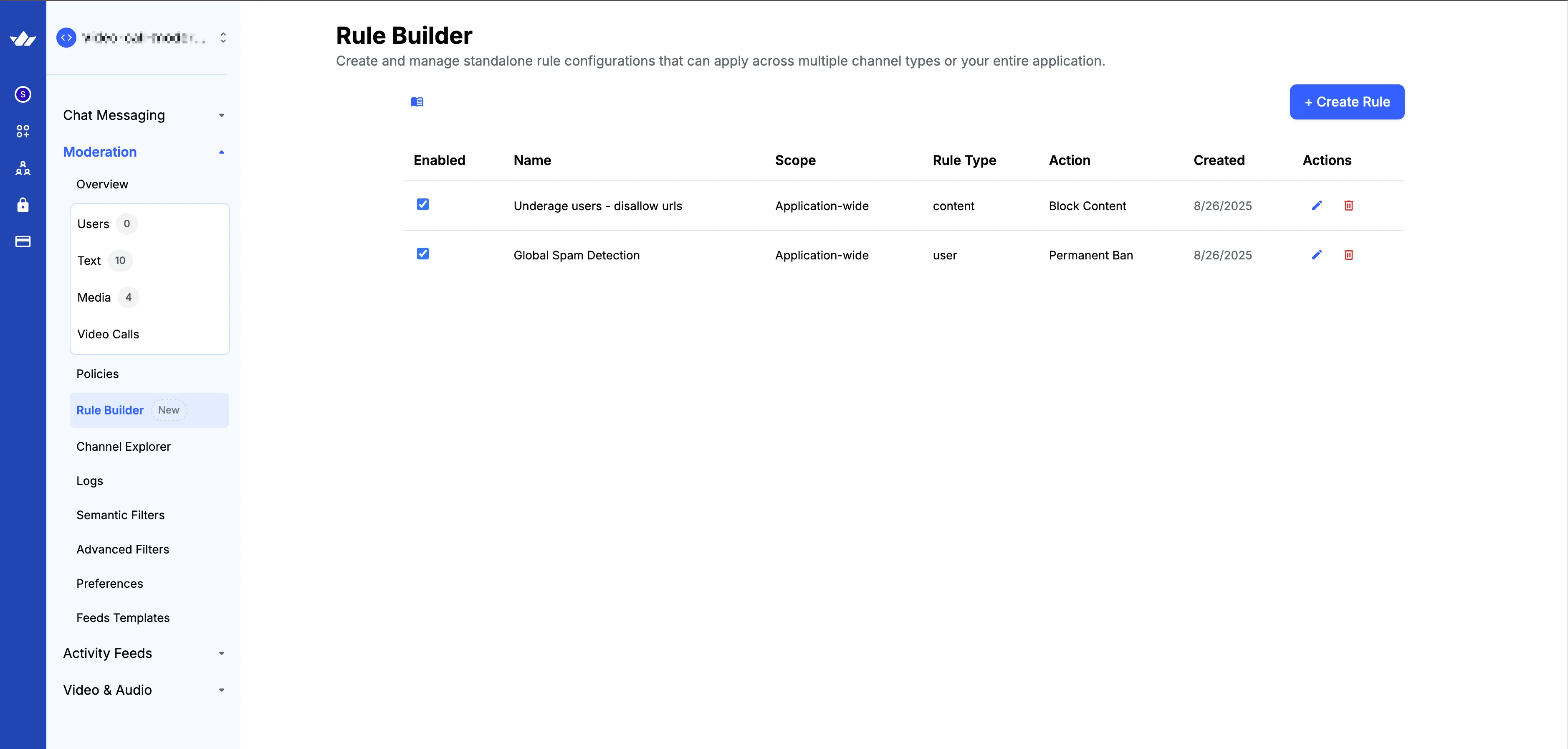

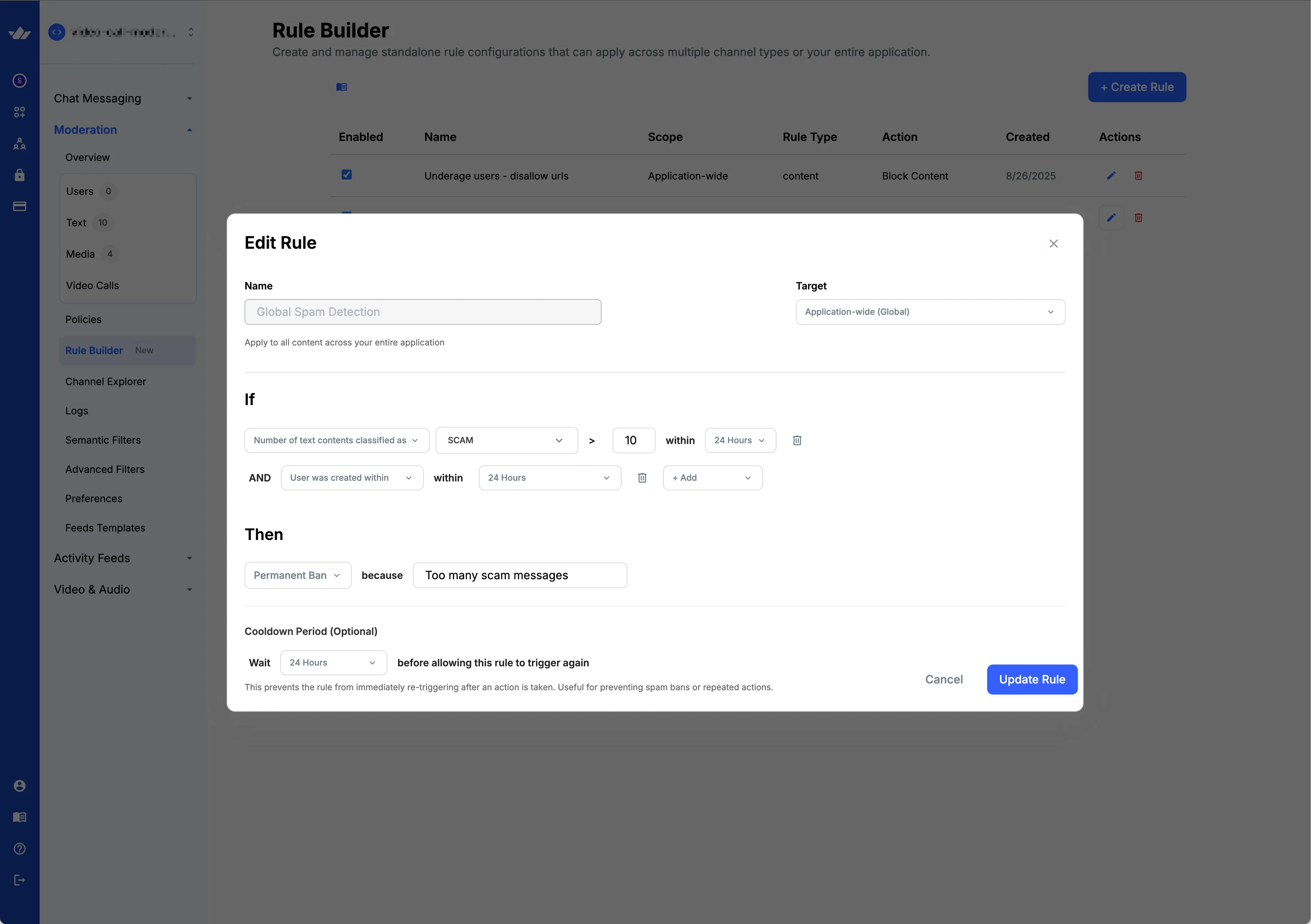

Dashboard

You can create rules in the dashboard by navigating to Moderation > Policies > [Select a policy] > Rule Builder.

To create a new rule, click the "Create Rule" button.

You can set up either a user-type rule or a content-type rule. User-type rules act on a user based on their behavior over time, while content-type rules act on an individual piece of content.

For example, to ban a user who is spamming, create a user-type rule. To block a single piece of content that violates your guidelines, create a content-type rule.

API

You can create rules using the UpsertModerationRule API.

Querying Rules

You can query existing rules using the QueryModerationRules API.

// Query all rules

const allRules = await client.moderation.queryModerationRules({

filter: {},

sort: [{ field: "created_at", direction: -1 }],

limit: 25,

});

// Query rules by name

const spamRules = await client.moderation.queryModerationRules({

filter: {

name: { $autocomplete: "spam" },

},

});

// Query global rules only

const globalRules = await client.moderation.queryModerationRules({

filter: {

is_global: true,

},

});

// Query config-specific rules

const configRules = await client.moderation.queryModerationRules({

filter: {

config_keys: { $in: ["chat:messaging"] },

},

});

Managing Rules

// Get a specific rule by ID

const rule = await client.moderation.getModerationRule("rule-id-here");

// Update an existing rule (use same upsertModerationRule API)

await client.moderation.upsertModerationRule({

name: "Updated Spam Detection",

description: "Updated spam detection with new thresholds",

team: "moderation",

config_keys: [],

id: "spam-detection",

rule_type: "user",

enabled: true,

cooldown_period: "24h",

// ... updated rule definition

});

// Delete a rule

await client.moderation.deleteModerationRule("rule-id-here");

Global vs Config-Specific Rules

Rules can be scoped to apply either globally across your entire application or only to specific moderation configurations.

Global Rules

Global rules apply to all the content in your application, as long as it reaches the moderation system.

{

"name": "Global Spam Detection",

"description": "Applies to all configurations",

"team": "moderation",

"config_keys": [], // Empty array = global rule

"id": "global-spam-detection",

"rule_type": "user",

"enabled": true,

"cooldown_period": "24h",

"conditions": [

// ... conditions

],

"logic": "AND",

"action": {

// ... action definition

}

}

Config-Specific Rules

Config-specific rules only apply to the moderation configurations you specify. List the configuration keys in the config_keys array.

For example, if a rule should only apply to chat, set config_keys to ["chat:messaging", "chat:support"]. Violations are then counted only for messages sent in those channel types.

{

"name": "Chat-Only Rule",

"description": "Only applies to chat configurations",

"team": "moderation",

"config_keys": ["chat:messaging", "chat:support"], // Specific configs

"id": "chat-only-rule",

"rule_type": "user",

"enabled": true,

"cooldown_period": "12h",

"conditions": [

// ... conditions

],

"logic": "AND",

"action": {

// ... action definition

}

}

Use Cases:

- Global Rules: Account-level violations, severe content policies, cross-platform spam detection

- Config-Specific Rules: Channel-specific rules, different content standards per product area
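The scoping behavior described in this section can be sketched as a small predicate. This is only an illustration of the documented semantics (an empty config_keys array means global), not the service's implementation; `ruleAppliesTo` and the `chat:livestream` key are hypothetical names used for the example.

```javascript
// Hypothetical helper: decides whether a rule is in scope for content
// that was moderated under a given configuration key.
function ruleAppliesTo(rule, configKey) {
  const keys = rule.config_keys ?? [];
  // An empty config_keys array marks the rule as global.
  if (keys.length === 0) return true;
  return keys.includes(configKey);
}

const globalRule = { id: "global-spam-detection", config_keys: [] };
const chatRule = {
  id: "chat-only-rule",
  config_keys: ["chat:messaging", "chat:support"],
};

console.log(ruleAppliesTo(globalRule, "chat:livestream")); // true
console.log(ruleAppliesTo(chatRule, "chat:messaging")); // true
console.log(ruleAppliesTo(chatRule, "chat:livestream")); // false
```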

Basic Rule Structure

Every rule has three main parts:

- Conditions: What behavior to watch for

- Threshold: How many violations before taking action

- Action: What to do when the threshold is reached

Example: Complete Rule Structure

{

"name": "Spam Detection",

"description": "Detects and bans users for spam behavior",

"team": "moderation",

"config_keys": ["chat:messaging", "chat:support"],

"id": "spam-detection",

"rule_type": "user",

"enabled": true,

"cooldown_period": "24h",

"conditions": [

{

"type": "text_rule",

"text_rule_params": {

"threshold": 5,

"time_window": "1h",

"llm_harm_labels": {

"SCAM": "Fraudulent content, phishing attempts, or deceptive practices",

"PLATFORM_BYPASS": "Content that attempts to circumvent platform moderation systems"

}

}

},

{

"type": "content_count_rule",

"content_count_rule_params": {

"threshold": 50,

"time_window": "1h"

}

}

],

"logic": "OR",

"action": {

"type": "ban_user",

"ban_options": {

"duration": 3600,

"reason": "Spam behavior detected",

"shadow_ban": false,

"ip_ban": false

}

}

}

This rule:

- Watches for scam and platform-bypass content

- Triggers when a user posts 5+ scam or platform-bypass messages within 1 hour, or 50+ messages of any kind within 1 hour

- Bans the user for 1 hour when triggered
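To make the threshold and time window concrete, here is a minimal sketch of how the two conditions of this rule could be evaluated for one user. The timestamps are invented, `countInWindow` is a hypothetical helper, and treating the window as a sliding interval ending at the current time is an assumption; the service may count differently.

```javascript
// Illustrative only: counts events inside a window that ends at `nowMs`.
function countInWindow(timestampsMs, nowMs, windowMs) {
  return timestampsMs.filter((t) => t > nowMs - windowMs && t <= nowMs).length;
}

const HOUR_MS = 60 * 60 * 1000;
const now = Date.UTC(2024, 0, 1, 12, 0, 0);

// Hypothetical activity: six flagged messages, sent 90, 50, 40, 30, 20,
// and 10 minutes ago. The user posted nothing else.
const flagged = [90, 50, 40, 30, 20, 10].map((minAgo) => now - minAgo * 60 * 1000);
const allMessages = flagged;

// text_rule: threshold 5 within "1h"
const textRuleMet = countInWindow(flagged, now, HOUR_MS) >= 5;
// content_count_rule: threshold 50 within "1h"
const countRuleMet = countInWindow(allMessages, now, HOUR_MS) >= 50;

// "logic": "OR" means either condition is enough to trigger the action.
const ruleTriggers = textRuleMet || countRuleMet;
console.log({ textRuleMet, countRuleMet, ruleTriggers });
```

Only five of the six flagged messages fall inside the last hour, which is exactly enough to meet the first condition; the message count stays far below 50.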

User-Type Rules (Track User Behavior Over Time)

User-type rules track violations across multiple pieces of content and trigger actions when users reach certain thresholds over time.

Text-Based Rules

Track violations in text content like messages, comments, or posts over time.

LLM Harm Labels (Configurable):

When LLM configurability is enabled, you can use detailed harm labels with custom descriptions:

- Harassment: SEXUAL_HARASSMENT, HARASSMENT, BULLYING

- Hate Speech: HATE_SPEECH, RACISM, HOMOPHOBIA, MISOGYNY

- Threats: THREAT, TERRORISM, SELF_HARM

- Inappropriate Content: SEXUALLY_EXPLICIT, DRUG_EXPLICIT, WEAPON_EXPLICIT

- Spam: SCAM, PLATFORM_BYPASS, SPAM

Legacy Harm Labels:

For the AI Text (NLP) engine, you can still use simple string arrays:

["HATE_SPEECH", "THREAT", "SPAM"]

Example with LLM Labels:

{

"type": "text_rule",

"text_rule_params": {

"threshold": 3,

"time_window": "24h",

"llm_harm_labels": {

"HATE_SPEECH": "Content that promotes hatred, discrimination, or violence against groups",

"THREAT": "Content containing threats of violence or harm against individuals or groups"

},

"severity": "HIGH"

}

}

Example with Legacy Labels:

{

"type": "text_rule",

"text_rule_params": {

"threshold": 3,

"time_window": "24h",

"harm_labels": ["HATE_SPEECH", "THREAT"],

"severity": "HIGH"

}

}

This rule tracks how many hate speech or threat messages a user posts within 24 hours and triggers when they reach 3 violations.

Semantic Filters:

You can also use Semantic Filters to track violations based on custom filters that match content by meaning rather than exact text. Semantic filters are useful for detecting variations of phrases or concepts that convey the same meaning.

Example with Semantic Filters:

{

"type": "text_rule",

"text_rule_params": {

"threshold": 3,

"time_window": "24h",

"semantic_filter_names": ["spam_filter", "profanity_filter"],

"semantic_filter_min_threshold": 0.8

}

}

This rule tracks how many messages match your custom semantic filters (spam_filter or profanity_filter) with a similarity score of at least 0.8 within 24 hours and triggers when they reach 3 violations.

Parameters:

- semantic_filter_names: Array of semantic filter names to check (e.g., ["spam_filter", "profanity_filter"])

- semantic_filter_min_threshold: Minimum similarity score required (0.0-1.0). Higher values require closer matches to your filter examples.
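The interaction between the filter names and the minimum score can be sketched as follows. The shape of a match record (`filter`, `score`) and the helper `countSemanticViolations` are assumptions made for this example, and the time window is left out to keep the sketch short.

```javascript
// Hypothetical shape: each match records which semantic filter matched a
// message and the similarity score that was computed for it.
function countSemanticViolations(matches, params) {
  const names = new Set(params.semantic_filter_names);
  return matches.filter(
    (m) => names.has(m.filter) && m.score >= params.semantic_filter_min_threshold
  ).length;
}

const params = {
  threshold: 3,
  semantic_filter_names: ["spam_filter", "profanity_filter"],
  semantic_filter_min_threshold: 0.8,
};

const matches = [
  { filter: "spam_filter", score: 0.92 },
  { filter: "spam_filter", score: 0.75 }, // below the minimum score
  { filter: "profanity_filter", score: 0.8 }, // exactly at the minimum
  { filter: "other_filter", score: 0.99 }, // filter not listed in the rule
  { filter: "profanity_filter", score: 0.85 },
];

const violations = countSemanticViolations(matches, params);
console.log(violations, violations >= params.threshold); // 3 true
```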

Image-Based Rules

Track violations in uploaded images over time.

Available Labels:

- Explicit Content: Explicit, Non-Explicit Nudity

- Violence: Violence, Visually Disturbing

- Inappropriate: Drugs & Tobacco, Alcohol, Rude Gestures

- Hate Symbols: Hate Symbols

Example:

{

"type": "image_rule",

"image_rule_params": {

"threshold": 1,

"time_window": "24h",

"harm_labels": ["Explicit", "Violence"]

}

}

This rule tracks how many explicit or violent images a user uploads within 24 hours and triggers when they reach 1 violation.

User-Based Rules

Check user account properties.

Example:

{

"type": "user_created_within",

"user_created_within_params": {

"time_window": "24h"

}

}

This condition is true for users who created their account within the last 24 hours.
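The account-age check can be pictured as a simple date comparison. The sketch below is illustrative; `isUserCreatedWithin` is a hypothetical helper, and the inclusive boundary is an assumption rather than documented behavior.

```javascript
// Illustrative sketch: true when the account age is within the window.
// `createdAt` is an ISO 8601 timestamp; `windowMs` is the window in milliseconds.
function isUserCreatedWithin(createdAt, windowMs, now = new Date()) {
  const ageMs = now.getTime() - new Date(createdAt).getTime();
  // Assumption: the boundary is inclusive and future timestamps never match.
  return ageMs >= 0 && ageMs <= windowMs;
}

const DAY_MS = 24 * 60 * 60 * 1000;
const checkTime = new Date("2024-06-02T12:00:00Z");

// Account created 4 hours before the check
console.log(isUserCreatedWithin("2024-06-02T08:00:00Z", DAY_MS, checkTime)); // true
// Account created more than 3 days before the check
console.log(isUserCreatedWithin("2024-05-30T08:00:00Z", DAY_MS, checkTime)); // false
```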

User Custom Property Rules

Check custom properties stored on user accounts.

Example:

{

"type": "user_custom_property",

"user_custom_property_params": {

"property_name": "trust_score",

"property_value": "low",

"operator": "equals"

}

}

This condition checks if a user has a custom property called "trust_score" with a value of "low".

Available Operators:

- "equals": Property value must exactly match the specified value

- "not_equals": Property value must not match the specified value

- "contains": Property value must contain the specified value (for string properties)

- "greater_than": Property value must be greater than the specified value (for numeric properties)

- "less_than": Property value must be less than the specified value (for numeric properties)

Use Cases:

- Filter based on user reputation scores

- Apply different rules for different user tiers

- Check user verification status

- Apply rules based on user preferences or settings
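The five operators can be sketched as a single switch. This is a minimal illustration, not the service's evaluator: the `user.custom` shape, the helper name `evaluateCustomProperty`, the string and number coercions, and the choice to return false when the property is missing are all assumptions.

```javascript
// Hypothetical evaluator for a user_custom_property condition.
function evaluateCustomProperty(user, params) {
  const actual = user.custom?.[params.property_name];
  // Assumption: a missing property never satisfies the condition.
  if (actual === undefined) return false;
  const expected = params.property_value;
  switch (params.operator) {
    case "equals":
      return String(actual) === String(expected);
    case "not_equals":
      return String(actual) !== String(expected);
    case "contains":
      return String(actual).includes(String(expected));
    case "greater_than":
      return Number(actual) > Number(expected);
    case "less_than":
      return Number(actual) < Number(expected);
    default:
      throw new Error(`Unknown operator: ${params.operator}`);
  }
}

const user = { id: "user-1", custom: { trust_score: "low", reputation: 12 } };

console.log(
  evaluateCustomProperty(user, {
    property_name: "trust_score",
    property_value: "low",
    operator: "equals",
  })
); // true
console.log(
  evaluateCustomProperty(user, {
    property_name: "reputation",
    property_value: "50",
    operator: "less_than",
  })
); // true
```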

Content Count Rules

Track how many messages a user posts over time.

Example:

{

"type": "content_count_rule",

"content_count_rule_params": {

"threshold": 50,

"time_window": "1h"

}

}

This triggers when a user posts 50+ messages within 1 hour.

Identical Content Count Rules

Track when a user sends the same content multiple times. This is useful for detecting spam bots or users who repeatedly post identical messages.

Example:

{

"type": "user_identical_content_count",

"user_identical_content_count_params": {

"threshold": 3,

"time_window": "1h"

}

}

This triggers when a user sends the same content (text + attachments) 3 or more times within 1 hour.

Important Notes:

- Time window: Supports "30m" (30 minutes) or "1h" (1 hour) time windows

- Very short messages are automatically filtered out to reduce false positives

- Content matching includes both text and attachments (images/videos)

- Intelligent content detection identifies identical messages even with minor variations

Use Cases:

- Detect spam bots posting the same promotional message repeatedly

- Identify users who copy-paste the same content across multiple channels

- Catch automated accounts that send identical messages
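One way to picture identical-content counting is to reduce each message to a comparison key and count repeated keys inside the window. The sketch below is an assumption-heavy illustration: the normalization (trimming, lowercasing, collapsing whitespace), the minimum text length of 5, and the helper names are all invented here, and the actual matching logic used by the service is not documented in this guide.

```javascript
// Hypothetical key: normalized text plus the sorted attachment URLs.
function contentKey(message) {
  const text = message.text.trim().toLowerCase().replace(/\s+/g, " ");
  const attachments = [...(message.attachments ?? [])].sort().join("|");
  return `${text}::${attachments}`;
}

// Returns the highest number of identical messages found inside the window.
function maxIdenticalCount(messages, nowMs, windowMs, minTextLength = 5) {
  const counts = new Map();
  for (const m of messages) {
    if (m.sentAtMs <= nowMs - windowMs || m.sentAtMs > nowMs) continue;
    // Assumption: very short, attachment-free messages are ignored.
    if (m.text.trim().length < minTextLength && !m.attachments?.length) continue;
    const key = contentKey(m);
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  return Math.max(0, ...counts.values());
}

const HOUR = 60 * 60 * 1000;
const nowMs = 10 * HOUR;
const minutesAgo = (n) => nowMs - n * 60 * 1000;

const history = [
  { text: "Buy cheap coins now", sentAtMs: minutesAgo(50) },
  { text: "  buy cheap   COINS now ", sentAtMs: minutesAgo(30) },
  { text: "Buy cheap coins now", sentAtMs: minutesAgo(5) },
  { text: "Buy cheap coins now", sentAtMs: minutesAgo(120) }, // outside the window
  { text: "ok", sentAtMs: minutesAgo(4) }, // too short, ignored
  { text: "ok", sentAtMs: minutesAgo(3) },
  { text: "ok", sentAtMs: minutesAgo(2) },
];

const repeats = maxIdenticalCount(history, nowMs, HOUR);
console.log(repeats, repeats >= 3); // 3 true
```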

Available Actions

These actions affect the user account and are typically used with user-type rules that track behavior over time.

Ban User

Temporarily or permanently ban a user from your platform.

{

"type": "ban_user",

"ban_options": {

"duration": 86400,

"reason": "Multiple violations detected",

"shadow_ban": false,

"ip_ban": false

}

}

Options:

- duration: Ban length in seconds (0 = permanent)

- reason: Reason shown to moderators

- shadow_ban: User can post but content is hidden

- ip_ban: Also ban the user's IP address
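Because duration is expressed in seconds, raw numbers such as 604800 are easy to mistype. A small builder with named constants can help; `banAction` is a hypothetical convenience function for this guide, not part of the SDK.

```javascript
const HOUR_SECONDS = 60 * 60;
const DAY_SECONDS = 24 * HOUR_SECONDS;

// Hypothetical helper that builds a ban_user action payload.
function banAction(durationSeconds, reason, { shadowBan = false, ipBan = false } = {}) {
  if (!Number.isInteger(durationSeconds) || durationSeconds < 0) {
    throw new RangeError("duration must be a non-negative whole number of seconds");
  }
  return {
    type: "ban_user",
    ban_options: {
      duration: durationSeconds, // 0 = permanent
      reason,
      shadow_ban: shadowBan,
      ip_ban: ipBan,
    },
  };
}

const oneWeekBan = banAction(7 * DAY_SECONDS, "Toxic behavior detected", { ipBan: true });
const permanentBan = banAction(0, "Severe content violation");

console.log(oneWeekBan.ban_options.duration); // 604800
console.log(JSON.stringify(permanentBan, null, 2));
```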

Flag User

Create a review item for manual moderator review of the user.

{

"type": "flag_user",

"flag_user_options": {

"reason": "Suspicious behavior pattern detected"

}

}

Examples

Example 1: Toxic User Detection

This rule identifies users who consistently engage in harmful behavior over time, targeting both hate speech and excessive profanity.

{

"name": "Toxic User Detection",

"description": "Bans users who consistently post harmful content",

"team": "moderation",

"config_keys": [],

"id": "toxic-user",

"rule_type": "user",

"enabled": true,

"cooldown_period": "7d",

"logic": "OR",

"conditions": [

{

"type": "text_rule",

"text_rule_params": {

"threshold": 5,

"time_window": "24h",

"llm_harm_labels": {

"HATE_SPEECH": "Content that promotes hatred, discrimination, or violence against groups",

"HARASSMENT": "Unwanted behavior intended to disturb or upset",

"THREAT": "Content containing threats of violence or harm against individuals or groups"

}

}

},

{

"type": "text_rule",

"text_rule_params": {

"threshold": 50,

"time_window": "24h",

"blocklist_match": ["profanity_en_2020_v1"]

}

}

],

"action": {

"type": "ban_user",

"ban_options": {

"duration": 604800,

"reason": "Toxic behavior detected",

"shadow_ban": false,

"ip_ban": true

}

}

}

What it does:

- First condition: Triggers if a user posts 5 or more messages containing hate speech, harassment, or threats within 24 hours

- Second condition: Triggers if a user posts 50 or more messages that match the profanity blocklist within 24 hours

- Logic: Uses "OR" logic, meaning either condition can trigger the rule

- Action: Bans the user for 7 days (604,800 seconds) and also bans their IP address to prevent them from creating new accounts

When to use this rule:

- Communities with strict content policies

- Platforms that need to quickly remove toxic users

- Situations where you want to prevent users from circumventing bans with new accounts
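The difference between "OR" and "AND" logic comes down to how the per-condition results are combined. A minimal sketch, with `combineConditions` as a hypothetical helper and made-up counts for one user:

```javascript
// Combines per-condition results according to the rule's "logic" field.
function combineConditions(logic, results) {
  if (logic === "AND") return results.every(Boolean);
  if (logic === "OR") return results.some(Boolean);
  throw new Error(`Unknown logic: ${logic}`);
}

// Hypothetical counts for one user over the last 24 hours.
const harmfulMessages = 2; // threshold in the example rule: 5
const profanityMatches = 60; // threshold in the example rule: 50

const results = [harmfulMessages >= 5, profanityMatches >= 50];

console.log(combineConditions("OR", results)); // true: the second condition is met
console.log(combineConditions("AND", results)); // false: the first condition is not met
```

With "OR", this user would be banned on the profanity condition alone; the same rule with "AND" would not trigger.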

Example 2: New User Spam Protection

This rule specifically targets spam from newly created accounts, which are often used by bots or malicious users.

{

"name": "New User Spam Protection",

"description": "Shadow bans new users who post spam content",

"team": "moderation",

"config_keys": ["chat:messaging"],

"id": "new-user-spam",

"rule_type": "user",

"enabled": true,

"cooldown_period": "6h",

"logic": "AND",

"conditions": [

{

"type": "text_rule",

"text_rule_params": {

"threshold": 3,

"time_window": "1h",

"llm_harm_labels": {

"SPAM": "Unsolicited promotional content or repetitive messages",

"SCAM": "Fraudulent content, phishing attempts, or deceptive practices"

}

}

},

{

"type": "user_created_within",

"user_created_within_params": {

"time_window": "24h"

}

}

],

"action": {

"type": "ban_user",

"ban_options": {

"duration": 3600,

"reason": "New user spam detected",

"shadow_ban": true,

"ip_ban": false

}

}

}

What it does:

- First condition: Triggers if a user posts 3 or more spam or scam messages within 1 hour

- Second condition: Only applies to users whose accounts are less than 24 hours old

- Logic: Uses "AND" logic, meaning both conditions must be true for the rule to trigger

- Action: Shadow bans the user for 1 hour (3,600 seconds), meaning they can still post but their content is hidden from other users

When to use this rule:

- Platforms with high bot activity

- Communities that want to give new users a chance but prevent immediate spam

- Situations where you want to test if a user is legitimate before fully banning them

Example 3: Message Frequency Abuse

This rule catches users who are posting too many messages too quickly, especially when combined with spam content.

{

"id": "message-flood",

"name": "Message Frequency Abuse",

"rule_type": "user",

"enabled": true,

"cooldown_period": "12h",

"logic": "AND",

"conditions": [

{

"type": "content_count_rule",

"content_count_rule_params": {

"threshold": 50,

"time_window": "1h"

}

},

{

"type": "text_rule",

"text_rule_params": {

"threshold": 5,

"time_window": "1h",

"harm_labels": ["SCAM", "PLATFORM_BYPASS"],

"contains_url": true

}

}

],

"action": {

"type": "flag_user",

"flag_user_options": {

"reason": "Excessive messaging with spam content"

}

}

}

What it does:

- First condition: Triggers if a user posts 50 or more messages within 1 hour (regardless of content)

- Second condition: Triggers if a user posts 5 or more messages within 1 hour that contain a URL and are classified as scam or platform-bypass content

- Logic: Uses "AND" logic, meaning both conditions must be true for the rule to trigger

- Action: Flags the user for manual review by moderators instead of automatically banning them

When to use this rule:

- Communities where legitimate users might post frequently (like gaming chats)

- Situations where you want human oversight before taking action

- Platforms that want to distinguish between active users and spam bots

Example 4: Severe User Violation Pattern

This rule provides user-level action for severe violations tracked over time.

{

"id": "severe-user-violation",

"name": "Severe User Violation",

"rule_type": "user",

"enabled": true,

"cooldown_period": "30d",

"logic": "OR",

"conditions": [

{

"type": "text_rule",

"text_rule_params": {

"threshold": 1,

"time_window": "24h",

"harm_labels": ["TERRORISM"]

}

},

{

"type": "image_rule",

"image_rule_params": {

"threshold": 1,

"time_window": "24h",

"harm_labels": ["Violence", "Hate Symbols"]

}

}

],

"action": {

"type": "ban_user",

"ban_options": {

"duration": 0,

"reason": "Severe content violation",

"shadow_ban": false,

"ip_ban": true

}

}

}

What it does:

- First condition: Triggers if a user posts even 1 message containing terrorism-related content within 24 hours

- Second condition: Triggers if a user uploads even 1 image containing violence or hate symbols within 24 hours

- Logic: Uses "OR" logic, meaning either condition can trigger the rule

- Action: Permanently bans the user (a duration of 0 means permanent) and bans their IP address

When to use this rule:

- Platforms with zero-tolerance policies for certain content

- Communities that need to comply with legal requirements

- Situations where you want to permanently remove users who post severe violations

Example 5: Coordinated Behavior Detection

This rule identifies patterns that suggest coordinated abuse or bot activity.

{

"id": "coordinated-behavior",

"name": "Coordinated Behavior Detection",

"rule_type": "user",

"enabled": true,

"cooldown_period": "48h",

"logic": "AND",

"conditions": [

{

"type": "content_count_rule",

"content_count_rule_params": {

"threshold": 100,

"time_window": "24h"

}

},

{

"type": "text_rule",

"text_rule_params": {

"threshold": 10,

"time_window": "24h",

"harm_labels": ["SPAM", "ADS", "SCAM"]

}

},

{

"type": "text_rule",

"text_rule_params": {

"threshold": 5,

"time_window": "24h",

"contains_url": true

}

}

],

"action": {

"type": "flag_user",

"flag_user_options": {

"reason": "Potential coordinated spam or bot activity"

}

}

}

What it does:

- First condition: Triggers if a user posts 100 or more messages within 24 hours

- Second condition: Triggers if a user posts 10 or more spam, advertising, or scam messages within 24 hours

- Third condition: Triggers if a user posts 5 or more messages containing URLs within 24 hours

- Logic: Uses "AND" logic, meaning all three conditions must be true for the rule to trigger

- Action: Flags the user for manual review by moderators

When to use this rule:

- Platforms experiencing coordinated spam attacks

- Communities that want to identify potential bot networks

- Situations where you need to investigate before taking action

Example 6: Trust Score Based Moderation

This rule applies stricter moderation to users with low trust scores, requiring fewer violations to trigger action.

{

"id": "trust-score-moderation",

"name": "Trust Score Based Moderation",

"rule_type": "user",

"enabled": true,

"cooldown_period": "24h",

"logic": "AND",

"conditions": [

{

"type": "user_custom_property",

"user_custom_property_params": {

"property_name": "trust_score",

"property_value": "low",

"operator": "equals"

}

},

{

"type": "text_rule",

"text_rule_params": {

"threshold": 2,

"time_window": "1h",

"harm_labels": ["SPAM", "HARASSMENT"]

}

}

],

"action": {

"type": "ban_user",

"ban_options": {

"duration": 7200,

"reason": "Low trust user with violations",

"shadow_ban": true,

"ip_ban": false

}

}

}

What it does:

- First condition: Only applies to users who have a custom property "trust_score" set to "low"

- Second condition: Triggers if the user posts 2 or more spam or harassment messages within 1 hour

- Logic: Uses "AND" logic, meaning both conditions must be true for the rule to trigger

- Action: Shadow bans the user for 2 hours (7,200 seconds)

When to use this rule:

- Platforms with user reputation systems

- Communities that want to apply different standards based on user history

- Situations where new or problematic users need stricter oversight

Content-Type Rules (Evaluate Individual Content)

Content-type rules evaluate individual pieces of content and can trigger immediate actions or be combined with user-type rules.

Text Content Rules

Evaluate individual text content for immediate action.

Example with LLM Labels:

{

"type": "text_content",

"text_content_params": {

"llm_harm_labels": {

"TERRORISM": "Content related to terrorism, terrorist organizations, or terrorist activities"

},

"severity": "HIGH"

}

}

Example with Legacy Labels:

{

"type": "text_content",

"text_content_params": {

"harm_labels": ["TERRORISM"],

"severity": "HIGH"

}

}

This rule evaluates each individual text message and triggers immediately if it contains terrorism-related content.

Complete Rule Example:

{

"name": "Immediate Text Filter",

"description": "Immediately blocks threatening content",

"team": "moderation",

"config_keys": [],

"id": "immediate-text-filter",

"rule_type": "content",

"enabled": true,

"logic": "OR",

"conditions": [

{

"type": "text_content",

"text_content_params": {

"llm_harm_labels": {

"TERRORISM": "Content related to terrorism, terrorist organizations, or terrorist activities",

"THREAT": "Content containing threats of violence or harm against individuals or groups"

},

"severity": "HIGH"

}

}

],

"action": {

"type": "block_content",

"remove_content_options": {

"reason": "Immediate removal of threatening content"

}

}

}

Image Content Rules

Evaluate individual images for immediate action.

Example:

{

"type": "image_content",

"image_content_params": {

"harm_labels": ["Explicit", "Violence"]

}

}

This rule evaluates each individual image and triggers immediately if it contains explicit or violent content.

Complete Rule Example:

{

"name": "Immediate Image Filter",

"description": "Immediately flags inappropriate image content",

"team": "moderation",

"config_keys": [],

"id": "immediate-image-filter",

"rule_type": "content",

"enabled": true,

"logic": "OR",

"conditions": [

{

"type": "image_content",

"image_content_params": {

"harm_labels": ["Explicit", "Violence", "Hate Symbols"]

}

}

],

"action": {

"type": "flag_content",

"flag_content_options": {

"reason": "Inappropriate image content detected"

}

}

}

Video Content Rules

Evaluate individual videos for immediate action.

Example:

{

"type": "video_content",

"video_content_params": {

"harm_labels": ["Explicit", "Violence"]

}

}

This rule evaluates each individual video and triggers immediately if it contains explicit or violent content.

Complete Rule Example:

{

"name": "Immediate Video Filter",

"description": "Immediately blocks inappropriate video content",

"team": "moderation",

"config_keys": [],

"id": "immediate-video-filter",

"rule_type": "content",

"enabled": true,

"logic": "OR",

"conditions": [

{

"type": "video_content",

"video_content_params": {

"harm_labels": ["Explicit", "Violence"]

}

}

],

"action": {

"type": "block_content",

"remove_content_options": {

"reason": "Inappropriate video content detected"

}

}

}

Available Actions

These actions affect individual pieces of content and are typically used with content-type rules that evaluate content immediately.

Flag Content

Flag the specific content for manual review.

{

"type": "flag_content",

"flag_content_options": {

"reason": "Content violates community guidelines"

}

}

Block Content

Block the specific content from the platform.

{

"type": "block_content",

"remove_content_options": {

"reason": "Content violates community guidelines"

}

}

Examples

Example 1: Zero-Tolerance Content Filter

This rule provides immediate action for the most serious violations using content-type rules.

{

"id": "zero-tolerance-filter",

"name": "Zero-Tolerance Content Filter",

"rule_type": "content",

"enabled": true,

"logic": "OR",

"conditions": [

{

"type": "text_content",

"text_content_params": {

"harm_labels": ["TERRORISM"]

}

},

{

"type": "image_content",

"image_content_params": {

"harm_labels": ["Violence", "Hate Symbols"]

}

}

],

"action": {

"type": "block_content",

"remove_content_options": {

"reason": "Severe content violation"

}

}

}

What it does:

- First condition: Triggers immediately if the current text message contains terrorism-related content

- Second condition: Triggers immediately if the current image contains violence or hate symbols

- Logic: Uses "OR" logic, meaning either condition can trigger the rule

- Action: Immediately removes the violating content

When to use this rule:

- Platforms with zero-tolerance policies for certain content

- Communities that need immediate content filtering

- Situations where you want to remove content instantly without affecting the user account

Example 2: Multi-Content Type Filter

This rule demonstrates how to filter multiple content types using content-type rules for immediate action.

{

"id": "multi-content-filter",

"name": "Multi-Content Type Filter",

"rule_type": "content",

"enabled": true,

"logic": "OR",

"conditions": [

{

"type": "text_content",

"text_content_params": {

"harm_labels": ["SPAM", "SCAM"],

"contains_url": true

}

},

{

"type": "image_content",

"image_content_params": {

"harm_labels": ["Explicit"]

}

},

{

"type": "video_content",

"video_content_params": {

"harm_labels": ["Violence"]

}

}

],

"action": {

"type": "flag_content",

"flag_content_options": {

"reason": "Multiple content violations detected"

}

}

}

What it does:

- First condition: Triggers immediately if the current text message contains spam/scam content AND includes a URL

- Second condition: Triggers immediately if the current image contains explicit content

- Third condition: Triggers immediately if the current video contains violent content

- Logic: Uses "OR" logic, meaning any condition can trigger the rule

- Action: Flags the content for manual review by moderators

When to use this rule:

- Platforms that want to catch multiple types of violations in a single rule

- Communities that need immediate content filtering across different media types

- Situations where you want to flag content for review rather than removing it immediately
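The evaluation described for this rule (parameters inside one condition must all match, while "OR" lets any single condition trigger the rule) can be sketched as below. The `content` shape with `kind`, `labels`, and `text`, the URL regular expression, and both helper names are assumptions for illustration; `labels` stands in for whatever the moderation engines assigned to that single piece of content.

```javascript
// Hypothetical per-condition check for a content-type rule.
function conditionMatches(condition, content) {
  const params = condition[`${condition.type}_params`] ?? {};
  const kind = condition.type.replace("_content", ""); // "text", "image", or "video"
  if (content.kind !== kind) return false;
  // Parameters inside one condition are combined: every one present must match.
  if (params.harm_labels && !params.harm_labels.some((l) => content.labels.includes(l))) {
    return false;
  }
  if (params.contains_url && !/https?:\/\/\S+/i.test(content.text ?? "")) {
    return false;
  }
  return true;
}

function contentRuleTriggers(rule, content) {
  const results = rule.conditions.map((c) => conditionMatches(c, content));
  return rule.logic === "AND" ? results.every(Boolean) : results.some(Boolean);
}

const multiContentRule = {
  logic: "OR",
  conditions: [
    { type: "text_content", text_content_params: { harm_labels: ["SPAM", "SCAM"], contains_url: true } },
    { type: "image_content", image_content_params: { harm_labels: ["Explicit"] } },
    { type: "video_content", video_content_params: { harm_labels: ["Violence"] } },
  ],
};

const spamWithLink = { kind: "text", labels: ["SPAM"], text: "Win big at https://example.com" };
const spamNoLink = { kind: "text", labels: ["SPAM"], text: "Win big, message me" };

console.log(contentRuleTriggers(multiContentRule, spamWithLink)); // true
console.log(contentRuleTriggers(multiContentRule, spamNoLink)); // false
```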

Example 3: Spam Link Detection

This rule catches spam and phishing attempts by detecting suspicious links in messages.

{

"id": "spam-link-detection",

"name": "Spam Link Detection",

"rule_type": "content",

"enabled": true,

"logic": "AND",

"conditions": [

{

"type": "text_content",

"text_content_params": {

"contains_url": true

}

},

{

"type": "text_content",

"text_content_params": {

"blocklist_match": ["phishing_2023", "malware_links"]

}

}

],

"action": {

"type": "block_content",

"remove_content_options": {

"reason": "Suspicious URL detected"

}

}

}

What it does:

- First condition: Triggers if the current message contains a link

- Second condition: Triggers if the current message matches the phishing_2023 or malware_links blocklist

- Logic: Uses "AND" logic, meaning both conditions must be true for the rule to trigger

- Action: Immediately removes messages with suspicious links

When to use this rule:

- Platforms with high spam activity

- Communities that want to protect users from malicious links

- Situations where you want to automatically remove suspicious content

Call Moderation

For real-time video and audio call moderation, see the Call Moderation documentation.

Key Differences

| Aspect | User-Type Rules | Content-Type Rules |

|---|---|---|

| Evaluation Timing | Track over time, trigger when threshold reached | Evaluate immediately per content piece |

| Threshold | Required (e.g., 3 violations in 24h) | Not applicable (immediate evaluation) |

| Time Window | Required (e.g., "24h", "7d") | Not applicable |

| Use Case | Pattern detection, repeated violations | Immediate content filtering |

| Actions | User actions (ban_user, flag_user) | Content actions (flag_content, block_content) |

Action Selection Guidelines

- User-Type Rules: Use user actions (ban_user, flag_user) when you want to take action against the user account based on their behavior pattern

- Content-Type Rules: Use content actions (flag_content, block_content) when you want to take action against specific content pieces

- Call-Type Rules: See Call Moderation for call-specific actions and escalation

- Mixed Rules: You can use any action type, but consider whether you want to affect the user or just the content
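These guidelines can be turned into a small pre-flight check that warns when a rule's action does not follow the usual pairing. Since mixed rules are allowed, the sketch returns a warning string instead of rejecting the rule. `checkActionForRuleType` is a hypothetical helper, and call-type rules are deliberately left out.

```javascript
// Conventional pairings taken from the guidelines in this section.
const CONVENTIONAL_ACTIONS = new Map([
  ["user", ["ban_user", "flag_user"]],
  ["content", ["flag_content", "block_content"]],
]);

// Returns a warning string, or null when the pairing is conventional
// (or when the rule type is not covered by this sketch).
function checkActionForRuleType(rule) {
  const expected = CONVENTIONAL_ACTIONS.get(rule.rule_type);
  if (!expected) return null;
  if (!expected.includes(rule.action.type)) {
    return (
      `Action "${rule.action.type}" is unusual for a ${rule.rule_type}-type rule; ` +
      `the usual choices are: ${expected.join(", ")}`
    );
  }
  return null;
}

console.log(checkActionForRuleType({ rule_type: "user", action: { type: "ban_user" } })); // null
console.log(checkActionForRuleType({ rule_type: "content", action: { type: "ban_user" } }));
```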

Time Windows

Specify how long to track user behavior (only applicable to user-type rules):

- "30m": 30 minutes

- "1h": 1 hour

- "24h": 24 hours

- "7d": 7 days

- "30d": 30 days

Cooldown Periods

The Rule Builder supports cooldown periods to prevent immediate re-triggering of rules after an action has been taken. This is particularly useful when users are banned and then unbanned by administrators.

When a rule with a cooldown period is triggered and an action is taken (like banning a user), the system records this action with an expiration time. During the cooldown period, the same rule will not trigger again for that user, even if they continue to violate the conditions.

Configuration

Add a cooldown_period field to your rule configuration:

{

"name": "Spam Detection with Cooldown",

"description": "Spam detection rule with 24h cooldown",

"team": "moderation",

"config_keys": [],

"id": "spam-detection",

"rule_type": "user",

"enabled": true,

"cooldown_period": "24h",

"conditions": [

// ... conditions

],

"action": {

"type": "ban_user",

"ban_options": {

"duration": 3600,

"reason": "Spam behavior detected",

"shadow_ban": false,

"ip_ban": false

}

}

}

Example Scenario

- User violates rule: User posts 5 spam messages in 1 hour

- Rule triggers: User gets banned for 1 hour

- Admin unbans user: Administrator manually unbans the user

- User posts again: User immediately posts more spam messages

- Cooldown active: Rule does not trigger again due to 24-hour cooldown

- After cooldown: User can trigger the rule again after 24 hours
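The scenario above can be replayed with a minimal in-memory tracker. This is a sketch of the described behavior only; `CooldownTracker` is a hypothetical class, and how the service actually stores cooldown records is not covered in this guide.

```javascript
// Hypothetical tracker: remembers when each user's cooldown expires.
class CooldownTracker {
  constructor(cooldownMs) {
    this.cooldownMs = cooldownMs;
    this.expiresAt = new Map(); // userId -> expiry timestamp in ms
  }

  // Returns true if the rule may act now, and starts a new cooldown if so.
  tryTrigger(userId, nowMs) {
    const expiry = this.expiresAt.get(userId);
    if (expiry !== undefined && nowMs < expiry) return false;
    this.expiresAt.set(userId, nowMs + this.cooldownMs);
    return true;
  }
}

const HOUR_IN_MS = 60 * 60 * 1000;
const tracker = new CooldownTracker(24 * HOUR_IN_MS); // "cooldown_period": "24h"
const start = Date.UTC(2024, 0, 1);

console.log(tracker.tryTrigger("user-1", start)); // true: rule triggers, user is banned
console.log(tracker.tryTrigger("user-1", start + 2 * HOUR_IN_MS)); // false: unbanned, but cooldown active
console.log(tracker.tryTrigger("user-1", start + 25 * HOUR_IN_MS)); // true: cooldown has expired
```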

Use Cases

- Post-Ban Protection: Prevent immediate re-banning after manual unbans

- Graduated Response: Give users time to reflect before facing consequences again

- Administrative Flexibility: Allow admins to override rules without immediate re-triggering

Best Practices

Start Simple

Begin with basic rules and gradually add complexity as you understand your community's needs.

Set Reasonable Thresholds

- Too low: May catch legitimate users

- Too high: May miss problematic behavior

- Start conservative and adjust based on results

Use Appropriate Time Windows

- Short windows (1-6 hours): Catch immediate abuse

- Medium windows (24-48 hours): Catch persistent violators

- Long windows (7-30 days): Catch chronic offenders
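When comparing windows in your own tooling, it can help to convert the time window strings to milliseconds. The lookup below covers only the five values listed under Time Windows; `timeWindowToMs` is a hypothetical helper, and whether the service accepts other values is not something this sketch assumes.

```javascript
// The five time window strings documented for user-type rules.
const TIME_WINDOW_MS = new Map([
  ["30m", 30 * 60 * 1000],
  ["1h", 60 * 60 * 1000],
  ["24h", 24 * 60 * 60 * 1000],
  ["7d", 7 * 24 * 60 * 60 * 1000],
  ["30d", 30 * 24 * 60 * 60 * 1000],
]);

function timeWindowToMs(timeWindow) {
  const ms = TIME_WINDOW_MS.get(timeWindow);
  if (ms === undefined) {
    throw new Error(`Time window "${timeWindow}" is not in the documented list`);
  }
  return ms;
}

console.log(timeWindowToMs("1h")); // 3600000
console.log(timeWindowToMs("7d")); // 604800000
```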

Configure Cooldown Periods

- Short cooldowns (1-6 hours): For minor violations where users should get another chance quickly

- Medium cooldowns (24-48 hours): For moderate violations where users need time to reflect

- Long cooldowns (7-30 days): For serious violations where users need significant time before facing consequences again

Test Your Rules

Use the test mode to verify your rules work as expected before enabling them in production.

Monitor Performance

Watch for rules that trigger too frequently or not enough, and adjust accordingly.

Common Use Cases

Gaming Communities

- Detect toxic players who harass others repeatedly

- Identify spam bots posting promotional content

- Flag users who post inappropriate content in chat

Social Platforms

- Prevent harassment campaigns against specific users

- Detect coordinated spam or bot activity

- Flag users who post explicit content

Business Applications

- Protect customer support channels from spam

- Detect fake accounts created for abuse

- Maintain professional communication standards