AI Image Moderation

Stream's image moderation engine, powered by AWS Rekognition, uses AI to detect potentially harmful or inappropriate images. Images are analyzed asynchronously in the background so that moderation adds no latency to your application, and moderation results and configured actions are applied as soon as the analysis is complete.

Supported Categories

The image moderation engine can detect the following categories of content:

- Explicit: Detects pornographic content and explicit sexual acts.

- Non-Explicit Nudity: Identifies non-pornographic nudity, including exposed intimate body parts and intimate kissing.

- Swimwear/Underwear: Detects images of people in revealing swimwear or underwear.

- Violence: Identifies violent acts, fighting, weapons, and physical aggression.

- Visually Disturbing: Detects gore, blood, mutilation, and other disturbing imagery.

- Drugs & Tobacco: Recognizes drug-related content, paraphernalia, and tobacco products.

- Alcohol: Identifies alcoholic beverages and alcohol consumption.

- Rude Gestures: Detects offensive hand gestures and inappropriate body language.

- Gambling: Identifies gambling-related content, including cards, dice, and slot machines.

- Hate Symbols: Recognizes extremist symbols, hate group imagery, and offensive symbols.

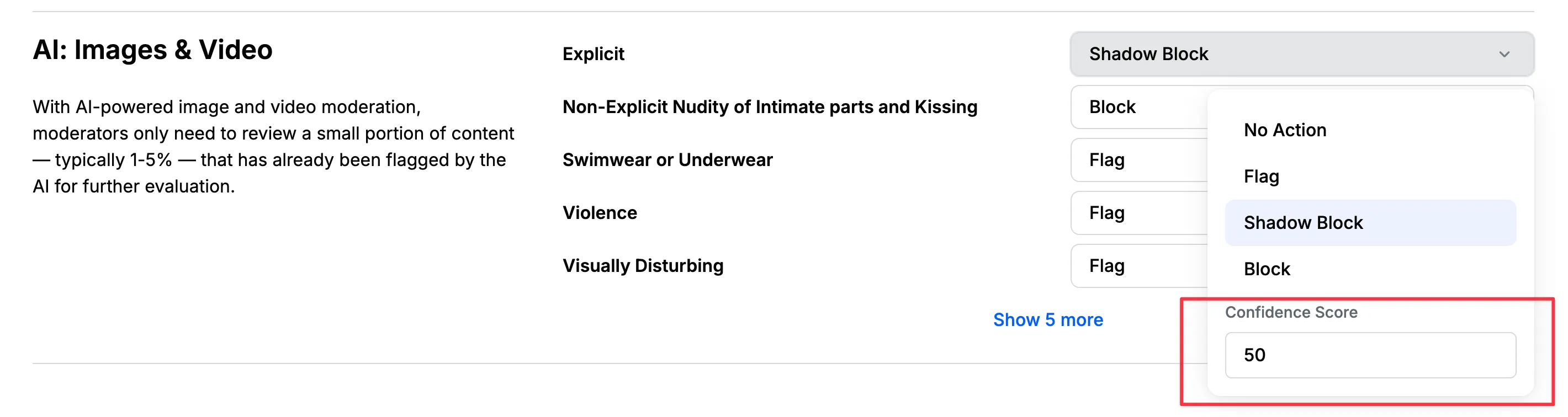

Confidence Scores

For each category, you can set a confidence score threshold between 1 and 100 that determines when actions should be triggered. For example:

- A threshold of 90 is conservative: it triggers only on highly confident matches.

- A threshold of 60 is more sensitive and may catch more potentially problematic content.

- Lower thresholds increase sensitivity but may lead to more false positives.
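The threshold check amounts to one comparison per category. The sketch below assumes an action triggers when the model's confidence score is greater than or equal to the configured threshold, with both on a 1-100 scale; whether Stream's comparison is inclusive is an assumption, and `should_trigger` is a hypothetical helper, not part of Stream's API.

```python
def should_trigger(confidence: float, threshold: int) -> bool:
    """Return True if a detection at `confidence` meets `threshold`.

    Assumption (not confirmed by Stream): the comparison is inclusive and
    both values use the same 1-100 scale.
    """
    if not 1 <= threshold <= 100:
        raise ValueError(f"threshold must be between 1 and 100, got {threshold}")
    return confidence >= threshold


# The same detection evaluated against a conservative and a more sensitive threshold.
score = 72.5
print(should_trigger(score, 90))  # False: 72.5 is below the threshold of 90
print(should_trigger(score, 60))  # True: 72.5 meets the threshold of 60
```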

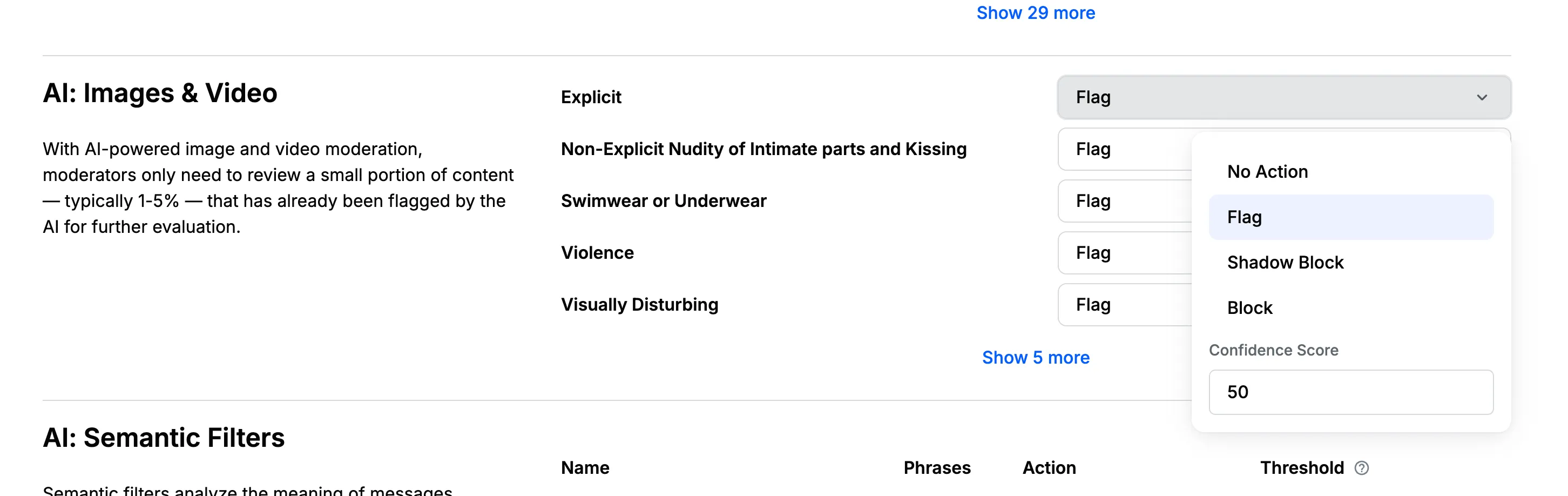

We recommend starting with default thresholds and adjusting based on your needs:

- Use higher thresholds (80-90) where false positives are costly and actions should trigger only on high-confidence matches.

- Use medium thresholds (60-70) for categories needing balanced detection.

- Use lower thresholds (40-50) for maximum sensitivity where false positives are acceptable.
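To see how the recommended ranges trade sensitivity for false positives, it helps to apply one threshold from each band to the same set of scores. The scores below are invented for illustration (they are not real model output), and `count_triggers` is a hypothetical helper that assumes an inclusive comparison.

```python
# Hypothetical confidence scores (0-100) reported for one category across
# a batch of images. Illustrative values only, not real model output.
SAMPLE_SCORES = [97.0, 88.5, 74.0, 66.2, 58.9, 47.3, 41.0, 22.4]

# One representative threshold from each recommended band.
BANDS = {"higher (80-90)": 85, "medium (60-70)": 65, "lower (40-50)": 45}


def count_triggers(scores: list[float], threshold: int) -> int:
    """Count detections that meet the threshold (inclusive comparison assumed)."""
    return sum(1 for s in scores if s >= threshold)


for band, threshold in BANDS.items():
    hits = count_triggers(SAMPLE_SCORES, threshold)
    print(f"{band}: threshold {threshold} -> {hits} of {len(SAMPLE_SCORES)} images")

# higher (80-90): threshold 85 -> 2 of 8 images
# medium (60-70): threshold 65 -> 4 of 8 images
# lower (40-50): threshold 45 -> 6 of 8 images
```

Each step down in band catches more images; whether the extra matches are real violations or false positives is what the Media Queue review helps you judge.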

Configuration

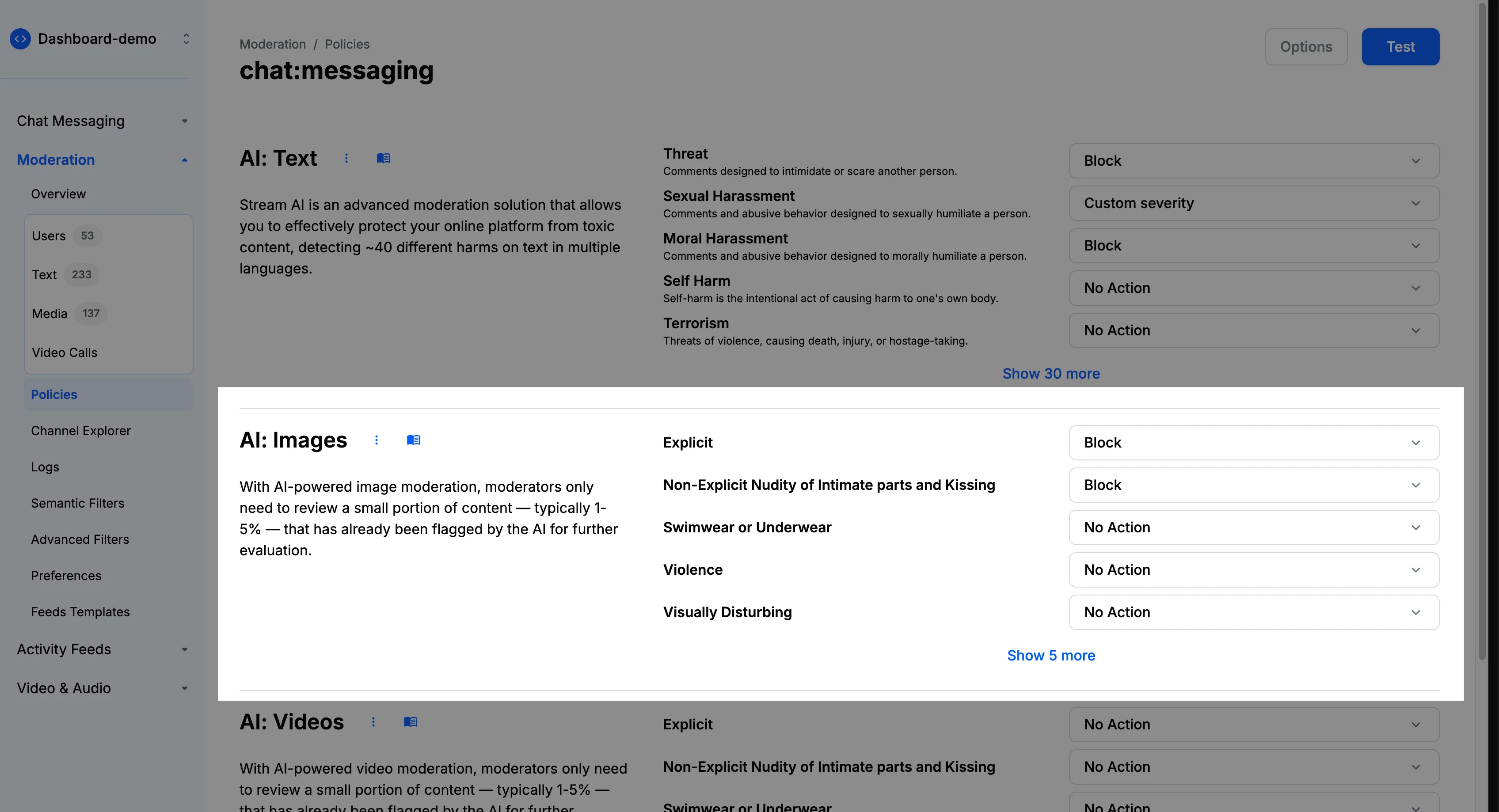

As a prerequisite, complete the steps in the Creating A Policy section.

Here's how to set up image moderation in your policy:

- Navigate to the "Image Moderation" section in your moderation policy settings.

- You'll find various categories of image content that can be moderated, such as nudity, violence, or hate symbols.

- For each category, choose the appropriate action: Flag, Block, or Shadow Block.

- You can also set a confidence threshold: the minimum confidence score the AI model must report for a category before the action is applied. A lower threshold catches more potential violations but may increase false positives, while a higher threshold is more selective but may miss some borderline cases.
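The per-category settings above can be modelled as a list of rules, each pairing a category with an action and a threshold. This is a hypothetical sketch of evaluating such rules against one image's confidence scores: `Rule`, `Action`, and `triggered_actions` are illustrative names, not part of Stream's SDK, and because the document does not say how Stream resolves several matching rules, the sketch simply returns every match.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    FLAG = "flag"
    BLOCK = "block"
    SHADOW_BLOCK = "shadow_block"


@dataclass(frozen=True)
class Rule:
    category: str
    action: Action
    threshold: int  # 1-100


def triggered_actions(rules: list[Rule], scores: dict[str, float]) -> list[tuple[str, Action]]:
    """Return (category, action) for every rule whose threshold is met.

    `scores` maps category name -> confidence (0-100). Categories with no
    score are treated as not detected. Inclusive comparison is assumed.
    """
    hits = []
    for rule in rules:
        confidence = scores.get(rule.category)
        if confidence is not None and confidence >= rule.threshold:
            hits.append((rule.category, rule.action))
    return hits


# Hypothetical policy and detections for a single image.
policy = [
    Rule("Explicit", Action.BLOCK, 80),
    Rule("Violence", Action.FLAG, 60),
    Rule("Alcohol", Action.SHADOW_BLOCK, 70),
]
detections = {"Violence": 64.0, "Alcohol": 55.0}

# Only Violence triggers (64.0 >= 60); Alcohol (55.0) is below its threshold of 70.
print(triggered_actions(policy, detections))
```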

Because image moderation runs asynchronously to avoid adding latency to your application, content is sent successfully at first and moderated in the background. If the image is then found to be inappropriate, the configured action (such as flagging or blocking) is applied to the content.

How It Works

When an image is uploaded:

- The image is accepted immediately to maintain low latency.

- Analysis begins asynchronously in the background.

- AI models evaluate the image against all configured categories.

- If confidence thresholds are met, configured actions are applied.

- The message or post is updated based on moderation results.

- Flagged images appear in the Media Queue for moderator review.
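The steps above can be sketched with `asyncio`: the upload returns immediately, and a background task analyzes the image and updates the message afterwards. Everything here is hypothetical: `analyze_image` is a stub returning a fixed score in place of the real AI model, a plain list stands in for the Media Queue, and the sketch only applies a Flag action.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Message:
    id: str
    image_url: str
    status: str = "sent"


async def analyze_image(image_url: str) -> dict[str, float]:
    """Stub for the AI model call; returns category -> confidence (0-100)."""
    await asyncio.sleep(0.01)  # simulate analysis time
    return {"Violence": 82.0}


async def moderate(message: Message, thresholds: dict[str, int], media_queue: list[str]) -> None:
    """Background task: analyze, compare against thresholds, update the message."""
    scores = await analyze_image(message.image_url)
    matched = [c for c, t in thresholds.items() if scores.get(c, 0.0) >= t]
    if matched:
        message.status = "flagged"
        media_queue.append(message.id)  # flagged images go to the review queue


async def upload(message: Message, thresholds: dict[str, int],
                 media_queue: list[str], tasks: list[asyncio.Task]) -> Message:
    """Accept the upload immediately and schedule moderation in the background."""
    # Keep a reference to the task so it is not garbage-collected early.
    tasks.append(asyncio.create_task(moderate(message, thresholds, media_queue)))
    return message  # returned before analysis has started


async def main() -> None:
    media_queue: list[str] = []
    tasks: list[asyncio.Task] = []
    msg = await upload(Message("m1", "https://example.com/photo.jpg"),
                       {"Violence": 80}, media_queue, tasks)
    print("after upload:", msg.status)  # sent
    await asyncio.gather(*tasks)
    print("after moderation:", msg.status, media_queue)  # flagged ['m1']


asyncio.run(main())
```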

Best Practices

- Configure confidence thresholds based on your tolerance for false positives.

- Use the "Flag" action for borderline cases that need human review.

- Use "Block" for clearly inappropriate content.

- Monitor the Media Queue regularly to validate automated decisions.

- Adjust thresholds based on observed accuracy.

- Consider your audience and community standards when configuring rules.
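One way to act on "adjust thresholds based on observed accuracy" is to track, per category, how often moderators uphold automated actions during Media Queue review. The sketch below is a hypothetical illustration: `ReviewOutcome` and `precision_by_category` are invented names, and the sample review data is made up rather than taken from any Stream export format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewOutcome:
    category: str
    confirmed: bool  # True if a moderator upheld the automated action


def precision_by_category(outcomes: list[ReviewOutcome]) -> dict[str, float]:
    """Share of automated actions per category that moderators upheld."""
    totals: dict[str, int] = {}
    upheld: dict[str, int] = {}
    for o in outcomes:
        totals[o.category] = totals.get(o.category, 0) + 1
        upheld[o.category] = upheld.get(o.category, 0) + int(o.confirmed)
    return {c: upheld[c] / totals[c] for c in totals}


# Hypothetical review decisions from the Media Queue.
reviews = [
    ReviewOutcome("Alcohol", False),
    ReviewOutcome("Alcohol", True),
    ReviewOutcome("Alcohol", False),
    ReviewOutcome("Violence", True),
]
for category, p in precision_by_category(reviews).items():
    print(f"{category}: {p:.0%} of automated actions upheld")
# Alcohol: 33% of automated actions upheld
# Violence: 100% of automated actions upheld
```

A low upheld rate, as for Alcohol here, suggests many false positives and is a signal to consider raising that category's threshold.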