{

"review_queue_item": {

--> See ReviewQueueItem shape in the table below

},

"flags": [

--> See ModerationEventFlag shape in the table below

],

"action": {

--> See ModerationEventActionLog shape in the table below

},

"type": "review_queue_item.new",

"created_at": "timestamp",

"received_at": "timestamp"

}Webhooks

Webhooks provide a powerful way to implement custom logic for moderation.

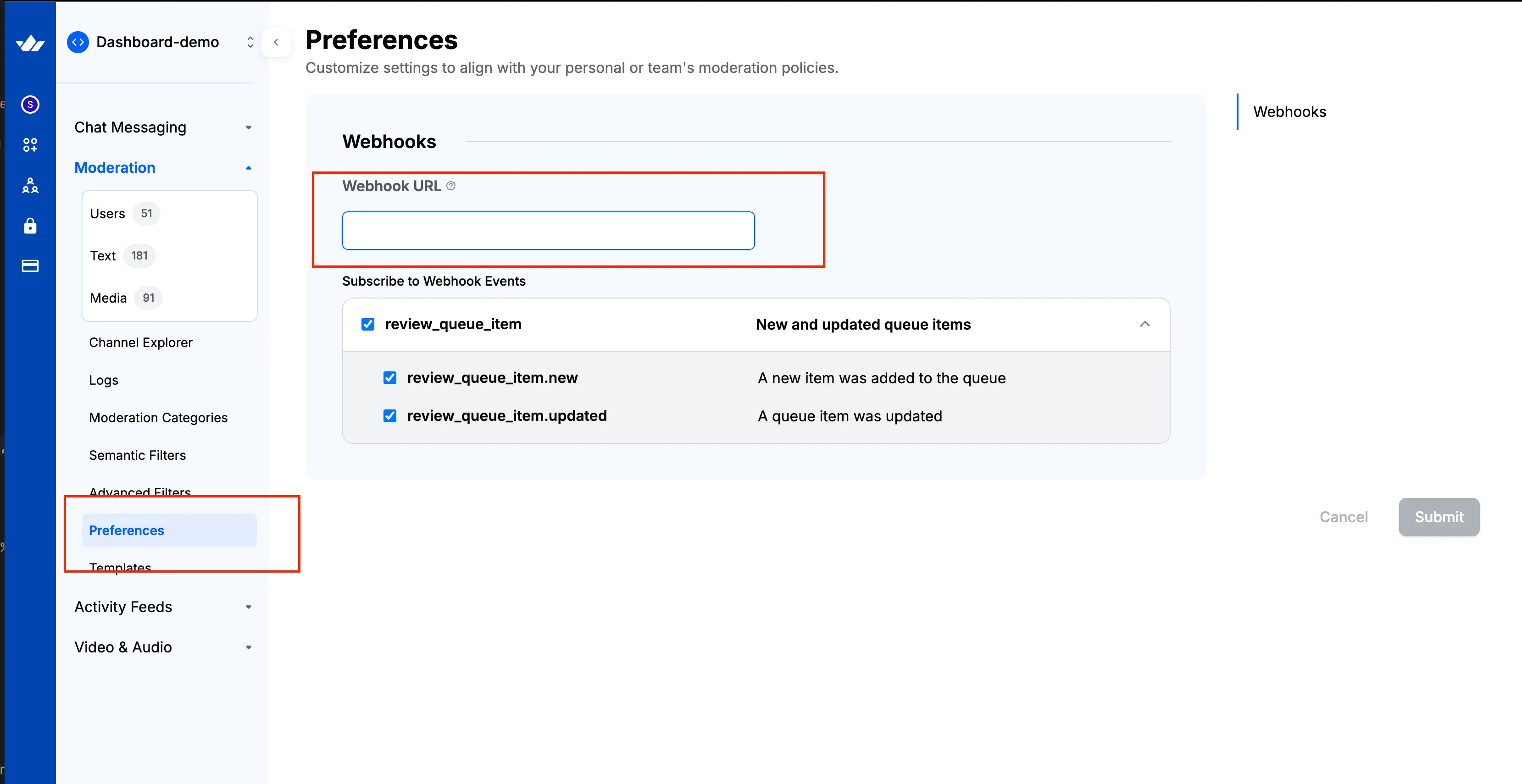

Configure webhook URL

You can configure the webhook URL from the Stream dashboard.

- Go to the dashboard

- Select the app for which you want to receive webhook events

- Click on "Preferences" under the "Moderation" section in the left navigation

- Set the URL as shown in the following screenshot

Webhook events

The webhook url will receive following events

review_queue_item.new- This event notifies you of new content available for review. The content could be a message, activity, reaction, or even a user profile.

- This event is triggered when content is flagged for the first time. Consequently, you'll also receive the associated flags as part of the payload.

review_queue_item.updated- This event notifies you when existing flagged content receives additional flags or when a moderator performs an action on flagged content.

- The payload includes the new flags or details of the action performed.

moderation_check.completed- This event notifies you when the Moderation Check has been completed.

- This event is triggered for both flag and non-flag actions.

- This event is fired when the moderation check for an entity has finished (after sync and async checks). It is emitted even when no flags were raised.

- The payload includes the entity identifiers and the final

recommended_action.

moderation_rule.triggered- This event notifies you when a moderation rule from the Rule Builder is triggered.

- This event is triggered when rule conditions are met and actions are executed.

- Available for

user,content, andcallrule types. - For call rules, includes

violation_numberto track escalation sequences. - The payload includes rule information, triggered actions, and entity details.

review_queue_item.new

The review_queue_item.new event payload is structured as follows:

review_queue_item.updated

The review_queue_item.updated event payload is structured as follows:

```json
{
  "review_queue_item": {
    // See ReviewQueueItem shape in the table below
  },
  "flags": [
    // See ModerationEventFlag shape in the table below
  ],
  "action": {
    // See ModerationEventActionLog shape in the table below
  },
  "type": "review_queue_item.updated",
  "created_at": "timestamp",
  "received_at": "timestamp"
}
```

moderation_check.completed

The moderation_check.completed event payload is structured as follows:

```json
{
  "type": "moderation_check.completed",
  "created_at": "timestamp",
  "entity_id": "user123-iyVcizkX4KhoiOJf2YOS5",
  "entity_type": "stream:chat:v1:message",
  "recommended_action": "flag",
  "review_queue_item_id": "03eab71c-d2bf-418b-982a-15efcfdebb47"
}
```

This event contains the following fields:

| Key | Type | Description |
|---|---|---|
| type | string | The event type, which is always "moderation_check.completed" |
| created_at | datetime | Timestamp when the moderation check was completed |
| entity_id | string | Unique identifier of the entity that was moderated |
| entity_type | string | Type of the entity that was moderated (e.g., "stream:chat:v1:message") |
| recommended_action | string | Action recommended by the moderation system ("keep", "flag", or "remove") |
| review_queue_item_id | string | Unique identifier of the associated review queue item |
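Because every webhook event carries a `type` field, a receiver can route on it before reading event-specific fields. The following is a minimal sketch in Python, assuming your web framework has already read the raw request body; `handle_webhook`, `HANDLERS`, `on_check_completed`, and the returned decision strings are hypothetical names, not part of any Stream SDK. It treats any `recommended_action` other than `flag` or `remove` (such as `keep`) as leaving the content visible.

```python
import json
from typing import Any, Callable, Dict

Event = Dict[str, Any]


def on_check_completed(event: Event) -> str:
    """Map recommended_action to a hypothetical local decision."""
    entity = f"{event['entity_type']}/{event['entity_id']}"
    action = event.get("recommended_action")
    if action == "remove":
        return f"hide {entity}"
    if action == "flag":
        return f"hold {entity} for review item {event.get('review_queue_item_id')}"
    # "keep", or any value this sketch does not recognise, leaves the content visible.
    return f"publish {entity}"


def on_unhandled(event: Event) -> str:
    return f"ignored {event.get('type')}"


# Every webhook event carries a "type" field, so route on it.
HANDLERS: Dict[str, Callable[[Event], str]] = {
    "moderation_check.completed": on_check_completed,
    # Handlers for review_queue_item.new, review_queue_item.updated and
    # moderation_rule.triggered would be registered here as well.
}


def handle_webhook(raw_body: bytes) -> str:
    event = json.loads(raw_body)
    handler = HANDLERS.get(event.get("type"), on_unhandled)
    return handler(event)


example_body = json.dumps({
    "type": "moderation_check.completed",
    "created_at": "timestamp",
    "entity_id": "user123-iyVcizkX4KhoiOJf2YOS5",
    "entity_type": "stream:chat:v1:message",
    "recommended_action": "flag",
    "review_queue_item_id": "03eab71c-d2bf-418b-982a-15efcfdebb47",
}).encode("utf-8")

print(handle_webhook(example_body))
# prints: hold stream:chat:v1:message/user123-iyVcizkX4KhoiOJf2YOS5 for review item 03eab71c-d2bf-418b-982a-15efcfdebb47
```

A dictionary of handlers keeps each event type isolated, and unknown types fall through to a no-op instead of raising, so new event types added later do not break the endpoint.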

moderation_rule.triggered

The moderation_rule.triggered event payload is structured as follows:

```json
{
  "type": "moderation_rule.triggered",
  "created_at": "2026-01-20T23:45:04.485362361Z",
  "rule": {
    "id": "0450e7d6-9386-4eb9-a720-f3999979989c",
    "name": "Nudity or hate speech not allowed from men",
    "type": "call",
    "description": "Detects inappropriate content in video calls"
  },
  "violation_number": 1,
  "entity_id": "default:default_test-4013",
  "entity_type": "stream:v1:call",
  "user_id": "test-user-2025b49e-ef45-473f-bb90-4f8f679c8581",
  "triggered_actions": ["mute_video", "call_warning"],
  "review_queue_item_id": "abc123"
}
```

This event contains the following fields:

| Key | Type | Description | Possible Values |
|---|---|---|---|
| type | string | The event type, which is always "moderation_rule.triggered" | "moderation_rule.triggered" |
| created_at | datetime | Timestamp when the rule was triggered | |
| rule | object | Object containing rule information | |
| rule.id | string | Unique identifier of the rule | |
| rule.name | string | Human-readable rule name | |
| rule.type | string | Rule type | "call", "user", or "content" |
| rule.description | string | Rule description | |
| violation_number | int | (Call rules only) The violation number that triggered this event (1st violation, 2nd violation, etc.) | Present only for call rules; omitted for user and content rules. |
| entity_id | string | The ID of the entity that triggered the rule | For call rules: the call CID (e.g., "default:default_test-4013"). For content rules: the message ID. |
| entity_type | string | The type of entity that triggered the rule | For call rules: "stream:v1:call". For content rules: "stream:chat:v1:message". |
| user_id | string | The ID of the user who triggered the rule | |
| triggered_actions | array | Action types that were executed | Examples: ["mute_video", "call_warning"], ["ban"], ["flag"] |
| review_queue_item_id | string | (Optional) The review queue item ID if the violation was flagged for review | Present only if the rule's actions include flagging for review. |
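Two fields in this event are conditional: `violation_number` appears only for call rules and `review_queue_item_id` only when the rule flags for review. A minimal Python sketch of parsing the payload into a typed record, assuming the field layout in the table above; `RuleTriggered` and `parse_rule_triggered` are hypothetical names. Absent keys are read with `.get()` so they become `None` instead of raising.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class RuleTriggered:
    rule_id: str
    rule_name: str
    rule_type: str                        # "call", "user", or "content"
    entity_id: str
    entity_type: str
    user_id: str
    triggered_actions: List[str]
    violation_number: Optional[int]       # call rules only
    review_queue_item_id: Optional[str]   # only when the rule flags for review


def parse_rule_triggered(event: Dict[str, Any]) -> RuleTriggered:
    if event.get("type") != "moderation_rule.triggered":
        raise ValueError(f"unexpected event type: {event.get('type')!r}")
    rule = event["rule"]
    return RuleTriggered(
        rule_id=rule["id"],
        rule_name=rule["name"],
        rule_type=rule["type"],
        entity_id=event["entity_id"],
        entity_type=event["entity_type"],
        user_id=event["user_id"],
        triggered_actions=list(event.get("triggered_actions", [])),
        # These keys are omitted (not null) when they do not apply.
        violation_number=event.get("violation_number"),
        review_queue_item_id=event.get("review_queue_item_id"),
    )


# The example payload from this section.
sample_event = {
    "type": "moderation_rule.triggered",
    "created_at": "2026-01-20T23:45:04.485362361Z",
    "rule": {
        "id": "0450e7d6-9386-4eb9-a720-f3999979989c",
        "name": "Nudity or hate speech not allowed from men",
        "type": "call",
        "description": "Detects inappropriate content in video calls",
    },
    "violation_number": 1,
    "entity_id": "default:default_test-4013",
    "entity_type": "stream:v1:call",
    "user_id": "test-user-2025b49e-ef45-473f-bb90-4f8f679c8581",
    "triggered_actions": ["mute_video", "call_warning"],
    "review_queue_item_id": "abc123",
}

parsed = parse_rule_triggered(sample_event)
print(parsed.rule_type, parsed.violation_number, parsed.triggered_actions)
# prints: call 1 ['mute_video', 'call_warning']
```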

Note: Webhook events are sent once per rule per violation number per entity. For call rules with action sequences, if multiple keyframes or closed captions trigger the same rule with the same violation number, only one webhook event is sent.
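The note above defines uniqueness as one event per rule, per violation number, per entity. If your endpoint might still see the same event more than once (for example, if a delivery is retried, which is an assumption and not something this page describes), the same tuple works as an idempotency key. A minimal in-memory Python sketch; `dedup_key` and `RuleEventDeduper` are hypothetical names, and a production service would use a shared store with expiry instead of a process-local set.

```python
from typing import Any, Dict, Optional, Set, Tuple

DedupKey = Tuple[str, Optional[int], str]


def dedup_key(event: Dict[str, Any]) -> DedupKey:
    # Mirrors the note: one event per rule, per violation number, per entity.
    # violation_number is absent for user and content rules, so it may be None.
    return (event["rule"]["id"], event.get("violation_number"), event["entity_id"])


class RuleEventDeduper:
    """Process-local sketch that remembers which keys were already handled."""

    def __init__(self) -> None:
        self._seen: Set[DedupKey] = set()

    def first_time(self, event: Dict[str, Any]) -> bool:
        key = dedup_key(event)
        if key in self._seen:
            return False
        self._seen.add(key)
        return True
```

A call escalating from its first to its second violation produces a new key, so each step of an action sequence is still processed once.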

Shapes

ReviewQueueItem Shape

This shape contains the following fields:

| Key | Type | Description | Possible Values |
|---|---|---|---|
| id | string | Unique identifier of the review queue item | |
| created_at | datetime | Timestamp when the review queue item was created | |
| updated_at | datetime | Timestamp when the review queue item was last updated | |
| entity_type | string | Describes the type of entity under review | Any unique string. Default types include, for example, "stream:chat:v1:message" and "stream:v1:call". |
| entity_id | string | The ID of the message, activity, or reaction | |
| moderation_payload | object | The exact content that was sent for automatic moderation, e.g., `{ texts: ["fuck you"], images: ["https://sampleimage.com/test.jpg"], videos: [] }` | |
| status | string | Status of the review queue item | |
| recommended_action | string | Action recommended by Stream's moderation engines for the entity/content | |
| completed_at | datetime | Timestamp when all the moderation engines finished assessing the content | |
| languages | array | | |
| severity | int | | |
| ai_text_severity | string | | |
| reviewed_at | datetime | Time at which the entity was reviewed | |
| reviewed_by | string | ID of the moderator who reviewed the item. Set when a moderator takes an action on the review queue item from the dashboard | |
| message (Chat only) | object | Chat message object under review | |
| call (video call moderation only) | object | Call object from which the moderated frames are taken | |
| entity_creator | object | The user who created the entity under review. For chat, this is the user who sent the message; for activity feeds, it is the actor of the activity or reaction | |
| entity_creator_id | string | The ID of the user who created the entity under review. For chat, this is the user who sent the message; for activity feeds, it is the actor of the activity or reaction | |
| flags | array | List of reasons the item was flagged for moderation | |
| flags_count | int | Number of flags returned | |
| bans | array | List of bans administered to the user | |
| config_key | string | Policy key for the moderation policy | |
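The fields above are enough to build a one-line summary of a review queue item, for example for a Slack or log message. A minimal Python sketch, assuming the item arrives as a decoded JSON object; `summarize_review_item` is a hypothetical helper. It prefers `flags_count` and falls back to counting the `flags` array, and it mentions `reviewed_by` only when a moderator has acted on the item.

```python
from typing import Any, Dict


def summarize_review_item(item: Dict[str, Any]) -> str:
    """Build a one-line, human-readable summary of a ReviewQueueItem."""
    creator = item.get("entity_creator_id") or "unknown user"
    # Prefer flags_count; fall back to counting the flags array if it is missing.
    flag_count = item.get("flags_count", len(item.get("flags", [])))
    summary = (
        f"{item['entity_type']} {item['entity_id']} by {creator}: "
        f"{flag_count} flag(s), recommended action {item.get('recommended_action', 'n/a')}"
    )
    # reviewed_by is set only once a moderator acts on the item from the dashboard.
    if item.get("reviewed_by"):
        summary += f", reviewed by {item['reviewed_by']}"
    return summary
```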

ModerationEventFlag Shape

The `flags` array of a moderation event contains the flags associated with that event. Each flag has the following shape:

| Key | Type | Description | Possible Values |
|---|---|---|---|
| type | string | Type of the flag: the name of the moderation provider that created the flag | |
| reason | string | Description of the reason for the flag | |
| created_at | string | Timestamp when the flag was created | |
| updated_at | string | Timestamp when the flag was last updated | |
| labels | array | Classification labels assigned to the content by the moderation engine (e.g., ai_text, ai_image) that created this flag | |
| result | object | Complete result object from the moderation engine | |
| entity_type | string | Describes the type of entity under review | Any unique string. Default types include, for example, "stream:chat:v1:message" and "stream:v1:call". |
| entity_id | string | The ID of the message, activity, or reaction | |
| moderation_payload | object | The exact content that was sent for automatic moderation, e.g., `{ texts: ["fuck you"], images: ["https://sampleimage.com/test.jpg"], videos: [] }` | |
| review_queue_id | string | Unique identifier of the review queue item | |
| user_id | string | ID of the user who reported the content | |
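Since each flag's `type` names the provider that raised it, grouping flags by `type` shows at a glance which engines objected and why. A minimal Python sketch; `reasons_by_provider` is a hypothetical helper, and the provider names and reasons in the usage check below are illustrative placeholders, not values this page guarantees.

```python
from collections import defaultdict
from typing import Any, Dict, List


def reasons_by_provider(flags: List[Dict[str, Any]]) -> Dict[str, List[str]]:
    """Group flag reasons by the provider (the flag's `type`) that raised them."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for flag in flags:
        grouped[flag.get("type", "unknown")].append(flag.get("reason", ""))
    # Return a plain dict so callers don't get defaultdict's auto-insert behaviour.
    return dict(grouped)
```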

ModerationEventActionLog Shape

This shape logs actions taken during the moderation event:

| Key | Type | Description | Possible Values |
|---|---|---|---|
| id | string | UUID of the action log entry | |
| created_at | datetime | Timestamp when this action was performed | |
| type | string | Type of action performed on the review queue item | |
| user_id | string | ID of the user (or moderator) who performed the action | |
| reason | string | Reason the moderator attached to the action; can be any string | |
| custom | object | Additional data about the action | Contains action-specific properties for `ban`, `delete_user`, and `delete_reaction` or `delete_message` actions. |
| target_user_id | string | Same as the entity_creator_id of the review queue item | |
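An action log entry links who acted (`user_id`) to who was affected (`target_user_id`), which makes it a natural source for an audit trail. A minimal Python sketch; `audit_line` is a hypothetical helper, and the `ban` type used in the check below is one of the action types this section mentions. The `custom` object is ignored here because its properties vary by action type.

```python
from typing import Any, Dict


def audit_line(action: Dict[str, Any]) -> str:
    """Format a ModerationEventActionLog entry as a single audit-log line."""
    actor = action.get("user_id", "unknown")
    target = action.get("target_user_id", "unknown")
    # reason is free text attached by the moderator and may be empty.
    reason = action.get("reason") or "no reason given"
    return f"{action['created_at']} {actor} -> {target}: {action['type']} ({reason})"
```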

Moderation Check Completed Event Payload Structure

When a moderation check completes, the webhook payload is structured as follows:

```json
{
  "entity_id": "string",
  "entity_type": "string",
  "recommended_action": "keep | flag | remove",
  "review_queue_item_id": "string",
  "type": "moderation_check.completed",
  "created_at": "timestamp",
  "received_at": "timestamp"
}
```

This shape contains the following fields:

| Key | Type | Description | Possible Values |
|---|---|---|---|
| entity_id | string | The ID of the entity that was moderated (e.g., message ID, activity ID, reaction ID, or user ID) | |
| entity_type | string | Describes the type of entity that was moderated | Any unique string. Default types include, for example, "stream:chat:v1:message" and "stream:v1:call". |
| recommended_action | string | Final action recommended by Stream's moderation engines for the entity/content | "keep", "flag", or "remove" |
| review_queue_item_id | string | Unique identifier of the review queue item created for this moderation check | |
| type | string | Event type | "moderation_check.completed" |
| created_at | datetime | Timestamp when the event was created | |
| received_at | datetime | Timestamp when the event was received by your webhook endpoint | |
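Comparing `created_at` with `received_at` gives a rough delivery delay, which is useful for monitoring. This page only shows these as `"timestamp"` placeholders, so the Python sketch below assumes they are RFC 3339 UTC strings like the `created_at` value in the `moderation_rule.triggered` example (which has nanosecond precision). `parse_ts` and `delivery_delay_seconds` are hypothetical helpers, and the `received_at` value in the usage line is made up for illustration.

```python
import re
from datetime import datetime
from typing import Any, Dict

_FRACTION = re.compile(r"\.(\d+)")


def parse_ts(value: str) -> datetime:
    """Parse an RFC 3339 UTC timestamp, e.g. '2026-01-20T23:45:04.485362361Z' (assumed format)."""
    if value.endswith("Z"):
        value = value[:-1] + "+00:00"
    # datetime stores microseconds, so pad or truncate the fraction to six digits.
    value = _FRACTION.sub(lambda m: "." + m.group(1)[:6].ljust(6, "0"), value, count=1)
    return datetime.fromisoformat(value)


def delivery_delay_seconds(event: Dict[str, Any]) -> float:
    """Seconds between event creation and receipt at your endpoint."""
    return (parse_ts(event["received_at"]) - parse_ts(event["created_at"])).total_seconds()


# Hypothetical timestamps for illustration.
example = {"created_at": "2026-01-20T23:45:04.485362361Z", "received_at": "2026-01-20T23:45:05.985362Z"}
print(delivery_delay_seconds(example))  # prints 1.5
```

Truncating to microseconds loses the last three digits of nanosecond timestamps, which is harmless for a delay measured in milliseconds or more.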